---
license: other
---

I am learning how to make LoRAs with Oobabooga; these data are for experimental and research purposes.

This is a Medical Knowledge LoRA made for use with this model: llama-2-70b-Guanaco-QLoRA-fp16 (https://huggingface.co/TheBloke/llama-2-70b-Guanaco-QLoRA-fp16). You can use the quantized version of the model too.
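
For anyone who wants to try the LoRA outside the webui, here is a minimal sketch of attaching it to the base model with the `transformers` and `peft` libraries. It assumes the adapter files in this repo are in the standard PEFT layout, and `LORA_DIR` is a hypothetical local path, not a real location. The function name is also just illustrative.

```python
BASE_MODEL = "TheBloke/llama-2-70b-Guanaco-QLoRA-fp16"
LORA_DIR = "path/to/this-lora"  # hypothetical: local folder holding the adapter files


def load_model_with_lora(base_model: str = BASE_MODEL, lora_dir: str = LORA_DIR):
    """Load the base model, then attach the LoRA adapter on top of it."""
    # Heavy imports are deferred so this file can be imported without them.
    import torch
    from peft import PeftModel
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(base_model)
    model = AutoModelForCausalLM.from_pretrained(
        base_model,
        torch_dtype=torch.float16,
        device_map="auto",  # spreads layers over available devices (needs accelerate)
    )
    # Assumption: lora_dir contains a PEFT-style adapter config and weights.
    model = PeftModel.from_pretrained(model, lora_dir)
    model.eval()
    return tokenizer, model
```

Calling `load_model_with_lora()` returns the tokenizer and the adapter-wrapped model. A 70B model in fp16 needs a lot of memory, so this is only practical on large hardware.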

---

Model lineage:

https://huggingface.co/timdettmers/guanaco-65b -> https://huggingface.co/Mikael110/llama-2-70b-guanaco-qlora -> https://huggingface.co/TheBloke/llama-2-70b-Guanaco-QLoRA-fp16

---

Training Data and Formatting:

Training data come from: https://huggingface.co/datasets/BI55/MedText

These training data were then formatted for use with the "Raw text file" training option in the Oobabooga text-generation-webui (https://github.com/oobabooga/text-generation-webui).
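
The exact conversion used for this LoRA is not shown here, so the following is only a sketch of one way to flatten prompt/answer records into a single raw text file. It assumes each record has `Prompt` and `Completion` fields and that samples are separated by three newlines. Both are assumptions for illustration, as are the function and constant names.

```python
from typing import Iterable, Mapping

# Assumed separator between samples; change it to match your training settings.
SAMPLE_SEPARATOR = "\n\n\n"


def records_to_raw_text(
    records: Iterable[Mapping[str, object]],
    prompt_key: str = "Prompt",          # assumed column name
    completion_key: str = "Completion",  # assumed column name
) -> str:
    """Join prompt/completion pairs into one raw text string."""
    blocks = []
    for record in records:
        prompt = str(record.get(prompt_key, "")).strip()
        completion = str(record.get(completion_key, "")).strip()
        if not prompt and not completion:
            continue  # skip empty rows
        blocks.append(f"{prompt}\n{completion}".strip())
    # One trailing newline so the file ends cleanly; empty input gives "".
    return SAMPLE_SEPARATOR.join(blocks) + "\n" if blocks else ""
```

The returned string can be written to a `.txt` file and placed wherever your webui install looks for raw text training files. If your version of the webui exposes a hard cut string setting, keeping it in sync with the separator should stop samples from bleeding into each other.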

Training parameters are in the training_parameters.json file, and there is a screenshot of the UI with the correct settings.
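
If you would rather read the parameters in a script than open the file by hand, a small sketch like this lists them in sorted order. It only assumes that training_parameters.json holds a single JSON object; no particular key names are assumed, and the function names are illustrative.

```python
import json
from pathlib import Path


def load_training_parameters(path: str = "training_parameters.json") -> dict:
    """Read the parameter file and return its contents as a dict."""
    with Path(path).open(encoding="utf-8") as handle:
        params = json.load(handle)
    if not isinstance(params, dict):
        raise ValueError("expected a JSON object at the top level")
    return params


def format_parameters(params: dict) -> list:
    """Return one 'key: value' line per parameter, sorted by key."""
    return [f"{key}: {params[key]!r}" for key in sorted(params)]
```

Printing `"\n".join(format_parameters(load_training_parameters()))` from the repo folder gives a quick overview to compare against the UI screenshot.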

--- |

|

|

|

Examples and Additional Information: |

|

|

|

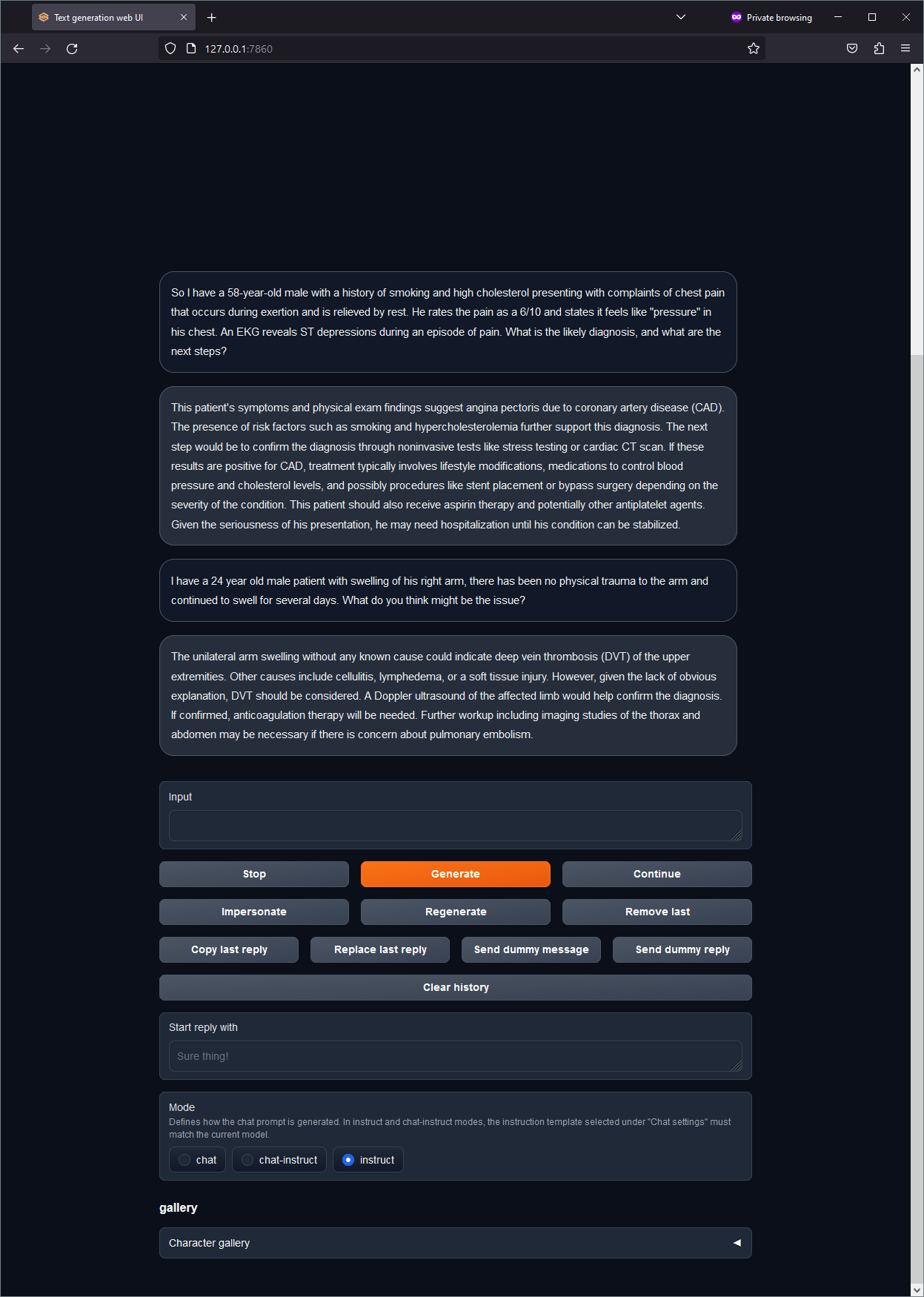

Check out the png files in the repo for an example conversation as well as other pieces of information that beginners might find useful. |

|

|

|

|

|

|

|

---

Current/Future Work:

1. Finish training with a "Structured Dataset". I have a .json file with a structured dataset for the Guanaco model, but it takes significantly longer to process in the Oobabooga webui.

2. Train the vanilla Llama 2 70B model with raw and structured data.

3. Merge the LoRA with the LLM so you don't need to load the LoRA separately.
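
For item 3, one possible route is PEFT's `merge_and_unload()`, which folds the adapter weights into the base model. This is a sketch under assumptions, not something that has been done in this repo yet: it assumes a PEFT-format adapter and an unquantized (fp16) base model, and the function name and all three path arguments are hypothetical.

```python
def merge_lora_into_base(base_model: str, lora_dir: str, output_dir: str) -> None:
    """Fold a LoRA adapter into its base model and save the merged result."""
    # Heavy imports are deferred so this file can be imported without them.
    import torch
    from peft import PeftModel
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Load the full-precision base weights (not a quantized variant).
    base = AutoModelForCausalLM.from_pretrained(base_model, torch_dtype=torch.float16)
    # Attach the adapter, then merge its weights into the base layers.
    merged = PeftModel.from_pretrained(base, lora_dir).merge_and_unload()
    merged.save_pretrained(output_dir)
    # Save the tokenizer alongside so the output folder is self-contained.
    AutoTokenizer.from_pretrained(base_model).save_pretrained(output_dir)
```

The merged folder can then be loaded like any other model, with no separate LoRA step. For a 70B model in fp16 the weights alone are roughly 140 GB, so this needs a machine with plenty of RAM and disk space.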

---

Use at your own risk. I am using this repo both to organize my results and to potentially help others with LoRA training.

This repo is not intended to provide medical information.

I want to thank and acknowledge the hard work of the people involved in the creation of the dataset and Guanaco models/LoRA! Your work is greatly appreciated <3