Sapphire 7B

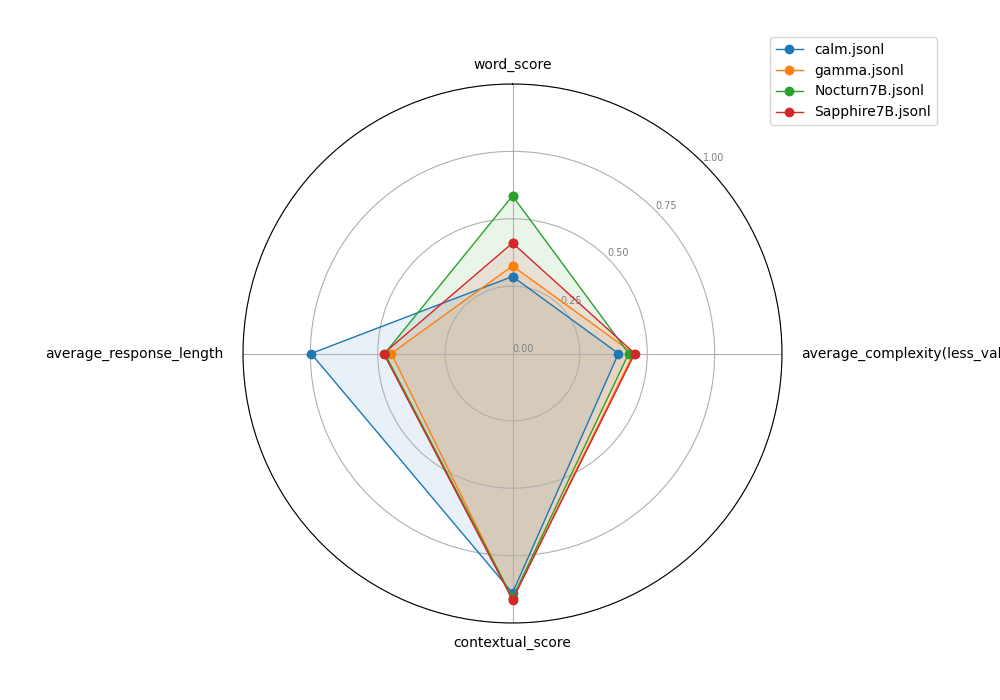

- word score: frequency of erotic words

- average complexity: measures the diversity of the model's output per sentence. If it's low, the model tends to be repetitive and/or monotonous.

- contextual score: how well the model's output agrees with the given context as a whole

- average response length: the mean length of the model's responses
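The card does not say how these metrics were computed, so the following is only a rough sketch of how similar quantities could be measured. All function names, the lexicon argument, and the character-level diversity ratio are assumptions made for illustration; they are not the author's evaluation code, and the contextual score is left out because nothing in the description suggests a concrete formula.

```python
from statistics import mean


def word_score(responses, lexicon):
    """Average number of lexicon-term occurrences per response.

    Hypothetical definition: the card only says "frequency of" certain words.
    """
    return mean(sum(r.count(term) for term in lexicon) for r in responses)


def average_response_length(responses):
    """Mean response length, measured in characters."""
    return mean(len(r) for r in responses)


def per_sentence_diversity(response, delimiter="。"):
    """Mean ratio of distinct characters to total characters per sentence.

    A stand-in for "average complexity": values near 0 suggest repetitive text.
    """
    sentences = [s for s in response.split(delimiter) if s]
    if not sentences:
        return 0.0
    return mean(len(set(s)) / len(s) for s in sentences)


if __name__ == "__main__":
    samples = ["猫が好き。猫が好き。", "犬"]
    print(word_score(samples, ["猫"]))
    print(average_response_length(samples))
    print(per_sentence_diversity(samples[0]))
```

Character-level counting is used here only because Japanese text has no whitespace word boundaries; a real evaluation would more likely rely on a morphological tokenizer.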

result sample: Sapphire7B.md

Model Description

This is a 7B-parameter decoder-only Japanese language model fine-tuned on novel datasets, built on top of the base model Japanese Stable LM Base Gamma 7B. Japanese Stable LM Instruct Gamma 7B

Usage

Ensure you are using Transformers 4.34.0 or newer.

```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

# Load the tokenizer and model weights from the Hugging Face Hub.
tokenizer = AutoTokenizer.from_pretrained("Elizezen/Sapphire-7B")
model = AutoModelForCausalLM.from_pretrained(
    "Elizezen/Sapphire-7B",
    torch_dtype="auto",
)
model.eval()

# Move the model to the GPU when one is available.
if torch.cuda.is_available():
    model = model.to("cuda")

# Prompt: "I am a cat. As yet I have no name."
input_ids = tokenizer.encode(
    "吾輩は猫である。名前はまだない",
    add_special_tokens=True,
    return_tensors="pt"
)

# Sample a continuation of up to 512 new tokens.
tokens = model.generate(
    input_ids.to(device=model.device),
    max_new_tokens=512,
    temperature=1,
    top_p=0.95,
    do_sample=True,
)

# Decode only the newly generated tokens, skipping the prompt.
out = tokenizer.decode(tokens[0][input_ids.shape[1]:], skip_special_tokens=True).strip()
print(out)
```
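The Transformers version requirement stated at the top of this section can be checked before loading the model. The sketch below uses only the standard library; the helper names `parse_release`, `meets_minimum`, and `check_transformers` are hypothetical and not part of Transformers, and the parsing only looks at the leading numeric part of the version string.

```python
import re
from importlib.metadata import PackageNotFoundError, version


def parse_release(v):
    """Turn '4.34.0' or '4.35.0.dev0' into a tuple of at least three integers."""
    match = re.match(r"\d+(?:\.\d+)*", v)
    parts = tuple(int(x) for x in match.group(0).split(".")) if match else ()
    # Pad short versions such as '4.34' so they compare equal to '4.34.0'.
    return parts + (0,) * (3 - len(parts))


def meets_minimum(installed, minimum="4.34.0"):
    """Return True if the installed version string is at least the minimum."""
    return parse_release(installed) >= parse_release(minimum)


def check_transformers(minimum="4.34.0"):
    """Return True if an installed transformers package satisfies the minimum."""
    try:
        installed = version("transformers")
    except PackageNotFoundError:
        return False
    return meets_minimum(installed, minimum)


if __name__ == "__main__":
    if not check_transformers():
        print("Please install transformers>=4.34.0")
```

Pre-release suffixes such as `.dev0` are ignored by this comparison, which is a simplification; the `packaging` library offers stricter version handling if it is available.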

Intended Use

The model is mainly intended for generating novels. It may be less capable at instruction-based responses. It handles both SFW and NSFW content.
