Hi everyone,

I am excited to introduce our latest work, LLaMAX. 😁😁😁

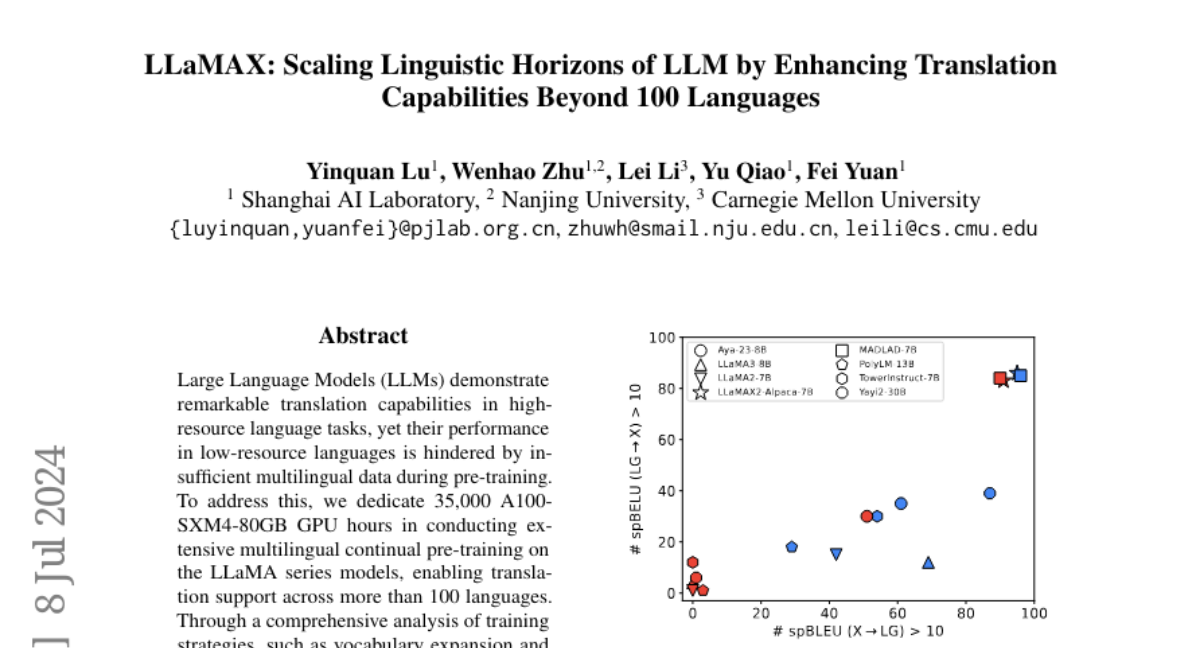

LLaMAX is a powerful language model created specifically for multilingual scenarios. Built upon Meta's LLaMA series of models, LLaMAX has been trained extensively on more than 100 languages.

Remarkably, it gains these multilingual capabilities without compromising its generalization ability, and it surpasses existing LLMs.

✨Highlights:

🎈 LLaMAX supports the 102 languages covered by Flores-101, and its performance in translating between low-resource languages far surpasses that of other decoder-only LLMs.

🎈 Even for languages not covered in Flores-200, LLaMAX still shows significant improvements in translation performance.

🎈 With only simple supervised fine-tuning (SFT) on English task data, LLaMAX demonstrates impressive multilingual transfer abilities in downstream tasks.

🎈 In our paper, we discuss effective methods for enhancing the multilingual capabilities of LLMs during the continued training phase.

We welcome you to use our model and provide feedback.

More Details:

🎉 Code: https://github.com/CONE-MT/LLaMAX/

🎉 Model: https://huggingface.co/LLaMAX/
