---
license: other
license_name: flux-1-dev-non-commercial-license
license_link: https://huggingface.co/black-forest-labs/FLUX.1-dev/blob/main/LICENSE.md
language:
- en
library_name: diffusers
pipeline_tag: text-to-image
tags:
- Text-to-Image
- IP-Adapter
- Flux.1-dev
- image-generation
- Stable Diffusion
base_model: black-forest-labs/FLUX.1-dev
---

# FLUX.1-dev-IP-Adapter

This repository contains an IP-Adapter for the FLUX.1-dev model, released by researchers from the [InstantX Team](https://huggingface.co/InstantX). The image prompt works just like a text prompt, so it may sometimes be unresponsive or interfere with other text in the prompt. We hope you enjoy this model; have fun and share your creative works with us [on Twitter](https://x.com/instantx_ai).

# Model Card

This is a standard IP-Adapter in which new layers are added to the 38 single-stream and 19 double-stream blocks. We use [google/siglip-so400m-patch14-384](https://huggingface.co/google/siglip-so400m-patch14-384) as the image encoder for its superior performance, and adopt a simple `MLPProjModel` of two linear layers to project the image features into image tokens. The number of image tokens is set to 128. The currently released model was trained on a 10M-scale open-source dataset with a batch size of 128 for 80K steps.
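The projection step can be illustrated with a small PyTorch module. This is a minimal, hypothetical sketch, not the released `MLPProjModel`: we assume the projector maps one pooled SigLIP embedding (1152-dimensional for so400m) to 128 tokens of width 4096 (FLUX.1-dev's text-embedding width), with a GELU between the two linear layers and a LayerNorm on each output token. The class name and all dimensions are assumptions.

```python
import torch
import torch.nn as nn


class MLPProjSketch(nn.Module):
    """Hypothetical projector: one image embedding -> num_tokens image tokens."""

    def __init__(self, image_embed_dim: int = 1152, target_dim: int = 4096, num_tokens: int = 128):
        super().__init__()
        self.num_tokens = num_tokens
        self.target_dim = target_dim
        # Two linear layers; the GELU in between is an assumed choice.
        self.proj = nn.Sequential(
            nn.Linear(image_embed_dim, image_embed_dim * 2),
            nn.GELU(),
            nn.Linear(image_embed_dim * 2, target_dim * num_tokens),
        )
        # Normalize each image token over its feature dimension.
        self.norm = nn.LayerNorm(target_dim)

    def forward(self, image_embeds: torch.Tensor) -> torch.Tensor:
        # image_embeds: (batch, image_embed_dim), e.g. a pooled SigLIP output
        x = self.proj(image_embeds)
        # Split the flat projection into num_tokens separate tokens.
        x = x.reshape(-1, self.num_tokens, self.target_dim)
        return self.norm(x)


if __name__ == "__main__":
    # Small dimensions so the demo runs instantly on CPU.
    proj = MLPProjSketch(image_embed_dim=32, target_dim=16, num_tokens=8)
    tokens = proj(torch.randn(2, 32))
    print(tokens.shape)
```

With the assumed full-size dimensions, the second linear layer alone outputs 128 × 4096 values per image, which is why a single pooled embedding can be expanded into many tokens with only two layers.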

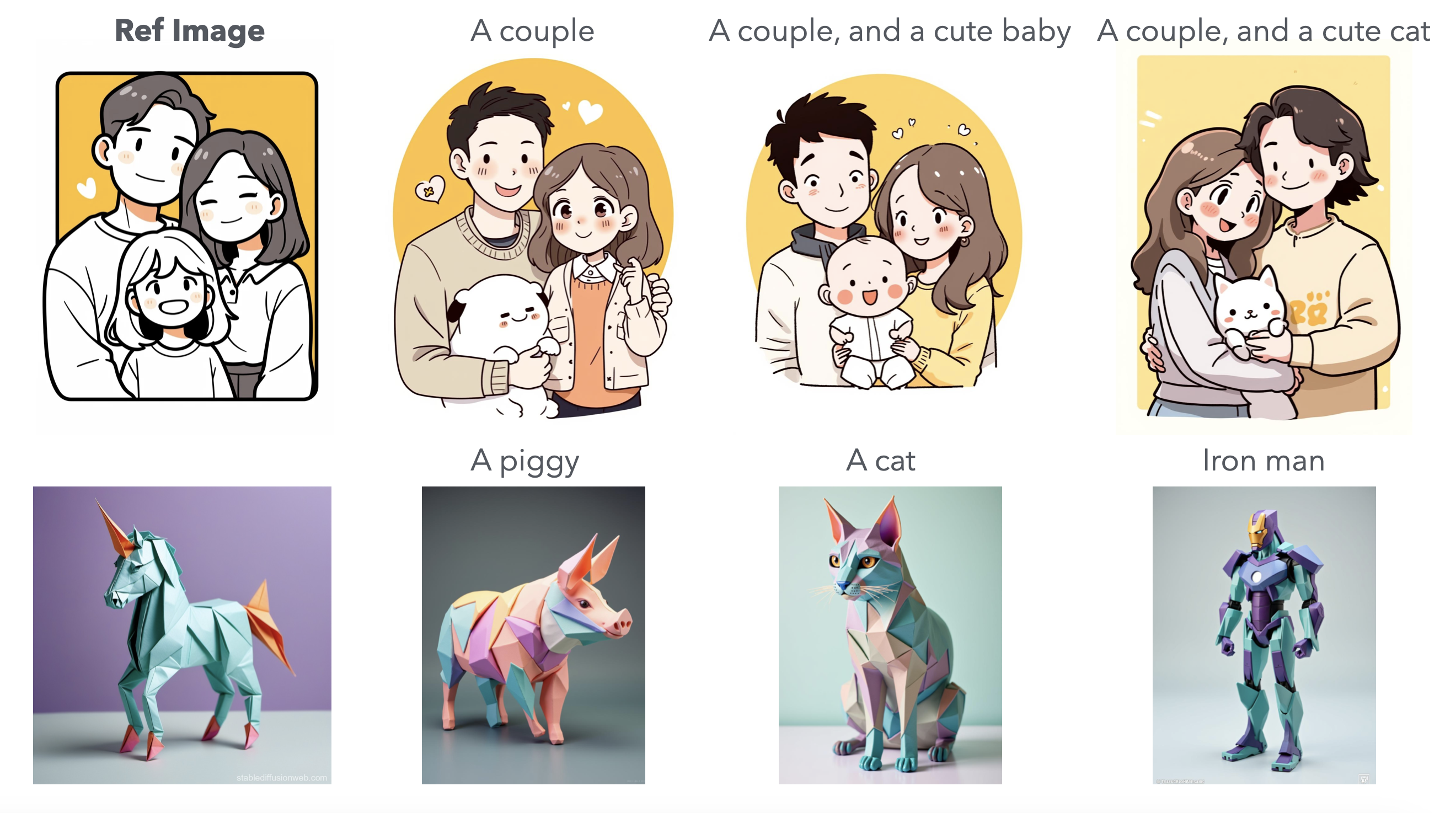

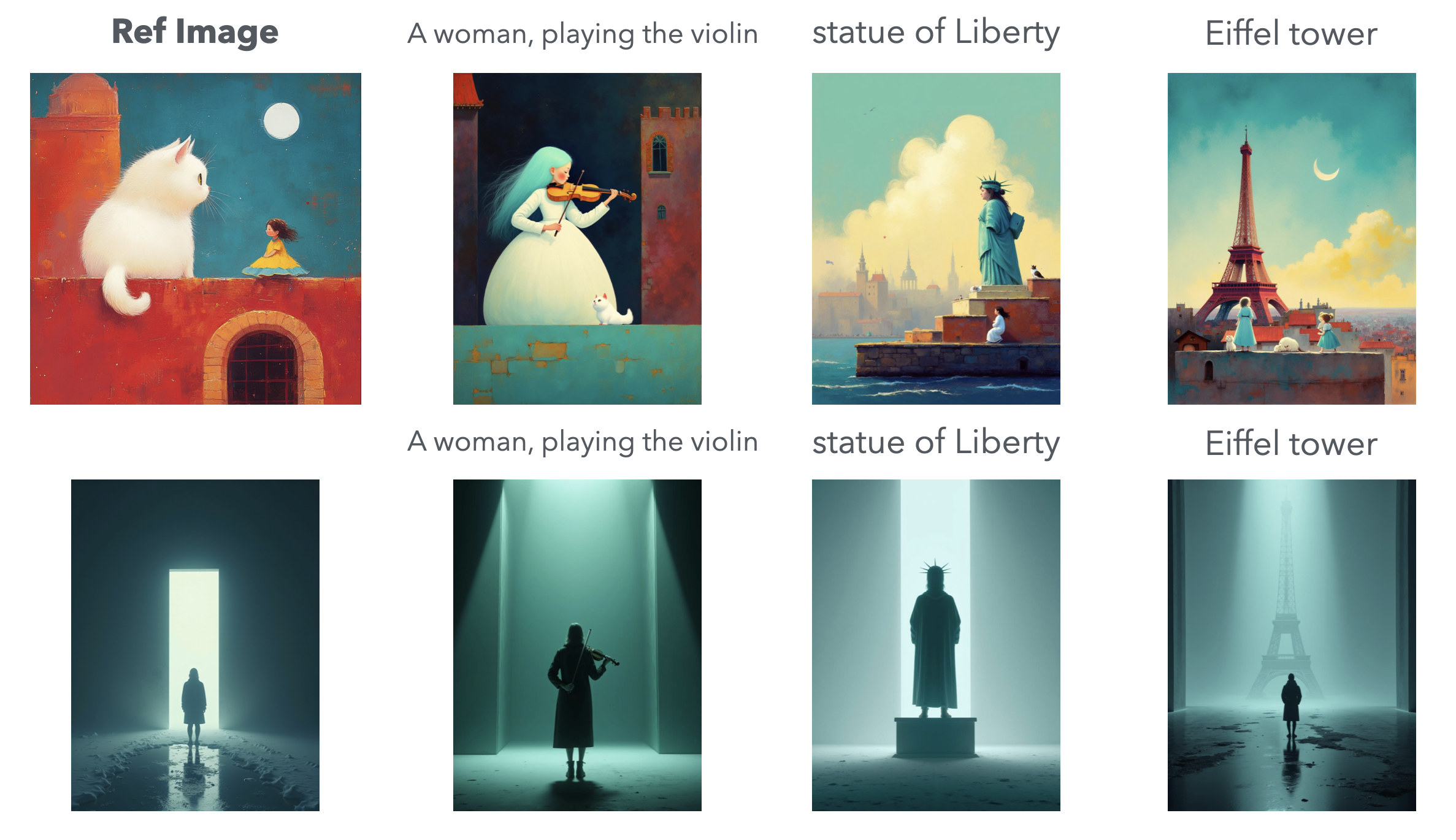

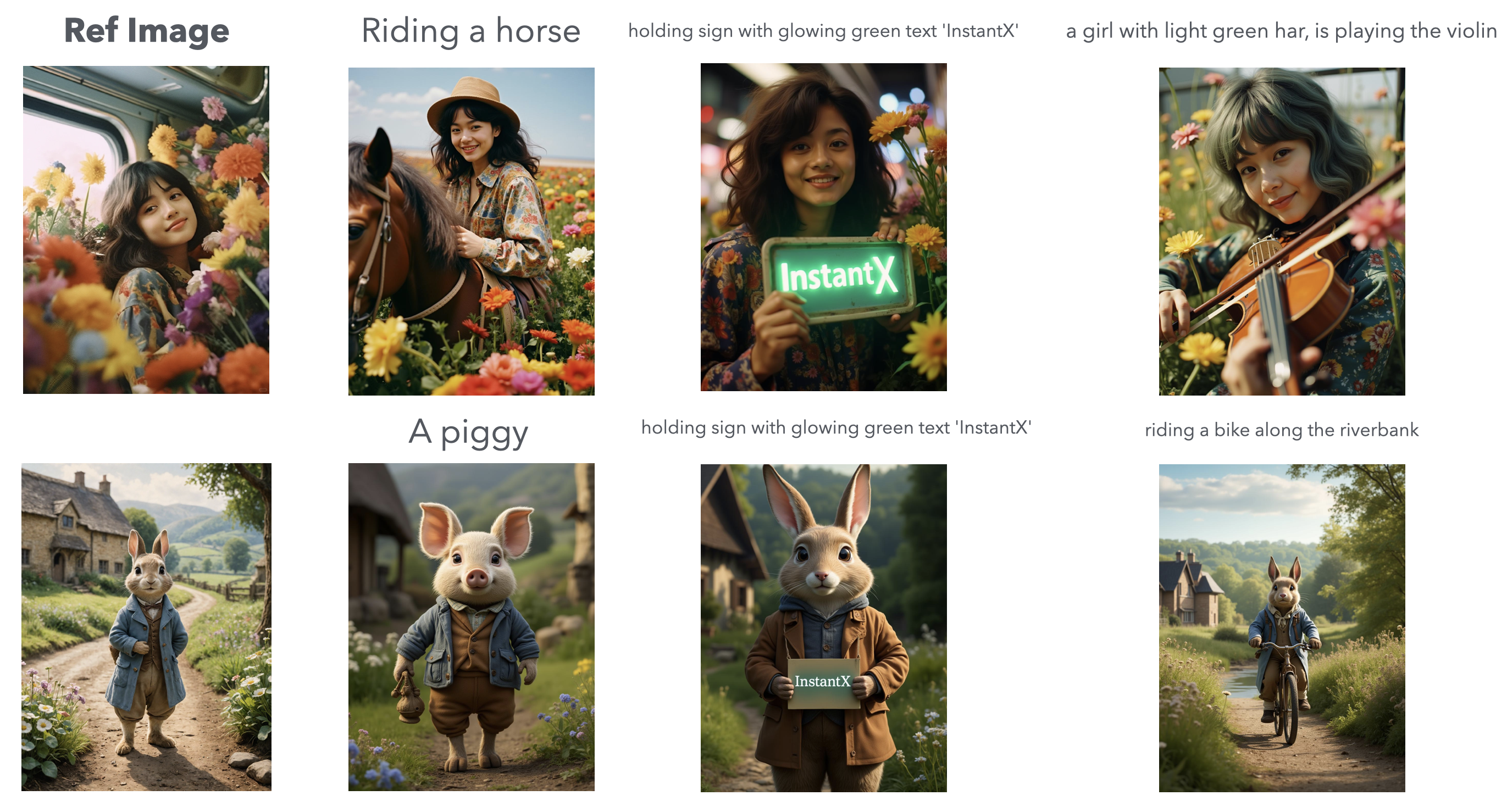

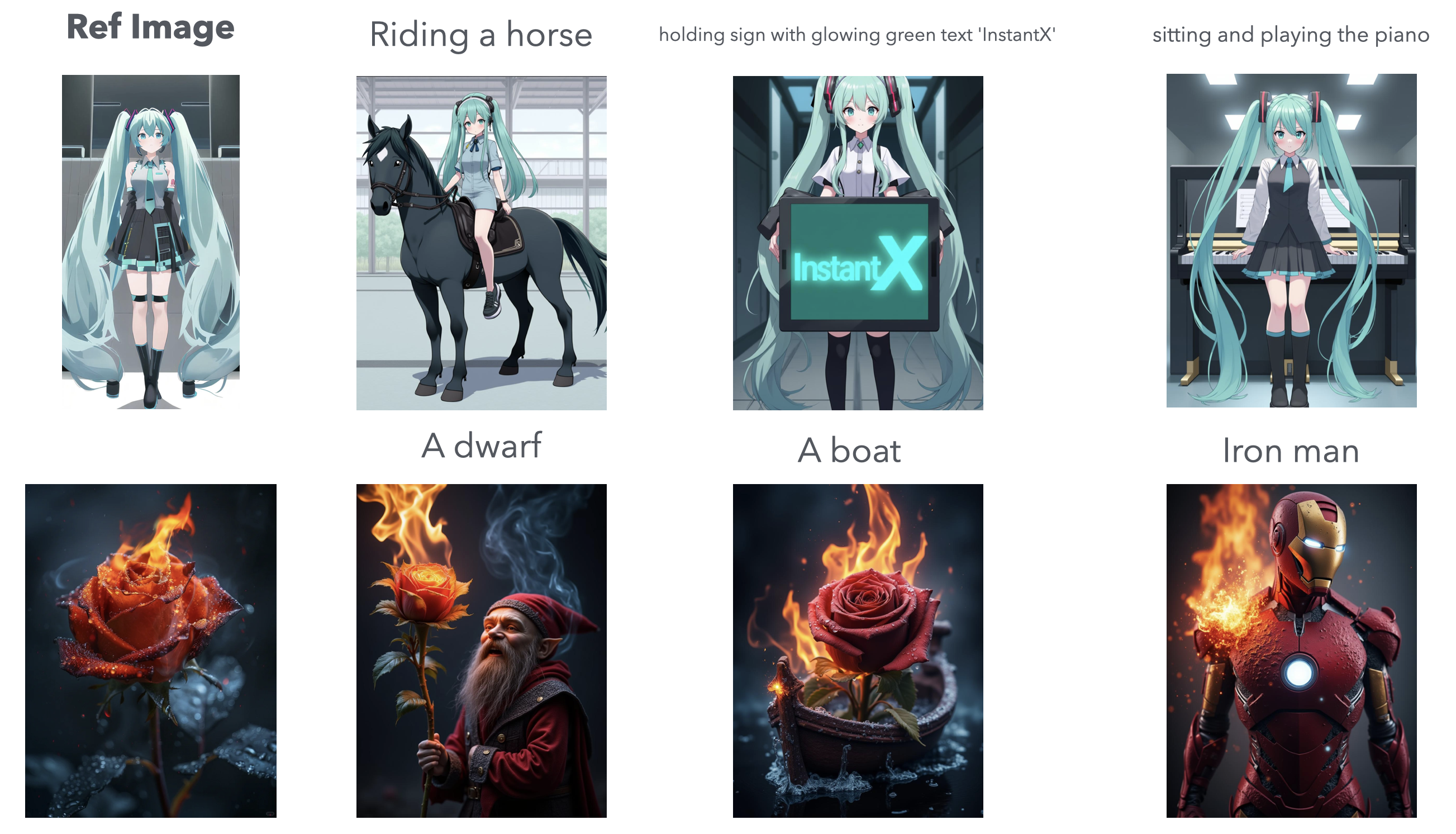

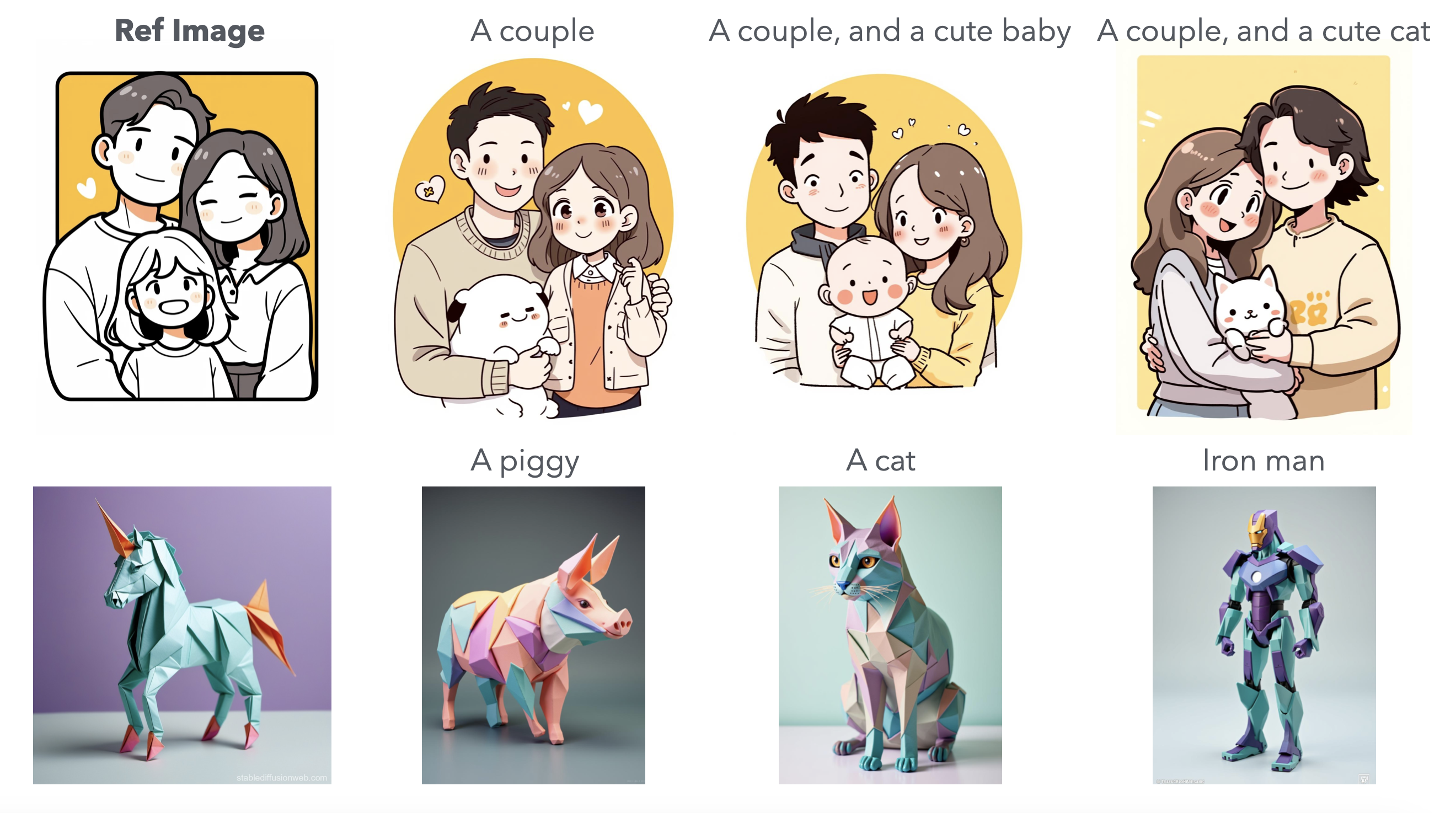

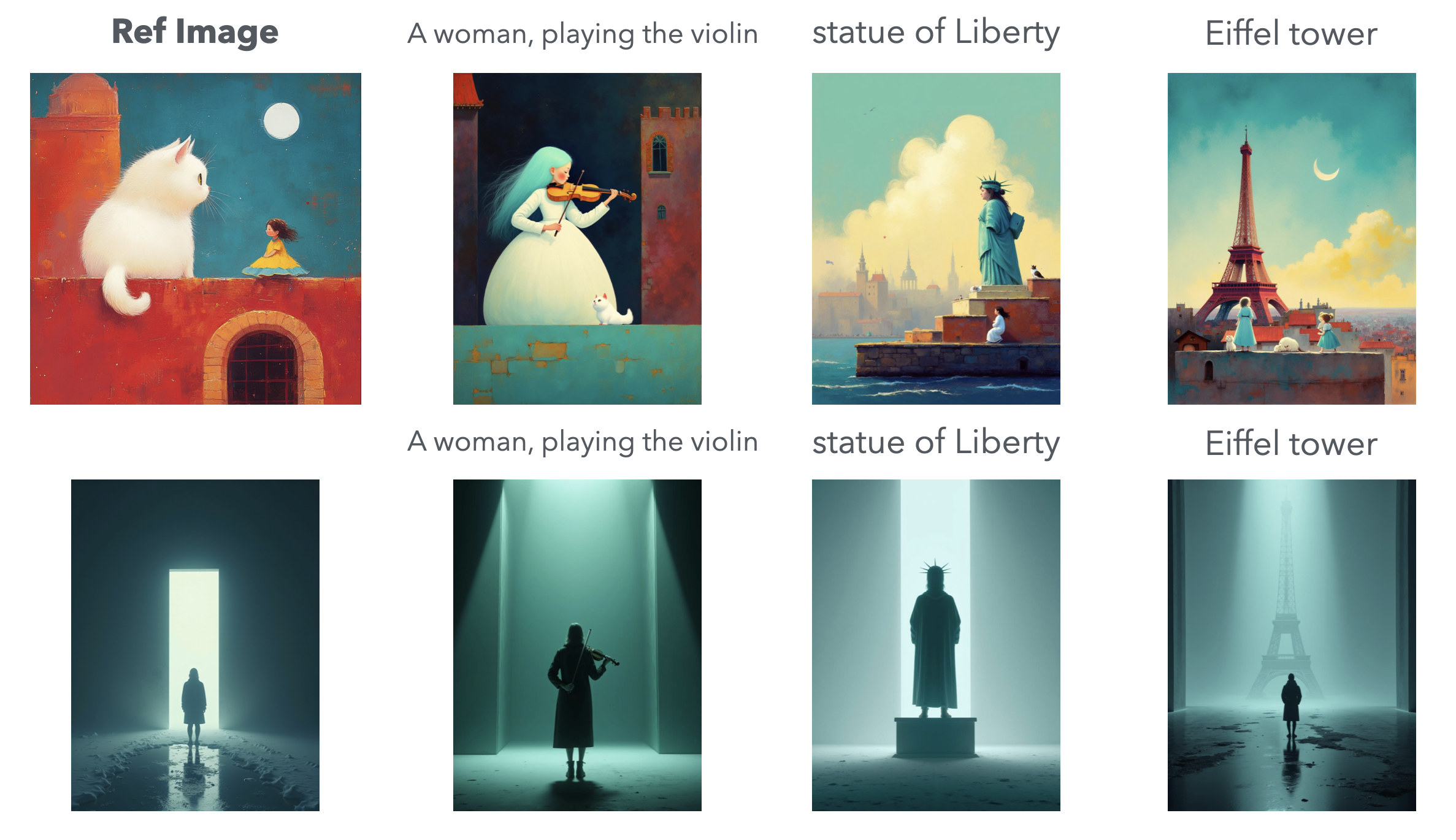

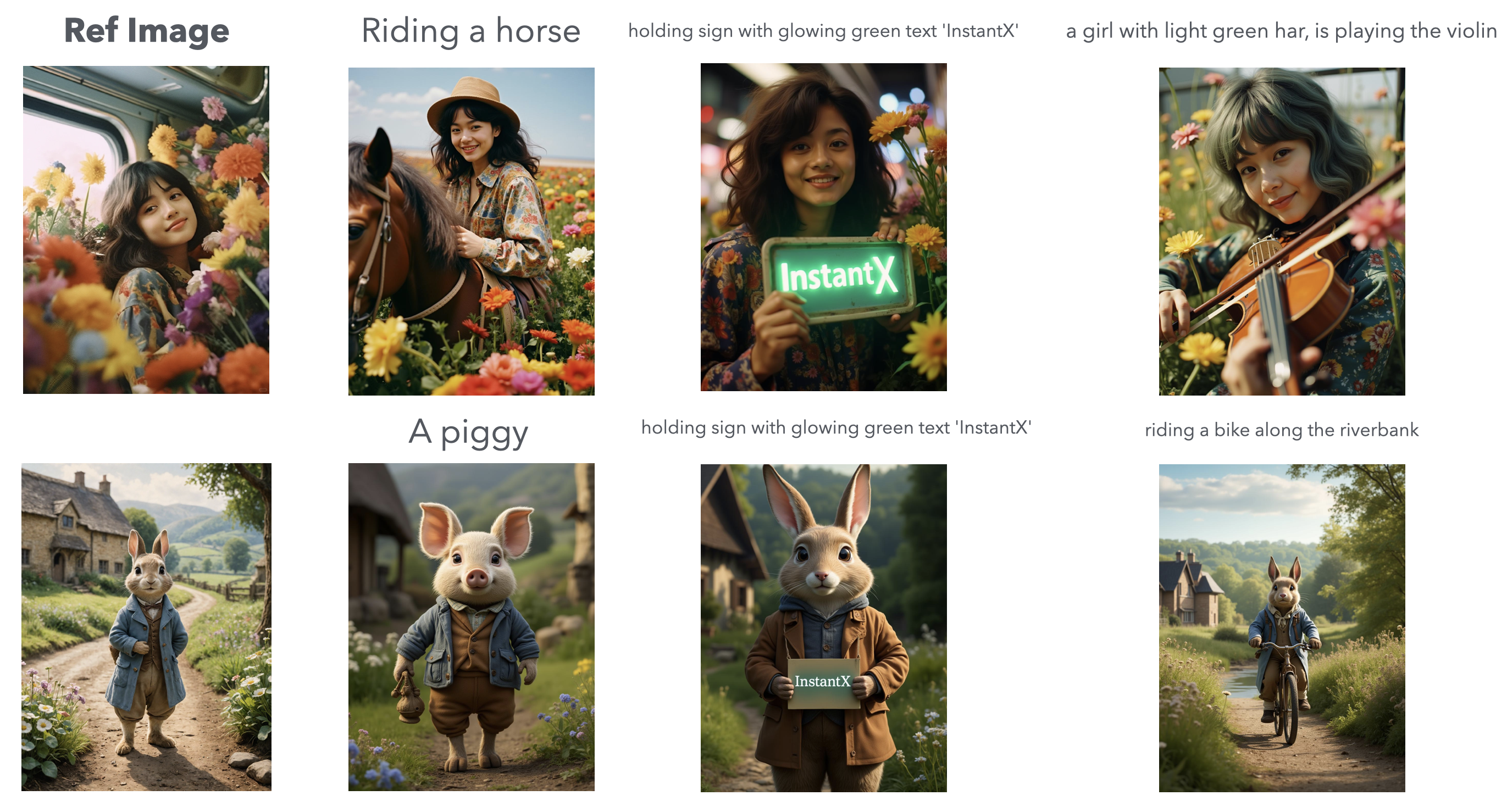

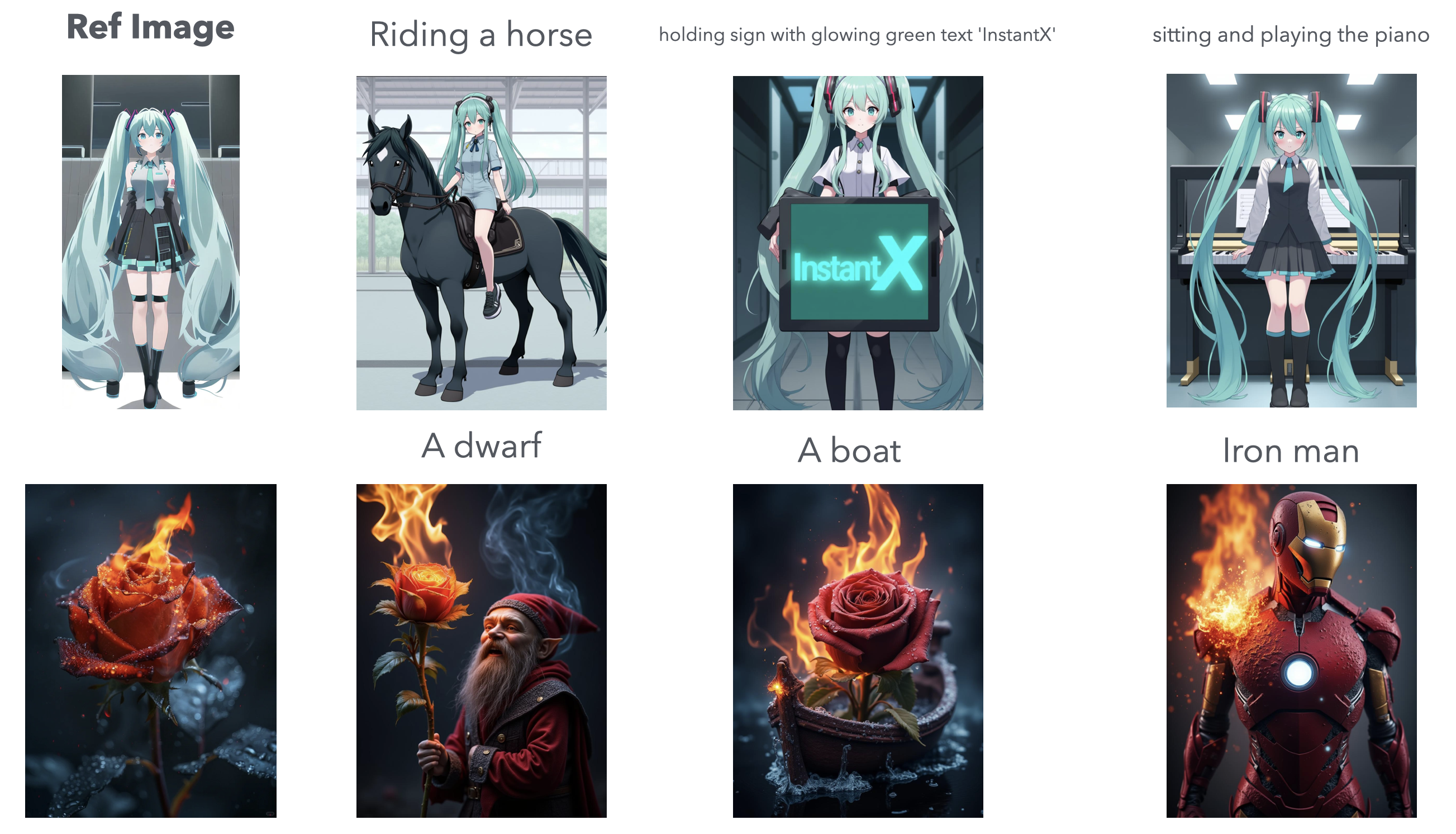

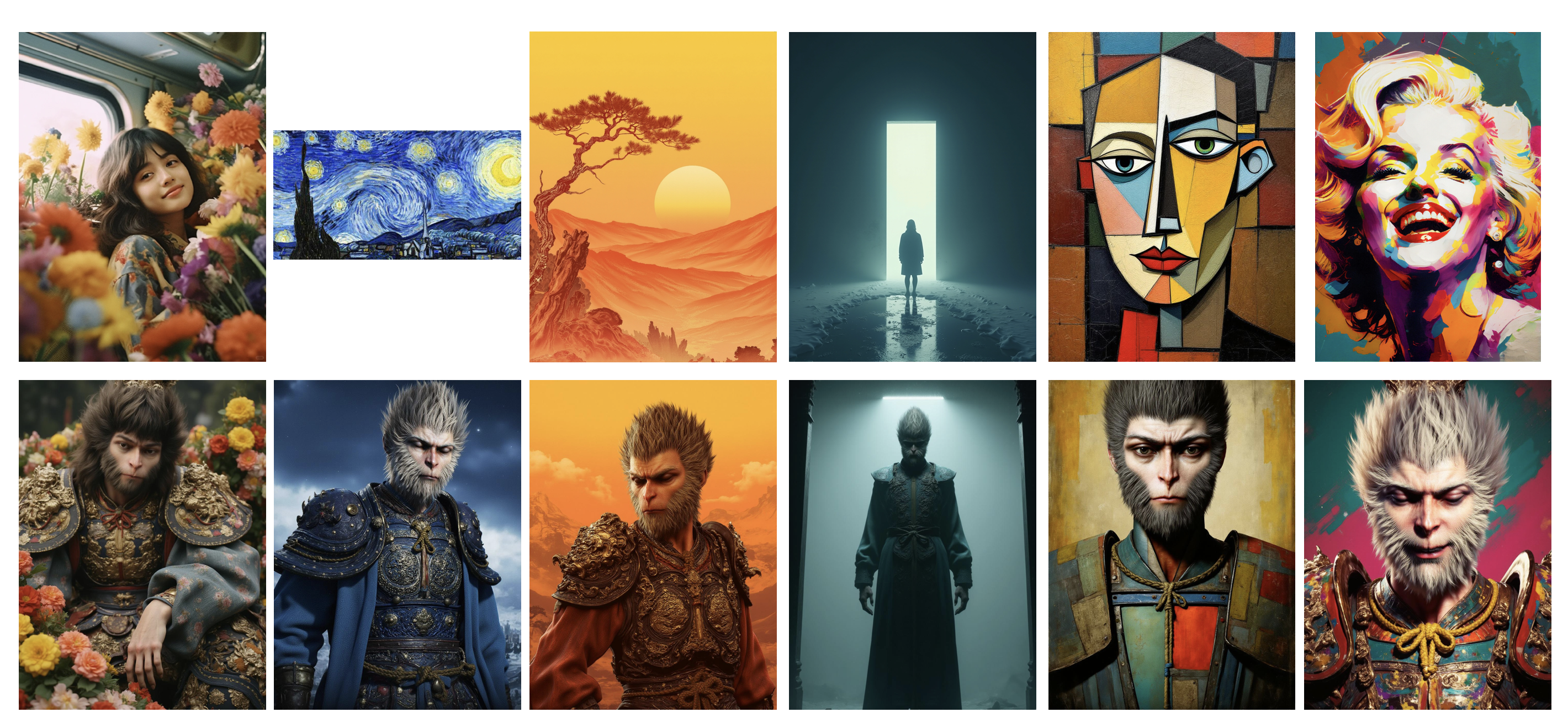

# Showcases

# Showcases (LoRA)

We adopt [Shakker-Labs/FLUX.1-dev-LoRA-collections](https://huggingface.co/Shakker-Labs/FLUX.1-dev-LoRA-collections) as a character LoRA and use its default prompt.

# Inference

The code has not been integrated into diffusers yet, so please use the local files in this repository for now.

```python
import os

import torch
from PIL import Image

# Local modules shipped with this repository (not yet part of diffusers).
from pipeline_flux_ipa import FluxPipeline
from transformer_flux import FluxTransformer2DModel
from infer_flux_ipa_siglip import resize_img, IPAdapter

image_encoder_path = "google/siglip-so400m-patch14-384"
ipadapter_path = "./ip-adapter.bin"

# Load the FLUX.1-dev transformer and pipeline in bfloat16.
transformer = FluxTransformer2DModel.from_pretrained(
    "black-forest-labs/FLUX.1-dev", subfolder="transformer", torch_dtype=torch.bfloat16
)
pipe = FluxPipeline.from_pretrained(
    "black-forest-labs/FLUX.1-dev", transformer=transformer, torch_dtype=torch.bfloat16
)

# Wrap the pipeline with the IP-Adapter (SigLIP image encoder, 128 image tokens).
ip_model = IPAdapter(pipe, image_encoder_path, ipadapter_path, device="cuda", num_tokens=128)

# Load and resize the reference image.
image_path = "./assets/images/2.jpg"
image_name = os.path.basename(image_path)
image = Image.open(image_path).convert("RGB")
image = resize_img(image)

prompt = "a young girl"

# `scale` weights the image-prompt branch relative to the text prompt.
images = ip_model.generate(
    pil_image=image,
    prompt=prompt,
    scale=0.7,
    width=960,
    height=1280,
    seed=42,
)

# Make sure the output directory exists before saving.
os.makedirs("results", exist_ok=True)
images[0].save(f"results/{image_name}")
```
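As a quick sanity check on the `width` and `height` values passed to `generate`, the sketch below counts how many image tokens the FLUX transformer processes at a given resolution. It assumes FLUX's 8× VAE downsampling followed by 2×2 patch packing, so each 16×16 pixel block becomes one token and both dimensions should be multiples of 16. The helper names are hypothetical and not part of this repository.

```python
def snap_to_multiple(size: int, multiple: int = 16) -> int:
    """Round a pixel dimension down to the nearest multiple (never below one multiple)."""
    return max(multiple, (size // multiple) * multiple)


def latent_token_count(width: int, height: int, patch: int = 16) -> int:
    """Image tokens seen by the transformer, assuming one token per 16x16 pixel block."""
    if width % patch or height % patch:
        raise ValueError(f"width and height must be multiples of {patch}")
    return (width // patch) * (height // patch)


print(latent_token_count(960, 1280))
print(snap_to_multiple(1000))
```

For the 960×1280 example this gives 60 × 80 = 4800 image tokens, compared with the 128 tokens contributed by the IP-Adapter's image prompt.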

# ComfyUI

Please refer to [ComfyUI-IPAdapter-Flux](https://github.com/Shakker-Labs/ComfyUI-IPAdapter-Flux).

# Online Inference

You can also enjoy this model at [Shakker AI](https://www.shakker.ai/aigenerator?controlnet=ip_adapter).

# Limitations

This model supports image references, but it is not designed for fine-grained style transfer or character consistency, which means there is a trade-off between content leakage and style transfer. We have not found properties in FLUX.1-dev (DiT-based) similar to those exploited by [InstantStyle](https://instantstyle.github.io/) (UNet-based). It may take several attempts to get satisfactory results. Furthermore, the currently released model may suffer from limited diversity and therefore cannot cover some styles or concepts.
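Because results depend on the balance between the reference image and the text prompt, one practical way to handle the "several attempts" is to sweep the adapter `scale` and the seed and compare the outputs. The sketch below reuses the `ip_model.generate` arguments from the inference example; `sweep_configs`, `run_sweep`, and the default scale and seed values are hypothetical choices, not recommended settings. In IP-Adapter-style models a lower `scale` usually weakens the reference image's influence, which can reduce content leakage at the cost of fidelity to the reference.

```python
from itertools import product


def sweep_configs(scales, seeds):
    """Return every (scale, seed) pair to try, lowest scale first."""
    return list(product(sorted(scales), seeds))


def run_sweep(ip_model, image, prompt, scales=(0.5, 0.6, 0.7, 0.8), seeds=(0, 1, 42)):
    """Generate one image per (scale, seed) pair.

    `ip_model` is assumed to be the IPAdapter object from the inference example.
    """
    results = {}
    for scale, seed in sweep_configs(scales, seeds):
        images = ip_model.generate(
            pil_image=image,
            prompt=prompt,
            scale=scale,
            width=960,
            height=1280,
            seed=seed,
        )
        results[(scale, seed)] = images[0]
    return results
```

Keying the results by `(scale, seed)` makes it easy to save them with descriptive filenames and compare them side by side.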

# License

The model is released under the [flux-1-dev-non-commercial-license](https://huggingface.co/black-forest-labs/FLUX.1-dev/blob/main/LICENSE.md). All rights reserved.

# Acknowledgements

This project is sponsored by [HuggingFace](https://huggingface.co/), [fal.ai](https://fal.ai/) and [Shakker Labs](https://huggingface.co/Shakker-Labs).

# Citation

If you find this project useful in your research, please cite us via

```bibtex
@misc{flux-ipa,
  author = {InstantX Team},
  title = {InstantX FLUX.1-dev IP-Adapter Page},
  year = {2024},
}
```