---

license: apache-2.0

pipeline_tag: image-text-to-text

---

If our project helps you, please give us a star ⭐ on GitHub and cite our paper!

## 📰 News

- **[2024.05.31]** 🔥 Our [code](https://github.com/LINs-lab/DynMoE/) is released!

- **[2024.05.25]** 🔥 Our **checkpoints** are available now!

- **[2024.05.23]** 🔥 Our [paper](https://arxiv.org/abs/2405.14297) is released!

## 😎 What's Interesting?

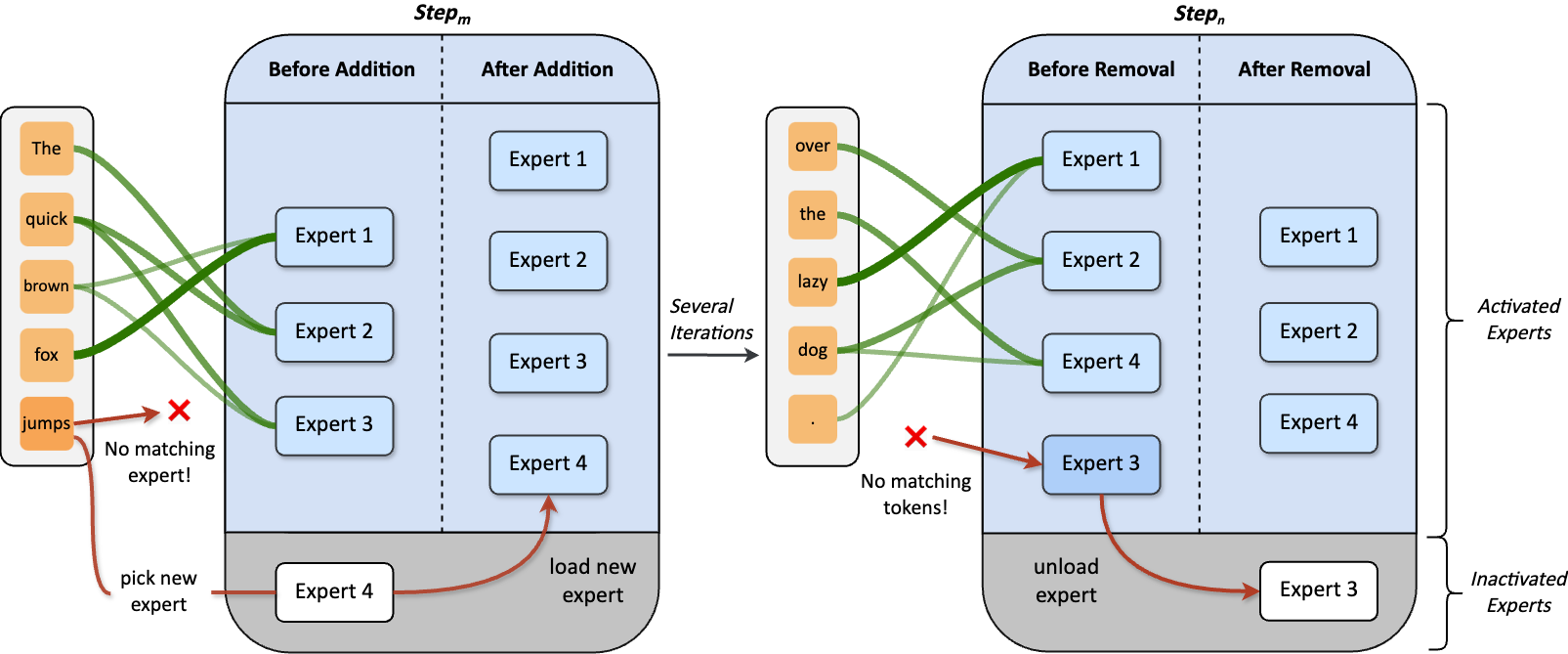

**Dynamic Mixture of Experts (DynMoE)** incorporates (1) a novel gating method that lets each token automatically determine the number of experts to activate, and (2) an adaptive process that automatically adjusts the number of experts during training.

### Top-Any Gating
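
As a rough illustration of how a token could "decide" how many experts to activate, here is a minimal pure-Python sketch. It assumes each expert has an embedding and its own threshold, and that a token activates every expert whose sigmoid-squashed cosine similarity exceeds that threshold. The function names, the example vectors, the thresholds, and the top-1 fallback are all assumptions made for this illustration, not the released implementation; please refer to the code repository for the actual gating.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def top_any_gate(token, expert_embeddings, thresholds):
    """Return the indices of all experts whose score exceeds their threshold.

    Assumption for this sketch: if no expert passes its threshold, fall back
    to the single best-scoring expert so the token is still processed.
    """
    scores = [sigmoid(cosine(token, e)) for e in expert_embeddings]
    active = [i for i, (s, t) in enumerate(zip(scores, thresholds)) if s > t]
    if not active:
        active = [max(range(len(scores)), key=scores.__getitem__)]
    return active


# Hypothetical toy data: three experts in a 2-D embedding space.
experts = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
thresholds = [0.6, 0.6, 0.6]
tokens = [[1.0, 0.0], [1.0, 1.0], [-0.2, -1.0]]

routes = [top_any_gate(t, experts, thresholds) for t in tokens]
avg_k = sum(len(r) for r in routes) / len(routes)
print(routes, avg_k)
```

In this toy run the tokens activate one, two, and one expert respectively, so the number of active experts varies per token and the average k is not an integer.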

### Adaptive Training Process
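
One way to picture adjusting the number of experts during training is the bookkeeping sketch below. It assumes routing statistics are collected over a window of training steps, that experts which were never activated are dropped, and that one new expert is added when some tokens activated no expert at all. The function `adapt_expert_pool`, its arguments, and this specific add/remove rule are hypothetical and only serve as an illustration; they should not be read as the released training procedure.

```python
def adapt_expert_pool(activation_counts, num_unrouted_tokens):
    """Decide which experts to keep and whether to add a new one.

    activation_counts: how many tokens activated each expert in the window.
    num_unrouted_tokens: how many tokens activated no expert in the window.
    (Both statistics and the rule below are assumptions of this sketch.)
    """
    # Keep only the experts that at least one token activated.
    kept = [i for i, count in enumerate(activation_counts) if count > 0]
    # Add capacity if some tokens found no suitable expert.
    add_new = num_unrouted_tokens > 0
    return kept, add_new


# Hypothetical statistics for a pool of four experts.
kept, add_new = adapt_expert_pool([120, 0, 45, 0], num_unrouted_tokens=7)
new_size = len(kept) + int(add_new)
print(kept, add_new, new_size)
```

Here two idle experts are removed and one is added, so the pool shrinks from four experts to three.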

## 💡 Model Details

- 🤔 DynMoE-Phi-2 is an MoE model with **dynamic top-k gating**, finetuned on [LanguageBind/MoE-LLaVA-Phi2-Stage2](https://huggingface.co/LanguageBind/MoE-LLaVA-Phi2-Stage2).

- 🚀 Our DynMoE-Phi-2-2.7B has 5.3B parameters in total, but **only 3.4B are activated!** (average top-k = 1.68)

- ⌛ With the DynMoE tuning stage, we can complete training on 8 A100 GPUs **within 2 days.**

## 👍 Acknowledgement

We are grateful for the following awesome projects:

- [tutel](https://github.com/microsoft/tutel)

- [DeepSpeed](https://github.com/microsoft/DeepSpeed)

- [GMoE](https://github.com/Luodian/Generalizable-Mixture-of-Experts)

- [EMoE](https://github.com/qiuzh20/EMoE)

- [MoE-LLaVA](https://github.com/PKU-YuanGroup/MoE-LLaVA)

- [GLUE-X](https://github.com/YangLinyi/GLUE-X)

## 🔒 License

This project is released under the Apache-2.0 license as found in the [LICENSE](https://huggingface.co/datasets/choosealicense/licenses/blob/main/markdown/apache-2.0.md) file.

## ✏️ Citation

```tex
@misc{guo2024dynamic,
      title={Dynamic Mixture of Experts: An Auto-Tuning Approach for Efficient Transformer Models},
      author={Yongxin Guo and Zhenglin Cheng and Xiaoying Tang and Tao Lin},
      year={2024},
      eprint={2405.14297},
      archivePrefix={arXiv},
      primaryClass={cs.LG}
}
```