| Model | Perplexity |

|---|

| English models |

| meta-llama/Llama-2-7b-hf | 24.3 |

| meta-llama/Llama-2-13b-hf | 21.4 |

| mistralai/Mistral-7B-v0.1 | 21.4 |

| TinyLlama/TinyLlama-1.1B | 40.4 |

| Polish models |

| sdadas/polish-gpt2-small | 134.4 |

| sdadas/polish-gpt2-medium | 100.8 |

| sdadas/polish-gpt2-large | 93.2 |

| sdadas/polish-gpt2-xl | 94.1 |

| Azurro/APT3-275M-Base | 129.8 |

| Azurro/APT3-500M-Base | 153.1 |

| Azurro/APT3-1B-Base | 106.8 |

| eryk-mazus/polka-1.1b | 18.1 |

| szymonrucinski/Curie-7B-v1 | 13.5 |

| Qra models |

| OPI-PG/Qra-1b | 14.7 |

| OPI-PG/Qra-7b | 11.3 |

| OPI-PG/Qra-13b | 10.5 |

### Long documents (2024)

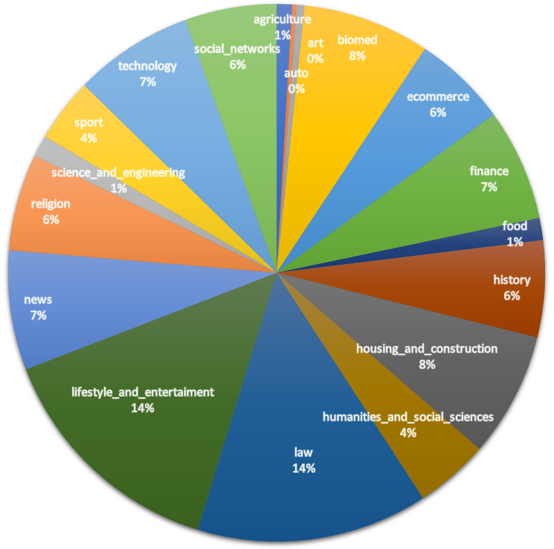

Currently, LLMs support contexts of thousands of tokens. Their practical applications usually also involve processing long documents. Therefore, evaluating perplexity on a sentence-based dataset such as PolEval-2018 may not be meaningful. Additionally, the PolEval corpus has been publicly available on the internet for the past few years, which raises the possibility that for some models the training sets have been contaminated by this data. For this reason, we have prepared a new collection consisting of long papers published exclusively in 2024, which will allow us to more reliably test the perplexities of the models on new knowledge that was not available to them at the time of training. The corpus consists of 5,000 documents ranging from several hundred to about 20,000 tokens. Half of the set consists of press texts from Polish news portals from February 2024, the other half are scientific articles published since January 2024. Most of the documents exceed the context size of the evaluated models. To calculate perplexity for these documents, we divided them into chunks of size equal to the model's context length with a stride of 512 tokens, following [this example](https://huggingface.co/docs/transformers/en/perplexity).