Commit d116b3f

1 Parent(s): dc6a982

Upload README.md with huggingface_hub

README.md ADDED

@@ -0,0 +1,59 @@

---
inference: false
license: llama2
---

# QuantFactory/vicuna-13b-v1.5-GGUF
This is a quantized version of [lmsys/vicuna-13b-v1.5](https://huggingface.co/lmsys/vicuna-13b-v1.5) created using llama.cpp.
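
As a minimal sketch of how one quantized file from this repository could be located, the snippet below builds a direct-download URL using the Hub's `resolve` URL pattern. The helper name `gguf_download_url` and the file name `vicuna-13b-v1.5.Q4_K_M.gguf` are hypothetical and used only for illustration; the actual file names should be taken from the repository's file list.

```python
from urllib.parse import quote

HUB = "https://huggingface.co"


def gguf_download_url(repo_id: str, filename: str, revision: str = "main") -> str:
    """Build the direct-download URL for one file in a Hub model repository."""
    # repo_id keeps its "owner/name" slash; revision and filename are URL-escaped.
    return f"{HUB}/{repo_id}/resolve/{quote(revision, safe='')}/{quote(filename)}"


# Hypothetical file name: check the repository's file list for the real ones.
url = gguf_download_url(
    "QuantFactory/vicuna-13b-v1.5-GGUF",
    "vicuna-13b-v1.5.Q4_K_M.gguf",
)
print(url)
```

The resulting URL can be passed to any HTTP download tool, and the downloaded `.gguf` file can then be loaded by a llama.cpp-compatible runtime.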

# Original Model Card

# Vicuna Model Card

## Model Details

Vicuna is a chat assistant trained by fine-tuning Llama 2 on user-shared conversations collected from ShareGPT.

- **Developed by:** [LMSYS](https://lmsys.org/)
- **Model type:** An auto-regressive language model based on the transformer architecture
- **License:** Llama 2 Community License Agreement
- **Finetuned from model:** [Llama 2](https://arxiv.org/abs/2307.09288)

### Model Sources

- **Repository:** https://github.com/lm-sys/FastChat
- **Blog:** https://lmsys.org/blog/2023-03-30-vicuna/
- **Paper:** https://arxiv.org/abs/2306.05685
- **Demo:** https://chat.lmsys.org/

## Uses

The primary use of Vicuna is research on large language models and chatbots.
The primary intended users of the model are researchers and hobbyists in natural language processing, machine learning, and artificial intelligence.

## How to Get Started with the Model

- Command line interface: https://github.com/lm-sys/FastChat#vicuna-weights
- APIs (OpenAI API, Huggingface API): https://github.com/lm-sys/FastChat/tree/main#api
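
When the GGUF file is run outside FastChat (for example, directly through llama.cpp), the prompt has to be formatted by hand. The sketch below assumes the conversation template that FastChat uses for Vicuna v1.1 and later: a system sentence, then `USER:` and `ASSISTANT:` turns, with `</s>` closing each completed assistant reply. This card does not state the template, so treat it as an assumption and check it against the FastChat repository. The function name `build_vicuna_prompt` is hypothetical.

```python
# Assumed system prompt of the Vicuna v1.1+ template (not stated in this card).
SYSTEM = (
    "A chat between a curious user and an artificial intelligence assistant. "
    "The assistant gives helpful, detailed, and polite answers to the user's questions."
)


def build_vicuna_prompt(turns, system=SYSTEM):
    """Format a conversation as a single prompt string.

    turns: list of (user_message, assistant_message) pairs; use None as the
    assistant message of the final pair to leave the reply open for the model.
    """
    prompt = system + " "
    for user, assistant in turns:
        prompt += f"USER: {user} "
        if assistant is None:
            # Open turn: the model continues generating from here.
            prompt += "ASSISTANT:"
        else:
            # Completed turn: close it with the end-of-sequence marker.
            prompt += f"ASSISTANT: {assistant}</s>"
    return prompt


single_turn = build_vicuna_prompt([("What is GGUF?", None)])
multi_turn = build_vicuna_prompt([("Hi", "Hello!"), ("What is GGUF?", None)])
print(single_turn)
```

The returned string is what would be passed as the raw prompt to the inference runtime; generation should stop when the model emits `</s>`.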

## Training Details

Vicuna v1.5 is fine-tuned from Llama 2 with supervised instruction fine-tuning.
The training data consists of around 125K conversations collected from ShareGPT.com.
For more details, see the "Training Details of Vicuna Models" section in the appendix of this [paper](https://arxiv.org/pdf/2306.05685.pdf).

|

| 50 |

+

|

| 51 |

+

## Evaluation

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

|

| 55 |

+

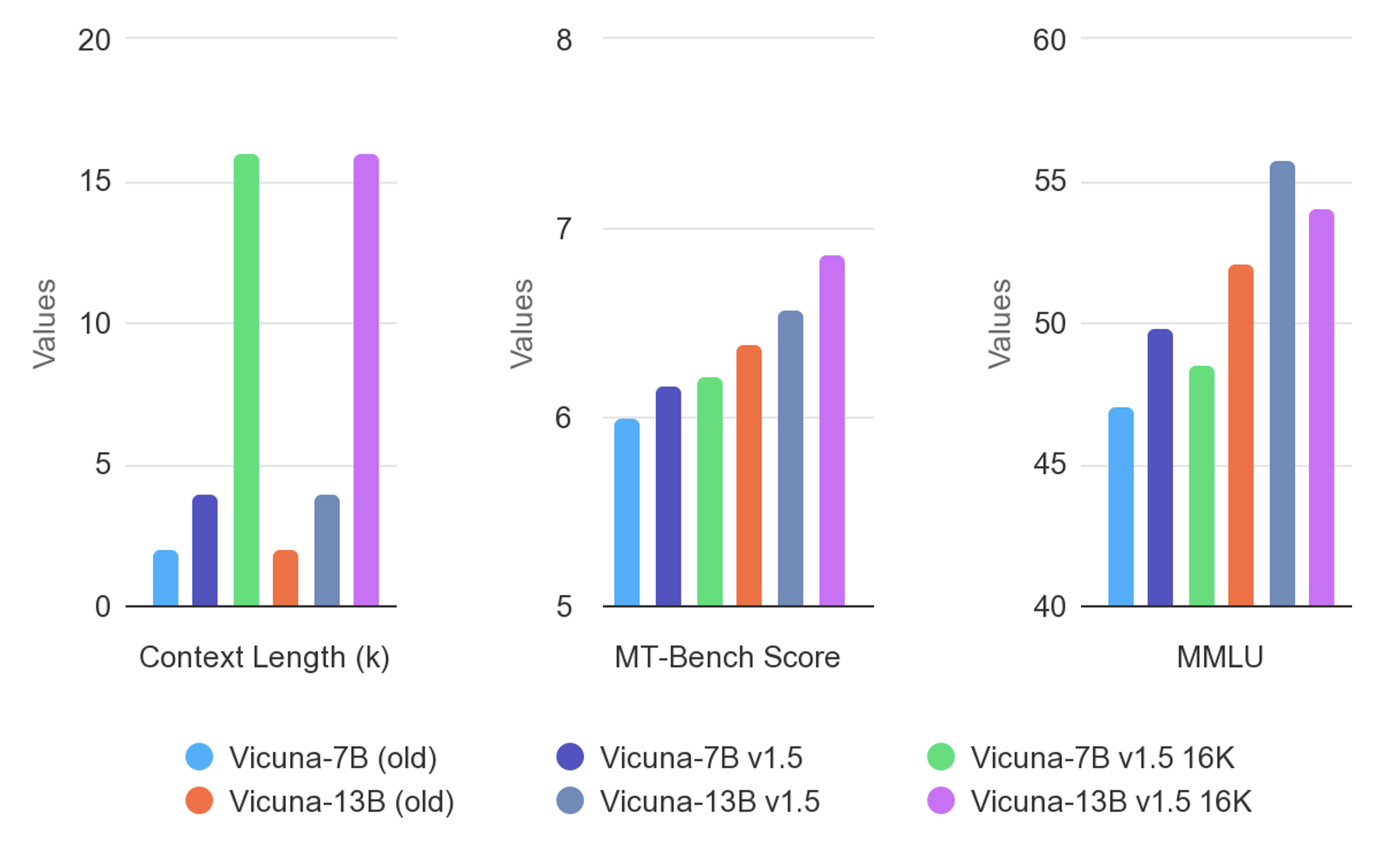

Vicuna is evaluated with standard benchmarks, human preference, and LLM-as-a-judge. See more details in this [paper](https://arxiv.org/pdf/2306.05685.pdf) and [leaderboard](https://huggingface.co/spaces/lmsys/chatbot-arena-leaderboard).

## Difference between different versions of Vicuna

See [vicuna_weights_version.md](https://github.com/lm-sys/FastChat/blob/main/docs/vicuna_weights_version.md)