
Quantization made by Richard Erkhov.

[Github](https://github.com/RichardErkhov)

[Discord](https://discord.gg/pvy7H8DZMG)

[Request more models](https://github.com/RichardErkhov/quant_request)

bloomz-3b-sft-chat - GGUF

- Model creator: https://huggingface.co/cmarkea/

- Original model: https://huggingface.co/cmarkea/bloomz-3b-sft-chat/

| Name | Quant method | Size |
| ---- | ---- | ---- |
| [bloomz-3b-sft-chat.Q2_K.gguf](https://huggingface.co/RichardErkhov/cmarkea_-_bloomz-3b-sft-chat-gguf/blob/main/bloomz-3b-sft-chat.Q2_K.gguf) | Q2_K | 1.52GB |
| [bloomz-3b-sft-chat.IQ3_XS.gguf](https://huggingface.co/RichardErkhov/cmarkea_-_bloomz-3b-sft-chat-gguf/blob/main/bloomz-3b-sft-chat.IQ3_XS.gguf) | IQ3_XS | 1.68GB |
| [bloomz-3b-sft-chat.IQ3_S.gguf](https://huggingface.co/RichardErkhov/cmarkea_-_bloomz-3b-sft-chat-gguf/blob/main/bloomz-3b-sft-chat.IQ3_S.gguf) | IQ3_S | 1.71GB |
| [bloomz-3b-sft-chat.Q3_K_S.gguf](https://huggingface.co/RichardErkhov/cmarkea_-_bloomz-3b-sft-chat-gguf/blob/main/bloomz-3b-sft-chat.Q3_K_S.gguf) | Q3_K_S | 1.71GB |
| [bloomz-3b-sft-chat.IQ3_M.gguf](https://huggingface.co/RichardErkhov/cmarkea_-_bloomz-3b-sft-chat-gguf/blob/main/bloomz-3b-sft-chat.IQ3_M.gguf) | IQ3_M | 1.81GB |
| [bloomz-3b-sft-chat.Q3_K.gguf](https://huggingface.co/RichardErkhov/cmarkea_-_bloomz-3b-sft-chat-gguf/blob/main/bloomz-3b-sft-chat.Q3_K.gguf) | Q3_K | 1.9GB |
| [bloomz-3b-sft-chat.Q3_K_M.gguf](https://huggingface.co/RichardErkhov/cmarkea_-_bloomz-3b-sft-chat-gguf/blob/main/bloomz-3b-sft-chat.Q3_K_M.gguf) | Q3_K_M | 1.9GB |
| [bloomz-3b-sft-chat.Q3_K_L.gguf](https://huggingface.co/RichardErkhov/cmarkea_-_bloomz-3b-sft-chat-gguf/blob/main/bloomz-3b-sft-chat.Q3_K_L.gguf) | Q3_K_L | 2.02GB |
| [bloomz-3b-sft-chat.IQ4_XS.gguf](https://huggingface.co/RichardErkhov/cmarkea_-_bloomz-3b-sft-chat-gguf/blob/main/bloomz-3b-sft-chat.IQ4_XS.gguf) | IQ4_XS | 2.0GB |
| [bloomz-3b-sft-chat.Q4_0.gguf](https://huggingface.co/RichardErkhov/cmarkea_-_bloomz-3b-sft-chat-gguf/blob/main/bloomz-3b-sft-chat.Q4_0.gguf) | Q4_0 | 2.08GB |
| [bloomz-3b-sft-chat.IQ4_NL.gguf](https://huggingface.co/RichardErkhov/cmarkea_-_bloomz-3b-sft-chat-gguf/blob/main/bloomz-3b-sft-chat.IQ4_NL.gguf) | IQ4_NL | 2.09GB |
| [bloomz-3b-sft-chat.Q4_K_S.gguf](https://huggingface.co/RichardErkhov/cmarkea_-_bloomz-3b-sft-chat-gguf/blob/main/bloomz-3b-sft-chat.Q4_K_S.gguf) | Q4_K_S | 2.09GB |
| [bloomz-3b-sft-chat.Q4_K.gguf](https://huggingface.co/RichardErkhov/cmarkea_-_bloomz-3b-sft-chat-gguf/blob/main/bloomz-3b-sft-chat.Q4_K.gguf) | Q4_K | 2.24GB |
| [bloomz-3b-sft-chat.Q4_K_M.gguf](https://huggingface.co/RichardErkhov/cmarkea_-_bloomz-3b-sft-chat-gguf/blob/main/bloomz-3b-sft-chat.Q4_K_M.gguf) | Q4_K_M | 2.24GB |
| [bloomz-3b-sft-chat.Q4_1.gguf](https://huggingface.co/RichardErkhov/cmarkea_-_bloomz-3b-sft-chat-gguf/blob/main/bloomz-3b-sft-chat.Q4_1.gguf) | Q4_1 | 2.25GB |
| [bloomz-3b-sft-chat.Q5_0.gguf](https://huggingface.co/RichardErkhov/cmarkea_-_bloomz-3b-sft-chat-gguf/blob/main/bloomz-3b-sft-chat.Q5_0.gguf) | Q5_0 | 2.43GB |
| [bloomz-3b-sft-chat.Q5_K_S.gguf](https://huggingface.co/RichardErkhov/cmarkea_-_bloomz-3b-sft-chat-gguf/blob/main/bloomz-3b-sft-chat.Q5_K_S.gguf) | Q5_K_S | 2.43GB |
| [bloomz-3b-sft-chat.Q5_K.gguf](https://huggingface.co/RichardErkhov/cmarkea_-_bloomz-3b-sft-chat-gguf/blob/main/bloomz-3b-sft-chat.Q5_K.gguf) | Q5_K | 2.55GB |
| [bloomz-3b-sft-chat.Q5_K_M.gguf](https://huggingface.co/RichardErkhov/cmarkea_-_bloomz-3b-sft-chat-gguf/blob/main/bloomz-3b-sft-chat.Q5_K_M.gguf) | Q5_K_M | 2.55GB |
| [bloomz-3b-sft-chat.Q5_1.gguf](https://huggingface.co/RichardErkhov/cmarkea_-_bloomz-3b-sft-chat-gguf/blob/main/bloomz-3b-sft-chat.Q5_1.gguf) | Q5_1 | 2.6GB |
| [bloomz-3b-sft-chat.Q6_K.gguf](https://huggingface.co/RichardErkhov/cmarkea_-_bloomz-3b-sft-chat-gguf/blob/main/bloomz-3b-sft-chat.Q6_K.gguf) | Q6_K | 2.8GB |
| [bloomz-3b-sft-chat.Q8_0.gguf](https://huggingface.co/RichardErkhov/cmarkea_-_bloomz-3b-sft-chat-gguf/blob/main/bloomz-3b-sft-chat.Q8_0.gguf) | Q8_0 | 3.62GB |

Original model description:

---
license: bigscience-bloom-rail-1.0
datasets:
- ehartford/wizard_vicuna_70k_unfiltered
- shahules786/orca-chat
- timdettmers/openassistant-guanaco
- laion/OIG
language:
- fr
- en
library_name: transformers
pipeline_tag: text-generation
inference:
  parameters:
    max_new_tokens: 128
widget:
- text: </s>Bonjour, qui es-tu ?<s>
- text: </s>Hello, who are you?<s>
---

bloomz-3b-sft-chat
--------------------

We introduce the bloomz-3b-sft-chat model, a fine-tuned version of the Large Language Model (LLM) [bigscience/bloomz-3b](https://huggingface.co/bigscience/bloomz-3b). The model is notable for two things: it was pre-trained for a chatbot context, and it was converted from float16 to bfloat16. It therefore serves as a solid starting point for fine-tuning on more specific tasks.

The model was trained on equal amounts of French and English data to maximize its performance in these two languages and across them. Because of the conversion from float16 to bfloat16, we do not guarantee that the original model's multilingual capabilities are preserved. However, fine-tuning can restore reasonable performance in other languages.
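The float16-to-bfloat16 change can be illustrated numerically. Both formats are 16 bits wide, but float16 has 5 exponent bits and 10 mantissa bits, while bfloat16 has 8 exponent bits and 7 mantissa bits. The conversion therefore trades precision for range.

The plain-Python sketch below emulates bfloat16 by rounding a float32 to its upper 16 bits (round-to-nearest-even). Note the limits of this sketch:
- The helper names `to_bfloat16` and `to_float16` are hypothetical.
- NaN inputs are not handled.
- This is not the authors' conversion code.

```python
import struct

def to_bfloat16(x: float) -> float:
    """Round x to the nearest bfloat16 value (ties to even); NaN is not handled."""
    # Reinterpret the float32 encoding of x as a 32-bit unsigned integer.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # bfloat16 keeps the upper 16 bits; the bias makes the truncation round to nearest even.
    bias = 0x7FFF + ((bits >> 16) & 1)
    bits = ((bits + bias) >> 16) << 16
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFFFFFF))[0]

def to_float16(x: float) -> float:
    """Round x to the nearest float16 value using struct's half-precision format."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

w = 1 + 2**-10               # exactly representable in float16 (10 mantissa bits)
print(to_float16(w))         # 1.0009765625
print(to_bfloat16(w))        # 1.0: the low mantissa bit is lost in bfloat16
print(to_bfloat16(70000.0))  # 70144.0: above float16's maximum (65504), but within bfloat16's range
```

A weight stored exactly in float16 can thus be rounded when moved to bfloat16. Meanwhile, the wider exponent range removes float16's overflow limit.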

The goal of pre-training all three models (Bloomz-{560m, 3b, 7b1-mt}-sft-chat) is to provide high-performing, energy-efficient, and fast "foundation" models for inference on "realistic" infrastructure, that is, infrastructure within reach of a business with standard industrial capabilities.

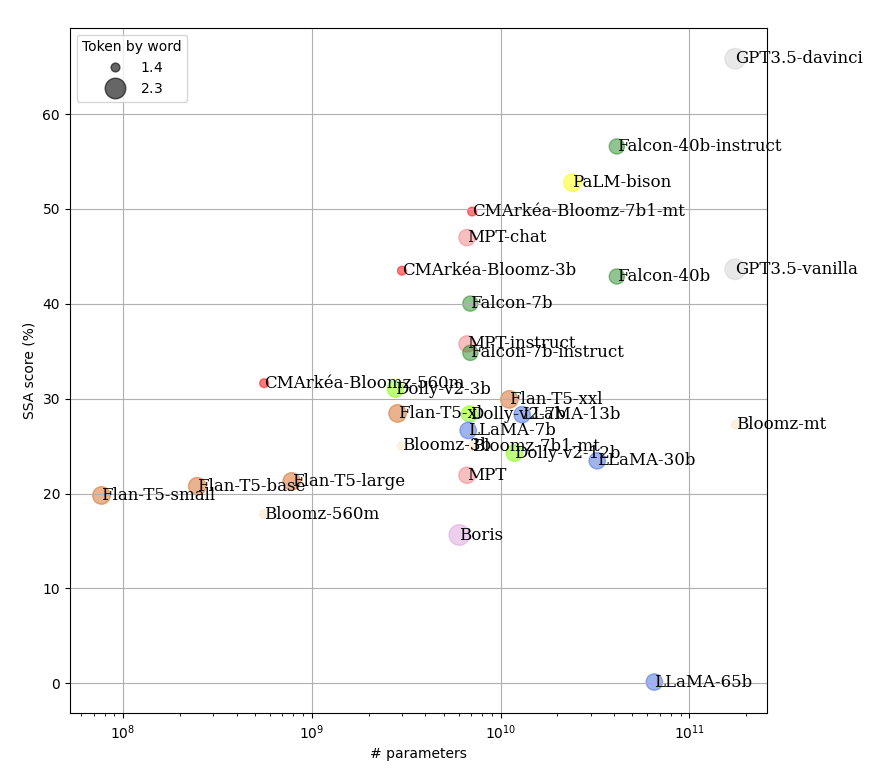

Through its license, Bloomz allows free and flexible industrial use. Its tokenizer was designed with a truly multilingual context in mind and produces significantly fewer tokens per word than other LLMs. This improves performance and also makes inference more efficient: fewer model calls are needed to generate a given text, and contexts are shorter. The following table illustrates this for French, using the tokenization of Marcel Proust's longest sentence (823 words):

```
Sans honneur que précaire, sans liberté que provisoire, [...], et de façon qu’à eux-mêmes il ne leur paraisse pas un vice.
```

| Model | GPT 3.5 | Boris | Flan-T5 | LLaMA | Dolly | MPT | Falcon | Bloomz |
|:--------------:|:-------:|:-----:|:-------:|:-----:|:-----:|:---:|:------:|:------:|
| Tokens per word | 2.3 | 2.3 | 2 | 1.9 | 1.9 | 1.9 | 1.8 | 1.4 |

For comparison, a specialized French tokenizer such as [CamemBERT](https://huggingface.co/camembert/camembert-base) or [DistilCamemBERT](https://huggingface.co/cmarkea/distilcamembert-base) yields 1.5 tokens per word. Beyond the positive impact on inference time and resource consumption, it has already been [shown that there is a direct relationship](https://arxiv.org/abs/2012.15613) between the number of tokens per word a model requires and its predictive performance.
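The tokens-per-word figures above are the number of tokens divided by the number of words. The sketch below makes two assumptions:
- Words are whitespace-delimited. The card does not say how the 823 words were counted.
- The tokenizer is any callable that returns a list of tokens.

`tokens_per_word` and `toy_tokenize` are hypothetical names used only for illustration.

```python
from typing import Callable, List

def tokens_per_word(text: str, tokenize: Callable[[str], List[str]]) -> float:
    """Average number of tokens produced per whitespace-delimited word."""
    words = text.split()
    if not words:
        raise ValueError("text contains no words")
    return len(tokenize(text)) / len(words)

# Toy tokenizer for illustration only: splits every word into 2-character pieces.
def toy_tokenize(text: str) -> List[str]:
    return [w[i:i + 2] for w in text.split() for i in range(0, len(w), 2)]

print(tokens_per_word("deep learning models", toy_tokenize))  # 3.0 (9 pieces / 3 words)
```

With a Hugging Face tokenizer, the bound method `AutoTokenizer.from_pretrained("bigscience/bloomz-3b").tokenize` can be passed as `tokenize`. For scale, the 823-word sentence comes to about 1,150 tokens at 1.4 tokens per word, versus about 1,890 tokens at 2.3 tokens per word.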

Dataset
-------

After evaluating a substantial number of modeling approaches, we observed that the most effective pre-training for zero-shot use cases is pre-training in a chatbot context. This internal study focused specifically on French. We therefore trained the model on a dataset of 0.9 billion tokens, made up of dialogues between an individual and a third party. To balance the French and English data, we used the Google Translate API.

Training
--------

The table below summarizes the hardware used for training, the training time, and the average inference speed on the target hardware, in tokens per second:

| Model | Architecture | Training time (h) | Inference speed (tokens per second) |
|:----------------------:|:-------------:|:-----------------:|:-----------------------------------:|
| [bloomz-560m-sft-chat](https://huggingface.co/cmarkea/bloomz-560m-sft-chat) | 1 x A100 40GB | 41 | 29 |
| [bloomz-3b-sft-chat](https://huggingface.co/cmarkea/bloomz-3b-sft-chat) | 1 x A100 40GB | 140 | 13 |
| [bloomz-7b1-mt-sft-chat](https://huggingface.co/cmarkea/bloomz-7b1-mt-sft-chat) | 4 x A100 40GB | 268 | 8 |

| Hyperparameter | Value |
|:---------------------:|:----------:|
| label smoothing | 0.05 |
| optimizer | AdamW |
| betas | 0.9, 0.999 |
| learning rate | 1e-5 |
| anneal strategy | cos |
| div factor | 100 |
| final div factor | 0.1 |
| batch size | 4 |
| gradient accumulation | 100 |
| max length | 1500 |
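The scheduler entries (anneal strategy, div factor, final div factor) match the parameter names of PyTorch's `torch.optim.lr_scheduler.OneCycleLR`. Assuming that scheduler was used with the learning rate above as its peak (`max_lr`), the schedule would behave as follows:
- It starts at 1e-5 / 100 = 1e-7.
- It rises to 1e-5.
- It then anneals to 1e-7 / 0.1 = 1e-6.

The pure-Python sketch below re-implements that two-phase cosine shape and also computes the effective batch implied by the table. Two assumptions apply:
- The warm-up fraction `pct_start=0.3` is PyTorch's default, because the card does not give it.
- `one_cycle_lr` is a hypothetical helper, not the authors' training code.

```python
import math

# Values from the hyperparameter table above.
BATCH_SIZE, GRAD_ACCUM, MAX_LEN = 4, 100, 1500
MAX_LR, DIV_FACTOR, FINAL_DIV_FACTOR = 1e-5, 100.0, 0.1

# Gradients of GRAD_ACCUM micro-batches are accumulated before each optimizer step.
effective_batch = BATCH_SIZE * GRAD_ACCUM          # 400 sequences per update
max_tokens_per_update = effective_batch * MAX_LEN  # at most 600,000 tokens per update

def one_cycle_lr(step: int, total_steps: int, pct_start: float = 0.3) -> float:
    """Learning rate of a two-phase cosine one-cycle schedule (OneCycleLR-style)."""
    initial_lr = MAX_LR / DIV_FACTOR          # 1e-7
    min_lr = initial_lr / FINAL_DIV_FACTOR    # 1e-6
    warmup_end = pct_start * total_steps - 1  # last step of the warm-up phase
    last_step = total_steps - 1

    def cos_anneal(start: float, end: float, pct: float) -> float:
        # Cosine interpolation: returns `start` at pct=0 and `end` at pct=1.
        return end + (start - end) / 2.0 * (math.cos(math.pi * pct) + 1)

    if step <= warmup_end:
        return cos_anneal(initial_lr, MAX_LR, step / warmup_end)
    return cos_anneal(MAX_LR, min_lr, (step - warmup_end) / (last_step - warmup_end))

print(effective_batch, max_tokens_per_update)  # 400 600000
print(one_cycle_lr(0, 1000), one_cycle_lr(299, 1000), one_cycle_lr(999, 1000))
```

Note that the final div factor of 0.1 is below 1. As a result, the schedule ends ten times higher than it starts, rather than decaying below the initial rate.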

Experiments
----------------

Since the model was trained only on English and French corpora, its performance cannot be guaranteed in other languages. This degradation in other languages is also due to the change of the model's data type from float16 to bfloat16. The conversation example below illustrates this point:

| French | English | Spanish |
|:---:|:---:|:---:|
| **human:** C'est quoi la première guerre mondiale ?<br>**bot:** La première guerre mondiale a commencé en 1914 et s'est terminée en 1918. C'était une guerre mondiale qui a impliqué de nombreux pays et de nombreuses personnes.<br>**human:** Quels pays ?<br>**bot:** La première guerre mondiale a été déclenchée par la Russie, l'Autriche-Hongrie, la France, la Grande-Bretagne, les États-Unis et l'Allemagne.<br>**human:** Comment cela s'est-il fini et quels ont été ses impacts ?<br>**bot:** La première guerre mondiale s'est terminée en 1918. Il y a eu beaucoup de morts et de destruction.<br>**human:** Combien de mort ?<br>**bot:** Il y a eu environ 100 millions de morts.<br>**human:** Tu es certain de ce chiffre, car ça me parait beaucoup, non ?<br>**bot:** Je suis sûr de ce chiffre. | **human:** What is the first world war?<br>**bot:** The first world war was fought between 1914 and 1918.<br>**human:** Who were the belligerents?<br>**bot:** The belligerents were the United Kingdom, France, Russia, Austria-Hungary, Serbia, and Montenegro.<br>**human:** How did it end and what were its impacts?<br>**bot:** The war ended with the Treaty of Versailles in 1919.<br>**human:** How many deaths?<br>**bot:** The war killed about 100 million people.<br>**human:** Are you sure about this number, because it seems like a lot to me, right?<br>**bot:** I’m not sure, but I think it’s a good estimate. | **human:** ¿Qué es la Primera Guerra Mundial?<br>**bot:** La Primera Guerra Mundial fue un conflicto armado que duró de 1914 a 1918<br>**human:** ¿En qué países participamos?<br>**bot:** La Primera Guerra Mundial fue un conflicto armado que duró de 1914 a 1918. |

Evaluating generative models is challenging, and quantitative measures do not always provide the most relevant insight for this type of approach. We therefore conducted a qualitative performance study, assessing the relevance of model responses to a pool of French questions through blind evaluation. The evaluation metric is the average of two criteria, response accuracy and completeness, similar to what the [SSA metric](https://arxiv.org/abs/2001.09977) aims to capture. Note that this measure lacks rigorous scientific validity for two reasons. First, the test set contains only a limited number of questions. Second, the evaluators were a few individuals with similar socio-demographic characteristics. The prompts take the same format for all models:

```
[Instruction]
Question : [Question]
Réponse :
```
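A minimal sketch of how such an evaluation prompt and score could be assembled. Several details are assumptions, because the card does not specify them:
- The template's exact whitespace (one newline between the three parts).
- The numeric scale of the two criteria.
- The averaging of per-response scores over the question pool.

`build_eval_prompt`, `response_score` and `model_score` are hypothetical helper names.

```python
from statistics import mean
from typing import Iterable, Tuple

def build_eval_prompt(instruction: str, question: str) -> str:
    """Fill the instruction-style template shared by all evaluated models."""
    # "Question" and "Réponse" (French for "Answer") are kept verbatim from the template.
    return f"{instruction}\nQuestion : {question}\nRéponse :"

def response_score(accuracy: float, completeness: float) -> float:
    """Score of one response: the average of the two criteria."""
    return (accuracy + completeness) / 2

def model_score(ratings: Iterable[Tuple[float, float]]) -> float:
    """Mean response score over (accuracy, completeness) ratings for the question pool."""
    return mean(response_score(a, c) for a, c in ratings)

# Instruction "Réponds à la question." means "Answer the question."
print(build_eval_prompt("Réponds à la question.", "C'est quoi le deep learning ?"))
print(model_score([(1.0, 1.0), (0.0, 1.0)]))  # 0.75
```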

To ensure fairness, the prompts do not exploit the conversational structure of chatbot models, so the evaluation measures performance in a purely instruction-based setting. The figure below illustrates the results. The closer a model is to the top-left corner and the smaller its circle, the better it performs. Conversely, a model towards the bottom-right with a large circle performs less well.

We observe that, across all models, performance grows roughly logarithmically with the number of parameters. However, some model families come in several pre-trained variants (vanilla, instruction, and chat). In those families, the instruction- and chat-tuned variants perform significantly better in zero-shot settings, with a notable improvement for the chat-based ones. The models we trained perform promisingly on this test relative to their parameter count, indicating that they are cost-effective in a production context.

How to use bloomz-3b-sft-chat
-------------------------------

No specific instructions are needed to use these models for standard causal language-model inference. However, to leverage the model's chatbot capability, each human prompt should be preceded by the EOS token (`</s>`) and each generated answer by the BOS token (`<s>`). The structure takes the following form:

```
</s>[human prompt 1]<s>[bot answer 1]</s>[human prompt 2]<s>
```
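The turn structure above can be generated programmatically for a multi-turn conversation. In this sketch, `build_chat_prompt` is a hypothetical helper, the token strings default to the `</s>` and `<s>` markers described in this card, and the sample bot answer is invented for illustration.

```python
from typing import List, Tuple

def build_chat_prompt(history: List[Tuple[str, str]], user_message: str,
                      bos: str = "<s>", eos: str = "</s>") -> str:
    """Serialize past (human, bot) turns plus a new human message."""
    parts = []
    for human, bot in history:
        # Each completed turn: </s>human<s>bot
        parts.append(f"{eos}{human}{bos}{bot}")
    # The new human prompt ends with <s>, so the model generates the bot's answer next.
    parts.append(f"{eos}{user_message}{bos}")
    return "".join(parts)

prompt = build_chat_prompt([("Hello, who are you?", "I am a chatbot.")], "What can you do?")
print(prompt)  # </s>Hello, who are you?<s>I am a chatbot.</s>What can you do?<s>
```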

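For example, to load the model with the Hugging Face `pipeline` interface:

```python
from transformers import pipeline

# Load a text-generation pipeline for the chat model.
chatbot = pipeline("text-generation", model="cmarkea/bloomz-3b-sft-chat")

# Human prompt preceded by </s>; <s> marks where the bot's answer begins.
result = chatbot("</s>C'est quoi le deep learning ?<s>", max_new_tokens=512)
print(result)
# [{'generated_text': "</s>C'est quoi le deep learning ?<s>Le deep learning
#   est un sous-ensemble de l'intelligence artificielle qui utilise des
#   réseaux de neurones pour apprendre à partir de données."}]
# (English: "What is deep learning?" / "Deep learning is a subset of
#  artificial intelligence that uses neural networks to learn from data.")
```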
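As shown above, the pipeline returns the prompt followed by the model's continuation. A small post-processing step can keep only the bot's answer. Alternatively, the text-generation pipeline accepts `return_full_text=False` to omit the prompt from its output.

This sketch assumes that any further `</s>` in the decoded continuation would start a new, model-invented human turn, so it truncates there. Generation normally stops at the EOS token anyway, so the truncation is only a safeguard. `extract_answer` is a hypothetical helper.

```python
def extract_answer(generated_text: str, prompt: str, eos: str = "</s>") -> str:
    """Return the bot's answer: the text after the prompt, cut at the next eos marker."""
    if not generated_text.startswith(prompt):
        raise ValueError("generated_text does not start with the prompt")
    continuation = generated_text[len(prompt):]
    # Anything after the next </s> would be a new human turn, so drop it.
    return continuation.split(eos, 1)[0].strip()

prompt = "</s>C'est quoi le deep learning ?<s>"
text = prompt + "Le deep learning est un sous-ensemble de l'IA.</s>Et le machine learning ?"
print(extract_answer(text, prompt))  # Le deep learning est un sous-ensemble de l'IA.
```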

Citation
--------

```bibtex
@online{DeBloomzChat,
  AUTHOR = {Cyrile Delestre},
  URL = {https://huggingface.co/cmarkea/bloomz-3b-sft-chat},
  YEAR = {2023},
  KEYWORDS = {NLP ; Transformers ; LLM ; Bloomz},
}
```
