First model version

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitignore +12 -0

- LICENSE +21 -0

- README.md +289 -1

- lib/__init__.py +0 -0

- lib/config/__init__.py +2 -0

- lib/config/default.py +157 -0

- lib/core/__init__.py +1 -0

- lib/core/activations.py +72 -0

- lib/core/evaluate.py +278 -0

- lib/core/function.py +510 -0

- lib/core/general.py +466 -0

- lib/core/loss.py +237 -0

- lib/core/postprocess.py +244 -0

- lib/dataset/AutoDriveDataset.py +264 -0

- lib/dataset/DemoDataset.py +188 -0

- lib/dataset/__init__.py +3 -0

- lib/dataset/bdd.py +85 -0

- lib/dataset/convert.py +31 -0

- lib/dataset/hust.py +87 -0

- lib/models/YOLOP.py +596 -0

- lib/models/__init__.py +1 -0

- lib/models/common.py +265 -0

- lib/models/light.py +496 -0

- lib/utils/__init__.py +4 -0

- lib/utils/augmentations.py +253 -0

- lib/utils/autoanchor.py +134 -0

- lib/utils/plot.py +113 -0

- lib/utils/split_dataset.py +30 -0

- lib/utils/utils.py +163 -0

- pictures/da.png +0 -0

- pictures/detect.png +0 -0

- pictures/input1.gif +0 -0

- pictures/input2.gif +0 -0

- pictures/ll.png +0 -0

- pictures/output1.gif +0 -0

- pictures/output2.gif +0 -0

- pictures/yolop.png +0 -0

- requirements.txt +15 -0

- toolkits/deploy/CMakeLists.txt +45 -0

- toolkits/deploy/common.hpp +359 -0

- toolkits/deploy/cuda_utils.h +18 -0

- toolkits/deploy/gen_wts.py +21 -0

- toolkits/deploy/infer_files.cpp +200 -0

- toolkits/deploy/logging.h +503 -0

- toolkits/deploy/main.cpp +137 -0

- toolkits/deploy/utils.h +155 -0

- toolkits/deploy/yololayer.cu +333 -0

- toolkits/deploy/yololayer.h +143 -0

- toolkits/deploy/yolov5.hpp +286 -0

- toolkits/deploy/zedcam.hpp +31 -0

.gitignore

ADDED

|

@@ -0,0 +1,12 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

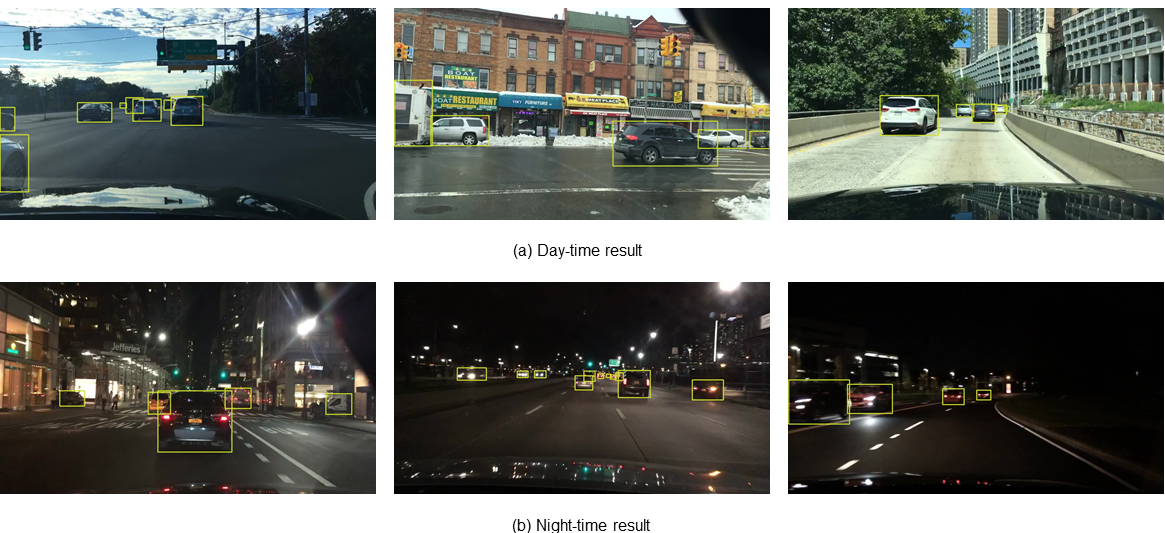

|

|

|

|

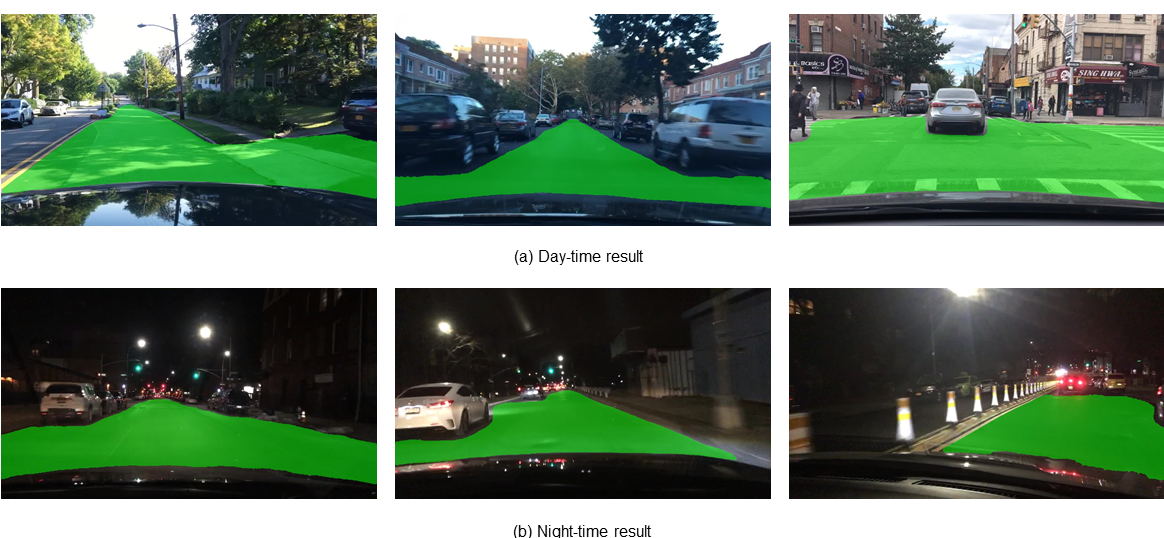

|

|

|

|

|

|

|

|

|

|

| 1 |

+

.DS_Store

|

| 2 |

+

__pycache__/

|

| 3 |

+

.idea/

|

| 4 |

+

.tmp/

|

| 5 |

+

.vscode/

|

| 6 |

+

bdd/

|

| 7 |

+

runs/

|

| 8 |

+

inference/

|

| 9 |

+

*.pth

|

| 10 |

+

*.pt

|

| 11 |

+

*.tar

|

| 12 |

+

*.tar.gz

|

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2021 Hust Visual Learning Team

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

README.md

CHANGED

|

@@ -1 +1,289 @@

|

|

| 1 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<div align="left">

|

| 2 |

+

|

| 3 |

+

## You Only :eyes: Once for Panoptic :car: Perception

|

| 4 |

+

> [**You Only Look at Once for Panoptic driving Perception**](https://arxiv.org/abs/2108.11250)

|

| 5 |

+

>

|

| 6 |

+

> by Dong Wu, Manwen Liao, Weitian Zhang, [Xinggang Wang](https://xinggangw.info/)<sup> :email:</sup> [*School of EIC, HUST*](http://eic.hust.edu.cn/English/Home.htm)

|

| 7 |

+

>

|

| 8 |

+

> (<sup>:email:</sup>) corresponding author.

|

| 9 |

+

>

|

| 10 |

+

> *arXiv technical report ([arXiv 2108.11250](https://arxiv.org/abs/2108.11250))*

|

| 11 |

+

|

| 12 |

+

---

|

| 13 |

+

|

| 14 |

+

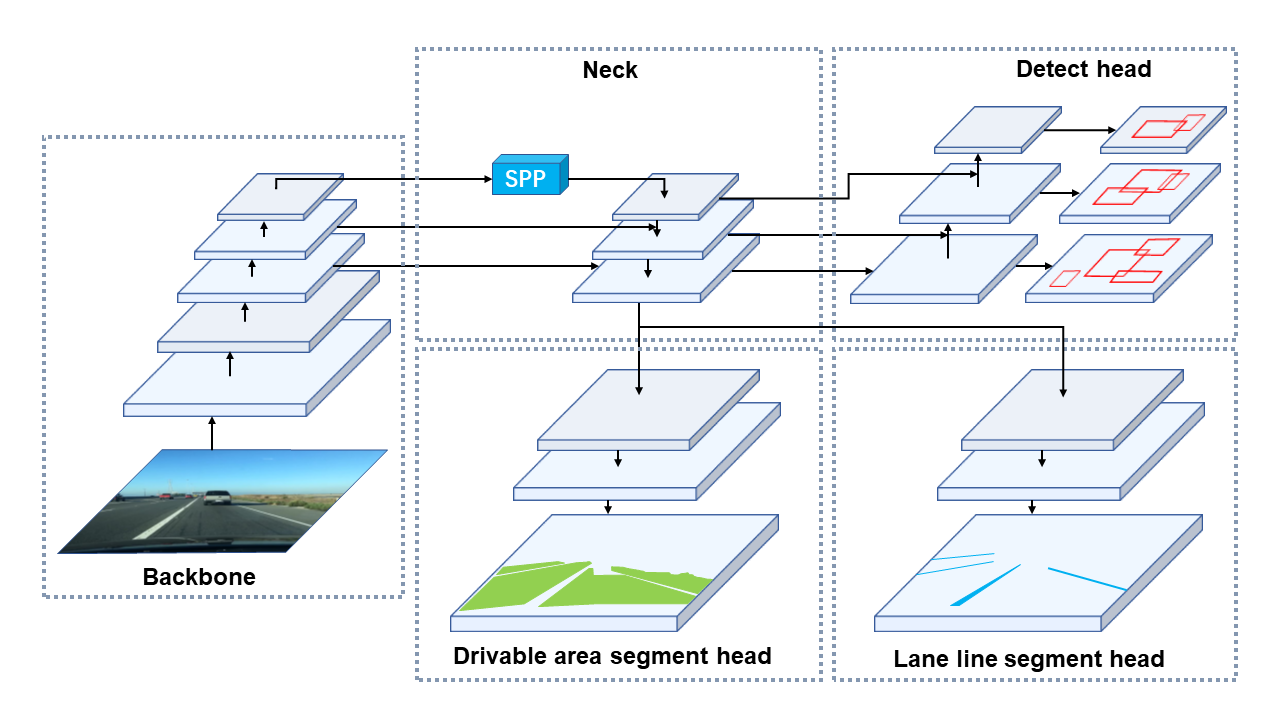

### The Illustration of YOLOP

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

### Contributions

|

| 19 |

+

|

| 20 |

+

* We put forward an efficient multi-task network that can jointly handle three crucial tasks in autonomous driving: object detection, drivable area segmentation and lane detection to save computational costs, reduce inference time as well as improve the performance of each task. Our work is the first to reach real-time on embedded devices while maintaining state-of-the-art level performance on the `BDD100K `dataset.

|

| 21 |

+

|

| 22 |

+

* We design the ablative experiments to verify the effectiveness of our multi-tasking scheme. It is proved that the three tasks can be learned jointly without tedious alternating optimization.

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

### Results

|

| 27 |

+

|

| 28 |

+

#### Traffic Object Detection Result

|

| 29 |

+

|

| 30 |

+

| Model | Recall(%) | mAP50(%) | Speed(fps) |

|

| 31 |

+

| -------------- | --------- | -------- | ---------- |

|

| 32 |

+

| `Multinet` | 81.3 | 60.2 | 8.6 |

|

| 33 |

+

| `DLT-Net` | 89.4 | 68.4 | 9.3 |

|

| 34 |

+

| `Faster R-CNN` | 77.2 | 55.6 | 5.3 |

|

| 35 |

+

| `YOLOv5s` | 86.8 | 77.2 | 82 |

|

| 36 |

+

| `YOLOP(ours)` | 89.2 | 76.5 | 41 |

|

| 37 |

+

#### Drivable Area Segmentation Result

|

| 38 |

+

|

| 39 |

+

| Model | mIOU(%) | Speed(fps) |

|

| 40 |

+

| ------------- | ------- | ---------- |

|

| 41 |

+

| `Multinet` | 71.6 | 8.6 |

|

| 42 |

+

| `DLT-Net` | 71.3 | 9.3 |

|

| 43 |

+

| `PSPNet` | 89.6 | 11.1 |

|

| 44 |

+

| `YOLOP(ours)` | 91.5 | 41 |

|

| 45 |

+

|

| 46 |

+

#### Lane Detection Result:

|

| 47 |

+

|

| 48 |

+

| Model | mIOU(%) | IOU(%) |

|

| 49 |

+

| ------------- | ------- | ------ |

|

| 50 |

+

| `ENet` | 34.12 | 14.64 |

|

| 51 |

+

| `SCNN` | 35.79 | 15.84 |

|

| 52 |

+

| `ENet-SAD` | 36.56 | 16.02 |

|

| 53 |

+

| `YOLOP(ours)` | 70.50 | 26.20 |

|

| 54 |

+

|

| 55 |

+

#### Ablation Studies 1: End-to-end v.s. Step-by-step:

|

| 56 |

+

|

| 57 |

+

| Training_method | Recall(%) | AP(%) | mIoU(%) | Accuracy(%) | IoU(%) |

|

| 58 |

+

| --------------- | --------- | ----- | ------- | ----------- | ------ |

|

| 59 |

+

| `ES-W` | 87.0 | 75.3 | 90.4 | 66.8 | 26.2 |

|

| 60 |

+

| `ED-W` | 87.3 | 76.0 | 91.6 | 71.2 | 26.1 |

|

| 61 |

+

| `ES-D-W` | 87.0 | 75.1 | 91.7 | 68.6 | 27.0 |

|

| 62 |

+

| `ED-S-W` | 87.5 | 76.1 | 91.6 | 68.0 | 26.8 |

|

| 63 |

+

| `End-to-end` | 89.2 | 76.5 | 91.5 | 70.5 | 26.2 |

|

| 64 |

+

|

| 65 |

+

#### Ablation Studies 2: Multi-task v.s. Single task:

|

| 66 |

+

|

| 67 |

+

| Training_method | Recall(%) | AP(%) | mIoU(%) | Accuracy(%) | IoU(%) | Speed(ms/frame) |

|

| 68 |

+

| --------------- | --------- | ----- | ------- | ----------- | ------ | --------------- |

|

| 69 |

+

| `Det(only)` | 88.2 | 76.9 | - | - | - | 15.7 |

|

| 70 |

+

| `Da-Seg(only)` | - | - | 92.0 | - | - | 14.8 |

|

| 71 |

+

| `Ll-Seg(only)` | - | - | - | 79.6 | 27.9 | 14.8 |

|

| 72 |

+

| `Multitask` | 89.2 | 76.5 | 91.5 | 70.5 | 26.2 | 24.4 |

|

| 73 |

+

|

| 74 |

+

**Notes**:

|

| 75 |

+

|

| 76 |

+

- The works we has use for reference including `Multinet` ([paper](https://arxiv.org/pdf/1612.07695.pdf?utm_campaign=affiliate-ir-Optimise%20media%28%20South%20East%20Asia%29%20Pte.%20ltd._156_-99_national_R_all_ACQ_cpa_en&utm_content=&utm_source=%20388939),[code](https://github.com/MarvinTeichmann/MultiNet)),`DLT-Net` ([paper](https://ieeexplore.ieee.org/abstract/document/8937825)),`Faster R-CNN` ([paper](https://proceedings.neurips.cc/paper/2015/file/14bfa6bb14875e45bba028a21ed38046-Paper.pdf),[code](https://github.com/ShaoqingRen/faster_rcnn)),`YOLOv5s`([code](https://github.com/ultralytics/yolov5)) ,`PSPNet`([paper](https://openaccess.thecvf.com/content_cvpr_2017/papers/Zhao_Pyramid_Scene_Parsing_CVPR_2017_paper.pdf),[code](https://github.com/hszhao/PSPNet)) ,`ENet`([paper](https://arxiv.org/pdf/1606.02147.pdf),[code](https://github.com/osmr/imgclsmob)) `SCNN`([paper](https://www.aaai.org/ocs/index.php/AAAI/AAAI18/paper/download/16802/16322),[code](https://github.com/XingangPan/SCNN)) `SAD-ENet`([paper](https://openaccess.thecvf.com/content_ICCV_2019/papers/Hou_Learning_Lightweight_Lane_Detection_CNNs_by_Self_Attention_Distillation_ICCV_2019_paper.pdf),[code](https://github.com/cardwing/Codes-for-Lane-Detection)). Thanks for their wonderful works.

|

| 77 |

+

- In table 4, E, D, S and W refer to Encoder, Detect head, two Segment heads and whole network. So the Algorithm (First, we only train Encoder and Detect head. Then we freeze the Encoder and Detect head as well as train two Segmentation heads. Finally, the entire network is trained jointly for all three tasks.) can be marked as ED-S-W, and the same for others.

|

| 78 |

+

|

| 79 |

+

---

|

| 80 |

+

|

| 81 |

+

### Visualization

|

| 82 |

+

|

| 83 |

+

#### Traffic Object Detection Result

|

| 84 |

+

|

| 85 |

+

|

| 86 |

+

|

| 87 |

+

#### Drivable Area Segmentation Result

|

| 88 |

+

|

| 89 |

+

|

| 90 |

+

|

| 91 |

+

#### Lane Detection Result

|

| 92 |

+

|

| 93 |

+

|

| 94 |

+

|

| 95 |

+

**Notes**:

|

| 96 |

+

|

| 97 |

+

- The visualization of lane detection result has been post processed by quadratic fitting.

|

| 98 |

+

|

| 99 |

+

---

|

| 100 |

+

|

| 101 |

+

### Project Structure

|

| 102 |

+

|

| 103 |

+

```python

|

| 104 |

+

├─inference

|

| 105 |

+

│ ├─images # inference images

|

| 106 |

+

│ ├─output # inference result

|

| 107 |

+

├─lib

|

| 108 |

+

│ ├─config/default # configuration of training and validation

|

| 109 |

+

│ ├─core

|

| 110 |

+

│ │ ├─activations.py # activation function

|

| 111 |

+

│ │ ├─evaluate.py # calculation of metric

|

| 112 |

+

│ │ ├─function.py # training and validation of model

|

| 113 |

+

│ │ ├─general.py #calculation of metric、nms、conversion of data-format、visualization

|

| 114 |

+

│ │ ├─loss.py # loss function

|

| 115 |

+

│ │ ├─postprocess.py # postprocess(refine da-seg and ll-seg, unrelated to paper)

|

| 116 |

+

│ ├─dataset

|

| 117 |

+

│ │ ├─AutoDriveDataset.py # Superclass dataset,general function

|

| 118 |

+

│ │ ├─bdd.py # Subclass dataset,specific function

|

| 119 |

+

│ │ ├─hust.py # Subclass dataset(Campus scene, unrelated to paper)

|

| 120 |

+

│ │ ├─convect.py

|

| 121 |

+

│ │ ├─DemoDataset.py # demo dataset(image, video and stream)

|

| 122 |

+

│ ├─models

|

| 123 |

+

│ │ ├─YOLOP.py # Setup and Configuration of model

|

| 124 |

+

│ │ ├─light.py # Model lightweight(unrelated to paper, zwt)

|

| 125 |

+

│ │ ├─commom.py # calculation module

|

| 126 |

+

│ ├─utils

|

| 127 |

+

│ │ ├─augmentations.py # data augumentation

|

| 128 |

+

│ │ ├─autoanchor.py # auto anchor(k-means)

|

| 129 |

+

│ │ ├─split_dataset.py # (Campus scene, unrelated to paper)

|

| 130 |

+

│ │ ├─utils.py # logging、device_select、time_measure、optimizer_select、model_save&initialize 、Distributed training

|

| 131 |

+

│ ├─run

|

| 132 |

+

│ │ ├─dataset/training time # Visualization, logging and model_save

|

| 133 |

+

├─tools

|

| 134 |

+

│ │ ├─demo.py # demo(folder、camera)

|

| 135 |

+

│ │ ├─test.py

|

| 136 |

+

│ │ ├─train.py

|

| 137 |

+

├─toolkits

|

| 138 |

+

│ │ ├─depoly # Deployment of model

|

| 139 |

+

├─weights # Pretraining model

|

| 140 |

+

```

|

| 141 |

+

|

| 142 |

+

---

|

| 143 |

+

|

| 144 |

+

### Requirement

|

| 145 |

+

|

| 146 |

+

This codebase has been developed with python version 3.7, PyTorch 1.7+ and torchvision 0.8+:

|

| 147 |

+

|

| 148 |

+

```

|

| 149 |

+

conda install pytorch==1.7.0 torchvision==0.8.0 cudatoolkit=10.2 -c pytorch

|

| 150 |

+

```

|

| 151 |

+

|

| 152 |

+

See `requirements.txt` for additional dependencies and version requirements.

|

| 153 |

+

|

| 154 |

+

```setup

|

| 155 |

+

pip install -r requirements.txt

|

| 156 |

+

```

|

| 157 |

+

|

| 158 |

+

### Data preparation

|

| 159 |

+

|

| 160 |

+

#### Download

|

| 161 |

+

|

| 162 |

+

- Download the images from [images](https://bdd-data.berkeley.edu/).

|

| 163 |

+

|

| 164 |

+

- Download the annotations of detection from [det_annotations](https://drive.google.com/file/d/1Ge-R8NTxG1eqd4zbryFo-1Uonuh0Nxyl/view?usp=sharing).

|

| 165 |

+

- Download the annotations of drivable area segmentation from [da_seg_annotations](https://drive.google.com/file/d/1xy_DhUZRHR8yrZG3OwTQAHhYTnXn7URv/view?usp=sharing).

|

| 166 |

+

- Download the annotations of lane line segmentation from [ll_seg_annotations](https://drive.google.com/file/d/1lDNTPIQj_YLNZVkksKM25CvCHuquJ8AP/view?usp=sharing).

|

| 167 |

+

|

| 168 |

+

We recommend the dataset directory structure to be the following:

|

| 169 |

+

|

| 170 |

+

```

|

| 171 |

+

# The id represent the correspondence relation

|

| 172 |

+

├─dataset root

|

| 173 |

+

│ ├─images

|

| 174 |

+

│ │ ├─train

|

| 175 |

+

│ │ ├─val

|

| 176 |

+

│ ├─det_annotations

|

| 177 |

+

│ │ ├─train

|

| 178 |

+

│ │ ├─val

|

| 179 |

+

│ ├─da_seg_annotations

|

| 180 |

+

│ │ ├─train

|

| 181 |

+

│ │ ├─val

|

| 182 |

+

│ ├─ll_seg_annotations

|

| 183 |

+

│ │ ├─train

|

| 184 |

+

│ │ ├─val

|

| 185 |

+

```

|

| 186 |

+

|

| 187 |

+

Update the your dataset path in the `./lib/config/default.py`.

|

| 188 |

+

|

| 189 |

+

### Training

|

| 190 |

+

|

| 191 |

+

You can set the training configuration in the `./lib/config/default.py`. (Including: the loading of preliminary model, loss, data augmentation, optimizer, warm-up and cosine annealing, auto-anchor, training epochs, batch_size).

|

| 192 |

+

|

| 193 |

+

If you want try alternating optimization or train model for single task, please modify the corresponding configuration in `./lib/config/default.py` to `True`. (As following, all configurations is `False`, which means training multiple tasks end to end).

|

| 194 |

+

|

| 195 |

+

```python

|

| 196 |

+

# Alternating optimization

|

| 197 |

+

_C.TRAIN.SEG_ONLY = False # Only train two segmentation branchs

|

| 198 |

+

_C.TRAIN.DET_ONLY = False # Only train detection branch

|

| 199 |

+

_C.TRAIN.ENC_SEG_ONLY = False # Only train encoder and two segmentation branchs

|

| 200 |

+

_C.TRAIN.ENC_DET_ONLY = False # Only train encoder and detection branch

|

| 201 |

+

|

| 202 |

+

# Single task

|

| 203 |

+

_C.TRAIN.DRIVABLE_ONLY = False # Only train da_segmentation task

|

| 204 |

+

_C.TRAIN.LANE_ONLY = False # Only train ll_segmentation task

|

| 205 |

+

_C.TRAIN.DET_ONLY = False # Only train detection task

|

| 206 |

+

```

|

| 207 |

+

|

| 208 |

+

Start training:

|

| 209 |

+

|

| 210 |

+

```shell

|

| 211 |

+

python tools/train.py

|

| 212 |

+

```

|

| 213 |

+

|

| 214 |

+

|

| 215 |

+

|

| 216 |

+

### Evaluation

|

| 217 |

+

|

| 218 |

+

You can set the evaluation configuration in the `./lib/config/default.py`. (Including: batch_size and threshold value for nms).

|

| 219 |

+

|

| 220 |

+

Start evaluating:

|

| 221 |

+

|

| 222 |

+

```shell

|

| 223 |

+

python tools/test.py --weights weights/End-to-end.pth

|

| 224 |

+

```

|

| 225 |

+

|

| 226 |

+

|

| 227 |

+

|

| 228 |

+

### Demo Test

|

| 229 |

+

|

| 230 |

+

We provide two testing method.

|

| 231 |

+

|

| 232 |

+

#### Folder

|

| 233 |

+

|

| 234 |

+

You can store the image or video in `--source`, and then save the reasoning result to `--save-dir`

|

| 235 |

+

|

| 236 |

+

```shell

|

| 237 |

+

python tools/demo --source inference/images

|

| 238 |

+

```

|

| 239 |

+

|

| 240 |

+

|

| 241 |

+

|

| 242 |

+

#### Camera

|

| 243 |

+

|

| 244 |

+

If there are any camera connected to your computer, you can set the `source` as the camera number(The default is 0).

|

| 245 |

+

|

| 246 |

+

```shell

|

| 247 |

+

python tools/demo --source 0

|

| 248 |

+

```

|

| 249 |

+

|

| 250 |

+

|

| 251 |

+

|

| 252 |

+

#### Demonstration

|

| 253 |

+

|

| 254 |

+

<table>

|

| 255 |

+

<tr>

|

| 256 |

+

<th>input</th>

|

| 257 |

+

<th>output</th>

|

| 258 |

+

</tr>

|

| 259 |

+

<tr>

|

| 260 |

+

<td><img src=pictures/input1.gif /></td>

|

| 261 |

+

<td><img src=pictures/output1.gif/></td>

|

| 262 |

+

</tr>

|

| 263 |

+

<tr>

|

| 264 |

+

<td><img src=pictures/input2.gif /></td>

|

| 265 |

+

<td><img src=pictures/output2.gif/></td>

|

| 266 |

+

</tr>

|

| 267 |

+

</table>

|

| 268 |

+

|

| 269 |

+

|

| 270 |

+

|

| 271 |

+

### Deployment

|

| 272 |

+

|

| 273 |

+

Our model can reason in real-time on `Jetson Tx2`, with `Zed Camera` to capture image. We use `TensorRT` tool for speeding up. We provide code for deployment and reasoning of model in `./tools/deploy`.

|

| 274 |

+

|

| 275 |

+

|

| 276 |

+

|

| 277 |

+

## Citation

|

| 278 |

+

|

| 279 |

+

If you find our paper and code useful for your research, please consider giving a star :star: and citation :pencil: :

|

| 280 |

+

|

| 281 |

+

```BibTeX

|

| 282 |

+

@misc{2108.11250,

|

| 283 |

+

Author = {Dong Wu and Manwen Liao and Weitian Zhang and Xinggang Wang},

|

| 284 |

+

Title = {YOLOP: You Only Look Once for Panoptic Driving Perception},

|

| 285 |

+

Year = {2021},

|

| 286 |

+

Eprint = {arXiv:2108.11250},

|

| 287 |

+

}

|

| 288 |

+

```

|

| 289 |

+

|

lib/__init__.py

ADDED

|

File without changes

|

lib/config/__init__.py

ADDED

|

@@ -0,0 +1,2 @@

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from .default import _C as cfg

|

| 2 |

+

from .default import update_config

|

lib/config/default.py

ADDED

|

@@ -0,0 +1,157 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

from yacs.config import CfgNode as CN

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

_C = CN()

|

| 6 |

+

|

| 7 |

+

_C.LOG_DIR = 'runs/'

|

| 8 |

+

_C.GPUS = (0,1)

|

| 9 |

+

_C.WORKERS = 8

|

| 10 |

+

_C.PIN_MEMORY = False

|

| 11 |

+

_C.PRINT_FREQ = 20

|

| 12 |

+

_C.AUTO_RESUME =False # Resume from the last training interrupt

|

| 13 |

+

_C.NEED_AUTOANCHOR = False # Re-select the prior anchor(k-means) When training from scratch (epoch=0), set it to be ture!

|

| 14 |

+

_C.DEBUG = False

|

| 15 |

+

_C.num_seg_class = 2

|

| 16 |

+

|

| 17 |

+

# Cudnn related params

|

| 18 |

+

_C.CUDNN = CN()

|

| 19 |

+

_C.CUDNN.BENCHMARK = True

|

| 20 |

+

_C.CUDNN.DETERMINISTIC = False

|

| 21 |

+

_C.CUDNN.ENABLED = True

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

# common params for NETWORK

|

| 25 |

+

_C.MODEL = CN(new_allowed=True)

|

| 26 |

+

_C.MODEL.NAME = ''

|

| 27 |

+

_C.MODEL.STRU_WITHSHARE = False #add share_block to segbranch

|

| 28 |

+

_C.MODEL.HEADS_NAME = ['']

|

| 29 |

+

_C.MODEL.PRETRAINED = ""

|

| 30 |

+

_C.MODEL.PRETRAINED_DET = ""

|

| 31 |

+

_C.MODEL.IMAGE_SIZE = [640, 640] # width * height, ex: 192 * 256

|

| 32 |

+

_C.MODEL.EXTRA = CN(new_allowed=True)

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

# loss params

|

| 36 |

+

_C.LOSS = CN(new_allowed=True)

|

| 37 |

+

_C.LOSS.LOSS_NAME = ''

|

| 38 |

+

_C.LOSS.MULTI_HEAD_LAMBDA = None

|

| 39 |

+

_C.LOSS.FL_GAMMA = 0.0 # focal loss gamma

|

| 40 |

+

_C.LOSS.CLS_POS_WEIGHT = 1.0 # classification loss positive weights

|

| 41 |

+

_C.LOSS.OBJ_POS_WEIGHT = 1.0 # object loss positive weights

|

| 42 |

+

_C.LOSS.SEG_POS_WEIGHT = 1.0 # segmentation loss positive weights

|

| 43 |

+

_C.LOSS.BOX_GAIN = 0.05 # box loss gain

|

| 44 |

+

_C.LOSS.CLS_GAIN = 0.5 # classification loss gain

|

| 45 |

+

_C.LOSS.OBJ_GAIN = 1.0 # object loss gain

|

| 46 |

+

_C.LOSS.DA_SEG_GAIN = 0.2 # driving area segmentation loss gain

|

| 47 |

+

_C.LOSS.LL_SEG_GAIN = 0.2 # lane line segmentation loss gain

|

| 48 |

+

_C.LOSS.LL_IOU_GAIN = 0.2 # lane line iou loss gain

|

| 49 |

+

|

| 50 |

+

|

| 51 |

+

# DATASET related params

|

| 52 |

+

_C.DATASET = CN(new_allowed=True)

|

| 53 |

+

_C.DATASET.DATAROOT = '/home/zwt/bdd/bdd100k/images/100k' # the path of images folder

|

| 54 |

+

_C.DATASET.LABELROOT = '/home/zwt/bdd/bdd100k/labels/100k' # the path of det_annotations folder

|

| 55 |

+

_C.DATASET.MASKROOT = '/home/zwt/bdd/bdd_seg_gt' # the path of da_seg_annotations folder

|

| 56 |

+

_C.DATASET.LANEROOT = '/home/zwt/bdd/bdd_lane_gt' # the path of ll_seg_annotations folder

|

| 57 |

+

_C.DATASET.DATASET = 'BddDataset'

|

| 58 |

+

_C.DATASET.TRAIN_SET = 'train'

|

| 59 |

+

_C.DATASET.TEST_SET = 'val'

|

| 60 |

+

_C.DATASET.DATA_FORMAT = 'jpg'

|

| 61 |

+

_C.DATASET.SELECT_DATA = False

|

| 62 |

+

_C.DATASET.ORG_IMG_SIZE = [720, 1280]

|

| 63 |

+

|

| 64 |

+

# training data augmentation

|

| 65 |

+

_C.DATASET.FLIP = True

|

| 66 |

+

_C.DATASET.SCALE_FACTOR = 0.25

|

| 67 |

+

_C.DATASET.ROT_FACTOR = 10

|

| 68 |

+

_C.DATASET.TRANSLATE = 0.1

|

| 69 |

+

_C.DATASET.SHEAR = 0.0

|

| 70 |

+

_C.DATASET.COLOR_RGB = False

|

| 71 |

+

_C.DATASET.HSV_H = 0.015 # image HSV-Hue augmentation (fraction)

|

| 72 |

+

_C.DATASET.HSV_S = 0.7 # image HSV-Saturation augmentation (fraction)

|

| 73 |

+

_C.DATASET.HSV_V = 0.4 # image HSV-Value augmentation (fraction)

|

| 74 |

+

# TODO: more augmet params to add

|

| 75 |

+

|

| 76 |

+

|

| 77 |

+

# train

|

| 78 |

+

_C.TRAIN = CN(new_allowed=True)

|

| 79 |

+

_C.TRAIN.LR0 = 0.001 # initial learning rate (SGD=1E-2, Adam=1E-3)

|

| 80 |

+

_C.TRAIN.LRF = 0.2 # final OneCycleLR learning rate (lr0 * lrf)

|

| 81 |

+

_C.TRAIN.WARMUP_EPOCHS = 3.0

|

| 82 |

+

_C.TRAIN.WARMUP_BIASE_LR = 0.1

|

| 83 |

+

_C.TRAIN.WARMUP_MOMENTUM = 0.8

|

| 84 |

+

|

| 85 |

+

_C.TRAIN.OPTIMIZER = 'adam'

|

| 86 |

+

_C.TRAIN.MOMENTUM = 0.937

|

| 87 |

+

_C.TRAIN.WD = 0.0005

|

| 88 |

+

_C.TRAIN.NESTEROV = True

|

| 89 |

+

_C.TRAIN.GAMMA1 = 0.99

|

| 90 |

+

_C.TRAIN.GAMMA2 = 0.0

|

| 91 |

+

|

| 92 |

+

_C.TRAIN.BEGIN_EPOCH = 0

|

| 93 |

+

_C.TRAIN.END_EPOCH = 240

|

| 94 |

+

|

| 95 |

+

_C.TRAIN.VAL_FREQ = 1

|

| 96 |

+

_C.TRAIN.BATCH_SIZE_PER_GPU =24

|

| 97 |

+

_C.TRAIN.SHUFFLE = True

|

| 98 |

+

|

| 99 |

+

_C.TRAIN.IOU_THRESHOLD = 0.2

|

| 100 |

+

_C.TRAIN.ANCHOR_THRESHOLD = 4.0

|

| 101 |

+

|

| 102 |

+

# if training 3 tasks end-to-end, set all parameters as True

|

| 103 |

+

# Alternating optimization

|

| 104 |

+

_C.TRAIN.SEG_ONLY = False # Only train two segmentation branchs

|

| 105 |

+

_C.TRAIN.DET_ONLY = False # Only train detection branch

|

| 106 |

+

_C.TRAIN.ENC_SEG_ONLY = False # Only train encoder and two segmentation branchs

|

| 107 |

+

_C.TRAIN.ENC_DET_ONLY = False # Only train encoder and detection branch

|

| 108 |

+

|

| 109 |

+

# Single task

|

| 110 |

+

_C.TRAIN.DRIVABLE_ONLY = False # Only train da_segmentation task

|

| 111 |

+

_C.TRAIN.LANE_ONLY = False # Only train ll_segmentation task

|

| 112 |

+

_C.TRAIN.DET_ONLY = False # Only train detection task

|

| 113 |

+

|

| 114 |

+

|

| 115 |

+

|

| 116 |

+

|

| 117 |

+

_C.TRAIN.PLOT = True #

|

| 118 |

+

|

| 119 |

+

# testing

|

| 120 |

+

_C.TEST = CN(new_allowed=True)

|

| 121 |

+

_C.TEST.BATCH_SIZE_PER_GPU = 24

|

| 122 |

+

_C.TEST.MODEL_FILE = ''

|

| 123 |

+

_C.TEST.SAVE_JSON = False

|

| 124 |

+

_C.TEST.SAVE_TXT = False

|

| 125 |

+

_C.TEST.PLOTS = True

|

| 126 |

+

_C.TEST.NMS_CONF_THRESHOLD = 0.001

|

| 127 |

+

_C.TEST.NMS_IOU_THRESHOLD = 0.6

|

| 128 |

+

|

| 129 |

+

|

| 130 |

+

def update_config(cfg, args):

|

| 131 |

+

cfg.defrost()

|

| 132 |

+

# cfg.merge_from_file(args.cfg)

|

| 133 |

+

|

| 134 |

+

if args.modelDir:

|

| 135 |

+

cfg.OUTPUT_DIR = args.modelDir

|

| 136 |

+

|

| 137 |

+

if args.logDir:

|

| 138 |

+

cfg.LOG_DIR = args.logDir

|

| 139 |

+

|

| 140 |

+

# if args.conf_thres:

|

| 141 |

+

# cfg.TEST.NMS_CONF_THRESHOLD = args.conf_thres

|

| 142 |

+

|

| 143 |

+

# if args.iou_thres:

|

| 144 |

+

# cfg.TEST.NMS_IOU_THRESHOLD = args.iou_thres

|

| 145 |

+

|

| 146 |

+

|

| 147 |

+

|

| 148 |

+

# cfg.MODEL.PRETRAINED = os.path.join(

|

| 149 |

+

# cfg.DATA_DIR, cfg.MODEL.PRETRAINED

|

| 150 |

+

# )

|

| 151 |

+

#

|

| 152 |

+

# if cfg.TEST.MODEL_FILE:

|

| 153 |

+

# cfg.TEST.MODEL_FILE = os.path.join(

|

| 154 |

+

# cfg.DATA_DIR, cfg.TEST.MODEL_FILE

|

| 155 |

+

# )

|

| 156 |

+

|

| 157 |

+

cfg.freeze()

|

lib/core/__init__.py

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

from .function import AverageMeter

|

lib/core/activations.py

ADDED

|

@@ -0,0 +1,72 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Activation functions

|

| 2 |

+

|

| 3 |

+

import torch

|

| 4 |

+

import torch.nn as nn

|

| 5 |

+

import torch.nn.functional as F

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

# Swish https://arxiv.org/pdf/1905.02244.pdf ---------------------------------------------------------------------------

|

| 9 |

+

class Swish(nn.Module): #

|

| 10 |

+

@staticmethod

|

| 11 |

+

def forward(x):

|

| 12 |

+

return x * torch.sigmoid(x)

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

class Hardswish(nn.Module): # export-friendly version of nn.Hardswish()

|

| 16 |

+

@staticmethod

|

| 17 |

+

def forward(x):

|

| 18 |

+

# return x * F.hardsigmoid(x) # for torchscript and CoreML

|

| 19 |

+

return x * F.hardtanh(x + 3, 0., 6.) / 6. # for torchscript, CoreML and ONNX

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

class MemoryEfficientSwish(nn.Module):

|

| 23 |

+

class F(torch.autograd.Function):

|

| 24 |

+

@staticmethod

|

| 25 |

+

def forward(ctx, x):

|

| 26 |

+

ctx.save_for_backward(x)

|

| 27 |

+

return x * torch.sigmoid(x)

|

| 28 |

+

|

| 29 |

+

@staticmethod

|

| 30 |

+

def backward(ctx, grad_output):

|

| 31 |

+

x = ctx.saved_tensors[0]

|

| 32 |

+

sx = torch.sigmoid(x)

|

| 33 |

+

return grad_output * (sx * (1 + x * (1 - sx)))

|

| 34 |

+

|

| 35 |

+

def forward(self, x):

|

| 36 |

+

return self.F.apply(x)

|

| 37 |

+

|

| 38 |

+

|

| 39 |

+

# Mish https://github.com/digantamisra98/Mish --------------------------------------------------------------------------

|

| 40 |

+

class Mish(nn.Module):

|

| 41 |

+

@staticmethod

|

| 42 |

+

def forward(x):

|

| 43 |

+

return x * F.softplus(x).tanh()

|

| 44 |

+

|

| 45 |

+

|

| 46 |

+

class MemoryEfficientMish(nn.Module):

|

| 47 |

+

class F(torch.autograd.Function):

|

| 48 |

+

@staticmethod

|

| 49 |

+

def forward(ctx, x):

|

| 50 |

+

ctx.save_for_backward(x)

|

| 51 |

+

return x.mul(torch.tanh(F.softplus(x))) # x * tanh(ln(1 + exp(x)))

|

| 52 |

+

|

| 53 |

+

@staticmethod

|

| 54 |

+

def backward(ctx, grad_output):

|

| 55 |

+

x = ctx.saved_tensors[0]

|

| 56 |

+

sx = torch.sigmoid(x)

|

| 57 |

+

fx = F.softplus(x).tanh()

|

| 58 |

+

return grad_output * (fx + x * sx * (1 - fx * fx))

|

| 59 |

+

|

| 60 |

+

def forward(self, x):

|

| 61 |

+

return self.F.apply(x)

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

# FReLU https://arxiv.org/abs/2007.11824 -------------------------------------------------------------------------------

|

| 65 |

+

class FReLU(nn.Module):

|

| 66 |

+

def __init__(self, c1, k=3): # ch_in, kernel

|

| 67 |

+

super().__init__()

|

| 68 |

+

self.conv = nn.Conv2d(c1, c1, k, 1, 1, groups=c1, bias=False)

|

| 69 |

+

self.bn = nn.BatchNorm2d(c1)

|

| 70 |

+

|

| 71 |

+

def forward(self, x):

|

| 72 |

+

return torch.max(x, self.bn(self.conv(x)))

|

lib/core/evaluate.py

ADDED

|

@@ -0,0 +1,278 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Model validation metrics

|

| 2 |

+

|

| 3 |

+

from pathlib import Path

|

| 4 |

+

|

| 5 |

+

import matplotlib.pyplot as plt

|

| 6 |

+

import numpy as np

|

| 7 |

+

import torch

|

| 8 |

+

|

| 9 |

+

from . import general

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

def fitness(x):

|

| 13 |

+

# Model fitness as a weighted combination of metrics

|

| 14 |

+

w = [0.0, 0.0, 0.1, 0.9] # weights for [P, R, [email protected], [email protected]:0.95]

|

| 15 |

+

return (x[:, :4] * w).sum(1)

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

def ap_per_class(tp, conf, pred_cls, target_cls, plot=False, save_dir='precision-recall_curve.png', names=[]):

|

| 19 |

+

""" Compute the average precision, given the recall and precision curves.

|

| 20 |

+

Source: https://github.com/rafaelpadilla/Object-Detection-Metrics.

|

| 21 |

+

# Arguments

|

| 22 |

+

tp: True positives (nparray, nx1 or nx10).

|

| 23 |

+

conf: Objectness value from 0-1 (nparray).

|

| 24 |

+

pred_cls: Predicted object classes (nparray).

|

| 25 |

+

target_cls: True object classes (nparray).

|

| 26 |

+

plot: Plot precision-recall curve at [email protected]

|

| 27 |

+

save_dir: Plot save directory

|

| 28 |

+

# Returns

|

| 29 |

+

The average precision as computed in py-faster-rcnn.

|

| 30 |

+

"""

|

| 31 |

+

|

| 32 |

+

# Sort by objectness

|

| 33 |

+

i = np.argsort(-conf) # sorted index from big to small

|

| 34 |

+

tp, conf, pred_cls = tp[i], conf[i], pred_cls[i]

|

| 35 |

+

|

| 36 |

+

# Find unique classes, each number just showed up once

|

| 37 |

+

unique_classes = np.unique(target_cls)

|

| 38 |

+

|

| 39 |

+

# Create Precision-Recall curve and compute AP for each class

|

| 40 |

+

px, py = np.linspace(0, 1, 1000), [] # for plotting

|

| 41 |

+

pr_score = 0.1 # score to evaluate P and R https://github.com/ultralytics/yolov3/issues/898

|

| 42 |

+

s = [unique_classes.shape[0], tp.shape[1]] # number class, number iou thresholds (i.e. 10 for mAP0.5...0.95)

|

| 43 |

+

ap, p, r = np.zeros(s), np.zeros((unique_classes.shape[0], 1000)), np.zeros((unique_classes.shape[0], 1000))

|

| 44 |

+

for ci, c in enumerate(unique_classes):

|

| 45 |

+

i = pred_cls == c

|

| 46 |

+

n_l = (target_cls == c).sum() # number of labels

|

| 47 |

+

n_p = i.sum() # number of predictions

|

| 48 |

+

|

| 49 |

+

if n_p == 0 or n_l == 0:

|

| 50 |

+

continue

|

| 51 |

+

else:

|

| 52 |

+

# Accumulate FPs and TPs

|

| 53 |

+

fpc = (1 - tp[i]).cumsum(0)

|

| 54 |

+

tpc = tp[i].cumsum(0)

|

| 55 |

+

|

| 56 |

+

# Recall

|

| 57 |

+

recall = tpc / (n_l + 1e-16) # recall curve

|

| 58 |

+

r[ci] = np.interp(-px, -conf[i], recall[:, 0], left=0) # r at pr_score, negative x, xp because xp decreases

|

| 59 |

+

|

| 60 |

+

# Precision

|

| 61 |

+

precision = tpc / (tpc + fpc) # precision curve

|

| 62 |

+

p[ci] = np.interp(-px, -conf[i], precision[:, 0], left=1) # p at pr_score

|

| 63 |

+

|

| 64 |

+

# AP from recall-precision curve

|

| 65 |

+

for j in range(tp.shape[1]):

|

| 66 |

+

ap[ci, j], mpre, mrec = compute_ap(recall[:, j], precision[:, j])

|

| 67 |

+

if plot and (j == 0):

|

| 68 |

+

py.append(np.interp(px, mrec, mpre)) # precision at [email protected]

|

| 69 |

+

|

| 70 |

+

# Compute F1 score (harmonic mean of precision and recall)

|

| 71 |

+

f1 = 2 * p * r / (p + r + 1e-16)

|

| 72 |

+

i = r.mean(0).argmax()

|

| 73 |

+

|

| 74 |

+

if plot:

|

| 75 |

+

plot_pr_curve(px, py, ap, save_dir, names)

|

| 76 |

+

|

| 77 |

+

return p[:, i], r[:, i], ap, f1, unique_classes.astype('int32')

|

| 78 |

+

|

| 79 |

+

|

| 80 |

+

def compute_ap(recall, precision):

|

| 81 |

+

""" Compute the average precision, given the recall and precision curves

|

| 82 |

+

# Arguments

|

| 83 |

+

recall: The recall curve (list)

|

| 84 |

+

precision: The precision curve (list)

|

| 85 |

+

# Returns

|

| 86 |

+

Average precision, precision curve, recall curve

|

| 87 |

+

"""

|

| 88 |

+

|

| 89 |

+

# Append sentinel values to beginning and end

|

| 90 |

+

mrec = np.concatenate(([0.], recall, [recall[-1] + 0.01]))

|

| 91 |

+

mpre = np.concatenate(([1.], precision, [0.]))

|

| 92 |

+

|

| 93 |

+

# Compute the precision envelope

|

| 94 |

+

mpre = np.flip(np.maximum.accumulate(np.flip(mpre)))

|

| 95 |

+

|

| 96 |

+

# Integrate area under curve

|

| 97 |

+

method = 'interp' # methods: 'continuous', 'interp'

|

| 98 |

+

if method == 'interp':

|

| 99 |

+

x = np.linspace(0, 1, 101) # 101-point interp (COCO)

|

| 100 |

+

ap = np.trapz(np.interp(x, mrec, mpre), x) # integrate

|

| 101 |

+

else: # 'continuous'

|

| 102 |

+

i = np.where(mrec[1:] != mrec[:-1])[0] # points where x axis (recall) changes

|

| 103 |

+

ap = np.sum((mrec[i + 1] - mrec[i]) * mpre[i + 1]) # area under curve

|

| 104 |

+

|

| 105 |

+

return ap, mpre, mrec

|

| 106 |

+

|

| 107 |

+

|

| 108 |

+

class ConfusionMatrix:

|

| 109 |

+

# Updated version of https://github.com/kaanakan/object_detection_confusion_matrix

|

| 110 |

+

def __init__(self, nc, conf=0.25, iou_thres=0.45):

|

| 111 |

+

self.matrix = np.zeros((nc + 1, nc + 1))

|

| 112 |

+

self.nc = nc # number of classes

|

| 113 |

+

self.conf = conf

|

| 114 |

+

self.iou_thres = iou_thres

|

| 115 |

+

|

| 116 |

+

def process_batch(self, detections, labels):

|

| 117 |

+

"""

|

| 118 |

+

Return intersection-over-union (Jaccard index) of boxes.

|

| 119 |

+

Both sets of boxes are expected to be in (x1, y1, x2, y2) format.

|

| 120 |

+

Arguments:

|

| 121 |

+

detections (Array[N, 6]), x1, y1, x2, y2, conf, class

|

| 122 |

+

labels (Array[M, 5]), class, x1, y1, x2, y2

|

| 123 |

+

Returns:

|

| 124 |

+

None, updates confusion matrix accordingly

|

| 125 |

+

"""

|

| 126 |

+

detections = detections[detections[:, 4] > self.conf]

|

| 127 |

+

gt_classes = labels[:, 0].int()

|

| 128 |

+

detection_classes = detections[:, 5].int()

|

| 129 |

+

iou = general.box_iou(labels[:, 1:], detections[:, :4])

|

| 130 |

+

|

| 131 |

+

x = torch.where(iou > self.iou_thres)

|

| 132 |

+

if x[0].shape[0]:

|

| 133 |

+

matches = torch.cat((torch.stack(x, 1), iou[x[0], x[1]][:, None]), 1).cpu().numpy()

|

| 134 |

+

if x[0].shape[0] > 1:

|

| 135 |

+

matches = matches[matches[:, 2].argsort()[::-1]]

|

| 136 |

+

matches = matches[np.unique(matches[:, 1], return_index=True)[1]]

|

| 137 |

+

matches = matches[matches[:, 2].argsort()[::-1]]

|

| 138 |

+

matches = matches[np.unique(matches[:, 0], return_index=True)[1]]

|

| 139 |

+

else:

|

| 140 |

+

matches = np.zeros((0, 3))

|

| 141 |

+

|

| 142 |

+

n = matches.shape[0] > 0

|

| 143 |

+

m0, m1, _ = matches.transpose().astype(np.int16)

|

| 144 |

+

for i, gc in enumerate(gt_classes):

|

| 145 |

+

j = m0 == i

|

| 146 |

+

if n and sum(j) == 1:

|

| 147 |

+

self.matrix[gc, detection_classes[m1[j]]] += 1 # correct

|

| 148 |

+

else:

|

| 149 |

+

self.matrix[gc, self.nc] += 1 # background FP

|

| 150 |

+

|

| 151 |

+

if n:

|

| 152 |

+

for i, dc in enumerate(detection_classes):

|

| 153 |

+

if not any(m1 == i):

|

| 154 |

+

self.matrix[self.nc, dc] += 1 # background FN

|

| 155 |

+

|

| 156 |

+

def matrix(self):

|

| 157 |

+

return self.matrix

|

| 158 |

+

|

| 159 |

+

def plot(self, save_dir='', names=()):

|

| 160 |

+

try:

|

| 161 |

+

import seaborn as sn

|

| 162 |

+

|

| 163 |

+

array = self.matrix / (self.matrix.sum(0).reshape(1, self.nc + 1) + 1E-6) # normalize

|

| 164 |

+

array[array < 0.005] = np.nan # don't annotate (would appear as 0.00)

|

| 165 |

+

|

| 166 |

+

fig = plt.figure(figsize=(12, 9), tight_layout=True)

|

| 167 |

+

sn.set(font_scale=1.0 if self.nc < 50 else 0.8) # for label size

|

| 168 |

+

labels = (0 < len(names) < 99) and len(names) == self.nc # apply names to ticklabels

|

| 169 |

+

sn.heatmap(array, annot=self.nc < 30, annot_kws={"size": 8}, cmap='Blues', fmt='.2f', square=True,

|

| 170 |

+

xticklabels=names + ['background FN'] if labels else "auto",

|

| 171 |

+

yticklabels=names + ['background FP'] if labels else "auto").set_facecolor((1, 1, 1))

|

| 172 |

+

fig.axes[0].set_xlabel('True')

|

| 173 |

+

fig.axes[0].set_ylabel('Predicted')

|

| 174 |

+

fig.savefig(Path(save_dir) / 'confusion_matrix.png', dpi=250)

|

| 175 |

+

except Exception as e:

|

| 176 |

+

pass

|

| 177 |

+

|

| 178 |

+

def print(self):

|

| 179 |

+

for i in range(self.nc + 1):

|

| 180 |

+

print(' '.join(map(str, self.matrix[i])))

|

| 181 |

+

|

| 182 |

+

class SegmentationMetric(object):

|

| 183 |

+

'''

|

| 184 |

+

imgLabel [batch_size, height(144), width(256)]

|

| 185 |

+

confusionMatrix [[0(TN),1(FP)],

|

| 186 |

+

[2(FN),3(TP)]]

|

| 187 |

+

'''

|

| 188 |

+

def __init__(self, numClass):

|

| 189 |

+

self.numClass = numClass

|

| 190 |

+

self.confusionMatrix = np.zeros((self.numClass,)*2)

|

| 191 |

+

|

| 192 |

+

def pixelAccuracy(self):

|

| 193 |

+

# return all class overall pixel accuracy

|

| 194 |

+

# acc = (TP + TN) / (TP + TN + FP + TN)

|

| 195 |

+

acc = np.diag(self.confusionMatrix).sum() / self.confusionMatrix.sum()

|

| 196 |

+

return acc

|

| 197 |

+

|

| 198 |

+

def lineAccuracy(self):

|

| 199 |

+

Acc = np.diag(self.confusionMatrix) / (self.confusionMatrix.sum(axis=1) + 1e-12)

|

| 200 |

+

return Acc[1]

|

| 201 |

+

|

| 202 |

+

def classPixelAccuracy(self):

|

| 203 |

+

# return each category pixel accuracy(A more accurate way to call it precision)

|

| 204 |

+

# acc = (TP) / TP + FP

|

| 205 |

+

classAcc = np.diag(self.confusionMatrix) / (self.confusionMatrix.sum(axis=0) + 1e-12)

|

| 206 |

+

return classAcc

|

| 207 |

+

|

| 208 |

+

def meanPixelAccuracy(self):

|

| 209 |

+

classAcc = self.classPixelAccuracy()

|

| 210 |

+

meanAcc = np.nanmean(classAcc)

|

| 211 |

+

return meanAcc

|

| 212 |

+

|

| 213 |

+

def meanIntersectionOverUnion(self):

|

| 214 |

+

# Intersection = TP Union = TP + FP + FN

|

| 215 |

+

# IoU = TP / (TP + FP + FN)

|

| 216 |

+

intersection = np.diag(self.confusionMatrix)

|

| 217 |

+

union = np.sum(self.confusionMatrix, axis=1) + np.sum(self.confusionMatrix, axis=0) - np.diag(self.confusionMatrix)

|

| 218 |

+

IoU = intersection / union

|

| 219 |

+

IoU[np.isnan(IoU)] = 0

|

| 220 |

+

mIoU = np.nanmean(IoU)

|

| 221 |

+

return mIoU

|

| 222 |

+

|

| 223 |

+

def IntersectionOverUnion(self):

|

| 224 |

+

intersection = np.diag(self.confusionMatrix)

|

| 225 |

+

union = np.sum(self.confusionMatrix, axis=1) + np.sum(self.confusionMatrix, axis=0) - np.diag(self.confusionMatrix)

|

| 226 |

+

IoU = intersection / union

|

| 227 |

+

IoU[np.isnan(IoU)] = 0

|

| 228 |

+

return IoU[1]

|

| 229 |

+

|

| 230 |

+

def genConfusionMatrix(self, imgPredict, imgLabel):

|

| 231 |

+

# remove classes from unlabeled pixels in gt image and predict

|

| 232 |

+

# print(imgLabel.shape)

|

| 233 |

+

mask = (imgLabel >= 0) & (imgLabel < self.numClass)

|

| 234 |

+

label = self.numClass * imgLabel[mask] + imgPredict[mask]

|

| 235 |

+

count = np.bincount(label, minlength=self.numClass**2)

|

| 236 |

+

confusionMatrix = count.reshape(self.numClass, self.numClass)

|

| 237 |

+

return confusionMatrix

|

| 238 |

+

|

| 239 |

+

def Frequency_Weighted_Intersection_over_Union(self):

|

| 240 |

+

# FWIOU = [(TP+FN)/(TP+FP+TN+FN)] *[TP / (TP + FP + FN)]

|

| 241 |

+

freq = np.sum(self.confusionMatrix, axis=1) / np.sum(self.confusionMatrix)

|

| 242 |

+

iu = np.diag(self.confusionMatrix) / (

|

| 243 |

+