SamMorgan

committed on

Commit 7f7b618

1 Parent(s): 5a3f3ba

Upload android files

This view is limited to 50 files because it contains too many changes.

- android/.gitignore +13 -0

- android/app/.gitignore +2 -0

- android/app/build.gradle +61 -0

- android/app/download_model.gradle +26 -0

- android/app/proguard-rules.pro +21 -0

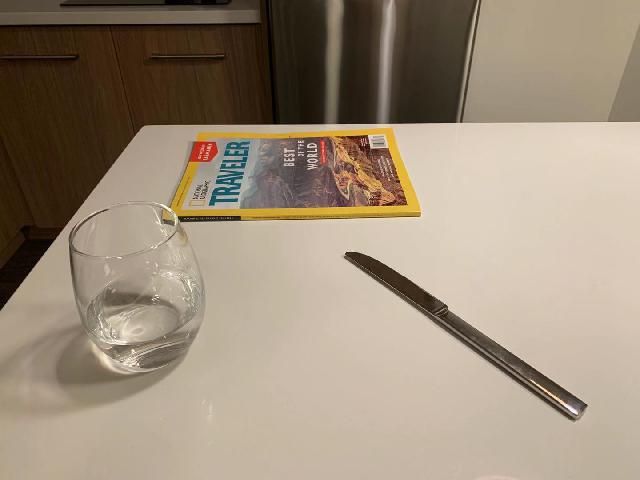

- android/app/src/androidTest/assets/table.jpg +0 -0

- android/app/src/androidTest/assets/table_results.txt +4 -0

- android/app/src/androidTest/java/AndroidManifest.xml +5 -0

- android/app/src/androidTest/java/org/tensorflow/lite/examples/detection/DetectorTest.java +165 -0

- android/app/src/main/AndroidManifest.xml +35 -0

- android/app/src/main/assets/coco.txt +80 -0

- android/app/src/main/assets/kite.jpg +0 -0

- android/app/src/main/assets/labelmap.txt +91 -0

- android/app/src/main/assets/yolov4-416-fp32.tflite +3 -0

- android/app/src/main/java/org/tensorflow/lite/examples/detection/CameraActivity.java +550 -0

- android/app/src/main/java/org/tensorflow/lite/examples/detection/CameraConnectionFragment.java +569 -0

- android/app/src/main/java/org/tensorflow/lite/examples/detection/DetectorActivity.java +266 -0

- android/app/src/main/java/org/tensorflow/lite/examples/detection/LegacyCameraConnectionFragment.java +199 -0

- android/app/src/main/java/org/tensorflow/lite/examples/detection/MainActivity.java +162 -0

- android/app/src/main/java/org/tensorflow/lite/examples/detection/customview/AutoFitTextureView.java +72 -0

- android/app/src/main/java/org/tensorflow/lite/examples/detection/customview/OverlayView.java +48 -0

- android/app/src/main/java/org/tensorflow/lite/examples/detection/customview/RecognitionScoreView.java +67 -0

- android/app/src/main/java/org/tensorflow/lite/examples/detection/customview/ResultsView.java +23 -0

- android/app/src/main/java/org/tensorflow/lite/examples/detection/env/BorderedText.java +128 -0

- android/app/src/main/java/org/tensorflow/lite/examples/detection/env/ImageUtils.java +219 -0

- android/app/src/main/java/org/tensorflow/lite/examples/detection/env/Logger.java +186 -0

- android/app/src/main/java/org/tensorflow/lite/examples/detection/env/Size.java +142 -0

- android/app/src/main/java/org/tensorflow/lite/examples/detection/env/Utils.java +188 -0

- android/app/src/main/java/org/tensorflow/lite/examples/detection/tflite/Classifier.java +134 -0

- android/app/src/main/java/org/tensorflow/lite/examples/detection/tflite/YoloV4Classifier.java +599 -0

- android/app/src/main/java/org/tensorflow/lite/examples/detection/tracking/MultiBoxTracker.java +211 -0

- android/app/src/main/res/drawable-hdpi/ic_launcher.png +0 -0

- android/app/src/main/res/drawable-mdpi/ic_launcher.png +0 -0

- android/app/src/main/res/drawable-v24/ic_launcher_foreground.xml +34 -0

- android/app/src/main/res/drawable-v24/kite.jpg +0 -0

- android/app/src/main/res/drawable-xxhdpi/ic_launcher.png +0 -0

- android/app/src/main/res/drawable-xxhdpi/icn_chevron_down.png +0 -0

- android/app/src/main/res/drawable-xxhdpi/icn_chevron_up.png +0 -0

- android/app/src/main/res/drawable-xxhdpi/tfl2_logo.png +0 -0

- android/app/src/main/res/drawable-xxhdpi/tfl2_logo_dark.png +0 -0

- android/app/src/main/res/drawable-xxxhdpi/caret.jpg +0 -0

- android/app/src/main/res/drawable-xxxhdpi/chair.jpg +0 -0

- android/app/src/main/res/drawable-xxxhdpi/sample_image.jpg +0 -0

- android/app/src/main/res/drawable/bottom_sheet_bg.xml +9 -0

- android/app/src/main/res/drawable/ic_baseline_add.xml +9 -0

- android/app/src/main/res/drawable/ic_baseline_remove.xml +9 -0

- android/app/src/main/res/drawable/ic_launcher_background.xml +170 -0

- android/app/src/main/res/drawable/rectangle.xml +13 -0

- android/app/src/main/res/layout/activity_main.xml +52 -0

- android/app/src/main/res/layout/tfe_od_activity_camera.xml +56 -0

android/.gitignore

ADDED

@@ -0,0 +1,13 @@
+*.iml
+.gradle
+/local.properties
+/.idea/libraries
+/.idea/modules.xml
+/.idea/workspace.xml
+.DS_Store
+/build
+/captures
+.externalNativeBuild
+
+/.gradle/
+/.idea/

android/app/.gitignore

ADDED

@@ -0,0 +1,2 @@
+/build
+/build/

android/app/build.gradle

ADDED

@@ -0,0 +1,61 @@
+apply plugin: 'com.android.application'
+apply plugin: 'de.undercouch.download'
+
+android {
+    compileSdkVersion 28
+    buildToolsVersion '28.0.3'
+    defaultConfig {
+        applicationId "org.tensorflow.lite.examples.detection"
+        minSdkVersion 21
+        targetSdkVersion 28
+        versionCode 1
+        versionName "1.0"
+
+        // ndk {
+        //     abiFilters 'armeabi-v7a', 'arm64-v8a'
+        // }
+    }
+    buildTypes {
+        release {
+            minifyEnabled false
+            proguardFiles getDefaultProguardFile('proguard-android.txt'), 'proguard-rules.pro'
+        }
+    }
+    aaptOptions {
+        noCompress "tflite"
+    }
+    compileOptions {
+        sourceCompatibility = '1.8'
+        targetCompatibility = '1.8'
+    }
+    lintOptions {
+        abortOnError false
+    }
+}
+
+// import DownloadModels task
+project.ext.ASSET_DIR = projectDir.toString() + '/src/main/assets'
+project.ext.TMP_DIR = project.buildDir.toString() + '/downloads'
+
+// Download default models; if you wish to use your own models then
+// place them in the "assets" directory and comment out this line.
+//apply from: "download_model.gradle"
+
+apply from: 'download_model.gradle'
+
+dependencies {
+    implementation fileTree(dir: 'libs', include: ['*.jar', '*.aar'])
+    implementation 'androidx.appcompat:appcompat:1.1.0'
+    implementation 'androidx.coordinatorlayout:coordinatorlayout:1.1.0'
+    implementation 'com.google.android.material:material:1.1.0'
+    // implementation 'org.tensorflow:tensorflow-lite:0.0.0-nightly'
+    // implementation 'org.tensorflow:tensorflow-lite-gpu:0.0.0-nightly'
+    implementation 'org.tensorflow:tensorflow-lite:2.2.0'
+    implementation 'org.tensorflow:tensorflow-lite-gpu:2.2.0'
+    // implementation 'org.tensorflow:tensorflow-lite:0.0.0-gpu-experimental'
+    implementation 'androidx.constraintlayout:constraintlayout:1.1.3'
+    implementation 'com.google.code.gson:gson:2.8.6'
+    androidTestImplementation 'androidx.test.ext:junit:1.1.1'
+    androidTestImplementation 'com.android.support.test:rules:1.0.2'
+    androidTestImplementation 'com.google.truth:truth:1.0.1'
+}

android/app/download_model.gradle

ADDED

@@ -0,0 +1,26 @@
+
+task downloadZipFile(type: Download) {
+    src 'http://storage.googleapis.com/download.tensorflow.org/models/tflite/coco_ssd_mobilenet_v1_1.0_quant_2018_06_29.zip'
+    dest new File(buildDir, 'zips/')
+    overwrite false
+}
+
+
+task downloadAndUnzipFile(dependsOn: downloadZipFile, type: Copy) {
+    from zipTree(downloadZipFile.dest)
+    into project.ext.ASSET_DIR
+}
+
+
+task extractModels(type: Copy) {
+    dependsOn downloadAndUnzipFile
+}
+
+tasks.whenTaskAdded { task ->
+    if (task.name == 'assembleDebug') {
+        task.dependsOn 'extractModels'
+    }
+    if (task.name == 'assembleRelease') {
+        task.dependsOn 'extractModels'
+    }
+}

android/app/proguard-rules.pro

ADDED

@@ -0,0 +1,21 @@
+# Add project specific ProGuard rules here.
+# You can control the set of applied configuration files using the
+# proguardFiles setting in build.gradle.
+#
+# For more details, see
+#   http://developer.android.com/guide/developing/tools/proguard.html
+
+# If your project uses WebView with JS, uncomment the following
+# and specify the fully qualified class name to the JavaScript interface
+# class:
+#-keepclassmembers class fqcn.of.javascript.interface.for.webview {
+#   public *;
+#}
+
+# Uncomment this to preserve the line number information for
+# debugging stack traces.
+#-keepattributes SourceFile,LineNumberTable
+
+# If you keep the line number information, uncomment this to
+# hide the original source file name.
+#-renamesourcefileattribute SourceFile

android/app/src/androidTest/assets/table.jpg

ADDED

android/app/src/androidTest/assets/table_results.txt

ADDED

@@ -0,0 +1,4 @@
+dining_table 27.492085 97.94615 623.1435 444.8627 0.48828125
+knife 342.53433 243.71082 583.89185 416.34595 0.4765625
+cup 68.025925 197.5857 202.02031 374.2206 0.4375
+book 185.43098 139.64153 244.51149 203.37737 0.3125

android/app/src/androidTest/java/AndroidManifest.xml

ADDED

@@ -0,0 +1,5 @@
+<?xml version="1.0" encoding="utf-8"?>
+<manifest xmlns:android="http://schemas.android.com/apk/res/android"
+    package="org.tensorflow.lite.examples.detection">
+    <uses-sdk />
+</manifest>

android/app/src/androidTest/java/org/tensorflow/lite/examples/detection/DetectorTest.java

ADDED

@@ -0,0 +1,165 @@
+/*
+ * Copyright 2020 The TensorFlow Authors. All Rights Reserved.
+ *
+ * Licensed under the Apache License, Version 2.0 (the "License");
+ * you may not use this file except in compliance with the License.
+ * You may obtain a copy of the License at
+ *
+ *       http://www.apache.org/licenses/LICENSE-2.0
+ *
+ * Unless required by applicable law or agreed to in writing, software
+ * distributed under the License is distributed on an "AS IS" BASIS,
+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+ * See the License for the specific language governing permissions and
+ * limitations under the License.
+ */
+
+package org.tensorflow.lite.examples.detection;
+
+import static com.google.common.truth.Truth.assertThat;
+import static java.lang.Math.abs;
+import static java.lang.Math.max;
+import static java.lang.Math.min;
+
+import android.content.res.AssetManager;
+import android.graphics.Bitmap;
+import android.graphics.Bitmap.Config;
+import android.graphics.BitmapFactory;
+import android.graphics.Canvas;
+import android.graphics.Matrix;
+import android.graphics.RectF;
+import android.util.Size;
+import androidx.test.ext.junit.runners.AndroidJUnit4;
+import androidx.test.platform.app.InstrumentationRegistry;
+import java.io.IOException;
+import java.io.InputStream;
+import java.util.ArrayList;
+import java.util.List;
+import java.util.Scanner;
+import org.junit.Before;
+import org.junit.Test;
+import org.junit.runner.RunWith;
+import org.tensorflow.lite.examples.detection.env.ImageUtils;
+import org.tensorflow.lite.examples.detection.tflite.Classifier;
+import org.tensorflow.lite.examples.detection.tflite.Classifier.Recognition;
+import org.tensorflow.lite.examples.detection.tflite.TFLiteObjectDetectionAPIModel;
+
+/** Golden test for Object Detection Reference app. */
+@RunWith(AndroidJUnit4.class)
+public class DetectorTest {
+
+  private static final int MODEL_INPUT_SIZE = 300;
+  private static final boolean IS_MODEL_QUANTIZED = true;
+  private static final String MODEL_FILE = "detect.tflite";
+  private static final String LABELS_FILE = "file:///android_asset/labelmap.txt";
+  private static final Size IMAGE_SIZE = new Size(640, 480);
+
+  private Classifier detector;
+  private Bitmap croppedBitmap;
+  private Matrix frameToCropTransform;
+  private Matrix cropToFrameTransform;
+
+  @Before
+  public void setUp() throws IOException {
+    AssetManager assetManager =
+        InstrumentationRegistry.getInstrumentation().getContext().getAssets();
+    detector =
+        TFLiteObjectDetectionAPIModel.create(
+            assetManager,
+            MODEL_FILE,
+            LABELS_FILE,
+            MODEL_INPUT_SIZE,
+            IS_MODEL_QUANTIZED);
+    int cropSize = MODEL_INPUT_SIZE;
+    int previewWidth = IMAGE_SIZE.getWidth();
+    int previewHeight = IMAGE_SIZE.getHeight();
+    int sensorOrientation = 0;
+    croppedBitmap = Bitmap.createBitmap(cropSize, cropSize, Config.ARGB_8888);
+
+    frameToCropTransform =
+        ImageUtils.getTransformationMatrix(
+            previewWidth, previewHeight,
+            cropSize, cropSize,
+            sensorOrientation, false);
+    cropToFrameTransform = new Matrix();
+    frameToCropTransform.invert(cropToFrameTransform);
+  }
+
+  @Test
+  public void detectionResultsShouldNotChange() throws Exception {
+    Canvas canvas = new Canvas(croppedBitmap);
+    canvas.drawBitmap(loadImage("table.jpg"), frameToCropTransform, null);
+    final List<Recognition> results = detector.recognizeImage(croppedBitmap);
+    final List<Recognition> expected = loadRecognitions("table_results.txt");
+
+    for (Recognition target : expected) {
+      // Find a matching result in results
+      boolean matched = false;
+      for (Recognition item : results) {
+        RectF bbox = new RectF();
+        cropToFrameTransform.mapRect(bbox, item.getLocation());
+        if (item.getTitle().equals(target.getTitle())
+            && matchBoundingBoxes(bbox, target.getLocation())
+            && matchConfidence(item.getConfidence(), target.getConfidence())) {
+          matched = true;
+          break;
+        }
+      }
+      assertThat(matched).isTrue();
+    }
+  }
+
+  // Confidence tolerance: absolute 1%
+  private static boolean matchConfidence(float a, float b) {
+    return abs(a - b) < 0.01;
+  }
+
+  // Bounding Box tolerance: overlapped area > 95% of each one
+  private static boolean matchBoundingBoxes(RectF a, RectF b) {
+    float areaA = a.width() * a.height();
+    float areaB = b.width() * b.height();
+    RectF overlapped =
+        new RectF(
+            max(a.left, b.left), max(a.top, b.top), min(a.right, b.right), min(a.bottom, b.bottom));
+    float overlappedArea = overlapped.width() * overlapped.height();
+    return overlappedArea > 0.95 * areaA && overlappedArea > 0.95 * areaB;
+  }
+
+  private static Bitmap loadImage(String fileName) throws Exception {
+    AssetManager assetManager =
+        InstrumentationRegistry.getInstrumentation().getContext().getAssets();
+    InputStream inputStream = assetManager.open(fileName);
+    return BitmapFactory.decodeStream(inputStream);
+  }
+
+  // The format of result:
+  // category bbox.left bbox.top bbox.right bbox.bottom confidence
+  // ...
+  // Example:
+  // Apple 99 25 30 75 80 0.99
+  // Banana 25 90 75 200 0.98
+  // ...
+  private static List<Recognition> loadRecognitions(String fileName) throws Exception {
+    AssetManager assetManager =
+        InstrumentationRegistry.getInstrumentation().getContext().getAssets();
+    InputStream inputStream = assetManager.open(fileName);
+    Scanner scanner = new Scanner(inputStream);
+    List<Recognition> result = new ArrayList<>();
+    while (scanner.hasNext()) {
+      String category = scanner.next();
+      category = category.replace('_', ' ');
+      if (!scanner.hasNextFloat()) {
+        break;
+      }
+      float left = scanner.nextFloat();
+      float top = scanner.nextFloat();
+      float right = scanner.nextFloat();
+      float bottom = scanner.nextFloat();
+      RectF boundingBox = new RectF(left, top, right, bottom);
+      float confidence = scanner.nextFloat();
+      Recognition recognition = new Recognition(null, category, confidence, boundingBox);
+      result.add(recognition);
+    }
+    return result;
+  }
+}
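A note on the bounding-box tolerance in `DetectorTest.matchBoundingBoxes`: `RectF.width()` and `RectF.height()` are plain differences (`right - left`, `bottom - top`), so when two boxes are disjoint on both axes the "overlapped" rectangle has negative width and negative height, and their product is positive. The sketch below restates the same 95% rule in plain Java with the intersection clamped to zero. `OverlapSketch` and `Box` are hypothetical names introduced here for illustration (`Box` stands in for `android.graphics.RectF`); this is not code from the commit.

```java
/** Plain-Java sketch of the 95% overlap rule; not part of the commit. */
final class OverlapSketch {

  /** Hypothetical stand-in for android.graphics.RectF: an axis-aligned box. */
  static final class Box {
    final float left;
    final float top;
    final float right;
    final float bottom;

    Box(float left, float top, float right, float bottom) {
      this.left = left;
      this.top = top;
      this.right = right;
      this.bottom = bottom;
    }

    /** Area of the box, treating inverted edges as an empty box. */
    float area() {
      return Math.max(0f, right - left) * Math.max(0f, bottom - top);
    }
  }

  /** Area of the intersection; disjoint or merely touching boxes yield 0. */
  static float overlapArea(Box a, Box b) {
    float width = Math.min(a.right, b.right) - Math.max(a.left, b.left);
    float height = Math.min(a.bottom, b.bottom) - Math.max(a.top, b.top);
    if (width <= 0f || height <= 0f) {
      return 0f;
    }
    return width * height;
  }

  /** True when the intersection covers more than 95% of each box. */
  static boolean matches(Box a, Box b) {
    float overlap = overlapArea(a, b);
    return overlap > 0.95f * a.area() && overlap > 0.95f * b.area();
  }

  public static void main(String[] args) {
    Box golden = new Box(0f, 0f, 100f, 100f);
    // Nearly identical boxes.
    System.out.println(matches(golden, new Box(1f, 1f, 100f, 100f)));
    // Boxes that are disjoint on both axes.
    System.out.println(matches(golden, new Box(200f, 200f, 300f, 300f)));
  }
}
```

Clamping each axis before multiplying is the only behavioural difference from the check in the diff; the 95% threshold is unchanged.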

android/app/src/main/AndroidManifest.xml

ADDED

@@ -0,0 +1,35 @@
+<manifest xmlns:android="http://schemas.android.com/apk/res/android"
+    package="org.tensorflow.lite.examples.detection">
+
+    <uses-sdk />
+
+    <uses-permission android:name="android.permission.CAMERA" />
+
+    <uses-feature android:name="android.hardware.camera" />
+    <uses-feature android:name="android.hardware.camera.autofocus" />
+    <uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE"/>
+    <uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE"/>
+
+    <application
+        android:allowBackup="false"
+        android:icon="@mipmap/ic_launcher"
+        android:label="@string/tfe_od_app_name"
+        android:roundIcon="@mipmap/ic_launcher_round"
+        android:supportsRtl="true"
+        android:theme="@style/AppTheme.ObjectDetection">
+
+        <activity
+            android:name=".DetectorActivity"
+            android:label="@string/tfe_od_app_name"
+            android:screenOrientation="portrait">
+        </activity>
+
+        <activity android:name=".MainActivity">
+            <intent-filter>
+                <action android:name="android.intent.action.MAIN" />
+                <category android:name="android.intent.category.LAUNCHER" />
+            </intent-filter>
+        </activity>
+
+    </application>
+</manifest>

android/app/src/main/assets/coco.txt

ADDED

@@ -0,0 +1,80 @@
+person
+bicycle
+car
+motorbike
+aeroplane
+bus
+train
+truck
+boat
+traffic light
+fire hydrant
+stop sign
+parking meter
+bench
+bird
+cat
+dog
+horse
+sheep
+cow
+elephant
+bear
+zebra
+giraffe
+backpack
+umbrella
+handbag
+tie
+suitcase
+frisbee
+skis
+snowboard
+sports ball
+kite
+baseball bat
+baseball glove
+skateboard
+surfboard
+tennis racket
+bottle
+wine glass
+cup
+fork
+knife
+spoon
+bowl
+banana
+apple
+sandwich
+orange
+broccoli
+carrot
+hot dog
+pizza
+donut
+cake
+chair
+sofa
+potted plant
+bed
+dining table
+toilet
+tvmonitor
+laptop
+mouse
+remote
+keyboard
+cell phone
+microwave
+oven
+toaster
+sink
+refrigerator
+book
+clock
+vase
+scissors
+teddy bear
+hair drier
+toothbrush

android/app/src/main/assets/kite.jpg

ADDED

android/app/src/main/assets/labelmap.txt

ADDED

@@ -0,0 +1,91 @@
+???
+person
+bicycle
+car
+motorcycle
+airplane
+bus
+train
+truck
+boat
+traffic light
+fire hydrant
+???
+stop sign
+parking meter
+bench
+bird
+cat
+dog
+horse
+sheep
+cow
+elephant
+bear
+zebra
+giraffe
+???
+backpack
+umbrella
+???
+???
+handbag
+tie
+suitcase
+frisbee
+skis
+snowboard
+sports ball
+kite
+baseball bat
+baseball glove
+skateboard
+surfboard
+tennis racket
+bottle
+???
+wine glass
+cup
+fork
+knife
+spoon
+bowl
+banana
+apple
+sandwich
+orange
+broccoli
+carrot
+hot dog
+pizza
+donut
+cake
+chair
+couch
+potted plant
+bed
+???
+dining table
+???
+???
+toilet
+???
+tv
+laptop
+mouse
+remote
+keyboard
+cell phone
+microwave
+oven
+toaster
+sink
+refrigerator
+???
+book
+clock
+vase
+scissors
+teddy bear
+hair drier
+toothbrush
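For orientation: `labelmap.txt` has 91 entries, 11 of which are the placeholder `???`, while `coco.txt` lists 80 names with no placeholders. Some names also differ between the two files (for example `motorcycle` vs. `motorbike`, `couch` vs. `sofa`, `tv` vs. `tvmonitor`). How the app consumes either file is not visible in this part of the diff, so the following is only a sketch of one way to drop placeholder entries from such a list. `LabelMapSketch` and `withoutPlaceholders` are hypothetical names, not code from the commit.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Sketch: filter placeholder entries out of a label list; not part of the commit. */
final class LabelMapSketch {

  /** Placeholder string used in labelmap.txt for unused class indices. */
  static final String PLACEHOLDER = "???";

  /** Returns the labels with blank and placeholder lines removed, preserving order. */
  static List<String> withoutPlaceholders(List<String> lines) {
    List<String> labels = new ArrayList<>();
    for (String line : lines) {
      String label = line.trim();
      if (!label.isEmpty() && !label.equals(PLACEHOLDER)) {
        labels.add(label);
      }
    }
    return labels;
  }

  public static void main(String[] args) {
    // First five lines of labelmap.txt as listed in this commit.
    List<String> excerpt = Arrays.asList("???", "person", "bicycle", "car", "motorcycle");
    System.out.println(withoutPlaceholders(excerpt));
  }
}
```

Note that filtering changes positions: if a model emits class indices that refer to lines of the unfiltered 91-entry file, the placeholders must stay in place for the lookup to line up.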

android/app/src/main/assets/yolov4-416-fp32.tflite

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:7160a2f3e58629a15506a6c77685fb5583cddf186dac3015be7998975d662465
+size 24279948

android/app/src/main/java/org/tensorflow/lite/examples/detection/CameraActivity.java

ADDED

@@ -0,0 +1,550 @@
+/*

|

| 2 |

+

* Copyright 2019 The TensorFlow Authors. All Rights Reserved.

|

| 3 |

+

*

|

| 4 |

+

* Licensed under the Apache License, Version 2.0 (the "License");

|

| 5 |

+

* you may not use this file except in compliance with the License.

|

| 6 |

+

* You may obtain a copy of the License at

|

| 7 |

+

*

|

| 8 |

+

* http://www.apache.org/licenses/LICENSE-2.0

|

| 9 |

+

*

|

| 10 |

+

* Unless required by applicable law or agreed to in writing, software

|

| 11 |

+

* distributed under the License is distributed on an "AS IS" BASIS,

|

| 12 |

+

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 13 |

+

* See the License for the specific language governing permissions and

|

| 14 |

+

* limitations under the License.

|

| 15 |

+

*/

|

| 16 |

+

|

| 17 |

+

package org.tensorflow.lite.examples.detection;

|

| 18 |

+

|

| 19 |

+

import android.Manifest;

|

| 20 |

+

import android.app.Fragment;

|

| 21 |

+

import android.content.Context;

|

| 22 |

+

import android.content.pm.PackageManager;

|

| 23 |

+

import android.hardware.Camera;

|

| 24 |

+

import android.hardware.camera2.CameraAccessException;

|

| 25 |

+

import android.hardware.camera2.CameraCharacteristics;

|

| 26 |

+

import android.hardware.camera2.CameraManager;

|

| 27 |

+

import android.hardware.camera2.params.StreamConfigurationMap;

|

| 28 |

+

import android.media.Image;

|

| 29 |

+

import android.media.Image.Plane;

|

| 30 |

+

import android.media.ImageReader;

|

| 31 |

+

import android.media.ImageReader.OnImageAvailableListener;

|

| 32 |

+

import android.os.Build;

|

| 33 |

+

import android.os.Bundle;

|

| 34 |

+

import android.os.Handler;

|

| 35 |

+

import android.os.HandlerThread;

|

| 36 |

+

import android.os.Trace;

|

| 37 |

+

import androidx.annotation.NonNull;

|

| 38 |

+

import androidx.appcompat.app.AppCompatActivity;

|

| 39 |

+

import androidx.appcompat.widget.SwitchCompat;

|

| 40 |

+

import androidx.appcompat.widget.Toolbar;

|

| 41 |

+

import android.util.Size;

|

| 42 |

+

import android.view.Surface;

|

| 43 |

+

import android.view.View;

|

| 44 |

+

import android.view.ViewTreeObserver;

|

| 45 |

+

import android.view.WindowManager;

|

| 46 |

+

import android.widget.CompoundButton;

|

| 47 |

+

import android.widget.ImageView;

|

| 48 |

+

import android.widget.LinearLayout;

|

| 49 |

+

import android.widget.TextView;

|

| 50 |

+

import android.widget.Toast;

|

| 51 |

+

import com.google.android.material.bottomsheet.BottomSheetBehavior;

|

| 52 |

+

import java.nio.ByteBuffer;

|

| 53 |

+

import org.tensorflow.lite.examples.detection.env.ImageUtils;

|

| 54 |

+

import org.tensorflow.lite.examples.detection.env.Logger;

|

| 55 |

+

|

| 56 |

+

public abstract class CameraActivity extends AppCompatActivity

|

| 57 |

+

implements OnImageAvailableListener,

|

| 58 |

+

Camera.PreviewCallback,

|

| 59 |

+

CompoundButton.OnCheckedChangeListener,

|

| 60 |

+

View.OnClickListener {

|

| 61 |

+

private static final Logger LOGGER = new Logger();

|

| 62 |

+

|

| 63 |

+

private static final int PERMISSIONS_REQUEST = 1;

|

| 64 |

+

|

| 65 |

+

private static final String PERMISSION_CAMERA = Manifest.permission.CAMERA;

|

| 66 |

+

protected int previewWidth = 0;

|

| 67 |

+

protected int previewHeight = 0;

|

| 68 |

+

private boolean debug = false;

|

| 69 |

+

private Handler handler;

|

| 70 |

+

private HandlerThread handlerThread;

|

| 71 |

+

private boolean useCamera2API;

|

| 72 |

+

private boolean isProcessingFrame = false;

|

| 73 |

+

private byte[][] yuvBytes = new byte[3][];

|

| 74 |

+

private int[] rgbBytes = null;

|

| 75 |

+

private int yRowStride;

|

| 76 |

+

private Runnable postInferenceCallback;

|

| 77 |

+

private Runnable imageConverter;

|

| 78 |

+

|

| 79 |

+

private LinearLayout bottomSheetLayout;

|

| 80 |

+

private LinearLayout gestureLayout;

|

| 81 |

+

private BottomSheetBehavior<LinearLayout> sheetBehavior;

|

| 82 |

+

|

| 83 |

+

protected TextView frameValueTextView, cropValueTextView, inferenceTimeTextView;

|

| 84 |

+

protected ImageView bottomSheetArrowImageView;

|

| 85 |

+

private ImageView plusImageView, minusImageView;

|

| 86 |

+

private SwitchCompat apiSwitchCompat;

|

| 87 |

+

private TextView threadsTextView;

|

| 88 |

+

|

| 89 |

+

@Override

|

| 90 |

+

protected void onCreate(final Bundle savedInstanceState) {

|

| 91 |

+

LOGGER.d("onCreate " + this);

|

| 92 |

+

super.onCreate(null);

|

| 93 |

+

getWindow().addFlags(WindowManager.LayoutParams.FLAG_KEEP_SCREEN_ON);

|

| 94 |

+

|

| 95 |

+

setContentView(R.layout.tfe_od_activity_camera);

|

| 96 |

+

Toolbar toolbar = findViewById(R.id.toolbar);

|

| 97 |

+

setSupportActionBar(toolbar);

|

| 98 |

+

getSupportActionBar().setDisplayShowTitleEnabled(false);

|

| 99 |

+

|

| 100 |

+

if (hasPermission()) {

|

| 101 |

+

setFragment();

|

| 102 |

+

} else {

|

| 103 |

+

requestPermission();

|

| 104 |

+

}

|

| 105 |

+

|

| 106 |

+

threadsTextView = findViewById(R.id.threads);

|

| 107 |

+

plusImageView = findViewById(R.id.plus);

|

| 108 |

+

minusImageView = findViewById(R.id.minus);

|

| 109 |

+

apiSwitchCompat = findViewById(R.id.api_info_switch);

|

| 110 |

+

bottomSheetLayout = findViewById(R.id.bottom_sheet_layout);

|

| 111 |

+

gestureLayout = findViewById(R.id.gesture_layout);

|

| 112 |

+

sheetBehavior = BottomSheetBehavior.from(bottomSheetLayout);

|

| 113 |

+

bottomSheetArrowImageView = findViewById(R.id.bottom_sheet_arrow);

|

| 114 |

+

|

| 115 |

+

ViewTreeObserver vto = gestureLayout.getViewTreeObserver();

|

| 116 |

+

vto.addOnGlobalLayoutListener(

|

| 117 |

+

new ViewTreeObserver.OnGlobalLayoutListener() {

|

| 118 |

+

@Override

|

| 119 |

+

public void onGlobalLayout() {

|

| 120 |

+

if (Build.VERSION.SDK_INT < Build.VERSION_CODES.JELLY_BEAN) {

|

| 121 |

+

gestureLayout.getViewTreeObserver().removeGlobalOnLayoutListener(this);

|

| 122 |

+

} else {

|

| 123 |

+

gestureLayout.getViewTreeObserver().removeOnGlobalLayoutListener(this);

|

| 124 |

+

}

|

| 125 |

+

// int width = bottomSheetLayout.getMeasuredWidth();

|

| 126 |

+

int height = gestureLayout.getMeasuredHeight();

|

| 127 |

+

|

| 128 |

+

sheetBehavior.setPeekHeight(height);

|

| 129 |

+

}

|

| 130 |

+

});

|

| 131 |

+

sheetBehavior.setHideable(false);

|

| 132 |

+

|

| 133 |

+

sheetBehavior.setBottomSheetCallback(

|

| 134 |

+

new BottomSheetBehavior.BottomSheetCallback() {

|

| 135 |

+

@Override

|

| 136 |

+

public void onStateChanged(@NonNull View bottomSheet, int newState) {

|

| 137 |

+

switch (newState) {

|

| 138 |

+

case BottomSheetBehavior.STATE_HIDDEN:

|

| 139 |

+

break;

|

| 140 |

+

case BottomSheetBehavior.STATE_EXPANDED:

|

| 141 |

+

{

|

| 142 |

+

bottomSheetArrowImageView.setImageResource(R.drawable.icn_chevron_down);

|

| 143 |

+

}

|

| 144 |

+

break;

|

| 145 |

+

case BottomSheetBehavior.STATE_COLLAPSED:

|

| 146 |

+

{

|

| 147 |

+

bottomSheetArrowImageView.setImageResource(R.drawable.icn_chevron_up);

|

| 148 |

+

}

|

| 149 |

+

break;

|

| 150 |

+

case BottomSheetBehavior.STATE_DRAGGING:

|

| 151 |

+

break;

|

| 152 |

+

case BottomSheetBehavior.STATE_SETTLING:

|

| 153 |

+

bottomSheetArrowImageView.setImageResource(R.drawable.icn_chevron_up);

|

| 154 |

+

break;

|

| 155 |

+

}

|

| 156 |

+

}

|

| 157 |

+

|

| 158 |

+

@Override

|

| 159 |

+

public void onSlide(@NonNull View bottomSheet, float slideOffset) {}

|

| 160 |

+

});

|

| 161 |

+

|

| 162 |

+

frameValueTextView = findViewById(R.id.frame_info);

|

| 163 |

+

cropValueTextView = findViewById(R.id.crop_info);

|

| 164 |

+

inferenceTimeTextView = findViewById(R.id.inference_info);

|

| 165 |

+

|

| 166 |

+

apiSwitchCompat.setOnCheckedChangeListener(this);

|

| 167 |

+

|

| 168 |

+

plusImageView.setOnClickListener(this);

|

| 169 |

+

minusImageView.setOnClickListener(this);

|

| 170 |

+

}

|

| 171 |

+

|

| 172 |

+

protected int[] getRgbBytes() {

|

| 173 |

+

imageConverter.run();

|

| 174 |

+

return rgbBytes;

|

| 175 |

+

}

|

| 176 |

+

|

| 177 |

+

protected int getLuminanceStride() {

|

| 178 |

+

return yRowStride;

|

| 179 |

+

}

|

| 180 |

+

|

| 181 |

+

protected byte[] getLuminance() {

|

| 182 |

+

return yuvBytes[0];

|

| 183 |

+

}

|

| 184 |

+

|

| 185 |

+

/** Callback for android.hardware.Camera API */

|

| 186 |

+

@Override

|

| 187 |

+

public void onPreviewFrame(final byte[] bytes, final Camera camera) {

|

| 188 |

+

if (isProcessingFrame) {

|

| 189 |

+

LOGGER.w("Dropping frame!");

|

| 190 |

+

return;

|

| 191 |

+

}

|

| 192 |

+

|

| 193 |

+

try {

|

| 194 |

+

// Initialize the storage bitmaps once when the resolution is known.

|

| 195 |

+

if (rgbBytes == null) {

|

| 196 |

+

Camera.Size previewSize = camera.getParameters().getPreviewSize();

|

| 197 |

+

previewHeight = previewSize.height;

|

| 198 |

+

previewWidth = previewSize.width;

|

| 199 |

+

rgbBytes = new int[previewWidth * previewHeight];

|

| 200 |

+

onPreviewSizeChosen(new Size(previewSize.width, previewSize.height), 90);

|

| 201 |

+

}

|

| 202 |

+

} catch (final Exception e) {

|

| 203 |

+

LOGGER.e(e, "Exception!");

|

| 204 |

+

return;

|

| 205 |

+

}

|

| 206 |

+

|

| 207 |

+

isProcessingFrame = true;

|

| 208 |

+

yuvBytes[0] = bytes;

|

| 209 |

+

yRowStride = previewWidth;

|

| 210 |

+

|

| 211 |

+

imageConverter =

|

| 212 |

+

new Runnable() {

|

| 213 |

+

@Override

|

| 214 |

+

public void run() {

|

| 215 |

+

ImageUtils.convertYUV420SPToARGB8888(bytes, previewWidth, previewHeight, rgbBytes);

|

| 216 |

+

}

|

| 217 |

+

};

|

| 218 |

+

|

| 219 |

+

postInferenceCallback =

|