This is the basic model; no additional data was used beyond my uncensoring protocol.

# Model Details

- Censorship level: Low to Medium

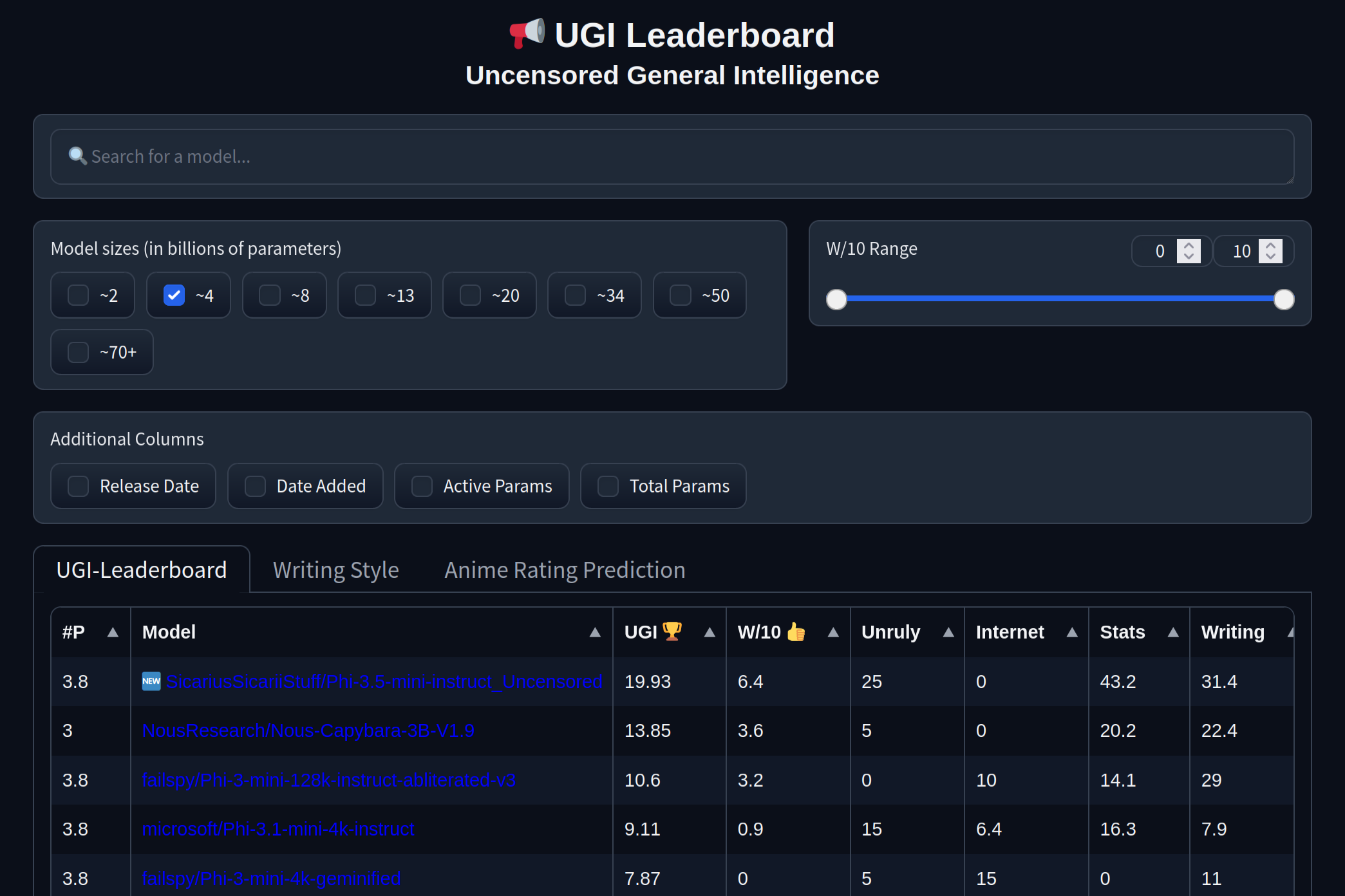

- **6.4** / 10 (10 = completely uncensored)

- UGI score:

## Phi-3.5-mini-instruct_Uncensored is available at the following quantizations:

- Original: [FP16](https://huggingface.co/SicariusSicariiStuff/Phi-3.5-mini-instruct_Uncensored)

- GGUF: [Static Quants](https://huggingface.co/SicariusSicariiStuff/Phi-3.5-mini-instruct_Uncensored_GGUFs) | [iMatrix_GGUF-bartowski](https://huggingface.co/bartowski/Phi-3.5-mini-instruct_Uncensored-GGUF) | [iMatrix_GGUF-mradermacher](https://huggingface.co/mradermacher/Phi-3.5-mini-instruct_Uncensored-i1-GGUF)

- EXL2: [3.0 bpw](https://huggingface.co/SicariusSicariiStuff/Phi-3.5-mini-instruct_Uncensored-EXL2-3.0bpw) | [4.0 bpw](https://huggingface.co/SicariusSicariiStuff/Phi-3.5-mini-instruct_Uncensored-EXL2-4.0bpw) | [5.0 bpw](https://huggingface.co/SicariusSicariiStuff/Phi-3.5-mini-instruct_Uncensored-EXL2-5.0bpw) | [6.0 bpw](https://huggingface.co/SicariusSicariiStuff/Phi-3.5-mini-instruct_Uncensored-EXL2-6.0bpw) | [7.0 bpw](https://huggingface.co/SicariusSicariiStuff/Phi-3.5-mini-instruct_Uncensored-EXL2-7.0bpw) | [8.0 bpw](https://huggingface.co/SicariusSicariiStuff/Phi-3.5-mini-instruct_Uncensored-EXL2-8.0bpw)

- Specialized: [FP8](https://huggingface.co/SicariusSicariiStuff/Phi-3.5-mini-instruct_Uncensored_FP8)

- Mobile (ARM): [Q4_0_X_X](https://huggingface.co/SicariusSicariiStuff/Phi-3.5-mini-instruct_Uncensored_ARM)

### Support

- [My Ko-fi page](https://ko-fi.com/sicarius): all donations go toward research resources and compute; every bit is appreciated 🙏🏻

## Other stuff

- [Blog and updates](https://huggingface.co/SicariusSicariiStuff/Blog_And_Updates) Some updates, some rambles, sort of a mix between a diary and a blog.

- [LLAMA-3_8B_Unaligned](https://huggingface.co/SicariusSicariiStuff/LLAMA-3_8B_Unaligned) The grand project that started it all.