Upload 2667 files

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- opencompass-my-api/.codespellrc +5 -0

- opencompass-my-api/.gitignore +129 -0

- opencompass-my-api/.owners.yml +14 -0

- opencompass-my-api/.pre-commit-config-zh-cn.yaml +96 -0

- opencompass-my-api/.pre-commit-config.yaml +96 -0

- opencompass-my-api/.readthedocs.yml +14 -0

- opencompass-my-api/LICENSE +203 -0

- opencompass-my-api/README.md +520 -0

- opencompass-my-api/README_zh-CN.md +522 -0

- opencompass-my-api/build/lib/opencompass/__init__.py +1 -0

- opencompass-my-api/build/lib/opencompass/datasets/FinanceIQ.py +39 -0

- opencompass-my-api/build/lib/opencompass/datasets/GaokaoBench.py +132 -0

- opencompass-my-api/build/lib/opencompass/datasets/NPHardEval/__init__.py +9 -0

- opencompass-my-api/build/lib/opencompass/datasets/NPHardEval/cmp_GCP_D.py +161 -0

- opencompass-my-api/build/lib/opencompass/datasets/NPHardEval/cmp_KSP.py +183 -0

- opencompass-my-api/build/lib/opencompass/datasets/NPHardEval/cmp_TSP_D.py +150 -0

- opencompass-my-api/build/lib/opencompass/datasets/NPHardEval/hard_GCP.py +189 -0

- opencompass-my-api/build/lib/opencompass/datasets/NPHardEval/hard_MSP.py +203 -0

- opencompass-my-api/build/lib/opencompass/datasets/NPHardEval/hard_TSP.py +211 -0

- opencompass-my-api/build/lib/opencompass/datasets/NPHardEval/p_BSP.py +124 -0

- opencompass-my-api/build/lib/opencompass/datasets/NPHardEval/p_EDP.py +145 -0

- opencompass-my-api/build/lib/opencompass/datasets/NPHardEval/p_SPP.py +196 -0

- opencompass-my-api/build/lib/opencompass/datasets/NPHardEval/prompts.py +96 -0

- opencompass-my-api/build/lib/opencompass/datasets/NPHardEval/utils.py +43 -0

- opencompass-my-api/build/lib/opencompass/datasets/OpenFinData.py +47 -0

- opencompass-my-api/build/lib/opencompass/datasets/TheoremQA.py +38 -0

- opencompass-my-api/build/lib/opencompass/datasets/advglue.py +174 -0

- opencompass-my-api/build/lib/opencompass/datasets/afqmcd.py +21 -0

- opencompass-my-api/build/lib/opencompass/datasets/agieval/__init__.py +3 -0

- opencompass-my-api/build/lib/opencompass/datasets/agieval/agieval.py +99 -0

- opencompass-my-api/build/lib/opencompass/datasets/agieval/constructions.py +104 -0

- opencompass-my-api/build/lib/opencompass/datasets/agieval/dataset_loader.py +392 -0

- opencompass-my-api/build/lib/opencompass/datasets/agieval/evaluation.py +43 -0

- opencompass-my-api/build/lib/opencompass/datasets/agieval/math_equivalence.py +161 -0

- opencompass-my-api/build/lib/opencompass/datasets/agieval/post_process.py +198 -0

- opencompass-my-api/build/lib/opencompass/datasets/agieval/utils.py +43 -0

- opencompass-my-api/build/lib/opencompass/datasets/anli.py +18 -0

- opencompass-my-api/build/lib/opencompass/datasets/anthropics_evals.py +63 -0

- opencompass-my-api/build/lib/opencompass/datasets/arc.py +84 -0

- opencompass-my-api/build/lib/opencompass/datasets/ax.py +24 -0

- opencompass-my-api/build/lib/opencompass/datasets/base.py +28 -0

- opencompass-my-api/build/lib/opencompass/datasets/bbh.py +98 -0

- opencompass-my-api/build/lib/opencompass/datasets/boolq.py +56 -0

- opencompass-my-api/build/lib/opencompass/datasets/bustum.py +21 -0

- opencompass-my-api/build/lib/opencompass/datasets/c3.py +80 -0

- opencompass-my-api/build/lib/opencompass/datasets/cb.py +25 -0

- opencompass-my-api/build/lib/opencompass/datasets/ceval.py +76 -0

- opencompass-my-api/build/lib/opencompass/datasets/chid.py +43 -0

- opencompass-my-api/build/lib/opencompass/datasets/cibench.py +511 -0

- opencompass-my-api/build/lib/opencompass/datasets/circular.py +373 -0

opencompass-my-api/.codespellrc

ADDED

|

@@ -0,0 +1,5 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

[codespell]

|

| 2 |

+

skip = *.ipynb

|

| 3 |

+

count =

|

| 4 |

+

quiet-level = 3

|

| 5 |

+

ignore-words-list = nd, ans, ques, rouge, softwares, wit

|

opencompass-my-api/.gitignore

ADDED

|

@@ -0,0 +1,129 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

|

| 2 |

+

output_*/

|

| 3 |

+

outputs/

|

| 4 |

+

icl_inference_output/

|

| 5 |

+

.vscode/

|

| 6 |

+

tmp/

|

| 7 |

+

configs/eval_subjective_alignbench_test.py

|

| 8 |

+

configs/openai_key.py

|

| 9 |

+

configs/secrets.py

|

| 10 |

+

configs/datasets/log.json

|

| 11 |

+

configs/eval_debug*.py

|

| 12 |

+

configs/viz_*.py

|

| 13 |

+

data

|

| 14 |

+

work_dirs

|

| 15 |

+

models/*

|

| 16 |

+

configs/internal/

|

| 17 |

+

# Byte-compiled / optimized / DLL files

|

| 18 |

+

__pycache__/

|

| 19 |

+

*.py[cod]

|

| 20 |

+

*$py.class

|

| 21 |

+

*.ipynb

|

| 22 |

+

|

| 23 |

+

# C extensions

|

| 24 |

+

*.so

|

| 25 |

+

|

| 26 |

+

# Distribution / packaging

|

| 27 |

+

.Python

|

| 28 |

+

build/

|

| 29 |

+

develop-eggs/

|

| 30 |

+

dist/

|

| 31 |

+

downloads/

|

| 32 |

+

eggs/

|

| 33 |

+

.eggs/

|

| 34 |

+

lib/

|

| 35 |

+

lib64/

|

| 36 |

+

parts/

|

| 37 |

+

sdist/

|

| 38 |

+

var/

|

| 39 |

+

wheels/

|

| 40 |

+

*.egg-info/

|

| 41 |

+

.installed.cfg

|

| 42 |

+

*.egg

|

| 43 |

+

MANIFEST

|

| 44 |

+

|

| 45 |

+

# PyInstaller

|

| 46 |

+

# Usually these files are written by a python script from a template

|

| 47 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 48 |

+

*.manifest

|

| 49 |

+

*.spec

|

| 50 |

+

|

| 51 |

+

# Installer logs

|

| 52 |

+

pip-log.txt

|

| 53 |

+

pip-delete-this-directory.txt

|

| 54 |

+

|

| 55 |

+

# Unit test / coverage reports

|

| 56 |

+

htmlcov/

|

| 57 |

+

.tox/

|

| 58 |

+

.coverage

|

| 59 |

+

.coverage.*

|

| 60 |

+

.cache

|

| 61 |

+

nosetests.xml

|

| 62 |

+

coverage.xml

|

| 63 |

+

*.cover

|

| 64 |

+

.hypothesis/

|

| 65 |

+

.pytest_cache/

|

| 66 |

+

|

| 67 |

+

# Translations

|

| 68 |

+

*.mo

|

| 69 |

+

*.pot

|

| 70 |

+

|

| 71 |

+

# Django stuff:

|

| 72 |

+

*.log

|

| 73 |

+

local_settings.py

|

| 74 |

+

db.sqlite3

|

| 75 |

+

|

| 76 |

+

# Flask stuff:

|

| 77 |

+

instance/

|

| 78 |

+

.webassets-cache

|

| 79 |

+

|

| 80 |

+

# Scrapy stuff:

|

| 81 |

+

.scrapy

|

| 82 |

+

|

| 83 |

+

.idea

|

| 84 |

+

|

| 85 |

+

# Auto generate documentation

|

| 86 |

+

docs/en/_build/

|

| 87 |

+

docs/zh_cn/_build/

|

| 88 |

+

|

| 89 |

+

# .zip

|

| 90 |

+

*.zip

|

| 91 |

+

|

| 92 |

+

# sft config ignore list

|

| 93 |

+

configs/sft_cfg/*B_*

|

| 94 |

+

configs/sft_cfg/1B/*

|

| 95 |

+

configs/sft_cfg/7B/*

|

| 96 |

+

configs/sft_cfg/20B/*

|

| 97 |

+

configs/sft_cfg/60B/*

|

| 98 |

+

configs/sft_cfg/100B/*

|

| 99 |

+

|

| 100 |

+

configs/cky/

|

| 101 |

+

# in case llama clone in the opencompass

|

| 102 |

+

llama/

|

| 103 |

+

|

| 104 |

+

# in case ilagent clone in the opencompass

|

| 105 |

+

ilagent/

|

| 106 |

+

|

| 107 |

+

# ignore the config file for criticbench evaluation

|

| 108 |

+

configs/sft_cfg/criticbench_eval/*

|

| 109 |

+

|

| 110 |

+

# path of turbomind's model after runing `lmdeploy.serve.turbomind.deploy`

|

| 111 |

+

turbomind/

|

| 112 |

+

|

| 113 |

+

# cibench output

|

| 114 |

+

*.db

|

| 115 |

+

*.pth

|

| 116 |

+

*.pt

|

| 117 |

+

*.onnx

|

| 118 |

+

*.gz

|

| 119 |

+

*.gz.*

|

| 120 |

+

*.png

|

| 121 |

+

*.txt

|

| 122 |

+

*.jpg

|

| 123 |

+

*.json

|

| 124 |

+

*.csv

|

| 125 |

+

*.npy

|

| 126 |

+

*.c

|

| 127 |

+

|

| 128 |

+

# aliyun

|

| 129 |

+

core.*

|

opencompass-my-api/.owners.yml

ADDED

|

@@ -0,0 +1,14 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

assign:

|

| 2 |

+

issues: enabled

|

| 3 |

+

pull_requests: disabled

|

| 4 |

+

strategy:

|

| 5 |

+

# random

|

| 6 |

+

daily-shift-based

|

| 7 |

+

scedule:

|

| 8 |

+

'*/1 * * * *'

|

| 9 |

+

assignees:

|

| 10 |

+

- Leymore

|

| 11 |

+

- bittersweet1999

|

| 12 |

+

- yingfhu

|

| 13 |

+

- kennymckormick

|

| 14 |

+

- tonysy

|

opencompass-my-api/.pre-commit-config-zh-cn.yaml

ADDED

|

@@ -0,0 +1,96 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

exclude: |

|

| 2 |

+

(?x)^(

|

| 3 |

+

tests/data/|

|

| 4 |

+

opencompass/models/internal/|

|

| 5 |

+

opencompass/utils/internal/|

|

| 6 |

+

opencompass/openicl/icl_evaluator/hf_metrics/|

|

| 7 |

+

opencompass/datasets/lawbench/utils|

|

| 8 |

+

opencompass/datasets/lawbench/evaluation_functions/|

|

| 9 |

+

opencompass/datasets/medbench/|

|

| 10 |

+

opencompass/datasets/teval/|

|

| 11 |

+

opencompass/datasets/NPHardEval/|

|

| 12 |

+

docs/zh_cn/advanced_guides/compassbench_intro.md

|

| 13 |

+

)

|

| 14 |

+

repos:

|

| 15 |

+

- repo: https://gitee.com/openmmlab/mirrors-flake8

|

| 16 |

+

rev: 5.0.4

|

| 17 |

+

hooks:

|

| 18 |

+

- id: flake8

|

| 19 |

+

exclude: configs/

|

| 20 |

+

- repo: https://gitee.com/openmmlab/mirrors-isort

|

| 21 |

+

rev: 5.11.5

|

| 22 |

+

hooks:

|

| 23 |

+

- id: isort

|

| 24 |

+

exclude: configs/

|

| 25 |

+

- repo: https://gitee.com/openmmlab/mirrors-yapf

|

| 26 |

+

rev: v0.32.0

|

| 27 |

+

hooks:

|

| 28 |

+

- id: yapf

|

| 29 |

+

exclude: configs/

|

| 30 |

+

- repo: https://gitee.com/openmmlab/mirrors-codespell

|

| 31 |

+

rev: v2.2.1

|

| 32 |

+

hooks:

|

| 33 |

+

- id: codespell

|

| 34 |

+

exclude: |

|

| 35 |

+

(?x)^(

|

| 36 |

+

.*\.jsonl|

|

| 37 |

+

configs/

|

| 38 |

+

)

|

| 39 |

+

- repo: https://gitee.com/openmmlab/mirrors-pre-commit-hooks

|

| 40 |

+

rev: v4.3.0

|

| 41 |

+

hooks:

|

| 42 |

+

- id: trailing-whitespace

|

| 43 |

+

exclude: |

|

| 44 |

+

(?x)^(

|

| 45 |

+

dicts/|

|

| 46 |

+

projects/.*?/dicts/|

|

| 47 |

+

configs/

|

| 48 |

+

)

|

| 49 |

+

- id: check-yaml

|

| 50 |

+

- id: end-of-file-fixer

|

| 51 |

+

exclude: |

|

| 52 |

+

(?x)^(

|

| 53 |

+

dicts/|

|

| 54 |

+

projects/.*?/dicts/|

|

| 55 |

+

configs/

|

| 56 |

+

)

|

| 57 |

+

- id: requirements-txt-fixer

|

| 58 |

+

- id: double-quote-string-fixer

|

| 59 |

+

exclude: configs/

|

| 60 |

+

- id: check-merge-conflict

|

| 61 |

+

- id: fix-encoding-pragma

|

| 62 |

+

args: ["--remove"]

|

| 63 |

+

- id: mixed-line-ending

|

| 64 |

+

args: ["--fix=lf"]

|

| 65 |

+

- id: mixed-line-ending

|

| 66 |

+

args: ["--fix=lf"]

|

| 67 |

+

- repo: https://gitee.com/openmmlab/mirrors-mdformat

|

| 68 |

+

rev: 0.7.9

|

| 69 |

+

hooks:

|

| 70 |

+

- id: mdformat

|

| 71 |

+

args: ["--number", "--table-width", "200"]

|

| 72 |

+

additional_dependencies:

|

| 73 |

+

- mdformat-openmmlab

|

| 74 |

+

- mdformat_frontmatter

|

| 75 |

+

- linkify-it-py

|

| 76 |

+

exclude: configs/

|

| 77 |

+

- repo: https://gitee.com/openmmlab/mirrors-docformatter

|

| 78 |

+

rev: v1.3.1

|

| 79 |

+

hooks:

|

| 80 |

+

- id: docformatter

|

| 81 |

+

args: ["--in-place", "--wrap-descriptions", "79"]

|

| 82 |

+

- repo: local

|

| 83 |

+

hooks:

|

| 84 |

+

- id: update-dataset-suffix

|

| 85 |

+

name: dataset suffix updater

|

| 86 |

+

entry: ./tools/update_dataset_suffix.py

|

| 87 |

+

language: script

|

| 88 |

+

pass_filenames: true

|

| 89 |

+

require_serial: true

|

| 90 |

+

files: ^configs/datasets

|

| 91 |

+

# - repo: https://github.com/open-mmlab/pre-commit-hooks

|

| 92 |

+

# rev: v0.2.0 # Use the ref you want to point at

|

| 93 |

+

# hooks:

|

| 94 |

+

# - id: check-algo-readme

|

| 95 |

+

# - id: check-copyright

|

| 96 |

+

# args: ["mmocr", "tests", "tools"] # these directories will be checked

|

opencompass-my-api/.pre-commit-config.yaml

ADDED

|

@@ -0,0 +1,96 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

exclude: |

|

| 2 |

+

(?x)^(

|

| 3 |

+

tests/data/|

|

| 4 |

+

opencompass/models/internal/|

|

| 5 |

+

opencompass/utils/internal/|

|

| 6 |

+

opencompass/openicl/icl_evaluator/hf_metrics/|

|

| 7 |

+

opencompass/datasets/lawbench/utils|

|

| 8 |

+

opencompass/datasets/lawbench/evaluation_functions/|

|

| 9 |

+

opencompass/datasets/medbench/|

|

| 10 |

+

opencompass/datasets/teval/|

|

| 11 |

+

opencompass/datasets/NPHardEval/|

|

| 12 |

+

docs/zh_cn/advanced_guides/compassbench_intro.md

|

| 13 |

+

)

|

| 14 |

+

repos:

|

| 15 |

+

- repo: https://github.com/PyCQA/flake8

|

| 16 |

+

rev: 5.0.4

|

| 17 |

+

hooks:

|

| 18 |

+

- id: flake8

|

| 19 |

+

exclude: configs/

|

| 20 |

+

- repo: https://github.com/PyCQA/isort

|

| 21 |

+

rev: 5.11.5

|

| 22 |

+

hooks:

|

| 23 |

+

- id: isort

|

| 24 |

+

exclude: configs/

|

| 25 |

+

- repo: https://github.com/pre-commit/mirrors-yapf

|

| 26 |

+

rev: v0.32.0

|

| 27 |

+

hooks:

|

| 28 |

+

- id: yapf

|

| 29 |

+

exclude: configs/

|

| 30 |

+

- repo: https://github.com/codespell-project/codespell

|

| 31 |

+

rev: v2.2.1

|

| 32 |

+

hooks:

|

| 33 |

+

- id: codespell

|

| 34 |

+

exclude: |

|

| 35 |

+

(?x)^(

|

| 36 |

+

.*\.jsonl|

|

| 37 |

+

configs/

|

| 38 |

+

)

|

| 39 |

+

- repo: https://github.com/pre-commit/pre-commit-hooks

|

| 40 |

+

rev: v4.3.0

|

| 41 |

+

hooks:

|

| 42 |

+

- id: trailing-whitespace

|

| 43 |

+

exclude: |

|

| 44 |

+

(?x)^(

|

| 45 |

+

dicts/|

|

| 46 |

+

projects/.*?/dicts/|

|

| 47 |

+

configs/

|

| 48 |

+

)

|

| 49 |

+

- id: check-yaml

|

| 50 |

+

- id: end-of-file-fixer

|

| 51 |

+

exclude: |

|

| 52 |

+

(?x)^(

|

| 53 |

+

dicts/|

|

| 54 |

+

projects/.*?/dicts/|

|

| 55 |

+

configs/

|

| 56 |

+

)

|

| 57 |

+

- id: requirements-txt-fixer

|

| 58 |

+

- id: double-quote-string-fixer

|

| 59 |

+

exclude: configs/

|

| 60 |

+

- id: check-merge-conflict

|

| 61 |

+

- id: fix-encoding-pragma

|

| 62 |

+

args: ["--remove"]

|

| 63 |

+

- id: mixed-line-ending

|

| 64 |

+

args: ["--fix=lf"]

|

| 65 |

+

- id: mixed-line-ending

|

| 66 |

+

args: ["--fix=lf"]

|

| 67 |

+

- repo: https://github.com/executablebooks/mdformat

|

| 68 |

+

rev: 0.7.9

|

| 69 |

+

hooks:

|

| 70 |

+

- id: mdformat

|

| 71 |

+

args: ["--number", "--table-width", "200"]

|

| 72 |

+

additional_dependencies:

|

| 73 |

+

- mdformat-openmmlab

|

| 74 |

+

- mdformat_frontmatter

|

| 75 |

+

- linkify-it-py

|

| 76 |

+

exclude: configs/

|

| 77 |

+

- repo: https://github.com/myint/docformatter

|

| 78 |

+

rev: v1.3.1

|

| 79 |

+

hooks:

|

| 80 |

+

- id: docformatter

|

| 81 |

+

args: ["--in-place", "--wrap-descriptions", "79"]

|

| 82 |

+

- repo: local

|

| 83 |

+

hooks:

|

| 84 |

+

- id: update-dataset-suffix

|

| 85 |

+

name: dataset suffix updater

|

| 86 |

+

entry: ./tools/update_dataset_suffix.py

|

| 87 |

+

language: script

|

| 88 |

+

pass_filenames: true

|

| 89 |

+

require_serial: true

|

| 90 |

+

files: ^configs/datasets

|

| 91 |

+

# - repo: https://github.com/open-mmlab/pre-commit-hooks

|

| 92 |

+

# rev: v0.2.0 # Use the ref you want to point at

|

| 93 |

+

# hooks:

|

| 94 |

+

# - id: check-algo-readme

|

| 95 |

+

# - id: check-copyright

|

| 96 |

+

# args: ["mmocr", "tests", "tools"] # these directories will be checked

|

opencompass-my-api/.readthedocs.yml

ADDED

|

@@ -0,0 +1,14 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version: 2

|

| 2 |

+

|

| 3 |

+

# Set the version of Python and other tools you might need

|

| 4 |

+

build:

|

| 5 |

+

os: ubuntu-22.04

|

| 6 |

+

tools:

|

| 7 |

+

python: "3.8"

|

| 8 |

+

|

| 9 |

+

formats:

|

| 10 |

+

- epub

|

| 11 |

+

|

| 12 |

+

python:

|

| 13 |

+

install:

|

| 14 |

+

- requirements: requirements/docs.txt

|

opencompass-my-api/LICENSE

ADDED

|

@@ -0,0 +1,203 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Copyright 2020 OpenCompass Authors. All rights reserved.

|

| 2 |

+

|

| 3 |

+

Apache License

|

| 4 |

+

Version 2.0, January 2004

|

| 5 |

+

http://www.apache.org/licenses/

|

| 6 |

+

|

| 7 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 8 |

+

|

| 9 |

+

1. Definitions.

|

| 10 |

+

|

| 11 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 12 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 13 |

+

|

| 14 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 15 |

+

the copyright owner that is granting the License.

|

| 16 |

+

|

| 17 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 18 |

+

other entities that control, are controlled by, or are under common

|

| 19 |

+

control with that entity. For the purposes of this definition,

|

| 20 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 21 |

+

direction or management of such entity, whether by contract or

|

| 22 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 23 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 24 |

+

|

| 25 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 26 |

+

exercising permissions granted by this License.

|

| 27 |

+

|

| 28 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 29 |

+

including but not limited to software source code, documentation

|

| 30 |

+

source, and configuration files.

|

| 31 |

+

|

| 32 |

+

"Object" form shall mean any form resulting from mechanical

|

| 33 |

+

transformation or translation of a Source form, including but

|

| 34 |

+

not limited to compiled object code, generated documentation,

|

| 35 |

+

and conversions to other media types.

|

| 36 |

+

|

| 37 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 38 |

+

Object form, made available under the License, as indicated by a

|

| 39 |

+

copyright notice that is included in or attached to the work

|

| 40 |

+

(an example is provided in the Appendix below).

|

| 41 |

+

|

| 42 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 43 |

+

form, that is based on (or derived from) the Work and for which the

|

| 44 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 45 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 46 |

+

of this License, Derivative Works shall not include works that remain

|

| 47 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 48 |

+

the Work and Derivative Works thereof.

|

| 49 |

+

|

| 50 |

+

"Contribution" shall mean any work of authorship, including

|

| 51 |

+

the original version of the Work and any modifications or additions

|

| 52 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 53 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 54 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 55 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 56 |

+

means any form of electronic, verbal, or written communication sent

|

| 57 |

+

to the Licensor or its representatives, including but not limited to

|

| 58 |

+

communication on electronic mailing lists, source code control systems,

|

| 59 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 60 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 61 |

+

excluding communication that is conspicuously marked or otherwise

|

| 62 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 63 |

+

|

| 64 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 65 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 66 |

+

subsequently incorporated within the Work.

|

| 67 |

+

|

| 68 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 69 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 70 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 71 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 72 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 73 |

+

Work and such Derivative Works in Source or Object form.

|

| 74 |

+

|

| 75 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 76 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 77 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 78 |

+

(except as stated in this section) patent license to make, have made,

|

| 79 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 80 |

+

where such license applies only to those patent claims licensable

|

| 81 |

+

by such Contributor that are necessarily infringed by their

|

| 82 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 83 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 84 |

+

institute patent litigation against any entity (including a

|

| 85 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 86 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 87 |

+

or contributory patent infringement, then any patent licenses

|

| 88 |

+

granted to You under this License for that Work shall terminate

|

| 89 |

+

as of the date such litigation is filed.

|

| 90 |

+

|

| 91 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 92 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 93 |

+

modifications, and in Source or Object form, provided that You

|

| 94 |

+

meet the following conditions:

|

| 95 |

+

|

| 96 |

+

(a) You must give any other recipients of the Work or

|

| 97 |

+

Derivative Works a copy of this License; and

|

| 98 |

+

|

| 99 |

+

(b) You must cause any modified files to carry prominent notices

|

| 100 |

+

stating that You changed the files; and

|

| 101 |

+

|

| 102 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 103 |

+

that You distribute, all copyright, patent, trademark, and

|

| 104 |

+

attribution notices from the Source form of the Work,

|

| 105 |

+

excluding those notices that do not pertain to any part of

|

| 106 |

+

the Derivative Works; and

|

| 107 |

+

|

| 108 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 109 |

+

distribution, then any Derivative Works that You distribute must

|

| 110 |

+

include a readable copy of the attribution notices contained

|

| 111 |

+

within such NOTICE file, excluding those notices that do not

|

| 112 |

+

pertain to any part of the Derivative Works, in at least one

|

| 113 |

+

of the following places: within a NOTICE text file distributed

|

| 114 |

+

as part of the Derivative Works; within the Source form or

|

| 115 |

+

documentation, if provided along with the Derivative Works; or,

|

| 116 |

+

within a display generated by the Derivative Works, if and

|

| 117 |

+

wherever such third-party notices normally appear. The contents

|

| 118 |

+

of the NOTICE file are for informational purposes only and

|

| 119 |

+

do not modify the License. You may add Your own attribution

|

| 120 |

+

notices within Derivative Works that You distribute, alongside

|

| 121 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 122 |

+

that such additional attribution notices cannot be construed

|

| 123 |

+

as modifying the License.

|

| 124 |

+

|

| 125 |

+

You may add Your own copyright statement to Your modifications and

|

| 126 |

+

may provide additional or different license terms and conditions

|

| 127 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 128 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 129 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 130 |

+

the conditions stated in this License.

|

| 131 |

+

|

| 132 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 133 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 134 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 135 |

+

this License, without any additional terms or conditions.

|

| 136 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 137 |

+

the terms of any separate license agreement you may have executed

|

| 138 |

+

with Licensor regarding such Contributions.

|

| 139 |

+

|

| 140 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 141 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 142 |

+

except as required for reasonable and customary use in describing the

|

| 143 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 144 |

+

|

| 145 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 146 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 147 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 148 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 149 |

+

implied, including, without limitation, any warranties or conditions

|

| 150 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 151 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 152 |

+

appropriateness of using or redistributing the Work and assume any

|

| 153 |

+

risks associated with Your exercise of permissions under this License.

|

| 154 |

+

|

| 155 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 156 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 157 |

+

unless required by applicable law (such as deliberate and grossly

|

| 158 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 159 |

+

liable to You for damages, including any direct, indirect, special,

|

| 160 |

+

incidental, or consequential damages of any character arising as a

|

| 161 |

+

result of this License or out of the use or inability to use the

|

| 162 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 163 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 164 |

+

other commercial damages or losses), even if such Contributor

|

| 165 |

+

has been advised of the possibility of such damages.

|

| 166 |

+

|

| 167 |

+

9. Accepting Warranty or Additional Liability. While redistributing

|

| 168 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 169 |

+

and charge a fee for, acceptance of support, warranty, indemnity,

|

| 170 |

+

or other liability obligations and/or rights consistent with this

|

| 171 |

+

License. However, in accepting such obligations, You may act only

|

| 172 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 173 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 174 |

+

defend, and hold each Contributor harmless for any liability

|

| 175 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 176 |

+

of your accepting any such warranty or additional liability.

|

| 177 |

+

|

| 178 |

+

END OF TERMS AND CONDITIONS

|

| 179 |

+

|

| 180 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 181 |

+

|

| 182 |

+

To apply the Apache License to your work, attach the following

|

| 183 |

+

boilerplate notice, with the fields enclosed by brackets "[]"

|

| 184 |

+

replaced with your own identifying information. (Don't include

|

| 185 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 186 |

+

comment syntax for the file format. We also recommend that a

|

| 187 |

+

file or class name and description of purpose be included on the

|

| 188 |

+

same "printed page" as the copyright notice for easier

|

| 189 |

+

identification within third-party archives.

|

| 190 |

+

|

| 191 |

+

Copyright 2020 OpenCompass Authors.

|

| 192 |

+

|

| 193 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 194 |

+

you may not use this file except in compliance with the License.

|

| 195 |

+

You may obtain a copy of the License at

|

| 196 |

+

|

| 197 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 198 |

+

|

| 199 |

+

Unless required by applicable law or agreed to in writing, software

|

| 200 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 201 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 202 |

+

See the License for the specific language governing permissions and

|

| 203 |

+

limitations under the License.

|

opencompass-my-api/README.md

ADDED

|

@@ -0,0 +1,520 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<div align="center">

|

| 2 |

+

<img src="docs/en/_static/image/logo.svg" width="500px"/>

|

| 3 |

+

<br />

|

| 4 |

+

<br />

|

| 5 |

+

|

| 6 |

+

[](https://opencompass.readthedocs.io/en)

|

| 7 |

+

[](https://github.com/open-compass/opencompass/blob/main/LICENSE)

|

| 8 |

+

|

| 9 |

+

<!-- [](https://pypi.org/project/opencompass/) -->

|

| 10 |

+

|

| 11 |

+

[🌐Website](https://opencompass.org.cn/) |

|

| 12 |

+

[📘Documentation](https://opencompass.readthedocs.io/en/latest/) |

|

| 13 |

+

[🛠️Installation](https://opencompass.readthedocs.io/en/latest/get_started/installation.html) |

|

| 14 |

+

[🤔Reporting Issues](https://github.com/open-compass/opencompass/issues/new/choose)

|

| 15 |

+

|

| 16 |

+

English | [简体中文](README_zh-CN.md)

|

| 17 |

+

|

| 18 |

+

</div>

|

| 19 |

+

|

| 20 |

+

<p align="center">

|

| 21 |

+

👋 join us on <a href="https://discord.gg/KKwfEbFj7U" target="_blank">Discord</a> and <a href="https://r.vansin.top/?r=opencompass" target="_blank">WeChat</a>

|

| 22 |

+

</p>

|

| 23 |

+

|

| 24 |

+

## 📣 OpenCompass 2023 LLM Annual Leaderboard

|

| 25 |

+

|

| 26 |

+

We are honored to have witnessed the tremendous progress of artificial general intelligence together with the community in the past year, and we are also very pleased that **OpenCompass** can help numerous developers and users.

|

| 27 |

+

|

| 28 |

+

We announce the launch of the **OpenCompass 2023 LLM Annual Leaderboard** plan. We expect to release the annual leaderboard of the LLMs in January 2024, systematically evaluating the performance of LLMs in various capabilities such as language, knowledge, reasoning, creation, long-text, and agents.

|

| 29 |

+

|

| 30 |

+

At that time, we will release rankings for both open-source models and commercial API models, aiming to provide a comprehensive, objective, and neutral reference for the industry and research community.

|

| 31 |

+

|

| 32 |

+

We sincerely invite various large models to join the OpenCompass to showcase their performance advantages in different fields. At the same time, we also welcome researchers and developers to provide valuable suggestions and contributions to jointly promote the development of the LLMs. If you have any questions or needs, please feel free to [contact us](mailto:[email protected]). In addition, relevant evaluation contents, performance statistics, and evaluation methods will be open-source along with the leaderboard release.

|

| 33 |

+

|

| 34 |

+

We have provided the more details of the CompassBench 2023 in [Doc](docs/zh_cn/advanced_guides/compassbench_intro.md).

|

| 35 |

+

|

| 36 |

+

Let's look forward to the release of the OpenCompass 2023 LLM Annual Leaderboard!

|

| 37 |

+

|

| 38 |

+

## 🧭 Welcome

|

| 39 |

+

|

| 40 |

+

to **OpenCompass**!

|

| 41 |

+

|

| 42 |

+

Just like a compass guides us on our journey, OpenCompass will guide you through the complex landscape of evaluating large language models. With its powerful algorithms and intuitive interface, OpenCompass makes it easy to assess the quality and effectiveness of your NLP models.

|

| 43 |

+

|

| 44 |

+

🚩🚩🚩 Explore opportunities at OpenCompass! We're currently **hiring full-time researchers/engineers and interns**. If you're passionate about LLM and OpenCompass, don't hesitate to reach out to us via [email](mailto:[email protected]). We'd love to hear from you!

|

| 45 |

+

|

| 46 |

+

🔥🔥🔥 We are delighted to announce that **the OpenCompass has been recommended by the Meta AI**, click [Get Started](https://ai.meta.com/llama/get-started/#validation) of Llama for more information.

|

| 47 |

+

|

| 48 |

+

> **Attention**<br />

|

| 49 |

+

> We launch the OpenCompass Collaboration project, welcome to support diverse evaluation benchmarks into OpenCompass!

|

| 50 |

+

> Clike [Issue](https://github.com/open-compass/opencompass/issues/248) for more information.

|

| 51 |

+

> Let's work together to build a more powerful OpenCompass toolkit!

|

| 52 |

+

|

| 53 |

+

## 🚀 What's New <a><img width="35" height="20" src="https://user-images.githubusercontent.com/12782558/212848161-5e783dd6-11e8-4fe0-bbba-39ffb77730be.png"></a>

|

| 54 |

+

|

| 55 |

+

- **\[2024.01.17\]** We supported the evaluation of [InternLM2](https://github.com/open-compass/opencompass/blob/main/configs/eval_internlm2_keyset.py) and [InternLM2-Chat](https://github.com/open-compass/opencompass/blob/main/configs/eval_internlm2_chat_keyset.py), InternLM2 showed extremely strong performance in these tests, welcome to try! 🔥🔥🔥.

|

| 56 |

+

- **\[2024.01.17\]** We supported the needle in a haystack test with multiple needles, more information can be found [here](https://opencompass.readthedocs.io/en/latest/advanced_guides/needleinahaystack_eval.html#id8) 🔥🔥🔥.

|

| 57 |

+

- **\[2023.12.28\]** We have enabled seamless evaluation of all models developed using [LLaMA2-Accessory](https://github.com/Alpha-VLLM/LLaMA2-Accessory), a powerful toolkit for comprehensive LLM development. 🔥🔥🔥.

|

| 58 |

+

- **\[2023.12.22\]** We have released [T-Eval](https://github.com/open-compass/T-Eval), a step-by-step evaluation benchmark to gauge your LLMs on tool utilization. Welcome to our [Leaderboard](https://open-compass.github.io/T-Eval/leaderboard.html) for more details! 🔥🔥🔥.

|

| 59 |

+

- **\[2023.12.10\]** We have released [VLMEvalKit](https://github.com/open-compass/VLMEvalKit), a toolkit for evaluating vision-language models (VLMs), currently support 20+ VLMs and 7 multi-modal benchmarks (including MMBench series).

|

| 60 |

+

- **\[2023.12.10\]** We have supported Mistral AI's MoE LLM: **Mixtral-8x7B-32K**. Welcome to [MixtralKit](https://github.com/open-compass/MixtralKit) for more details about inference and evaluation.

|

| 61 |

+

|

| 62 |

+

> [More](docs/en/notes/news.md)

|

| 63 |

+

|

| 64 |

+

## ✨ Introduction

|

| 65 |

+

|

| 66 |

+

|

| 67 |

+

|

| 68 |

+

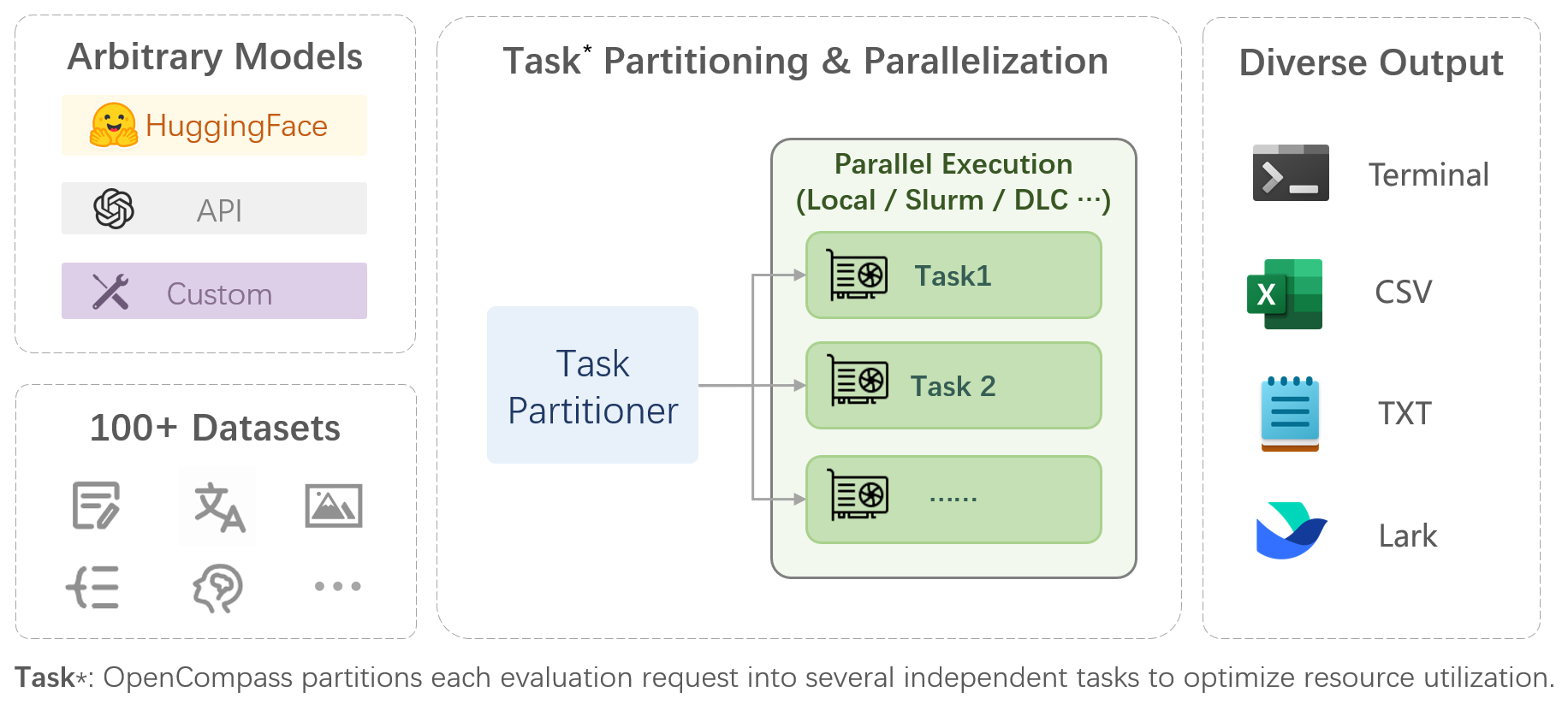

OpenCompass is a one-stop platform for large model evaluation, aiming to provide a fair, open, and reproducible benchmark for large model evaluation. Its main features include:

|

| 69 |

+

|

| 70 |

+

- **Comprehensive support for models and datasets**: Pre-support for 20+ HuggingFace and API models, a model evaluation scheme of 70+ datasets with about 400,000 questions, comprehensively evaluating the capabilities of the models in five dimensions.

|

| 71 |

+

|

| 72 |

+

- **Efficient distributed evaluation**: One line command to implement task division and distributed evaluation, completing the full evaluation of billion-scale models in just a few hours.

|

| 73 |

+

|

| 74 |

+

- **Diversified evaluation paradigms**: Support for zero-shot, few-shot, and chain-of-thought evaluations, combined with standard or dialogue-type prompt templates, to easily stimulate the maximum performance of various models.

|

| 75 |

+

|

| 76 |

+

- **Modular design with high extensibility**: Want to add new models or datasets, customize an advanced task division strategy, or even support a new cluster management system? Everything about OpenCompass can be easily expanded!

|

| 77 |

+

|

| 78 |

+