Reverse Thinking Makes LLMs Stronger Reasoners

Overview

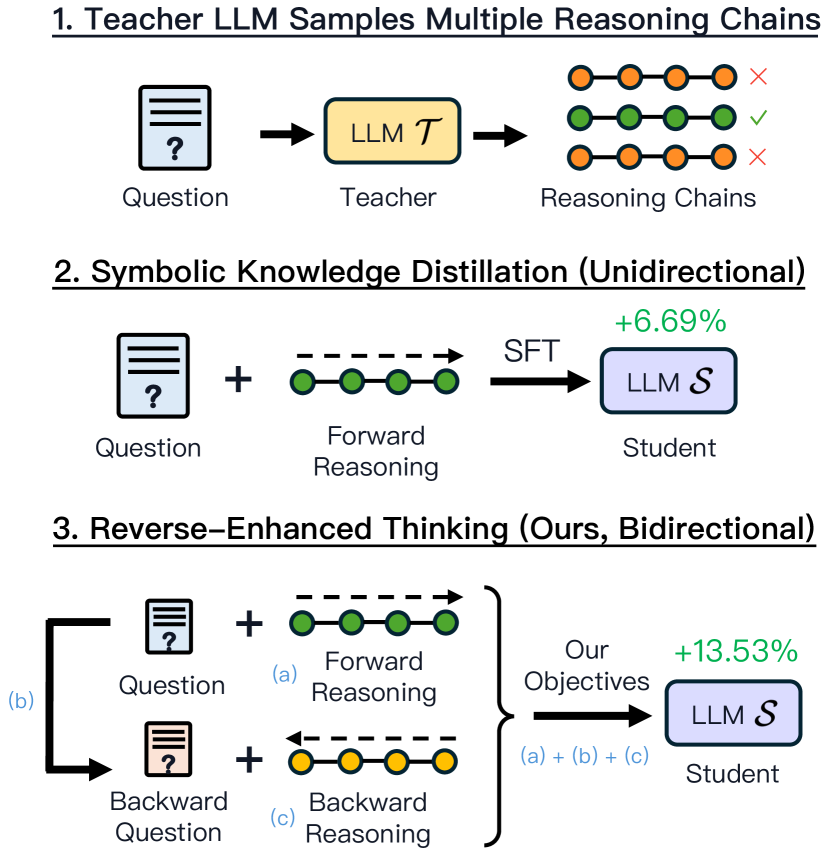

• Introduces "reverse thinking" to improve LLM reasoning capabilities
• Tests on multiple reasoning benchmark datasets
• Achieves significant performance improvements across various tasks
• Works by having LLMs solve problems backward from the answer
• Requires no additional training or model modifications

Plain English Explanation

Reverse thinking works like solving a maze from the end point first. Instead of only starting at the beginning of a problem and working forward, the LLM takes candidate answers and works backward to check whether they are consistent with what the problem states.

Think of it like planning a road trip. Rather than plotting from your starting point, you begin at your destination and trace the route back home. This approach helps catch errors and validate the reasoning path more effectively.

The method improves performance on tasks like math problems, logic puzzles, and commonsense reasoning. It's similar to how students check their work by working backward through a math problem to verify the answer.

Key Findings

The backward reasoning approach improved performance across multiple benchmarks:

• 8.3% improvement on GSM8K math problems
• 6.2% better results on CommonsenseQA
• 5.4% enhancement on LogiQA logical reasoning tasks

The gains were consistent across different model sizes and types, which suggests the benefit comes from the reasoning procedure itself rather than from any particular model.

Technical Explanation

The implementation uses a two-stage process. First, the model generates potential answers through standard forward reasoning. Then it applies reverse verification to check each answer's validity.
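The two-stage flow described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the names `two_stage_answer`, `propose`, and `verify` are hypothetical, the model is assumed to be reached through two caller-supplied functions, and falling back to the first forward candidate when no answer passes the check is an assumption the summary does not specify.

```python
from typing import Callable, List, Optional


def two_stage_answer(
    question: str,
    propose: Callable[[str], List[str]],
    verify: Callable[[str, str], bool],
) -> Optional[str]:
    """Return the first candidate answer that passes reverse verification."""
    # Stage 1: forward reasoning produces candidate answers.
    candidates = propose(question)
    # Stage 2: each candidate is checked by reasoning backward from it.
    for answer in candidates:
        if verify(question, answer):
            return answer
    # Assumption: if nothing verifies, keep the top forward answer.
    return candidates[0] if candidates else None


if __name__ == "__main__":
    # Toy stand-ins for model calls, for illustration only.
    def toy_propose(question: str) -> List[str]:
        return ["12", "15"]

    def toy_verify(question: str, answer: str) -> bool:
        return answer == "15"

    print(two_stage_answer("toy question", toy_propose, toy_verify))
```

Passing `propose` and `verify` as functions keeps the sketch independent of any particular model or API.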

The backward pass examines the logical connections between the answer and given information. This helps identify gaps or inconsistencies in the reasoning chain that might be missed going forward only.
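To make the idea of a backward pass concrete, here is a toy arithmetic example written for this summary, not taken from the paper. The word problem is "3 boxes hold 4 apples each, and 2 apples are eaten; how many remain?". The functions `forward` and `backward_check` are hypothetical names: the check undoes each forward step in reverse order and tests whether it recovers a fact given in the problem.

```python
def forward(boxes: int, per_box: int, eaten: int) -> int:
    """Forward reasoning: total apples minus the ones eaten."""
    return boxes * per_box - eaten


def backward_check(answer: int, per_box: int, eaten: int, boxes_given: int) -> bool:
    """Backward reasoning: undo each step and compare with the given box count."""
    total_before_eating = answer + eaten            # undo the subtraction
    recovered_boxes = total_before_eating / per_box  # undo the multiplication
    return recovered_boxes == boxes_given


if __name__ == "__main__":
    answer = forward(boxes=3, per_box=4, eaten=2)
    print(answer, backward_check(answer, per_box=4, eaten=2, boxes_given=3))
    # A wrong candidate fails to recover the given number of boxes.
    print(backward_check(11, per_box=4, eaten=2, boxes_given=3))
```

A candidate that does not lead back to the stated facts exposes a gap in the chain, which is the kind of inconsistency the backward pass is meant to surface.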

The method requires no model fine-tuning or architectural changes. It works purely through prompt engineering and can be applied to any existing LLM.
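Because the method is described as pure prompt engineering, the two passes can be expressed as two prompt templates. The wording below is hypothetical and only illustrates the shape of such prompts; the summary does not give the actual prompts, and the `VALID`/`INVALID` reply convention is an assumption made here so the verdict can be parsed.

```python
# Hypothetical prompt wording; the actual prompts are not given in the summary.
FORWARD_TEMPLATE = (
    "Question: {question}\n"
    "Reason step by step, then give the final answer."
)

REVERSE_TEMPLATE = (
    "Question: {question}\n"
    "Proposed answer: {answer}\n"
    "Start from the proposed answer and work backward to the facts in the question. "
    "Reply VALID if every fact is recovered, otherwise reply INVALID."
)


def build_forward_prompt(question: str) -> str:
    """Prompt for the standard forward-reasoning pass."""
    return FORWARD_TEMPLATE.format(question=question)


def build_reverse_prompt(question: str, answer: str) -> str:
    """Prompt for the reverse-verification pass on one candidate answer."""
    return REVERSE_TEMPLATE.format(question=question, answer=answer)


def parse_verdict(reply: str) -> bool:
    """Assumed convention: the model's reply begins with VALID or INVALID."""
    return reply.strip().upper().startswith("VALID")


if __name__ == "__main__":
    print(build_reverse_prompt("What is 3 * 4 - 2?", "10"))
```

Since both passes are plain strings, they can be sent to any existing LLM without changing the model.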

Critical Analysis

While effective, the approach has some limitations:

• Doubles the inference time due to two-pass processing
• May not help with problems requiring creative leaps
• Could potentially reinforce existing biases in model reasoning
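The inference-time point can be made concrete with a rough cost estimate. This sketch assumes one forward call produces all candidates and each candidate needs one verification call; `relative_cost` and its parameters are hypothetical names, and real latency also depends on prompt and output lengths.

```python
def relative_cost(n_candidates: int = 1, verify_ratio: float = 1.0) -> float:
    """Cost of forward plus reverse passes, relative to a single forward pass.

    n_candidates: number of candidate answers that get a reverse check.
    verify_ratio: assumed cost of one reverse check relative to the forward pass.
    """
    if n_candidates < 0 or verify_ratio < 0:
        raise ValueError("n_candidates and verify_ratio must be non-negative")
    return 1.0 + n_candidates * verify_ratio


if __name__ == "__main__":
    print(relative_cost())                   # one candidate, equal-cost passes
    print(relative_cost(n_candidates=3))     # three candidates to verify
    print(relative_cost(verify_ratio=0.5))   # cheaper verification pass
```

With one candidate and equally expensive passes the estimate is 2.0, matching the doubling noted in the list above; verifying several candidates raises it further.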

Future work could explore combining forward and reverse thinking dynamically, rather than always using both passes.

Conclusion

Reverse thinking represents a significant advance in LLM reasoning capabilities. The method's simplicity and effectiveness make it broadly applicable across different models and tasks.

The approach demonstrates how relatively simple changes to how we use LLMs can yield substantial improvements. This suggests there may be other fundamental reasoning techniques yet to be discovered that could further enhance AI capabilities.