SEFD: Semantic-Enhanced Framework for Detecting LLM-Generated Text

Overview

- SEFD is a framework for detecting LLM-generated text

- Uses semantic analysis to identify content created by language models

- Combines detection methods that look at word patterns with methods that look at meaning

- Claims 98% accuracy in identifying AI writing

- Works across multiple language models including GPT variants

Plain English Explanation

Detecting AI-written text is becoming crucial as LLM-generated content grows more sophisticated. SEFD analyzes text in two main ways: it looks at how words are used, and it examines the deeper meaning of the content.

Think of it like a detective using multiple methods to spot a forgery. One check examines the paper and ink, while another studies the writing style. SEFD similarly combines different techniques to catch AI text.

The system breaks text into smaller chunks and analyzes them separately, similar to how a teacher might spot copied homework by looking at individual paragraphs rather than the whole essay at once.

Key Findings

The research showed that semantic analysis significantly improves detection accuracy. Key results include:

- Reported 98% accuracy in identifying AI-written text

- Works effectively across different types of content

- Maintains performance even with paraphrased text

- Reduces false positives compared to existing methods
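Accuracy and false positives are separate measurements, which is why the findings list both. The sketch below shows how the two are typically computed from labeled predictions. The function name, the label convention (1 = AI-generated, 0 = human-written), and the toy data are assumptions for illustration, not figures or code from the SEFD research.

```python
from typing import Sequence, Tuple


def accuracy_and_fpr(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[float, float]:
    """Return (accuracy, false positive rate).

    Assumed label convention: 1 = AI-generated, 0 = human-written.
    A false positive is a human-written text flagged as AI-generated.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("at least one example is required")

    correct = sum(t == p for t, p in zip(y_true, y_pred))
    false_positives = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    negatives = sum(t == 0 for t in y_true)

    accuracy = correct / len(y_true)
    # With no human-written examples the rate is undefined; report 0.0 here.
    fpr = false_positives / negatives if negatives else 0.0
    return accuracy, fpr


# Toy data: nine AI texts, one human text, and a detector that flags everything.
labels = [1] * 9 + [0]
predictions = [1] * 10
acc, fpr = accuracy_and_fpr(labels, predictions)
```

In this toy case the detector reaches 90% accuracy while misclassifying every human-written text, so a high accuracy figure alone says little about false positives.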

Technical Explanation

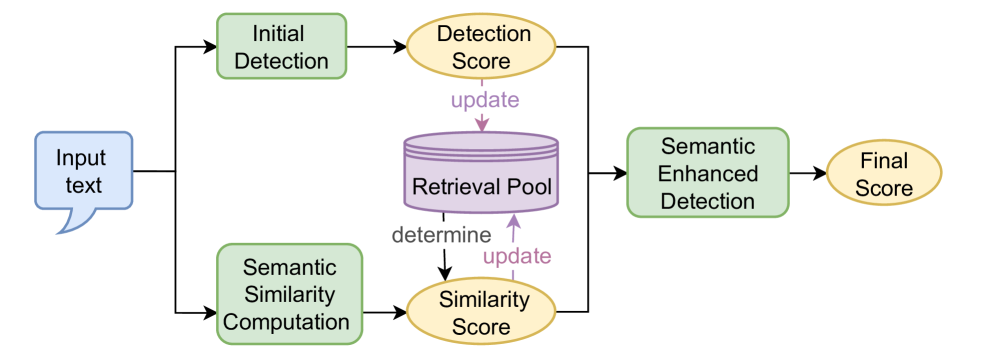

SEFD uses a two-stage detection process. The first stage examines surface-level patterns in the text. The second stage analyzes semantic relationships between words and concepts.
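The two-stage structure can be sketched as two independent scorers whose outputs are combined into one decision score. The summary does not say how SEFD merges the stages, so the weighted average, the `two_stage_score` name, and the placeholder scorers below are all assumptions made for illustration.

```python
from typing import Callable

# A scorer maps text to a value in [0, 1]; higher means "more likely AI-generated".
Scorer = Callable[[str], float]


def two_stage_score(
    text: str,
    surface_scorer: Scorer,
    semantic_scorer: Scorer,
    surface_weight: float = 0.5,
) -> float:
    """Combine a surface-level score and a semantic score.

    The weighted average is an assumed combination rule,
    not the one used by SEFD.
    """
    if not 0.0 <= surface_weight <= 1.0:
        raise ValueError("surface_weight must be between 0 and 1")

    surface = surface_scorer(text)    # stage 1: surface-level patterns
    semantic = semantic_scorer(text)  # stage 2: semantic relationships
    return surface_weight * surface + (1.0 - surface_weight) * semantic


# Placeholder scorers that return fixed values, standing in for real models.
score = two_stage_score(
    "Some text to check.",
    surface_scorer=lambda t: 0.2,
    semantic_scorer=lambda t: 0.8,
)
```

Keeping the stages behind a common scorer interface means either stage can be swapped for a stronger model without changing the combination step.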

The framework employs transformer models to understand context and meaning. It handles long documents by splitting them into manageable segments while maintaining context.
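One common way to split a long document without losing context at the boundaries is a sliding window with overlap, so that neighbouring segments share some words. The summary does not describe SEFD's actual segmentation, so the word-based windows, the `split_into_segments` name, and the default sizes here are assumptions.

```python
from typing import List


def split_into_segments(text: str, segment_size: int = 200, overlap: int = 50) -> List[str]:
    """Split text into word windows of segment_size words.

    Consecutive windows share `overlap` words, so each segment carries
    some context from the one before it. The last window may be shorter.
    """
    if segment_size <= 0:
        raise ValueError("segment_size must be positive")
    if not 0 <= overlap < segment_size:
        raise ValueError("overlap must be >= 0 and smaller than segment_size")

    words = text.split()
    if not words:
        return []

    step = segment_size - overlap
    segments = []
    for start in range(0, len(words), step):
        segments.append(" ".join(words[start:start + segment_size]))
        # Stop once a window has reached the end of the document.
        if start + segment_size >= len(words):
            break
    return segments


segments = split_into_segments("a b c d e f g h i j", segment_size=4, overlap=2)
```

Each segment can then be scored on its own, and the per-segment scores aggregated (for example by averaging) into a document-level score.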

Detection methods include analyzing word distributions, semantic coherence, and contextual patterns. The system compares these features against known patterns in human-written text.
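To make the feature comparison concrete, the sketch below computes two simple features and measures how far they sit from a reference profile. It is a rough stand-in only: type-token ratio approximates a word-distribution feature, and word overlap between adjacent sentences is a crude lexical proxy for semantic coherence, which SEFD measures with transformer models. All function names and the reference values are hypothetical.

```python
import re
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    """Lowercase the text and extract alphabetic word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def type_token_ratio(tokens: List[str]) -> float:
    """Share of distinct words among all words (a word-distribution feature)."""
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def adjacent_sentence_overlap(text: str) -> float:
    """Mean Jaccard word overlap between neighbouring sentences.

    A lexical proxy for coherence, assumed here for illustration.
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    word_sets = [set(tokenize(s)) for s in sentences]
    scores = []
    for a, b in zip(word_sets, word_sets[1:]):
        union = a | b
        scores.append(len(a & b) / len(union) if union else 0.0)
    return sum(scores) / len(scores) if scores else 0.0


def extract_features(text: str) -> Dict[str, float]:
    return {
        "type_token_ratio": type_token_ratio(tokenize(text)),
        "adjacent_overlap": adjacent_sentence_overlap(text),
    }


def deviation_from_reference(features: Dict[str, float], reference: Dict[str, float]) -> Dict[str, float]:
    """Signed difference between each feature and its reference value."""
    return {name: features[name] - reference[name] for name in reference}


# Hypothetical reference profile; real values would be estimated from a
# corpus of human-written text.
human_reference = {"type_token_ratio": 0.6, "adjacent_overlap": 0.2}

features = extract_features("The cat sat. The cat ran.")
deviations = deviation_from_reference(features, human_reference)
```

A real detector would feed many such deviations into a trained classifier instead of inspecting them one by one.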

Critical Analysis

While SEFD shows promising results, several limitations exist:

- May struggle with highly technical or specialized content

- Performance could decrease as AI writing improves

- Limited testing across different languages

- Resource-intensive processing requirements

The research would benefit from broader testing across different writing styles and genres. Long-term effectiveness against evolving AI models remains uncertain.

Conclusion

SEFD represents a step forward in detecting AI-generated content. By combining semantic analysis with traditional detection methods, it aims to provide a more robust solution than previous approaches.

The high accuracy and ability to handle paraphrased content make it particularly valuable for educational institutions and content platforms. However, ongoing development will be needed to keep pace with advancing AI technology.