Upload folder using huggingface_hub

- .gitattributes +4 -0

- Baichuan2-7B-Chat-rk3588-w8a8-opt-0-hybrid-ratio-0.0.rkllm +3 -0

- Baichuan2-7B-Chat-rk3588-w8a8-opt-1-hybrid-ratio-0.0.rkllm +3 -0

- Baichuan2-7B-Chat-rk3588-w8a8_g128-opt-0-hybrid-ratio-0.0.rkllm +3 -0

- Baichuan2-7B-Chat-rk3588-w8a8_g128-opt-1-hybrid-ratio-0.0.rkllm +3 -0

- README.md +211 -0

- config.json +29 -0

- generation_config.json +14 -0

- special_tokens_map.json +30 -0

- tokenizer_config.json +36 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,7 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

Baichuan2-7B-Chat-rk3588-w8a8-opt-0-hybrid-ratio-0.0.rkllm filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

Baichuan2-7B-Chat-rk3588-w8a8-opt-1-hybrid-ratio-0.0.rkllm filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

Baichuan2-7B-Chat-rk3588-w8a8_g128-opt-0-hybrid-ratio-0.0.rkllm filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

Baichuan2-7B-Chat-rk3588-w8a8_g128-opt-1-hybrid-ratio-0.0.rkllm filter=lfs diff=lfs merge=lfs -text

|
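The patterns in this hunk decide which files Git LFS stores as pointers. The following is a minimal sketch of that matching, assuming plain shell-style globbing on the bare filename; real `.gitattributes` matching follows gitignore-like rules, so this is only an approximation. The `LFS_PATTERNS` list and the `is_lfs_tracked` helper are hypothetical names, and only the patterns visible in the hunk are included.

```python
from fnmatch import fnmatchcase

# Patterns copied from the .gitattributes hunk above (only the lines shown there).
LFS_PATTERNS = [
    "*.zip",
    "*.zst",
    "*tfevents*",
    "Baichuan2-7B-Chat-rk3588-w8a8-opt-0-hybrid-ratio-0.0.rkllm",
    "Baichuan2-7B-Chat-rk3588-w8a8-opt-1-hybrid-ratio-0.0.rkllm",
    "Baichuan2-7B-Chat-rk3588-w8a8_g128-opt-0-hybrid-ratio-0.0.rkllm",
    "Baichuan2-7B-Chat-rk3588-w8a8_g128-opt-1-hybrid-ratio-0.0.rkllm",
]


def is_lfs_tracked(filename: str) -> bool:
    """Approximate check: does the bare filename match any listed pattern?"""
    return any(fnmatchcase(filename, pattern) for pattern in LFS_PATTERNS)


if __name__ == "__main__":
    for name in ["README.md", "archive.zip",
                 "Baichuan2-7B-Chat-rk3588-w8a8-opt-0-hybrid-ratio-0.0.rkllm"]:
        print(name, is_lfs_tracked(name))
```

Because the four `.rkllm` files are listed by exact name rather than by a `*.rkllm` glob, a new model file with a different name would not be matched by these entries.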

Baichuan2-7B-Chat-rk3588-w8a8-opt-0-hybrid-ratio-0.0.rkllm

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:47c9b88d869a3ce099c40d2e63fbf254840ea5567bdfabaa67b25d3edc9bb6cc

|

| 3 |

+

size 8050623604

|

Baichuan2-7B-Chat-rk3588-w8a8-opt-1-hybrid-ratio-0.0.rkllm

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:e849a802ff8cbc98b5fcc6b351b63deb61534ce51be3e30bcf1969ee97677428

|

| 3 |

+

size 8050623604

|

Baichuan2-7B-Chat-rk3588-w8a8_g128-opt-0-hybrid-ratio-0.0.rkllm

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

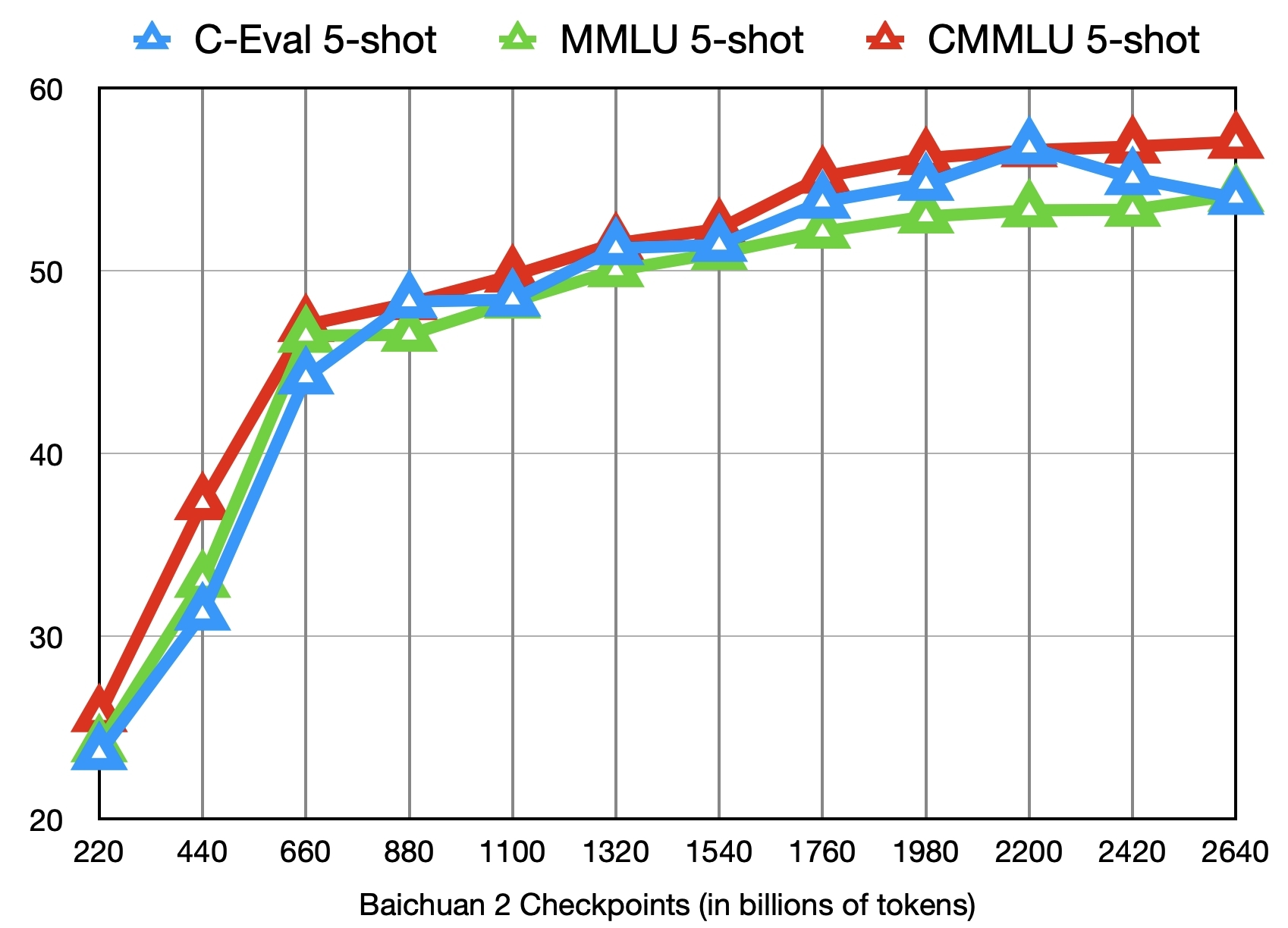

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:90e2500448cda44436ec61362c77ef7f3561b1ef3af1db9179e504138fe2a060

|

| 3 |

+

size 8538829036

|

Baichuan2-7B-Chat-rk3588-w8a8_g128-opt-1-hybrid-ratio-0.0.rkllm

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:04342ca17241e58e529e80db34a242c5e30817aeedec7e0bad2cba8f94321302

|

| 3 |

+

size 8538829036

|
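Each `.rkllm` entry above is a three-line Git LFS pointer rather than the model itself. As a rough illustration, the sketch below parses one pointer and converts its size to GiB. The `parse_lfs_pointer` helper is a hypothetical name; the pointer text and the second size are copied from the hunks above.

```python
def parse_lfs_pointer(text: str) -> dict:
    """Parse the 'key value' lines of a Git LFS pointer file into a dict."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    hash_algo, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "hash_algo": hash_algo,
        "digest": digest,
        "size": int(fields["size"]),  # size of the real file, in bytes
    }


# Pointer for the w8a8, opt-0 file, copied from the diff above.
POINTER = """version https://git-lfs.github.com/spec/v1
oid sha256:47c9b88d869a3ce099c40d2e63fbf254840ea5567bdfabaa67b25d3edc9bb6cc
size 8050623604
"""

info = parse_lfs_pointer(POINTER)
size_gib = info["size"] / 2**30

# Size of the w8a8_g128 files, also from the diff above.
G128_SIZE = 8538829036
extra_bytes = G128_SIZE - info["size"]

if __name__ == "__main__":
    print(f"{info['hash_algo']} digest, {size_gib:.2f} GiB")
    print(f"w8a8_g128 is {extra_bytes / 2**20:.0f} MiB larger than w8a8")
```

The two `opt` variants of each quantization have identical sizes in the pointers above, so the size difference comes from the quantization type alone.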

README.md

ADDED

|

@@ -0,0 +1,211 @@


| 1 |

+

---

|

| 2 |

+

language:

|

| 3 |

+

- en

|

| 4 |

+

- zh

|

| 5 |

+

license_name: baichuan2-community-license

|

| 6 |

+

license_link: https://huggingface.co/baichuan-inc/Baichuan2-7B-Chat/blob/main/Community%20License%20for%20Baichuan2%20Model.pdf

|

| 7 |

+

tasks:

|

| 8 |

+

- text-generation

|

| 9 |

+

---

|

| 10 |

+

# Baichuan2-7B-Chat-RK3588-1.1.1

|

| 11 |

+

|

| 12 |

+

This version of Baichuan2-7B-Chat has been converted to run on the RK3588 NPU using w8a8 and w8a8_g128 quantization.

|
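The four model files in this commit encode their conversion settings in the filename. The sketch below splits a filename into those parts. The field names (`platform`, `quant`, `opt`, `hybrid_ratio`) are guesses read off the filenames, not documented RKLLM terminology, and `parse_rkllm_filename` is a hypothetical helper.

```python
import re

# Assumed layout: <model>-<platform>-<quant>-opt-<level>-hybrid-ratio-<ratio>.rkllm
FILENAME_RE = re.compile(
    r"^(?P<model>.+)-(?P<platform>rk\d+)-(?P<quant>w\d+a\d+(?:_g\d+)?)"
    r"-opt-(?P<opt>\d+)-hybrid-ratio-(?P<hybrid_ratio>\d+(?:\.\d+)?)\.rkllm$"
)


def parse_rkllm_filename(filename: str) -> dict:
    """Split a converted-model filename into its assumed components."""
    match = FILENAME_RE.match(filename)
    if match is None:
        raise ValueError(f"unrecognized filename: {filename}")
    parts = match.groupdict()
    parts["opt"] = int(parts["opt"])
    parts["hybrid_ratio"] = float(parts["hybrid_ratio"])
    return parts


if __name__ == "__main__":
    name = "Baichuan2-7B-Chat-rk3588-w8a8_g128-opt-1-hybrid-ratio-0.0.rkllm"
    print(parse_rkllm_filename(name))
```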

| 13 |

+

|

| 14 |

+

This model has been optimized with the following LoRA:

|

| 15 |

+

|

| 16 |

+

Compatible with RKLLM version: 1.1.1

|

| 17 |

+

|

| 18 |

+

### Useful links:

|

| 19 |

+

[Official RKLLM GitHub](https://github.com/airockchip/rknn-llm)

|

| 20 |

+

|

| 21 |

+

[RockchipNPU Reddit](https://reddit.com/r/RockchipNPU)

|

| 22 |

+

|

| 23 |

+

[EZRKNN-LLM](https://github.com/Pelochus/ezrknn-llm/)

|

| 24 |

+

|

| 25 |

+

Pretty much anything by these folks: [marty1885](https://github.com/marty1885) and [happyme531](https://huggingface.co/happyme531)

|

| 26 |

+

|

| 27 |

+

# Original Model Card for base model, Baichuan2-7B-Chat, below:

|

| 28 |

+

|

| 29 |

+

|

| 30 |

+

<!-- markdownlint-disable first-line-h1 -->

|

| 31 |

+

<!-- markdownlint-disable html -->

|

| 32 |

+

<div align="center">

|

| 33 |

+

<h1>

|

| 34 |

+

Baichuan 2

|

| 35 |

+

</h1>

|

| 36 |

+

</div>

|

| 37 |

+

|

| 38 |

+

<div align="center">

|

| 39 |

+

<a href="https://github.com/baichuan-inc/Baichuan2" target="_blank">🦉GitHub</a> | <a href="https://github.com/baichuan-inc/Baichuan-7B/blob/main/media/wechat.jpeg?raw=true" target="_blank">💬WeChat</a>

|

| 40 |

+

</div>

|

| 41 |

+

<div align="center">

|

| 42 |

+

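The Baichuan API supports search augmentation and a 192K long context window, and now adds the Baichuan search-augmented knowledge base, free for a limited time!<br>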

|

| 43 |

+

🚀 The <a href="https://www.baichuan-ai.com/" target="_blank">Baichuan large-model online chat platform</a> is now officially open to the public 🎉

|

| 44 |

+

</div>

|

| 45 |

+

|

| 46 |

+

# Table of Contents

|

| 47 |

+

|

| 48 |

+

- [📖 Introduction](#Introduction)

|

| 49 |

+

- [⚙️ Quick Start](#Start)

|

| 50 |

+

- [📊 Benchmark Evaluation](#Benchmark)

|

| 51 |

+

- [👥 Community](#Community)

|

| 52 |

+

- [📜 Terms and Conditions](#Terms)

|

| 53 |

+

|

| 54 |

+

|

| 55 |

+

# <span id="Introduction">Introduction</span>

|

| 56 |

+

|

| 57 |

+

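Baichuan 2 is the new generation of open-source large language models launched by [Baichuan Intelligence](https://www.baichuan-ai.com), trained on a high-quality corpus of **2.6 trillion** tokens. On authoritative Chinese and English benchmarks

|

| 58 |

+

it achieves the best results among models of the same size. This release includes Base and Chat versions at 7B and 13B, along with 4-bit

|

| 59 |

+

quantized Chat versions. All versions are fully open to academic research, and developers may use them commercially free of charge after applying by [email](mailto:[email protected]) and obtaining an official commercial license. The released versions and download links are listed in the table below: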

|

| 60 |

+

|

| 61 |

+

Baichuan 2 is the new generation of large-scale open-source language models launched by [Baichuan Intelligence inc.](https://www.baichuan-ai.com/).

|

| 62 |

+

It is trained on a high-quality corpus of 2.6 trillion tokens and achieves the best performance among models of the same size on authoritative Chinese and English benchmarks.

|

| 63 |

+

This release includes 7B and 13B versions of both the Base and Chat models, along with a 4-bit quantized version of the Chat model.

|

| 64 |

+

All versions are fully open to academic research, and developers can also use them for free in commercial applications after obtaining an official commercial license through [email request](mailto:[email protected]).

|

| 65 |

+

The specific release versions and download links are listed in the table below:

|

| 66 |

+

|

| 67 |

+

| | Base Model | Chat Model | 4bits Quantized Chat Model |

|

| 68 |

+

|:---:|:--------------------:|:--------------------:|:--------------------------:|

|

| 69 |

+

| 7B | [Baichuan2-7B-Base](https://huggingface.co/baichuan-inc/Baichuan2-7B-Base) | [Baichuan2-7B-Chat](https://huggingface.co/baichuan-inc/Baichuan2-7B-Chat) | [Baichuan2-7B-Chat-4bits](https://huggingface.co/baichuan-inc/Baichuan2-7B-Chat-4bits) |

|

| 70 |

+

| 13B | [Baichuan2-13B-Base](https://huggingface.co/baichuan-inc/Baichuan2-13B-Base) | [Baichuan2-13B-Chat](https://huggingface.co/baichuan-inc/Baichuan2-13B-Chat) | [Baichuan2-13B-Chat-4bits](https://huggingface.co/baichuan-inc/Baichuan2-13B-Chat-4bits) |

|

| 71 |

+

|

| 72 |

+

# <span id="Start">Quick Start</span>

|

| 73 |

+

|

| 74 |

+

In the Baichuan 2 series, we use `F.scaled_dot_product_attention`, a feature added in PyTorch 2.0, to speed up inference, so the models must be run in a PyTorch 2.0 environment.

|

| 75 |

+

|

| 76 |

+

In the Baichuan 2 series models, we have utilized the new feature `F.scaled_dot_product_attention` introduced in PyTorch 2.0 to accelerate inference speed. Therefore, the model needs to be run in a PyTorch 2.0 environment.

|

| 77 |

+

|

| 78 |

+

|

| 79 |

+

```python

|

| 80 |

+

import torch

|

| 81 |

+

from transformers import AutoModelForCausalLM, AutoTokenizer

|

| 82 |

+

from transformers.generation.utils import GenerationConfig

|

| 83 |

+

tokenizer = AutoTokenizer.from_pretrained("baichuan-inc/Baichuan2-7B-Chat", use_fast=False, trust_remote_code=True)

|

| 84 |

+

model = AutoModelForCausalLM.from_pretrained("baichuan-inc/Baichuan2-7B-Chat", device_map="auto", torch_dtype=torch.bfloat16, trust_remote_code=True)

|

| 85 |

+

model.generation_config = GenerationConfig.from_pretrained("baichuan-inc/Baichuan2-7B-Chat")

|

| 86 |

+

messages = []

|

| 87 |

+

messages.append({"role": "user", "content": "解释一下“温故而知新”"})

|

| 88 |

+

response = model.chat(tokenizer, messages)

|

| 89 |

+

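print(response)

|

| 90 |

+

# Example output (translated from Chinese): "Reviewing the old to learn the new" is an ancient Chinese saying from the "Wei Zheng" chapter of the Analects. It means that by looking back on the past we can discover new knowledge and understanding. In other words, studying history and experience helps us better understand the present and the future.

|

| 91 |

+

|

| 92 |

+

# The saying encourages us to keep reviewing and reflecting on past experience in study and life, and so to gain new insight and growth. By revisiting old knowledge and experiences, we can find new perspectives and understanding, and better respond to a constantly changing world and its challenges.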

|

| 93 |

+

```

|
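Since the quick start above depends on a PyTorch 2.0 feature, a small guard before loading the model can make the requirement explicit. This is a minimal sketch that only inspects a version string; `supports_sdpa` is a hypothetical helper, not part of PyTorch or Transformers.

```python
def supports_sdpa(torch_version: str) -> bool:
    """Return True if the version string names PyTorch 2.0 or newer."""
    major = int(torch_version.split(".")[0])
    return major >= 2


if __name__ == "__main__":
    # Version strings may carry a local build suffix such as "+cu118".
    for version in ["1.13.1", "2.0.0", "2.1.0+cu118"]:
        print(version, supports_sdpa(version))
```

In a real script the argument would be `torch.__version__`, checked before calling `AutoModelForCausalLM.from_pretrained`.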

| 94 |

+

|

| 95 |

+

# <span id="Benchmark">Benchmark Evaluation</span>

|

| 96 |

+

|

| 97 |

+

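We tested the model extensively on authoritative Chinese and English datasets in six domains: [general](https://github.com/baichuan-inc/Baichuan2#%E9%80%9A%E7%94%A8%E9%A2%86%E5%9F%9F), [law](https://github.com/baichuan-inc/Baichuan2#%E6%B3%95%E5%BE%8B%E5%8C%BB%E7%96%97), [medicine](https://github.com/baichuan-inc/Baichuan2#%E6%B3%95%E5%BE%8B%E5%8C%BB%E7%96%97), [mathematics](https://github.com/baichuan-inc/Baichuan2#%E6%95%B0%E5%AD%A6%E4%BB%A3%E7%A0%81), [code](https://github.com/baichuan-inc/Baichuan2#%E6%95%B0%E5%AD%A6%E4%BB%A3%E7%A0%81), and [multilingual translation](https://github.com/baichuan-inc/Baichuan2#%E5%A4%9A%E8%AF%AD%E8%A8%80%E7%BF%BB%E8%AF%91). More detailed evaluation results are available on [GitHub](https://github.com/baichuan-inc/Baichuan2).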

|

| 98 |

+

|

| 99 |

+

We have extensively tested the model on authoritative Chinese-English datasets across six domains: [General](https://github.com/baichuan-inc/Baichuan2/blob/main/README_EN.md#general-domain), [Legal](https://github.com/baichuan-inc/Baichuan2/blob/main/README_EN.md#law-and-medicine), [Medical](https://github.com/baichuan-inc/Baichuan2/blob/main/README_EN.md#law-and-medicine), [Mathematics](https://github.com/baichuan-inc/Baichuan2/blob/main/README_EN.md#mathematics-and-code), [Code](https://github.com/baichuan-inc/Baichuan2/blob/main/README_EN.md#mathematics-and-code), and [Multilingual Translation](https://github.com/baichuan-inc/Baichuan2/blob/main/README_EN.md#multilingual-translation). For more detailed evaluation results, please refer to [GitHub](https://github.com/baichuan-inc/Baichuan2/blob/main/README_EN.md).

|

| 100 |

+

|

| 101 |

+

### 7B Model Results

|

| 102 |

+

|

| 103 |

+

| | **C-Eval** | **MMLU** | **CMMLU** | **Gaokao** | **AGIEval** | **BBH** |

|

| 104 |

+

|:-----------------------:|:----------:|:--------:|:---------:|:----------:|:-----------:|:-------:|

|

| 105 |

+

| | 5-shot | 5-shot | 5-shot | 5-shot | 5-shot | 3-shot |

|

| 106 |

+

| **GPT-4** | 68.40 | 83.93 | 70.33 | 66.15 | 63.27 | 75.12 |

|

| 107 |

+

| **GPT-3.5 Turbo** | 51.10 | 68.54 | 54.06 | 47.07 | 46.13 | 61.59 |

|

| 108 |

+

| **LLaMA-7B** | 27.10 | 35.10 | 26.75 | 27.81 | 28.17 | 32.38 |

|

| 109 |

+

| **LLaMA2-7B** | 28.90 | 45.73 | 31.38 | 25.97 | 26.53 | 39.16 |

|

| 110 |

+

| **MPT-7B** | 27.15 | 27.93 | 26.00 | 26.54 | 24.83 | 35.20 |

|

| 111 |

+

| **Falcon-7B** | 24.23 | 26.03 | 25.66 | 24.24 | 24.10 | 28.77 |

|

| 112 |

+

| **ChatGLM2-6B** | 50.20 | 45.90 | 49.00 | 49.44 | 45.28 | 31.65 |

|

| 113 |

+

| **[Baichuan-7B]** | 42.80 | 42.30 | 44.02 | 36.34 | 34.44 | 32.48 |

|

| 114 |

+

| **[Baichuan2-7B-Base]** | 54.00 | 54.16 | 57.07 | 47.47 | 42.73 | 41.56 |

|

| 115 |

+

|

| 116 |

+

### 13B Model Results

|

| 117 |

+

|

| 118 |

+

| | **C-Eval** | **MMLU** | **CMMLU** | **Gaokao** | **AGIEval** | **BBH** |

|

| 119 |

+

|:---------------------------:|:----------:|:--------:|:---------:|:----------:|:-----------:|:-------:|

|

| 120 |

+

| | 5-shot | 5-shot | 5-shot | 5-shot | 5-shot | 3-shot |

|

| 121 |

+

| **GPT-4** | 68.40 | 83.93 | 70.33 | 66.15 | 63.27 | 75.12 |

|

| 122 |

+

| **GPT-3.5 Turbo** | 51.10 | 68.54 | 54.06 | 47.07 | 46.13 | 61.59 |

|

| 123 |

+

| **LLaMA-13B** | 28.50 | 46.30 | 31.15 | 28.23 | 28.22 | 37.89 |

|

| 124 |

+

| **LLaMA2-13B** | 35.80 | 55.09 | 37.99 | 30.83 | 32.29 | 46.98 |

|

| 125 |

+

| **Vicuna-13B** | 32.80 | 52.00 | 36.28 | 30.11 | 31.55 | 43.04 |

|

| 126 |

+

| **Chinese-Alpaca-Plus-13B** | 38.80 | 43.90 | 33.43 | 34.78 | 35.46 | 28.94 |

|

| 127 |

+

| **XVERSE-13B** | 53.70 | 55.21 | 58.44 | 44.69 | 42.54 | 38.06 |

|

| 128 |

+

| **[Baichuan-13B-Base]** | 52.40 | 51.60 | 55.30 | 49.69 | 43.20 | 43.01 |

|

| 129 |

+

| **[Baichuan2-13B-Base]** | 58.10 | 59.17 | 61.97 | 54.33 | 48.17 | 48.78 |

|

| 130 |

+

|

| 131 |

+

## Training Dynamics

|

| 132 |

+

|

| 133 |

+

In addition to the [Baichuan2-7B-Base](https://huggingface.co/baichuan-inc/Baichuan2-7B-Base) model trained on 2.6 trillion tokens, we also provide 11 earlier intermediate checkpoints (trained on roughly 0.2 to 2.4 trillion tokens) for community research

|

| 134 |

+

([intermediate checkpoint download](https://huggingface.co/baichuan-inc/Baichuan2-7B-Intermediate-Checkpoints)). The figure below shows how these checkpoints perform on three benchmarks: C-Eval, MMLU, and CMMLU:

|

| 135 |

+

|

| 136 |

+

In addition to the [Baichuan2-7B-Base](https://huggingface.co/baichuan-inc/Baichuan2-7B-Base) model trained on 2.6 trillion tokens, we also offer 11 additional intermediate-stage models for community research, corresponding to training on approximately 0.2 to 2.4 trillion tokens each ([Intermediate Checkpoints Download](https://huggingface.co/baichuan-inc/Baichuan2-7B-Intermediate-Checkpoints)). The graph below shows the performance changes of these checkpoints on three benchmarks: C-Eval, MMLU, and CMMLU.

|

| 137 |

+

|

| 138 |

+

|

| 139 |

+

|

| 140 |

+

# <span id="Community">Community</span>

|

| 141 |

+

|

| 142 |

+

## Running Baichuan models on the Intel Core Ultra platform

|

| 143 |

+

|

| 144 |

+

To deploy the [Baichuan2-7B-Chat] and [Baichuan2-13B-Chat] models on Core™/Xeon® Scalable Processors or with an Arc™ GPU, BigDL-LLM ([CPU], [GPU]) is recommended for better inference performance.

|

| 145 |

+

|

| 146 |

+

For detailed support information, see the [Chinese tutorial](https://github.com/intel-analytics/bigdl-llm-tutorial/tree/main/Chinese_Version), which covers notebook support and [loading, optimizing, and saving methods](https://github.com/intel-analytics/bigdl-llm-tutorial/blob/main/Chinese_Version/ch_3_AppDev_Basic/3_BasicApp.ipynb).

|

| 147 |

+

|

| 148 |

+

When deploying on Core™/Xeon® Scalable Processors or with an Arc™ GPU, BigDL-LLM ([CPU], [GPU]) is recommended for better inference performance.

|

| 149 |

+

|

| 150 |

+

# <span id="Terms">Terms and Conditions</span>

|

| 151 |

+

|

| 152 |

+

## Statement

|

| 153 |

+

|

| 154 |

+

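We hereby declare that our development team has not developed any application based on the Baichuan 2 models, whether on iOS, Android, the web, or any other platform. We strongly urge all users not to use the

|

| 155 |

+

Baichuan 2 models for any activity that endangers national or social security or violates the law. We also ask users not to use the Baichuan 2

|

| 156 |

+

models for Internet services that have not undergone appropriate security review and filing. We hope all users will abide by this principle so that technology develops in a regulated and lawful environment.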

|

| 157 |

+

|

| 158 |

+

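We have done our best to ensure the compliance of the data used in training the model. However, despite our considerable efforts, unforeseen issues may still exist because of the complexity of the model and the data. Therefore, for any problem arising from the use of the

|

| 159 |

+

Baichuan 2 open-source models, including but not limited to data security issues, public opinion risks, or any risks and problems caused by the models being misled, abused, disseminated, or improperly exploited, we assume no responsibility.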

|

| 160 |

+

|

| 161 |

+

We hereby declare that our team has not developed any applications based on Baichuan 2 models, not on iOS, Android, the web, or any other platform. We strongly call on all users not to use Baichuan 2 models for any activities that harm national / social security or violate the law. Also, we ask users not to use Baichuan 2 models for Internet services that have not undergone appropriate security reviews and filings. We hope that all users can abide by this principle and ensure that the development of technology proceeds in a regulated and legal environment.

|

| 162 |

+

|

| 163 |

+

We have done our best to ensure the compliance of the data used in the model training process. However, despite our considerable efforts, there may still be some unforeseeable issues due to the complexity of the model and data. Therefore, if any problems arise due to the use of Baichuan 2 open-source models, including but not limited to data security issues, public opinion risks, or any risks and problems brought about by the model being misled, abused, spread or improperly exploited, we will not assume any responsibility.

|

| 164 |

+

|

| 165 |

+

## License Agreement

|

| 166 |

+

|

| 167 |

+

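Community use of the Baichuan 2 models must comply with [Apache 2.0](https://github.com/baichuan-inc/Baichuan2/blob/main/LICENSE) and the [Community License for Baichuan 2 Model](https://huggingface.co/baichuan-inc/Baichuan2-7B-Base/resolve/main/Baichuan%202%E6%A8%A1%E5%9E%8B%E7%A4%BE%E5%8C%BA%E8%AE%B8%E5%8F%AF%E5%8D%8F%E8%AE%AE.pdf). The Baichuan 2 models support commercial use. If you plan to use the Baichuan 2 models or their derivatives for commercial purposes, please confirm that your entity meets the following conditions:

|

| 168 |

+

1. The daily active users (DAU) of your or your affiliates' services or products number fewer than 1 million.

|

| 169 |

+

2. Neither you nor your affiliates are software service providers or cloud service providers.

|

| 170 |

+

3. There is no possibility that you or your affiliates will sublicense the commercial license granted to you to any third party without Baichuan's permission.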

|

| 171 |

+

|

| 172 |

+

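If the above conditions are met, submit the application materials required by the Community License for Baichuan 2 Model to the contact email [email protected]. Once approved, Baichuan will grant you a non-exclusive, worldwide, non-transferable, non-sublicensable, revocable commercial copyright license.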

|

| 173 |

+

|

| 174 |

+

The community usage of Baichuan 2 model requires adherence to [Apache 2.0](https://github.com/baichuan-inc/Baichuan2/blob/main/LICENSE) and [Community License for Baichuan2 Model](https://huggingface.co/baichuan-inc/Baichuan2-7B-Base/resolve/main/Baichuan%202%E6%A8%A1%E5%9E%8B%E7%A4%BE%E5%8C%BA%E8%AE%B8%E5%8F%AF%E5%8D%8F%E8%AE%AE.pdf). The Baichuan 2 model supports commercial use. If you plan to use the Baichuan 2 model or its derivatives for commercial purposes, please ensure that your entity meets the following conditions:

|

| 175 |

+

|

| 176 |

+

1. The Daily Active Users (DAU) of your or your affiliate's service or product is less than 1 million.

|

| 177 |

+

2. Neither you nor your affiliates are software service providers or cloud service providers.

|

| 178 |

+

3. There is no possibility that you or your affiliates will sublicense the commercial license granted to you to any third party without Baichuan's permission.

|

| 179 |

+

|

| 180 |

+

Upon meeting the above conditions, you need to submit the application materials required by the Baichuan 2 Model Community License Agreement via the following contact email: [email protected]. Once approved, Baichuan will hereby grant you a non-exclusive, global, non-transferable, non-sublicensable, revocable commercial copyright license.

|

| 181 |

+

|

| 182 |

+

|

| 183 |

+

[GitHub]:https://github.com/baichuan-inc/Baichuan2

|

| 184 |

+

[Baichuan2]:https://github.com/baichuan-inc/Baichuan2

|

| 185 |

+

|

| 186 |

+

[Baichuan-7B]:https://huggingface.co/baichuan-inc/Baichuan-7B

|

| 187 |

+

[Baichuan2-7B-Base]:https://huggingface.co/baichuan-inc/Baichuan2-7B-Base

|

| 188 |

+

[Baichuan2-7B-Chat]:https://huggingface.co/baichuan-inc/Baichuan2-7B-Chat

|

| 189 |

+

[Baichuan2-7B-Chat-4bits]:https://huggingface.co/baichuan-inc/Baichuan2-7B-Chat-4bits

|

| 190 |

+

[Baichuan-13B-Base]:https://huggingface.co/baichuan-inc/Baichuan-13B-Base

|

| 191 |

+

[Baichuan2-13B-Base]:https://huggingface.co/baichuan-inc/Baichuan2-13B-Base

|

| 192 |

+

[Baichuan2-13B-Chat]:https://huggingface.co/baichuan-inc/Baichuan2-13B-Chat

|

| 193 |

+

[Baichuan2-13B-Chat-4bits]:https://huggingface.co/baichuan-inc/Baichuan2-13B-Chat-4bits

|

| 194 |

+

|

| 195 |

+

[通用]:https://github.com/baichuan-inc/Baichuan2#%E9%80%9A%E7%94%A8%E9%A2%86%E5%9F%9F

|

| 196 |

+

[法律]:https://github.com/baichuan-inc/Baichuan2#%E6%B3%95%E5%BE%8B%E5%8C%BB%E7%96%97

|

| 197 |

+

[医疗]:https://github.com/baichuan-inc/Baichuan2#%E6%B3%95%E5%BE%8B%E5%8C%BB%E7%96%97

|

| 198 |

+

[数学]:https://github.com/baichuan-inc/Baichuan2#%E6%95%B0%E5%AD%A6%E4%BB%A3%E7%A0%81

|

| 199 |

+

[代码]:https://github.com/baichuan-inc/Baichuan2#%E6%95%B0%E5%AD%A6%E4%BB%A3%E7%A0%81

|

| 200 |

+

[多语言翻译]:https://github.com/baichuan-inc/Baichuan2#%E5%A4%9A%E8%AF%AD%E8%A8%80%E7%BF%BB%E8%AF%91

|

| 201 |

+

|

| 202 |

+

[《Baichuan 2 模型社区许可协议》]:https://huggingface.co/baichuan-inc/Baichuan2-7B-Base/blob/main/Baichuan%202%E6%A8%A1%E5%9E%8B%E7%A4%BE%E5%8C%BA%E8%AE%B8%E5%8F%AF%E5%8D%8F%E8%AE%AE.pdf

|

| 203 |

+

|

| 204 |

+

[邮件申请]: mailto:[email protected]

|

| 205 |

+

[Email]: mailto:[email protected]

|

| 206 |

+

[[email protected]]: mailto:[email protected]

|

| 207 |

+

[训练过程heckpoint下载]: https://huggingface.co/baichuan-inc/Baichuan2-7B-Intermediate-Checkpoints

|

| 208 |

+

[百川智能]: https://www.baichuan-ai.com

|

| 209 |

+

|

| 210 |

+

[CPU]: https://github.com/intel-analytics/BigDL/tree/main/python/llm/example/CPU/HF-Transformers-AutoModels/Model/baichuan2

|

| 211 |

+

[GPU]: https://github.com/intel-analytics/BigDL/tree/main/python/llm/example/GPU/HF-Transformers-AutoModels/Model/baichuan2

|

config.json

ADDED

|

@@ -0,0 +1,29 @@


| 1 |

+

{

|

| 2 |

+

"architectures": [

|

| 3 |

+

"BaichuanForCausalLM"

|

| 4 |

+

],

|

| 5 |

+

"auto_map": {

|

| 6 |

+

"AutoConfig": "configuration_baichuan.BaichuanConfig",

|

| 7 |

+

"AutoModelForCausalLM": "modeling_baichuan.BaichuanForCausalLM"

|

| 8 |

+

},

|

| 9 |

+

"tokenizer_class": "BaichuanTokenizer",

|

| 10 |

+

"bos_token_id": 1,

|

| 11 |

+

"eos_token_id": 2,

|

| 12 |

+

"hidden_act": "silu",

|

| 13 |

+

"hidden_size": 4096,

|

| 14 |

+

"initializer_range": 0.02,

|

| 15 |

+

"intermediate_size": 11008,

|

| 16 |

+

"max_position_embeddings": 4096,

|

| 17 |

+

"model_max_length": 4096,

|

| 18 |

+

"model_type": "baichuan",

|

| 19 |

+

"num_attention_heads": 32,

|

| 20 |

+

"num_hidden_layers": 32,

|

| 21 |

+

"pad_token_id": 0,

|

| 22 |

+

"rms_norm_eps": 1e-06,

|

| 23 |

+

"_from_model_config": true,

|

| 24 |

+

"tie_word_embeddings": false,

|

| 25 |

+

"torch_dtype": "bfloat16",

|

| 26 |

+

"transformers_version": "4.29.2",

|

| 27 |

+

"use_cache": true,

|

| 28 |

+

"vocab_size": 125696

|

| 29 |

+

}

|
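The values in this `config.json` are enough for a rough parameter count. The sketch below assumes a LLaMA-style layout (four `hidden_size × hidden_size` attention projections, a three-matrix gated MLP, no bias terms, two norms per layer); that layout is an assumption and is not stated in the config itself. `estimate_parameters` and `head_dim` are hypothetical helper names.

```python
# Values copied from the config.json hunk above.
CONFIG = {
    "hidden_size": 4096,
    "intermediate_size": 11008,
    "num_attention_heads": 32,
    "num_hidden_layers": 32,
    "vocab_size": 125696,
    "tie_word_embeddings": False,
}


def head_dim(cfg: dict) -> int:
    """Width of one attention head."""
    return cfg["hidden_size"] // cfg["num_attention_heads"]


def estimate_parameters(cfg: dict) -> int:
    """Rough parameter count under the assumed LLaMA-style layout."""
    h = cfg["hidden_size"]
    attention = 4 * h * h                    # Q, K, V and output projections
    mlp = 3 * h * cfg["intermediate_size"]   # gate, up and down projections
    norms = 2 * h                            # two norm weight vectors per layer
    per_layer = attention + mlp + norms
    embeddings = cfg["vocab_size"] * h
    # An untied output head has its own vocab_size x hidden_size matrix.
    output_head = 0 if cfg["tie_word_embeddings"] else cfg["vocab_size"] * h
    final_norm = h
    return cfg["num_hidden_layers"] * per_layer + embeddings + output_head + final_norm


total = estimate_parameters(CONFIG)

if __name__ == "__main__":
    print(f"head_dim={head_dim(CONFIG)}, about {total / 1e9:.2f}B parameters")
```

Under these assumptions the estimate lands near 7.5 billion parameters, which is in line with the roughly 8 GB `.rkllm` files at about one byte per weight.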

generation_config.json

ADDED

|

@@ -0,0 +1,14 @@


| 1 |

+

{

|

| 2 |

+

"pad_token_id": 0,

|

| 3 |

+

"bos_token_id": 1,

|

| 4 |

+

"eos_token_id": 2,

|

| 5 |

+

"user_token_id": 195,

|

| 6 |

+

"assistant_token_id": 196,

|

| 7 |

+

"max_new_tokens": 2048,

|

| 8 |

+

"temperature": 0.3,

|

| 9 |

+

"top_k": 5,

|

| 10 |

+

"top_p": 0.85,

|

| 11 |

+

"repetition_penalty": 1.05,

|

| 12 |

+

"do_sample": true,

|

| 13 |

+

"transformers_version": "4.29.2"

|

| 14 |

+

}

|
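The sampling fields in this `generation_config.json` (`temperature`, `top_k`, `top_p`) combine to narrow the set of candidate tokens at each step. The following is a simplified, pure-Python sketch of that narrowing. It is not the Transformers implementation, it ignores `repetition_penalty`, and `filter_probs` is a hypothetical helper name.

```python
import math


def filter_probs(logits, temperature=0.3, top_k=5, top_p=0.85):
    """Return {token_index: probability} after temperature, top-k and top-p."""
    # Temperature below 1 sharpens the distribution.
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]  # subtract the max for stability
    total = sum(exps)
    probs = [e / total for e in exps]

    # Top-k: keep only the k most likely tokens, then renormalize.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    k_total = sum(probs[i] for i in order)

    # Top-p: keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i] / k_total
        if cumulative >= top_p:
            break

    kept_total = sum(probs[i] for i in kept)
    return {i: probs[i] / kept_total for i in kept}


if __name__ == "__main__":
    print(filter_probs([2.0, 1.0, 0.5, 0.0, -1.0, -2.0]))
    print(filter_probs([0.0] * 10))
```

With a temperature as low as 0.3, one clearly preferred token usually exceeds the `top_p` threshold by itself, so the defaults here give fairly deterministic output even though `do_sample` is true.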

special_tokens_map.json

ADDED

|

@@ -0,0 +1,30 @@


| 1 |

+

{

|

| 2 |

+

"bos_token": {

|

| 3 |

+

"content": "<s>",

|

| 4 |

+

"lstrip": false,

|

| 5 |

+

"normalized": true,

|

| 6 |

+

"rstrip": false,

|

| 7 |

+

"single_word": false

|

| 8 |

+

},

|

| 9 |

+

"eos_token": {

|

| 10 |

+

"content": "</s>",

|

| 11 |

+

"lstrip": false,

|

| 12 |

+

"normalized": true,

|

| 13 |

+

"rstrip": false,

|

| 14 |

+

"single_word": false

|

| 15 |

+

},

|

| 16 |

+

"pad_token": {

|

| 17 |

+

"content": "<unk>",

|

| 18 |

+

"lstrip": false,

|

| 19 |

+

"normalized": true,

|

| 20 |

+

"rstrip": false,

|

| 21 |

+

"single_word": false

|

| 22 |

+

},

|

| 23 |

+

"unk_token": {

|

| 24 |

+

"content": "<unk>",

|

| 25 |

+

"lstrip": false,

|

| 26 |

+

"normalized": true,

|

| 27 |

+

"rstrip": false,

|

| 28 |

+

"single_word": false

|

| 29 |

+

}

|

| 30 |

+

}

|
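One detail in this `special_tokens_map.json` is easy to miss: the padding token reuses the `<unk>` content. The sketch below groups token roles by their content to surface such sharing. The JSON string is a compacted copy of the file above, and `group_by_content` is a hypothetical helper name.

```python
import json
from collections import defaultdict

# Compacted copy of special_tokens_map.json from the diff above.
SPECIAL_TOKENS_JSON = """
{
  "bos_token": {"content": "<s>", "lstrip": false, "normalized": true, "rstrip": false, "single_word": false},
  "eos_token": {"content": "</s>", "lstrip": false, "normalized": true, "rstrip": false, "single_word": false},
  "pad_token": {"content": "<unk>", "lstrip": false, "normalized": true, "rstrip": false, "single_word": false},
  "unk_token": {"content": "<unk>", "lstrip": false, "normalized": true, "rstrip": false, "single_word": false}
}
"""


def group_by_content(token_map: dict) -> dict:
    """Map each token string to the list of roles that use it."""
    groups = defaultdict(list)
    for role, spec in token_map.items():
        groups[spec["content"]].append(role)
    return dict(groups)


groups = group_by_content(json.loads(SPECIAL_TOKENS_JSON))

if __name__ == "__main__":
    for content, roles in groups.items():
        print(content, roles)
```

Code that masks padding by comparing token strings would therefore also mask genuine unknown tokens, so an attention mask is the safer signal.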

tokenizer_config.json

ADDED

|

@@ -0,0 +1,36 @@


| 1 |

+

{

|

| 2 |

+

"auto_map": {

|

| 3 |

+

"AutoTokenizer": ["tokenization_baichuan.BaichuanTokenizer", null]

|

| 4 |

+

},

|

| 5 |

+

"add_bos_token": false,

|

| 6 |

+

"add_eos_token": false,

|

| 7 |

+

"use_fast": false,

|

| 8 |

+

"clean_up_tokenization_spaces": false,

|

| 9 |

+

"eos_token": {

|

| 10 |

+

"__type": "AddedToken",

|

| 11 |

+

"content": "</s>",

|

| 12 |

+

"lstrip": false,

|

| 13 |

+

"normalized": true,

|

| 14 |

+

"rstrip": false,

|

| 15 |

+

"single_word": true

|

| 16 |

+

},

|

| 17 |

+

"model_max_length": 4096,

|

| 18 |

+

"sp_model_kwargs": {},

|

| 19 |

+

"tokenizer_class": "BaichuanTokenizer",

|

| 20 |

+

"pad_token": {

|

| 21 |

+

"__type": "AddedToken",

|

| 22 |

+

"content": "<unk>",

|

| 23 |

+

"lstrip": false,

|

| 24 |

+

"normalized": true,

|

| 25 |

+

"rstrip": false,

|

| 26 |

+

"single_word": true

|

| 27 |

+

},

|

| 28 |

+

"unk_token": {

|

| 29 |

+

"__type": "AddedToken",

|

| 30 |

+

"content": "<unk>",

|

| 31 |

+

"lstrip": false,

|

| 32 |

+

"normalized": true,

|

| 33 |

+

"rstrip": false,

|

| 34 |

+

"single_word": true

|

| 35 |

+

}

|

| 36 |

+

}

|