---

license: mit

dataset_info:

features:

- name: repo_name

dtype: string

- name: sub_path

dtype: string

- name: file_name

dtype: string

- name: file_ext

dtype: string

- name: file_size_in_byte

dtype: int64

- name: line_count

dtype: int64

- name: lang

dtype: string

- name: program_lang

dtype: string

- name: doc_type

dtype: string

splits:

- name: The_Stack_V2

num_bytes: 46577045485

num_examples: 336845710

download_size: 20019085005

dataset_size: 46577045485

configs:

- config_name: default

data_files:

- split: The_Stack_V2

path: data/The_Stack_V2-*

---

This dataset consists of meta information (including the repository name and file path) of the raw code data from **RefineCode**. You can collect those files referring to this metadata and reproduce **RefineCode**!

***Note:** Currently, we have uploaded the meta data covered by The Stack V2 (About 50% file volume). Due to complex legal considerations, we are unable to provide the complete source code currently. We are working hard to make the remaining part available.*

---

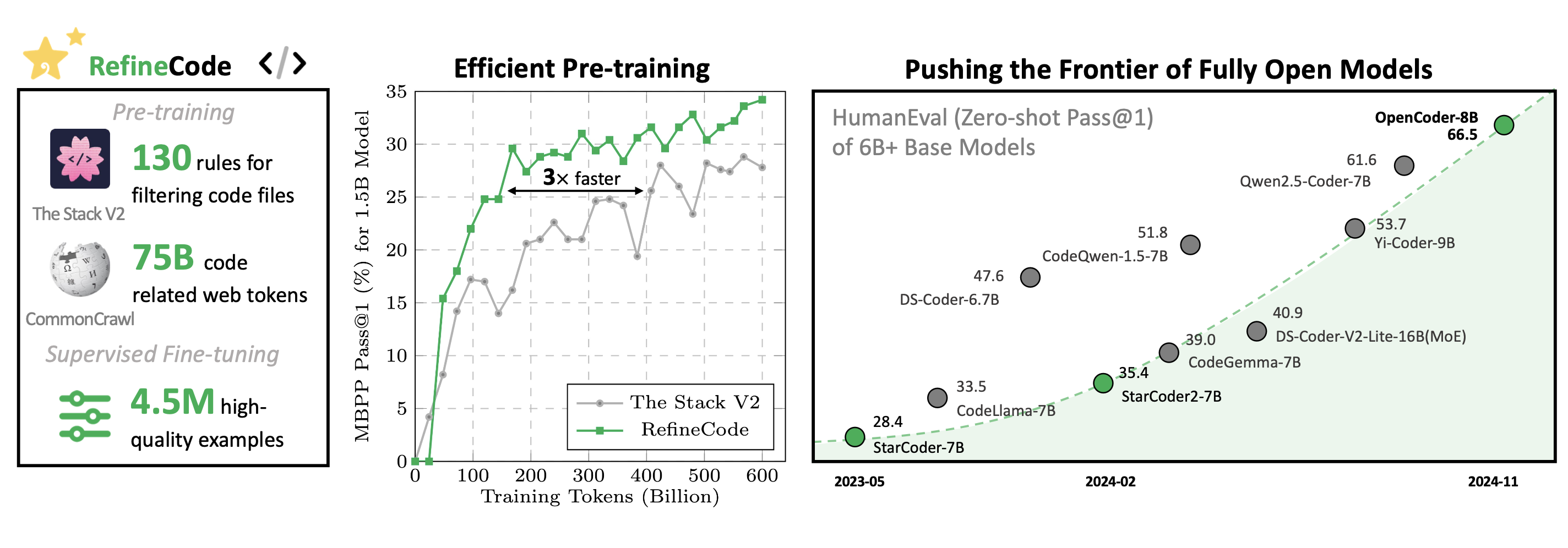

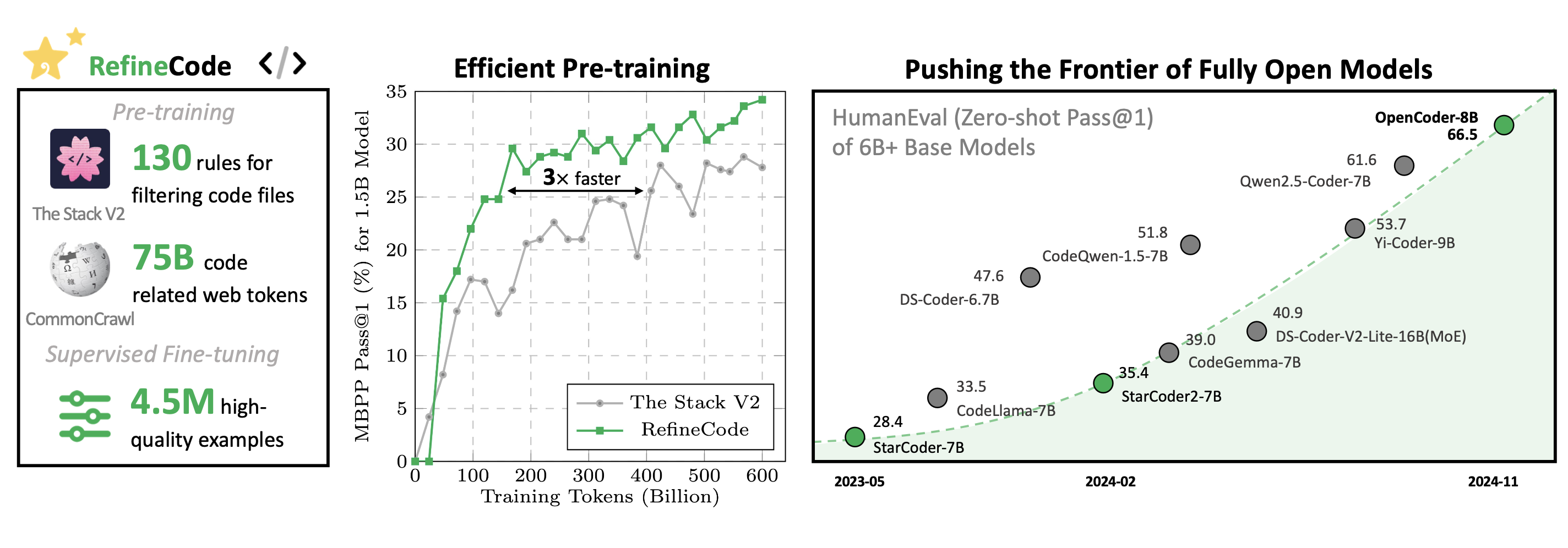

**RefineCode** is a **high-quality**, **reproducible** code pretraining corpus comprising **960 billion** tokens across **607** programming languages and **75 billion** code-related token recalled from web corpus, incorporating over **130** language-specific rules with customized weight assignments.

Our dataset shows better training efficacy and efficiency compared with the training subset of The Stack V2.

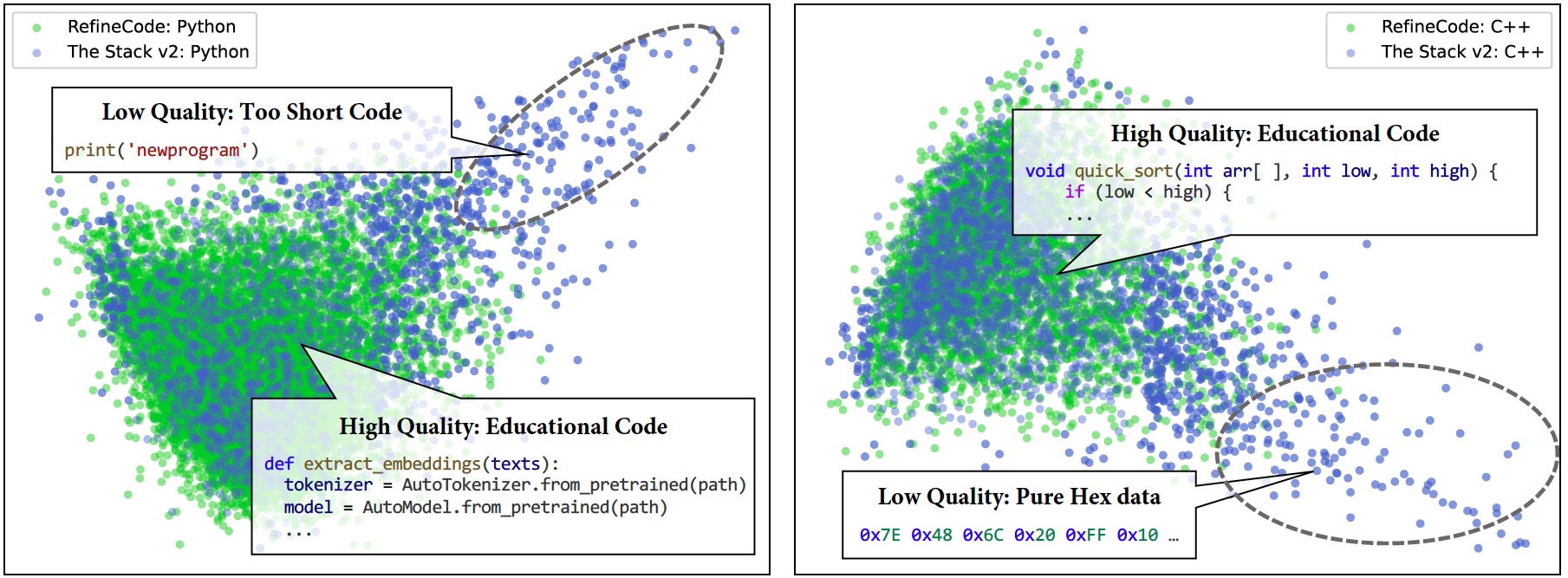

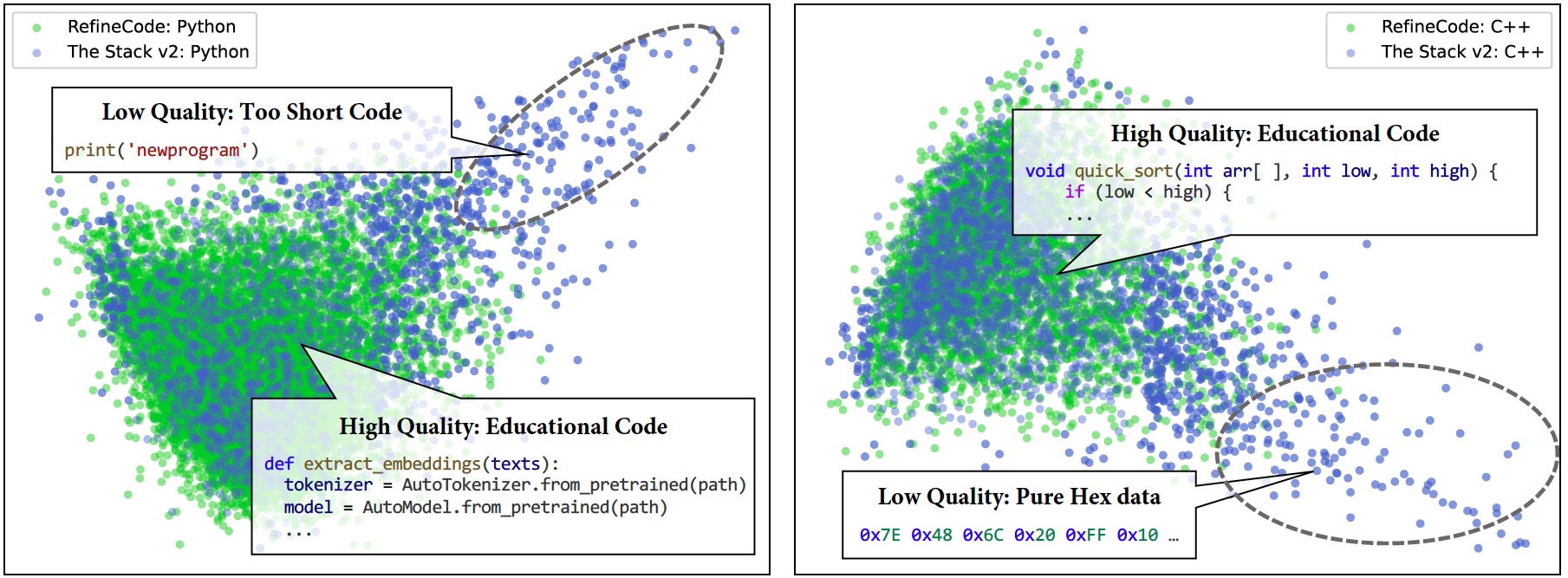

We also use PCA to visualize the embeddings extracted from CodeBERT for The Stack V2 and **RefineCode**, showing a clear advance of our pretraining dataset.

We also use PCA to visualize the embeddings extracted from CodeBERT for The Stack V2 and **RefineCode**, showing a clear advance of our pretraining dataset.

We also use PCA to visualize the embeddings extracted from CodeBERT for The Stack V2 and **RefineCode**, showing a clear advance of our pretraining dataset.

We also use PCA to visualize the embeddings extracted from CodeBERT for The Stack V2 and **RefineCode**, showing a clear advance of our pretraining dataset.