

---
dataset_info:
  features:
  - name: image
    dtype: image
  - name: caption
    dtype: string
  - name: stacked_image
    dtype: image
  - name: only_it_image
    dtype: image
  - name: only_it_image_small
    dtype: image
  - name: crossed_text
    sequence: string
  splits:
  - name: test
    num_bytes: 1005851898.7488415
    num_examples: 5000
  - name: validation
    num_bytes: 1007605144.261219
    num_examples: 5000
  - name: train
    num_bytes: 67801347115.279724
    num_examples: 336448
  download_size: 69580595287
  dataset_size: 69814804158.28978
configs:
- config_name: default
  data_files:
  - split: test
    path: data/test-*
  - split: validation
    path: data/validation-*
  - split: train
    path: data/train-*
license: cc-by-sa-4.0
source_datasets:
- wikimedia/wit_base
task_categories:
- visual-question-answering
language:
- zh
pretty_name: VCR
arxiv: 2406.06462
size_categories:
- 100K<n<1M
---

# The VCR-Wiki Dataset for Visual Caption Restoration (VCR)

🏠 [Paper](https://arxiv.org/abs/2406.06462) | 👩🏻💻 [GitHub](https://github.com/tianyu-z/vcr) | 🤗 [Huggingface Datasets](https://huggingface.co/vcr-org) | 📏 [Evaluation with lmms-eval](https://github.com/EvolvingLMMs-Lab/lmms-eval)

This is the official Hugging Face dataset for VCR-Wiki, a dataset for the [Visual Caption Restoration (VCR)](https://arxiv.org/abs/2406.06462) task.

VCR is designed to measure vision-language models' ability to accurately restore partially obscured text using pixel-level hints within images.

We found that OCR and text-based processing become ineffective in VCR, because accurate text restoration depends on the combined information from the provided images, the context, and subtle cues from the tiny exposed areas of the masked text. We developed a pipeline that generates synthetic images for the VCR task from image-caption pairs, with adjustable caption visibility to control task difficulty. The task is generally easy for native speakers of the corresponding language, yet initial results indicate that current vision-language models fall short of human performance.

## Dataset Description

- **GitHub:** [VCR GitHub](https://github.com/tianyu-z/vcr)

- **Paper:** [VCR: Visual Caption Restoration](https://arxiv.org/abs/2406.06462)

- **Point of Contact:** [Tianyu Zhang](mailto:[email protected])

# Benchmark

EM stands for Exact Match and Jaccard for Jaccard similarity. Within the closed-source group and within the open-source group, the best result is in **bold** and the second best is in *italic*. Closed-source models are evaluated on [500 test samples](https://huggingface.co/collections/vcr-org/vcr-visual-caption-restoration-500-test-subsets-6667c9efd77c55f2363b34a1), while open-source models are evaluated on [5000 test samples](https://huggingface.co/collections/vcr-org/vcr-visual-caption-restoration-6661393b1761e2aff7b967b9).

| Model | Size (unknown for closed source) | En Easy EM | En Easy Jaccard | En Hard EM | En Hard Jaccard | Zh Easy EM | Zh Easy Jaccard | Zh Hard EM | Zh Hard Jaccard |
|---|---|---|---|---|---|---|---|---|---|
| Claude 3 Opus | - | 62.0 | 77.67 | 37.8 | 57.68 | 0.9 | 11.5 | 0.3 | 9.22 |
| Claude 3.5 Sonnet | - | 63.85 | 74.65 | 41.74 | 56.15 | 1.0 | 7.54 | 0.2 | 4.0 |
| GPT-4 Turbo | - | *78.74* | *88.54* | *45.15* | *65.72* | 0.2 | 8.42 | 0.0 | *8.58* |
| GPT-4V | - | 52.04 | 65.36 | 25.83 | 44.63 | - | - | - | - |
| GPT-4o | - | **91.55** | **96.44** | **73.2** | **86.17** | **14.87** | **39.05** | **2.2** | **22.72** |
| GPT-4o-mini | - | 83.60 | 87.77 | 54.04 | 73.09 | 1.10 | 5.03 | 0 | 2.02 |
| Gemini 1.5 Pro | - | 62.73 | 77.71 | 28.07 | 51.9 | 1.1 | 11.1 | 0.7 | 11.82 |
| Qwen-VL-Max | - | 76.8 | 85.71 | 41.65 | 61.18 | *6.34* | *13.45* | *0.89* | 5.4 |
| Reka Core | - | 66.46 | 84.23 | 6.71 | 25.84 | 0.0 | 3.43 | 0.0 | 3.35 |
| Cambrian-1 | 34B | 79.69 | 89.27 | *27.20* | 50.04 | 0.03 | 1.27 | 0.00 | 1.37 |
| Cambrian-1 | 13B | 49.35 | 65.11 | 8.37 | 29.12 | - | - | - | - |
| Cambrian-1 | 8B | 71.13 | 83.68 | 13.78 | 35.78 | - | - | - | - |
| CogVLM | 17B | 73.88 | 86.24 | 34.58 | 57.17 | - | - | - | - |
| CogVLM2 | 19B | *83.25* | *89.75* | **37.98** | **59.99** | 9.15 | 17.12 | 0.08 | 3.67 |
| CogVLM2-Chinese | 19B | 79.90 | 87.42 | 25.13 | 48.76 | **33.24** | **57.57** | **1.34** | **17.35** |
| DeepSeek-VL | 1.3B | 23.04 | 46.84 | 0.16 | 11.89 | 0.0 | 6.56 | 0.0 | 6.46 |
| DeepSeek-VL | 7B | 38.01 | 60.02 | 1.0 | 15.9 | 0.0 | 4.08 | 0.0 | 5.11 |
| DocOwl-1.5-Omni | 8B | 0.84 | 13.34 | 0.04 | 7.76 | 0.0 | 1.14 | 0.0 | 1.37 |
| GLM-4v | 9B | 43.72 | 74.73 | 24.83 | *53.82* | *31.78* | *52.57* | *1.20* | *14.73* |
| Idefics2 | 8B | 15.75 | 31.97 | 0.65 | 9.93 | - | - | - | - |
| InternLM-XComposer2-VL | 7B | 46.64 | 70.99 | 0.7 | 12.51 | 0.27 | 12.32 | 0.07 | 8.97 |
| InternLM-XComposer2-VL-4KHD | 7B | 5.32 | 22.14 | 0.21 | 9.52 | 0.46 | 12.31 | 0.05 | 7.67 |
| InternLM-XComposer2.5-VL | 7B | 41.35 | 63.04 | 0.93 | 13.82 | 0.46 | 12.97 | 0.11 | 10.95 |
| InternVL-V1.5 | 26B | 14.65 | 51.42 | 1.99 | 16.73 | 4.78 | 26.43 | 0.03 | 8.46 |
| InternVL-V2 | 26B | 74.51 | 86.74 | 6.18 | 24.52 | 9.02 | 32.50 | 0.05 | 9.49 |
| InternVL-V2 | 40B | **84.67** | **92.64** | 13.10 | 33.64 | 22.09 | 47.62 | 0.48 | 12.57 |
| InternVL-V2 | 76B | 83.20 | 91.26 | 18.45 | 41.16 | 20.58 | 44.59 | 0.56 | 15.31 |
| InternVL-V2-Pro | - | 77.41 | 86.59 | 12.94 | 35.01 | 19.58 | 43.98 | 0.84 | 13.97 |
| MiniCPM-V2.5 | 8B | 31.81 | 53.24 | 1.41 | 11.94 | 4.1 | 18.03 | 0.09 | 7.39 |
| Monkey | 7B | 50.66 | 67.6 | 1.96 | 14.02 | 0.62 | 8.34 | 0.12 | 6.36 |
| Qwen-VL | 7B | 49.71 | 69.94 | 2.0 | 15.04 | 0.04 | 1.5 | 0.01 | 1.17 |
| Yi-VL | 34B | 0.82 | 5.59 | 0.07 | 4.31 | 0.0 | 4.44 | 0.0 | 4.12 |
| Yi-VL | 6B | 0.75 | 5.54 | 0.06 | 4.46 | 0.00 | 4.37 | 0.00 | 4.0 |
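
The EM and Jaccard columns in the table above can be illustrated with a minimal scorer. This is a sketch under stated assumptions, not the official scorer in `src/evaluation`: it assumes each prediction is compared against one masked n-gram from `crossed_text`, that English is tokenized on whitespace with lowercasing, and that Chinese is compared character by character. The official scripts may normalize text differently, so numbers from this sketch are not directly comparable to the table.

```python
from __future__ import annotations


def tokenize(text: str, language: str) -> list[str]:
    """Assumed normalization: lowercased whitespace words for English, characters for Chinese."""
    if language == "zh":
        return [ch for ch in text if not ch.isspace()]
    return text.lower().split()


def exact_match(prediction: str, reference: str, language: str) -> float:
    """1.0 if the normalized token sequences are identical, else 0.0."""
    return float(tokenize(prediction, language) == tokenize(reference, language))


def jaccard(prediction: str, reference: str, language: str) -> float:
    """Jaccard similarity |A & B| / |A | B| between the two token sets."""
    a, b = set(tokenize(prediction, language)), set(tokenize(reference, language))
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def score_instance(predictions: list[str], crossed_text: list[str], language: str) -> dict[str, float]:
    """Average EM and Jaccard (as percentages) over the masked n-grams of one instance."""
    if not crossed_text:
        raise ValueError("instance has no masked n-grams")
    # Missing predictions are padded with empty strings, so they score zero.
    padded = list(predictions) + [""] * (len(crossed_text) - len(predictions))
    pairs = list(zip(padded, crossed_text))
    em = sum(exact_match(p, r, language) for p, r in pairs)
    jac = sum(jaccard(p, r, language) for p, r in pairs)
    return {"em": 100 * em / len(pairs), "jaccard": 100 * jac / len(pairs)}
```

For example, `score_instance(["重新开放"], ["重新开始"], "zh")` gives an EM of 0 and a Jaccard score of 60 (three shared characters out of five distinct ones), which shows why Jaccard rewards partially correct restorations that EM does not.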

# Model Evaluation

## Method 1: use the evaluation script

### Open-source evaluation

The evaluation script supports the following open-source `model_id` values:

```python
# model_id values supported by the open-source evaluation script
[
    "openbmb/MiniCPM-Llama3-V-2_5",
    "OpenGVLab/InternVL-Chat-V1-5",
    "internlm/internlm-xcomposer2-vl-7b",
    "internlm/internlm-xcomposer2-4khd-7b",
    "internlm/internlm-xcomposer2d5-7b",
    "HuggingFaceM4/idefics2-8b",
    "Qwen/Qwen-VL-Chat",
    "THUDM/cogvlm2-llama3-chinese-chat-19B",
    "THUDM/cogvlm2-llama3-chat-19B",
    "THUDM/cogvlm-chat-hf",
    "echo840/Monkey-Chat",
    "THUDM/glm-4v-9b",
    "nyu-visionx/cambrian-phi3-3b",
    "nyu-visionx/cambrian-8b",
    "nyu-visionx/cambrian-13b",
    "nyu-visionx/cambrian-34b",
    "OpenGVLab/InternVL2-26B",
    "OpenGVLab/InternVL2-40B",
    "OpenGVLab/InternVL2-Llama3-76B",
]
```

Models not on this list are not integrated with Hugging Face; please refer to their GitHub repositories to build an evaluation pipeline. Examples of the inference logic are in `src/evaluation/inference.py`.

```bash
pip install -r requirements.txt
# We use HuggingFaceM4/idefics2-8b and the VCR-wiki-en-easy-test split as an example
cd src/evaluation
# Evaluate the results and save the evaluation metrics to {model_id}_{difficulty}_{language}_evaluation_result.json
python3 evaluation_pipeline.py --dataset_handler "vcr-org/VCR-wiki-en-easy-test" --model_id HuggingFaceM4/idefics2-8b --device "cuda" --output_path . --bootstrap --end_index 5000
```

For large models such as `OpenGVLab/InternVL2-Llama3-76B`, you may need multiple GPUs for evaluation. Set `--device` to `None` to use all available GPUs.

### Closed-source evaluation (using API)

We provide the evaluation script for closed-source models in `src/evaluation/closed_source_eval.py`.

You need an API key and a pre-saved test dataset, and you must specify the path where the data is saved.

```bash
pip install -r requirements.txt
cd src/evaluation

# [Option 1 for downloading images to run inference locally] save the test dataset to the path using the script that pulls from Hugging Face
python3 save_image_from_dataset.py --output_path .

# [Option 2 for downloading images to run inference locally] save the test dataset to the path by cloning the GitHub repo
# Use en-easy-test-500 as an example
git clone https://github.com/tianyu-z/VCR-wiki-en-easy-test-500.git

# To run inference on locally stored images, pass --image_path "path_to_image"; otherwise, the script streams the images from the GitHub repo
python3 closed_source_eval.py --model_id gpt4o --dataset_handler "VCR-wiki-en-easy-test-500" --api_key "Your_API_Key"

# Evaluate the results and save the evaluation metrics to {model_id}_{difficulty}_{language}_evaluation_result.json
python3 evaluation_metrics.py --model_id gpt4o --output_path . --json_filename "gpt4o_en_easy.json" --dataset_handler "vcr-org/VCR-wiki-en-easy-test-500"

# Compute the mean score over all `{model_id}_{difficulty}_{language}_evaluation_result.json` files in `jsons_path` (plus the std and confidence interval if `--bootstrap` was used)
python3 gather_results.py --jsons_path .
```
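
With `--bootstrap`, the scripts report a standard deviation and confidence interval alongside the mean score. A percentile bootstrap over per-instance scores is one common way to obtain these; the sketch below assumes that approach, and its resample count, seed, and 95% level are illustrative defaults rather than the values `gather_results.py` necessarily uses.

```python
import random
import statistics


def bootstrap_ci(scores, n_resamples=1000, alpha=0.05, seed=0):
    """Percentile bootstrap for the mean of per-instance scores.

    Returns (mean, std of the bootstrap means, (lower, upper)) where the bounds
    form an approximate (1 - alpha) confidence interval for the mean.
    """
    if not scores or n_resamples < 2:
        raise ValueError("need at least one score and two resamples")
    rng = random.Random(seed)
    n = len(scores)
    # Each resample draws n scores with replacement and records their mean.
    means = sorted(sum(rng.choices(scores, k=n)) / n for _ in range(n_resamples))
    lower = means[int(alpha / 2 * n_resamples)]
    upper = means[int((1 - alpha / 2) * n_resamples) - 1]
    return statistics.fmean(scores), statistics.stdev(means), (lower, upper)
```

A typical call passes the per-instance EM or Jaccard scores for one model and one setting, for example `mean, std, (lo, hi) = bootstrap_ci(per_instance_em)`, where `per_instance_em` is a hypothetical list you build from the evaluation output.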

## Method 2: use [VLMEvalKit](https://github.com/open-compass/VLMEvalKit) framework

You may need to add your model's inference method if the VLMEvalKit framework does not support it. For details, see the [VLMEvalKit development guide](https://github.com/open-compass/VLMEvalKit/blob/main/docs/en/Development.md).

```bash
git clone https://github.com/open-compass/VLMEvalKit.git
cd VLMEvalKit
# We use HuggingFaceM4/idefics2-8b and VCR_EN_EASY_ALL as an example
python run.py --data VCR_EN_EASY_ALL --model idefics2_8b --verbose
```

You may find the supported model list [here](https://github.com/open-compass/VLMEvalKit/blob/main/vlmeval/config.py).

`VLMEvalKit` supports the following VCR `--data` settings:

* English
    * Easy
        * `VCR_EN_EASY_ALL` (full test set, 5000 instances)
        * `VCR_EN_EASY_500` (first 500 instances in the VCR_EN_EASY_ALL setting)
        * `VCR_EN_EASY_100` (first 100 instances in the VCR_EN_EASY_ALL setting)
    * Hard
        * `VCR_EN_HARD_ALL` (full test set, 5000 instances)
        * `VCR_EN_HARD_500` (first 500 instances in the VCR_EN_HARD_ALL setting)
        * `VCR_EN_HARD_100` (first 100 instances in the VCR_EN_HARD_ALL setting)
* Chinese
    * Easy
        * `VCR_ZH_EASY_ALL` (full test set, 5000 instances)
        * `VCR_ZH_EASY_500` (first 500 instances in the VCR_ZH_EASY_ALL setting)
        * `VCR_ZH_EASY_100` (first 100 instances in the VCR_ZH_EASY_ALL setting)
    * Hard
        * `VCR_ZH_HARD_ALL` (full test set, 5000 instances)
        * `VCR_ZH_HARD_500` (first 500 instances in the VCR_ZH_HARD_ALL setting)
        * `VCR_ZH_HARD_100` (first 100 instances in the VCR_ZH_HARD_ALL setting)

## Method 3: use lmms-eval framework

You may need to add your model's inference method if the lmms-eval framework does not support it. For details, see the [lmms-eval model guide](https://github.com/EvolvingLMMs-Lab/lmms-eval/blob/main/docs/model_guide.md).

```bash
pip install git+https://github.com/EvolvingLMMs-Lab/lmms-eval.git
# We use HuggingFaceM4/idefics2-8b and vcr_wiki_en_easy as an example
python3 -m accelerate.commands.launch --num_processes=8 -m lmms_eval --model idefics2 --model_args pretrained="HuggingFaceM4/idefics2-8b" --tasks vcr_wiki_en_easy --batch_size 1 --log_samples --log_samples_suffix HuggingFaceM4_idefics2-8b_vcr_wiki_en_easy --output_path ./logs/
```

You may find the supported model list [here](https://github.com/EvolvingLMMs-Lab/lmms-eval/tree/main/lmms_eval/models).

`lmms-eval` supports the following VCR `--tasks` settings:

* English
    * Easy
        * `vcr_wiki_en_easy` (full test set, 5000 instances)
        * `vcr_wiki_en_easy_500` (first 500 instances in the vcr_wiki_en_easy setting)
        * `vcr_wiki_en_easy_100` (first 100 instances in the vcr_wiki_en_easy setting)
    * Hard
        * `vcr_wiki_en_hard` (full test set, 5000 instances)
        * `vcr_wiki_en_hard_500` (first 500 instances in the vcr_wiki_en_hard setting)
        * `vcr_wiki_en_hard_100` (first 100 instances in the vcr_wiki_en_hard setting)
* Chinese
    * Easy
        * `vcr_wiki_zh_easy` (full test set, 5000 instances)
        * `vcr_wiki_zh_easy_500` (first 500 instances in the vcr_wiki_zh_easy setting)
        * `vcr_wiki_zh_easy_100` (first 100 instances in the vcr_wiki_zh_easy setting)
    * Hard
        * `vcr_wiki_zh_hard` (full test set, 5000 instances)
        * `vcr_wiki_zh_hard_500` (first 500 instances in the vcr_wiki_zh_hard setting)
        * `vcr_wiki_zh_hard_100` (first 100 instances in the vcr_wiki_zh_hard setting)
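
The VLMEvalKit `--data` names and the lmms-eval `--tasks` names both encode a language, a difficulty, and an optional subset size. The hypothetical helper `vcr_setting_names` below builds both names from those three parameters, which can be handy when scripting a sweep over all twelve settings. It only encodes the naming pattern listed in this card and does not check which tasks a given framework version actually registers.

```python
from itertools import product

LANGUAGES = ("en", "zh")
DIFFICULTIES = ("easy", "hard")
SUBSETS = (None, 500, 100)  # None means the full 5000-instance test set


def vcr_setting_names(language, difficulty, subset=None):
    """Return (VLMEvalKit --data name, lmms-eval --tasks name) for one VCR setting."""
    if language not in LANGUAGES or difficulty not in DIFFICULTIES or subset not in SUBSETS:
        raise ValueError(f"unsupported setting: {language}, {difficulty}, {subset}")
    size = "ALL" if subset is None else str(subset)
    vlmevalkit = f"VCR_{language.upper()}_{difficulty.upper()}_{size}"
    lmms_eval = f"vcr_wiki_{language}_{difficulty}" + ("" if subset is None else f"_{subset}")
    return vlmevalkit, lmms_eval


if __name__ == "__main__":
    # Print the names for all twelve settings side by side.
    for lang, diff, sub in product(LANGUAGES, DIFFICULTIES, SUBSETS):
        print(*vcr_setting_names(lang, diff, sub))
```

For this Chinese dataset, `vcr_setting_names("zh", "hard", 500)` returns `("VCR_ZH_HARD_500", "vcr_wiki_zh_hard_500")`.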

## Dataset Statistics

We show the statistics of the original VCR-Wiki dataset below:

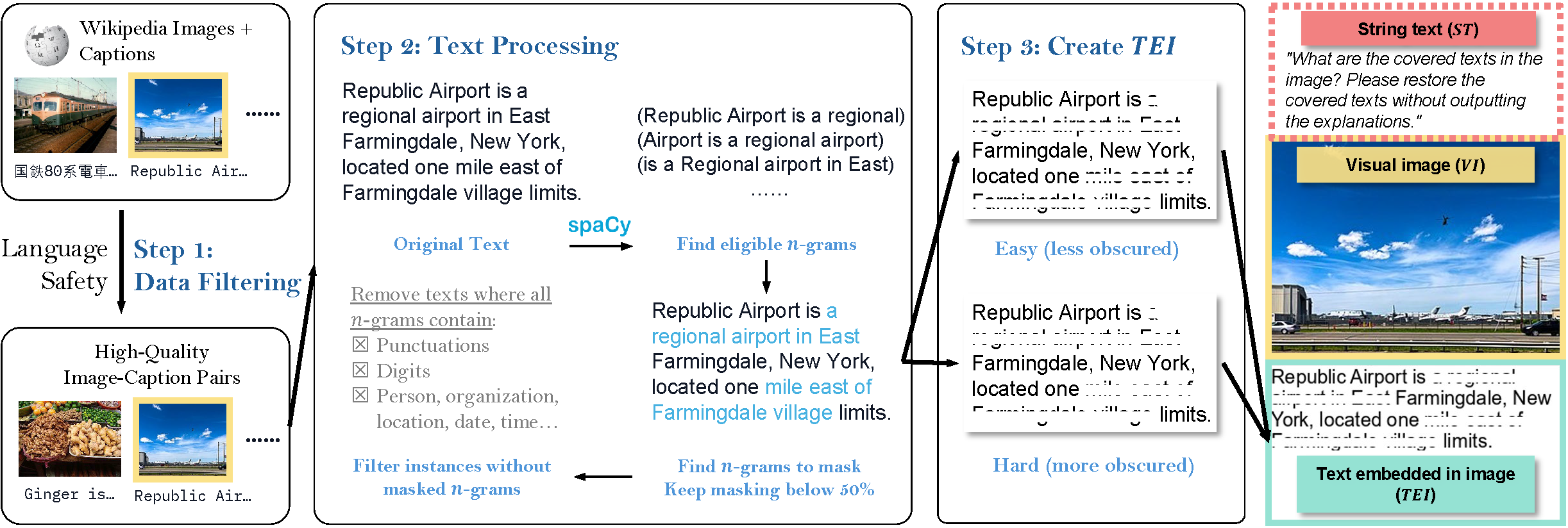

## Dataset Construction

* **Data Collection and Initial Filtering**: The original data is collected from [wikimedia/wit_base](https://huggingface.co/datasets/wikimedia/wit_base). Before constructing the dataset, we first filter out the instances with sensitive content, including NSFW and crime-related terms, to mitigate AI risk and biases.

* **N-gram selection**: We first truncate the description of each entry to fewer than 5 lines under our predefined font and size settings. We then tokenize each description with spaCy and randomly mask out 5-grams, where the masked 5-grams contain no numbers, person names, religious or political groups, facilities, organizations, locations, dates, or times as labeled by spaCy, and the total number of masked tokens does not exceed 50% of the tokens in the caption.

* **Create text embedded in images**: We create text embedded in images (TEI) for the description, resize its width to 300 pixels, and mask out the selected 5-grams with white rectangles. The size of the rectangle reflects the difficulty of the task: (1) in easy versions, the task is easy for native speakers but open-source OCR models almost always fail, and (2) in hard versions, the revealed part consists of only one to two pixels for the majority of letters or characters, yet the restoration task remains feasible for native speakers of the language.

* **Concatenate Images**: We concatenate TEI with the main visual image (VI) to get the stacked image.

* **Second-round Filtering**: We filter out all entries that have no masked n-grams or whose height exceeds 900 pixels.
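
The n-gram selection and second-round filtering steps can be sketched without a spaCy dependency by assuming each token already carries an entity label (an empty string when it has none); with spaCy, these could come from `[t.text for t in doc]` and `[t.ent_type_ for t in doc]`. This is a simplified illustration, not the authors' pipeline. It assumes the excluded categories map to spaCy's OntoNotes labels `PERSON`, `NORP`, `FAC`, `ORG`, `GPE`, `LOC`, `DATE`, `TIME`, and `CARDINAL`, treats any token containing a digit as a number, and picks random non-overlapping 5-grams until the 50% budget would be exceeded.

```python
import random

# Assumption: the excluded categories correspond to these spaCy (OntoNotes) NER labels.
EXCLUDED_LABELS = {"PERSON", "NORP", "FAC", "ORG", "GPE", "LOC", "DATE", "TIME", "CARDINAL"}


def is_maskable(token: str, label: str) -> bool:
    """A token may be masked if it has no excluded entity label and contains no digit."""
    return label not in EXCLUDED_LABELS and not any(ch.isdigit() for ch in token)


def select_ngrams(tokens, labels, n=5, max_ratio=0.5, seed=0):
    """Randomly pick non-overlapping n-grams made only of maskable tokens.

    tokens: token strings; labels: parallel entity labels ("" when none).
    Returns the sorted start indices of the selected n-grams.
    """
    rng = random.Random(seed)
    budget = int(max_ratio * len(tokens))  # masked tokens must not exceed this count
    candidates = [
        i for i in range(len(tokens) - n + 1)
        if all(is_maskable(tokens[j], labels[j]) for j in range(i, i + n))
    ]
    rng.shuffle(candidates)
    masked, starts = set(), []
    for i in candidates:
        span = set(range(i, i + n))
        if span & masked or len(masked) + n > budget:
            continue  # overlaps an earlier pick or would exceed the budget
        masked |= span
        starts.append(i)
    return sorted(starts)


def keep_entry(starts, stacked_height, max_height=900):
    """Second-round filter: keep entries with at least one masked n-gram and height <= 900 px."""
    return bool(starts) and stacked_height <= max_height
```

With a 16-token caption and no entity labels, the budget is 8 tokens, so at most one 5-gram is masked; an entry whose caption yields no candidate 5-gram is then dropped by `keep_entry`.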

## Data Fields

* `question_id`: `int64`, the instance id in the current split.

* `image`: `PIL.Image.Image`, the original visual image (VI).

* `stacked_image`: `PIL.Image.Image`, the stacked VI+TEI image containing both the original visual image and the masked text embedded in image.

* `only_it_image`: `PIL.Image.Image`, the masked TEI image.

* `caption`: `str`, the unmasked original text presented in the TEI image.

* `crossed_text`: `List[str]`, the masked n-grams in the current instance.
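
When inspecting an instance, it can help to rebuild the masked caption from the `caption` and `crossed_text` fields. The sketch below works on a plain dict holding only those two text fields and assumes each entry of `crossed_text` appears verbatim in `caption`; the example row is made up for illustration (it reads "The bridge closes in winter and reopens in spring.") and is not taken from the dataset.

```python
from __future__ import annotations


def mask_caption(caption: str, crossed_text: list[str], placeholder: str = "[MASK]") -> str:
    """Replace the first remaining occurrence of each masked n-gram with a placeholder."""
    masked = caption
    for ngram in crossed_text:
        if ngram not in masked:
            raise ValueError(f"n-gram not found in caption: {ngram!r}")
        masked = masked.replace(ngram, placeholder, 1)
    return masked


# Made-up row with the dataset's text fields (image fields omitted).
row = {
    "caption": "这座桥在冬季关闭，并在春天重新开放。",
    "crossed_text": ["冬季关闭", "重新开放"],
}
print(mask_caption(row["caption"], row["crossed_text"]))  # 这座桥在[MASK]，并在春天[MASK]。
```

The masked string is roughly what a model must restore from the `stacked_image`, with `crossed_text` serving as the ground truth for scoring.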

## Disclaimer for the VCR-Wiki dataset and Its Subsets

The VCR-Wiki dataset and/or its subsets are provided under the Creative Commons Attribution-ShareAlike 4.0 International (CC BY-SA 4.0) license. This dataset is intended solely for research and educational purposes in the field of visual caption restoration and related vision-language tasks.

Important Considerations:

1. **Accuracy and Reliability**: While the VCR-Wiki dataset has undergone filtering to exclude sensitive content, it may still contain inaccuracies or unintended biases. Users are encouraged to critically evaluate the dataset's content and applicability to their specific research objectives.

2. **Ethical Use**: Users must ensure that their use of the VCR-Wiki dataset aligns with ethical guidelines and standards, particularly by avoiding harm, not perpetuating biases, and not misusing the data in ways that could negatively impact individuals or groups.

3. **Modifications and Derivatives**: Any modifications or derivative works based on the VCR-Wiki dataset must be shared under the same license (CC BY-SA 4.0).

4. **Commercial Use**: Commercial use of the VCR-Wiki dataset is permitted under the CC BY-SA 4.0 license, provided that proper attribution is given and any derivative works are shared under the same license.

By using the VCR-Wiki dataset and/or its subsets, you agree to the terms and conditions outlined in this disclaimer and the associated license. The creators of the dataset are not liable for any direct or indirect damages resulting from its use.

## Citation

If you find VCR useful for your research and applications, please cite using this BibTeX:

```bibtex
@article{zhang2024vcr,
  title = {VCR: Visual Caption Restoration},
  author = {Tianyu Zhang and Suyuchen Wang and Lu Li and Ge Zhang and Perouz Taslakian and Sai Rajeswar and Jie Fu and Bang Liu and Yoshua Bengio},
  year = {2024},
  journal = {arXiv preprint arXiv: 2406.06462}
}
```