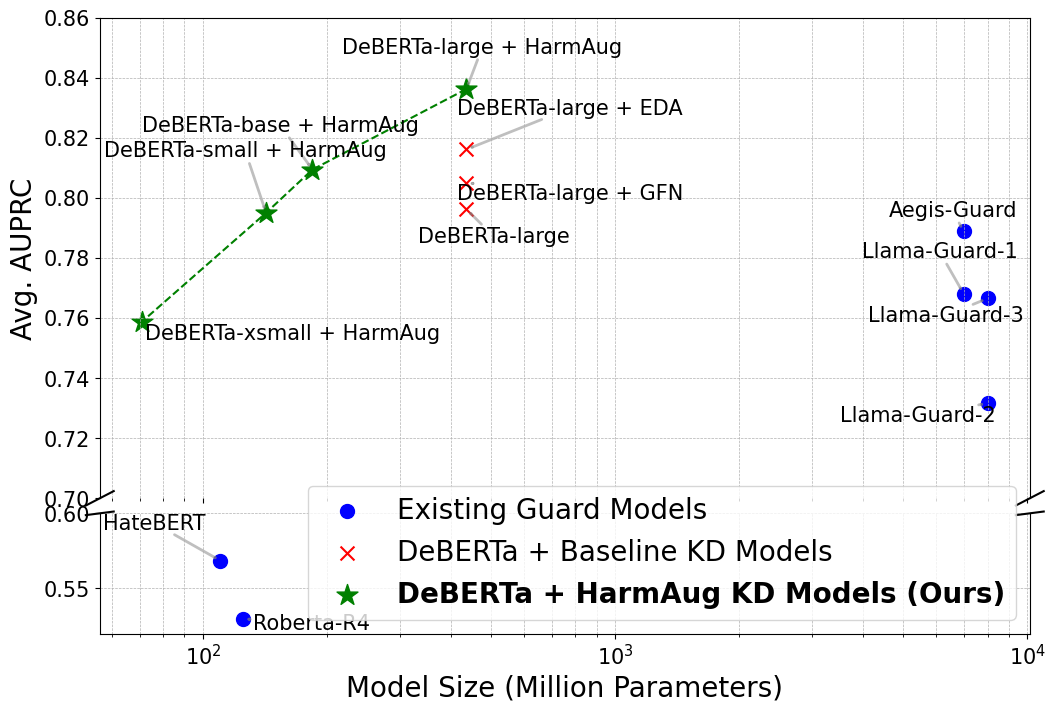

# HarmAug: Effective Data Augmentation for Knowledge Distillation of Safety Guard Models

This repository contains the code for reproducing HarmAug, introduced in:

**HarmAug: Effective Data Augmentation for Knowledge Distillation of Safety Guard Models**

Seanie Lee*, Haebin Seong*, Dong Bok Lee, Minki Kang, Xiaoyin Chen, Dominik Wagner, Yoshua Bengio, Juho Lee, Sung Ju Hwang (*: Equal contribution)

[[arXiv link]](https://arxiv.org/abs/2410.01524)

[[Model link]](https://huggingface.co/AnonHB/HarmAug_Guard_Model_deberta_v3_large_finetuned)

[[Dataset link]](https://huggingface.co/datasets/AnonHB/HarmAug_generated_dataset)

## Reproduction Steps

First, we recommend creating a conda environment with Python 3.10.

```
conda create -n harmaug python=3.10
conda activate harmaug
```

After that, install the requirements.

```
pip install -r requirements.txt
```

Then, download the necessary files from [Google Drive](https://drive.google.com/drive/folders/1oLUMPauXYtEBP7rvbULXL4hHp9Ck_yqg?usp=drive_link) and place them in the appropriate folders.

```
mv [email protected] ./data
```

Finally, you can start the knowledge distillation process.

```
bash script/kd.sh
```
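For readers unfamiliar with knowledge distillation, the sketch below illustrates one common form of the objective for a binary safety classifier: a weighted sum of a cross-entropy term on the hard label and a KL term that pulls the student toward the teacher's predicted probability. It is a minimal illustration under stated assumptions, not the code in `script/kd.sh`. The function names, the mixing weight `lam`, and the exact form of the loss are hypothetical and are not taken from this repository.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def _clamp(p: float, eps: float = 1e-12) -> float:
    """Keep a probability strictly inside (0, 1) so logs stay finite."""
    return min(max(p, eps), 1.0 - eps)


def bce(target: float, pred: float) -> float:
    """Binary cross-entropy between a target in [0, 1] and a predicted probability."""
    pred = _clamp(pred)
    return -(target * math.log(pred) + (1.0 - target) * math.log(1.0 - pred))


def binary_kl(p: float, q: float) -> float:
    """KL(Bernoulli(p) || Bernoulli(q))."""
    p, q = _clamp(p), _clamp(q)
    return p * math.log(p / q) + (1.0 - p) * math.log((1.0 - p) / (1.0 - q))


def kd_loss(student_logit: float, teacher_prob: float, hard_label: int,
            lam: float = 0.5) -> float:
    """Hypothetical distillation loss: (1 - lam) * BCE(label) + lam * KL(teacher || student).

    `lam` is an assumed mixing weight, not a value from this repository.
    """
    student_prob = sigmoid(student_logit)
    return (1.0 - lam) * bce(float(hard_label), student_prob) \
        + lam * binary_kl(teacher_prob, student_prob)


if __name__ == "__main__":
    # A student that agrees with the teacher incurs a smaller loss than one that disagrees.
    print(kd_loss(2.0, teacher_prob=0.9, hard_label=1))
    print(kd_loss(-2.0, teacher_prob=0.9, hard_label=1))
```

In this sketch, setting `lam` to 0 trains the student only on the hard labels, and setting it to 1 trains it only to match the teacher's probabilities.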

## Reference

To cite our paper, please use the following BibTeX entry:

```bibtex
@article{lee2024harmaug,
  title={{HarmAug}: Effective Data Augmentation for Knowledge Distillation of Safety Guard Models},
  author={Lee, Seanie and Seong, Haebin and Lee, Dong Bok and Kang, Minki and Chen, Xiaoyin and Wagner, Dominik and Bengio, Yoshua and Lee, Juho and Hwang, Sung Ju},
  journal={arXiv preprint arXiv:2410.01524},
  year={2024}
}
```