AI & ML interests

LoRA module composition, parameter-efficient tuning

Organization Card

The official collection for our paper "LoraHub: Efficient Cross-Task Generalization via Dynamic LoRA Composition", by Chengsong Huang*, Qian Liu*, Bill Yuchen Lin*, Tianyu Pang, Chao Du and Min Lin.

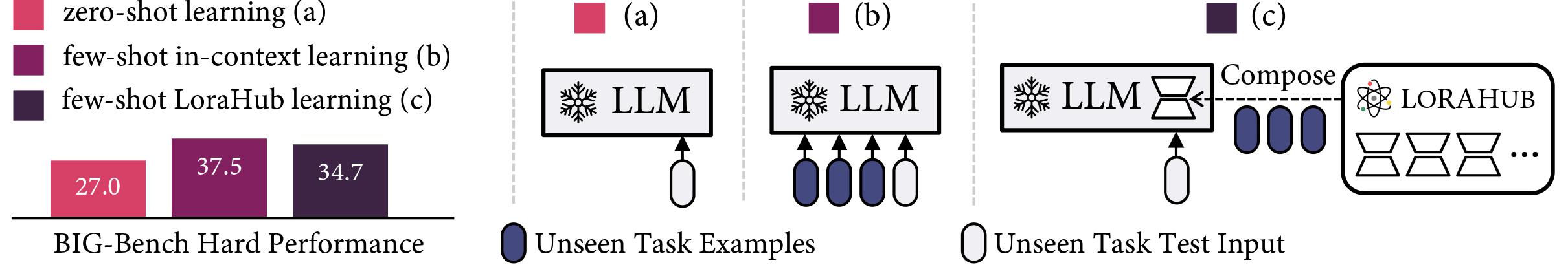

LoraHub is a framework for composing multiple LoRA modules that were trained on different tasks. The goal is to perform well on unseen tasks using only a few examples, without additional model parameters or gradient-based training. We also aim to build a marketplace where users can share their trained LoRA modules, making it easier to apply them to new tasks.
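To make the idea of composition concrete, here is a minimal sketch, assuming each LoRA module is a pair of low-rank matrices (A, B) and that composition is a weighted sum of the A matrices and of the B matrices, followed by their product. The function names (`weighted_sum`, `matmul`, `compose_lora`) and the toy matrices are hypothetical and are not part of the `lorahub` package. The weights are fixed by hand here, whereas in practice they would be searched for using the few examples from the unseen task.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def weighted_sum(mats: Sequence[Matrix], weights: Sequence[float]) -> Matrix:
    """Element-wise weighted sum of equally shaped matrices."""
    rows, cols = len(mats[0]), len(mats[0][0])
    return [
        [sum(w * m[i][j] for m, w in zip(mats, weights)) for j in range(cols)]
        for i in range(rows)
    ]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Plain matrix product x @ y."""
    return [
        [sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
        for i in range(len(x))
    ]


def compose_lora(modules: Sequence[Tuple[Matrix, Matrix]],
                 weights: Sequence[float]) -> Matrix:
    """Compose LoRA modules into one weight update (illustrative only).

    Each module is (A, B) with A of shape (r, d_in) and B of shape (d_out, r).
    Assumption: the composed module uses A_hat = sum_i w_i * A_i and
    B_hat = sum_i w_i * B_i, and the update is B_hat @ A_hat.
    """
    if len(modules) != len(weights):
        raise ValueError("need exactly one weight per module")
    a_hat = weighted_sum([a for a, _ in modules], weights)
    b_hat = weighted_sum([b for _, b in modules], weights)
    return matmul(b_hat, a_hat)


# Two toy rank-1 modules for a 2x2 layer (made-up numbers).
module_1 = ([[1.0, 0.0]], [[1.0], [0.0]])
module_2 = ([[0.0, 1.0]], [[0.0], [1.0]])

delta_w = compose_lora([module_1, module_2], weights=[0.5, 0.5])
```

Because only one scalar weight per module is tuned in this sketch, the composed update has the same shape as a single LoRA module, so no parameters are added to the base model.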

- Code: https://github.com/sail-sg/lorahub

- Install: `pip install lorahub`

Models (337)

- lorahub/flan_t5_xl-ropes_prompt_bottom_no_hint
- lorahub/flan_t5_xl-super_glue_cb
- lorahub/flan_t5_xl-amazon_polarity_user_satisfied
- lorahub/flan_t5_xl-definite_pronoun_resolution
- lorahub/flan_t5_xl-wiki_bio_key_content
- lorahub/flan_t5_xl-trivia_qa_rc
- lorahub/flan_t5_xl-super_glue_multirc
- lorahub/flan_t5_xl-dream_read_the_following_conversation_and_answer_the_question
- lorahub/flan_t5_xl-yelp_polarity_reviews
- lorahub/flan_t5_xl-adversarial_qa_dbert_tell_what_it_is