add the ONNX-TensorRT way of model conversion

- README.md +39 -0

- configs/inference.json +18 -9

- configs/inference_trt.json +11 -0

- configs/metadata.json +3 -2

- docs/README.md +39 -0

- scripts/detection_inferer.py +10 -3

README.md

CHANGED

|

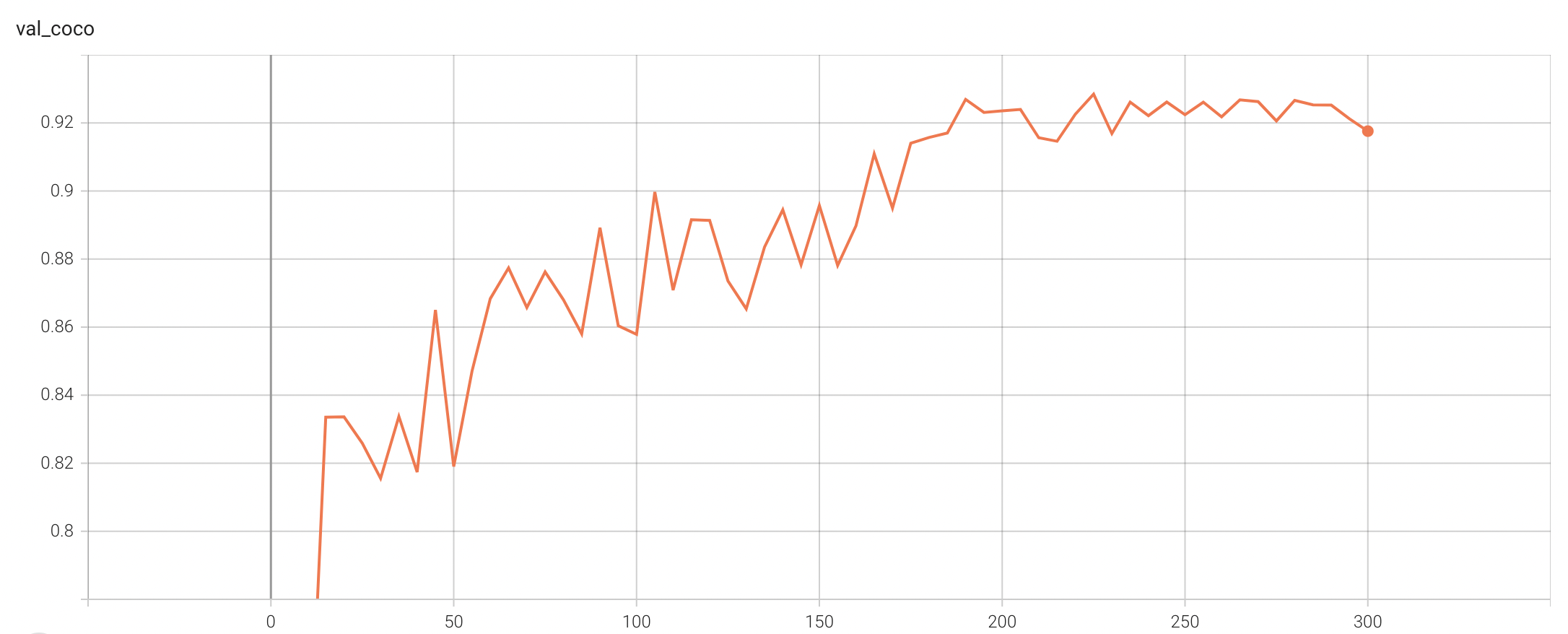

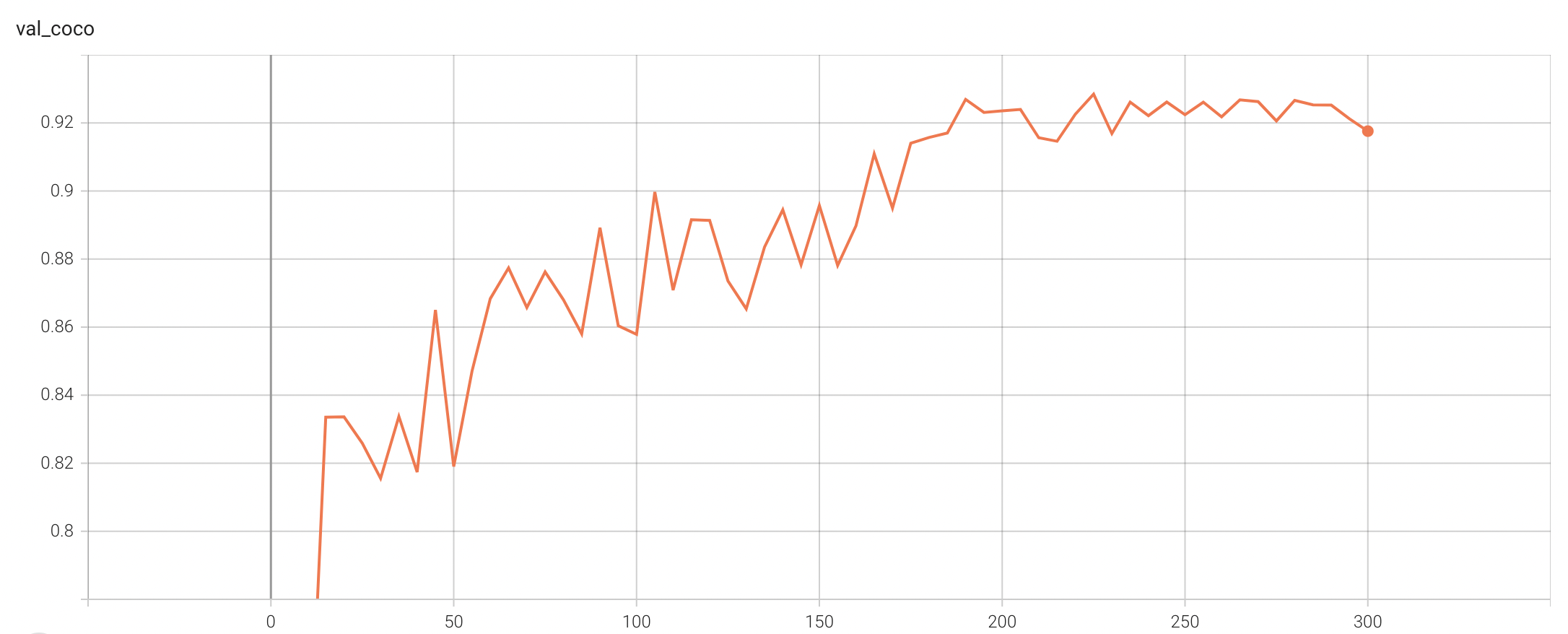

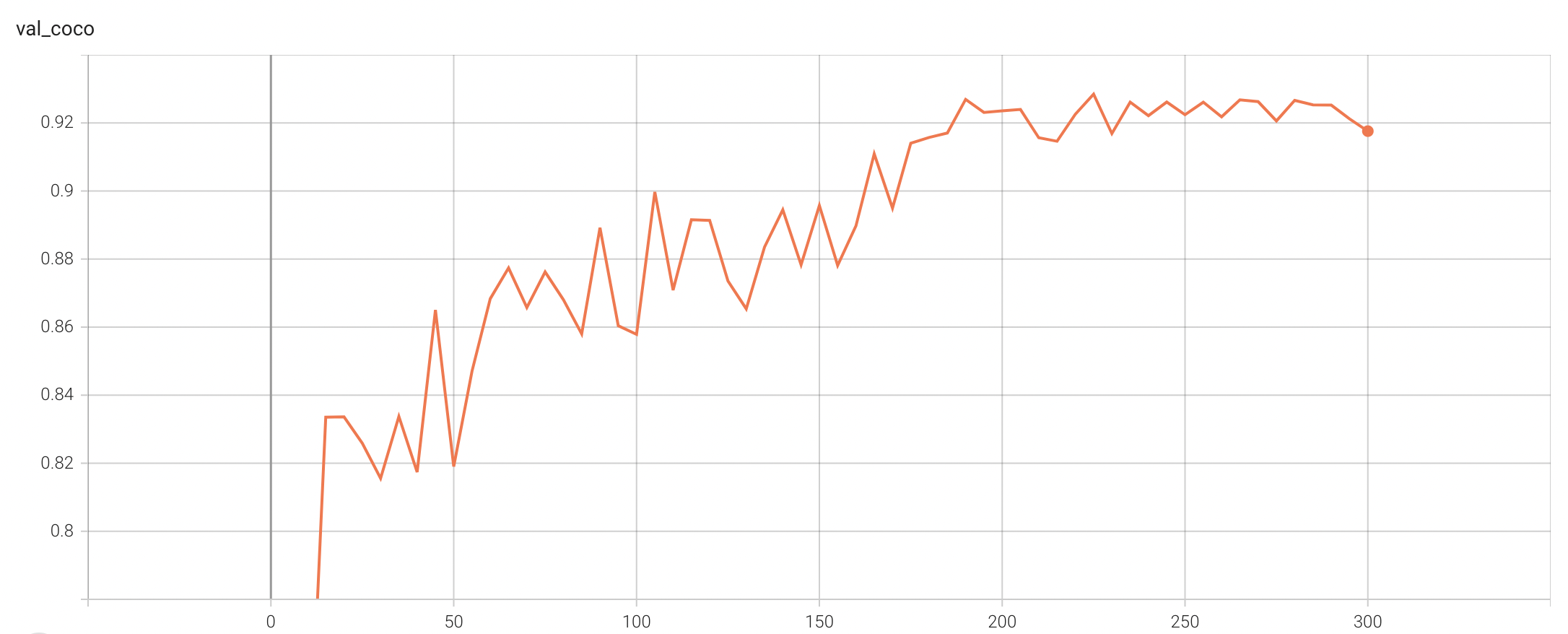

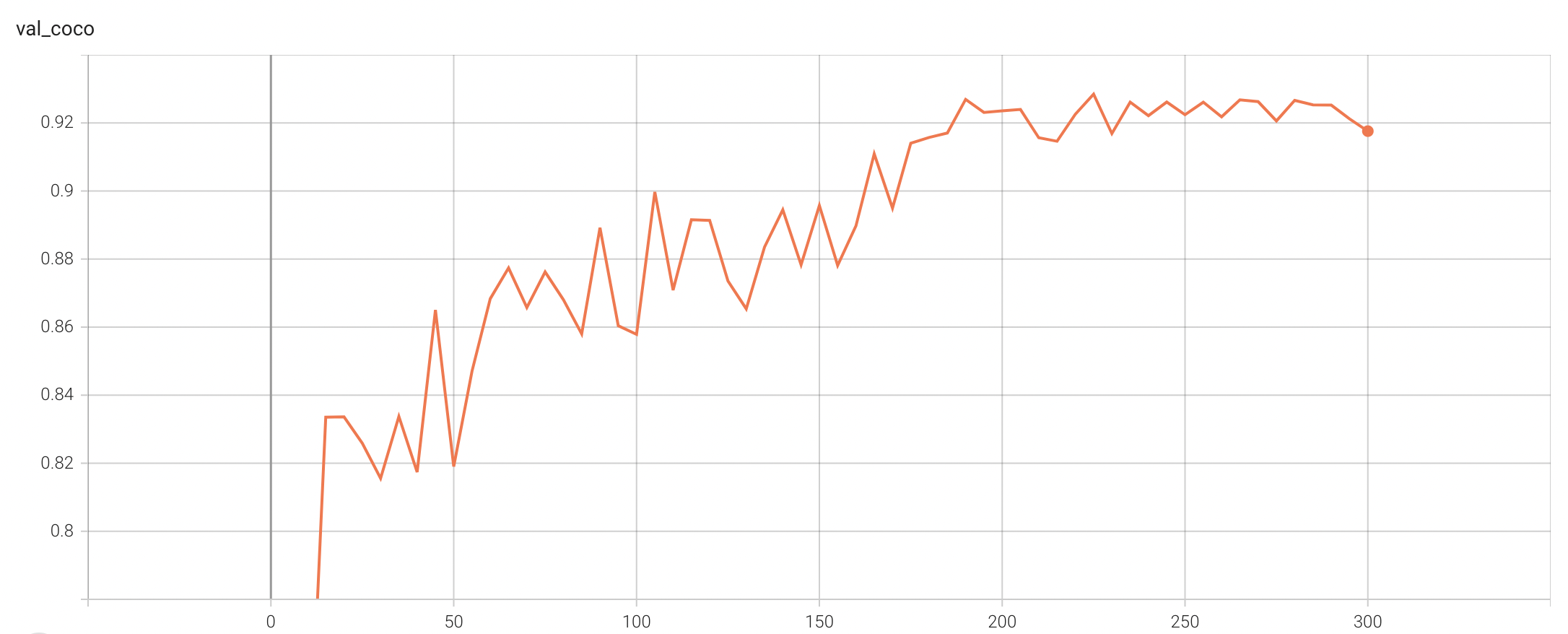

@@ -70,6 +70,33 @@ The validation accuracy in this curve is the mean of mAP, mAR, AP(IoU=0.1), and

|

|

| 70 |

|

| 71 |

|

| 72 |

|


| 73 |

## MONAI Bundle Commands

|

| 74 |

In addition to the Pythonic APIs, a few command line interfaces (CLI) are provided to interact with the bundle. The CLI supports flexible use cases, such as overriding configs at runtime and predefining arguments in a file.

|

| 75 |

|

|

@@ -98,6 +125,18 @@ Note that in inference.json, the transform "LoadImaged" in "preprocessing" and "

|

|

| 98 |

This depends on the input images. LUNA16 needs `"affine_lps_to_ras": true`.

|

| 99 |

It is possible that your inference dataset should set `"affine_lps_to_ras": false`.

|

| 100 |

|


| 101 |

# References

|

| 102 |

[1] Lin, Tsung-Yi, et al. "Focal loss for dense object detection." ICCV 2017. https://arxiv.org/abs/1708.02002

|

| 103 |

|

|

|

|

| 70 |

|

| 71 |

|

| 72 |

|

| 73 |

+

#### TensorRT speedup

|

| 74 |

+

The `lung_nodule_ct_detection` bundle supports TensorRT acceleration through the ONNX-TensorRT method. The table below shows the latencies and speedup ratios measured on an A100 80G GPU. When running inference with the TensorRT model, the `force_sliding_window` parameter in `inference.json` must be set to `true` (the `configs/inference_trt.json` override sets it). This makes the bundle always use the `SlidingWindowInferer`, which keeps the input spatial size of the network fixed. Otherwise, an input whose spatial size is smaller than `infer_patch_size` would be forwarded directly and change the network's input spatial size.

|

| 75 |

+

|

| 76 |

+

| method | torch_fp32(ms) | torch_amp(ms) | trt_fp32(ms) | trt_fp16(ms) | speedup amp | speedup fp32 | speedup fp16 | amp vs fp16 |

|

| 77 |

+

| :---: | :---: | :---: | :---: | :---: | :---: | :---: | :---: | :---: |

|

| 78 |

+

| model computation | 7449.84 | 996.08 | 976.67 | 626.90 | 7.63 | 7.63 | 11.88 | 1.56 |

|

| 79 |

+

| end2end | 36458.26 | 7259.35 | 6420.60 | 4698.34 | 5.02 | 5.68 | 7.76 | 1.55 |

|

| 80 |

+

|

| 81 |

+

Where:

|

| 82 |

+

- `model computation` measures the model's inference alone on a random input, without preprocessing and postprocessing.

|

| 83 |

+

- `end2end` measures running the bundle end-to-end with the TensorRT-based model.

|

| 84 |

+

- `torch_fp32` and `torch_amp` are the PyTorch models without and with `amp` mode, respectively.

|

| 85 |

+

- `trt_fp32` and `trt_fp16` are the TensorRT-based models converted at the corresponding precision.

|

| 86 |

+

- `speedup amp`, `speedup fp32` and `speedup fp16` are the speedup ratios of the corresponding models versus the PyTorch float32 model.

|

| 87 |

+

- `amp vs fp16` is the speedup ratio of the TensorRT float16 model versus the PyTorch amp model.
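
The column definitions above can be checked with a few lines of Python. This is a minimal sketch that assumes each ratio is the baseline latency divided by the candidate latency, rounded to two decimals. The helper name `speedup` is hypothetical, and the latencies are copied from the `end2end` row of the table.

```python
# Latencies in milliseconds, copied from the `end2end` row of the table.
latency_ms = {
    "torch_fp32": 36458.26,
    "torch_amp": 7259.35,
    "trt_fp32": 6420.60,
    "trt_fp16": 4698.34,
}


def speedup(baseline_ms: float, candidate_ms: float) -> float:
    """Return how many times faster the candidate is than the baseline."""
    return round(baseline_ms / candidate_ms, 2)


baseline = latency_ms["torch_fp32"]
speedups = {
    # The first three ratios use the PyTorch float32 model as the baseline.
    "speedup amp": speedup(baseline, latency_ms["torch_amp"]),
    "speedup fp32": speedup(baseline, latency_ms["trt_fp32"]),
    "speedup fp16": speedup(baseline, latency_ms["trt_fp16"]),
    # The last ratio compares PyTorch amp against TensorRT float16.
    "amp vs fp16": speedup(latency_ms["torch_amp"], latency_ms["trt_fp16"]),
}
print(speedups)
```

Under this assumption the computed values reproduce the `end2end` row: 5.02, 5.68, 7.76 and 1.55.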

|

| 88 |

+

|

| 89 |

+

Currently, ONNX-TensorRT is the only available method to accelerate this model. The Torch-TensorRT method is under development and will be available in the near future.

|

| 90 |

+

|

| 91 |

+

These results were benchmarked under:

|

| 92 |

+

- TensorRT: 8.5.3+cuda11.8

|

| 93 |

+

- Torch-TensorRT Version: 1.4.0

|

| 94 |

+

- CPU Architecture: x86-64

|

| 95 |

+

- OS: Ubuntu 20.04

|

| 96 |

+

- Python version: 3.8.10

|

| 97 |

+

- CUDA version: 12.0

|

| 98 |

+

- GPU models and configuration: A100 80G

|

| 99 |

+

|

| 100 |

## MONAI Bundle Commands

|

| 101 |

In addition to the Pythonic APIs, a few command line interfaces (CLI) are provided to interact with the bundle. The CLI supports flexible use cases, such as overriding configs at runtime and predefining arguments in a file.

|

| 102 |

|

|

|

|

| 125 |

This depends on the input images. LUNA16 needs `"affine_lps_to_ras": true`.

|

| 126 |

It is possible that your inference dataset should set `"affine_lps_to_ras": false`.

|

| 127 |

|

| 128 |

+

#### Export checkpoint to TensorRT based models with fp32 or fp16 precision

|

| 129 |

+

|

| 130 |

+

```bash

|

| 131 |

+

python -m monai.bundle trt_export --net_id network_def --filepath models/model_trt.ts --ckpt_file models/model.pt --meta_file configs/metadata.json --config_file configs/inference.json --precision <fp32/fp16> --input_shape "[1, 1, 512, 512, 192]" --use_onnx "True" --use_trace "True" --onnx_output_names "['output_0', 'output_1', 'output_2', 'output_3', 'output_4', 'output_5']" --network_def#use_list_output "True"

|

| 132 |

+

```
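
The `<fp32/fp16>` placeholder in the command above has to be replaced with one concrete precision. The sketch below builds the same argument list for a chosen precision. The helper name `build_trt_export_args` is hypothetical, and every flag and value is copied from the command above rather than from the MONAI documentation.

```python
import shlex
from typing import List


def build_trt_export_args(precision: str) -> List[str]:
    """Return the argv list for the export command at the given precision."""
    if precision not in ("fp32", "fp16"):
        raise ValueError(f"precision must be 'fp32' or 'fp16', got {precision!r}")
    input_shape = [1, 1, 512, 512, 192]
    output_names = [f"output_{i}" for i in range(6)]
    return [
        "python", "-m", "monai.bundle", "trt_export",
        "--net_id", "network_def",
        "--filepath", "models/model_trt.ts",
        "--ckpt_file", "models/model.pt",
        "--meta_file", "configs/metadata.json",
        "--config_file", "configs/inference.json",
        "--precision", precision,
        "--input_shape", str(input_shape),
        "--use_onnx", "True",
        "--use_trace", "True",
        "--onnx_output_names", str(output_names),
        "--network_def#use_list_output", "True",
    ]


# Print a copy-pasteable shell command; shlex.join adds the required quoting.
print(shlex.join(build_trt_export_args("fp16")))
```

Passing the returned list to `subprocess.run` avoids shell quoting entirely. Note that both precisions write to the same `models/model_trt.ts` path, so a second export overwrites the first.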

|

| 133 |

+

|

| 134 |

+

#### Execute inference with the TensorRT model

|

| 135 |

+

|

| 136 |

+

```bash

|

| 137 |

+

python -m monai.bundle run --config_file "['configs/inference.json', 'configs/inference_trt.json']"

|

| 138 |

+

```
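
The command above passes two config files, and the second one is expected to override entries of the first, with `#` addressing nested keys and list indices. The following sketch illustrates that override behaviour with plain dictionaries. It is a simplified stand-in, not MONAI's `ConfigParser`; the helper `apply_overrides` and the trimmed-down `base` config (including the `SomeHandler` entry) are hypothetical.

```python
import copy
from typing import Any, Dict


def apply_overrides(base: Dict[str, Any], overrides: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of `base` with `overrides` applied; `#` separates nested ids."""
    merged = copy.deepcopy(base)
    for key, value in overrides.items():
        *parents, leaf = key.split("#")
        node: Any = merged
        for part in parents:
            # Lists are addressed by integer index, dicts by key.
            node = node[int(part)] if isinstance(node, list) else node[part]
        if isinstance(node, list):
            node[int(leaf)] = value
        else:
            node[leaf] = value
    return merged


# Hypothetical, trimmed-down stand-in for configs/inference.json.
base = {
    "force_sliding_window": False,
    "handlers": [{"_target_": "SomeHandler"}],
    "evaluator": {"amp": True},
}
# Three of the overrides from configs/inference_trt.json.
trt_overrides = {
    "force_sliding_window": True,
    "handlers#0#_disabled_": True,
    "evaluator#amp": False,
}

merged = apply_overrides(base, trt_overrides)
print(merged)
```

Listing `configs/inference_trt.json` last matters in this picture: the later file wins, so sliding-window inference is forced and `amp` is turned off for the evaluator.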

|

| 139 |

+

|

| 140 |

# References

|

| 141 |

[1] Lin, Tsung-Yi, et al. "Focal loss for dense object detection." ICCV 2017. https://arxiv.org/abs/1708.02002

|

| 142 |

|

configs/inference.json

CHANGED

|

@@ -13,6 +13,14 @@

|

|

| 13 |

"test_datalist": "$monai.data.load_decathlon_datalist(@data_list_file_path, is_segmentation=True, data_list_key='validation', base_dir=@dataset_dir)",

|

| 14 |

"device": "$torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')",

|

| 15 |

"amp": true,

|


| 16 |

"infer_patch_size": [

|

| 17 |

512,

|

| 18 |

512,

|

|

@@ -47,22 +55,22 @@

|

|

| 47 |

"feature_extractor": "$monai.apps.detection.networks.retinanet_network.resnet_fpn_feature_extractor(@backbone,3,False,[1,2],None)",

|

| 48 |

"network_def": {

|

| 49 |

"_target_": "RetinaNet",

|

| 50 |

-

"spatial_dims":

|

| 51 |

-

"num_classes":

|

| 52 |

"num_anchors": 3,

|

| 53 |

"feature_extractor": "@feature_extractor",

|

| 54 |

-

"size_divisible":

|

| 55 |

-

|

| 56 |

-

16,

|

| 57 |

-

8

|

| 58 |

-

]

|

| 59 |

},

|

| 60 |

"network": "$@network_def.to(@device)",

|

| 61 |

"detector": {

|

| 62 |

"_target_": "RetinaNetDetector",

|

| 63 |

"network": "@network",

|

| 64 |

"anchor_generator": "@anchor_generator",

|

| 65 |

-

"debug": false

|


| 66 |

},

|

| 67 |

"detector_ops": [

|

| 68 |

"$@detector.set_target_keys(box_key='box', label_key='label')",

|

|

@@ -136,7 +144,8 @@

|

|

| 136 |

},

|

| 137 |

"inferer": {

|

| 138 |

"_target_": "scripts.detection_inferer.RetinaNetInferer",

|

| 139 |

-

"detector": "@detector"

|

|

|

|

| 140 |

},

|

| 141 |

"postprocessing": {

|

| 142 |

"_target_": "Compose",

|

|

|

|

| 13 |

"test_datalist": "$monai.data.load_decathlon_datalist(@data_list_file_path, is_segmentation=True, data_list_key='validation', base_dir=@dataset_dir)",

|

| 14 |

"device": "$torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')",

|

| 15 |

"amp": true,

|

| 16 |

+

"spatial_dims": 3,

|

| 17 |

+

"num_classes": 1,

|

| 18 |

+

"force_sliding_window": false,

|

| 19 |

+

"size_divisible": [

|

| 20 |

+

16,

|

| 21 |

+

16,

|

| 22 |

+

8

|

| 23 |

+

],

|

| 24 |

"infer_patch_size": [

|

| 25 |

512,

|

| 26 |

512,

|

|

|

|

| 55 |

"feature_extractor": "$monai.apps.detection.networks.retinanet_network.resnet_fpn_feature_extractor(@backbone,3,False,[1,2],None)",

|

| 56 |

"network_def": {

|

| 57 |

"_target_": "RetinaNet",

|

| 58 |

+

"spatial_dims": "@spatial_dims",

|

| 59 |

+

"num_classes": "@num_classes",

|

| 60 |

"num_anchors": 3,

|

| 61 |

"feature_extractor": "@feature_extractor",

|

| 62 |

+

"size_divisible": "@size_divisible",

|

| 63 |

+

"use_list_output": false

|


| 64 |

},

|

| 65 |

"network": "$@network_def.to(@device)",

|

| 66 |

"detector": {

|

| 67 |

"_target_": "RetinaNetDetector",

|

| 68 |

"network": "@network",

|

| 69 |

"anchor_generator": "@anchor_generator",

|

| 70 |

+

"debug": false,

|

| 71 |

+

"spatial_dims": "@spatial_dims",

|

| 72 |

+

"num_classes": "@num_classes",

|

| 73 |

+

"size_divisible": "@size_divisible"

|

| 74 |

},

|

| 75 |

"detector_ops": [

|

| 76 |

"$@detector.set_target_keys(box_key='box', label_key='label')",

|

|

|

|

| 144 |

},

|

| 145 |

"inferer": {

|

| 146 |

"_target_": "scripts.detection_inferer.RetinaNetInferer",

|

| 147 |

+

"detector": "@detector",

|

| 148 |

+

"force_sliding_window": "@force_sliding_window"

|

| 149 |

},

|

| 150 |

"postprocessing": {

|

| 151 |

"_target_": "Compose",

|

configs/inference_trt.json

ADDED

|

@@ -0,0 +1,11 @@

|

|


| 1 |

+

{

|

| 2 |

+

"imports": [

|

| 3 |

+

"$import glob",

|

| 4 |

+

"$import os",

|

| 5 |

+

"$import torch_tensorrt"

|

| 6 |

+

],

|

| 7 |

+

"force_sliding_window": true,

|

| 8 |

+

"handlers#0#_disabled_": true,

|

| 9 |

+

"network_def": "$torch.jit.load(@bundle_root + '/models/model_trt.ts')",

|

| 10 |

+

"evaluator#amp": false

|

| 11 |

+

}

|

configs/metadata.json

CHANGED

|

@@ -1,7 +1,8 @@

|

|

| 1 |

{

|

| 2 |

"schema": "https://github.com/Project-MONAI/MONAI-extra-test-data/releases/download/0.8.1/meta_schema_20220324.json",

|

| 3 |

-

"version": "0.5.

|

| 4 |

"changelog": {

|

|

|

|

| 5 |

"0.5.5": "update retrained validation results and training curve",

|

| 6 |

"0.5.4": "add non-deterministic note",

|

| 7 |

"0.5.3": "adapt to BundleWorkflow interface",

|

|

@@ -19,7 +20,7 @@

|

|

| 19 |

"0.1.1": "add reference for LIDC dataset",

|

| 20 |

"0.1.0": "complete the model package"

|

| 21 |

},

|

| 22 |

-

"monai_version": "1.2.

|

| 23 |

"pytorch_version": "1.13.1",

|

| 24 |

"numpy_version": "1.22.2",

|

| 25 |

"optional_packages_version": {

|

|

|

|

| 1 |

{

|

| 2 |

"schema": "https://github.com/Project-MONAI/MONAI-extra-test-data/releases/download/0.8.1/meta_schema_20220324.json",

|

| 3 |

+

"version": "0.5.6",

|

| 4 |

"changelog": {

|

| 5 |

+

"0.5.6": "add the ONNX-TensorRT way of model conversion",

|

| 6 |

"0.5.5": "update retrained validation results and training curve",

|

| 7 |

"0.5.4": "add non-deterministic note",

|

| 8 |

"0.5.3": "adapt to BundleWorkflow interface",

|

|

|

|

| 20 |

"0.1.1": "add reference for LIDC dataset",

|

| 21 |

"0.1.0": "complete the model package"

|

| 22 |

},

|

| 23 |

+

"monai_version": "1.2.0rc5",

|

| 24 |

"pytorch_version": "1.13.1",

|

| 25 |

"numpy_version": "1.22.2",

|

| 26 |

"optional_packages_version": {

|

docs/README.md

CHANGED

|

@@ -63,6 +63,33 @@ The validation accuracy in this curve is the mean of mAP, mAR, AP(IoU=0.1), and

|

|

| 63 |

|

| 64 |

|

| 65 |

|


| 66 |

## MONAI Bundle Commands

|

| 67 |

In addition to the Pythonic APIs, a few command line interfaces (CLI) are provided to interact with the bundle. The CLI supports flexible use cases, such as overriding configs at runtime and predefining arguments in a file.

|

| 68 |

|

|

@@ -91,6 +118,18 @@ Note that in inference.json, the transform "LoadImaged" in "preprocessing" and "

|

|

| 91 |

This depends on the input images. LUNA16 needs `"affine_lps_to_ras": true`.

|

| 92 |

It is possible that your inference dataset should set `"affine_lps_to_ras": false`.

|

| 93 |

|


| 94 |

# References

|

| 95 |

[1] Lin, Tsung-Yi, et al. "Focal loss for dense object detection." ICCV 2017. https://arxiv.org/abs/1708.02002

|

| 96 |

|

|

|

|

| 63 |

|

| 64 |

|

| 65 |

|

| 66 |

+

#### TensorRT speedup

|

| 67 |

+

The `lung_nodule_ct_detection` bundle supports TensorRT acceleration through the ONNX-TensorRT method. The table below shows the latencies and speedup ratios measured on an A100 80G GPU. When running inference with the TensorRT model, the `force_sliding_window` parameter in `inference.json` must be set to `true` (the `configs/inference_trt.json` override sets it). This makes the bundle always use the `SlidingWindowInferer`, which keeps the input spatial size of the network fixed. Otherwise, an input whose spatial size is smaller than `infer_patch_size` would be forwarded directly and change the network's input spatial size.

|

| 68 |

+

|

| 69 |

+

| method | torch_fp32(ms) | torch_amp(ms) | trt_fp32(ms) | trt_fp16(ms) | speedup amp | speedup fp32 | speedup fp16 | amp vs fp16 |

|

| 70 |

+

| :---: | :---: | :---: | :---: | :---: | :---: | :---: | :---: | :---: |

|

| 71 |

+

| model computation | 7449.84 | 996.08 | 976.67 | 626.90 | 7.63 | 7.63 | 11.88 | 1.56 |

|

| 72 |

+

| end2end | 36458.26 | 7259.35 | 6420.60 | 4698.34 | 5.02 | 5.68 | 7.76 | 1.55 |

|

| 73 |

+

|

| 74 |

+

Where:

|

| 75 |

+

- `model computation` measures the model's inference alone on a random input, without preprocessing and postprocessing.

|

| 76 |

+

- `end2end` measures running the bundle end-to-end with the TensorRT-based model.

|

| 77 |

+

- `torch_fp32` and `torch_amp` are the PyTorch models without and with `amp` mode, respectively.

|

| 78 |

+

- `trt_fp32` and `trt_fp16` are the TensorRT-based models converted at the corresponding precision.

|

| 79 |

+

- `speedup amp`, `speedup fp32` and `speedup fp16` are the speedup ratios of the corresponding models versus the PyTorch float32 model.

|

| 80 |

+

- `amp vs fp16` is the speedup ratio of the TensorRT float16 model versus the PyTorch amp model.

|

| 81 |

+

|

| 82 |

+

Currently, ONNX-TensorRT is the only available method to accelerate this model. The Torch-TensorRT method is under development and will be available in the near future.

|

| 83 |

+

|

| 84 |

+

These results were benchmarked under:

|

| 85 |

+

- TensorRT: 8.5.3+cuda11.8

|

| 86 |

+

- Torch-TensorRT Version: 1.4.0

|

| 87 |

+

- CPU Architecture: x86-64

|

| 88 |

+

- OS: Ubuntu 20.04

|

| 89 |

+

- Python version: 3.8.10

|

| 90 |

+

- CUDA version: 12.0

|

| 91 |

+

- GPU models and configuration: A100 80G

|

| 92 |

+

|

| 93 |

## MONAI Bundle Commands

|

| 94 |

In addition to the Pythonic APIs, a few command line interfaces (CLI) are provided to interact with the bundle. The CLI supports flexible use cases, such as overriding configs at runtime and predefining arguments in a file.

|

| 95 |

|

|

|

|

| 118 |

This depends on the input images. LUNA16 needs `"affine_lps_to_ras": true`.

|

| 119 |

It is possible that your inference dataset should set `"affine_lps_to_ras": false`.

|

| 120 |

|

| 121 |

+

#### Export checkpoint to TensorRT based models with fp32 or fp16 precision

|

| 122 |

+

|

| 123 |

+

```bash

|

| 124 |

+

python -m monai.bundle trt_export --net_id network_def --filepath models/model_trt.ts --ckpt_file models/model.pt --meta_file configs/metadata.json --config_file configs/inference.json --precision <fp32/fp16> --input_shape "[1, 1, 512, 512, 192]" --use_onnx "True" --use_trace "True" --onnx_output_names "['output_0', 'output_1', 'output_2', 'output_3', 'output_4', 'output_5']" --network_def#use_list_output "True"

|

| 125 |

+

```

|

| 126 |

+

|

| 127 |

+

#### Execute inference with the TensorRT model

|

| 128 |

+

|

| 129 |

+

```bash

|

| 130 |

+

python -m monai.bundle run --config_file "['configs/inference.json', 'configs/inference_trt.json']"

|

| 131 |

+

```

|

| 132 |

+

|

| 133 |

# References

|

| 134 |

[1] Lin, Tsung-Yi, et al. "Focal loss for dense object detection." ICCV 2017. https://arxiv.org/abs/1708.02002

|

| 135 |

|

scripts/detection_inferer.py

CHANGED

|

@@ -25,14 +25,19 @@ class RetinaNetInferer(Inferer):

|

|

| 25 |

Args:

|

| 26 |

detector: the RetinaNetDetector that converts network output BxCxMxN or BxCxMxNxP

|

| 27 |

map into boxes and classification scores.

|


| 28 |

args: other optional args to be passed to detector.

|

| 29 |

kwargs: other optional keyword args to be passed to detector.

|

| 30 |

"""

|

| 31 |

|

| 32 |

-

def __init__(self, detector: RetinaNetDetector,

|

| 33 |

Inferer.__init__(self)

|

| 34 |

self.detector = detector

|

| 35 |

self.sliding_window_size = None

|

|

|

|

| 36 |

if self.detector.inferer is not None:

|

| 37 |

if hasattr(self.detector.inferer, "roi_size"):

|

| 38 |

self.sliding_window_size = np.prod(self.detector.inferer.roi_size)

|

|

@@ -52,8 +57,10 @@ class RetinaNetInferer(Inferer):

|

|

| 52 |

|

| 53 |

# if image smaller than sliding window roi size, no need to use sliding window inferer

|

| 54 |

# use sliding window inferer only when image is large

|

| 55 |

-

use_inferer =

|

| 56 |

-

|


| 57 |

)

|

| 58 |

|

| 59 |

return self.detector(inputs, use_inferer=use_inferer, *args, **kwargs)

|

|

|

|

| 25 |

Args:

|

| 26 |

detector: the RetinaNetDetector that converts network output BxCxMxN or BxCxMxNxP

|

| 27 |

map into boxes and classification scores.

|

| 28 |

+

force_sliding_window: whether to force using a SlidingWindowInferer to do the inference.

|

| 29 |

+

If False, will check the input spatial size to decide whether to simply

|

| 30 |

+

forward the network or use SlidingWindowInferer.

|

| 31 |

+

If True, will force using SlidingWindowInferer to do the inference.

|

| 32 |

args: other optional args to be passed to detector.

|

| 33 |

kwargs: other optional keyword args to be passed to detector.

|

| 34 |

"""

|

| 35 |

|

| 36 |

+

def __init__(self, detector: RetinaNetDetector, force_sliding_window: bool = False) -> None:

|

| 37 |

Inferer.__init__(self)

|

| 38 |

self.detector = detector

|

| 39 |

self.sliding_window_size = None

|

| 40 |

+

self.force_sliding_window = force_sliding_window

|

| 41 |

if self.detector.inferer is not None:

|

| 42 |

if hasattr(self.detector.inferer, "roi_size"):

|

| 43 |

self.sliding_window_size = np.prod(self.detector.inferer.roi_size)

|

|

|

|

| 57 |

|

| 58 |

# if image smaller than sliding window roi size, no need to use sliding window inferer

|

| 59 |

# use sliding window inferer only when image is large

|

| 60 |

+

use_inferer = (

|

| 61 |

+

self.force_sliding_window

|

| 62 |

+

or self.sliding_window_size is not None

|

| 63 |

+

and not all([data_i[0, ...].numel() < self.sliding_window_size for data_i in inputs])

|

| 64 |

)

|

| 65 |

|

| 66 |

return self.detector(inputs, use_inferer=use_inferer, *args, **kwargs)
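
The `use_inferer` expression relies on `and` binding tighter than `or`, so it reads as `force or (window is set and not all inputs are smaller)`. The sketch below restates that decision with explicit branches and plain shape tuples instead of tensors. The function name `should_use_sliding_window` is hypothetical, and each shape is assumed to be channel-first, so `prod(shape[1:])` plays the role of `data_i[0, ...].numel()`.

```python
from math import prod
from typing import Optional, Sequence, Tuple


def should_use_sliding_window(
    force_sliding_window: bool,
    sliding_window_size: Optional[int],
    input_shapes: Sequence[Tuple[int, ...]],
) -> bool:
    """Restate the `use_inferer` expression with explicit branches."""
    if force_sliding_window:
        # Forced: always use the sliding window inferer (needed for TensorRT).
        return True
    if sliding_window_size is None:
        # No ROI size known, so the network is called directly.
        return False
    # Skip the sliding window only if every image is smaller than the window.
    return not all(prod(shape[1:]) < sliding_window_size for shape in input_shapes)


window = 512 * 512 * 192  # voxel count of the 512 x 512 x 192 patch used at export
small = [(1, 256, 256, 128)]
large = [(1, 512, 512, 256)]

print(should_use_sliding_window(False, window, small))  # direct forward pass
print(should_use_sliding_window(True, window, small))   # forced sliding window
print(should_use_sliding_window(False, window, large))  # large image needs the window
```

With `force_sliding_window` left at its default of `False`, a small image bypasses the inferer and reaches the network at its own spatial size, which is the case the TensorRT config is meant to rule out.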

|