complete the model package

- LICENSE +201 -0

- README.md +154 -0

- configs/evaluate.json +38 -0

- configs/inference.json +151 -0

- configs/logging.conf +21 -0

- configs/metadata.json +115 -0

- configs/multi_gpu_train.json +36 -0

- configs/train.json +525 -0

- docs/README.md +147 -0

- docs/data_license.txt +6 -0

- models/model.pt +3 -0

- models/stage0/model.pt +3 -0

- scripts/prepare_patches.py +232 -0

LICENSE

ADDED

|

@@ -0,0 +1,201 @@

| 1 |

+

Apache License

|

| 2 |

+

Version 2.0, January 2004

|

| 3 |

+

http://www.apache.org/licenses/

|

| 4 |

+

|

| 5 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 6 |

+

|

| 7 |

+

1. Definitions.

|

| 8 |

+

|

| 9 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 10 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 11 |

+

|

| 12 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 13 |

+

the copyright owner that is granting the License.

|

| 14 |

+

|

| 15 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 16 |

+

other entities that control, are controlled by, or are under common

|

| 17 |

+

control with that entity. For the purposes of this definition,

|

| 18 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 19 |

+

direction or management of such entity, whether by contract or

|

| 20 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 21 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 22 |

+

|

| 23 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 24 |

+

exercising permissions granted by this License.

|

| 25 |

+

|

| 26 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 27 |

+

including but not limited to software source code, documentation

|

| 28 |

+

source, and configuration files.

|

| 29 |

+

|

| 30 |

+

"Object" form shall mean any form resulting from mechanical

|

| 31 |

+

transformation or translation of a Source form, including but

|

| 32 |

+

not limited to compiled object code, generated documentation,

|

| 33 |

+

and conversions to other media types.

|

| 34 |

+

|

| 35 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 36 |

+

Object form, made available under the License, as indicated by a

|

| 37 |

+

copyright notice that is included in or attached to the work

|

| 38 |

+

(an example is provided in the Appendix below).

|

| 39 |

+

|

| 40 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 41 |

+

form, that is based on (or derived from) the Work and for which the

|

| 42 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 43 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 44 |

+

of this License, Derivative Works shall not include works that remain

|

| 45 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 46 |

+

the Work and Derivative Works thereof.

|

| 47 |

+

|

| 48 |

+

"Contribution" shall mean any work of authorship, including

|

| 49 |

+

the original version of the Work and any modifications or additions

|

| 50 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 51 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 52 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 53 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 54 |

+

means any form of electronic, verbal, or written communication sent

|

| 55 |

+

to the Licensor or its representatives, including but not limited to

|

| 56 |

+

communication on electronic mailing lists, source code control systems,

|

| 57 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 58 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 59 |

+

excluding communication that is conspicuously marked or otherwise

|

| 60 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 61 |

+

|

| 62 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 63 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 64 |

+

subsequently incorporated within the Work.

|

| 65 |

+

|

| 66 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 67 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 68 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 69 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 70 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 71 |

+

Work and such Derivative Works in Source or Object form.

|

| 72 |

+

|

| 73 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 74 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 75 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 76 |

+

(except as stated in this section) patent license to make, have made,

|

| 77 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 78 |

+

where such license applies only to those patent claims licensable

|

| 79 |

+

by such Contributor that are necessarily infringed by their

|

| 80 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 81 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 82 |

+

institute patent litigation against any entity (including a

|

| 83 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 84 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 85 |

+

or contributory patent infringement, then any patent licenses

|

| 86 |

+

granted to You under this License for that Work shall terminate

|

| 87 |

+

as of the date such litigation is filed.

|

| 88 |

+

|

| 89 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 90 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 91 |

+

modifications, and in Source or Object form, provided that You

|

| 92 |

+

meet the following conditions:

|

| 93 |

+

|

| 94 |

+

(a) You must give any other recipients of the Work or

|

| 95 |

+

Derivative Works a copy of this License; and

|

| 96 |

+

|

| 97 |

+

(b) You must cause any modified files to carry prominent notices

|

| 98 |

+

stating that You changed the files; and

|

| 99 |

+

|

| 100 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 101 |

+

that You distribute, all copyright, patent, trademark, and

|

| 102 |

+

attribution notices from the Source form of the Work,

|

| 103 |

+

excluding those notices that do not pertain to any part of

|

| 104 |

+

the Derivative Works; and

|

| 105 |

+

|

| 106 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 107 |

+

distribution, then any Derivative Works that You distribute must

|

| 108 |

+

include a readable copy of the attribution notices contained

|

| 109 |

+

within such NOTICE file, excluding those notices that do not

|

| 110 |

+

pertain to any part of the Derivative Works, in at least one

|

| 111 |

+

of the following places: within a NOTICE text file distributed

|

| 112 |

+

as part of the Derivative Works; within the Source form or

|

| 113 |

+

documentation, if provided along with the Derivative Works; or,

|

| 114 |

+

within a display generated by the Derivative Works, if and

|

| 115 |

+

wherever such third-party notices normally appear. The contents

|

| 116 |

+

of the NOTICE file are for informational purposes only and

|

| 117 |

+

do not modify the License. You may add Your own attribution

|

| 118 |

+

notices within Derivative Works that You distribute, alongside

|

| 119 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 120 |

+

that such additional attribution notices cannot be construed

|

| 121 |

+

as modifying the License.

|

| 122 |

+

|

| 123 |

+

You may add Your own copyright statement to Your modifications and

|

| 124 |

+

may provide additional or different license terms and conditions

|

| 125 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 126 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 127 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 128 |

+

the conditions stated in this License.

|

| 129 |

+

|

| 130 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 131 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 132 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 133 |

+

this License, without any additional terms or conditions.

|

| 134 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 135 |

+

the terms of any separate license agreement you may have executed

|

| 136 |

+

with Licensor regarding such Contributions.

|

| 137 |

+

|

| 138 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 139 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 140 |

+

except as required for reasonable and customary use in describing the

|

| 141 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 142 |

+

|

| 143 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 144 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 145 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 146 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 147 |

+

implied, including, without limitation, any warranties or conditions

|

| 148 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 149 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 150 |

+

appropriateness of using or redistributing the Work and assume any

|

| 151 |

+

risks associated with Your exercise of permissions under this License.

|

| 152 |

+

|

| 153 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 154 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 155 |

+

unless required by applicable law (such as deliberate and grossly

|

| 156 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 157 |

+

liable to You for damages, including any direct, indirect, special,

|

| 158 |

+

incidental, or consequential damages of any character arising as a

|

| 159 |

+

result of this License or out of the use or inability to use the

|

| 160 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 161 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 162 |

+

other commercial damages or losses), even if such Contributor

|

| 163 |

+

has been advised of the possibility of such damages.

|

| 164 |

+

|

| 165 |

+

9. Accepting Warranty or Additional Liability. While redistributing

|

| 166 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 167 |

+

and charge a fee for, acceptance of support, warranty, indemnity,

|

| 168 |

+

or other liability obligations and/or rights consistent with this

|

| 169 |

+

License. However, in accepting such obligations, You may act only

|

| 170 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 171 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 172 |

+

defend, and hold each Contributor harmless for any liability

|

| 173 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 174 |

+

of your accepting any such warranty or additional liability.

|

| 175 |

+

|

| 176 |

+

END OF TERMS AND CONDITIONS

|

| 177 |

+

|

| 178 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 179 |

+

|

| 180 |

+

To apply the Apache License to your work, attach the following

|

| 181 |

+

boilerplate notice, with the fields enclosed by brackets "[]"

|

| 182 |

+

replaced with your own identifying information. (Don't include

|

| 183 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 184 |

+

comment syntax for the file format. We also recommend that a

|

| 185 |

+

file or class name and description of purpose be included on the

|

| 186 |

+

same "printed page" as the copyright notice for easier

|

| 187 |

+

identification within third-party archives.

|

| 188 |

+

|

| 189 |

+

Copyright [yyyy] [name of copyright owner]

|

| 190 |

+

|

| 191 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 192 |

+

you may not use this file except in compliance with the License.

|

| 193 |

+

You may obtain a copy of the License at

|

| 194 |

+

|

| 195 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 196 |

+

|

| 197 |

+

Unless required by applicable law or agreed to in writing, software

|

| 198 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 199 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 200 |

+

See the License for the specific language governing permissions and

|

| 201 |

+

limitations under the License.

|

README.md

ADDED

|

@@ -0,0 +1,154 @@

| 1 |

+

---

|

| 2 |

+

tags:

|

| 3 |

+

- monai

|

| 4 |

+

- medical

|

| 5 |

+

library_name: monai

|

| 6 |

+

license: apache-2.0

|

| 7 |

+

---

|

| 8 |

+

# Model Overview

|

| 9 |

+

|

| 10 |

+

A pre-trained model for simultaneous segmentation and classification of nuclei within multi-tissue histology images based on CoNSeP data. The details of the model can be found in [1].

|

| 11 |

+

|

| 12 |

+

## Workflow

|

| 13 |

+

|

| 14 |

+

The model is trained to simultaneously segment and classify nuclei. Training uses a two-stage approach: the model is first initialized with weights pre-trained on the [ImageNet dataset](https://ieeexplore.ieee.org/document/5206848) and only the decoders are trained for the first 50 epochs; all layers are then fine-tuned for another 50 epochs. There are two training modes. In "original" mode, `patch_size` and `out_size` are [270, 270] and [80, 80] respectively. In "fast" mode, they are [256, 256] and [164, 164] respectively. The results shown below are based on the "fast" mode.
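As a rough illustration of the two modes described above, the sketch below maps each mode name to its `patch_size` and `out_size`. The `HOVERNET_SIZES` table and the `sizes_for_mode` helper are hypothetical names introduced here for illustration; they are not part of the bundle or of MONAI.

```python
# Hypothetical lookup table; the values are the ones stated in the README text.
HOVERNET_SIZES = {
    "original": {"patch_size": (270, 270), "out_size": (80, 80)},
    "fast": {"patch_size": (256, 256), "out_size": (164, 164)},
}


def sizes_for_mode(mode):
    """Return the patch and output sizes for a HoVerNet training mode."""
    if mode not in HOVERNET_SIZES:
        expected = sorted(HOVERNET_SIZES)
        raise ValueError(f"unknown mode {mode!r}; expected one of {expected}")
    return HOVERNET_SIZES[mode]


if __name__ == "__main__":
    for mode in ("original", "fast"):
        sizes = sizes_for_mode(mode)
        print(mode, sizes["patch_size"], sizes["out_size"])
```

Keeping both sizes in one table makes it harder to pair a `patch_size` from one mode with an `out_size` from the other.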

|

| 15 |

+

|

| 16 |

+

- We train the first stage with pre-trained weights from some internal data.

|

| 17 |

+

|

| 18 |

+

- The original author's repo also has pre-trained weights, which are for non-commercial use. Each user is responsible for checking the content of models/datasets and the applicable licenses and determining whether they are suitable for the intended use. The license for the pre-trained model is different from the MONAI license. Please check the source from which these weights are obtained: <https://github.com/vqdang/hover_net#data-format>

|

| 19 |

+

|

| 20 |

+

`PRETRAIN_MODEL_URL` is "https://drive.google.com/u/1/uc?id=1KntZge40tAHgyXmHYVqZZ5d2p_4Qr2l5&export=download", which can be used in the bash commands below.

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

## Data

|

| 25 |

+

|

| 26 |

+

The training data is from <https://warwick.ac.uk/fac/cross_fac/tia/data/hovernet/>.

|

| 27 |

+

|

| 28 |

+

- Target: segment instance-level nuclei and classify the nuclei type

|

| 29 |

+

- Task: Segmentation and classification

|

| 30 |

+

- Modality: RGB images

|

| 31 |

+

- Size: 41 image tiles (2009 patches)

|

| 32 |

+

|

| 33 |

+

The provided labelled data was partitioned, based on the original split, into training (27 tiles) and testing (14 tiles) datasets.

|

| 34 |

+

|

| 35 |

+

After downloading the datasets, run `scripts/prepare_patches.py` to prepare patches from the tiles. Prepared patches are saved in `your-concep-dataset-path`/Prepared. The implementation follows <https://github.com/vqdang/hover_net/blob/master/extract_patches.py>. For example:

|

| 36 |

+

|

| 37 |

+

```

|

| 38 |

+

python scripts/prepare_patches.py -root your-concep-dataset-path

|

| 39 |

+

```

|

| 40 |

+

|

| 41 |

+

## Training configuration

|

| 42 |

+

|

| 43 |

+

This model utilized a two-stage approach. The training was performed with the following:

|

| 44 |

+

|

| 45 |

+

- GPU: At least 24GB of GPU memory.

|

| 46 |

+

- Actual Model Input: 256 x 256

|

| 47 |

+

- AMP: True

|

| 48 |

+

- Optimizer: Adam

|

| 49 |

+

- Learning Rate: 1e-4

|

| 50 |

+

- Loss: HoVerNetLoss

|

| 51 |

+

|

| 52 |

+

## Input

|

| 53 |

+

|

| 54 |

+

Input: RGB images

|

| 55 |

+

|

| 56 |

+

## Output

|

| 57 |

+

|

| 58 |

+

Output: a dictionary with the following keys:

|

| 59 |

+

|

| 60 |

+

1. nucleus_prediction: predict whether a pixel belongs to a nucleus or to the background

|

| 61 |

+

2. horizontal_vertical: predict the horizontal and vertical distances of nuclear pixels to their centres of mass

|

| 62 |

+

3. type_prediction: predict the type of nucleus for each pixel
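To make the per-pixel nature of these outputs concrete, here is a minimal sketch that turns mock channel scores into a binary nucleus mask and a type map by taking the highest-scoring channel at each pixel. The channel-first layout, the channel order (index 0 as background) and the tiny 2 x 2 mock values are assumptions made for illustration. This is not the bundle's post-processing, which the inference config delegates to dedicated HoVerNet transforms.

```python
def argmax_over_channels(channels):
    """Given a list of equally sized 2D score maps (one per class),
    return a 2D map holding the index of the highest-scoring class."""
    height, width = len(channels[0]), len(channels[0][0])
    return [
        [max(range(len(channels)), key=lambda c: channels[c][y][x]) for x in range(width)]
        for y in range(height)
    ]


# Mock network output for a 2 x 2 image (hypothetical values).
pred = {
    # Assumed order: channel 0 = background, channel 1 = nucleus.
    "nucleus_prediction": [
        [[0.9, 0.2], [0.1, 0.8]],
        [[0.1, 0.8], [0.9, 0.2]],
    ],
    # Three type channels for brevity; the configs use out_classes = 5.
    "type_prediction": [
        [[0.7, 0.1], [0.2, 0.6]],
        [[0.2, 0.8], [0.1, 0.3]],
        [[0.1, 0.1], [0.7, 0.1]],
    ],
}

nucleus_mask = argmax_over_channels(pred["nucleus_prediction"])
type_map = argmax_over_channels(pred["type_prediction"])

if __name__ == "__main__":
    print(nucleus_mask)
    print(type_map)
```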

|

| 63 |

+

|

| 64 |

+

## Model Performance

|

| 65 |

+

|

| 66 |

+

The achieved metrics on the validation data are:

|

| 67 |

+

|

| 68 |

+

Fast mode:

|

| 69 |

+

- Binary Dice: 0.8293

|

| 70 |

+

- PQ: 0.4936

|

| 71 |

+

- F1d: 0.7480

|

| 72 |

+

|

| 73 |

+

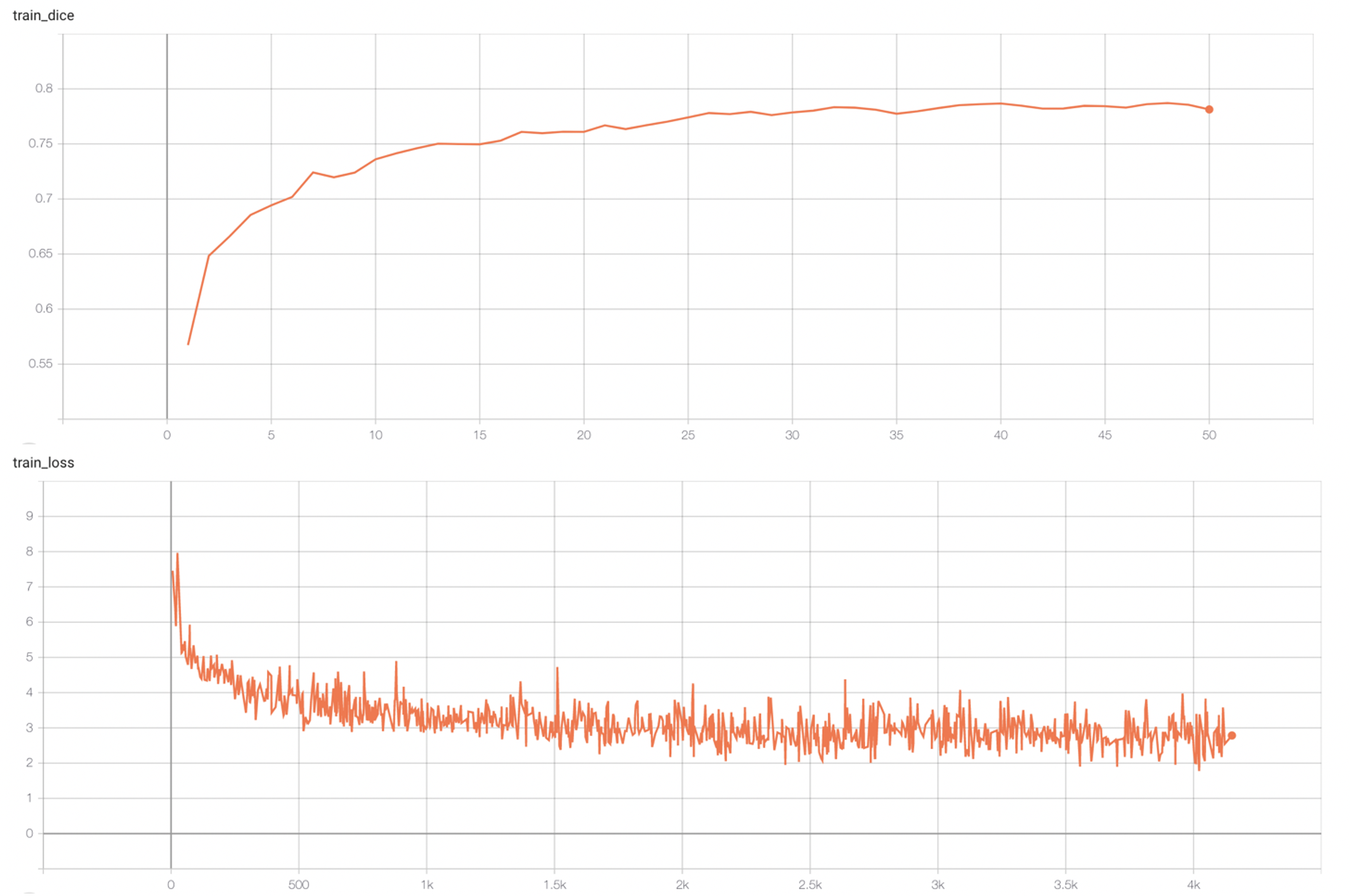

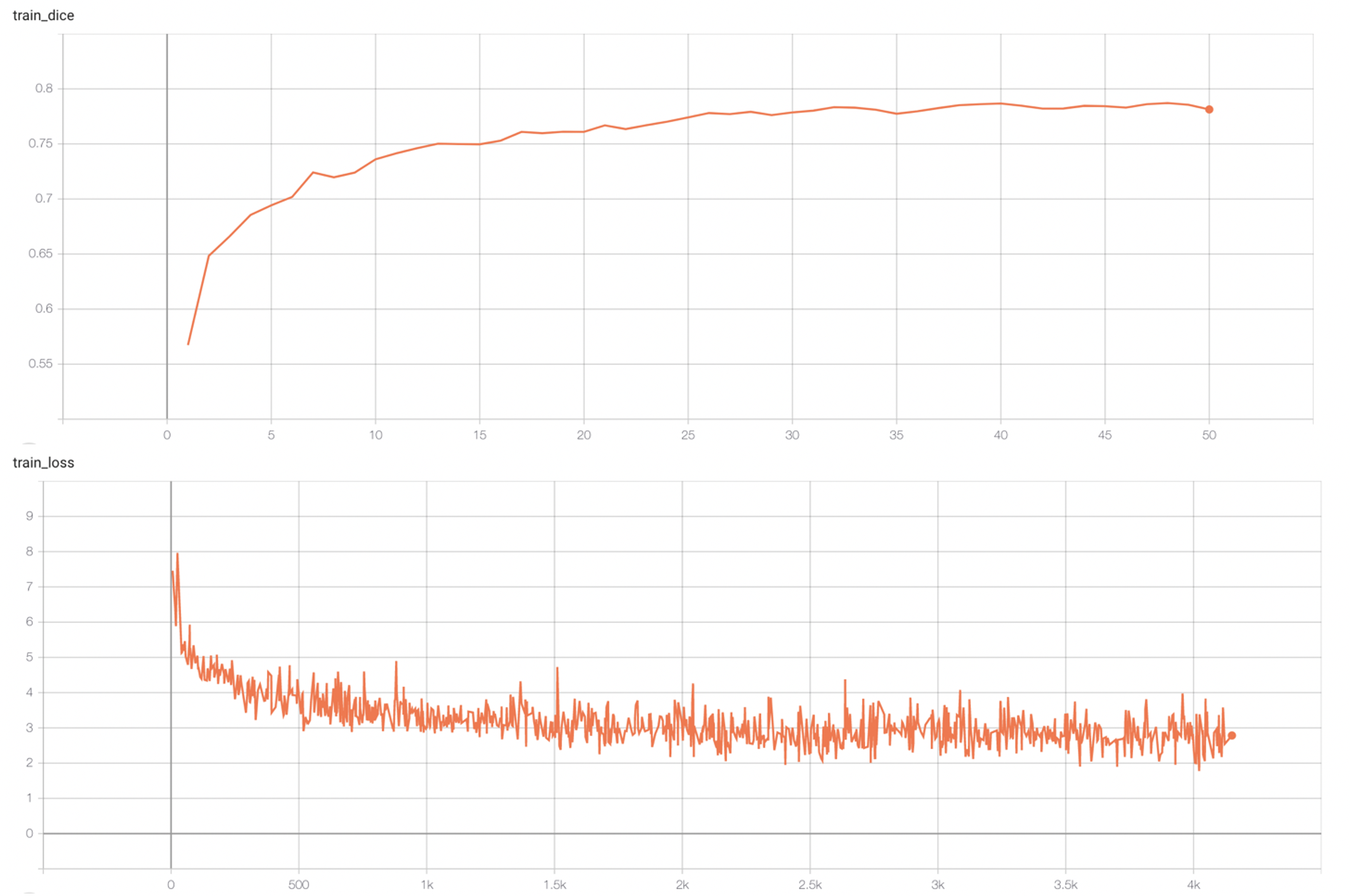

#### Training Loss and Dice

|

| 74 |

+

|

| 75 |

+

stage1:

|

| 76 |

+

|

| 77 |

+

|

| 78 |

+

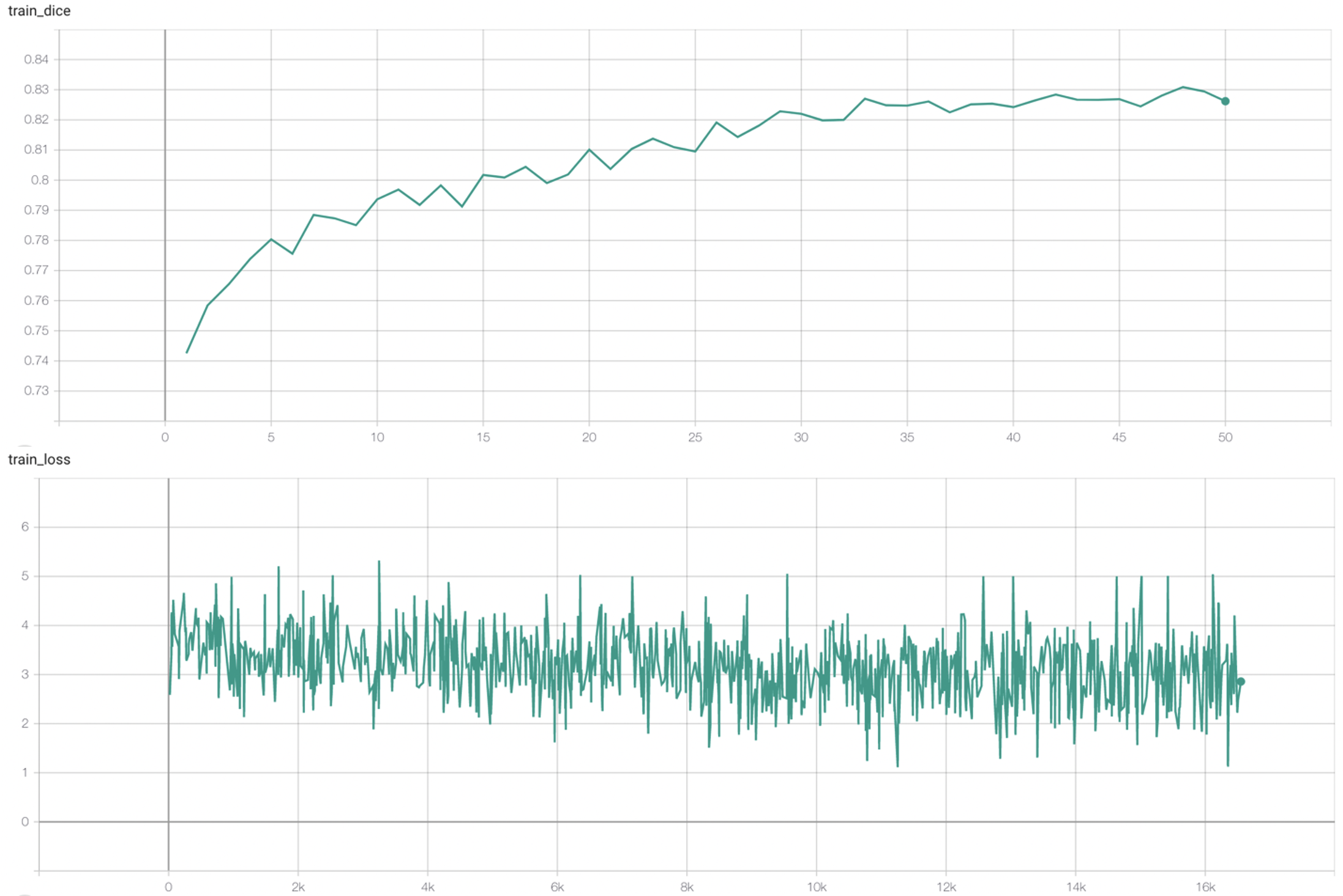

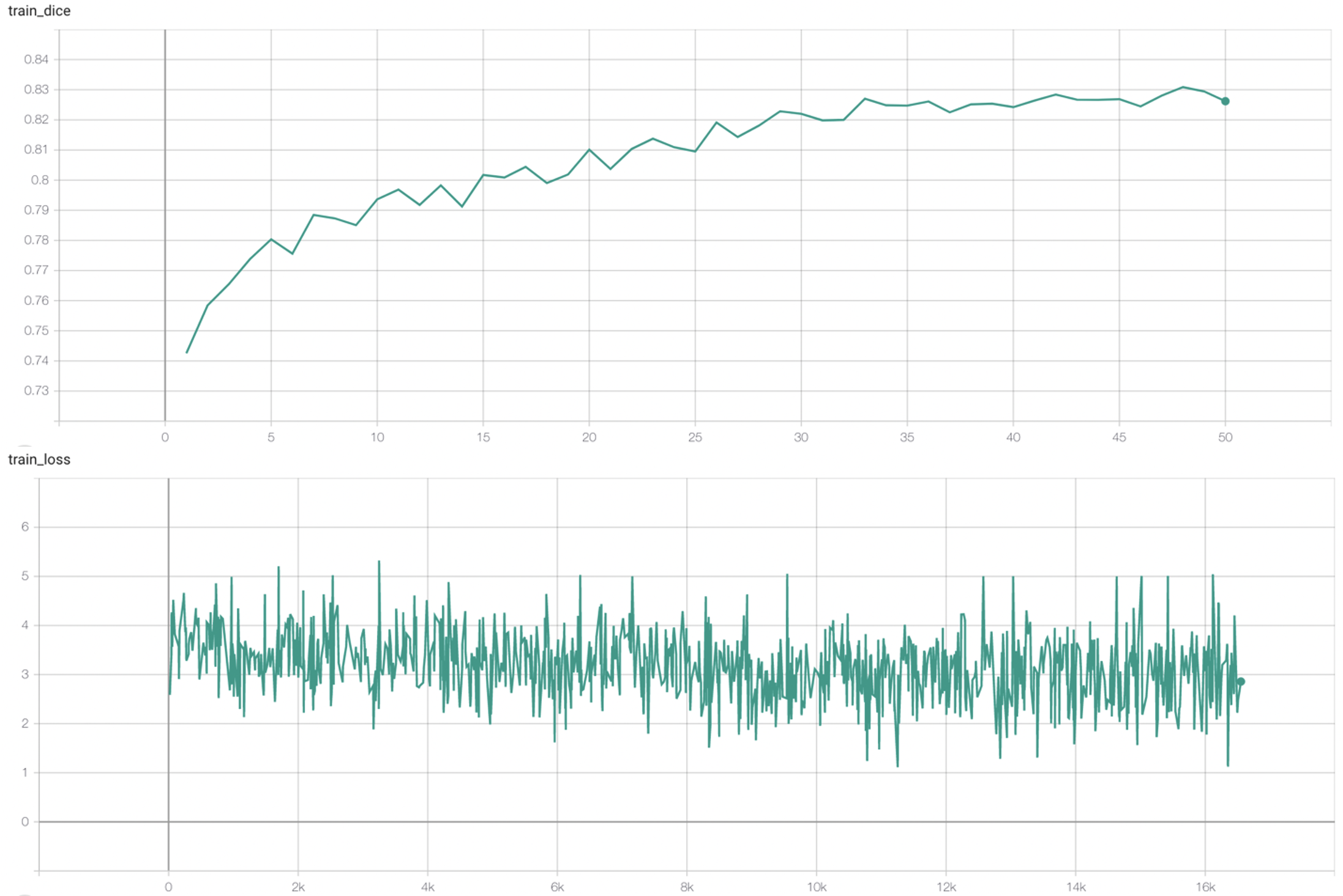

stage2:

|

| 79 |

+

|

| 80 |

+

|

| 81 |

+

#### Validation Dice

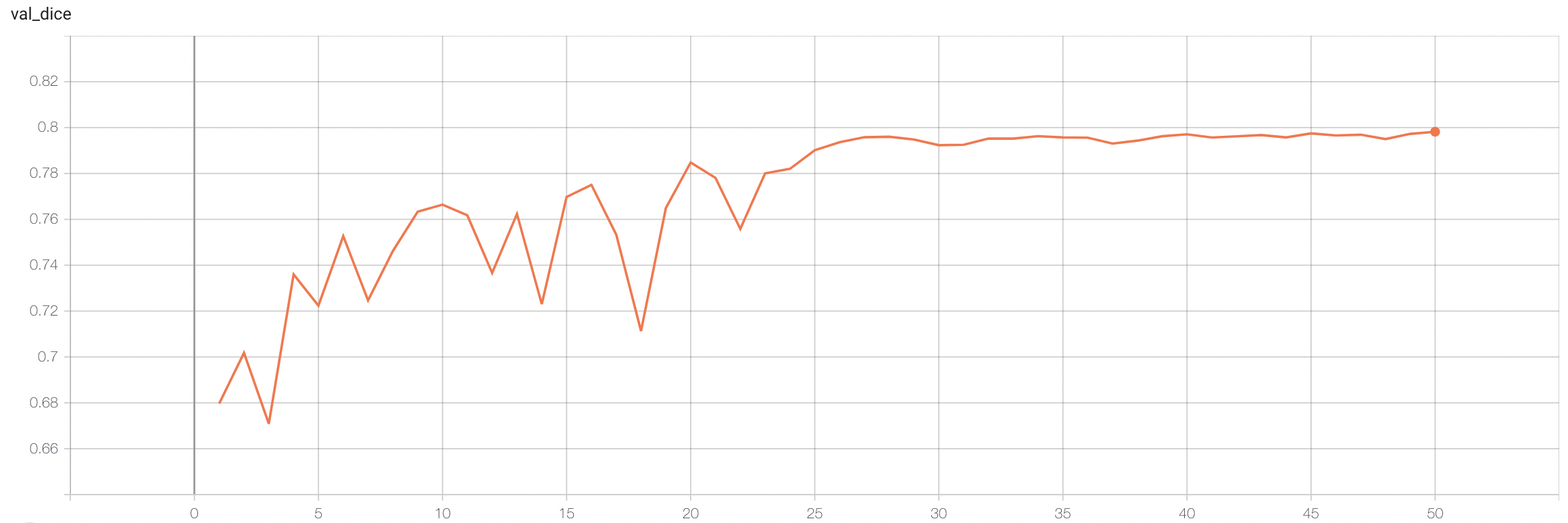

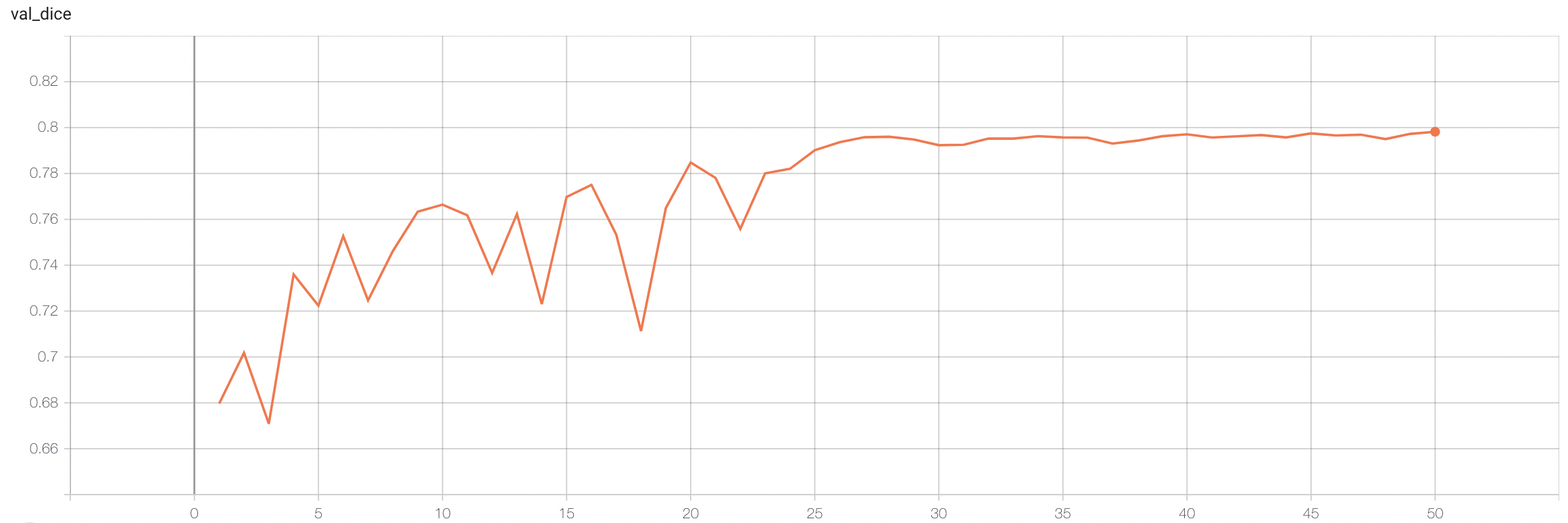

|

| 82 |

+

|

| 83 |

+

stage1:

|

| 84 |

+

|

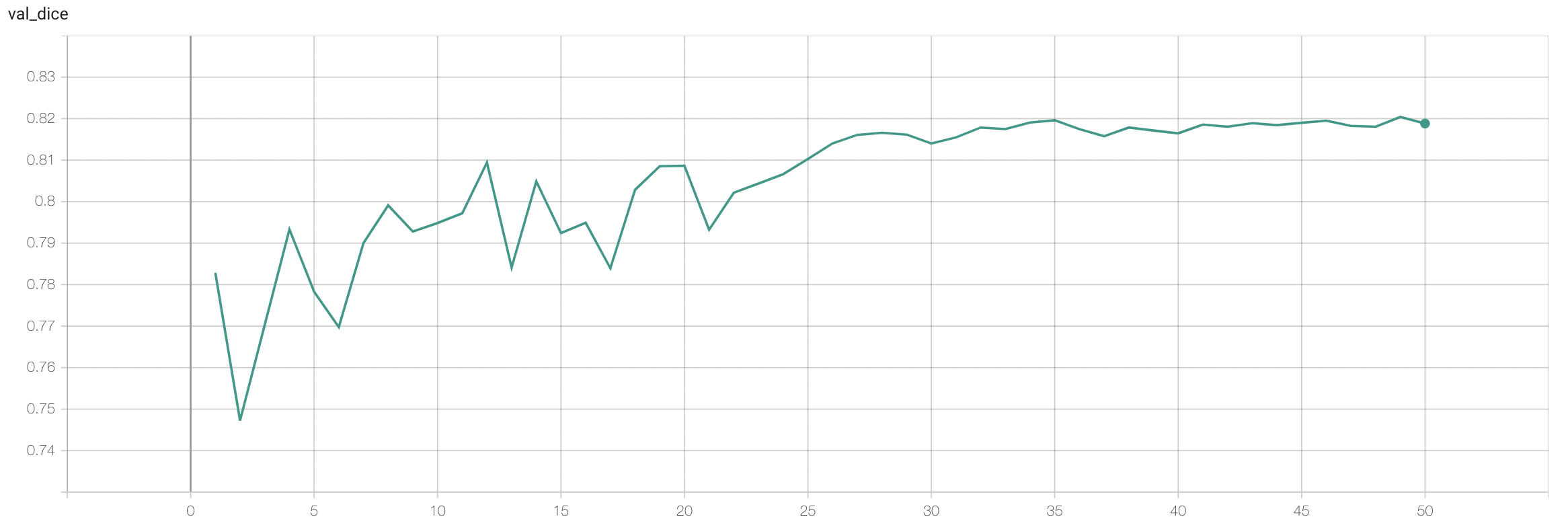

| 85 |

+

|

| 86 |

+

|

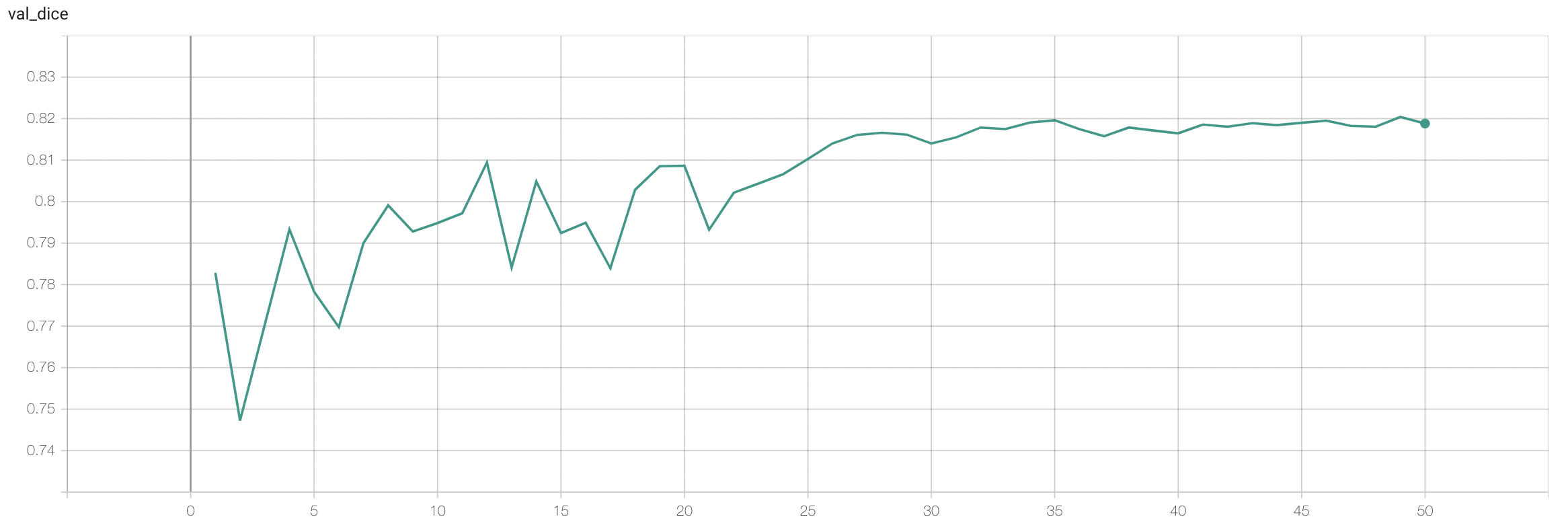

| 87 |

+

stage2:

|

| 88 |

+

|

| 89 |

+

|

| 90 |

+

|

| 91 |

+

## Commands example

|

| 92 |

+

|

| 93 |

+

Execute training:

|

| 94 |

+

|

| 95 |

+

- Run first stage

|

| 96 |

+

|

| 97 |

+

```

|

| 98 |

+

python -m monai.bundle run training --meta_file configs/metadata.json --config_file configs/train.json --logging_file configs/logging.conf --network_def#pretrained_url `PRETRAIN_MODEL_URL` --stage 0

|

| 99 |

+

```

|

| 100 |

+

|

| 101 |

+

- Run second stage

|

| 102 |

+

|

| 103 |

+

```

|

| 104 |

+

python -m monai.bundle run training --meta_file configs/metadata.json --config_file configs/train.json --logging_file configs/logging.conf --network_def#freeze_encoder false --network_def#pretrained_url None --stage 1

|

| 105 |

+

```

|

| 106 |

+

|

| 107 |

+

Override the `train` config to execute multi-GPU training:

|

| 108 |

+

|

| 109 |

+

- Run first stage

|

| 110 |

+

|

| 111 |

+

```

|

| 112 |

+

torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run training --meta_file configs/metadata.json --config_file "['configs/train.json','configs/multi_gpu_train.json']" --logging_file configs/logging.conf --train#dataloader#batch_size 8 --network_def#freeze_encoder true --network_def#pretrained_url `PRETRAIN_MODEL_URL` --stage 0

|

| 113 |

+

```

|

| 114 |

+

|

| 115 |

+

- Run second stage

|

| 116 |

+

|

| 117 |

+

```

|

| 118 |

+

torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run training --meta_file configs/metadata.json --config_file "['configs/train.json','configs/multi_gpu_train.json']" --logging_file configs/logging.conf --train#dataloader#batch_size 4 --network_def#freeze_encoder false --network_def#pretrained_url None --stage 1

|

| 119 |

+

```

|

| 120 |

+

|

| 121 |

+

Override the `train` config to execute evaluation with the trained model:

|

| 122 |

+

|

| 123 |

+

```

|

| 124 |

+

python -m monai.bundle run evaluating --meta_file configs/metadata.json --config_file "['configs/train.json','configs/evaluate.json']" --logging_file configs/logging.conf

|

| 125 |

+

```

|

| 126 |

+

|

| 127 |

+

### Execute inference

|

| 128 |

+

|

| 129 |

+

```

|

| 130 |

+

python -m monai.bundle run evaluating --meta_file configs/metadata.json --config_file configs/inference.json --logging_file configs/logging.conf

|

| 131 |

+

```

|

| 132 |

+

|

| 133 |

+

# Disclaimer

|

| 134 |

+

|

| 135 |

+

This is an example, not to be used for diagnostic purposes.

|

| 136 |

+

|

| 137 |

+

# References

|

| 138 |

+

|

| 139 |

+

[1] Simon Graham, Quoc Dang Vu, Shan E Ahmed Raza, Ayesha Azam, Yee Wah Tsang, Jin Tae Kwak, Nasir Rajpoot, Hover-Net: Simultaneous segmentation and classification of nuclei in multi-tissue histology images, Medical Image Analysis, 2019 https://doi.org/10.1016/j.media.2019.101563

|

| 140 |

+

|

| 141 |

+

# License

|

| 142 |

+

Copyright (c) MONAI Consortium

|

| 143 |

+

|

| 144 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 145 |

+

you may not use this file except in compliance with the License.

|

| 146 |

+

You may obtain a copy of the License at

|

| 147 |

+

|

| 148 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 149 |

+

|

| 150 |

+

Unless required by applicable law or agreed to in writing, software

|

| 151 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 152 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 153 |

+

See the License for the specific language governing permissions and

|

| 154 |

+

limitations under the License.

|

configs/evaluate.json

ADDED

|

@@ -0,0 +1,38 @@

| 1 |

+

{

|

| 2 |

+

"network_def": {

|

| 3 |

+

"_target_": "HoVerNet",

|

| 4 |

+

"mode": "@hovernet_mode",

|

| 5 |

+

"adapt_standard_resnet": true,

|

| 6 |

+

"in_channels": 3,

|

| 7 |

+

"out_classes": 5

|

| 8 |

+

},

|

| 9 |

+

"validate#handlers": [

|

| 10 |

+

{

|

| 11 |

+

"_target_": "CheckpointLoader",

|

| 12 |

+

"load_path": "$os.path.join(@bundle_root, 'models', 'model.pt')",

|

| 13 |

+

"load_dict": {

|

| 14 |

+

"model": "@network"

|

| 15 |

+

}

|

| 16 |

+

},

|

| 17 |

+

{

|

| 18 |

+

"_target_": "StatsHandler",

|

| 19 |

+

"iteration_log": false

|

| 20 |

+

},

|

| 21 |

+

{

|

| 22 |

+

"_target_": "MetricsSaver",

|

| 23 |

+

"save_dir": "@output_dir",

|

| 24 |

+

"metrics": [

|

| 25 |

+

"val_mean_dice"

|

| 26 |

+

],

|

| 27 |

+

"metric_details": [

|

| 28 |

+

"val_mean_dice"

|

| 29 |

+

],

|

| 30 |

+

"batch_transform": "$monai.handlers.from_engine(['image_meta_dict'])",

|

| 31 |

+

"summary_ops": "*"

|

| 32 |

+

}

|

| 33 |

+

],

|

| 34 |

+

"evaluating": [

|

| 35 |

+

"$setattr(torch.backends.cudnn, 'benchmark', True)",

|

| 36 |

+

"$@validate#evaluator.run()"

|

| 37 |

+

]

|

| 38 |

+

}
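The `validate#handlers` key above addresses a nested entry (`handlers` inside `validate`) rather than a top-level one, and the README's evaluation command loads this file after `configs/train.json` so that its entries take precedence. The sketch below illustrates that idea in plain Python. It is not MONAI's `ConfigParser`, the helper names `set_by_id` and `merge_configs` are hypothetical, and the `base` dictionary is a made-up stand-in for `train.json`, which is not shown here.

```python
from copy import deepcopy


def set_by_id(config, path, value, sep="#"):
    """Set a nested entry addressed by a 'a#b#c' style id.
    Intermediate entries must already exist in this sketch."""
    keys = path.split(sep)
    node = config
    for key in keys[:-1]:
        node = node[int(key)] if isinstance(node, list) else node[key]
    last = keys[-1]
    if isinstance(node, list):
        node[int(last)] = value
    else:
        node[last] = value


def merge_configs(base, override):
    """Return a copy of base with every override entry applied by id."""
    merged = deepcopy(base)
    for path, value in override.items():
        set_by_id(merged, path, value)
    return merged


# Hypothetical stand-in for the training config.
base = {
    "validate": {
        "handlers": [{"_target_": "StatsHandler"}],
        "evaluator": {"_target_": "SupervisedEvaluator"},
    }
}
# Shaped like the evaluation config above (abbreviated).
override = {
    "network_def": {"_target_": "HoVerNet", "out_classes": 5},
    "validate#handlers": [{"_target_": "CheckpointLoader"}],
}

merged = merge_configs(base, override)

if __name__ == "__main__":
    print(merged["validate"]["handlers"])
```

Only the addressed entry is replaced: `validate#evaluator` from the base survives, which is why an override file can stay much shorter than the config it modifies.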

|

configs/inference.json

ADDED

|

@@ -0,0 +1,151 @@

| 1 |

+

{

|

| 2 |

+

"imports": [

|

| 3 |

+

"$import glob",

|

| 4 |

+

"$import os"

|

| 5 |

+

],

|

| 6 |

+

"bundle_root": "$os.getcwd()",

|

| 7 |

+

"output_dir": "$os.path.join(@bundle_root, 'eval')",

|

| 8 |

+

"dataset_dir": "/workspace/Data/Pathology/CoNSeP/Test/Images",

|

| 9 |

+

"num_cpus": 2,

|

| 10 |

+

"batch_size": 1,

|

| 11 |

+

"sw_batch_size": 16,

|

| 12 |

+

"hovernet_mode": "fast",

|

| 13 |

+

"patch_size": 256,

|

| 14 |

+

"out_size": 164,

|

| 15 |

+

"device": "$torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')",

|

| 16 |

+

"network_def": {

|

| 17 |

+

"_target_": "HoVerNet",

|

| 18 |

+

"mode": "@hovernet_mode",

|

| 19 |

+

"adapt_standard_resnet": true,

|

| 20 |

+

"in_channels": 3,

|

| 21 |

+

"out_classes": 5

|

| 22 |

+

},

|

| 23 |

+

"network": "$@network_def.to(@device)",

|

| 24 |

+

"preprocessing": {

|

| 25 |

+

"_target_": "Compose",

|

| 26 |

+

"transforms": [

|

| 27 |

+

{

|

| 28 |

+

"_target_": "LoadImaged",

|

| 29 |

+

"keys": "image",

|

| 30 |

+

"reader": "$monai.data.PILReader",

|

| 31 |

+

"converter": "$lambda x: x.convert('RGB')"

|

| 32 |

+

},

|

| 33 |

+

{

|

| 34 |

+

"_target_": "EnsureChannelFirstd",

|

| 35 |

+

"keys": "image"

|

| 36 |

+

},

|

| 37 |

+

{

|

| 38 |

+

"_target_": "CastToTyped",

|

| 39 |

+

"keys": "image",

|

| 40 |

+

"dtype": "float32"

|

| 41 |

+

},

|

| 42 |

+

{

|

| 43 |

+

"_target_": "ScaleIntensityRanged",

|

| 44 |

+

"keys": "image",

|

| 45 |

+

"a_min": 0.0,

|

| 46 |

+

"a_max": 255.0,

|

| 47 |

+

"b_min": 0.0,

|

| 48 |

+

"b_max": 1.0,

|

| 49 |

+

"clip": true

|

| 50 |

+

}

|

| 51 |

+

]

|

| 52 |

+

},

|

| 53 |

+

"data_list": "$[{'image': image} for image in glob.glob(os.path.join(@dataset_dir, '*.png'))]",

|

| 54 |

+

"dataset": {

|

| 55 |

+

"_target_": "Dataset",

|

| 56 |

+

"data": "@data_list",

|

| 57 |

+

"transform": "@preprocessing"

|

| 58 |

+

},

|

| 59 |

+

"dataloader": {

|

| 60 |

+

"_target_": "DataLoader",

|

| 61 |

+

"dataset": "@dataset",

|

| 62 |

+

"batch_size": "@batch_size",

|

| 63 |

+

"shuffle": false,

|

| 64 |

+

"num_workers": "@num_cpus",

|

| 65 |

+

"pin_memory": true

|

| 66 |

+

},

|

| 67 |

+

"inferer": {

|

| 68 |

+

"_target_": "SlidingWindowHoVerNetInferer",

|

| 69 |

+

"roi_size": "@patch_size",

|

| 70 |

+

"sw_batch_size": "@sw_batch_size",

|

| 71 |

+

"overlap": "$1.0 - float(@out_size) / float(@patch_size)",

|

| 72 |

+

"padding_mode": "constant",

|

| 73 |

+

"cval": 0,

|

| 74 |

+

"progress": true,

|

| 75 |

+

"extra_input_padding": "$((@patch_size - @out_size) // 2,) * 4"

|

| 76 |

+

},

|

| 77 |

+

"postprocessing": {

|

| 78 |

+

"_target_": "Compose",

|

| 79 |

+

"transforms": [

|

| 80 |

+

{

|

| 81 |

+

"_target_": "FlattenSubKeysd",

|

| 82 |

+

"keys": "pred",

|

| 83 |

+

"sub_keys": [

|

| 84 |

+

"horizontal_vertical",

|

| 85 |

+

"nucleus_prediction",

|

| 86 |

+

"type_prediction"

|

| 87 |

+

],

|

| 88 |

+

"delete_keys": true

|

| 89 |

+

},

|

| 90 |

+

{

|

| 91 |

+

"_target_": "HoVerNetInstanceMapPostProcessingd",

|

| 92 |

+

"sobel_kernel_size": 21,

|

| 93 |

+

"marker_threshold": 0.4,

|

| 94 |

+

"marker_radius": 2

|

| 95 |

+

},

|

| 96 |

+

{

|

| 97 |

+

"_target_": "HoVerNetNuclearTypePostProcessingd"

|

| 98 |

+

},

|

| 99 |

+

{

|

| 100 |

+

"_target_": "FromMetaTensord",

|

| 101 |

+

"keys": [

|

| 102 |

+

"image"

|

| 103 |

+

]

|

| 104 |

+

},

|

| 105 |

+

{

|

| 106 |

+

"_target_": "SaveImaged",

|

| 107 |

+

"keys": "instance_map",

|

| 108 |

+

"meta_keys": "image_meta_dict",

|

| 109 |

+

"output_ext": ".nii.gz",

|

| 110 |

+

"output_dir": "@output_dir",

|

| 111 |

+

"output_postfix": "instance_map",

|

| 112 |

+

"output_dtype": "uint32",

|

| 113 |

+

"separate_folder": false

|

| 114 |

+

},

|

| 115 |

+

{

|

| 116 |

+

"_target_": "SaveImaged",

|

| 117 |

+

"keys": "type_map",

|

| 118 |

+

"meta_keys": "image_meta_dict",

|

| 119 |

+

"output_ext": ".nii.gz",

|

| 120 |

+

"output_dir": "@output_dir",

|

| 121 |

+

"output_postfix": "type_map",

|

| 122 |

+

"output_dtype": "uint8",

|

| 123 |

+

"separate_folder": false

|

| 124 |

+

}

|

| 125 |

+

]

|

| 126 |

+

},

|

| 127 |

+

"handlers": [

|

| 128 |

+

{

|

| 129 |

+

"_target_": "CheckpointLoader",

|

| 130 |

+

"load_path": "$os.path.join(@bundle_root, 'models', 'model.pt')",

|

| 131 |

+

"map_location": "@device",

|

| 132 |

+

"load_dict": {

|

| 133 |

+

"model": "@network"

|

| 134 |

+

}

|

| 135 |

+

}

|

| 136 |

+

],

|

| 137 |

+

"evaluator": {

|

| 138 |

+

"_target_": "SupervisedEvaluator",

|

| 139 |

+

"device": "@device",

|

| 140 |

+

"val_data_loader": "@dataloader",

|

| 141 |

+

"val_handlers": "@handlers",

|

| 142 |

+

"network": "@network",

|

| 143 |

+

"postprocessing": "@postprocessing",

|

| 144 |

+

"inferer": "@inferer",

|

| 145 |

+

"amp": true

|

| 146 |

+

},

|

| 147 |

+

"evaluating": [

|

| 148 |

+

"$setattr(torch.backends.cudnn, 'benchmark', True)",

|

| 149 |

+

"$@evaluator.run()"

|

| 150 |

+

]

|

| 151 |

+

}

|
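The `overlap` and `extra_input_padding` expressions in the `inferer` block of `configs/inference.json` reduce to simple arithmetic. The sketch below evaluates them in plain Python, assuming `patch_size = 256` and `out_size = 164`. These are the input and output spatial shapes listed in `configs/metadata.json`; the real values are defined earlier in `inference.json` and may differ. The helper name `sliding_window_params` is hypothetical and is not part of the bundle.

```python
def sliding_window_params(patch_size: int, out_size: int):
    """Mirror the two `$` expressions from the inferer config."""
    # Overlap fraction chosen so that patch_size * (1 - overlap) == out_size.
    overlap = 1.0 - float(out_size) / float(patch_size)
    # Half of the size difference between input and output patch,
    # repeated four times to form a 4-tuple, as in the config expression.
    extra_input_padding = ((patch_size - out_size) // 2,) * 4
    return overlap, extra_input_padding


# Assumed values (taken from metadata.json, not from this config).
overlap, padding = sliding_window_params(patch_size=256, out_size=164)
print(overlap)   # 0.359375
print(padding)   # (46, 46, 46, 46)
```

With these assumed sizes, each window contributes a 164-pixel output tile and the input is padded by 46 pixels per entry of the tuple.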

configs/logging.conf

ADDED

|

@@ -0,0 +1,21 @@

|

| 1 |

+

[loggers]

|

| 2 |

+

keys=root

|

| 3 |

+

|

| 4 |

+

[handlers]

|

| 5 |

+

keys=consoleHandler

|

| 6 |

+

|

| 7 |

+

[formatters]

|

| 8 |

+

keys=fullFormatter

|

| 9 |

+

|

| 10 |

+

[logger_root]

|

| 11 |

+

level=INFO

|

| 12 |

+

handlers=consoleHandler

|

| 13 |

+

|

| 14 |

+

[handler_consoleHandler]

|

| 15 |

+

class=StreamHandler

|

| 16 |

+

level=INFO

|

| 17 |

+

formatter=fullFormatter

|

| 18 |

+

args=(sys.stdout,)

|

| 19 |

+

|

| 20 |

+

[formatter_fullFormatter]

|

| 21 |

+

format=%(asctime)s - %(name)s - %(levelname)s - %(message)s

|
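As a quick check of `configs/logging.conf`, the sketch below loads the same INI content with the standard library's `logging.config.fileConfig`. The config text is embedded as a string so the example is self-contained; in the bundle it would be read from the file instead. The function name `configure_logging` is hypothetical.

```python
import io
import logging
import logging.config

# Contents of configs/logging.conf, copied from the diff.
LOGGING_CONF = """
[loggers]
keys=root

[handlers]
keys=consoleHandler

[formatters]
keys=fullFormatter

[logger_root]
level=INFO
handlers=consoleHandler

[handler_consoleHandler]
class=StreamHandler
level=INFO
formatter=fullFormatter
args=(sys.stdout,)

[formatter_fullFormatter]
format=%(asctime)s - %(name)s - %(levelname)s - %(message)s
"""


def configure_logging(conf_text: str = LOGGING_CONF) -> logging.Logger:
    """Apply the INI-style logging config and return the root logger."""
    # fileConfig accepts a file name or a file-like object.
    logging.config.fileConfig(io.StringIO(conf_text), disable_existing_loggers=False)
    return logging.getLogger()


root = configure_logging()
root.info("logging configured")  # written to stdout in the fullFormatter layout
```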

configs/metadata.json

ADDED

|

@@ -0,0 +1,115 @@

|

| 1 |

+

{

|

| 2 |

+

"schema": "https://github.com/Project-MONAI/MONAI-extra-test-data/releases/download/0.8.1/meta_schema_hovernet_20221124.json",

|

| 3 |

+

"version": "0.1.0",

|

| 4 |

+

"changelog": {

|

| 5 |

+

"0.1.0": "complete the model package"

|

| 6 |

+

},

|

| 7 |

+

"monai_version": "1.1.0rc2",

|

| 8 |

+

"pytorch_version": "1.13.0",

|

| 9 |

+

"numpy_version": "1.22.2",

|

| 10 |

+

"optional_packages_version": {

|

| 11 |

+

"scikit-image": "0.19.3",

|

| 12 |

+

"scipy": "1.8.1",

|

| 13 |

+

"tqdm": "4.64.1",

|

| 14 |

+

"pillow": "9.0.1"

|

| 15 |

+

},

|

| 16 |

+

"task": "Nuclear segmentation and classification",

|

| 17 |

+

"description": "A simultaneous segmentation and classification of nuclei within multitissue histology images based on CoNSeP data",

|

| 18 |

+

"authors": "MONAI team",

|

| 19 |

+

"copyright": "Copyright (c) MONAI Consortium",

|

| 20 |

+

"data_source": "https://warwick.ac.uk/fac/cross_fac/tia/data/hovernet/",

|

| 21 |

+

"data_type": "numpy",

|

| 22 |

+

"image_classes": "RGB image with intensity between 0 and 255",

|

| 23 |

+

"label_classes": "a dictionary contains binary nuclear segmentation, hover map and pixel-level classification",

|

| 24 |

+

"pred_classes": "a dictionary contains scalar probability for binary nuclear segmentation, hover map and pixel-level classification",

|

| 25 |

+

"eval_metrics": {

|

| 26 |

+

"Binary Dice": 0.8293,

|

| 27 |

+

"PQ": 0.4936,

|

| 28 |

+

"F1d": 0.748

|

| 29 |

+

},

|

| 30 |

+

"intended_use": "This is an example, not to be used for diagnostic purposes",

|

| 31 |

+

"references": [

|

| 32 |

+

"Simon Graham. 'HoVer-Net: Simultaneous Segmentation and Classification of Nuclei in Multi-Tissue Histology Images.' Medical Image Analysis, 2019. https://arxiv.org/abs/1812.06499"

|

| 33 |

+

],

|

| 34 |

+

"network_data_format": {

|

| 35 |

+

"inputs": {

|

| 36 |

+

"image": {

|

| 37 |

+

"type": "image",

|

| 38 |

+

"format": "magnitude",

|

| 39 |

+

"num_channels": 3,

|

| 40 |

+

"spatial_shape": [

|

| 41 |

+

"256",

|

| 42 |

+

"256"

|

| 43 |

+

],

|

| 44 |

+

"dtype": "float32",

|

| 45 |

+

"value_range": [

|

| 46 |

+

0,

|

| 47 |

+

255

|

| 48 |

+

],

|

| 49 |

+

"is_patch_data": true,

|

| 50 |

+

"channel_def": {

|

| 51 |

+

"0": "image"

|

| 52 |

+

}

|

| 53 |

+

}

|

| 54 |

+

},

|

| 55 |

+

"outputs": {

|

| 56 |

+

"nucleus_prediction": {

|

| 57 |

+

"type": "probability",

|

| 58 |

+

"format": "segmentation",

|

| 59 |

+

"num_channels": 3,

|

| 60 |

+

"spatial_shape": [

|

| 61 |

+

"164",

|

| 62 |

+

"164"

|

| 63 |

+

],

|

| 64 |

+

"dtype": "float32",

|

| 65 |

+

"value_range": [

|

| 66 |

+

0,

|

| 67 |

+

1

|

| 68 |

+

],

|

| 69 |

+

"is_patch_data": true,

|

| 70 |

+

"channel_def": {

|

| 71 |

+

"0": "background",

|

| 72 |

+

"1": "nuclei"

|

| 73 |

+

}

|

| 74 |

+

},

|

| 75 |

+

"horizontal_vertical": {

|

| 76 |

+

"type": "probability",

|

| 77 |

+

"format": "regression",

|

| 78 |

+

"num_channels": 2,

|

| 79 |

+

"spatial_shape": [

|

| 80 |

+

"164",

|

| 81 |

+

"164"

|

| 82 |

+

],

|

| 83 |

+

"dtype": "float32",

|

| 84 |

+

"value_range": [

|

| 85 |

+

0,

|

| 86 |

+

1

|

| 87 |

+

],

|

| 88 |

+

"is_patch_data": true,

|

| 89 |

+

"channel_def": {

|

| 90 |

+

"0": "horizontal distances map",

|

| 91 |

+

"1": "vertical distances map"

|

| 92 |

+

}

|

| 93 |

+

},

|

| 94 |

+

"type_prediction": {

|

| 95 |

+

"type": "probability",

|

| 96 |

+

"format": "classification",

|

| 97 |

+

"num_channels": 2,

|

| 98 |

+

"spatial_shape": [

|

| 99 |

+

"164",

|

| 100 |

+

"164"

|

| 101 |

+

],

|

| 102 |

+

"dtype": "float32",

|

| 103 |

+

"value_range": [

|

| 104 |

+

0,

|

| 105 |

+

1

|

| 106 |

+

],

|

| 107 |

+

"is_patch_data": true,

|

| 108 |

+

"channel_def": {

|

| 109 |

+

"0": "background",

|

| 110 |

+

"1": "type of nucleus for each pixel"

|

| 111 |

+

}

|

| 112 |

+

}

|

| 113 |

+

}

|

| 114 |

+

}

|

| 115 |

+

}

|

configs/multi_gpu_train.json

ADDED

|

@@ -0,0 +1,36 @@

|

| 1 |

+

{

|

| 2 |

+

"device": "$torch.device(f'cuda:{dist.get_rank()}')",

|

| 3 |

+

"network": {

|

| 4 |

+

"_target_": "torch.nn.parallel.DistributedDataParallel",

|

| 5 |

+

"module": "$@network_def.to(@device)",

|

| 6 |

+

"device_ids": [

|

| 7 |

+

"@device"

|

| 8 |

+

]

|

| 9 |

+

},

|

| 10 |

+

"train#sampler": {

|

| 11 |

+

"_target_": "DistributedSampler",

|

| 12 |

+

"dataset": "@train#dataset",

|

| 13 |

+

"even_divisible": true,

|

| 14 |

+

"shuffle": true

|

| 15 |

+

},

|

| 16 |

+

"train#dataloader#sampler": "@train#sampler",

|

| 17 |

+

"train#dataloader#shuffle": false,

|

| 18 |

+

"train#trainer#train_handlers": "$@train#train_handlers[: -2 if dist.get_rank() > 0 else None]",

|

| 19 |

+

"validate#sampler": {

|

| 20 |

+

"_target_": "DistributedSampler",

|

| 21 |

+

"dataset": "@validate#dataset",

|

| 22 |

+

"even_divisible": false,

|

| 23 |

+

"shuffle": false

|

| 24 |

+

},

|

| 25 |

+

"validate#dataloader#sampler": "@validate#sampler",

|

| 26 |

+

"validate#evaluator#val_handlers": "$None if dist.get_rank() > 0 else @validate#handlers",

|

| 27 |

+

"training": [

|

| 28 |

+

"$import torch.distributed as dist",

|

| 29 |

+

"$dist.init_process_group(backend='nccl')",

|

| 30 |

+

"$torch.cuda.set_device(@device)",

|

| 31 |

+

"$monai.utils.set_determinism(seed=321)",

|

| 32 |

+

"$setattr(torch.backends.cudnn, 'benchmark', True)",

|

| 33 |

+

"$@train#trainer.run()",

|

| 34 |

+

"$dist.destroy_process_group()"

|

| 35 |

+

]

|

| 36 |

+

}

|
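The `train#trainer#train_handlers` override in `configs/multi_gpu_train.json` uses a conditional slice that is easy to misread. The sketch below reproduces it in plain Python without `torch.distributed`. The handler names are hypothetical placeholders; the real list is defined in `configs/train.json`, and the assumption that its last two entries should only run on rank 0 is inferred from the slice itself.

```python
from typing import List, Optional


def handlers_for_rank(train_handlers: List[str], rank: int) -> List[str]:
    """Same slice as `@train#train_handlers[: -2 if rank > 0 else None]`."""
    # The conditional binds to the slice bound, not to the whole expression:
    # rank 0 slices to None (keeps everything), other ranks drop the last two.
    stop: Optional[int] = -2 if rank > 0 else None
    return train_handlers[:stop]


# Hypothetical handler names, for illustration only.
handlers = ["lr_scheduler", "validation", "stats_logger", "tensorboard_logger"]
print(handlers_for_rank(handlers, rank=0))  # all four handlers
print(handlers_for_rank(handlers, rank=1))  # ['lr_scheduler', 'validation']
```

The same pattern appears in `validate#evaluator#val_handlers`, where non-zero ranks receive `None` instead of a shortened list.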

configs/train.json

ADDED

|

@@ -0,0 +1,525 @@

|