complete the model package

- .gitattributes +1 -0

- README.md +171 -0

- configs/inference.json +136 -0

- configs/logging.conf +21 -0

- configs/metadata.json +210 -0

- docs/3DSlicer_use.png +0 -0

- docs/README.md +164 -0

- docs/demo.png +3 -0

- docs/license.txt +4 -0

- docs/unest.png +0 -0

- docs/wholebrain.png +0 -0

- models/model.pt +3 -0

- scripts/__init__.py +10 -0

- scripts/networks/__init__.py +10 -0

- scripts/networks/nest/__init__.py +16 -0

- scripts/networks/nest/utils.py +485 -0

- scripts/networks/nest_transformer_3D.py +489 -0

- scripts/networks/patchEmbed3D.py +190 -0

- scripts/networks/unest_base_patch_4.py +249 -0

- scripts/networks/unest_block.py +245 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

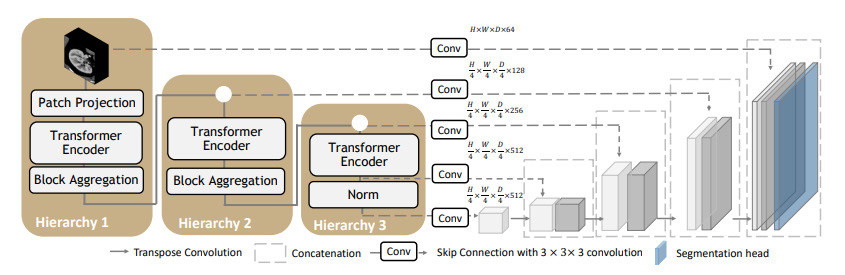

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

docs/demo.png filter=lfs diff=lfs merge=lfs -text

|

README.md

ADDED

|

@@ -0,0 +1,171 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

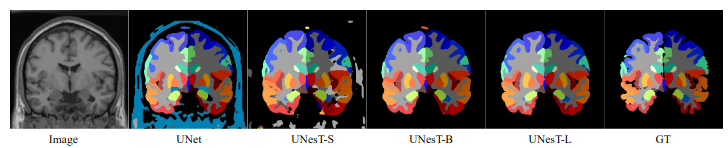

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

tags:

|

| 3 |

+

- monai

|

| 4 |

+

- medical

|

| 5 |

+

library_name: monai

|

| 6 |

+

license: unknown

|

| 7 |

+

---

|

| 8 |

+

# Description

|

| 9 |

+

Detailed whole brain segmentation is an essential quantitative technique in medical image analysis. It provides a non-invasive way of measuring brain regions from clinically acquired structural magnetic resonance imaging (MRI) scans.

|

| 10 |

+

We provide a pre-trained model for whole brain segmentation inference with 133 structures.

|

| 11 |

+

|

| 12 |

+

This bundle is a tutorial and model release for whole brain segmentation using UNEST, a 3D transformer-based segmentation model.

|

| 13 |

+

|

| 14 |

+

Authors:

|

| 15 |

+

Xin Yu ([email protected]) (Primary)

|

| 16 |

+

|

| 17 |

+

Yinchi Zhou ([email protected]) | Yucheng Tang ([email protected])

|

| 18 |

+

|

| 19 |

+

<p align="center">

|

| 20 |

+

-------------------------------------------------------------------------------------

|

| 21 |

+

</p>

|

| 22 |

+

|

| 23 |

+

<br>

|

| 24 |

+

<p align="center">

|

| 25 |

+

Fig.1 - The demonstration of T1w MRI images registered in MNI space and the whole brain segmentation labels with 133 classes</p>

|

| 26 |

+

|

| 27 |

+

|

| 28 |

+

|

| 29 |

+

# Model Overview

|

| 30 |

+

A pre-trained larger UNEST base model [1] for volumetric (3D) whole brain segmentation with T1w MR images.

|

| 31 |

+

To leverage information across embedded sequences, "shifted window" transformers

|

| 32 |

+

are proposed for dense predictions and modeling multi-scale features. However, these

|

| 33 |

+

attempts that aim to complicate the self-attention range often yield high computational

|

| 34 |

+

complexity and data inefficiency. Inspired by the aggregation function in the nested

|

| 35 |

+

ViT, we propose a new design of a 3D U-shape medical segmentation model with

|

| 36 |

+

Nested Transformers (UNesT) hierarchically with the 3D block aggregation function,

|

| 37 |

+

which learn locality behaviors for small structures or small datasets. This design retains

|

| 38 |

+

the original global self-attention mechanism and achieves information communication

|

| 39 |

+

across patches by stacking transformer encoders hierarchically.

|

| 40 |

+

|

| 41 |

+

<br>

|

| 42 |

+

<p align="center">

|

| 43 |

+

Fig.2 - The network architecture of UNEST Base model

|

| 44 |

+

</p>

|

| 45 |

+

|

| 46 |

+

|

| 47 |

+

## Data

|

| 48 |

+

The training data are from Vanderbilt University and Vanderbilt University Medical Center, together with the publicly released OASIS and CANDI datasets.

|

| 49 |

+

Training and testing data are MRI T1-weighted (T1w) 3D volumes coming from 3 different sites. There are a total of 133 classes in the whole brain segmentation task.

|

| 50 |

+

Among 50 T1w MRI scans from the Open Access Series of Imaging Studies (OASIS) (Marcus et al., 2007) dataset, 45 scans are used for training and the other 5 for validation.

|

| 51 |

+

The testing cohort contains the Colin27 T1w scan (Aubert-Broche et al., 2006) and 13 T1w MRI scans from the Child and Adolescent Neuro Development Initiative (CANDI)

|

| 52 |

+

(Kennedy et al., 2012). All data are registered to the MNI space using the MNI305 (Evans et al., 1993) template and preprocessed following the method of Huo et al. (2019). Input images are randomly cropped to the size of 96 × 96 × 96.
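The random cropping step above can be illustrated with a minimal sketch. It is not taken from the bundle's training code: the helper name `random_crop_slices` and the example volume shape are hypothetical, and only the 96 × 96 × 96 patch size comes from the text.

```python
import random


def random_crop_slices(volume_shape, roi_size=(96, 96, 96), rng=None):
    """Return one slice per axis selecting a random roi_size patch (hypothetical helper)."""
    rng = rng or random.Random()
    slices = []
    for dim, roi in zip(volume_shape, roi_size):
        if dim < roi:
            raise ValueError(f"axis of length {dim} is smaller than ROI {roi}")
        # randint is inclusive on both ends, so the patch always fits in the axis
        start = rng.randint(0, dim - roi)
        slices.append(slice(start, start + roi))
    return tuple(slices)


# Example volume shape is an assumption, used for illustration only.
crop = random_crop_slices((172, 220, 156), rng=random.Random(0))
print([(s.start, s.stop) for s in crop])
```

The returned slices could be used to index a 3D array directly, for example `volume[crop]` with a NumPy array.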

|

| 53 |

+

|

| 54 |

+

### Important

|

| 55 |

+

|

| 56 |

+

```diff

|

| 57 |

+

+ All brain MRI images used for training are affinely registered from the target image to the MNI305 template using NiftyReg.

|

| 58 |

+

+ The data should be in the MNI305 space before inference.

|

| 59 |

+

|

| 60 |

+

|

| 61 |

+

Registration to MNI space (sample suggestion): use ANTs or another tool to register the T1 MRI image to MNI305 space.

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

```

|

| 65 |

+

pip install antspyx

|

| 66 |

+

```

|

| 67 |

+

Sample ANTS registration

|

| 68 |

+

```

|

| 69 |

+

|

| 70 |

+

import ants

|

| 71 |

+

import sys

|

| 72 |

+

import os

|

| 73 |

+

|

| 74 |

+

fixed_image = ants.image_read('<fixed_image_path>')

|

| 75 |

+

moving_image = ants.image_read('<moving_image_path>')

|

| 76 |

+

transform = ants.registration(fixed_image,moving_image,'Affine')

|

| 77 |

+

|

| 78 |

+

reg3t = ants.apply_transforms(fixed_image,moving_image,transform['fwdtransforms'][0])

|

| 79 |

+

ants.image_write(reg3t, '<output_image_path>')

|

| 80 |

+

|

| 81 |

+

```

|

| 82 |

+

## Training configuration

|

| 83 |

+

Training and inference were performed with at least one GPU with 24 GB of memory.

|

| 84 |

+

|

| 85 |

+

Actual Model Input: 96 x 96 x 96
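Because the network input is a 96 x 96 x 96 patch, a full volume has to be tiled into overlapping windows at inference time (the bundle's `configs/inference.json` uses a sliding-window inferer with `overlap` 0.7). The following is a simplified sketch of how window start positions along one axis can be computed. It is an illustration under stated assumptions, not MONAI's implementation; `window_starts` is a hypothetical helper and the example axis length is made up.

```python
import math


def window_starts(dim, roi=96, overlap=0.7):
    """Start indices of overlapping windows covering one axis (hypothetical helper)."""
    if dim <= roi:
        return [0]
    # Step between consecutive windows; at least one voxel.
    step = max(int(roi * (1 - overlap)), 1)
    count = math.ceil((dim - roi) / step) + 1
    # Clamp the last window so it ends exactly at the volume border.
    return [min(i * step, dim - roi) for i in range(count)]


starts = window_starts(172)
print(starts)
```

A higher overlap gives a smaller step and therefore more windows per axis, which increases inference time.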

|

| 86 |

+

|

| 87 |

+

## Input and output formats

|

| 88 |

+

Input: 1 channel T1w MRI image in MNI305 Space.

|

| 89 |

+

|

| 90 |

+

|

| 91 |

+

## Commands example

|

| 92 |

+

Download the trained checkpoint model to ./models/model.pt:

|

| 93 |

+

|

| 94 |

+

|

| 95 |

+

Add scripts component: To run the workflow with customized components, PYTHONPATH should be revised to include the path to the customized component:

|

| 96 |

+

|

| 97 |

+

```

|

| 98 |

+

export PYTHONPATH=$PYTHONPATH:'<path to the bundle root dir>/scripts'

|

| 99 |

+

|

| 100 |

+

```

|

| 101 |

+

|

| 102 |

+

|

| 103 |

+

Execute inference:

|

| 104 |

+

|

| 105 |

+

```

|

| 106 |

+

python -m monai.bundle run evaluating --meta_file configs/metadata.json --config_file configs/inference.json --logging_file configs/logging.conf

|

| 107 |

+

```

|

| 108 |

+

|

| 109 |

+

|

| 110 |

+

## More example outputs

|

| 111 |

+

<br>

|

| 112 |

+

<p align="center">

|

| 113 |

+

Fig.3 - The output prediction comparison with variant and ground truth

|

| 114 |

+

</p>

|

| 115 |

+

|

| 116 |

+

|

| 117 |

+

## Complete ROI of the whole brain segmentation

|

| 118 |

+

133 classes are segmented, including background (label 0).

|

| 119 |

+

|

| 120 |

+

| #1 | #2 | #3 | #4 |

|

| 121 |

+

| :------------ | :---------- | :-------- | :-------- |

|

| 122 |

+

| 0: background | 1 : 3rd-Ventricle | 2 : 4th-Ventricle | 3 : Right-Accumbens-Area |

|

| 123 |

+

| 4 : Left-Accumbens-Area | 5 : Right-Amygdala | 6 : Left-Amygdala | 7 : Brain-Stem |

|

| 124 |

+

| 8 : Right-Caudate | 9 : Left-Caudate | 10 : Right-Cerebellum-Exterior | 11 : Left-Cerebellum-Exterior |

|

| 125 |

+

| 12 : Right-Cerebellum-White-Matter | 13 : Left-Cerebellum-White-Matter | 14 : Right-Cerebral-White-Matter | 15 : Left-Cerebral-White-Matter |

|

| 126 |

+

| 16 : Right-Hippocampus | 17 : Left-Hippocampus | 18 : Right-Inf-Lat-Vent | 19 : Left-Inf-Lat-Vent |

|

| 127 |

+

| 20 : Right-Lateral-Ventricle | 21 : Left-Lateral-Ventricle | 22 : Right-Pallidum | 23 : Left-Pallidum |

|

| 128 |

+

| 24 : Right-Putamen | 25 : Left-Putamen | 26 : Right-Thalamus-Proper | 27 : Left-Thalamus-Proper |

|

| 129 |

+

| 28 : Right-Ventral-DC | 29 : Left-Ventral-DC | 30 : Cerebellar-Vermal-Lobules-I-V | 31 : Cerebellar-Vermal-Lobules-VI-VII |

|

| 130 |

+

| 32 : Cerebellar-Vermal-Lobules-VIII-X | 33 : Left-Basal-Forebrain | 34 : Right-Basal-Forebrain | 35 : Right-ACgG--anterior-cingulate-gyrus |

|

| 131 |

+

| 36 : Left-ACgG--anterior-cingulate-gyrus | 37 : Right-AIns--anterior-insula | 38 : Left-AIns--anterior-insula | 39 : Right-AOrG--anterior-orbital-gyrus |

|

| 132 |

+

| 40 : Left-AOrG--anterior-orbital-gyrus | 41 : Right-AnG---angular-gyrus | 42 : Left-AnG---angular-gyrus | 43 : Right-Calc--calcarine-cortex |

|

| 133 |

+

| 44 : Left-Calc--calcarine-cortex | 45 : Right-CO----central-operculum | 46 : Left-CO----central-operculum | 47 : Right-Cun---cuneus |

|

| 134 |

+

| 48 : Left-Cun---cuneus | 49 : Right-Ent---entorhinal-area | 50 : Left-Ent---entorhinal-area | 51 : Right-FO----frontal-operculum |

|

| 135 |

+

| 52 : Left-FO----frontal-operculum | 53 : Right-FRP---frontal-pole | 54 : Left-FRP---frontal-pole | 55 : Right-FuG---fusiform-gyrus |

|

| 136 |

+

| 56 : Left-FuG---fusiform-gyrus | 57 : Right-GRe---gyrus-rectus | 58 : Left-GRe---gyrus-rectus | 59 : Right-IOG---inferior-occipital-gyrus |

|

| 137 |

+

| 60 : Left-IOG---inferior-occipital-gyrus | 61 : Right-ITG---inferior-temporal-gyrus | 62 : Left-ITG---inferior-temporal-gyrus | 63 : Right-LiG---lingual-gyrus |

|

| 138 |

+

| 64 : Left-LiG---lingual-gyrus | 65 : Right-LOrG--lateral-orbital-gyrus | 66 : Left-LOrG--lateral-orbital-gyrus | 67 : Right-MCgG--middle-cingulate-gyrus |

|

| 139 |

+

| 68 : Left-MCgG--middle-cingulate-gyrus | 69 : Right-MFC---medial-frontal-cortex | 70 : Left-MFC---medial-frontal-cortex | 71 : Right-MFG---middle-frontal-gyrus |

|

| 140 |

+

| 72 : Left-MFG---middle-frontal-gyrus | 73 : Right-MOG---middle-occipital-gyrus | 74 : Left-MOG---middle-occipital-gyrus | 75 : Right-MOrG--medial-orbital-gyrus |

|

| 141 |

+

| 76 : Left-MOrG--medial-orbital-gyrus | 77 : Right-MPoG--postcentral-gyrus | 78 : Left-MPoG--postcentral-gyrus | 79 : Right-MPrG--precentral-gyrus |

|

| 142 |

+

| 80 : Left-MPrG--precentral-gyrus | 81 : Right-MSFG--superior-frontal-gyrus | 82 : Left-MSFG--superior-frontal-gyrus | 83 : Right-MTG---middle-temporal-gyrus |

|

| 143 |

+

| 84 : Left-MTG---middle-temporal-gyrus | 85 : Right-OCP---occipital-pole | 86 : Left-OCP---occipital-pole | 87 : Right-OFuG--occipital-fusiform-gyrus |

|

| 144 |

+

| 88 : Left-OFuG--occipital-fusiform-gyrus | 89 : Right-OpIFG-opercular-part-of-the-IFG | 90 : Left-OpIFG-opercular-part-of-the-IFG | 91 : Right-OrIFG-orbital-part-of-the-IFG |

|

| 145 |

+

| 92 : Left-OrIFG-orbital-part-of-the-IFG | 93 : Right-PCgG--posterior-cingulate-gyrus | 94 : Left-PCgG--posterior-cingulate-gyrus | 95 : Right-PCu---precuneus |

|

| 146 |

+

| 96 : Left-PCu---precuneus | 97 : Right-PHG---parahippocampal-gyrus | 98 : Left-PHG---parahippocampal-gyrus | 99 : Right-PIns--posterior-insula |

|

| 147 |

+

| 100 : Left-PIns--posterior-insula | 101 : Right-PO----parietal-operculum | 102 : Left-PO----parietal-operculum | 103 : Right-PoG---postcentral-gyrus |

|

| 148 |

+

| 104 : Left-PoG---postcentral-gyrus | 105 : Right-POrG--posterior-orbital-gyrus | 106 : Left-POrG--posterior-orbital-gyrus | 107 : Right-PP----planum-polare |

|

| 149 |

+

| 108 : Left-PP----planum-polare | 109 : Right-PrG---precentral-gyrus | 110 : Left-PrG---precentral-gyrus | 111 : Right-PT----planum-temporale |

|

| 150 |

+

| 112 : Left-PT----planum-temporale | 113 : Right-SCA---subcallosal-area | 114 : Left-SCA---subcallosal-area | 115 : Right-SFG---superior-frontal-gyrus |

|

| 151 |

+

| 116 : Left-SFG---superior-frontal-gyrus | 117 : Right-SMC---supplementary-motor-cortex | 118 : Left-SMC---supplementary-motor-cortex | 119 : Right-SMG---supramarginal-gyrus |

|

| 152 |

+

| 120 : Left-SMG---supramarginal-gyrus | 121 : Right-SOG---superior-occipital-gyrus | 122 : Left-SOG---superior-occipital-gyrus | 123 : Right-SPL---superior-parietal-lobule |

|

| 153 |

+

| 124 : Left-SPL---superior-parietal-lobule | 125 : Right-STG---superior-temporal-gyrus | 126 : Left-STG---superior-temporal-gyrus | 127 : Right-TMP---temporal-pole |

|

| 154 |

+

| 128 : Left-TMP---temporal-pole | 129 : Right-TrIFG-triangular-part-of-the-IFG | 130 : Left-TrIFG-triangular-part-of-the-IFG | 131 : Right-TTG---transverse-temporal-gyrus |

|

| 155 |

+

| 132 : Left-TTG---transverse-temporal-gyrus |

|

| 156 |

+

|

| 157 |

+

|

| 158 |

+

## Bundle Integration in MONAI Label

|

| 159 |

+

The inference pipeline can be used by the MONAI Label server and 3D Slicer for fast labeling of T1w MRI images in MNI space.

|

| 160 |

+

|

| 161 |

+

<br>

|

| 162 |

+

|

| 163 |

+

# Disclaimer

|

| 164 |

+

This is an example, not to be used for diagnostic purposes.

|

| 165 |

+

|

| 166 |

+

# References

|

| 167 |

+

[1] Yu, Xin, Yinchi Zhou, Yucheng Tang et al. Characterizing Renal Structures with 3D Block Aggregate Transformers. arXiv preprint arXiv:2203.02430 (2022). https://arxiv.org/pdf/2203.02430.pdf

|

| 168 |

+

|

| 169 |

+

[2] Zizhao Zhang et al. Nested Hierarchical Transformer: Towards Accurate, Data-Efficient and Interpretable Visual Understanding. AAAI Conference on Artificial Intelligence (AAAI) 2022

|

| 170 |

+

|

| 171 |

+

[3] Huo, Yuankai, et al. 3D whole brain segmentation using spatially localized atlas network tiles. NeuroImage 194 (2019): 105-119.

|

configs/inference.json

ADDED

|

@@ -0,0 +1,136 @@

| 1 |

+

{

|

| 2 |

+

"imports": [

|

| 3 |

+

"$import glob",

|

| 4 |

+

"$import os"

|

| 5 |

+

],

|

| 6 |

+

"bundle_root": ".",

|

| 7 |

+

"output_dir": "$@bundle_root + '/eval'",

|

| 8 |

+

"dataset_dir": "$@bundle_root + '/dataset/images'",

|

| 9 |

+

"datalist": "$list(sorted(glob.glob(@dataset_dir + '/*.nii.gz')))",

|

| 10 |

+

"device": "$torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')",

|

| 11 |

+

"network_def": {

|

| 12 |

+

"_target_": "scripts.networks.unest_base_patch_4.UNesT",

|

| 13 |

+

"in_channels": 1,

|

| 14 |

+

"out_channels": 133,

|

| 15 |

+

"patch_size": 4,

|

| 16 |

+

"depths": [

|

| 17 |

+

2,

|

| 18 |

+

2,

|

| 19 |

+

8

|

| 20 |

+

],

|

| 21 |

+

"embed_dim": [

|

| 22 |

+

128,

|

| 23 |

+

256,

|

| 24 |

+

512

|

| 25 |

+

],

|

| 26 |

+

"num_heads": [

|

| 27 |

+

4,

|

| 28 |

+

8,

|

| 29 |

+

16

|

| 30 |

+

]

|

| 31 |

+

},

|

| 32 |

+

"network": "$@network_def.to(@device)",

|

| 33 |

+

"preprocessing": {

|

| 34 |

+

"_target_": "Compose",

|

| 35 |

+

"transforms": [

|

| 36 |

+

{

|

| 37 |

+

"_target_": "LoadImaged",

|

| 38 |

+

"keys": "image"

|

| 39 |

+

},

|

| 40 |

+

{

|

| 41 |

+

"_target_": "EnsureChannelFirstd",

|

| 42 |

+

"keys": "image"

|

| 43 |

+

},

|

| 44 |

+

{

|

| 45 |

+

"_target_": "NormalizeIntensityd",

|

| 46 |

+

"keys": "image",

|

| 47 |

+

"nonzero": "True",

|

| 48 |

+

"channel_wise": "True"

|

| 49 |

+

},

|

| 50 |

+

{

|

| 51 |

+

"_target_": "EnsureTyped",

|

| 52 |

+

"keys": "image"

|

| 53 |

+

}

|

| 54 |

+

]

|

| 55 |

+

},

|

| 56 |

+

"dataset": {

|

| 57 |

+

"_target_": "Dataset",

|

| 58 |

+

"data": "$[{'image': i} for i in @datalist]",

|

| 59 |

+

"transform": "@preprocessing"

|

| 60 |

+

},

|

| 61 |

+

"dataloader": {

|

| 62 |

+

"_target_": "DataLoader",

|

| 63 |

+

"dataset": "@dataset",

|

| 64 |

+

"batch_size": 1,

|

| 65 |

+

"shuffle": false,

|

| 66 |

+

"num_workers": 4

|

| 67 |

+

},

|

| 68 |

+

"inferer": {

|

| 69 |

+

"_target_": "SlidingWindowInferer",

|

| 70 |

+

"roi_size": [

|

| 71 |

+

96,

|

| 72 |

+

96,

|

| 73 |

+

96

|

| 74 |

+

],

|

| 75 |

+

"sw_batch_size": 4,

|

| 76 |

+

"overlap": 0.7

|

| 77 |

+

},

|

| 78 |

+

"postprocessing": {

|

| 79 |

+

"_target_": "Compose",

|

| 80 |

+

"transforms": [

|

| 81 |

+

{

|

| 82 |

+

"_target_": "Activationsd",

|

| 83 |

+

"keys": "pred",

|

| 84 |

+

"softmax": true

|

| 85 |

+

},

|

| 86 |

+

{

|

| 87 |

+

"_target_": "Invertd",

|

| 88 |

+

"keys": "pred",

|

| 89 |

+

"transform": "@preprocessing",

|

| 90 |

+

"orig_keys": "image",

|

| 91 |

+

"meta_key_postfix": "meta_dict",

|

| 92 |

+

"nearest_interp": false,

|

| 93 |

+

"to_tensor": true

|

| 94 |

+

},

|

| 95 |

+

{

|

| 96 |

+

"_target_": "AsDiscreted",

|

| 97 |

+

"keys": "pred",

|

| 98 |

+

"argmax": true

|

| 99 |

+

},

|

| 100 |

+

{

|

| 101 |

+

"_target_": "SaveImaged",

|

| 102 |

+

"keys": "pred",

|

| 103 |

+

"meta_keys": "pred_meta_dict",

|

| 104 |

+

"output_dir": "@output_dir"

|

| 105 |

+

}

|

| 106 |

+

]

|

| 107 |

+

},

|

| 108 |

+

"handlers": [

|

| 109 |

+

{

|

| 110 |

+

"_target_": "CheckpointLoader",

|

| 111 |

+

"load_path": "$@bundle_root + '/models/model.pt'",

|

| 112 |

+

"load_dict": {

|

| 113 |

+

"model": "@network"

|

| 114 |

+

},

|

| 115 |

+

"strict": "True"

|

| 116 |

+

},

|

| 117 |

+

{

|

| 118 |

+

"_target_": "StatsHandler",

|

| 119 |

+

"iteration_log": false

|

| 120 |

+

}

|

| 121 |

+

],

|

| 122 |

+

"evaluator": {

|

| 123 |

+

"_target_": "SupervisedEvaluator",

|

| 124 |

+

"device": "@device",

|

| 125 |

+

"val_data_loader": "@dataloader",

|

| 126 |

+

"network": "@network",

|

| 127 |

+

"inferer": "@inferer",

|

| 128 |

+

"postprocessing": "@postprocessing",

|

| 129 |

+

"val_handlers": "@handlers",

|

| 130 |

+

"amp": false

|

| 131 |

+

},

|

| 132 |

+

"evaluating": [

|

| 133 |

+

"$setattr(torch.backends.cudnn, 'benchmark', True)",

|

| 134 |

+

"[email protected]()"

|

| 135 |

+

]

|

| 136 |

+

}
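The `postprocessing` chain in this config applies a softmax over the channel axis (`Activationsd`) and later an argmax (`AsDiscreted`) to turn per-class scores into a single label per voxel. The sketch below shows that idea for one voxel in plain Python. It is an illustration only, not the MONAI transforms themselves; the four logit values are made up, whereas the real output has 133 channels.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of per-class scores."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def argmax(values):
    """Index of the largest value, i.e. the predicted class label."""
    return max(range(len(values)), key=values.__getitem__)


# Hypothetical scores for a single voxel with 4 classes instead of 133.
voxel_logits = [0.1, 2.5, -1.0, 0.7]
probs = softmax(voxel_logits)
label = argmax(probs)
print(label)
```

Softmax does not change which class has the highest score, so the argmax of the probabilities equals the argmax of the raw logits.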

|

configs/logging.conf

ADDED

|

@@ -0,0 +1,21 @@

| 1 |

+

[loggers]

|

| 2 |

+

keys=root

|

| 3 |

+

|

| 4 |

+

[handlers]

|

| 5 |

+

keys=consoleHandler

|

| 6 |

+

|

| 7 |

+

[formatters]

|

| 8 |

+

keys=fullFormatter

|

| 9 |

+

|

| 10 |

+

[logger_root]

|

| 11 |

+

level=INFO

|

| 12 |

+

handlers=consoleHandler

|

| 13 |

+

|

| 14 |

+

[handler_consoleHandler]

|

| 15 |

+

class=StreamHandler

|

| 16 |

+

level=INFO

|

| 17 |

+

formatter=fullFormatter

|

| 18 |

+

args=(sys.stdout,)

|

| 19 |

+

|

| 20 |

+

[formatter_fullFormatter]

|

| 21 |

+

format=%(asctime)s - %(name)s - %(levelname)s - %(message)s
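As a rough illustration of what this logging configuration does, the sketch below loads the same sections with Python's standard `logging.config.fileConfig`. Embedding the config in a string and the logger name `demo` are assumptions made so the example is self-contained; the bundle itself passes the file through `--logging_file`.

```python
import configparser
import logging
import logging.config

# Same content as configs/logging.conf, embedded here for a self-contained example.
LOGGING_CONF = """
[loggers]
keys=root

[handlers]
keys=consoleHandler

[formatters]
keys=fullFormatter

[logger_root]
level=INFO
handlers=consoleHandler

[handler_consoleHandler]
class=StreamHandler
level=INFO
formatter=fullFormatter
args=(sys.stdout,)

[formatter_fullFormatter]
format=%(asctime)s - %(name)s - %(levelname)s - %(message)s
"""

# RawConfigParser avoids interpolating the %(...)s placeholders in the format string.
parser = configparser.RawConfigParser()
parser.read_string(LOGGING_CONF)
logging.config.fileConfig(parser, disable_existing_loggers=False)

logging.getLogger("demo").info("logging configured")
```

With this configuration, INFO-level messages from any logger are written to standard output with a timestamp, logger name and level.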

|

configs/metadata.json

ADDED

|

@@ -0,0 +1,210 @@

| 1 |

+

{

|

| 2 |

+

"schema": "https://github.com/Project-MONAI/MONAI-extra-test-data/releases/download/0.8.1/meta_schema_20220324.json",

|

| 3 |

+

"version": "0.1.0",

|

| 4 |

+

"changelog": {

|

| 5 |

+

"0.1.0": "complete the model package"

|

| 6 |

+

},

|

| 7 |

+

"monai_version": "0.9.1",

|

| 8 |

+

"pytorch_version": "1.10.0",

|

| 9 |

+

"numpy_version": "1.21.2",

|

| 10 |

+

"optional_packages_version": {

|

| 11 |

+

"nibabel": "3.2.1",

|

| 12 |

+

"pytorch-ignite": "0.4.8",

|

| 13 |

+

"einops": "0.4.1",

|

| 14 |

+

"fire": "0.4.0",

|

| 15 |

+

"timm": "0.6.7"

|

| 16 |

+

},

|

| 17 |

+

"task": "Whole Brain Segmentation",

|

| 18 |

+

"description": "A 3D transformer-based model for whole brain segmentation from T1W MRI image",

|

| 19 |

+

"authors": "Vanderbilt University + MONAI team",

|

| 20 |

+

"copyright": "Copyright (c) MONAI Consortium",

|

| 21 |

+

"data_source": "",

|

| 22 |

+

"data_type": "nibabel",

|

| 23 |

+

"image_classes": "single channel data, intensity scaled to [0, 1]",

|

| 24 |

+

"label_classes": "133 Classes",

|

| 25 |

+

"pred_classes": "133 Classes",

|

| 26 |

+

"eval_metrics": {

|

| 27 |

+

"mean_dice": 0.71

|

| 28 |

+

},

|

| 29 |

+

"intended_use": "This is an example, not to be used for diagnostic purposes",

|

| 30 |

+

"references": [

|

| 31 |

+

"Xin, et al. Characterizing Renal Structures with 3D Block Aggregate Transformers. arXiv preprint arXiv:2203.02430 (2022). https://arxiv.org/pdf/2203.02430.pdf"

|

| 32 |

+

],

|

| 33 |

+

"network_data_format": {

|

| 34 |

+

"inputs": {

|

| 35 |

+

"image": {

|

| 36 |

+

"type": "image",

|

| 37 |

+

"format": "hounsfield",

|

| 38 |

+

"modality": "MRI",

|

| 39 |

+

"num_channels": 1,

|

| 40 |

+

"spatial_shape": [

|

| 41 |

+

96,

|

| 42 |

+

96,

|

| 43 |

+

96

|

| 44 |

+

],

|

| 45 |

+

"dtype": "float32",

|

| 46 |

+

"value_range": [

|

| 47 |

+

0,

|

| 48 |

+

1

|

| 49 |

+

],

|

| 50 |

+

"is_patch_data": true,

|

| 51 |

+

"channel_def": {

|

| 52 |

+

"0": "image"

|

| 53 |

+

}

|

| 54 |

+

}

|

| 55 |

+

},

|

| 56 |

+

"outputs": {

|

| 57 |

+

"pred": {

|

| 58 |

+

"type": "image",

|

| 59 |

+

"format": "segmentation",

|

| 60 |

+

"num_channels": 133,

|

| 61 |

+

"spatial_shape": [

|

| 62 |

+

96,

|

| 63 |

+

96,

|

| 64 |

+

96

|

| 65 |

+

],

|

| 66 |

+

"dtype": "float32",

|

| 67 |

+

"value_range": [

|

| 68 |

+

0,

|

| 69 |

+

1

|

| 70 |

+

],

|

| 71 |

+

"is_patch_data": true,

|

| 72 |

+

"channel_def": {

|

| 73 |

+

"0": "background",

|

| 74 |

+

"1": "3rd-Ventricle",

|

| 75 |

+

"2": "4th-Ventricle",

|

| 76 |

+

"3": "Right-Accumbens-Area",

|

| 77 |

+

"4": "Left-Accumbens-Area",

|

| 78 |

+

"5": "Right-Amygdala",

|

| 79 |

+

"6": "Left-Amygdala",

|

| 80 |

+

"7": "Brain-Stem",

|

| 81 |

+

"8": "Right-Caudate",

|

| 82 |

+

"9": "Left-Caudate",

|

| 83 |

+

"10": "Right-Cerebellum-Exterior",

|

| 84 |

+

"11": "Left-Cerebellum-Exterior",

|

| 85 |

+

"12": "Right-Cerebellum-White-Matter",

|

| 86 |

+

"13": "Left-Cerebellum-White-Matter",

|

| 87 |

+

"14": "Right-Cerebral-White-Matter",

|

| 88 |

+

"15": "Left-Cerebral-White-Matter",

|

| 89 |

+

"16": "Right-Hippocampus",

|

| 90 |

+

"17": "Left-Hippocampus",

|

| 91 |

+

"18": "Right-Inf-Lat-Vent",

|

| 92 |

+

"19": "Left-Inf-Lat-Vent",

|

| 93 |

+

"20": "Right-Lateral-Ventricle",

|

| 94 |

+

"21": "Left-Lateral-Ventricle",

|

| 95 |

+

"22": "Right-Pallidum",

|

| 96 |

+

"23": "Left-Pallidum",

|

| 97 |

+

"24": "Right-Putamen",

|

| 98 |

+

"25": "Left-Putamen",

|

| 99 |

+

"26": "Right-Thalamus-Proper",

|

| 100 |

+

"27": "Left-Thalamus-Proper",

|

| 101 |

+

"28": "Right-Ventral-DC",

|

| 102 |

+

"29": "Left-Ventral-DC",

|

| 103 |

+

"30": "Cerebellar-Vermal-Lobules-I-V",

|

| 104 |

+

"31": "Cerebellar-Vermal-Lobules-VI-VII",

|

| 105 |

+

"32": "Cerebellar-Vermal-Lobules-VIII-X",

|

| 106 |

+

"33": "Left-Basal-Forebrain",

|

| 107 |

+

"34": "Right-Basal-Forebrain",

|

| 108 |

+

"35": "Right-ACgG--anterior-cingulate-gyrus",

|

| 109 |

+

"36": "Left-ACgG--anterior-cingulate-gyrus",

|

| 110 |

+

"37": "Right-AIns--anterior-insula",

|

| 111 |

+

"38": "Left-AIns--anterior-insula",

|

| 112 |

+

"39": "Right-AOrG--anterior-orbital-gyrus",

|

| 113 |

+

"40": "Left-AOrG--anterior-orbital-gyrus",

|

| 114 |

+

"41": "Right-AnG---angular-gyrus",

|

| 115 |

+

"42": "Left-AnG---angular-gyrus",

|

| 116 |

+

"43": "Right-Calc--calcarine-cortex",

|

| 117 |

+

"44": "Left-Calc--calcarine-cortex",

|

| 118 |

+

"45": "Right-CO----central-operculum",

|

| 119 |

+

"46": "Left-CO----central-operculum",

|

| 120 |

+

"47": "Right-Cun---cuneus",

|

| 121 |

+

"48": "Left-Cun---cuneus",

|

| 122 |

+

"49": "Right-Ent---entorhinal-area",

|

| 123 |

+

"50": "Left-Ent---entorhinal-area",

|

| 124 |

+

"51": "Right-FO----frontal-operculum",

|

| 125 |

+

"52": "Left-FO----frontal-operculum",

|

| 126 |

+

"53": "Right-FRP---frontal-pole",

|

| 127 |

+

"54": "Left-FRP---frontal-pole",

|

| 128 |

+

"55": "Right-FuG---fusiform-gyrus ",

|

| 129 |

+

"56": "Left-FuG---fusiform-gyrus",

|

| 130 |

+

"57": "Right-GRe---gyrus-rectus",

|

| 131 |

+

"58": "Left-GRe---gyrus-rectus",

|

| 132 |

+

"59": "Right-IOG---inferior-occipital-gyrus",

|

| 133 |

+

"60": "Left-IOG---inferior-occipital-gyrus",

|

| 134 |

+

"61": "Right-ITG---inferior-temporal-gyrus",

|

| 135 |

+

"62": "Left-ITG---inferior-temporal-gyrus",

|

| 136 |

+

"63": "Right-LiG---lingual-gyrus",

|

| 137 |

+

"64": "Left-LiG---lingual-gyrus",

|

| 138 |

+

"65": "Right-LOrG--lateral-orbital-gyrus",

|

| 139 |

+

"66": "Left-LOrG--lateral-orbital-gyrus",

|

| 140 |

+

"67": "Right-MCgG--middle-cingulate-gyrus",

|

| 141 |

+

"68": "Left-MCgG--middle-cingulate-gyrus",

|

| 142 |

+

"69": "Right-MFC---medial-frontal-cortex",

|

| 143 |

+

"70": "Left-MFC---medial-frontal-cortex",

|

| 144 |

+

"71": "Right-MFG---middle-frontal-gyrus",

|

| 145 |

+

"72": "Left-MFG---middle-frontal-gyrus",

|

| 146 |

+

"73": "Right-MOG---middle-occipital-gyrus",

|

| 147 |

+

"74": "Left-MOG---middle-occipital-gyrus",

|

| 148 |

+

"75": "Right-MOrG--medial-orbital-gyrus",

|

| 149 |

+

"76": "Left-MOrG--medial-orbital-gyrus",

|

| 150 |

+

"77": "Right-MPoG--postcentral-gyrus",

|

| 151 |

+

"78": "Left-MPoG--postcentral-gyrus",

|

| 152 |

+

"79": "Right-MPrG--precentral-gyrus",

|

| 153 |

+

"80": "Left-MPrG--precentral-gyrus",

|

| 154 |

+

"81": "Right-MSFG--superior-frontal-gyrus",

|

| 155 |

+

"82": "Left-MSFG--superior-frontal-gyrus",

|

| 156 |

+

"83": "Right-MTG---middle-temporal-gyrus",

|

| 157 |

+

"84": "Left-MTG---middle-temporal-gyrus",

|

| 158 |

+

"85": "Right-OCP---occipital-pole",

|

| 159 |

+

"86": "Left-OCP---occipital-pole",

|

| 160 |

+

"87": "Right-OFuG--occipital-fusiform-gyrus",

|

| 161 |

+

"88": "Left-OFuG--occipital-fusiform-gyrus",

|

| 162 |

+

"89": "Right-OpIFG-opercular-part-of-the-IFG",

|

| 163 |

+

"90": "Left-OpIFG-opercular-part-of-the-IFG",

|

| 164 |

+

"91": "Right-OrIFG-orbital-part-of-the-IFG",

|

| 165 |

+

"92": "Left-OrIFG-orbital-part-of-the-IFG",

|

| 166 |

+

"93": "Right-PCgG--posterior-cingulate-gyrus",

|

| 167 |

+

"94": "Left-PCgG--posterior-cingulate-gyrus",

|

| 168 |

+

"95": "Right-PCu---precuneus",

|

| 169 |

+

"96": "Left-PCu---precuneus",

|

| 170 |

+

"97": "Right-PHG---parahippocampal-gyrus",

|

| 171 |

+

"98": "Left-PHG---parahippocampal-gyrus",

|

| 172 |

+

"99": "Right-PIns--posterior-insula",

|

| 173 |

+

"100": "Left-PIns--posterior-insula",

|

| 174 |

+

"101": "Right-PO----parietal-operculum",

|

| 175 |

+

"102": "Left-PO----parietal-operculum",

|

| 176 |

+

"103": "Right-PoG---postcentral-gyrus",

|

| 177 |

+

"104": "Left-PoG---postcentral-gyrus",

|

| 178 |

+

"105": "Right-POrG--posterior-orbital-gyrus",

|

| 179 |

+

"106": "Left-POrG--posterior-orbital-gyrus",

|

| 180 |

+

"107": "Right-PP----planum-polare",

|

| 181 |

+

"108": "Left-PP----planum-polare",

|

| 182 |

+

"109": "Right-PrG---precentral-gyrus",

|

| 183 |

+

"110": "Left-PrG---precentral-gyrus",

|

| 184 |

+

"111": "Right-PT----planum-temporale",

|

| 185 |

+

"112": "Left-PT----planum-temporale",

|

| 186 |

+

"113": "Right-SCA---subcallosal-area",

|

| 187 |

+

"114": "Left-SCA---subcallosal-area",

|

| 188 |

+

"115": "Right-SFG---superior-frontal-gyrus",

|

| 189 |

+

"116": "Left-SFG---superior-frontal-gyrus",

|

| 190 |

+

"117": "Right-SMC---supplementary-motor-cortex",

|

| 191 |

+

"118": "Left-SMC---supplementary-motor-cortex",

|

| 192 |

+

"119": "Right-SMG---supramarginal-gyrus",

|

| 193 |

+

"120": "Left-SMG---supramarginal-gyrus",

|

| 194 |

+

"121": "Right-SOG---superior-occipital-gyrus",

|

| 195 |

+

"122": "Left-SOG---superior-occipital-gyrus",

|

| 196 |

+

"123": "Right-SPL---superior-parietal-lobule",

|

| 197 |

+

"124": "Left-SPL---superior-parietal-lobule",

|

| 198 |

+

"125": "Right-STG---superior-temporal-gyrus",

|

| 199 |

+

"126": "Left-STG---superior-temporal-gyrus",

|

| 200 |

+

"127": "Right-TMP---temporal-pole",

|

| 201 |

+

"128": "Left-TMP---temporal-pole",

|

| 202 |

+

"129": "Right-TrIFG-triangular-part-of-the-IFG",

|

| 203 |

+

"130": "Left-TrIFG-triangular-part-of-the-IFG",

|

| 204 |

+

"131": "Right-TTG---transverse-temporal-gyrus",

|

| 205 |

+

"132": "Left-TTG---transverse-temporal-gyrus"

|

| 206 |

+

}

|

| 207 |

+

}

|

| 208 |

+

}

|

| 209 |

+

}

|

| 210 |

+

}

|

docs/3DSlicer_use.png

ADDED

|

docs/README.md

ADDED

|

@@ -0,0 +1,164 @@

|

| 1 |

+

# Description

|

| 2 |

+

Detailed whole brain segmentation is an essential quantitative technique in medical image analysis. It provides a non-invasive way of measuring brain regions from clinically acquired structural magnetic resonance imaging (MRI).

|

| 3 |

+

We provide a pre-trained model for whole brain segmentation inference with 133 classes.

|

| 4 |

+

|

| 5 |

+

A tutorial and model release for whole brain segmentation using the 3D transformer-based segmentation model UNEST.

|

| 6 |

+

|

| 7 |

+

Authors:

|

| 8 |

+

Xin Yu ([email protected]) (Primary)

|

| 9 |

+

|

| 10 |

+

Yinchi Zhou ([email protected]) | Yucheng Tang ([email protected])

|

| 11 |

+

|

| 12 |

+

<p align="center">

|

| 13 |

+

-------------------------------------------------------------------------------------

|

| 14 |

+

</p>

|

| 15 |

+

|

| 16 |

+

<br>

|

| 17 |

+

<p align="center">

|

| 18 |

+

Fig.1 - The demonstration of T1w MRI images registered in MNI space and the whole brain segmentation labels with 133 classes</p>

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

# Model Overview

|

| 23 |

+

A pre-trained larger UNEST base model [1] for volumetric (3D) whole brain segmentation with T1w MR images.

|

| 24 |

+

To leverage information across embedded sequences, "shifted window" transformers

|

| 25 |

+

are proposed for dense predictions and modeling multi-scale features. However, these

|

| 26 |

+

attempts that aim to complicate the self-attention range often yield high computational

|

| 27 |

+

complexity and data inefficiency. Inspired by the aggregation function in the nested

|

| 28 |

+

ViT, we propose a new design of a 3D U-shape medical segmentation model with

|

| 29 |

+

Nested Transformers (UNesT) hierarchically with the 3D block aggregation function,

|

| 30 |

+

that learns locality behaviors for small structures or small datasets. This design retains

|

| 31 |

+

the original global self-attention mechanism and achieves information communication

|

| 32 |

+

across patches by stacking transformer encoders hierarchically.

|

| 33 |

+

|

| 34 |

+

<br>

|

| 35 |

+

<p align="center">

|

| 36 |

+

Fig.2 - The network architecture of UNEST Base model

|

| 37 |

+

</p>

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

## Data

|

| 41 |

+

The training data are from Vanderbilt University and Vanderbilt University Medical Center, together with the publicly released OASIS and CANDI datasets.

|

| 42 |

+

Training and testing data are MRI T1-weighted (T1w) 3D volumes coming from 3 different sites. There are a total of 133 classes in the whole brain segmentation task.

|

| 43 |

+

Among 50 T1w MRI scans from the Open Access Series of Imaging Studies (OASIS) (Marcus et al., 2007) dataset, 45 scans are used for training and the other 5 for validation.

|

| 44 |

+

The testing cohort contains the Colin27 T1w scan (Aubert-Broche et al., 2006) and 13 T1w MRI scans from the Child and Adolescent Neuro Development Initiative (CANDI)

|

| 45 |

+

(Kennedy et al., 2012). All data are registered to MNI space using the MNI305 template (Evans et al., 1993) and preprocessed following the method of Huo et al. (2019) [3]. Input images are randomly cropped to 96 × 96 × 96.
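
The random cropping step can be illustrated with a minimal sketch that only picks the crop window. It assumes every axis of the volume is at least 96 voxels long; the function name `random_crop_bounds`, the seed, and the example volume shape are hypothetical and are not taken from the bundle.

```python
import random

def random_crop_bounds(shape, size=(96, 96, 96), rng=None):
    """Pick (start, stop) index pairs for a random crop of `size` inside `shape`."""
    rng = rng or random.Random()
    if len(shape) != len(size):
        raise ValueError("shape and size must have the same number of axes")
    bounds = []
    for dim, s in zip(shape, size):
        if dim < s:
            raise ValueError(f"axis of length {dim} is smaller than crop size {s}")
        start = rng.randint(0, dim - s)  # randint is inclusive on both ends
        bounds.append((start, start + s))
    return bounds

# Illustrative volume shape only; not taken from the datasets.
bounds = random_crop_bounds((128, 160, 128), rng=random.Random(0))
crop_shape = tuple(stop - start for start, stop in bounds)
print(crop_shape)  # (96, 96, 96)
```

Each `(start, stop)` pair can be turned into a slice and applied to the image array, so the crop always stays inside the volume.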

|

| 46 |

+

|

| 47 |

+

### Important

|

| 48 |

+

|

| 49 |

+

```diff

|

| 50 |

+

+ All brain MRI images used for training are affinely registered from the target image to the MNI305 template using NiftyReg.

|

| 51 |

+

+ The data should be in the MNI305 space before inference.
```

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

Registration to MNI space (sample suggestion): use ANTs or another tool to register the T1 MRI image to MNI305 space.

|

| 55 |

+

|

| 56 |

+

|

| 57 |

+

```

|

| 58 |

+

pip install antspyx

|

| 59 |

+

```

|

| 60 |

+

Sample ANTS registration

|

| 61 |

+

```

|

| 62 |

+

|

| 63 |

+

import ants

|

| 64 |

+

import sys

|

| 65 |

+

import os

|

| 66 |

+

|

| 67 |

+

fixed_image = ants.image_read('<fixed_image_path>')

|

| 68 |

+

moving_image = ants.image_read('<moving_image_path>')

|

| 69 |

+

transform = ants.registration(fixed_image,moving_image,'Affine')

|

| 70 |

+

|

| 71 |

+

reg3t = ants.apply_transforms(fixed_image, moving_image, transform['fwdtransforms'])

|

| 72 |

+

ants.image_write(reg3t, '<output_image_path>')

|

| 73 |

+

|

| 74 |

+

```

|

| 75 |

+

## Training configuration

|

| 76 |

+

Training and inference were performed with at least one GPU with 24 GB of memory.

|

| 77 |

+

|

| 78 |

+

Actual Model Input: 96 x 96 x 96

|

| 79 |

+

|

| 80 |

+

## Input and output formats

|

| 81 |

+

Input: 1 channel T1w MRI image in MNI305 Space.

|

| 82 |

+

|

| 83 |

+

|

| 84 |

+

## Commands example

|

| 85 |

+

Download the trained checkpoint model to ./models/model.pt:

|

| 86 |

+

|

| 87 |

+

|

| 88 |

+

Add scripts component: To run the workflow with customized components, PYTHONPATH should be revised to include the path to the customized component:

|

| 89 |

+

|

| 90 |

+

```

|

| 91 |

+

export PYTHONPATH=$PYTHONPATH:'<path to the bundle root dir>/scripts'

|

| 92 |

+

|

| 93 |

+

```
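
When the components are imported from an already running Python session rather than from the shell, the same effect can be approximated by extending `sys.path`. This is a minimal sketch, not part of the bundle: the helper name `add_scripts_to_path` is hypothetical, and `"."` stands in for the bundle root directory.

```python
import sys
from pathlib import Path

def add_scripts_to_path(bundle_root):
    """Prepend `<bundle_root>/scripts` to sys.path if it is not already there."""
    scripts_dir = str(Path(bundle_root).resolve() / "scripts")
    if scripts_dir not in sys.path:
        sys.path.insert(0, scripts_dir)
    return scripts_dir

# "." is a placeholder for the bundle root directory.
scripts_dir = add_scripts_to_path(".")
print(scripts_dir in sys.path)  # True
```

This only changes the current Python process. The `export` command is still needed when the workflow is launched from the shell.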

|

| 94 |

+

|

| 95 |

+

|

| 96 |

+

Execute inference:

|

| 97 |

+

|

| 98 |

+

```

|

| 99 |

+

python -m monai.bundle run evaluating --meta_file configs/metadata.json --config_file configs/inference.json --logging_file configs/logging.conf

|

| 100 |

+

```

|

| 101 |

+

|

| 102 |

+

|

| 103 |

+

## More example outputs

|

| 104 |

+

<br>

|

| 105 |

+

<p align="center">

|

| 106 |

+

Fig.3 - The output prediction comparison with variant and ground truth

|

| 107 |

+

</p>

|

| 108 |

+

|

| 109 |

+

|

| 110 |

+

## Complete ROI of the whole brain segmentation

|

| 111 |

+

The model segments 133 classes: background (label 0) and 132 brain structures (labels 1 to 132).

|

| 112 |

+

|

| 113 |

+

| #1 | #2 | #3 | #4 |

|

| 114 |

+

| :------------ | :---------- | :-------- | :-------- |

|

| 115 |

+

| 0: background | 1 : 3rd-Ventricle | 2 : 4th-Ventricle | 3 : Right-Accumbens-Area |

|

| 116 |

+

| 4 : Left-Accumbens-Area | 5 : Right-Amygdala | 6 : Left-Amygdala | 7 : Brain-Stem |

|

| 117 |

+

| 8 : Right-Caudate | 9 : Left-Caudate | 10 : Right-Cerebellum-Exterior | 11 : Left-Cerebellum-Exterior |

|

| 118 |

+

| 12 : Right-Cerebellum-White-Matter | 13 : Left-Cerebellum-White-Matter | 14 : Right-Cerebral-White-Matter | 15 : Left-Cerebral-White-Matter |

|

| 119 |

+

| 16 : Right-Hippocampus | 17 : Left-Hippocampus | 18 : Right-Inf-Lat-Vent | 19 : Left-Inf-Lat-Vent |

|

| 120 |

+

| 20 : Right-Lateral-Ventricle | 21 : Left-Lateral-Ventricle | 22 : Right-Pallidum | 23 : Left-Pallidum |

|

| 121 |

+

| 24 : Right-Putamen | 25 : Left-Putamen | 26 : Right-Thalamus-Proper | 27 : Left-Thalamus-Proper |

|

| 122 |

+

| 28 : Right-Ventral-DC | 29 : Left-Ventral-DC | 30 : Cerebellar-Vermal-Lobules-I-V | 31 : Cerebellar-Vermal-Lobules-VI-VII |

|

| 123 |

+

| 32 : Cerebellar-Vermal-Lobules-VIII-X | 33 : Left-Basal-Forebrain | 34 : Right-Basal-Forebrain | 35 : Right-ACgG--anterior-cingulate-gyrus |

|

| 124 |

+

| 36 : Left-ACgG--anterior-cingulate-gyrus | 37 : Right-AIns--anterior-insula | 38 : Left-AIns--anterior-insula | 39 : Right-AOrG--anterior-orbital-gyrus |

|

| 125 |

+

| 40 : Left-AOrG--anterior-orbital-gyrus | 41 : Right-AnG---angular-gyrus | 42 : Left-AnG---angular-gyrus | 43 : Right-Calc--calcarine-cortex |

|

| 126 |

+

| 44 : Left-Calc--calcarine-cortex | 45 : Right-CO----central-operculum | 46 : Left-CO----central-operculum | 47 : Right-Cun---cuneus |

|

| 127 |

+

| 48 : Left-Cun---cuneus | 49 : Right-Ent---entorhinal-area | 50 : Left-Ent---entorhinal-area | 51 : Right-FO----frontal-operculum |

|

| 128 |

+

| 52 : Left-FO----frontal-operculum | 53 : Right-FRP---frontal-pole | 54 : Left-FRP---frontal-pole | 55 : Right-FuG---fusiform-gyrus |

|

| 129 |

+

| 56 : Left-FuG---fusiform-gyrus | 57 : Right-GRe---gyrus-rectus | 58 : Left-GRe---gyrus-rectus | 59 : Right-IOG---inferior-occipital-gyrus |

|

| 130 |

+

| 60 : Left-IOG---inferior-occipital-gyrus | 61 : Right-ITG---inferior-temporal-gyrus | 62 : Left-ITG---inferior-temporal-gyrus | 63 : Right-LiG---lingual-gyrus |

|

| 131 |

+

| 64 : Left-LiG---lingual-gyrus | 65 : Right-LOrG--lateral-orbital-gyrus | 66 : Left-LOrG--lateral-orbital-gyrus | 67 : Right-MCgG--middle-cingulate-gyrus |

|

| 132 |

+

| 68 : Left-MCgG--middle-cingulate-gyrus | 69 : Right-MFC---medial-frontal-cortex | 70 : Left-MFC---medial-frontal-cortex | 71 : Right-MFG---middle-frontal-gyrus |

|

| 133 |

+

| 72 : Left-MFG---middle-frontal-gyrus | 73 : Right-MOG---middle-occipital-gyrus | 74 : Left-MOG---middle-occipital-gyrus | 75 : Right-MOrG--medial-orbital-gyrus |

|

| 134 |

+

| 76 : Left-MOrG--medial-orbital-gyrus | 77 : Right-MPoG--postcentral-gyrus | 78 : Left-MPoG--postcentral-gyrus | 79 : Right-MPrG--precentral-gyrus |

|

| 135 |

+

| 80 : Left-MPrG--precentral-gyrus | 81 : Right-MSFG--superior-frontal-gyrus | 82 : Left-MSFG--superior-frontal-gyrus | 83 : Right-MTG---middle-temporal-gyrus |

|

| 136 |

+

| 84 : Left-MTG---middle-temporal-gyrus | 85 : Right-OCP---occipital-pole | 86 : Left-OCP---occipital-pole | 87 : Right-OFuG--occipital-fusiform-gyrus |

|

| 137 |

+

| 88 : Left-OFuG--occipital-fusiform-gyrus | 89 : Right-OpIFG-opercular-part-of-the-IFG | 90 : Left-OpIFG-opercular-part-of-the-IFG | 91 : Right-OrIFG-orbital-part-of-the-IFG |

|

| 138 |

+

| 92 : Left-OrIFG-orbital-part-of-the-IFG | 93 : Right-PCgG--posterior-cingulate-gyrus | 94 : Left-PCgG--posterior-cingulate-gyrus | 95 : Right-PCu---precuneus |

|

| 139 |

+

| 96 : Left-PCu---precuneus | 97 : Right-PHG---parahippocampal-gyrus | 98 : Left-PHG---parahippocampal-gyrus | 99 : Right-PIns--posterior-insula |

|

| 140 |

+

| 100 : Left-PIns--posterior-insula | 101 : Right-PO----parietal-operculum | 102 : Left-PO----parietal-operculum | 103 : Right-PoG---postcentral-gyrus |

|

| 141 |

+

| 104 : Left-PoG---postcentral-gyrus | 105 : Right-POrG--posterior-orbital-gyrus | 106 : Left-POrG--posterior-orbital-gyrus | 107 : Right-PP----planum-polare |

|

| 142 |

+

| 108 : Left-PP----planum-polare | 109 : Right-PrG---precentral-gyrus | 110 : Left-PrG---precentral-gyrus | 111 : Right-PT----planum-temporale |

|

| 143 |

+

| 112 : Left-PT----planum-temporale | 113 : Right-SCA---subcallosal-area | 114 : Left-SCA---subcallosal-area | 115 : Right-SFG---superior-frontal-gyrus |

|

| 144 |

+

| 116 : Left-SFG---superior-frontal-gyrus | 117 : Right-SMC---supplementary-motor-cortex | 118 : Left-SMC---supplementary-motor-cortex | 119 : Right-SMG---supramarginal-gyrus |

|

| 145 |

+

| 120 : Left-SMG---supramarginal-gyrus | 121 : Right-SOG---superior-occipital-gyrus | 122 : Left-SOG---superior-occipital-gyrus | 123 : Right-SPL---superior-parietal-lobule |

|

| 146 |

+

| 124 : Left-SPL---superior-parietal-lobule | 125 : Right-STG---superior-temporal-gyrus | 126 : Left-STG---superior-temporal-gyrus | 127 : Right-TMP---temporal-pole |

|

| 147 |

+

| 128 : Left-TMP---temporal-pole | 129 : Right-TrIFG-triangular-part-of-the-IFG | 130 : Left-TrIFG-triangular-part-of-the-IFG | 131 : Right-TTG---transverse-temporal-gyrus |

|

| 148 |

+

| 132 : Left-TTG---transverse-temporal-gyrus |
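
As an illustration of how the label indices in the table might be used downstream, the sketch below counts voxels per label and converts the counts to volumes. It is a hedged example, not part of the bundle: `label_volumes` is a hypothetical helper, the 1 mm³ voxel volume is an assumption, and `LABELS` is only a four-entry excerpt of the table.

```python
from collections import Counter

# Excerpt of the label table above; the full map has labels 0 to 132.
LABELS = {
    0: "background",
    1: "3rd-Ventricle",
    16: "Right-Hippocampus",
    17: "Left-Hippocampus",
}

def label_volumes(flat_labels, voxel_volume_mm3=1.0):
    """Map each non-background label name to its volume in cubic millimetres."""
    counts = Counter(flat_labels)
    return {
        LABELS.get(idx, f"label-{idx}"): n * voxel_volume_mm3
        for idx, n in sorted(counts.items())
        if idx != 0  # skip background
    }

# Toy flattened prediction; a real output would be a 3D integer label volume.
prediction = [0, 0, 16, 16, 16, 17, 17, 1, 0]
print(label_volumes(prediction))
# {'3rd-Ventricle': 1.0, 'Right-Hippocampus': 3.0, 'Left-Hippocampus': 2.0}
```

For a real segmentation, the voxel volume should come from the image header spacing rather than the assumed default.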

|

| 149 |

+

|

| 150 |

+

|

| 151 |

+

## Bundle Integration in MONAI Label

|

| 152 |

+

The inference pipeline can be used by the MONAI Label server and 3D Slicer for fast labeling of T1w MRI images in MNI space.

|

| 153 |

+

|

| 154 |

+

<br>

|

| 155 |

+

|

| 156 |

+

# Disclaimer

|

| 157 |

+

This is an example, not to be used for diagnostic purposes.

|

| 158 |

+

|

| 159 |

+

# References

|

| 160 |

+

[1] Yu, Xin, Yinchi Zhou, Yucheng Tang et al. Characterizing Renal Structures with 3D Block Aggregate Transformers. arXiv preprint arXiv:2203.02430 (2022). https://arxiv.org/pdf/2203.02430.pdf

|

| 161 |

+

|

| 162 |

+

[2] Zizhao Zhang et al. Nested Hierarchical Transformer: Towards Accurate, Data-Efficient and Interpretable Visual Understanding. AAAI Conference on Artificial Intelligence (AAAI) 2022

|

| 163 |

+

|

| 164 |

+

[3] Huo, Yuankai, et al. 3D whole brain segmentation using spatially localized atlas network tiles. NeuroImage 194 (2019): 105-119.

|

docs/demo.png

ADDED

|

docs/license.txt

ADDED

|

@@ -0,0 +1,4 @@

|

| 1 |

+

Third Party Licenses

|

| 2 |

+

-----------------------------------------------------------------------

|

| 3 |

+

|

| 4 |

+

/*********************************************************************/

|

docs/unest.png

ADDED

|

docs/wholebrain.png

ADDED

|

models/model.pt

ADDED

|

@@ -0,0 +1,3 @@

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:79a52ccd77bc35d05410f39788a1b063af3eb3b809b42241335c18aed27ec422

|

| 3 |

+

size 348901503

|

scripts/__init__.py

ADDED

|

@@ -0,0 +1,10 @@

|

| 1 |

+

# Copyright (c) MONAI Consortium

|

| 2 |

+

# Licensed under the Apache License, Version 2.0 (the "License");

|

| 3 |

+

# you may not use this file except in compliance with the License.

|

| 4 |

+

# You may obtain a copy of the License at

|

| 5 |

+

# http://www.apache.org/licenses/LICENSE-2.0

|

| 6 |

+

# Unless required by applicable law or agreed to in writing, software

|

| 7 |

+

# distributed under the License is distributed on an "AS IS" BASIS,

|

| 8 |

+

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 9 |

+

# See the License for the specific language governing permissions and

|

| 10 |

+

# limitations under the License.

|

scripts/networks/__init__.py

ADDED

|

@@ -0,0 +1,10 @@

|

| 1 |

+

# Copyright (c) MONAI Consortium

|

| 2 |

+

# Licensed under the Apache License, Version 2.0 (the "License");

|

| 3 |

+

# you may not use this file except in compliance with the License.

|

| 4 |

+

# You may obtain a copy of the License at

|

| 5 |

+

# http://www.apache.org/licenses/LICENSE-2.0

|

| 6 |

+

# Unless required by applicable law or agreed to in writing, software

|

| 7 |

+

# distributed under the License is distributed on an "AS IS" BASIS,

|

| 8 |

+

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 9 |

+

# See the License for the specific language governing permissions and

|

| 10 |

+

# limitations under the License.

|

scripts/networks/nest/__init__.py

ADDED

|

@@ -0,0 +1,16 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|