Commit 908f278

Parent(s): fda340c

Update model

- config.yaml +176 -0
- images/backward_time.png +0 -0
- images/forward_time.png +0 -0
- images/gpu_max_cached_mem_GB.png +0 -0
- images/iter_time.png +0 -0
- images/loss.png +0 -0
- images/optim0_lr0.png +0 -0
- images/optim_step_time.png +0 -0
- images/train_time.png +0 -0
- tokens.txt +53 -0
- valid.loss.ave.pth +3 -0
- valid.loss.best.pth +3 -0

config.yaml

ADDED

@@ -0,0 +1,176 @@

```yaml
config: conf/train_lm_rnn_unit1024_nlayers3_dropout0.2.yaml
print_config: false
log_level: INFO
dry_run: false
iterator_type: sequence
output_dir: exp/lm_train_lm_rnn_unit1024_nlayers3_dropout0.2_word
ngpu: 1
seed: 0
num_workers: 1
num_att_plot: 3
dist_backend: nccl
dist_init_method: env://
dist_world_size: 2
dist_rank: 0
local_rank: 0
dist_master_addr: localhost
dist_master_port: 35485
dist_launcher: null
multiprocessing_distributed: true
unused_parameters: false
sharded_ddp: false
cudnn_enabled: true
cudnn_benchmark: false
cudnn_deterministic: true
collect_stats: false
write_collected_feats: false
max_epoch: 40
patience: null
val_scheduler_criterion:
- valid
- loss
early_stopping_criterion:
- valid
- loss
- min
best_model_criterion:
-   - valid
    - loss
    - min
keep_nbest_models: 1
nbest_averaging_interval: 0
grad_clip: 5.0
grad_clip_type: 2.0
grad_noise: false
accum_grad: 1
no_forward_run: false
resume: true
train_dtype: float32
use_amp: true
log_interval: null
use_matplotlib: true
use_tensorboard: true
create_graph_in_tensorboard: false
use_wandb: false
wandb_project: null
wandb_id: null
wandb_entity: null
wandb_name: null
wandb_model_log_interval: -1
detect_anomaly: false
pretrain_path: null
init_param: []
ignore_init_mismatch: false
freeze_param: []
num_iters_per_epoch: null
batch_size: 20
valid_batch_size: null
batch_bins: 10000000
valid_batch_bins: null
train_shape_file:
- exp/lm_stats_word/train/text_shape.word
valid_shape_file:
- exp/lm_stats_word/valid/text_shape.word
batch_type: numel
valid_batch_type: null
fold_length:
- 150
sort_in_batch: descending
sort_batch: descending
multiple_iterator: false
chunk_length: 500
chunk_shift_ratio: 0.5
num_cache_chunks: 1024
train_data_path_and_name_and_type:
-   - dump/raw/lm_train.txt
    - text
    - text
valid_data_path_and_name_and_type:
-   - nunit_50_rep/lm_valid_50_rep.txt
    - text
    - text
allow_variable_data_keys: false
max_cache_size: 0.0
max_cache_fd: 32
valid_max_cache_size: null
optim: adam
optim_conf:
    lr: 0.002
scheduler: reducelronplateau
scheduler_conf:
    mode: min
    factor: 0.5
    patience: 2
token_list:
- <blank>
- <unk>
- '7'
- '35'
- '44'
- '49'
- '20'
- '47'
- '0'
- '3'
- '46'
- '45'
- '31'
- '11'
- '28'
- '4'
- '37'
- '43'
- '26'
- '36'
- '18'
- '32'
- '5'
- '14'
- '33'
- '16'
- '9'
- '8'
- '17'
- '30'
- '24'
- '48'
- '21'
- '34'
- '6'
- '29'
- '38'
- '23'
- '39'
- '10'
- '27'
- '19'
- '40'
- '42'
- '25'
- '41'
- '12'
- '15'
- '1'
- '2'
- '13'
- '22'
- <sos/eos>
init: null
model_conf:
    ignore_id: 0
use_preprocessor: true
token_type: word
bpemodel: null
non_linguistic_symbols: null
cleaner: null
g2p: null
lm: seq_rnn
lm_conf:
    unit: 1024
    nlayers: 3
    dropout_rate: 0.2
required:
- output_dir
- token_list
version: '202207'
distributed: true
```
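The optimizer section pairs Adam (`lr: 0.002`) with `scheduler: reducelronplateau` (`mode: min`, `factor: 0.5`, `patience: 2`), which is stepped once per epoch on the validation loss named in `val_scheduler_criterion`. The sketch below re-implements that plateau rule in plain Python to show when the learning rate halves. It assumes, as in ESPnet2, that `reducelronplateau` maps to PyTorch's `ReduceLROnPlateau` with its default relative threshold (1e-4), cooldown (0) and `min_lr` (0); it is an illustration, not the training code, and the loss values are made up.

```python
import math
from typing import List


def plateau_schedule(
    val_losses: List[float],
    lr: float = 0.002,        # optim_conf.lr
    factor: float = 0.5,      # scheduler_conf.factor
    patience: int = 2,        # scheduler_conf.patience
    threshold: float = 1e-4,  # assumed PyTorch default, relative mode
    min_lr: float = 0.0,      # assumed PyTorch default
    eps: float = 1e-8,        # assumed PyTorch default
) -> List[float]:
    """Return the learning rate in effect after each epoch's scheduler step."""
    best = math.inf
    num_bad_epochs = 0
    lrs: List[float] = []
    for loss in val_losses:
        # mode=min, relative threshold: the loss must beat the best by 0.01 %.
        if loss < best * (1.0 - threshold):
            best = loss
            num_bad_epochs = 0
        else:
            num_bad_epochs += 1
        # More than `patience` epochs without improvement: decay, then reset the counter.
        if num_bad_epochs > patience:
            new_lr = max(lr * factor, min_lr)
            if lr - new_lr > eps:
                lr = new_lr
            num_bad_epochs = 0
        lrs.append(lr)
    return lrs


# Hypothetical validation losses, for illustration only (not from this run).
print(plateau_schedule([5.0, 4.0, 4.1, 4.2, 4.3, 3.9, 4.0, 4.0, 4.0]))
```

With these inputs the rate drops from 0.002 to 0.001 at the fifth epoch (the third epoch in a row without improvement) and to 0.0005 at the ninth. The learning-rate curve actually recorded for this run is presumably the one committed as images/optim0_lr0.png.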

images/backward_time.png

ADDED


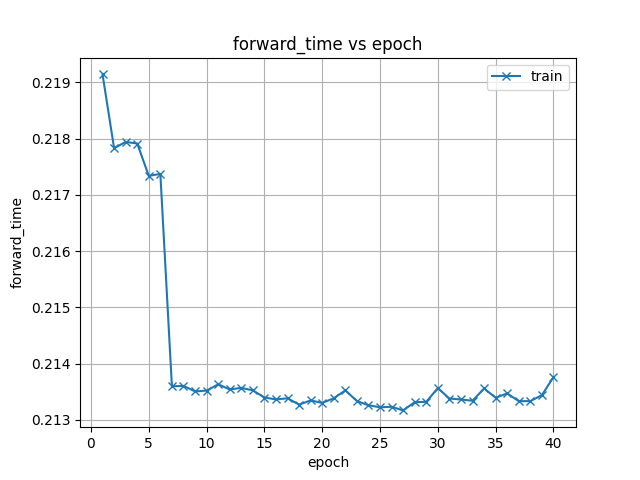

images/forward_time.png

ADDED


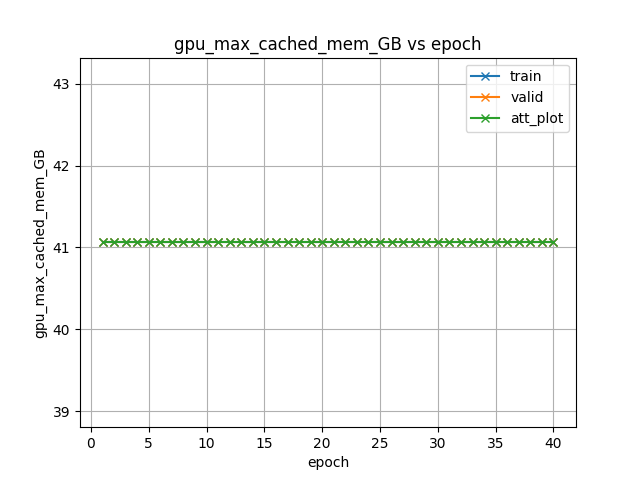

images/gpu_max_cached_mem_GB.png

ADDED


images/iter_time.png

ADDED


images/loss.png

ADDED


images/optim0_lr0.png

ADDED


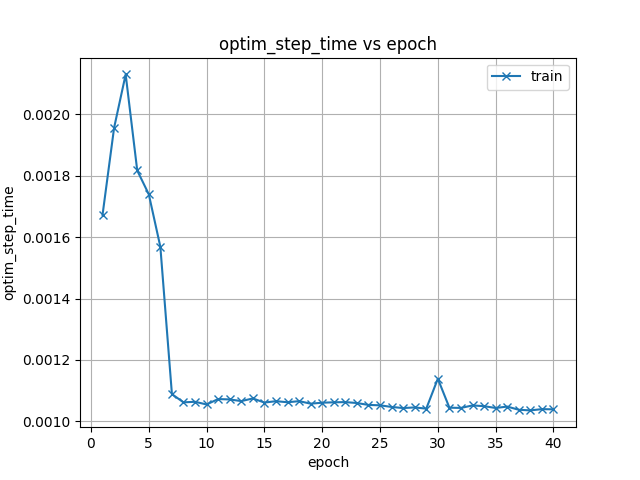

images/optim_step_time.png

ADDED


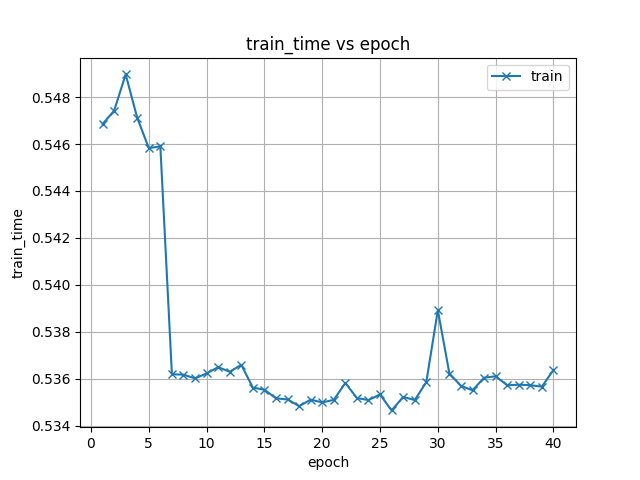

images/train_time.png

ADDED


tokens.txt

ADDED

@@ -0,0 +1,53 @@

```text
<blank>
<unk>
7
35
44
49
20
47
0
3
46
45
31
11
28
4
37
43
26
36
18
32
5
14
33
16
9
8
17
30
24
48
21
34
6
29
38
23
39
10
27
19
40
42
25
41
12
15
1
2
13
22
<sos/eos>
```
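tokens.txt repeats the `token_list` from config.yaml: the 50 discrete units `0` to `49` plus `<blank>`, `<unk>` and `<sos/eos>`, 53 entries in all. With `token_type: word`, each whitespace-separated unit in a text line is one token, and a token's ID is its zero-based line number, so `<blank>` is ID 0, the same value as `model_conf.ignore_id`. The sketch below encodes one line under those assumptions, plus the assumption that the LM uses the last entry, `<sos/eos>`, as both start and end symbol; the function names and the input line are hypothetical.

```python
from typing import Dict, List, Tuple

# Contents of tokens.txt (same order as token_list in config.yaml); ID = line index.
TOKENS: List[str] = """
<blank> <unk>
7 35 44 49 20 47 0 3 46 45 31 11 28 4 37 43 26 36 18 32
5 14 33 16 9 8 17 30 24 48 21 34 6 29 38 23 39 10 27 19
40 42 25 41 12 15 1 2 13 22
<sos/eos>
""".split()

token2id: Dict[str, int] = {tok: i for i, tok in enumerate(TOKENS)}


def encode(line: str, vocab: Dict[str, int]) -> Tuple[List[int], List[int]]:
    """Word-level tokenization: split on whitespace, map to IDs, add <sos/eos>."""
    unk = vocab["<unk>"]
    sos_eos = vocab["<sos/eos>"]  # last ID; assumed to act as both start and end symbol
    ids = [vocab.get(tok, unk) for tok in line.split()]
    # Next-token prediction: the input is the target shifted right by one position.
    return [sos_eos] + ids, ids + [sos_eos]


# "99" is deliberately out of vocabulary to show the <unk> fallback (hypothetical input).
print(encode("7 35 99", token2id))  # prints ([52, 2, 3, 1], [2, 3, 1, 52])
```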

valid.loss.ave.pth

ADDED

@@ -0,0 +1,3 @@

```text
version https://git-lfs.github.com/spec/v1
oid sha256:0ff751697701ba255d73e6bd0446a9553c941c45525dba8b64a6abcb1f1f8d9c
size 101198559
```
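The committed .pth entry is a Git LFS pointer, not the checkpoint itself: it records the pointer-spec version, the SHA-256 of the real object (`oid sha256:...`) and its size in bytes. The sketch below checks a locally downloaded file against such a pointer using only `hashlib`; the helper names are hypothetical and the file path in the usage comment is a placeholder.

```python
import hashlib
from pathlib import Path
from typing import Dict


def parse_lfs_pointer(text: str) -> Dict[str, str]:
    """Parse the 'key value' lines of a Git LFS pointer file."""
    fields: Dict[str, str] = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    return fields


def matches_pointer(path: Path, pointer_text: str, chunk_size: int = 1 << 20) -> bool:
    """Return True if the file's size and SHA-256 digest match the pointer."""
    fields = parse_lfs_pointer(pointer_text)
    algo, _, expected_hex = fields["oid"].partition(":")
    if algo != "sha256":
        raise ValueError(f"unsupported oid algorithm: {algo}")
    # Cheap size check first, then stream the file through SHA-256.
    if path.stat().st_size != int(fields["size"]):
        return False
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest() == expected_hex


POINTER = """version https://git-lfs.github.com/spec/v1
oid sha256:0ff751697701ba255d73e6bd0446a9553c941c45525dba8b64a6abcb1f1f8d9c
size 101198559
"""

# Usage (placeholder path to a locally downloaded checkpoint):
# matches_pointer(Path("valid.loss.ave.pth"), POINTER)
```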

valid.loss.best.pth

ADDED

@@ -0,0 +1,3 @@

```text
version https://git-lfs.github.com/spec/v1
oid sha256:0ff751697701ba255d73e6bd0446a9553c941c45525dba8b64a6abcb1f1f8d9c
size 101198559
```