Commit 07cf2eb

Parent(s): a983caa

content

- .gitattributes +5 -0

- app.py +8 -1

- content/inpainting_after.png +3 -0

- content/inpainting_before.jpg +3 -0

- content/inpainting_sidebar.png +3 -0

- content/regen_example.png +3 -0

- explanation.py +30 -0

- image.png +0 -0

- test.py +0 -50

.gitattributes

CHANGED

@@ -32,3 +32,8 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+track filter=lfs diff=lfs merge=lfs -text
+content/inpainting_after.png filter=lfs diff=lfs merge=lfs -text
+content/inpainting_before.jpg filter=lfs diff=lfs merge=lfs -text
+content/inpainting_sidebar.png filter=lfs diff=lfs merge=lfs -text
+content/regen_example.png filter=lfs diff=lfs merge=lfs -text
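To see which paths the added `.gitattributes` lines route through Git LFS, the sketch below parses the entries shown in this hunk and checks a few paths against them. It is only an approximation: `lfs_patterns` and `is_lfs_tracked` are hypothetical helpers, and `fnmatchcase` does not reproduce every rule of Git's own pattern matching (for example `**`). Note that the `track` entry matches a file literally named `track`.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Entries copied from the .gitattributes hunk in this commit.
GITATTRIBUTES = """\
*.zip filter=lfs diff=lfs merge=lfs -text
*.zst filter=lfs diff=lfs merge=lfs -text
*tfevents* filter=lfs diff=lfs merge=lfs -text
track filter=lfs diff=lfs merge=lfs -text
content/inpainting_after.png filter=lfs diff=lfs merge=lfs -text
content/inpainting_before.jpg filter=lfs diff=lfs merge=lfs -text
content/inpainting_sidebar.png filter=lfs diff=lfs merge=lfs -text
content/regen_example.png filter=lfs diff=lfs merge=lfs -text
"""


def lfs_patterns(text: str) -> list[str]:
    """Return the path patterns whose attributes include filter=lfs."""
    patterns = []
    for line in text.splitlines():
        parts = line.split()
        # Skip blank lines and comments.
        if not parts or parts[0].startswith("#"):
            continue
        if "filter=lfs" in parts[1:]:
            patterns.append(parts[0])
    return patterns


def is_lfs_tracked(path: str, patterns: list[str]) -> bool:
    """Approximate check: patterns without a slash are matched against
    the file name only, patterns with a slash against the whole path."""
    name = PurePosixPath(path).name
    for pattern in patterns:
        target = path if "/" in pattern else name
        if fnmatchcase(target, pattern):
            return True
    return False


if __name__ == "__main__":
    patterns = lfs_patterns(GITATTRIBUTES)
    for candidate in ["content/regen_example.png", "image.png", "app.py"]:
        print(candidate, is_lfs_tracked(candidate, patterns))
```

For an authoritative answer on a real checkout, `git check-attr filter -- <path>` reports the attribute Git actually applies.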

app.py

CHANGED

@@ -12,7 +12,7 @@ from segmentation import segment_image
 from config import HEIGHT, WIDTH, POS_PROMPT, NEG_PROMPT, COLOR_MAPPING, map_colors, map_colors_rgb
 from palette import COLOR_MAPPING_CATEGORY
 from preprocessing import preprocess_seg_mask, get_image, get_mask
-
+from explanation import make_inpainting_explanation, make_regeneration_explanation, make_segmentation_explanation
 # wide layout
 st.set_page_config(layout="wide")
 
@@ -276,6 +276,13 @@ def main():
 
     _reset_state = check_reset_state()
 
+    if generation_mode == "Inpainting":
+        make_inpainting_explanation()
+    elif generation_mode == "Segmentation conditioning":
+        make_segmentation_explanation()
+    elif generation_mode == "Re-generate objects":
+        make_regeneration_explanation()
+
     col1, col2 = st.columns(2)
     with col1:
         make_editing_canvas(canvas_color=color_chooser,
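The `if`/`elif` chain added to `main()` selects one explanation per generation mode. A possible alternative, sketched here under assumptions, is a lookup table keyed by the same mode strings. The three functions below are empty stand-ins for the ones imported from `explanation.py`, and `EXPLANATIONS` and `show_explanation` are hypothetical names that do not appear in the commit.

```python
from typing import Callable, Dict


# Stand-ins for the functions imported from explanation.py in the commit;
# the real ones render Streamlit widgets.
def make_inpainting_explanation() -> None:
    pass


def make_segmentation_explanation() -> None:
    pass


def make_regeneration_explanation() -> None:
    pass


# Mode strings are taken verbatim from the diff.
EXPLANATIONS: Dict[str, Callable[[], None]] = {
    "Inpainting": make_inpainting_explanation,
    "Segmentation conditioning": make_segmentation_explanation,
    "Re-generate objects": make_regeneration_explanation,
}


def show_explanation(generation_mode: str,
                     registry: Dict[str, Callable[[], None]]) -> bool:
    """Call the explanation registered for the mode, if there is one.

    Returns True when an explanation was rendered, False for unknown
    modes, which mirrors the chain in the diff doing nothing when no
    branch matches.
    """
    handler = registry.get(generation_mode)
    if handler is None:
        return False
    handler()
    return True


if __name__ == "__main__":
    print(show_explanation("Inpainting", EXPLANATIONS))
    print(show_explanation("Some other mode", EXPLANATIONS))
```

With this layout, adding a new mode means adding one dictionary entry, at the cost of one more indirection than the explicit chain.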

content/inpainting_after.png

ADDED (Git LFS)

content/inpainting_before.jpg

ADDED (Git LFS)

content/inpainting_sidebar.png

ADDED (Git LFS)

content/regen_example.png

ADDED (Git LFS)

explanation.py

ADDED

|

@@ -0,0 +1,30 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

|

| 3 |

+

def make_inpainting_explanation():

|

| 4 |

+

with st.expander("Explanation inpainting", expanded=False):

|

| 5 |

+

st.write("In the inpainting mode, you can draw regions on the input image that you want to regenerate. "

|

| 6 |

+

"This can be useful to remove unwanted objects from the image or to improve the consistency of the image."

|

| 7 |

+

)

|

| 8 |

+

st.image("content/inpainting_sidebar.png", caption="Image before inpainting, note the ornaments on the wall", width=100)

|

| 9 |

+

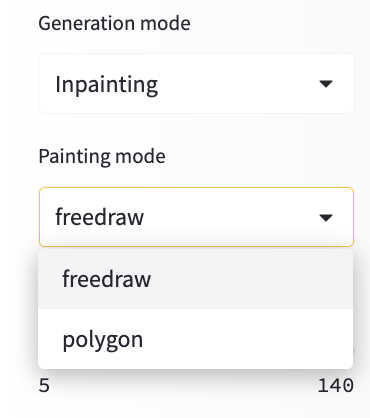

st.write("You can find drawing options in the sidebar. There are two modes: freedraw and polygon. Freedraw allows the user to draw with a pencil of a certain width. "

|

| 10 |

+

"Polygon allows the user to draw a polygon by clicking on the image to add a point. The polygon is closed by right clicking.")

|

| 11 |

+

|

| 12 |

+

st.write("### Example inpainting")

|

| 13 |

+

st.write("In the example below, the ornaments on the wall are removed. The inpainting is done by drawing a mask on the image.")

|

| 14 |

+

st.image("content/inpainting_before.jpg", caption="Image before inpainting, note the ornaments on the wall", width=400)

|

| 15 |

+

st.image("content/inpainting_after.png", caption="Image before inpainting, note the ornaments on the wall", width=400)

|

| 16 |

+

|

| 17 |

+

def make_regeneration_explanation():

|

| 18 |

+

with st.expander("Explanation object regeneration"):

|

| 19 |

+

st.write("In this object regeneration mode, the model calculates which objects occur in the image. "

|

| 20 |

+

"The user can then select which objects can be regenerated by the controlnet model by adding them in the multiselect box. "

|

| 21 |

+

"All the object classes that are not selected will remain the same as in the original image."

|

| 22 |

+

)

|

| 23 |

+

st.write("### Example object regeneration")

|

| 24 |

+

st.write("In the example below, the room consists of various objects such as wall, ceiling, floor, lamp, bed, ... "

|

| 25 |

+

"In the multiselect box, all the objects except for 'lamp', 'bed and 'table' are selected to be regenerated. "

|

| 26 |

+

)

|

| 27 |

+

st.image("content/regen_example.png", caption="Room where all concepts except for 'bed', 'lamp', 'table' are regenerated", width=400)

|

| 28 |

+

|

| 29 |

+

def make_segmentation_explanation():

|

| 30 |

+

pass

|
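In `make_inpainting_explanation`, all three `st.image` calls carry the same caption, including the one for `content/inpainting_after.png`. One way to make such copy-paste slips easier to spot is to keep each image path next to its caption in a small table and render from that. The sketch below assumes this layout: the `Figure` type, `INPAINTING_FIGURES` and `render_figures` are hypothetical, and the captions for the sidebar and "after" images are guesses at the intended wording, not text from the commit. The paths and widths are the ones in the diff.

```python
from typing import Any, List, NamedTuple


class Figure(NamedTuple):
    path: str
    caption: str
    width: int


# Paths and widths come from the diff; the first and last captions are
# assumed wording, chosen only so that each image has its own caption.
INPAINTING_FIGURES: List[Figure] = [
    Figure("content/inpainting_sidebar.png",
           "Drawing options in the sidebar", 100),
    Figure("content/inpainting_before.jpg",
           "Image before inpainting, note the ornaments on the wall", 400),
    Figure("content/inpainting_after.png",
           "Image after inpainting, the ornaments are removed", 400),
]


def render_figures(st: Any, figures: List[Figure]) -> None:
    """Render each figure with st.image.

    `st` is expected to be the streamlit module (or any object with a
    compatible `image` method); passing it in keeps this sketch
    importable without Streamlit installed.
    """
    for figure in figures:
        st.image(figure.path, caption=figure.caption, width=figure.width)
```

Inside the expander this would be called as `render_figures(st, INPAINTING_FIGURES)`; unlike the committed function, it renders the three images consecutively rather than interleaved with the explanatory text.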

image.png

DELETED

Binary file (684 kB)

test.py

DELETED

@@ -1,50 +0,0 @@
-
-
-class FondantInferenceModel:
-    """FondantInferenceModel class that abstracts the model loading and inference.
-    User needs to implement an inference, pre/postprocess step and pass the class to the FondantInferenceComponent.
-    The FondantInferenceComponent will then load the model and prepare it for inference.
-    The examples folder can then show examples for a pytorch / huggingface / tensorflow / ... model.
-    """
-    def __init__(self, device: str = "cpu"):
-        self.device = device
-        # load model
-        self.model = self.load_model()
-        # set model to eval mode
-        self.eval()
-
-    def load_model(self):
-        # load model
-        ...
-
-    def eval(self):
-        # prepare for inference
-        self.model = self.model.eval()
-        self.model = self.model.to(self.device)
-
-    def preprocess(self, input):
-        # preprocess input
-        ...
-
-    def postprocess(self, output):
-        # postprocess output
-        ...
-
-    def __call__(self, *args, **kwargs):
-        processed_inputs = self.preprocess(*args, **kwargs)
-        outputs = self.model(*processed_inputs)
-        processed_outputs = self.postprocess(outputs)
-        return processed_outputs
-
-
-class FondantInferenceComponent(FondantTransformComponent, FondantInferenceModel):
-    # loads the model and prepares it for inference
-
-    def transform(
-        self, args: argparse.Namespace, dataframe: dd.DataFrame
-    ) -> dd.DataFrame:
-        # by using the InferenceComponent, the model is automatically loaded and prepared for inference
-        # you just need to call the infer method
-        # the self.infer method calls the model.__call__ method of the FondantInferenceModel
-        output = self.infer(args.image)
-
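The deleted `test.py` only outlined its preprocess, model, postprocess flow: every hook body was `...`, and `__call__` unpacked the result of `preprocess` with `*`, so `preprocess` would have had to return a tuple. For reference, here is a minimal runnable sketch of the same pattern. `InferenceModel` and `DoublingModel` are hypothetical names, the "model" is a plain Python callable rather than a framework model, and the `eval()`/`to(device)` step from the deleted file is left out because it depends on the framework.

```python
from abc import ABC, abstractmethod
from typing import Any, Callable, Tuple


class InferenceModel(ABC):
    """Template: subclasses supply the model and the pre/post steps."""

    def __init__(self) -> None:
        # Load the model once, at construction time.
        self.model = self.load_model()

    @abstractmethod
    def load_model(self) -> Callable[..., Any]:
        """Return a callable that performs inference."""

    def preprocess(self, *args: Any, **kwargs: Any) -> Tuple[Any, ...]:
        # Default: pass positional inputs through unchanged, as a tuple,
        # because __call__ unpacks the result with *.
        return args

    def postprocess(self, output: Any) -> Any:
        # Default: return the raw model output.
        return output

    def __call__(self, *args: Any, **kwargs: Any) -> Any:
        processed_inputs = self.preprocess(*args, **kwargs)
        outputs = self.model(*processed_inputs)
        return self.postprocess(outputs)


class DoublingModel(InferenceModel):
    """Toy subclass used only to exercise the template."""

    def load_model(self) -> Callable[[float], float]:
        return lambda x: x * 2

    def preprocess(self, value: Any) -> Tuple[float]:
        return (float(value),)

    def postprocess(self, output: float) -> float:
        return round(output, 2)


if __name__ == "__main__":
    print(DoublingModel()("3"))
```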