Upload 36 files

- .gitattributes +1 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/.gitignore +163 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/LICENSE +21 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/data/__init__.py +1 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/data/config.py +42 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/images/test.jpg +0 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/images/test.webp +0 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/layers/__init__.py +1 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/layers/functions/prior_box.py +34 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/models/__init__.py +0 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/models/net.py +137 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/models/retinaface.py +130 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/pytorch_retinaface.py +174 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/utils/__init__.py +0 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/utils/box_utils.py +330 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/utils/nms/__init__.py +0 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/utils/nms/py_cpu_nms.py +38 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/weights/mobilenet0.25_Final.pth +3 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/weights/mobilenetV1X0.25_pretrain.tar +3 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/README.md +41 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/__init__.py +177 -0

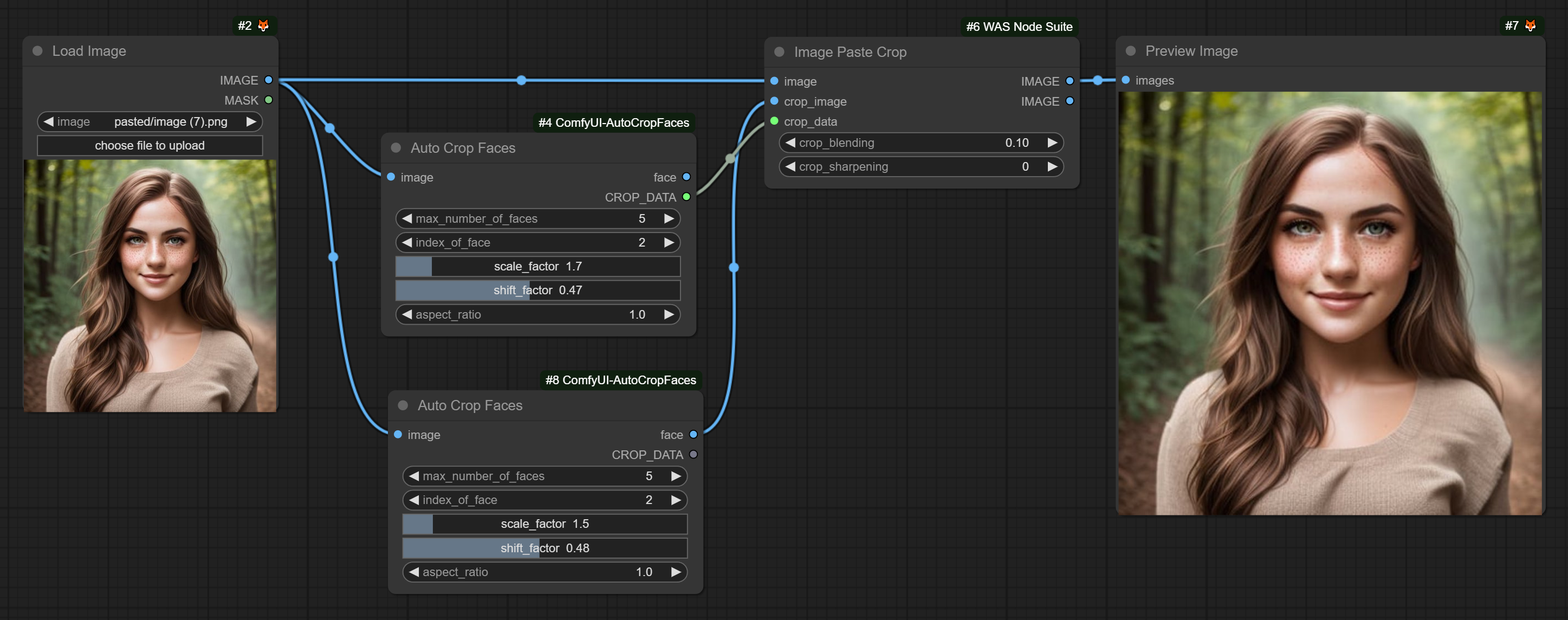

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/images/Crop_Data.png +3 -0

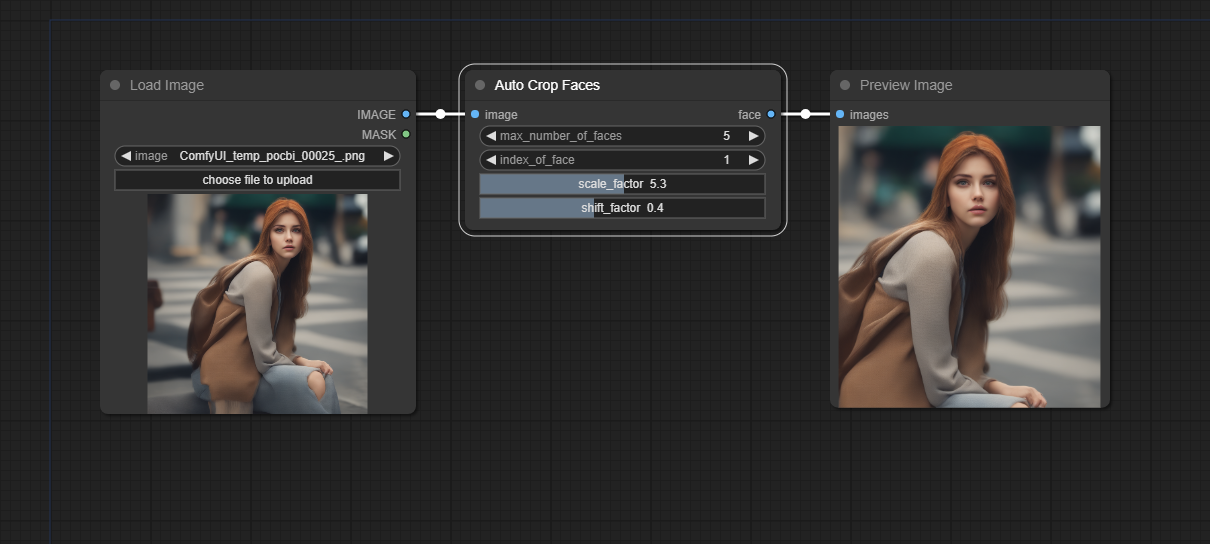

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/images/workflow-AutoCropFaces-Simple.png +0 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/images/workflow-AutoCropFaces-bottom.png +0 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/images/workflow-AutoCropFaces-with-Constrain.png +0 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/test.py +46 -0

- ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/tests/AutoCropFaces-Testing.json +1997 -0

- ComfyUI/custom_nodes/ComfyUI-Switch/README.md +6 -0

- ComfyUI/custom_nodes/ComfyUI-Switch/Switch.py +35 -0

- ComfyUI/custom_nodes/ComfyUI-Switch/__init__.py +3 -0

- ComfyUI/custom_nodes/example_node.py.example +128 -0

- ComfyUI/custom_nodes/image-resize-comfyui/LICENSE +21 -0

- ComfyUI/custom_nodes/image-resize-comfyui/README.md +51 -0

- ComfyUI/custom_nodes/image-resize-comfyui/__init__.py +3 -0

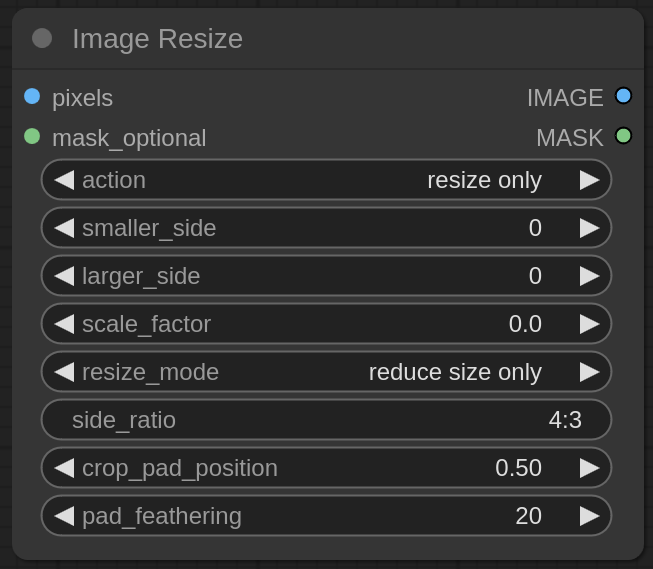

- ComfyUI/custom_nodes/image-resize-comfyui/image_resize.png +0 -0

- ComfyUI/custom_nodes/image-resize-comfyui/image_resize.py +163 -0

- ComfyUI/custom_nodes/websocket_image_save.py +45 -0

.gitattributes

CHANGED

```diff
@@ -40,3 +40,4 @@ ComfyUI/temp/ComfyUI_temp_zprxs_00002_.png filter=lfs diff=lfs merge=lfs -text
 ComfyUI/custom_nodes/merge_cross_images/result3.png filter=lfs diff=lfs merge=lfs -text
 ComfyUI/custom_nodes/comfyui_controlnet_aux/examples/example_mesh_graphormer.png filter=lfs diff=lfs merge=lfs -text
 ComfyUI/custom_nodes/comfyui_controlnet_aux/src/controlnet_aux/mesh_graphormer/hand_landmarker.task filter=lfs diff=lfs merge=lfs -text
+ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/images/Crop_Data.png filter=lfs diff=lfs merge=lfs -text
```

ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/.gitignore

ADDED

@@ -0,0 +1,163 @@

```gitignore
outputs/
**/Resnet50_Final.pth

# Byte-compiled / optimized / DLL files
__pycache__/
*.py[cod]
*$py.class

# C extensions
*.so

# Distribution / packaging
.Python
build/
develop-eggs/
dist/
downloads/
eggs/
.eggs/
lib/
lib64/
parts/
sdist/
var/
wheels/
share/python-wheels/
*.egg-info/
.installed.cfg
*.egg
MANIFEST

# PyInstaller
# Usually these files are written by a python script from a template
# before PyInstaller builds the exe, so as to inject date/other infos into it.
*.manifest
*.spec

# Installer logs
pip-log.txt
pip-delete-this-directory.txt

# Unit test / coverage reports
htmlcov/
.tox/
.nox/
.coverage
.coverage.*
.cache
nosetests.xml
coverage.xml
*.cover
*.py,cover
.hypothesis/
.pytest_cache/
cover/

# Translations
*.mo
*.pot

# Django stuff:
*.log
local_settings.py
db.sqlite3
db.sqlite3-journal

# Flask stuff:
instance/
.webassets-cache

# Scrapy stuff:
.scrapy

# Sphinx documentation
docs/_build/

# PyBuilder
.pybuilder/
target/

# Jupyter Notebook
.ipynb_checkpoints

# IPython
profile_default/
ipython_config.py

# pyenv
# For a library or package, you might want to ignore these files since the code is
# intended to run in multiple environments; otherwise, check them in:
# .python-version

# pipenv
# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
# However, in case of collaboration, if having platform-specific dependencies or dependencies
# having no cross-platform support, pipenv may install dependencies that don't work, or not
# install all needed dependencies.
#Pipfile.lock

# poetry
# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.
# This is especially recommended for binary packages to ensure reproducibility, and is more
# commonly ignored for libraries.
# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control
#poetry.lock

# pdm
# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.
#pdm.lock
# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it
# in version control.
# https://pdm.fming.dev/#use-with-ide
.pdm.toml

# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm
__pypackages__/

# Celery stuff
celerybeat-schedule
celerybeat.pid

# SageMath parsed files
*.sage.py

# Environments
.env
.venv
env/
venv/
ENV/
env.bak/
venv.bak/

# Spyder project settings
.spyderproject
.spyproject

# Rope project settings
.ropeproject

# mkdocs documentation
/site

# mypy
.mypy_cache/
.dmypy.json
dmypy.json

# Pyre type checker
.pyre/

# pytype static type analyzer
.pytype/

# Cython debug symbols
cython_debug/

# PyCharm
# JetBrains specific template is maintained in a separate JetBrains.gitignore that can
# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore
# and can be added to the global gitignore or merged into this file. For a more nuclear
# option (not recommended) you can uncomment the following to ignore the entire idea folder.
#.idea/
```

ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/LICENSE

ADDED

@@ -0,0 +1,21 @@

```text
MIT License

Copyright (c) 2024 Liu Sida

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.
```

ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/data/__init__.py

ADDED

@@ -0,0 +1 @@

```python
from .config import *
```

ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/data/config.py

ADDED

@@ -0,0 +1,42 @@

```python
# config.py

cfg_mnet = {
    'name': 'mobilenet0.25',
    'min_sizes': [[16, 32], [64, 128], [256, 512]],
    'steps': [8, 16, 32],
    'variance': [0.1, 0.2],
    'clip': False,
    'loc_weight': 2.0,
    'gpu_train': True,
    'batch_size': 32,
    'ngpu': 1,
    'epoch': 250,
    'decay1': 190,
    'decay2': 220,
    'image_size': 640,
    'pretrain': True,
    'return_layers': {'stage1': 1, 'stage2': 2, 'stage3': 3},
    'in_channel': 32,
    'out_channel': 64
}

cfg_re50 = {
    'name': 'Resnet50',
    'min_sizes': [[16, 32], [64, 128], [256, 512]],
    'steps': [8, 16, 32],
    'variance': [0.1, 0.2],
    'clip': False,
    'loc_weight': 2.0,
    'gpu_train': True,
    'batch_size': 24,
    'ngpu': 4,
    'epoch': 100,
    'decay1': 70,
    'decay2': 90,
    'image_size': 840,
    'pretrain': True,
    'return_layers': {'layer2': 1, 'layer3': 2, 'layer4': 3},
    'in_channel': 256,
    'out_channel': 256
}

```
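As a rough sanity check on these configs: `steps` and `min_sizes` fix how many priors `PriorBox` will generate, one per feature-map cell per min size, where each level has `ceil(side / step)` rows and columns. The sketch below only does that arithmetic in plain Python; `count_priors` is a hypothetical helper, not part of the repository.

```python
import math

# Copied from cfg_mnet in config.py (cfg_re50 uses the same values).
MIN_SIZES = [[16, 32], [64, 128], [256, 512]]
STEPS = [8, 16, 32]


def count_priors(image_h, image_w, min_sizes=MIN_SIZES, steps=STEPS):
    """Return (priors per pyramid level, total) for an image_h x image_w input."""
    per_level = []
    for sizes, step in zip(min_sizes, steps):
        # PriorBox uses ceil(side / step) rows and columns per level.
        rows, cols = math.ceil(image_h / step), math.ceil(image_w / step)
        per_level.append(rows * cols * len(sizes))  # one prior per cell per min size
    return per_level, sum(per_level)


levels, total = count_priors(640, 640)
print(levels, total)  # [12800, 3200, 800] 16800
```

Since `RetinaFace` builds its heads with the default `anchor_num=2`, matching the two min sizes per level, the concatenated head outputs for a 640x640 input should have the same 16800 rows.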

ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/images/test.jpg

ADDED

ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/images/test.webp

ADDED

ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/layers/__init__.py

ADDED

@@ -0,0 +1 @@

```python
from .functions import *
```

ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/layers/functions/prior_box.py

ADDED

@@ -0,0 +1,34 @@

```python
import torch
from itertools import product as product
import numpy as np
from math import ceil


class PriorBox(object):
    def __init__(self, cfg, image_size=None, phase='train'):
        super(PriorBox, self).__init__()
        self.min_sizes = cfg['min_sizes']
        self.steps = cfg['steps']
        self.clip = cfg['clip']
        self.image_size = image_size
        self.feature_maps = [[ceil(self.image_size[0]/step), ceil(self.image_size[1]/step)] for step in self.steps]
        self.name = "s"

    def forward(self):
        anchors = []
        for k, f in enumerate(self.feature_maps):
            min_sizes = self.min_sizes[k]
            for i, j in product(range(f[0]), range(f[1])):
                for min_size in min_sizes:
                    s_kx = min_size / self.image_size[1]
                    s_ky = min_size / self.image_size[0]
                    dense_cx = [x * self.steps[k] / self.image_size[1] for x in [j + 0.5]]
                    dense_cy = [y * self.steps[k] / self.image_size[0] for y in [i + 0.5]]
                    for cy, cx in product(dense_cy, dense_cx):
                        anchors += [cx, cy, s_kx, s_ky]

        # back to torch land
        output = torch.Tensor(anchors).view(-1, 4)
        if self.clip:
            output.clamp_(max=1, min=0)
        return output
```
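`PriorBox.forward` walks every feature-map cell row by row and emits one `(cx, cy, w, h)` anchor per min size, normalised by `image_size = (height, width)`. A dependency-free mirror of that loop makes the ordering and values easy to inspect on a tiny 64x64 input; `prior_boxes` is a hypothetical helper that returns tuples instead of a `torch.Tensor`.

```python
import math
from itertools import product

# min_sizes / steps copied from cfg_mnet in config.py.
CFG = {"min_sizes": [[16, 32], [64, 128], [256, 512]], "steps": [8, 16, 32]}


def prior_boxes(cfg, image_size, clip=False):
    """Pure-Python mirror of PriorBox.forward.

    image_size is (height, width); each anchor is (cx, cy, w, h) in [0, 1] units.
    """
    h, w = image_size
    anchors = []
    for k, step in enumerate(cfg["steps"]):
        rows, cols = math.ceil(h / step), math.ceil(w / step)
        for i, j in product(range(rows), range(cols)):   # row-major over the grid
            for min_size in cfg["min_sizes"][k]:
                cx = (j + 0.5) * step / w                 # cell centre, normalised
                cy = (i + 0.5) * step / h
                box = (cx, cy, min_size / w, min_size / h)
                if clip:                                  # same effect as clamp_(0, 1)
                    box = tuple(min(max(v, 0.0), 1.0) for v in box)
                anchors.append(box)
    return anchors


priors = prior_boxes(CFG, (64, 64))
print(len(priors), priors[0])  # 168 (0.0625, 0.0625, 0.25, 0.25)
```

With `clip` left at `False` (as in both configs), anchors on the coarsest level can be wider than the image itself, e.g. a 512-pixel prior on a 64-pixel input has `w = 8.0`.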

ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/models/__init__.py

ADDED

File without changes

ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/models/net.py

ADDED

@@ -0,0 +1,137 @@

```python
import time
import torch
import torch.nn as nn
import torchvision.models._utils as _utils
import torchvision.models as models
import torch.nn.functional as F
from torch.autograd import Variable

def conv_bn(inp, oup, stride = 1, leaky = 0):
    return nn.Sequential(
        nn.Conv2d(inp, oup, 3, stride, 1, bias=False),
        nn.BatchNorm2d(oup),
        nn.LeakyReLU(negative_slope=leaky, inplace=True)
    )

def conv_bn_no_relu(inp, oup, stride):
    return nn.Sequential(
        nn.Conv2d(inp, oup, 3, stride, 1, bias=False),
        nn.BatchNorm2d(oup),
    )

def conv_bn1X1(inp, oup, stride, leaky=0):
    return nn.Sequential(
        nn.Conv2d(inp, oup, 1, stride, padding=0, bias=False),
        nn.BatchNorm2d(oup),
        nn.LeakyReLU(negative_slope=leaky, inplace=True)
    )

def conv_dw(inp, oup, stride, leaky=0.1):
    return nn.Sequential(
        nn.Conv2d(inp, inp, 3, stride, 1, groups=inp, bias=False),
        nn.BatchNorm2d(inp),
        nn.LeakyReLU(negative_slope= leaky,inplace=True),

        nn.Conv2d(inp, oup, 1, 1, 0, bias=False),
        nn.BatchNorm2d(oup),
        nn.LeakyReLU(negative_slope= leaky,inplace=True),
    )

class SSH(nn.Module):
    def __init__(self, in_channel, out_channel):
        super(SSH, self).__init__()
        assert out_channel % 4 == 0
        leaky = 0
        if (out_channel <= 64):
            leaky = 0.1
        self.conv3X3 = conv_bn_no_relu(in_channel, out_channel//2, stride=1)

        self.conv5X5_1 = conv_bn(in_channel, out_channel//4, stride=1, leaky = leaky)
        self.conv5X5_2 = conv_bn_no_relu(out_channel//4, out_channel//4, stride=1)

        self.conv7X7_2 = conv_bn(out_channel//4, out_channel//4, stride=1, leaky = leaky)
        self.conv7x7_3 = conv_bn_no_relu(out_channel//4, out_channel//4, stride=1)

    def forward(self, input):
        conv3X3 = self.conv3X3(input)

        conv5X5_1 = self.conv5X5_1(input)
        conv5X5 = self.conv5X5_2(conv5X5_1)

        conv7X7_2 = self.conv7X7_2(conv5X5_1)
        conv7X7 = self.conv7x7_3(conv7X7_2)

        out = torch.cat([conv3X3, conv5X5, conv7X7], dim=1)
        out = F.relu(out)
        return out

class FPN(nn.Module):
    def __init__(self,in_channels_list,out_channels):
        super(FPN,self).__init__()
        leaky = 0
        if (out_channels <= 64):
            leaky = 0.1
        self.output1 = conv_bn1X1(in_channels_list[0], out_channels, stride = 1, leaky = leaky)
        self.output2 = conv_bn1X1(in_channels_list[1], out_channels, stride = 1, leaky = leaky)
        self.output3 = conv_bn1X1(in_channels_list[2], out_channels, stride = 1, leaky = leaky)

        self.merge1 = conv_bn(out_channels, out_channels, leaky = leaky)
        self.merge2 = conv_bn(out_channels, out_channels, leaky = leaky)

    def forward(self, input):
        # names = list(input.keys())
        input = list(input.values())

        output1 = self.output1(input[0])
        output2 = self.output2(input[1])
        output3 = self.output3(input[2])

        up3 = F.interpolate(output3, size=[output2.size(2), output2.size(3)], mode="nearest")
        output2 = output2 + up3
        output2 = self.merge2(output2)

        up2 = F.interpolate(output2, size=[output1.size(2), output1.size(3)], mode="nearest")
        output1 = output1 + up2
        output1 = self.merge1(output1)

        out = [output1, output2, output3]
        return out



class MobileNetV1(nn.Module):
    def __init__(self):
        super(MobileNetV1, self).__init__()
        self.stage1 = nn.Sequential(
            conv_bn(3, 8, 2, leaky = 0.1), # 3
            conv_dw(8, 16, 1), # 7
            conv_dw(16, 32, 2), # 11
            conv_dw(32, 32, 1), # 19
            conv_dw(32, 64, 2), # 27
            conv_dw(64, 64, 1), # 43
        )
        self.stage2 = nn.Sequential(
            conv_dw(64, 128, 2), # 43 + 16 = 59
            conv_dw(128, 128, 1), # 59 + 32 = 91
            conv_dw(128, 128, 1), # 91 + 32 = 123
            conv_dw(128, 128, 1), # 123 + 32 = 155
            conv_dw(128, 128, 1), # 155 + 32 = 187
            conv_dw(128, 128, 1), # 187 + 32 = 219
        )
        self.stage3 = nn.Sequential(
            conv_dw(128, 256, 2), # 219 +3 2 = 241
            conv_dw(256, 256, 1), # 241 + 64 = 301
        )
        self.avg = nn.AdaptiveAvgPool2d((1,1))
        self.fc = nn.Linear(256, 1000)

    def forward(self, x):
        x = self.stage1(x)
        x = self.stage2(x)
        x = self.stage3(x)
        x = self.avg(x)
        # x = self.model(x)
        x = x.view(-1, 256)
        x = self.fc(x)
        return x

```
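The channel bookkeeping in `SSH`, `FPN` and `RetinaFace.__init__` (in `retinaface.py`) can be checked without building the network. The sketch below is plain arithmetic with hypothetical helper names; it restates rules visible in the code: the SSH split of 1/2 + 1/4 + 1/4, a 0.1 LeakyReLU slope when the output width is at most 64, and FPN inputs of `in_channel * 2, * 4, * 8`. The ResNet-50 stage widths are the standard torchvision values, assumed here rather than read from this diff.

```python
# Channel bookkeeping restated from net.py / retinaface.py; helper names are hypothetical.

def ssh_branch_channels(out_channel):
    """SSH output = concat of a 3x3 branch (1/2) and 5x5 / 7x7 branches (1/4 each)."""
    assert out_channel % 4 == 0
    return out_channel // 2, out_channel // 4, out_channel // 4


def leaky_slope(out_channel):
    """SSH and FPN use slope 0.1 for narrow (<= 64) outputs, else 0 (i.e. plain ReLU)."""
    return 0.1 if out_channel <= 64 else 0.0


def fpn_in_channels(in_channel):
    """Input widths RetinaFace.__init__ passes to FPN: in_channel * 2, * 4, * 8."""
    return [in_channel * 2, in_channel * 4, in_channel * 8]


# Output widths of MobileNetV1.stage1/2/3 (last conv_dw of each stage in net.py).
MOBILENET_STAGE_OUT = [64, 128, 256]
# Standard torchvision ResNet-50 layer2/3/4 widths (assumed, not shown in this diff).
RESNET50_STAGE_OUT = [512, 1024, 2048]

assert sum(ssh_branch_channels(64)) == 64
assert fpn_in_channels(32) == MOBILENET_STAGE_OUT   # cfg_mnet['in_channel'] = 32
assert fpn_in_channels(256) == RESNET50_STAGE_OUT   # cfg_re50['in_channel'] = 256
print(ssh_branch_channels(64), leaky_slope(64))     # (32, 16, 16) 0.1
```

The matching widths explain why `in_channel` is 32 for the MobileNet config even though its first returned stage outputs 64 channels: the code multiplies `in_channel` by 2 before handing it to `FPN`.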

ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/models/retinaface.py

ADDED

@@ -0,0 +1,130 @@

```python
import os
import torch
import torch.nn as nn
import torchvision.models.detection.backbone_utils as backbone_utils
import torchvision.models._utils as _utils
import torch.nn.functional as F
from collections import OrderedDict

from .net import MobileNetV1 as MobileNetV1
from .net import FPN as FPN
from .net import SSH as SSH



class ClassHead(nn.Module):
    def __init__(self,inchannels=512,num_anchors=3):
        super(ClassHead,self).__init__()
        self.num_anchors = num_anchors
        self.conv1x1 = nn.Conv2d(inchannels,self.num_anchors*2,kernel_size=(1,1),stride=1,padding=0)

    def forward(self,x):
        out = self.conv1x1(x)
        out = out.permute(0,2,3,1).contiguous()

        return out.view(out.shape[0], -1, 2)

class BboxHead(nn.Module):
    def __init__(self,inchannels=512,num_anchors=3):
        super(BboxHead,self).__init__()
        self.conv1x1 = nn.Conv2d(inchannels,num_anchors*4,kernel_size=(1,1),stride=1,padding=0)

    def forward(self,x):
        out = self.conv1x1(x)
        out = out.permute(0,2,3,1).contiguous()

        return out.view(out.shape[0], -1, 4)

class LandmarkHead(nn.Module):
    def __init__(self,inchannels=512,num_anchors=3):
        super(LandmarkHead,self).__init__()
        self.conv1x1 = nn.Conv2d(inchannels,num_anchors*10,kernel_size=(1,1),stride=1,padding=0)

    def forward(self,x):
        out = self.conv1x1(x)
        out = out.permute(0,2,3,1).contiguous()

        return out.view(out.shape[0], -1, 10)

class RetinaFace(nn.Module):
    def __init__(self, cfg = None, phase = 'train', weights_path='', device="cuda"):
        """
        :param cfg: Network related settings.
        :param phase: train or test.
        """
        weights_path = os.path.join(os.path.dirname(os.path.dirname(os.path.abspath(__file__))), weights_path)

        super(RetinaFace,self).__init__()
        self.phase = phase
        backbone = None
        if cfg['name'] == 'mobilenet0.25':
            backbone = MobileNetV1()
            if cfg['pretrain']:
                checkpoint = torch.load(weights_path, map_location=torch.device(device))
                from collections import OrderedDict
                new_state_dict = OrderedDict()
                for k, v in checkpoint['state_dict'].items():
                    name = k[7:] # remove module.
                    new_state_dict[name] = v
                # load params
                backbone.load_state_dict(new_state_dict)
        elif cfg['name'] == 'Resnet50':
            import torchvision.models as models
            backbone = models.resnet50(pretrained=cfg['pretrain'])

        self.body = _utils.IntermediateLayerGetter(backbone, cfg['return_layers'])
        in_channels_stage2 = cfg['in_channel']
        in_channels_list = [
            in_channels_stage2 * 2,
            in_channels_stage2 * 4,
            in_channels_stage2 * 8,
        ]
        out_channels = cfg['out_channel']
        self.fpn = FPN(in_channels_list,out_channels)
        self.ssh1 = SSH(out_channels, out_channels)
        self.ssh2 = SSH(out_channels, out_channels)
        self.ssh3 = SSH(out_channels, out_channels)

        self.ClassHead = self._make_class_head(fpn_num=3, inchannels=cfg['out_channel'])
        self.BboxHead = self._make_bbox_head(fpn_num=3, inchannels=cfg['out_channel'])
        self.LandmarkHead = self._make_landmark_head(fpn_num=3, inchannels=cfg['out_channel'])

    def _make_class_head(self,fpn_num=3,inchannels=64,anchor_num=2):
        classhead = nn.ModuleList()
        for i in range(fpn_num):
            classhead.append(ClassHead(inchannels,anchor_num))
        return classhead

    def _make_bbox_head(self,fpn_num=3,inchannels=64,anchor_num=2):
        bboxhead = nn.ModuleList()
        for i in range(fpn_num):
            bboxhead.append(BboxHead(inchannels,anchor_num))
        return bboxhead

    def _make_landmark_head(self,fpn_num=3,inchannels=64,anchor_num=2):
        landmarkhead = nn.ModuleList()
        for i in range(fpn_num):
            landmarkhead.append(LandmarkHead(inchannels,anchor_num))
        return landmarkhead

    def forward(self,inputs):
        out = self.body(inputs)

        # FPN
        fpn = self.fpn(out)

        # SSH
        feature1 = self.ssh1(fpn[0])
        feature2 = self.ssh2(fpn[1])
        feature3 = self.ssh3(fpn[2])
        features = [feature1, feature2, feature3]

        bbox_regressions = torch.cat([self.BboxHead[i](feature) for i, feature in enumerate(features)], dim=1)
        classifications = torch.cat([self.ClassHead[i](feature) for i, feature in enumerate(features)],dim=1)
        ldm_regressions = torch.cat([self.LandmarkHead[i](feature) for i, feature in enumerate(features)], dim=1)

        if self.phase == 'train':
            output = (bbox_regressions, classifications, ldm_regressions)
        else:
            output = (bbox_regressions, F.softmax(classifications, dim=-1), ldm_regressions)
        return output
```
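Outside training, `RetinaFace.forward` returns per-anchor box offsets, softmaxed two-class scores and landmark offsets, concatenated level by level in the same row-major, per-min-size order that `PriorBox` emits anchors. `pytorch_retinaface.py` imports `decode` from `utils/box_utils.py`, whose body is not part of this diff, so the sketch below assumes the common SSD-style decoding driven by `cfg['variance'] = [0.1, 0.2]`. `decode_box` and `to_pixels` are hypothetical single-box helpers, not that module's API.

```python
import math

VARIANCE = (0.1, 0.2)  # cfg['variance'] in config.py


def decode_box(prior, loc, variances=VARIANCE):
    """Turn one BboxHead offset (dx, dy, dw, dh) into corner form (x1, y1, x2, y2).

    prior is a PriorBox row (cx, cy, w, h) normalised to [0, 1]. The SSD-style
    formula is an assumption about utils/box_utils.decode, not a copy of it.
    """
    pcx, pcy, pw, ph = prior
    dx, dy, dw, dh = loc
    cx = pcx + dx * variances[0] * pw      # centre shift, scaled by prior size
    cy = pcy + dy * variances[0] * ph
    w = pw * math.exp(dw * variances[1])   # size offset predicted in log space
    h = ph * math.exp(dh * variances[1])
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


def to_pixels(box, width, height):
    """Scale a normalised (x1, y1, x2, y2) box to pixel coordinates."""
    return tuple(v * s for v, s in zip(box, (width, height, width, height)))


# A zero offset returns the prior itself, converted to corner form.
box = decode_box((0.5, 0.5, 0.25, 0.25), (0.0, 0.0, 0.0, 0.0))
print(box)                       # (0.375, 0.375, 0.625, 0.625)
print(to_pixels(box, 640, 480))  # (240.0, 180.0, 400.0, 300.0)
```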

ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/pytorch_retinaface.py

ADDED

|

@@ -0,0 +1,174 @@


```python
import os
import time
import math
import torch
import torch.backends.cudnn as cudnn
import numpy as np

from .data import cfg_mnet, cfg_re50
from .layers.functions.prior_box import PriorBox
from .utils.nms.py_cpu_nms import py_cpu_nms
from .models.retinaface import RetinaFace
from .utils.box_utils import decode, decode_landm

class Pytorch_RetinaFace:
    def __init__(self, cfg="mobile0.25", pretrained_path="./weights/mobilenet0.25_Final.pth", weights_path="./weights/mobilenetV1X0.25_pretrain.tar", device="auto", vis_thres=0.6, top_k=5000, keep_top_k=750, nms_threshold=0.4, confidence_threshold=0.02):
        self.vis_thres = vis_thres
        self.top_k = top_k
        self.keep_top_k = keep_top_k
        self.nms_threshold = nms_threshold
        self.confidence_threshold = confidence_threshold
        self.cfg = cfg_mnet if cfg=="mobile0.25" else cfg_re50

        if device == 'auto':
            # Automatically choose the device
            if torch.cuda.is_available():
                self.device = torch.device("cuda")
            elif torch.backends.mps.is_available():
                self.device = torch.device("mps")
            else:
                self.device = torch.device("cpu")
        else:
            # Use the specified device
            self.device = torch.device(device)

        print("Using device:", self.device)

        self.net = RetinaFace(cfg=self.cfg, weights_path=weights_path, phase='test', device=self.device).to(self.device)
        self.load_model_weights(pretrained_path)
        self.net.eval()

    def check_keys(self, model, pretrained_state_dict):
        ckpt_keys = set(pretrained_state_dict.keys())
        model_keys = set(model.state_dict().keys())
        used_pretrained_keys = model_keys & ckpt_keys
        unused_pretrained_keys = ckpt_keys - model_keys
        missing_keys = model_keys - ckpt_keys
        print('Missing keys:{}'.format(len(missing_keys)))
        print('Unused checkpoint keys:{}'.format(len(unused_pretrained_keys)))
        print('Used keys:{}'.format(len(used_pretrained_keys)))
        assert len(used_pretrained_keys) > 0, 'load NONE from pretrained checkpoint'
        return True


    def remove_prefix(self, state_dict, prefix):
        ''' Old style model is stored with all names of parameters sharing common prefix 'module.' '''
        print('remove prefix \'{}\''.format(prefix))
        f = lambda x: x.split(prefix, 1)[-1] if x.startswith(prefix) else x
        return {f(key): value for key, value in state_dict.items()}


    def load_model_weights(self, pretrained_path):
        pretrained_path = os.path.join(os.path.dirname(os.path.abspath(__file__)), pretrained_path)
        print('Loading pretrained model from {}'.format(pretrained_path))
        pretrained_dict = torch.load(pretrained_path, map_location=self.device)
        if "state_dict" in pretrained_dict.keys():
            pretrained_dict = self.remove_prefix(pretrained_dict['state_dict'], 'module.')
        else:
            pretrained_dict = self.remove_prefix(pretrained_dict, 'module.')
        self.check_keys(self.net, pretrained_dict)
        self.net.load_state_dict(pretrained_dict, strict=False)
        self.net.to(self.device)
        return self.net

    def center_and_crop_rescale(self, image, dets, scale_factor=4, shift_factor=0.35, aspect_ratio=1.0):
        cropped_imgs = []
        bbox_infos = []
        for index, bbox in enumerate(dets):
            if bbox[4] < self.vis_thres:
                continue

            x1, y1, x2, y2 = map(int, bbox[:4])
            face_width = x2 - x1
            face_height = y2 - y1

            default_area = face_width * face_height
            default_area *= scale_factor
            default_side = math.sqrt(default_area)

            # New height and width based on aspect_ratio
            new_face_width = int(default_side * math.sqrt(aspect_ratio))
            new_face_height = int(default_side / math.sqrt(aspect_ratio))

            # Center coordinates of the detected face
            center_x = x1 + face_width // 2
            center_y = y1 + face_height // 2 + int(new_face_height * (0.5 - shift_factor))

            original_crop_x1 = center_x - new_face_width // 2
            original_crop_x2 = center_x + new_face_width // 2
            original_crop_y1 = center_y - new_face_height // 2
            original_crop_y2 = center_y + new_face_height // 2
            # Crop coordinates, adjusted to the image boundaries
            crop_x1 = max(0, original_crop_x1)
            crop_x2 = min(image.shape[1], original_crop_x2)
            crop_y1 = max(0, original_crop_y1)
            crop_y2 = min(image.shape[0], original_crop_y2)

            # Crop the region and add padding to form a square
            cropped_imgs.append(image[crop_y1:crop_y2, crop_x1:crop_x2])
            bbox_infos.append((original_crop_x1, original_crop_y1, original_crop_x2, original_crop_y2))
        return cropped_imgs, bbox_infos
```
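The crop arithmetic in `center_and_crop_rescale` is easier to follow on concrete numbers. The helper below is a hypothetical, framework-free restatement of the same formulas for a single box: `scale_factor` multiplies the face *area*, so each side grows by its square root, and the vertical offset places the face centre roughly `shift_factor` of the way down from the top of the crop. It returns both the unclamped window (what the method stores in `bbox_infos`) and the window clipped to the image (what it actually slices).

```python
import math


def crop_window(box, img_w, img_h, scale_factor=4, shift_factor=0.35, aspect_ratio=1.0):
    """Restate the crop arithmetic of center_and_crop_rescale for one (x1, y1, x2, y2) box.

    Returns (unclamped, clamped) windows as (x1, y1, x2, y2) tuples.
    """
    x1, y1, x2, y2 = map(int, box)
    face_w, face_h = x2 - x1, y2 - y1

    # scale_factor multiplies the area, so each side grows by sqrt(scale_factor).
    side = math.sqrt(face_w * face_h * scale_factor)
    new_w = int(side * math.sqrt(aspect_ratio))
    new_h = int(side / math.sqrt(aspect_ratio))

    # Move the window centre down so the face centre sits about shift_factor from the top.
    cx = x1 + face_w // 2
    cy = y1 + face_h // 2 + int(new_h * (0.5 - shift_factor))

    raw = (cx - new_w // 2, cy - new_h // 2, cx + new_w // 2, cy + new_h // 2)
    # Clip to the image, as the method does before slicing.
    clamped = (max(0, raw[0]), max(0, raw[1]), min(img_w, raw[2]), min(img_h, raw[3]))
    return raw, clamped
```

With a 100x100 face box at (100, 100) in a 512x512 image and the default arguments, both windows are `(50, 80, 250, 280)`: a 200x200 crop whose top edge sits 70 px (0.35 x 200) above the face centre. For a face in the corner, `(0, 0, 50, 50)` in a 100x100 image, the unclamped window is `(-25, -10, 75, 90)` but only `(0, 0, 75, 90)` is sliced; the method itself does not pad, so that crop is 75x90 rather than square.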

```python
    def detect_faces(self, img):
        resize = 1

        if len(img.shape) == 4 and img.shape[0] == 1:
            img = torch.squeeze(img, 0)
        if isinstance(img, np.ndarray):
            img = torch.from_numpy(img)
        img = img.to(self.device)

        im_height, im_width, _ = img.shape
        scale = torch.Tensor([img.shape[1], img.shape[0], img.shape[1], img.shape[0]])
        mean_values = torch.tensor([104, 117, 123], dtype=torch.float32).to(self.device)
        mean_values = mean_values.view(1, 1, 3)
        img -= mean_values
        img = img.permute(2, 0, 1)
        img = img.unsqueeze(0)
        scale = scale.to(self.device)

        tic = time.time()
        with torch.no_grad():
            loc, conf, landms = self.net(img) # forward pass
        print('net forward time: {:.4f}'.format(time.time() - tic))

        priorbox = PriorBox(self.cfg, image_size=(im_height, im_width))
        priors = priorbox.forward()
        priors = priors.to(self.device)
        prior_data = priors.data
        boxes = decode(loc.data.squeeze(0), prior_data, self.cfg['variance'])
        boxes = boxes * scale / resize
        boxes = boxes.cpu().numpy()
        scores = conf.squeeze(0).data.cpu().numpy()[:, 1]
        landms = decode_landm(landms.data.squeeze(0), prior_data, self.cfg['variance'])
        scale1 = torch.Tensor([img.shape[3], img.shape[2], img.shape[3], img.shape[2],
                               img.shape[3], img.shape[2], img.shape[3], img.shape[2],
                               img.shape[3], img.shape[2]])
        scale1 = scale1.to(self.device)
        landms = landms * scale1 / resize
        landms = landms.cpu().numpy()

        # Ignore low scores
        inds = np.where(scores > self.confidence_threshold)[0]
        boxes = boxes[inds]
        landms = landms[inds]
        scores = scores[inds]

        # Keep top-K before NMS
        order = scores.argsort()[::-1][:self.top_k]
        boxes = boxes[order]
        landms = landms[order]
        scores = scores[order]

        # Perform NMS
        dets = np.hstack((boxes, scores[:, np.newaxis])).astype(np.float32, copy=False)
        keep = py_cpu_nms(dets, self.nms_threshold)
        dets = dets[keep, :]
        landms = landms[keep]

        # Keep top-K faster NMS
        dets = dets[:self.keep_top_k, :]
        landms = landms[:self.keep_top_k, :]

        dets = np.concatenate((dets, landms), axis=1)
        return dets
```
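`detect_faces` post-processes the raw predictions in a fixed order: drop scores at or below `confidence_threshold`, keep the `top_k` highest, run NMS at `nms_threshold`, then truncate to `keep_top_k`. Each returned row holds the four box coordinates, the score and the ten landmark coordinates, which is why `center_and_crop_rescale` reads the score from `bbox[4]`. The sketch below mirrors that order on plain Python lists with a textbook greedy NMS. It is an illustrative stand-in for the bundled `py_cpu_nms` (not shown in this part of the diff), whose details such as the pixel-area convention may differ, and `iou`/`postprocess` are hypothetical names.

```python
def iou(a, b):
    """Intersection-over-union of two (x1, y1, x2, y2) boxes, using continuous areas."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def postprocess(boxes, scores, confidence_threshold=0.02, top_k=5000,
                nms_threshold=0.4, keep_top_k=750):
    """Threshold -> top-K -> greedy NMS -> keep_top_k, in the order detect_faces uses."""
    # 1. Keep only candidates scoring strictly above the confidence threshold.
    cand = [(s, b) for s, b in zip(scores, boxes) if s > confidence_threshold]
    # 2. Sort by score, highest first, and keep top_k before NMS.
    cand.sort(key=lambda sb: sb[0], reverse=True)
    cand = cand[:top_k]
    # 3. Greedy NMS: a box survives only if it overlaps no already-kept box above the threshold.
    kept = []
    for s, b in cand:
        if all(iou(b, kb) <= nms_threshold for _, kb in kept):
            kept.append((s, b))
    # 4. Cap the number of detections after NMS.
    return kept[:keep_top_k]
```

With two heavily overlapping boxes (IoU about 0.82), one distant box and one box scoring below the threshold, only the higher-scoring box of the overlapping pair and the distant box survive.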

ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/utils/__init__.py

ADDED


File without changes


ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/Pytorch_Retinaface/utils/box_utils.py

ADDED


@@ -0,0 +1,330 @@
