Spaces:

Runtime error

Runtime error

Upload 33 files

Browse files- LICENSE +21 -0

- README.md +204 -13

- images/webui_dl_model.png +0 -0

- images/webui_generate.png +0 -0

- images/webui_upload_model.png +0 -0

- mdxnet_models/model_data.json +340 -0

- no_ui.py +60 -0

- requirements.txt +23 -0

- rvc_models/MODELS.txt +2 -0

- rvc_models/public_models.json +626 -0

- song_output/OUTPUT.txt +1 -0

- src/configs/32k.json +46 -0

- src/configs/32k_v2.json +46 -0

- src/configs/40k.json +46 -0

- src/configs/48k.json +46 -0

- src/configs/48k_v2.json +46 -0

- src/download_models.py +31 -0

- src/infer_pack/attentions.py +417 -0

- src/infer_pack/commons.py +166 -0

- src/infer_pack/models.py +1124 -0

- src/infer_pack/models_onnx.py +818 -0

- src/infer_pack/models_onnx_moess.py +849 -0

- src/infer_pack/modules.py +522 -0

- src/infer_pack/transforms.py +209 -0

- src/main.py +363 -0

- src/mdx.py +289 -0

- src/my_utils.py +21 -0

- src/rmvpe.py +409 -0

- src/rvc.py +151 -0

- src/trainset_preprocess_pipeline_print.py +146 -0

- src/vc_infer_pipeline.py +653 -0

- src/webui.py +337 -0

- tes.py +4 -0

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2023 SociallyIneptWeeb

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

README.md

CHANGED

|

@@ -1,13 +1,204 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 13 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# AICoverGen

|

| 2 |

+

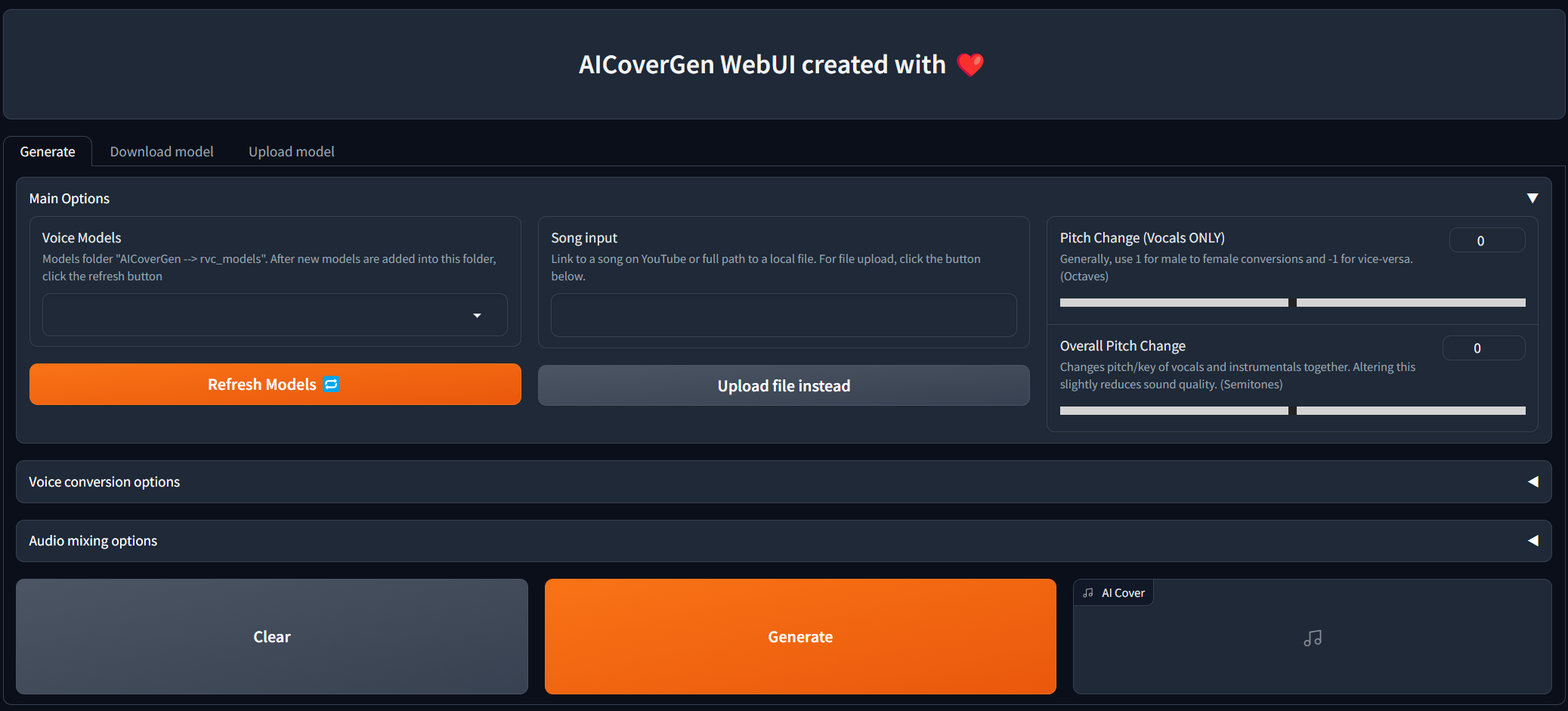

An autonomous pipeline to create covers with any RVC v2 trained AI voice from YouTube videos or a local audio file. For developers who may want to add a singing functionality into their AI assistant/chatbot/vtuber, or for people who want to hear their favourite characters sing their favourite song.

|

| 3 |

+

|

| 4 |

+

Showcase: https://www.youtube.com/watch?v=2qZuE4WM7CM

|

| 5 |

+

|

| 6 |

+

Setup Guide: https://www.youtube.com/watch?v=pdlhk4vVHQk

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

WebUI is under constant development and testing, but you can try it out right now on both local and colab!

|

| 11 |

+

|

| 12 |

+

## Changelog

|

| 13 |

+

|

| 14 |

+

- WebUI for easier conversions and downloading of voice models

|

| 15 |

+

- Support for cover generations from a local audio file

|

| 16 |

+

- Option to keep intermediate files generated. e.g. Isolated vocals/instrumentals

|

| 17 |

+

- Download suggested public voice models from table with search/tag filters

|

| 18 |

+

- Support for Pixeldrain download links for voice models

|

| 19 |

+

- Implement new rmvpe pitch extraction technique for faster and higher quality vocal conversions

|

| 20 |

+

- Volume control for AI main vocals, backup vocals and instrumentals

|

| 21 |

+

- Index Rate for Voice conversion

|

| 22 |

+

- Reverb Control for AI main vocals

|

| 23 |

+

- Local network sharing option for webui

|

| 24 |

+

- Extra RVC options - filter_radius, rms_mix_rate, protect

|

| 25 |

+

- Local file upload via file browser option

|

| 26 |

+

- Upload of locally trained RVC v2 models via WebUI

|

| 27 |

+

- Pitch detection method control, e.g. rmvpe/mangio-crepe

|

| 28 |

+

- Pitch change for vocals and instrumentals together. Same effect as changing key of song in Karaoke.

|

| 29 |

+

- Audio output format option: wav or mp3.

|

| 30 |

+

|

| 31 |

+

## Update AICoverGen to latest version

|

| 32 |

+

|

| 33 |

+

Install and pull any new requirements and changes by opening a command line window in the `AICoverGen` directory and running the following commands.

|

| 34 |

+

|

| 35 |

+

```

|

| 36 |

+

pip install -r requirements.txt

|

| 37 |

+

git pull

|

| 38 |

+

```

|

| 39 |

+

|

| 40 |

+

For colab users, simply click `Runtime` in the top navigation bar of the colab notebook and `Disconnect and delete runtime` in the dropdown menu.

|

| 41 |

+

Then follow the instructions in the notebook to run the webui.

|

| 42 |

+

|

| 43 |

+

## Colab notebook

|

| 44 |

+

|

| 45 |

+

For those without a powerful enough NVIDIA GPU, you may try AICoverGen out using Google Colab.

|

| 46 |

+

|

| 47 |

+

[](https://colab.research.google.com/github/SociallyIneptWeeb/AICoverGen/blob/main/AICoverGen_colab.ipynb)

|

| 48 |

+

|

| 49 |

+

For those who want to run this locally, follow the setup guide below.

|

| 50 |

+

|

| 51 |

+

## Setup

|

| 52 |

+

|

| 53 |

+

### Install Git and Python

|

| 54 |

+

|

| 55 |

+

Follow the instructions [here](https://git-scm.com/book/en/v2/Getting-Started-Installing-Git) to install Git on your computer. Also follow this [guide](https://realpython.com/installing-python/) to install Python **VERSION 3.9** if you haven't already. Using other versions of Python may result in dependency conflicts.

|

| 56 |

+

|

| 57 |

+

### Install ffmpeg

|

| 58 |

+

|

| 59 |

+

Follow the instructions [here](https://www.hostinger.com/tutorials/how-to-install-ffmpeg) to install ffmpeg on your computer.

|

| 60 |

+

|

| 61 |

+

### Install sox

|

| 62 |

+

|

| 63 |

+

Follow the instructions [here](https://www.tutorialexample.com/a-step-guide-to-install-sox-sound-exchange-on-windows-10-python-tutorial/) to install sox and add it to your Windows path environment.

|

| 64 |

+

|

| 65 |

+

### Clone AICoverGen repository

|

| 66 |

+

|

| 67 |

+

Open a command line window and run these commands to clone this entire repository and install the additional dependencies required.

|

| 68 |

+

|

| 69 |

+

```

|

| 70 |

+

git clone https://github.com/SociallyIneptWeeb/AICoverGen

|

| 71 |

+

cd AICoverGen

|

| 72 |

+

pip install -r requirements.txt

|

| 73 |

+

```

|

| 74 |

+

|

| 75 |

+

### Download required models

|

| 76 |

+

|

| 77 |

+

Run the following command to download the required MDXNET vocal separation models and hubert base model.

|

| 78 |

+

|

| 79 |

+

```

|

| 80 |

+

python src/download_models.py

|

| 81 |

+

```

|

| 82 |

+

|

| 83 |

+

|

| 84 |

+

## Usage with WebUI

|

| 85 |

+

|

| 86 |

+

To run the AICoverGen WebUI, run the following command.

|

| 87 |

+

|

| 88 |

+

```

|

| 89 |

+

python src/webui.py

|

| 90 |

+

```

|

| 91 |

+

|

| 92 |

+

| Flag | Description |

|

| 93 |

+

|--------------------------------------------|-------------|

|

| 94 |

+

| `-h`, `--help` | Show this help message and exit. |

|

| 95 |

+

| `--share` | Create a public URL. This is useful for running the web UI on Google Colab. |

|

| 96 |

+

| `--listen` | Make the web UI reachable from your local network. |

|

| 97 |

+

| `--listen-host LISTEN_HOST` | The hostname that the server will use. |

|

| 98 |

+

| `--listen-port LISTEN_PORT` | The listening port that the server will use. |

|

| 99 |

+

|

| 100 |

+

Once the following output message `Running on local URL: http://127.0.0.1:7860` appears, you can click on the link to open a tab with the WebUI.

|

| 101 |

+

|

| 102 |

+

### Download RVC models via WebUI

|

| 103 |

+

|

| 104 |

+

|

| 105 |

+

|

| 106 |

+

Navigate to the `Download model` tab, and paste the download link to the RVC model and give it a unique name.

|

| 107 |

+

You may search the [AI Hub Discord](https://discord.gg/aihub) where already trained voice models are available for download. You may refer to the examples for how the download link should look like.

|

| 108 |

+

The downloaded zip file should contain the .pth model file and an optional .index file.

|

| 109 |

+

|

| 110 |

+

Once the 2 input fields are filled in, simply click `Download`! Once the output message says `[NAME] Model successfully downloaded!`, you should be able to use it in the `Generate` tab after clicking the refresh models button!

|

| 111 |

+

|

| 112 |

+

### Upload RVC models via WebUI

|

| 113 |

+

|

| 114 |

+

|

| 115 |

+

|

| 116 |

+

For people who have trained RVC v2 models locally and would like to use them for AI Cover generations.

|

| 117 |

+

Navigate to the `Upload model` tab, and follow the instructions.

|

| 118 |

+

Once the output message says `[NAME] Model successfully uploaded!`, you should be able to use it in the `Generate` tab after clicking the refresh models button!

|

| 119 |

+

|

| 120 |

+

|

| 121 |

+

### Running the pipeline via WebUI

|

| 122 |

+

|

| 123 |

+

|

| 124 |

+

|

| 125 |

+

- From the Voice Models dropdown menu, select the voice model to use. Click `Update` if you added the files manually to the [rvc_models](rvc_models) directory to refresh the list.

|

| 126 |

+

- In the song input field, copy and paste the link to any song on YouTube or the full path to a local audio file.

|

| 127 |

+

- Pitch should be set to either -12, 0, or 12 depending on the original vocals and the RVC AI modal. This ensures the voice is not *out of tune*.

|

| 128 |

+

- Other advanced options for Voice conversion and audio mixing can be viewed by clicking the accordion arrow to expand.

|

| 129 |

+

|

| 130 |

+

Once all Main Options are filled in, click `Generate` and the AI generated cover should appear in a less than a few minutes depending on your GPU.

|

| 131 |

+

|

| 132 |

+

## Usage with CLI

|

| 133 |

+

|

| 134 |

+

### Manual Download of RVC models

|

| 135 |

+

|

| 136 |

+

Unzip (if needed) and transfer the `.pth` and `.index` files to a new folder in the [rvc_models](rvc_models) directory. Each folder should only contain one `.pth` and one `.index` file.

|

| 137 |

+

|

| 138 |

+

The directory structure should look something like this:

|

| 139 |

+

```

|

| 140 |

+

├── rvc_models

|

| 141 |

+

│ ├── John

|

| 142 |

+

│ │ ├── JohnV2.pth

|

| 143 |

+

│ │ └── added_IVF2237_Flat_nprobe_1_v2.index

|

| 144 |

+

│ ├── May

|

| 145 |

+

│ │ ├── May.pth

|

| 146 |

+

│ │ └── added_IVF2237_Flat_nprobe_1_v2.index

|

| 147 |

+

│ ├── MODELS.txt

|

| 148 |

+

│ └── hubert_base.pt

|

| 149 |

+

├── mdxnet_models

|

| 150 |

+

├── song_output

|

| 151 |

+

└── src

|

| 152 |

+

```

|

| 153 |

+

|

| 154 |

+

### Running the pipeline

|

| 155 |

+

|

| 156 |

+

To run the AI cover generation pipeline using the command line, run the following command.

|

| 157 |

+

|

| 158 |

+

```

|

| 159 |

+

python src/main.py [-h] -i SONG_INPUT -dir RVC_DIRNAME -p PITCH_CHANGE [-k | --keep-files | --no-keep-files] [-ir INDEX_RATE] [-fr FILTER_RADIUS] [-rms RMS_MIX_RATE] [-palgo PITCH_DETECTION_ALGO] [-hop CREPE_HOP_LENGTH] [-pro PROTECT] [-mv MAIN_VOL] [-bv BACKUP_VOL] [-iv INST_VOL] [-pall PITCH_CHANGE_ALL] [-rsize REVERB_SIZE] [-rwet REVERB_WETNESS] [-rdry REVERB_DRYNESS] [-rdamp REVERB_DAMPING] [-oformat OUTPUT_FORMAT]

|

| 160 |

+

```

|

| 161 |

+

|

| 162 |

+

| Flag | Description |

|

| 163 |

+

|--------------------------------------------|-------------|

|

| 164 |

+

| `-h`, `--help` | Show this help message and exit. |

|

| 165 |

+

| `-i SONG_INPUT` | Link to a song on YouTube or path to a local audio file. Should be enclosed in double quotes for Windows and single quotes for Unix-like systems. |

|

| 166 |

+

| `-dir MODEL_DIR_NAME` | Name of folder in [rvc_models](rvc_models) directory containing your `.pth` and `.index` files for a specific voice. |

|

| 167 |

+

| `-p PITCH_CHANGE` | Change pitch of AI vocals in octaves. Set to 0 for no change. Generally, use 1 for male to female conversions and -1 for vice-versa. |

|

| 168 |

+

| `-k` | Optional. Can be added to keep all intermediate audio files generated. e.g. Isolated AI vocals/instrumentals. Leave out to save space. |

|

| 169 |

+

| `-ir INDEX_RATE` | Optional. Default 0.5. Control how much of the AI's accent to leave in the vocals. 0 <= INDEX_RATE <= 1. |

|

| 170 |

+

| `-fr FILTER_RADIUS` | Optional. Default 3. If >=3: apply median filtering median filtering to the harvested pitch results. 0 <= FILTER_RADIUS <= 7. |

|

| 171 |

+

| `-rms RMS_MIX_RATE` | Optional. Default 0.25. Control how much to use the original vocal's loudness (0) or a fixed loudness (1). 0 <= RMS_MIX_RATE <= 1. |

|

| 172 |

+

| `-palgo PITCH_DETECTION_ALGO` | Optional. Default rmvpe. Best option is rmvpe (clarity in vocals), then mangio-crepe (smoother vocals). |

|

| 173 |

+

| `-hop CREPE_HOP_LENGTH` | Optional. Default 128. Controls how often it checks for pitch changes in milliseconds when using mangio-crepe algo specifically. Lower values leads to longer conversions and higher risk of voice cracks, but better pitch accuracy. |

|

| 174 |

+

| `-pro PROTECT` | Optional. Default 0.33. Control how much of the original vocals' breath and voiceless consonants to leave in the AI vocals. Set 0.5 to disable. 0 <= PROTECT <= 0.5. |

|

| 175 |

+

| `-mv MAIN_VOCALS_VOLUME_CHANGE` | Optional. Default 0. Control volume of main AI vocals. Use -3 to decrease the volume by 3 decibels, or 3 to increase the volume by 3 decibels. |

|

| 176 |

+

| `-bv BACKUP_VOCALS_VOLUME_CHANGE` | Optional. Default 0. Control volume of backup AI vocals. |

|

| 177 |

+

| `-iv INSTRUMENTAL_VOLUME_CHANGE` | Optional. Default 0. Control volume of the background music/instrumentals. |

|

| 178 |

+

| `-pall PITCH_CHANGE_ALL` | Optional. Default 0. Change pitch/key of background music, backup vocals and AI vocals in semitones. Reduces sound quality slightly. |

|

| 179 |

+

| `-rsize REVERB_SIZE` | Optional. Default 0.15. The larger the room, the longer the reverb time. 0 <= REVERB_SIZE <= 1. |

|

| 180 |

+

| `-rwet REVERB_WETNESS` | Optional. Default 0.2. Level of AI vocals with reverb. 0 <= REVERB_WETNESS <= 1. |

|

| 181 |

+

| `-rdry REVERB_DRYNESS` | Optional. Default 0.8. Level of AI vocals without reverb. 0 <= REVERB_DRYNESS <= 1. |

|

| 182 |

+

| `-rdamp REVERB_DAMPING` | Optional. Default 0.7. Absorption of high frequencies in the reverb. 0 <= REVERB_DAMPING <= 1. |

|

| 183 |

+

| `-oformat OUTPUT_FORMAT` | Optional. Default mp3. wav for best quality and large file size, mp3 for decent quality and small file size. |

|

| 184 |

+

|

| 185 |

+

|

| 186 |

+

## Terms of Use

|

| 187 |

+

|

| 188 |

+

The use of the converted voice for the following purposes is prohibited.

|

| 189 |

+

|

| 190 |

+

* Criticizing or attacking individuals.

|

| 191 |

+

|

| 192 |

+

* Advocating for or opposing specific political positions, religions, or ideologies.

|

| 193 |

+

|

| 194 |

+

* Publicly displaying strongly stimulating expressions without proper zoning.

|

| 195 |

+

|

| 196 |

+

* Selling of voice models and generated voice clips.

|

| 197 |

+

|

| 198 |

+

* Impersonation of the original owner of the voice with malicious intentions to harm/hurt others.

|

| 199 |

+

|

| 200 |

+

* Fraudulent purposes that lead to identity theft or fraudulent phone calls.

|

| 201 |

+

|

| 202 |

+

## Disclaimer

|

| 203 |

+

|

| 204 |

+

I am not liable for any direct, indirect, consequential, incidental, or special damages arising out of or in any way connected with the use/misuse or inability to use this software.

|

images/webui_dl_model.png

ADDED

|

images/webui_generate.png

ADDED

|

images/webui_upload_model.png

ADDED

|

mdxnet_models/model_data.json

ADDED

|

@@ -0,0 +1,340 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"0ddfc0eb5792638ad5dc27850236c246": {

|

| 3 |

+

"compensate": 1.035,

|

| 4 |

+

"mdx_dim_f_set": 2048,

|

| 5 |

+

"mdx_dim_t_set": 8,

|

| 6 |

+

"mdx_n_fft_scale_set": 6144,

|

| 7 |

+

"primary_stem": "Vocals"

|

| 8 |

+

},

|

| 9 |

+

"26d308f91f3423a67dc69a6d12a8793d": {

|

| 10 |

+

"compensate": 1.035,

|

| 11 |

+

"mdx_dim_f_set": 2048,

|

| 12 |

+

"mdx_dim_t_set": 9,

|

| 13 |

+

"mdx_n_fft_scale_set": 8192,

|

| 14 |

+

"primary_stem": "Other"

|

| 15 |

+

},

|

| 16 |

+

"2cdd429caac38f0194b133884160f2c6": {

|

| 17 |

+

"compensate": 1.045,

|

| 18 |

+

"mdx_dim_f_set": 3072,

|

| 19 |

+

"mdx_dim_t_set": 8,

|

| 20 |

+

"mdx_n_fft_scale_set": 7680,

|

| 21 |

+

"primary_stem": "Instrumental"

|

| 22 |

+

},

|

| 23 |

+

"2f5501189a2f6db6349916fabe8c90de": {

|

| 24 |

+

"compensate": 1.035,

|

| 25 |

+

"mdx_dim_f_set": 2048,

|

| 26 |

+

"mdx_dim_t_set": 8,

|

| 27 |

+

"mdx_n_fft_scale_set": 6144,

|

| 28 |

+

"primary_stem": "Vocals"

|

| 29 |

+

},

|

| 30 |

+

"398580b6d5d973af3120df54cee6759d": {

|

| 31 |

+

"compensate": 1.75,

|

| 32 |

+

"mdx_dim_f_set": 3072,

|

| 33 |

+

"mdx_dim_t_set": 8,

|

| 34 |

+

"mdx_n_fft_scale_set": 7680,

|

| 35 |

+

"primary_stem": "Vocals"

|

| 36 |

+

},

|

| 37 |

+

"488b3e6f8bd3717d9d7c428476be2d75": {

|

| 38 |

+

"compensate": 1.035,

|

| 39 |

+

"mdx_dim_f_set": 3072,

|

| 40 |

+

"mdx_dim_t_set": 8,

|

| 41 |

+

"mdx_n_fft_scale_set": 7680,

|

| 42 |

+

"primary_stem": "Instrumental"

|

| 43 |

+

},

|

| 44 |

+

"4910e7827f335048bdac11fa967772f9": {

|

| 45 |

+

"compensate": 1.035,

|

| 46 |

+

"mdx_dim_f_set": 2048,

|

| 47 |

+

"mdx_dim_t_set": 7,

|

| 48 |

+

"mdx_n_fft_scale_set": 4096,

|

| 49 |

+

"primary_stem": "Drums"

|

| 50 |

+

},

|

| 51 |

+

"53c4baf4d12c3e6c3831bb8f5b532b93": {

|

| 52 |

+

"compensate": 1.043,

|

| 53 |

+

"mdx_dim_f_set": 3072,

|

| 54 |

+

"mdx_dim_t_set": 8,

|

| 55 |

+

"mdx_n_fft_scale_set": 7680,

|

| 56 |

+

"primary_stem": "Vocals"

|

| 57 |

+

},

|

| 58 |

+

"5d343409ef0df48c7d78cce9f0106781": {

|

| 59 |

+

"compensate": 1.075,

|

| 60 |

+

"mdx_dim_f_set": 3072,

|

| 61 |

+

"mdx_dim_t_set": 8,

|

| 62 |

+

"mdx_n_fft_scale_set": 7680,

|

| 63 |

+

"primary_stem": "Vocals"

|

| 64 |

+

},

|

| 65 |

+

"5f6483271e1efb9bfb59e4a3e6d4d098": {

|

| 66 |

+

"compensate": 1.035,

|

| 67 |

+

"mdx_dim_f_set": 2048,

|

| 68 |

+

"mdx_dim_t_set": 9,

|

| 69 |

+

"mdx_n_fft_scale_set": 6144,

|

| 70 |

+

"primary_stem": "Vocals"

|

| 71 |

+

},

|

| 72 |

+

"65ab5919372a128e4167f5e01a8fda85": {

|

| 73 |

+

"compensate": 1.035,

|

| 74 |

+

"mdx_dim_f_set": 2048,

|

| 75 |

+

"mdx_dim_t_set": 8,

|

| 76 |

+

"mdx_n_fft_scale_set": 8192,

|

| 77 |

+

"primary_stem": "Other"

|

| 78 |

+

},

|

| 79 |

+

"6703e39f36f18aa7855ee1047765621d": {

|

| 80 |

+

"compensate": 1.035,

|

| 81 |

+

"mdx_dim_f_set": 2048,

|

| 82 |

+

"mdx_dim_t_set": 9,

|

| 83 |

+

"mdx_n_fft_scale_set": 16384,

|

| 84 |

+

"primary_stem": "Bass"

|

| 85 |

+

},

|

| 86 |

+

"6b31de20e84392859a3d09d43f089515": {

|

| 87 |

+

"compensate": 1.035,

|

| 88 |

+

"mdx_dim_f_set": 2048,

|

| 89 |

+

"mdx_dim_t_set": 8,

|

| 90 |

+

"mdx_n_fft_scale_set": 6144,

|

| 91 |

+

"primary_stem": "Vocals"

|

| 92 |

+

},

|

| 93 |

+

"867595e9de46f6ab699008295df62798": {

|

| 94 |

+

"compensate": 1.03,

|

| 95 |

+

"mdx_dim_f_set": 3072,

|

| 96 |

+

"mdx_dim_t_set": 8,

|

| 97 |

+

"mdx_n_fft_scale_set": 7680,

|

| 98 |

+

"primary_stem": "Vocals"

|

| 99 |

+

},

|

| 100 |

+

"a3cd63058945e777505c01d2507daf37": {

|

| 101 |

+

"compensate": 1.03,

|

| 102 |

+

"mdx_dim_f_set": 2048,

|

| 103 |

+

"mdx_dim_t_set": 8,

|

| 104 |

+

"mdx_n_fft_scale_set": 6144,

|

| 105 |

+

"primary_stem": "Vocals"

|

| 106 |

+

},

|

| 107 |

+

"b33d9b3950b6cbf5fe90a32608924700": {

|

| 108 |

+

"compensate": 1.03,

|

| 109 |

+

"mdx_dim_f_set": 3072,

|

| 110 |

+

"mdx_dim_t_set": 8,

|

| 111 |

+

"mdx_n_fft_scale_set": 7680,

|

| 112 |

+

"primary_stem": "Vocals"

|

| 113 |

+

},

|

| 114 |

+

"c3b29bdce8c4fa17ec609e16220330ab": {

|

| 115 |

+

"compensate": 1.035,

|

| 116 |

+

"mdx_dim_f_set": 2048,

|

| 117 |

+

"mdx_dim_t_set": 8,

|

| 118 |

+

"mdx_n_fft_scale_set": 16384,

|

| 119 |

+

"primary_stem": "Bass"

|

| 120 |

+

},

|

| 121 |

+

"ceed671467c1f64ebdfac8a2490d0d52": {

|

| 122 |

+

"compensate": 1.035,

|

| 123 |

+

"mdx_dim_f_set": 3072,

|

| 124 |

+

"mdx_dim_t_set": 8,

|

| 125 |

+

"mdx_n_fft_scale_set": 7680,

|

| 126 |

+

"primary_stem": "Instrumental"

|

| 127 |

+

},

|

| 128 |

+

"d2a1376f310e4f7fa37fb9b5774eb701": {

|

| 129 |

+

"compensate": 1.035,

|

| 130 |

+

"mdx_dim_f_set": 3072,

|

| 131 |

+

"mdx_dim_t_set": 8,

|

| 132 |

+

"mdx_n_fft_scale_set": 7680,

|

| 133 |

+

"primary_stem": "Instrumental"

|

| 134 |

+

},

|

| 135 |

+

"d7bff498db9324db933d913388cba6be": {

|

| 136 |

+

"compensate": 1.035,

|

| 137 |

+

"mdx_dim_f_set": 2048,

|

| 138 |

+

"mdx_dim_t_set": 8,

|

| 139 |

+

"mdx_n_fft_scale_set": 6144,

|

| 140 |

+

"primary_stem": "Vocals"

|

| 141 |

+

},

|

| 142 |

+

"d94058f8c7f1fae4164868ae8ae66b20": {

|

| 143 |

+

"compensate": 1.035,

|

| 144 |

+

"mdx_dim_f_set": 2048,

|

| 145 |

+

"mdx_dim_t_set": 8,

|

| 146 |

+

"mdx_n_fft_scale_set": 6144,

|

| 147 |

+

"primary_stem": "Vocals"

|

| 148 |

+

},

|

| 149 |

+

"dc41ede5961d50f277eb846db17f5319": {

|

| 150 |

+

"compensate": 1.035,

|

| 151 |

+

"mdx_dim_f_set": 2048,

|

| 152 |

+

"mdx_dim_t_set": 9,

|

| 153 |

+

"mdx_n_fft_scale_set": 4096,

|

| 154 |

+

"primary_stem": "Drums"

|

| 155 |

+

},

|

| 156 |

+

"e5572e58abf111f80d8241d2e44e7fa4": {

|

| 157 |

+

"compensate": 1.028,

|

| 158 |

+

"mdx_dim_f_set": 3072,

|

| 159 |

+

"mdx_dim_t_set": 8,

|

| 160 |

+

"mdx_n_fft_scale_set": 7680,

|

| 161 |

+

"primary_stem": "Instrumental"

|

| 162 |

+

},

|

| 163 |

+

"e7324c873b1f615c35c1967f912db92a": {

|

| 164 |

+

"compensate": 1.03,

|

| 165 |

+

"mdx_dim_f_set": 3072,

|

| 166 |

+

"mdx_dim_t_set": 8,

|

| 167 |

+

"mdx_n_fft_scale_set": 7680,

|

| 168 |

+

"primary_stem": "Vocals"

|

| 169 |

+

},

|

| 170 |

+

"1c56ec0224f1d559c42fd6fd2a67b154": {

|

| 171 |

+

"compensate": 1.025,

|

| 172 |

+

"mdx_dim_f_set": 2048,

|

| 173 |

+

"mdx_dim_t_set": 8,

|

| 174 |

+

"mdx_n_fft_scale_set": 5120,

|

| 175 |

+

"primary_stem": "Instrumental"

|

| 176 |

+

},

|

| 177 |

+

"f2df6d6863d8f435436d8b561594ff49": {

|

| 178 |

+

"compensate": 1.035,

|

| 179 |

+

"mdx_dim_f_set": 3072,

|

| 180 |

+

"mdx_dim_t_set": 8,

|

| 181 |

+

"mdx_n_fft_scale_set": 7680,

|

| 182 |

+

"primary_stem": "Instrumental"

|

| 183 |

+

},

|

| 184 |

+

"b06327a00d5e5fbc7d96e1781bbdb596": {

|

| 185 |

+

"compensate": 1.035,

|

| 186 |

+

"mdx_dim_f_set": 3072,

|

| 187 |

+

"mdx_dim_t_set": 8,

|

| 188 |

+

"mdx_n_fft_scale_set": 6144,

|

| 189 |

+

"primary_stem": "Instrumental"

|

| 190 |

+

},

|

| 191 |

+

"94ff780b977d3ca07c7a343dab2e25dd": {

|

| 192 |

+

"compensate": 1.039,

|

| 193 |

+

"mdx_dim_f_set": 3072,

|

| 194 |

+

"mdx_dim_t_set": 8,

|

| 195 |

+

"mdx_n_fft_scale_set": 6144,

|

| 196 |

+

"primary_stem": "Instrumental"

|

| 197 |

+

},

|

| 198 |

+

"73492b58195c3b52d34590d5474452f6": {

|

| 199 |

+

"compensate": 1.043,

|

| 200 |

+

"mdx_dim_f_set": 3072,

|

| 201 |

+

"mdx_dim_t_set": 8,

|

| 202 |

+

"mdx_n_fft_scale_set": 7680,

|

| 203 |

+

"primary_stem": "Vocals"

|

| 204 |

+

},

|

| 205 |

+

"970b3f9492014d18fefeedfe4773cb42": {

|

| 206 |

+

"compensate": 1.009,

|

| 207 |

+

"mdx_dim_f_set": 3072,

|

| 208 |

+

"mdx_dim_t_set": 8,

|

| 209 |

+

"mdx_n_fft_scale_set": 7680,

|

| 210 |

+

"primary_stem": "Vocals"

|

| 211 |

+

},

|

| 212 |

+

"1d64a6d2c30f709b8c9b4ce1366d96ee": {

|

| 213 |

+

"compensate": 1.035,

|

| 214 |

+

"mdx_dim_f_set": 2048,

|

| 215 |

+

"mdx_dim_t_set": 8,

|

| 216 |

+

"mdx_n_fft_scale_set": 5120,

|

| 217 |

+

"primary_stem": "Instrumental"

|

| 218 |

+

},

|

| 219 |

+

"203f2a3955221b64df85a41af87cf8f0": {

|

| 220 |

+

"compensate": 1.035,

|

| 221 |

+

"mdx_dim_f_set": 3072,

|

| 222 |

+

"mdx_dim_t_set": 8,

|

| 223 |

+

"mdx_n_fft_scale_set": 6144,

|

| 224 |

+

"primary_stem": "Instrumental"

|

| 225 |

+

},

|

| 226 |

+

"291c2049608edb52648b96e27eb80e95": {

|

| 227 |

+

"compensate": 1.035,

|

| 228 |

+

"mdx_dim_f_set": 3072,

|

| 229 |

+

"mdx_dim_t_set": 8,

|

| 230 |

+

"mdx_n_fft_scale_set": 6144,

|

| 231 |

+

"primary_stem": "Instrumental"

|

| 232 |

+

},

|

| 233 |

+

"ead8d05dab12ec571d67549b3aab03fc": {

|

| 234 |

+

"compensate": 1.035,

|

| 235 |

+

"mdx_dim_f_set": 3072,

|

| 236 |

+

"mdx_dim_t_set": 8,

|

| 237 |

+

"mdx_n_fft_scale_set": 6144,

|

| 238 |

+

"primary_stem": "Instrumental"

|

| 239 |

+

},

|

| 240 |

+

"cc63408db3d80b4d85b0287d1d7c9632": {

|

| 241 |

+

"compensate": 1.033,

|

| 242 |

+

"mdx_dim_f_set": 3072,

|

| 243 |

+

"mdx_dim_t_set": 8,

|

| 244 |

+

"mdx_n_fft_scale_set": 6144,

|

| 245 |

+

"primary_stem": "Instrumental"

|

| 246 |

+

},

|

| 247 |

+

"cd5b2989ad863f116c855db1dfe24e39": {

|

| 248 |

+

"compensate": 1.035,

|

| 249 |

+

"mdx_dim_f_set": 3072,

|

| 250 |

+

"mdx_dim_t_set": 9,

|

| 251 |

+

"mdx_n_fft_scale_set": 6144,

|

| 252 |

+

"primary_stem": "Other"

|

| 253 |

+

},

|

| 254 |

+

"55657dd70583b0fedfba5f67df11d711": {

|

| 255 |

+

"compensate": 1.022,

|

| 256 |

+

"mdx_dim_f_set": 3072,

|

| 257 |

+

"mdx_dim_t_set": 8,

|

| 258 |

+

"mdx_n_fft_scale_set": 6144,

|

| 259 |

+

"primary_stem": "Instrumental"

|

| 260 |

+

},

|

| 261 |

+

"b6bccda408a436db8500083ef3491e8b": {

|

| 262 |

+

"compensate": 1.02,

|

| 263 |

+

"mdx_dim_f_set": 3072,

|

| 264 |

+

"mdx_dim_t_set": 8,

|

| 265 |

+

"mdx_n_fft_scale_set": 7680,

|

| 266 |

+

"primary_stem": "Instrumental"

|

| 267 |

+

},

|

| 268 |

+

"8a88db95c7fb5dbe6a095ff2ffb428b1": {

|

| 269 |

+

"compensate": 1.026,

|

| 270 |

+

"mdx_dim_f_set": 2048,

|

| 271 |

+

"mdx_dim_t_set": 8,

|

| 272 |

+

"mdx_n_fft_scale_set": 5120,

|

| 273 |

+

"primary_stem": "Instrumental"

|

| 274 |

+

},

|

| 275 |

+

"b78da4afc6512f98e4756f5977f5c6b9": {

|

| 276 |

+

"compensate": 1.021,

|

| 277 |

+

"mdx_dim_f_set": 3072,

|

| 278 |

+

"mdx_dim_t_set": 8,

|

| 279 |

+

"mdx_n_fft_scale_set": 7680,

|

| 280 |

+

"primary_stem": "Instrumental"

|

| 281 |

+

},

|

| 282 |

+

"77d07b2667ddf05b9e3175941b4454a0": {

|

| 283 |

+

"compensate": 1.021,

|

| 284 |

+

"mdx_dim_f_set": 3072,

|

| 285 |

+

"mdx_dim_t_set": 8,

|

| 286 |

+

"mdx_n_fft_scale_set": 7680,

|

| 287 |

+

"primary_stem": "Vocals"

|

| 288 |

+

},

|

| 289 |

+

"2154254ee89b2945b97a7efed6e88820": {

|

| 290 |

+

"config_yaml": "model_2_stem_061321.yaml"

|

| 291 |

+

},

|

| 292 |

+

"063aadd735d58150722926dcbf5852a9": {

|

| 293 |

+

"config_yaml": "model_2_stem_061321.yaml"

|

| 294 |

+

},

|

| 295 |

+

"fe96801369f6a148df2720f5ced88c19": {

|

| 296 |

+

"config_yaml": "model3.yaml"

|

| 297 |

+

},

|

| 298 |

+

"02e8b226f85fb566e5db894b9931c640": {

|

| 299 |

+

"config_yaml": "model2.yaml"

|

| 300 |

+

},

|

| 301 |

+

"e3de6d861635ab9c1d766149edd680d6": {

|

| 302 |

+

"config_yaml": "model1.yaml"

|

| 303 |

+

},

|

| 304 |

+

"3f2936c554ab73ce2e396d54636bd373": {

|

| 305 |

+

"config_yaml": "modelB.yaml"

|

| 306 |

+

},

|

| 307 |

+

"890d0f6f82d7574bca741a9e8bcb8168": {

|

| 308 |

+

"config_yaml": "modelB.yaml"

|

| 309 |

+

},

|

| 310 |

+

"63a3cb8c37c474681049be4ad1ba8815": {

|

| 311 |

+

"config_yaml": "modelB.yaml"

|

| 312 |

+

},

|

| 313 |

+

"a7fc5d719743c7fd6b61bd2b4d48b9f0": {

|

| 314 |

+

"config_yaml": "modelA.yaml"

|

| 315 |

+

},

|

| 316 |

+

"3567f3dee6e77bf366fcb1c7b8bc3745": {

|

| 317 |

+

"config_yaml": "modelA.yaml"

|

| 318 |

+

},

|

| 319 |

+

"a28f4d717bd0d34cd2ff7a3b0a3d065e": {

|

| 320 |

+

"config_yaml": "modelA.yaml"

|

| 321 |

+

},

|

| 322 |

+

"c9971a18da20911822593dc81caa8be9": {

|

| 323 |

+

"config_yaml": "sndfx.yaml"

|

| 324 |

+

},

|

| 325 |

+

"57d94d5ed705460d21c75a5ac829a605": {

|

| 326 |

+

"config_yaml": "sndfx.yaml"

|

| 327 |

+

},

|

| 328 |

+

"e7a25f8764f25a52c1b96c4946e66ba2": {

|

| 329 |

+

"config_yaml": "sndfx.yaml"

|

| 330 |

+

},

|

| 331 |

+

"104081d24e37217086ce5fde09147ee1": {

|

| 332 |

+

"config_yaml": "model_2_stem_061321.yaml"

|

| 333 |

+

},

|

| 334 |

+

"1e6165b601539f38d0a9330f3facffeb": {

|

| 335 |

+

"config_yaml": "model_2_stem_061321.yaml"

|

| 336 |

+

},

|

| 337 |

+

"fe0108464ce0d8271be5ab810891bd7c": {

|

| 338 |

+

"config_yaml": "model_2_stem_full_band.yaml"

|

| 339 |

+

}

|

| 340 |

+

}

|

no_ui.py

ADDED

|

@@ -0,0 +1,60 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# @title Generate | Output generated inside "AICoverGen\song_output\random_number"

|

| 2 |

+

# @markdown Main Option | You also can input audio path inside "SONG_INPUT"

|

| 3 |

+

SONG_INPUT = input("Enter Youtube URL: ") # @param {type:"string"}

|

| 4 |

+

RVC_DIRNAME = "Daemi" # @param {type:"string"}

|

| 5 |

+

PITCH_CHANGE = 0 # @param {type:"integer"}

|

| 6 |

+

PITCH_CHANGE_ALL = 0 # @param {type:"integer"}

|

| 7 |

+

# @markdown Voice Conversion Options

|

| 8 |

+

INDEX_RATE = 0.2 # @param {type:"number"}

|

| 9 |

+

FILTER_RADIUS = 3 # @param {type:"integer"}

|

| 10 |

+

PITCH_DETECTION_ALGO = "rmvpe" # @param ["rmvpe", "mangio-crepe"]

|

| 11 |

+

CREPE_HOP_LENGTH = 128 # @param {type:"integer"}

|

| 12 |

+

PROTECT = 0.33 # @param {type:"number"}

|

| 13 |

+

REMIX_MIX_RATE = 0.25 # @param {type:"number"}

|

| 14 |

+

# @markdown Audio Mixing Options

|

| 15 |

+

MAIN_VOL = 0 # @param {type:"integer"}

|

| 16 |

+

BACKUP_VOL = 0 # @param {type:"integer"}

|

| 17 |

+

INST_VOL = 0 # @param {type:"integer"}

|

| 18 |

+

# @markdown Reverb Control

|

| 19 |

+

REVERB_SIZE = 0.15 # @param {type:"number"}

|

| 20 |

+

REVERB_WETNESS = 0.2 # @param {type:"number"}

|

| 21 |

+

REVERB_DRYNESS = 0.8 # @param {type:"number"}

|

| 22 |

+

REVERB_DAMPING = 0.7 # @param {type:"number"}

|

| 23 |

+

# @markdown Output Format

|

| 24 |

+

OUTPUT_FORMAT = "wav" # @param ["mp3", "wav"]

|

| 25 |

+

|

| 26 |

+

import subprocess

|

| 27 |

+

|

| 28 |

+

command = [

|

| 29 |

+

"python",

|

| 30 |

+

"src/main.py",

|

| 31 |

+

"-i", SONG_INPUT,

|

| 32 |

+

"-dir", RVC_DIRNAME,

|

| 33 |

+

"-p", str(PITCH_CHANGE),

|

| 34 |

+

"-k",

|

| 35 |

+

"-ir", str(INDEX_RATE),

|

| 36 |

+

"-fr", str(FILTER_RADIUS),

|

| 37 |

+

"-rms", str(REMIX_MIX_RATE),

|

| 38 |

+

"-palgo", PITCH_DETECTION_ALGO,

|

| 39 |

+

"-hop", str(CREPE_HOP_LENGTH),

|

| 40 |

+

"-pro", str(PROTECT),

|

| 41 |

+

"-mv", str(MAIN_VOL),

|

| 42 |

+

"-bv", str(BACKUP_VOL),

|

| 43 |

+

"-iv", str(INST_VOL),

|

| 44 |

+

"-pall", str(PITCH_CHANGE_ALL),

|

| 45 |

+

"-rsize", str(REVERB_SIZE),

|

| 46 |

+

"-rwet", str(REVERB_WETNESS),

|

| 47 |

+

"-rdry", str(REVERB_DRYNESS),

|

| 48 |

+

"-rdamp", str(REVERB_DAMPING),

|

| 49 |

+

"-oformat", OUTPUT_FORMAT

|

| 50 |

+

]

|

| 51 |

+

|

| 52 |

+

# Open a subprocess and capture its output

|

| 53 |

+

process = subprocess.Popen(command, stdout=subprocess.PIPE, stderr=subprocess.STDOUT, universal_newlines=True)

|

| 54 |

+

|

| 55 |

+

# Print the output in real-time

|

| 56 |

+

for line in process.stdout:

|

| 57 |

+

print(line, end='')

|

| 58 |

+

|

| 59 |

+

# Wait for the process to finish

|

| 60 |

+

process.wait()

|

requirements.txt

ADDED

|

@@ -0,0 +1,23 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

deemix

|

| 2 |

+

fairseq==0.12.2

|

| 3 |

+

faiss-cpu==1.7.3

|

| 4 |

+

ffmpeg-python>=0.2.0

|

| 5 |

+

gradio==4.13.0

|

| 6 |

+

lib==4.0.0

|

| 7 |

+

librosa==0.9.1

|

| 8 |

+

numpy==1.23.5

|

| 9 |

+

onnxruntime_gpu

|

| 10 |

+

praat-parselmouth>=0.4.2

|

| 11 |

+

pedalboard==0.7.7

|

| 12 |

+

pydub==0.25.1

|

| 13 |

+

pyworld==0.3.4

|

| 14 |

+

Requests==2.31.0

|

| 15 |

+

scipy==1.11.1

|

| 16 |

+

soundfile==0.12.1

|

| 17 |

+

--find-links https://download.pytorch.org/whl/torch_stable.html

|

| 18 |

+

torch==2.0.1+cu118

|

| 19 |

+

torchcrepe==0.0.20

|

| 20 |

+

tqdm==4.65.0

|

| 21 |

+

yt_dlp==2023.7.6

|

| 22 |

+

sox==1.4.1

|

| 23 |

+

gdown==4.6.3

|

rvc_models/MODELS.txt

ADDED

|

@@ -0,0 +1,2 @@

|

|

|

|

|

|

|

|

|

|

| 1 |

+

RVC Models can be added as a folder here. Each folder should contain the model file (.pth extension), and an index file (.index extension).

|

| 2 |

+

For example, a folder called Maya, containing 2 files, Maya.pth and added_IVF1905_Flat_nprobe_Maya_v2.index.

|

rvc_models/public_models.json

ADDED

|

@@ -0,0 +1,626 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|