Spaces:

Paused

Paused

Commit

•

9e548ce

1

Parent(s):

9d72f44

Upload 45 files

Browse files- .gitattributes +1 -0

- whisper/.flake8 +4 -0

- whisper/.gitattributes +3 -0

- whisper/.github/workflows/python-publish.yml +37 -0

- whisper/.github/workflows/test.yml +56 -0

- whisper/.gitignore +11 -0

- whisper/.pre-commit-config.yaml +28 -0

- whisper/CHANGELOG.md +69 -0

- whisper/LICENSE +21 -0

- whisper/MANIFEST.in +5 -0

- whisper/README.md +147 -0

- whisper/approach.png +0 -0

- whisper/data/README.md +118 -0

- whisper/data/meanwhile.json +322 -0

- whisper/language-breakdown.svg +0 -0

- whisper/model-card.md +69 -0

- whisper/notebooks/LibriSpeech.ipynb +958 -0

- whisper/notebooks/Multilingual_ASR.ipynb +0 -0

- whisper/pyproject.toml +8 -0

- whisper/requirements.txt +6 -0

- whisper/setup.py +43 -0

- whisper/tests/conftest.py +14 -0

- whisper/tests/jfk.flac +3 -0

- whisper/tests/test_audio.py +19 -0

- whisper/tests/test_normalizer.py +96 -0

- whisper/tests/test_timing.py +96 -0

- whisper/tests/test_tokenizer.py +24 -0

- whisper/tests/test_transcribe.py +42 -0

- whisper/whisper/__init__.py +154 -0

- whisper/whisper/__main__.py +3 -0

- whisper/whisper/assets/gpt2.tiktoken +0 -0

- whisper/whisper/assets/mel_filters.npz +3 -0

- whisper/whisper/assets/multilingual.tiktoken +0 -0

- whisper/whisper/audio.py +157 -0

- whisper/whisper/decoding.py +821 -0

- whisper/whisper/model.py +309 -0

- whisper/whisper/normalizers/__init__.py +2 -0

- whisper/whisper/normalizers/basic.py +76 -0

- whisper/whisper/normalizers/english.json +1741 -0

- whisper/whisper/normalizers/english.py +550 -0

- whisper/whisper/timing.py +385 -0

- whisper/whisper/tokenizer.py +386 -0

- whisper/whisper/transcribe.py +461 -0

- whisper/whisper/triton_ops.py +109 -0

- whisper/whisper/utils.py +258 -0

- whisper/whisper/version.py +1 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

whisper/tests/jfk.flac filter=lfs diff=lfs merge=lfs -text

|

whisper/.flake8

ADDED

|

@@ -0,0 +1,4 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

[flake8]

|

| 2 |

+

per-file-ignores =

|

| 3 |

+

*/__init__.py: F401

|

| 4 |

+

|

whisper/.gitattributes

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Override jupyter in Github language stats for more accurate estimate of repo code languages

|

| 2 |

+

# reference: https://github.com/github/linguist/blob/master/docs/overrides.md#generated-code

|

| 3 |

+

*.ipynb linguist-generated

|

whisper/.github/workflows/python-publish.yml

ADDED

|

@@ -0,0 +1,37 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

name: Release

|

| 2 |

+

|

| 3 |

+

on:

|

| 4 |

+

push:

|

| 5 |

+

branches:

|

| 6 |

+

- main

|

| 7 |

+

jobs:

|

| 8 |

+

deploy:

|

| 9 |

+

runs-on: ubuntu-latest

|

| 10 |

+

steps:

|

| 11 |

+

- uses: actions/checkout@v3

|

| 12 |

+

- uses: actions-ecosystem/action-regex-match@v2

|

| 13 |

+

id: regex-match

|

| 14 |

+

with:

|

| 15 |

+

text: ${{ github.event.head_commit.message }}

|

| 16 |

+

regex: '^Release ([^ ]+)'

|

| 17 |

+

- name: Set up Python

|

| 18 |

+

uses: actions/setup-python@v4

|

| 19 |

+

with:

|

| 20 |

+

python-version: '3.8'

|

| 21 |

+

- name: Install dependencies

|

| 22 |

+

run: |

|

| 23 |

+

python -m pip install --upgrade pip

|

| 24 |

+

pip install setuptools wheel twine

|

| 25 |

+

- name: Release

|

| 26 |

+

if: ${{ steps.regex-match.outputs.match != '' }}

|

| 27 |

+

uses: softprops/action-gh-release@v1

|

| 28 |

+

with:

|

| 29 |

+

tag_name: v${{ steps.regex-match.outputs.group1 }}

|

| 30 |

+

- name: Build and publish

|

| 31 |

+

if: ${{ steps.regex-match.outputs.match != '' }}

|

| 32 |

+

env:

|

| 33 |

+

TWINE_USERNAME: __token__

|

| 34 |

+

TWINE_PASSWORD: ${{ secrets.PYPI_API_TOKEN }}

|

| 35 |

+

run: |

|

| 36 |

+

python setup.py sdist

|

| 37 |

+

twine upload dist/*

|

whisper/.github/workflows/test.yml

ADDED

|

@@ -0,0 +1,56 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

name: test

|

| 2 |

+

on:

|

| 3 |

+

push:

|

| 4 |

+

branches:

|

| 5 |

+

- main

|

| 6 |

+

pull_request:

|

| 7 |

+

branches:

|

| 8 |

+

- main

|

| 9 |

+

|

| 10 |

+

jobs:

|

| 11 |

+

pre-commit:

|

| 12 |

+

runs-on: ubuntu-latest

|

| 13 |

+

steps:

|

| 14 |

+

- uses: actions/checkout@v3

|

| 15 |

+

- name: Fetch base branch

|

| 16 |

+

run: git fetch origin ${{ github.base_ref }}

|

| 17 |

+

- uses: actions/setup-python@v4

|

| 18 |

+

with:

|

| 19 |

+

python-version: "3.8"

|

| 20 |

+

architecture: x64

|

| 21 |

+

- name: Get pip cache dir

|

| 22 |

+

id: pip-cache

|

| 23 |

+

run: |

|

| 24 |

+

echo "dir=$(pip cache dir)" >> $GITHUB_OUTPUT

|

| 25 |

+

- name: pip/pre-commit cache

|

| 26 |

+

uses: actions/cache@v3

|

| 27 |

+

with:

|

| 28 |

+

path: |

|

| 29 |

+

${{ steps.pip-cache.outputs.dir }}

|

| 30 |

+

~/.cache/pre-commit

|

| 31 |

+

key: ${{ runner.os }}-pip-pre-commit-${{ hashFiles('**/.pre-commit-config.yaml') }}

|

| 32 |

+

restore-keys: |

|

| 33 |

+

${{ runner.os }}-pip-pre-commit

|

| 34 |

+

- name: pre-commit

|

| 35 |

+

run: |

|

| 36 |

+

pip install -U pre-commit

|

| 37 |

+

pre-commit install --install-hooks

|

| 38 |

+

pre-commit run --all-files

|

| 39 |

+

whisper-test:

|

| 40 |

+

needs: pre-commit

|

| 41 |

+

runs-on: ubuntu-latest

|

| 42 |

+

strategy:

|

| 43 |

+

matrix:

|

| 44 |

+

python-version: ['3.8', '3.9', '3.10', '3.11']

|

| 45 |

+

pytorch-version: [1.13.1, 2.0.0]

|

| 46 |

+

exclude:

|

| 47 |

+

- python-version: '3.11'

|

| 48 |

+

pytorch-version: 1.13.1

|

| 49 |

+

steps:

|

| 50 |

+

- uses: conda-incubator/setup-miniconda@v2

|

| 51 |

+

- run: conda install -n test ffmpeg python=${{ matrix.python-version }}

|

| 52 |

+

- run: pip3 install torch==${{ matrix.pytorch-version }}+cpu --index-url https://download.pytorch.org/whl/cpu

|

| 53 |

+

- uses: actions/checkout@v3

|

| 54 |

+

- run: echo "$CONDA/envs/test/bin" >> $GITHUB_PATH

|

| 55 |

+

- run: pip install .["dev"]

|

| 56 |

+

- run: pytest --durations=0 -vv -k 'not test_transcribe or test_transcribe[tiny] or test_transcribe[tiny.en]' -m 'not requires_cuda'

|

whisper/.gitignore

ADDED

|

@@ -0,0 +1,11 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

__pycache__/

|

| 2 |

+

*.py[cod]

|

| 3 |

+

*$py.class

|

| 4 |

+

*.egg-info

|

| 5 |

+

.pytest_cache

|

| 6 |

+

.ipynb_checkpoints

|

| 7 |

+

|

| 8 |

+

thumbs.db

|

| 9 |

+

.DS_Store

|

| 10 |

+

.idea

|

| 11 |

+

|

whisper/.pre-commit-config.yaml

ADDED

|

@@ -0,0 +1,28 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

repos:

|

| 2 |

+

- repo: https://github.com/pre-commit/pre-commit-hooks

|

| 3 |

+

rev: v4.0.1

|

| 4 |

+

hooks:

|

| 5 |

+

- id: check-json

|

| 6 |

+

- id: end-of-file-fixer

|

| 7 |

+

types: [file, python]

|

| 8 |

+

- id: trailing-whitespace

|

| 9 |

+

types: [file, python]

|

| 10 |

+

- id: mixed-line-ending

|

| 11 |

+

- id: check-added-large-files

|

| 12 |

+

args: [--maxkb=4096]

|

| 13 |

+

- repo: https://github.com/psf/black

|

| 14 |

+

rev: 23.7.0

|

| 15 |

+

hooks:

|

| 16 |

+

- id: black

|

| 17 |

+

- repo: https://github.com/pycqa/isort

|

| 18 |

+

rev: 5.12.0

|

| 19 |

+

hooks:

|

| 20 |

+

- id: isort

|

| 21 |

+

name: isort (python)

|

| 22 |

+

args: ["--profile", "black", "-l", "88", "--trailing-comma", "--multi-line", "3"]

|

| 23 |

+

- repo: https://github.com/pycqa/flake8.git

|

| 24 |

+

rev: 6.0.0

|

| 25 |

+

hooks:

|

| 26 |

+

- id: flake8

|

| 27 |

+

types: [python]

|

| 28 |

+

args: ["--max-line-length", "88", "--ignore", "E203,E501,W503,W504"]

|

whisper/CHANGELOG.md

ADDED

|

@@ -0,0 +1,69 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# CHANGELOG

|

| 2 |

+

|

| 3 |

+

## [v20230918](https://github.com/openai/whisper/releases/tag/v20230918)

|

| 4 |

+

|

| 5 |

+

* Add .pre-commit-config.yaml ([#1528](https://github.com/openai/whisper/pull/1528))

|

| 6 |

+

* fix doc of TextDecoder ([#1526](https://github.com/openai/whisper/pull/1526))

|

| 7 |

+

* Update model-card.md ([#1643](https://github.com/openai/whisper/pull/1643))

|

| 8 |

+

* word timing tweaks ([#1559](https://github.com/openai/whisper/pull/1559))

|

| 9 |

+

* Avoid rearranging all caches ([#1483](https://github.com/openai/whisper/pull/1483))

|

| 10 |

+

* Improve timestamp heuristics. ([#1461](https://github.com/openai/whisper/pull/1461))

|

| 11 |

+

* fix condition_on_previous_text ([#1224](https://github.com/openai/whisper/pull/1224))

|

| 12 |

+

* Fix numba depreceation notice ([#1233](https://github.com/openai/whisper/pull/1233))

|

| 13 |

+

* Updated README.md to provide more insight on BLEU and specific appendices ([#1236](https://github.com/openai/whisper/pull/1236))

|

| 14 |

+

* Avoid computing higher temperatures on no_speech segments ([#1279](https://github.com/openai/whisper/pull/1279))

|

| 15 |

+

* Dropped unused execute bit from mel_filters.npz. ([#1254](https://github.com/openai/whisper/pull/1254))

|

| 16 |

+

* Drop ffmpeg-python dependency and call ffmpeg directly. ([#1242](https://github.com/openai/whisper/pull/1242))

|

| 17 |

+

* Python 3.11 ([#1171](https://github.com/openai/whisper/pull/1171))

|

| 18 |

+

* Update decoding.py ([#1219](https://github.com/openai/whisper/pull/1219))

|

| 19 |

+

* Update decoding.py ([#1155](https://github.com/openai/whisper/pull/1155))

|

| 20 |

+

* Update README.md to reference tiktoken ([#1105](https://github.com/openai/whisper/pull/1105))

|

| 21 |

+

* Implement max line width and max line count, and make word highlighting optional ([#1184](https://github.com/openai/whisper/pull/1184))

|

| 22 |

+

* Squash long words at window and sentence boundaries. ([#1114](https://github.com/openai/whisper/pull/1114))

|

| 23 |

+

* python-publish.yml: bump actions version to fix node warning ([#1211](https://github.com/openai/whisper/pull/1211))

|

| 24 |

+

* Update tokenizer.py ([#1163](https://github.com/openai/whisper/pull/1163))

|

| 25 |

+

|

| 26 |

+

## [v20230314](https://github.com/openai/whisper/releases/tag/v20230314)

|

| 27 |

+

|

| 28 |

+

* abort find_alignment on empty input ([#1090](https://github.com/openai/whisper/pull/1090))

|

| 29 |

+

* Fix truncated words list when the replacement character is decoded ([#1089](https://github.com/openai/whisper/pull/1089))

|

| 30 |

+

* fix github language stats getting dominated by jupyter notebook ([#1076](https://github.com/openai/whisper/pull/1076))

|

| 31 |

+

* Fix alignment between the segments and the list of words ([#1087](https://github.com/openai/whisper/pull/1087))

|

| 32 |

+

* Use tiktoken ([#1044](https://github.com/openai/whisper/pull/1044))

|

| 33 |

+

|

| 34 |

+

## [v20230308](https://github.com/openai/whisper/releases/tag/v20230308)

|

| 35 |

+

|

| 36 |

+

* kwargs in decode() for convenience ([#1061](https://github.com/openai/whisper/pull/1061))

|

| 37 |

+

* fix all_tokens handling that caused more repetitions and discrepancy in JSON ([#1060](https://github.com/openai/whisper/pull/1060))

|

| 38 |

+

* fix typo in CHANGELOG.md

|

| 39 |

+

|

| 40 |

+

## [v20230307](https://github.com/openai/whisper/releases/tag/v20230307)

|

| 41 |

+

|

| 42 |

+

* Fix the repetition/hallucination issue identified in #1046 ([#1052](https://github.com/openai/whisper/pull/1052))

|

| 43 |

+

* Use triton==2.0.0 ([#1053](https://github.com/openai/whisper/pull/1053))

|

| 44 |

+

* Install triton in x86_64 linux only ([#1051](https://github.com/openai/whisper/pull/1051))

|

| 45 |

+

* update setup.py to specify python >= 3.8 requirement

|

| 46 |

+

|

| 47 |

+

## [v20230306](https://github.com/openai/whisper/releases/tag/v20230306)

|

| 48 |

+

|

| 49 |

+

* remove auxiliary audio extension ([#1021](https://github.com/openai/whisper/pull/1021))

|

| 50 |

+

* apply formatting with `black`, `isort`, and `flake8` ([#1038](https://github.com/openai/whisper/pull/1038))

|

| 51 |

+

* word-level timestamps in `transcribe()` ([#869](https://github.com/openai/whisper/pull/869))

|

| 52 |

+

* Decoding improvements ([#1033](https://github.com/openai/whisper/pull/1033))

|

| 53 |

+

* Update README.md ([#894](https://github.com/openai/whisper/pull/894))

|

| 54 |

+

* Fix infinite loop caused by incorrect timestamp tokens prediction ([#914](https://github.com/openai/whisper/pull/914))

|

| 55 |

+

* drop python 3.7 support ([#889](https://github.com/openai/whisper/pull/889))

|

| 56 |

+

|

| 57 |

+

## [v20230124](https://github.com/openai/whisper/releases/tag/v20230124)

|

| 58 |

+

|

| 59 |

+

* handle printing even if sys.stdout.buffer is not available ([#887](https://github.com/openai/whisper/pull/887))

|

| 60 |

+

* Add TSV formatted output in transcript, using integer start/end time in milliseconds ([#228](https://github.com/openai/whisper/pull/228))

|

| 61 |

+

* Added `--output_format` option ([#333](https://github.com/openai/whisper/pull/333))

|

| 62 |

+

* Handle `XDG_CACHE_HOME` properly for `download_root` ([#864](https://github.com/openai/whisper/pull/864))

|

| 63 |

+

* use stdout for printing transcription progress ([#867](https://github.com/openai/whisper/pull/867))

|

| 64 |

+

* Fix bug where mm is mistakenly replaced with hmm in e.g. 20mm ([#659](https://github.com/openai/whisper/pull/659))

|

| 65 |

+

* print '?' if a letter can't be encoded using the system default encoding ([#859](https://github.com/openai/whisper/pull/859))

|

| 66 |

+

|

| 67 |

+

## [v20230117](https://github.com/openai/whisper/releases/tag/v20230117)

|

| 68 |

+

|

| 69 |

+

The first versioned release available on [PyPI](https://pypi.org/project/openai-whisper/)

|

whisper/LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2022 OpenAI

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

whisper/MANIFEST.in

ADDED

|

@@ -0,0 +1,5 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

include requirements.txt

|

| 2 |

+

include README.md

|

| 3 |

+

include LICENSE

|

| 4 |

+

include whisper/assets/*

|

| 5 |

+

include whisper/normalizers/english.json

|

whisper/README.md

ADDED

|

@@ -0,0 +1,147 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Whisper

|

| 2 |

+

|

| 3 |

+

[[Blog]](https://openai.com/blog/whisper)

|

| 4 |

+

[[Paper]](https://arxiv.org/abs/2212.04356)

|

| 5 |

+

[[Model card]](https://github.com/openai/whisper/blob/main/model-card.md)

|

| 6 |

+

[[Colab example]](https://colab.research.google.com/github/openai/whisper/blob/master/notebooks/LibriSpeech.ipynb)

|

| 7 |

+

|

| 8 |

+

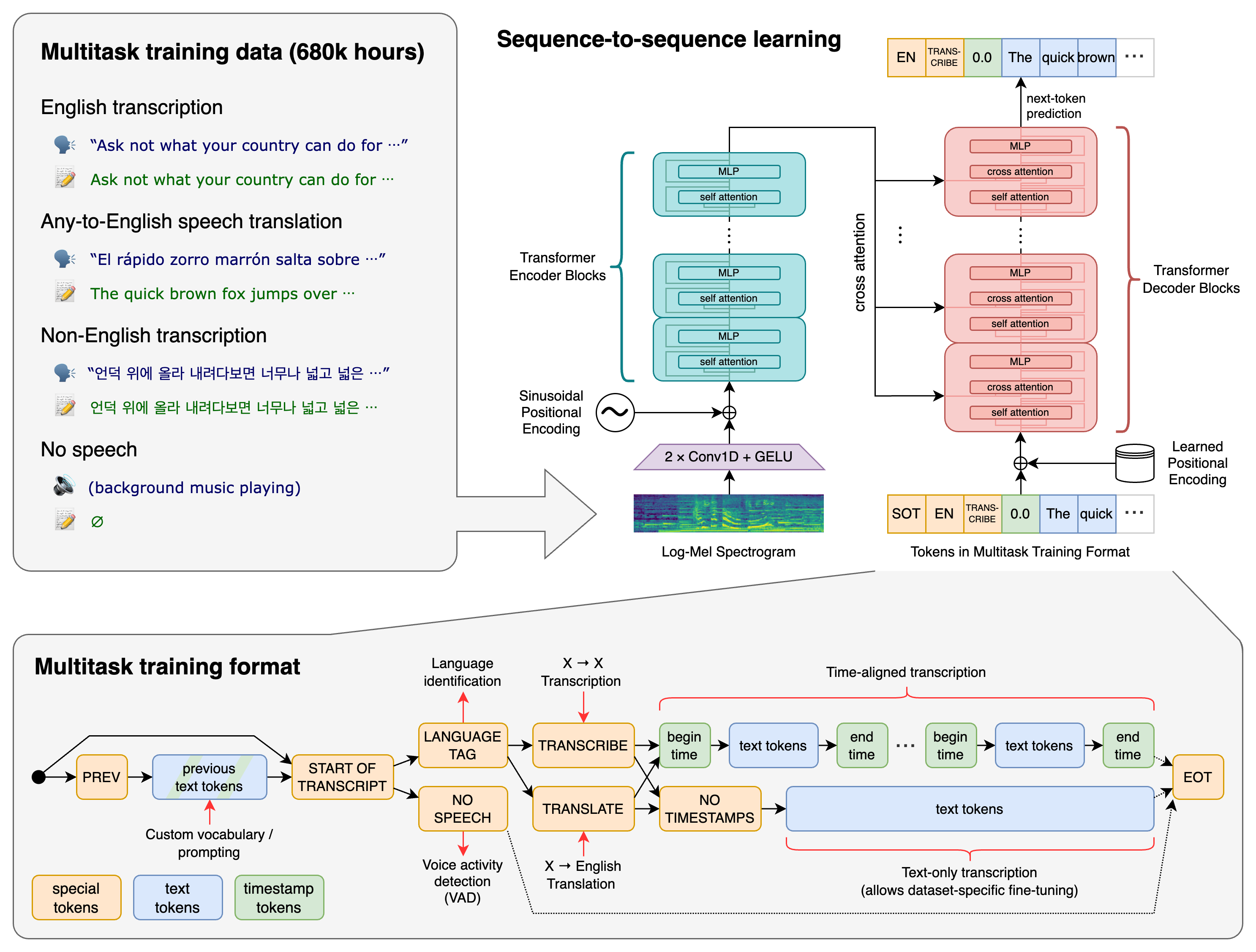

Whisper is a general-purpose speech recognition model. It is trained on a large dataset of diverse audio and is also a multitasking model that can perform multilingual speech recognition, speech translation, and language identification.

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

## Approach

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

A Transformer sequence-to-sequence model is trained on various speech processing tasks, including multilingual speech recognition, speech translation, spoken language identification, and voice activity detection. These tasks are jointly represented as a sequence of tokens to be predicted by the decoder, allowing a single model to replace many stages of a traditional speech-processing pipeline. The multitask training format uses a set of special tokens that serve as task specifiers or classification targets.

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

## Setup

|

| 19 |

+

|

| 20 |

+

We used Python 3.9.9 and [PyTorch](https://pytorch.org/) 1.10.1 to train and test our models, but the codebase is expected to be compatible with Python 3.8-3.11 and recent PyTorch versions. The codebase also depends on a few Python packages, most notably [OpenAI's tiktoken](https://github.com/openai/tiktoken) for their fast tokenizer implementation. You can download and install (or update to) the latest release of Whisper with the following command:

|

| 21 |

+

|

| 22 |

+

pip install -U openai-whisper

|

| 23 |

+

|

| 24 |

+

Alternatively, the following command will pull and install the latest commit from this repository, along with its Python dependencies:

|

| 25 |

+

|

| 26 |

+

pip install git+https://github.com/openai/whisper.git

|

| 27 |

+

|

| 28 |

+

To update the package to the latest version of this repository, please run:

|

| 29 |

+

|

| 30 |

+

pip install --upgrade --no-deps --force-reinstall git+https://github.com/openai/whisper.git

|

| 31 |

+

|

| 32 |

+

It also requires the command-line tool [`ffmpeg`](https://ffmpeg.org/) to be installed on your system, which is available from most package managers:

|

| 33 |

+

|

| 34 |

+

```bash

|

| 35 |

+

# on Ubuntu or Debian

|

| 36 |

+

sudo apt update && sudo apt install ffmpeg

|

| 37 |

+

|

| 38 |

+

# on Arch Linux

|

| 39 |

+

sudo pacman -S ffmpeg

|

| 40 |

+

|

| 41 |

+

# on MacOS using Homebrew (https://brew.sh/)

|

| 42 |

+

brew install ffmpeg

|

| 43 |

+

|

| 44 |

+

# on Windows using Chocolatey (https://chocolatey.org/)

|

| 45 |

+

choco install ffmpeg

|

| 46 |

+

|

| 47 |

+

# on Windows using Scoop (https://scoop.sh/)

|

| 48 |

+

scoop install ffmpeg

|

| 49 |

+

```

|

| 50 |

+

|

| 51 |

+

You may need [`rust`](http://rust-lang.org) installed as well, in case [tiktoken](https://github.com/openai/tiktoken) does not provide a pre-built wheel for your platform. If you see installation errors during the `pip install` command above, please follow the [Getting started page](https://www.rust-lang.org/learn/get-started) to install Rust development environment. Additionally, you may need to configure the `PATH` environment variable, e.g. `export PATH="$HOME/.cargo/bin:$PATH"`. If the installation fails with `No module named 'setuptools_rust'`, you need to install `setuptools_rust`, e.g. by running:

|

| 52 |

+

|

| 53 |

+

```bash

|

| 54 |

+

pip install setuptools-rust

|

| 55 |

+

```

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

## Available models and languages

|

| 59 |

+

|

| 60 |

+

There are five model sizes, four with English-only versions, offering speed and accuracy tradeoffs. Below are the names of the available models and their approximate memory requirements and relative speed.

|

| 61 |

+

|

| 62 |

+

|

| 63 |

+

| Size | Parameters | English-only model | Multilingual model | Required VRAM | Relative speed |

|

| 64 |

+

|:------:|:----------:|:------------------:|:------------------:|:-------------:|:--------------:|

|

| 65 |

+

| tiny | 39 M | `tiny.en` | `tiny` | ~1 GB | ~32x |

|

| 66 |

+

| base | 74 M | `base.en` | `base` | ~1 GB | ~16x |

|

| 67 |

+

| small | 244 M | `small.en` | `small` | ~2 GB | ~6x |

|

| 68 |

+

| medium | 769 M | `medium.en` | `medium` | ~5 GB | ~2x |

|

| 69 |

+

| large | 1550 M | N/A | `large` | ~10 GB | 1x |

|

| 70 |

+

|

| 71 |

+

The `.en` models for English-only applications tend to perform better, especially for the `tiny.en` and `base.en` models. We observed that the difference becomes less significant for the `small.en` and `medium.en` models.

|

| 72 |

+

|

| 73 |

+

Whisper's performance varies widely depending on the language. The figure below shows a WER (Word Error Rate) breakdown by languages of the Fleurs dataset using the `large-v2` model (The smaller the numbers, the better the performance). Additional WER scores corresponding to the other models and datasets can be found in Appendix D.1, D.2, and D.4. Meanwhile, more BLEU (Bilingual Evaluation Understudy) scores can be found in Appendix D.3. Both are found in [the paper](https://arxiv.org/abs/2212.04356).

|

| 74 |

+

|

| 75 |

+

|

| 76 |

+

|

| 77 |

+

|

| 78 |

+

|

| 79 |

+

## Command-line usage

|

| 80 |

+

|

| 81 |

+

The following command will transcribe speech in audio files, using the `medium` model:

|

| 82 |

+

|

| 83 |

+

whisper audio.flac audio.mp3 audio.wav --model medium

|

| 84 |

+

|

| 85 |

+

The default setting (which selects the `small` model) works well for transcribing English. To transcribe an audio file containing non-English speech, you can specify the language using the `--language` option:

|

| 86 |

+

|

| 87 |

+

whisper japanese.wav --language Japanese

|

| 88 |

+

|

| 89 |

+

Adding `--task translate` will translate the speech into English:

|

| 90 |

+

|

| 91 |

+

whisper japanese.wav --language Japanese --task translate

|

| 92 |

+

|

| 93 |

+

Run the following to view all available options:

|

| 94 |

+

|

| 95 |

+

whisper --help

|

| 96 |

+

|

| 97 |

+

See [tokenizer.py](https://github.com/openai/whisper/blob/main/whisper/tokenizer.py) for the list of all available languages.

|

| 98 |

+

|

| 99 |

+

|

| 100 |

+

## Python usage

|

| 101 |

+

|

| 102 |

+

Transcription can also be performed within Python:

|

| 103 |

+

|

| 104 |

+

```python

|

| 105 |

+

import whisper

|

| 106 |

+

|

| 107 |

+

model = whisper.load_model("base")

|

| 108 |

+

result = model.transcribe("audio.mp3")

|

| 109 |

+

print(result["text"])

|

| 110 |

+

```

|

| 111 |

+

|

| 112 |

+

Internally, the `transcribe()` method reads the entire file and processes the audio with a sliding 30-second window, performing autoregressive sequence-to-sequence predictions on each window.

|

| 113 |

+

|

| 114 |

+

Below is an example usage of `whisper.detect_language()` and `whisper.decode()` which provide lower-level access to the model.

|

| 115 |

+

|

| 116 |

+

```python

|

| 117 |

+

import whisper

|

| 118 |

+

|

| 119 |

+

model = whisper.load_model("base")

|

| 120 |

+

|

| 121 |

+

# load audio and pad/trim it to fit 30 seconds

|

| 122 |

+

audio = whisper.load_audio("audio.mp3")

|

| 123 |

+

audio = whisper.pad_or_trim(audio)

|

| 124 |

+

|

| 125 |

+

# make log-Mel spectrogram and move to the same device as the model

|

| 126 |

+

mel = whisper.log_mel_spectrogram(audio).to(model.device)

|

| 127 |

+

|

| 128 |

+

# detect the spoken language

|

| 129 |

+

_, probs = model.detect_language(mel)

|

| 130 |

+

print(f"Detected language: {max(probs, key=probs.get)}")

|

| 131 |

+

|

| 132 |

+

# decode the audio

|

| 133 |

+

options = whisper.DecodingOptions()

|

| 134 |

+

result = whisper.decode(model, mel, options)

|

| 135 |

+

|

| 136 |

+

# print the recognized text

|

| 137 |

+

print(result.text)

|

| 138 |

+

```

|

| 139 |

+

|

| 140 |

+

## More examples

|

| 141 |

+

|

| 142 |

+

Please use the [🙌 Show and tell](https://github.com/openai/whisper/discussions/categories/show-and-tell) category in Discussions for sharing more example usages of Whisper and third-party extensions such as web demos, integrations with other tools, ports for different platforms, etc.

|

| 143 |

+

|

| 144 |

+

|

| 145 |

+

## License

|

| 146 |

+

|

| 147 |

+

Whisper's code and model weights are released under the MIT License. See [LICENSE](https://github.com/openai/whisper/blob/main/LICENSE) for further details.

|

whisper/approach.png

ADDED

|

whisper/data/README.md

ADDED

|

@@ -0,0 +1,118 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

This directory supplements the paper with more details on how we prepared the data for evaluation, to help replicate our experiments.

|

| 2 |

+

|

| 3 |

+

## Short-form English-only datasets

|

| 4 |

+

|

| 5 |

+

### LibriSpeech

|

| 6 |

+

|

| 7 |

+

We used the test-clean and test-other splits from the [LibriSpeech ASR corpus](https://www.openslr.org/12).

|

| 8 |

+

|

| 9 |

+

### TED-LIUM 3

|

| 10 |

+

|

| 11 |

+

We used the test split of [TED-LIUM Release 3](https://www.openslr.org/51/), using the segmented manual transcripts included in the release.

|

| 12 |

+

|

| 13 |

+

### Common Voice 5.1

|

| 14 |

+

|

| 15 |

+

We downloaded the English subset of Common Voice Corpus 5.1 from [the official website](https://commonvoice.mozilla.org/en/datasets)

|

| 16 |

+

|

| 17 |

+

### Artie

|

| 18 |

+

|

| 19 |

+

We used the [Artie bias corpus](https://github.com/artie-inc/artie-bias-corpus). This is a subset of the Common Voice dataset.

|

| 20 |

+

|

| 21 |

+

### CallHome & Switchboard

|

| 22 |

+

|

| 23 |

+

We used the two corpora from [LDC2002S09](https://catalog.ldc.upenn.edu/LDC2002S09) and [LDC2002T43](https://catalog.ldc.upenn.edu/LDC2002T43) and followed the [eval2000_data_prep.sh](https://github.com/kaldi-asr/kaldi/blob/master/egs/fisher_swbd/s5/local/eval2000_data_prep.sh) script for preprocessing. The `wav.scp` files can be converted to WAV files with the following bash commands:

|

| 24 |

+

|

| 25 |

+

```bash

|

| 26 |

+

mkdir -p wav

|

| 27 |

+

while read name cmd; do

|

| 28 |

+

echo $name

|

| 29 |

+

echo ${cmd/\|/} wav/$name.wav | bash

|

| 30 |

+

done < wav.scp

|

| 31 |

+

```

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

### WSJ

|

| 35 |

+

|

| 36 |

+

We used [LDC93S6B](https://catalog.ldc.upenn.edu/LDC93S6B) and [LDC94S13B](https://catalog.ldc.upenn.edu/LDC94S13B) and followed the [s5 recipe](https://github.com/kaldi-asr/kaldi/tree/master/egs/wsj/s5) to preprocess the dataset.

|

| 37 |

+

|

| 38 |

+

### CORAAL

|

| 39 |

+

|

| 40 |

+

We used the 231 interviews from [CORAAL (v. 2021.07)](https://oraal.uoregon.edu/coraal) and used the segmentations from [the FairSpeech project](https://github.com/stanford-policylab/asr-disparities/blob/master/input/CORAAL_transcripts.csv).

|

| 41 |

+

|

| 42 |

+

### CHiME-6

|

| 43 |

+

|

| 44 |

+

We downloaded the [CHiME-5 dataset](https://spandh.dcs.shef.ac.uk//chime_challenge/CHiME5/download.html) and followed the stage 0 of the [s5_track1 recipe](https://github.com/kaldi-asr/kaldi/tree/master/egs/chime6/s5_track1) to create the CHiME-6 dataset which fixes synchronization. We then used the binaural recordings (`*_P??.wav`) and the corresponding transcripts.

|

| 45 |

+

|

| 46 |

+

### AMI-IHM, AMI-SDM1

|

| 47 |

+

|

| 48 |

+

We preprocessed the [AMI Corpus](https://groups.inf.ed.ac.uk/ami/corpus/overview.shtml) by following the stage 0 ad 2 of the [s5b recipe](https://github.com/kaldi-asr/kaldi/tree/master/egs/ami/s5b).

|

| 49 |

+

|

| 50 |

+

|

| 51 |

+

## Long-form English-only datasets

|

| 52 |

+

|

| 53 |

+

### TED-LIUM 3

|

| 54 |

+

|

| 55 |

+

To create a long-form transcription dataset from the [TED-LIUM3](https://www.openslr.org/51/) dataset, we sliced the audio between the beginning of the first labeled segment and the end of the last labeled segment of each talk, and we used the concatenated text as the label. Below are the timestamps used for slicing each of the 11 TED talks in the test split.

|

| 56 |

+

|

| 57 |

+

| Filename | Begin time (s) | End time (s) |

|

| 58 |

+

|---------------------|----------------|--------------|

|

| 59 |

+

| DanBarber_2010 | 16.09 | 1116.24 |

|

| 60 |

+

| JaneMcGonigal_2010 | 15.476 | 1187.61 |

|

| 61 |

+

| BillGates_2010 | 15.861 | 1656.94 |

|

| 62 |

+

| TomWujec_2010U | 16.26 | 402.17 |

|

| 63 |

+

| GaryFlake_2010 | 16.06 | 367.14 |

|

| 64 |

+

| EricMead_2009P | 18.434 | 536.44 |

|

| 65 |

+

| MichaelSpecter_2010 | 16.11 | 979.312 |

|

| 66 |

+

| DanielKahneman_2010 | 15.8 | 1199.44 |

|

| 67 |

+

| AimeeMullins_2009P | 17.82 | 1296.59 |

|

| 68 |

+

| JamesCameron_2010 | 16.75 | 1010.65 |

|

| 69 |

+

| RobertGupta_2010U | 16.8 | 387.03 |

|

| 70 |

+

|

| 71 |

+

### Meanwhile

|

| 72 |

+

|

| 73 |

+

This dataset consists of 64 segments from The Late Show with Stephen Colbert. The YouTube video ID, start and end timestamps, and the labels can be found in [meanwhile.json](meanwhile.json). The labels are collected from the closed-caption data for each video and corrected with manual inspection.

|

| 74 |

+

|

| 75 |

+

### Rev16

|

| 76 |

+

|

| 77 |

+

We use a subset of 16 files from the 30 podcast episodes in [Rev.AI's Podcast Transcription Benchmark](https://www.rev.ai/blog/podcast-transcription-benchmark-part-1/), after finding that there are multiple cases where a significant portion of the audio and the labels did not match, mostly on the parts introducing the sponsors. We selected 16 episodes that do not have this error, whose "file number" are:

|

| 78 |

+

|

| 79 |

+

3 4 9 10 11 14 17 18 20 21 23 24 26 27 29 32

|

| 80 |

+

|

| 81 |

+

### Kincaid46

|

| 82 |

+

|

| 83 |

+

This dataset consists of 46 audio files and the corresponding transcripts compiled in the blog article [Which automatic transcription service is the most accurate - 2018](https://medium.com/descript/which-automatic-transcription-service-is-the-most-accurate-2018-2e859b23ed19) by Jason Kincaid. We used the 46 audio files and reference transcripts from the Airtable widget in the article.

|

| 84 |

+

|

| 85 |

+

For the human transcription benchmark in the paper, we use a subset of 25 examples from this data, whose "Ref ID" are:

|

| 86 |

+

|

| 87 |

+

2 4 5 8 9 10 12 13 14 16 19 21 23 25 26 28 29 30 33 35 36 37 42 43 45

|

| 88 |

+

|

| 89 |

+

### Earnings-21, Earnings-22

|

| 90 |

+

|

| 91 |

+

For these datasets, we used the files available in [the speech-datasets repository](https://github.com/revdotcom/speech-datasets), as of their `202206` version.

|

| 92 |

+

|

| 93 |

+

### CORAAL

|

| 94 |

+

|

| 95 |

+

We used the 231 interviews from [CORAAL (v. 2021.07)](https://oraal.uoregon.edu/coraal) and used the full-length interview files and transcripts.

|

| 96 |

+

|

| 97 |

+

|

| 98 |

+

## Multilingual datasets

|

| 99 |

+

|

| 100 |

+

### Multilingual LibriSpeech

|

| 101 |

+

|

| 102 |

+

We used the test splits from each language in [the Multilingual LibriSpeech (MLS) corpus](https://www.openslr.org/94/).

|

| 103 |

+

|

| 104 |

+

### Fleurs

|

| 105 |

+

|

| 106 |

+

We collected audio files and transcripts using the implementation available as [HuggingFace datasets](https://huggingface.co/datasets/google/fleurs/blob/main/fleurs.py). To use as a translation dataset, we matched the numerical utterance IDs to find the corresponding transcript in English.

|

| 107 |

+

|

| 108 |

+

### VoxPopuli

|

| 109 |

+

|

| 110 |

+

We used the `get_asr_data.py` script from [the official repository](https://github.com/facebookresearch/voxpopuli) to collect the ASR data in 14 languages.

|

| 111 |

+

|

| 112 |

+

### Common Voice 9

|

| 113 |

+

|

| 114 |

+

We downloaded the Common Voice Corpus 9 from [the official website](https://commonvoice.mozilla.org/en/datasets)

|

| 115 |

+

|

| 116 |

+

### CoVOST 2

|

| 117 |

+

|

| 118 |

+

We collected the `X into English` data collected using [the official repository](https://github.com/facebookresearch/covost).

|

whisper/data/meanwhile.json

ADDED

|

@@ -0,0 +1,322 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"1YOmY-Vjy-o": {

|

| 3 |

+

"begin": "1:04.0",

|

| 4 |

+

"end": "2:11.0",

|

| 5 |

+

"text": "FOLKS, IF YOU WATCH THE SHOW,\nYOU KNOW I SPEND A LOT OF TIME\nRIGHT OVER THERE, PATIENTLY AND\nASTUTELY SCRUTINIZING THE\nBOXWOOD AND MAHOGANY CHESS SET\nOF THE DAY'S BIGGEST STORIES,\nDEVELOPING THE CENTRAL\nHEADLINE-PAWNS, DEFTLY\nMANEUVERING AN OH-SO-TOPICAL\nKNIGHT TO F-6, FEIGNING A\nCLASSIC SICILIAN-NAJDORF\nVARIATION ON THE NEWS, ALL THE\nWHILE, SEEING EIGHT MOVES DEEP\nAND PATIENTLY MARSHALING THE\nLATEST PRESS RELEASES INTO A\nFISCHER SOZIN LIPNITZKY ATTACK\nTHAT CULMINATES IN THE ELEGANT,\nLETHAL, SLOW-PLAYED EN PASSANT\nCHECKMATE THAT IS MY NIGHTLY\nMONOLOGUE.\nBUT SOMETIMES, SOMETIMES,\nFOLKS-- I,\nSOMETIMES,\nI STARTLE AWAKE UPSIDE DOWN ON\nTHE MONKEY BARS OF A CONDEMNED\nPLAYGROUND ON A SUPERFUND SITE,\nGET ALL HEPPED UP ON GOOFBALLS,\nRUMMAGE THROUGH A DISCARDED TAG\nBAG OF DEFECTIVE TOYS, YANK\nOUT A FISTFUL OF DISEMBODIED\nDOLL LIMBS, TOSS THEM ON A\nSTAINED KID'S PLACEMAT FROM A\nDEFUNCT DENNY'S, SET UP A TABLE\nINSIDE A RUSTY CARGO CONTAINER\nDOWN BY THE WHARF, AND CHALLENGE\nTOOTHLESS DRIFTERS TO THE\nGODLESS BUGHOUSE BLITZ\nOF TOURNAMENT OF NEWS THAT IS MY\nSEGMENT:\nMEANWHILE!\n"

|

| 6 |

+

},

|

| 7 |

+

"3P_XnxdlXu0": {

|

| 8 |

+

"begin": "2:08.3",

|

| 9 |

+

"end": "3:02.3",

|

| 10 |

+

"text": "FOLKS, I SPEND A LOT OF TIME\nRIGHT OVER THERE, NIGHT AFTER NIGHT ACTUALLY, CAREFULLY\nSELECTING FOR YOU THE DAY'S NEWSIEST,\nMOST AERODYNAMIC HEADLINES,\nSTRESS TESTING THE MOST TOPICAL\nANTILOCK BRAKES AND POWER\nSTEERING, PAINSTAKINGLY\nSTITCHING LEATHER SEATING SO\nSOFT, IT WOULD MAKE J.D. POWER\nAND HER ASSOCIATES BLUSH, TO\nCREATE THE LUXURY SEDAN THAT IS\nMY NIGHTLY MONOLOGUE.\nBUT SOMETIMES, JUST SOMETIMES,\nFOLKS, I LURCH TO CONSCIOUSNESS\nIN THE BACK OF AN ABANDONED\nSCHOOL BUS AND SLAP MYSELF AWAKE\nWITH A CRUSTY FLOOR MAT BEFORE\nUSING A MOUSE-BITTEN TIMING BELT\nTO STRAP SOME OLD PLYWOOD TO A\nCOUPLE OF DISCARDED OIL DRUMS.\nTHEN, BY THE LIGHT OF A HEATHEN\nMOON, RENDER A GAS TANK OUT OF\nAN EMPTY BIG GULP, FILL IT WITH\nWHITE CLAW AND DENATURED\nALCOHOL, THEN LIGHT A MATCH AND\nLET HER RIP, IN THE DEMENTED\nONE-MAN SOAP BOX DERBY OF NEWS\nTHAT IS MY SEGMENT: MEANWHILE!"

|

| 11 |

+

},

|

| 12 |

+

"3elIlQzJEQ0": {

|

| 13 |

+

"begin": "1:08.5",

|

| 14 |

+

"end": "1:58.5",

|

| 15 |

+

"text": "LADIES AND GENTLEMEN, YOU KNOW, I SPEND A\nLOT OF TIME RIGHT OVER THERE,\nRAISING THE FINEST HOLSTEIN NEWS\nCATTLE, FIRMLY, YET TENDERLY,\nMILKING THE LATEST HEADLINES\nFROM THEIR JOKE-SWOLLEN TEATS,\nCHURNING THE DAILY STORIES INTO\nTHE DECADENT, PROVENCAL-STYLE\nTRIPLE CREME BRIE THAT IS MY\nNIGHTLY MONOLOGUE.\nBUT SOMETIMES, SOMETIMES, FOLKS,\nI STAGGER HOME HUNGRY AFTER\nBEING RELEASED BY THE POLICE,\nAND ROOT AROUND IN THE NEIGHBOR'S\nTRASH CAN FOR AN OLD MILK\nCARTON, SCRAPE OUT THE BLOOMING\nDAIRY RESIDUE ONTO THE REMAINS\nOF A WET CHEESE RIND I WON\nFROM A RAT IN A PRE-DAWN STREET\nFIGHT, PUT IT IN A DISCARDED\nPAINT CAN, AND LEAVE IT TO\nFERMENT NEXT TO A TRASH FIRE,\nTHEN HUNKER DOWN AND HALLUCINATE\nWHILE EATING THE LISTERIA-LADEN\nDEMON CUSTARD OF NEWS THAT IS\nMY SEGMENT: MEANWHILE!"

|

| 16 |

+

},

|

| 17 |

+

"43P4q1KGKEU": {

|

| 18 |

+

"begin": "0:29.3",

|

| 19 |

+

"end": "1:58.3",

|

| 20 |

+

"text": "FOLKS, IF YOU WATCH THE SHOW, YOU KNOW I SPEND MOST\nOF MY TIME, RIGHT OVER THERE.\nCAREFULLY SORTING THROUGH THE\nDAY'S BIGGEST STORIES, AND\nSELECTING ONLY THE MOST\nSUPPLE AND UNBLEMISHED OSTRICH\nAND CROCODILE NEWS LEATHER,\nWHICH I THEN ENTRUST TO ARTISAN\nGRADUATES OF THE \"ECOLE\nGREGOIRE-FERRANDI,\" WHO\nCAREFULLY DYE THEM IN A PALETTE\nOF BRIGHT, ZESTY SHADES, AND\nADORN THEM WITH THE FINEST, MOST\nTOPICAL INLAY WORK USING HAND\nTOOLS AND DOUBLE MAGNIFYING\nGLASSES, THEN ASSEMBLE THEM\nACCORDING TO NOW CLASSIC AND\nELEGANT GEOMETRY USING OUR\nSIGNATURE SADDLE STITCHING, AND\nLINE IT WITH BEESWAX-COATED\nLINEN, AND FINALLY ATTACH A\nMALLET-HAMMERED STRAP, PEARLED\nHARDWARE, AND A CLOCHETTE TO\nCREATE FOR YOU THE ONE-OF-A-KIND\nHAUTE COUTURE HERMES BIRKIN BAG\nTHAT IS MY MONOLOGUE.\nBUT SOMETIMES, SOMETIMES, FOLKS,\nSOMETIMES, SOMETIMES I WAKE UP IN THE\nLAST CAR OF AN ABANDONED ROLLER\nCOASTER AT CONEY ISLAND, WHERE\nI'M HIDING FROM THE TRIADS, I\nHUFF SOME ENGINE LUBRICANTS OUT\nOF A SAFEWAY BAG AND STAGGER\nDOWN THE SHORE TO TEAR THE SAIL\nOFF A BEACHED SCHOONER, THEN I\nRIP THE CO-AXIAL CABLE OUT OF\nTHE R.V. OF AN ELDERLY COUPLE\nFROM UTAH, HANK AND MABEL,\nLOVELY FOLKS, AND USE IT TO\nSTITCH THE SAIL INTO A LOOSE,\nPOUCH-LIKE RUCKSACK, THEN I\nSTOW AWAY IN THE BACK OF A\nGARBAGE TRUCK TO THE JUNK YARD\nWHERE I PICK THROUGH THE DEBRIS\nFOR ONLY THE BROKEN TOYS THAT\nMAKE ME THE SADDEST UNTIL I HAVE\nLOADED, FOR YOU, THE HOBO\nFUGITIVE'S BUG-OUT BINDLE OF\nNEWS THAT IS MY SEGMENT:\nMEANWHILE!"

|

| 21 |

+

},

|

| 22 |

+

"4ktyaJkLMfo": {

|

| 23 |

+

"begin": "0:42.5",

|

| 24 |

+

"end": "1:26.5",

|

| 25 |

+

"text": "YOU KNOW, FOLKS, I SPEND A LOT\nOF TIME CRAFTING FOR YOU A\nBESPOKE PLAYLIST OF THE DAY'S\nBIGGEST STORIES, RIGHT OVER THERE, METICULOUSLY\nSELECTING THE MOST TOPICAL\nCHAKRA-AFFIRMING SCENTED\nCANDLES, AND USING FENG SHUI TO\nPERFECTLY ALIGN THE JOKE ENERGY\nIN THE EXCLUSIVE BOUTIQUE YOGA\nRETREAT THAT IS MY MONOLOGUE.\nBUT SOMETIMES, JUST SOMETIMES,\nI GO TO THE DUMPSTER BEHIND THE\nWAFFLE HOUSE AT 3:00 IN THE\nMORNING, TAKE OFF MY SHIRT,\nCOVER MYSELF IN USED FRY OIL,\nWRAP MY HANDS IN SOME OLD DUCT\nTAPE I STOLE FROM A BROKEN CAR\nWINDOW, THEN POUND A SIX-PACK OF\nBLUEBERRY HARD SELTZER AND A\nSACK OF PILLS I STOLE FROM A\nPARKED AMBULANCE, THEN\nARM-WRESTLE A RACCOON IN THE\nBACK ALLEY VISION QUEST OF NEWS\nTHAT IS MY SEGMENT:\n\"MEANWHILE!\""

|

| 26 |

+

},

|

| 27 |

+

"5Dsh9AgqRG0": {

|

| 28 |

+

"begin": "1:06.0",

|

| 29 |

+

"end": "2:34.0",

|

| 30 |

+

"text": "YOU KNOW, FOLKS, I SPEND MOST OF\nMY TIME RIGHT OVER THERE, MINING\nTHE DAY'S BIGGEST, MOST\nIMPORTANT STORIES, COLLECTING\nTHE FINEST, MOST TOPICAL IRON\nORE, HAND HAMMERING IT INTO JOKE\nPANELS.\nTHEN I CRAFT SHEETS OF BRONZE\nEMBLAZONED WITH PATTERNS THAT\nTELL AN EPIC TALE OF CONQUEST\nAND GLORY.\nTHEN, USING THE GERMANIC\nTRADITIONAL PRESSBLECH\nPROCESS, I PLACE THIN SHEETS OF\nFOIL AGAINST THE SCENES, AND BY\nHAMMERING OR OTHERWISE,\nAPPLYING PRESSURE FROM THE BACK,\nI PROJECT THESE SCENES INTO A\nPAIR OF CHEEK GUARDS AND A\nFACEPLATE.\nAND, FINALLY, USING FLUTED\nSTRIPS OF WHITE ALLOYED\nMOULDING, I DIVIDE THE DESIGNS\nINTO FRAMED PANELS AND HOLD IT\nALL TOGETHER USING BRONZE RIVETS\nTO CREATE THE BEAUTIFUL AND\nINTIMIDATING ANGLO-SAXON\nBATTLE HELM THAT IS MY\nNIGHTLY MONOLOGUE.\nBUT SOMETIMES, SOMETIMES, FOLKS,\nSOMETIMES, JUST SOMETIMES, I COME TO MY SENSES FULLY NAKED\nON THE DECK OF A PIRATE-BESIEGED\nMELEE CONTAINER SHIP THAT PICKED\nME UP FLOATING ON THE DETACHED\nDOOR OF A PORT-A-POTTY IN THE\nINDIAN OCEAN.\nTHEN, AFTER A SUNSTROKE-INDUCED\nREALIZATION THAT THE CREW OF\nTHIS SHIP PLANS TO SELL ME IN\nEXCHANGE FOR A BAG OF ORANGES TO\nFIGHT OFF SCURVY, I LEAD A\nMUTINY USING ONLY A P.V.C. PIPE\nAND A POOL CHAIN.\nTHEN, ACCEPTING MY NEW ROLE AS\nCAPTAIN, AND DECLARING MYSELF\nKING OF THE WINE-DARK SEAS, I\nGRAB A DIRTY MOP BUCKET COVERED\nIN BARNACLES AND ADORN IT WITH\nTHE TEETH OF THE VANQUISHED, TO\nCREATE THE SOPPING WET PIRATE\nCROWN OF NEWS THAT IS MY\nSEGMENT:\n\"MEANWHILE!\" "

|

| 31 |

+

},

|

| 32 |

+

"748OyesQy84": {

|

| 33 |

+

"begin": "0:40.0",

|

| 34 |

+

"end": "1:41.0",

|

| 35 |

+

"text": "FOLKS, IF YOU WATCH THE SHOW, YOU KNOW, I SPEND MOST OF\nMY TIME, RIGHT OVER THERE,\nCAREFULLY BLENDING FOR YOU THE\nDAY'S NEWSIEST, MOST TOPICAL\nFLOUR, EGGS, MILK, AND BUTTER,\nAND STRAINING IT INTO A FINE\nBATTER TO MAKE DELICATE, YET\nINFORMATIVE COMEDY PANCAKES.\nTHEN I GLAZE THEM IN THE JUICE\nAND ZEST OF THE MOST RELEVANT\nMIDNIGHT VALENCIA ORANGES, AND\nDOUSE IT ALL IN A FINE DELAMAIN\nDE VOYAGE COGNAC, BEFORE\nFLAMBEING AND BASTING THEM TABLE\nSIDE, TO SERVE FOR YOU THE JAMES\nBEARD AWARD-WORTHY CREPES\nSUZETTE THAT IS MY NIGHTLY,\nMONOLOGUE.\nBUT SOMETIMES, JUST SOMETIMES FOLKS, I WAKE UP IN THE\nBAGGAGE HOLD OF A GREYHOUND BUS\nAS ITS BEING HOISTED BY THE\nSCRAPYARD CLAW TOWARD THE BURN\nPIT, ESCAPE TO A NEARBY\nABANDONED PRICE CHOPPER, WHERE I\nSCROUNGE FOR OLD BREAD SCRAPS,\nBUSTED OPEN BAGS OF STAR FRUIT\nCANDIES, AND EXPIRED EGGS,\nCHUCK IT ALL IN A DIRTY HUBCAP\nAND SLAP IT OVER A TIRE FIRE\nBEFORE USING THE LEGS OF A\nSTAINED PAIR OF SWEATPANTS AS\nOVEN MITTS TO EXTRACT AND SERVE\nTHE DEMENTED TRANSIENT'S POUND\nCAKE OF NEWS THAT IS MY SEGMENT:\n\"MEANWHILE.\""

|

| 36 |

+

},

|

| 37 |

+

"8prs9Pq5Xhk": {

|

| 38 |

+

"begin": "1:18.5",

|

| 39 |

+

"end": "2:17.5",

|

| 40 |

+

"text": "FOLKS, IF YOU WATCH THE SHOW,\nAND I HOPE YOU DO, I SPEND A\nLOT OF TIME RIGHT OVER THERE,\nTIRELESSLY STUDYING THE LINEAGE\nOF THE DAY'S MOST IMPORTANT\nTHOROUGHBRED STORIES AND\nHOLSTEINER HEADLINES, WORKING\nWITH THE BEST TRAINERS MONEY CAN\nBUY TO REAR THEIR COMEDY\nOFFSPRING WITH A HAND THAT IS\nSTERN, YET GENTLE, INTO THE\nTRIPLE-CROWN-WINNING EQUINE\nSPECIMEN THAT IS MY NIGHTLY\nMONOLOGUE.\nBUT SOMETIMES, SOMETIMES, FOLKS\nI BREAK INTO AN UNINCORPORATED\nVETERINARY GENETICS LAB AND GRAB\nWHATEVER TEST TUBES I CAN FIND.\nAND THEN, UNDER A GROW LIGHT I GOT\nFROM A DISCARDED CHIA PET, I MIX\nTHE PILFERED D.N.A. OF A HORSE\nAND WHATEVER WAS IN A TUBE\nLABELED \"KEITH-COLON-EXTRA,\"\nSLURRYING THE CONCOCTION WITH\nCAFFEINE PILLS AND A MICROWAVED\nRED BULL, I SCREAM-SING A PRAYER\nTO JANUS, INITIATOR OF HUMAN\nLIFE AND GOD OF TRANSFORMATION\nAS A HALF-HORSE, HALF-MAN FREAK,\nSEIZES TO LIFE BEFORE ME IN THE\nHIDEOUS COLLECTION OF LOOSE\nANIMAL PARTS AND CORRUPTED MAN\nTISSUE THAT IS MY SEGMENT:\nMEANWHILE!"

|

| 41 |

+

},

|

| 42 |

+

"9gX4kdFajqE": {

|

| 43 |

+

"begin": "0:44.0",

|

| 44 |

+

"end": "1:08.0",

|

| 45 |

+

"text": "FOLKS, IF YOU WATCH THE SHOW,\nYOU KNOW SOMETIMES I'M OVER\nTHERE DOING THE MONOLOGUE.\nAND THEN THERE'S A COMMERCIAL\nBREAK, AND THEN I'M SITTING\nHERE.\nAND I DO A REALLY LONG\nDESCRIPTION OF A DIFFERENT\nSEGMENT ON THE SHOW, A SEGMENT\nWE CALL... \"MEANWHILE!\""

|

| 46 |

+

},

|

| 47 |

+

"9ssGpE9oem8": {

|

| 48 |

+

"begin": "0:00.0",

|

| 49 |

+

"end": "0:58.0",

|

| 50 |

+

"text": "WELCOME BACK, EVERYBODY.\nYOU KNOW, FOLKS, I SPEND MOST OF\nMY TIME RIGHT OVER THERE,\nCOMBING OVER THE DAY'S NEWS,\nSELECTING ONLY THE HIGHEST\nQUALITY AND MOST TOPICAL BONDED\nCALFSKIN-LEATHER STORIES,\nCAREFULLY TANNING THEM AND CUTTING\nTHEM WITH MILLIMETER PRECISION,\nTHEN WEAVING IT TOGETHER IN\nA DOUBLE-FACED INTRECCIATO\nPATTERN TO CREATE FOR YOU THE\nEXQUISITE BOTTEGA VENETA CLUTCH\nTHAT IS MY MONOLOGUE.\nBUT SOMETIMES, WHILE AT A RAVE\nIN A CONDEMNED CEMENT FACTORY, I\nGET INJECTED WITH A MYSTERY\nCOCKTAIL OF HALLUCINOGENS AND\nPAINT SOLVENTS, THEN, OBEYING\nTHE VOICES WHO WILL STEAL MY\nTEETH IF I DON'T, I STUMBLE INTO\nA SHIPYARD WHERE I RIP THE\nCANVAS TARP FROM A GRAVEL TRUCK,\nAND TIE IT OFF WITH THE ROPE FROM A\nROTTING FISHING NET, THEN WANDER\nA FOOD COURT, FILLING IT WITH\nWHAT I THINK ARE GOLD COINS BUT\nARE, IN FACT, OTHER PEOPLE'S CAR\nKEYS, TO DRAG AROUND THE\nROOTLESS TRANSIENT'S CLUSTER\nSACK OF NEWS THAT IS MY SEGMENT:\n\"MEANWHILE!\""

|

| 51 |

+

},

|

| 52 |

+

"ARw4K9BRCAE": {

|

| 53 |

+

"begin": "0:26.0",

|

| 54 |

+

"end": "1:16.0",

|

| 55 |

+

"text": "YOU KNOW, FOLKS, I SPEND\nMOST OF MY TIME STANDING RIGHT OVER\nTHERE,\nGOING OVER THE DAY'S NEWS\nAND SELECTING THE FINEST,\nMOST\nTOPICAL CARBON FIBER\nSTORIES, SHAPING THEM IN DRY\nDOCK INTO A\nSLEEK AND SEXY HULL, KITTING\nIT OUT WITH THE MOST TOPICAL\nFIBERGLASS AND TEAK FITTINGS\nBRASS RAILINGS, HOT TUBS,\nAND\nNEWS HELIPADS, TO CREATE THE\nCUSTOM DESIGNED, GLEAMING\nMEGA-YACHT THAT IS MY NIGHTLY\nMONOLOGUE. BUT SOMETIMES, JUST SOMETIMES FOLKS, I\nWASH ASHORE AT\nAN ABANDONED BEACH RESORT\nAFTER A NIGHT OF BATH SALTS\nAND\nSCOPOLAMINE, LASH SOME\nROTTING PICNIC TABLES\nTOGETHER, THEN\nDREDGE THE NEWS POND TO\nHAUL UP WHATEVER DISCARDED\nTRICYCLES AND BROKEN\nFRISBEES I CAN FIND, STEAL\nAN EYE PATCH\nFROM A HOBO, STAPLE A DEAD\nPIGEON TO MY SHOULDER, AND\nSAIL\nINTO INTERNATIONAL WATERS\nON THE PIRATE GARBAGE SCOW\nOF\nNEWS THAT IS MY SEGMENT:\nMEANWHILE!"

|

| 56 |

+

},

|

| 57 |

+

"B1DRmrOlKtY": {

|

| 58 |

+

"begin": "1:34.0",

|

| 59 |

+