Upload 34 files

- __init__.py +0 -0

- app.py +398 -0

- assets/mplug_owl2_logo.png +0 -0

- assets/mplug_owl2_radar.png +0 -0

- examples/Rebecca_(1939_poster)_Small.jpeg +0 -0

- examples/extreme_ironing.jpg +0 -0

- model_worker.py +143 -0

- mplug_owl2/__init__.py +1 -0

- mplug_owl2/constants.py +9 -0

- mplug_owl2/conversation.py +301 -0

- mplug_owl2/mm_utils.py +103 -0

- mplug_owl2/model/__init__.py +2 -0

- mplug_owl2/model/builder.py +118 -0

- mplug_owl2/model/configuration_mplug_owl2.py +332 -0

- mplug_owl2/model/convert_mplug_owl2_weight_to_hf.py +395 -0

- mplug_owl2/model/modeling_attn_mask_utils.py +247 -0

- mplug_owl2/model/modeling_llama2.py +486 -0

- mplug_owl2/model/modeling_mplug_owl2.py +329 -0

- mplug_owl2/model/utils.py +20 -0

- mplug_owl2/model/visual_encoder.py +928 -0

- mplug_owl2/serve/__init__.py +0 -0

- mplug_owl2/serve/cli.py +120 -0

- mplug_owl2/serve/controller.py +298 -0

- mplug_owl2/serve/examples/Rebecca_(1939_poster)_Small.jpeg +0 -0

- mplug_owl2/serve/examples/extreme_ironing.jpg +0 -0

- mplug_owl2/serve/gradio_web_server.py +460 -0

- mplug_owl2/serve/model_worker.py +278 -0

- mplug_owl2/serve/register_workers.py +26 -0

- mplug_owl2/train/llama_flash_attn_monkey_patch.py +117 -0

- mplug_owl2/train/mplug_owl2_trainer.py +243 -0

- mplug_owl2/train/train.py +848 -0

- mplug_owl2/train/train_mem.py +13 -0

- mplug_owl2/utils.py +126 -0

- requirements.txt +17 -0

__init__.py

ADDED

|

File without changes

|

app.py

ADDED

|

@@ -0,0 +1,398 @@

+import argparse
+import datetime
+import json
+import os
+import time
+
+import gradio as gr
+import requests
+
+from mplug_owl2.conversation import (default_conversation, conv_templates,
+                                     SeparatorStyle)
+from mplug_owl2.constants import LOGDIR
+from mplug_owl2.utils import (build_logger, server_error_msg,
+                              violates_moderation, moderation_msg)
+from model_worker import ModelWorker
+import hashlib
+
+from modelscope.hub.snapshot_download import snapshot_download
+model_dir = snapshot_download('damo/mPLUG-Owl2', cache_dir='./')
+
+print(os.listdir('./'))
+
+
+logger = build_logger("gradio_web_server_local", "gradio_web_server_local.log")
+
+headers = {"User-Agent": "mPLUG-Owl2 Client"}
+
+no_change_btn = gr.Button.update()
+enable_btn = gr.Button.update(interactive=True)
+disable_btn = gr.Button.update(interactive=False)
+
+def get_conv_log_filename():
+    t = datetime.datetime.now()
+    name = os.path.join(LOGDIR, f"{t.year}-{t.month:02d}-{t.day:02d}-conv.json")
+    return name
+
+get_window_url_params = """
+function() {
+    const params = new URLSearchParams(window.location.search);
+    url_params = Object.fromEntries(params);
+    console.log(url_params);
+    return url_params;
+    }
+"""
+
+
+def load_demo(url_params, request: gr.Request):
+    logger.info(f"load_demo. ip: {request.client.host}. params: {url_params}")
+    state = default_conversation.copy()
+    return state
+
+
+def vote_last_response(state, vote_type, request: gr.Request):
+    with open(get_conv_log_filename(), "a") as fout:
+        data = {
+            "tstamp": round(time.time(), 4),
+            "type": vote_type,
+            "state": state.dict(),
+            "ip": request.client.host,
+        }
+        fout.write(json.dumps(data) + "\n")
+
+
+def upvote_last_response(state, request: gr.Request):
+    logger.info(f"upvote. ip: {request.client.host}")
+    vote_last_response(state, "upvote", request)
+    return ("",) + (disable_btn,) * 3
+
+
+def downvote_last_response(state, request: gr.Request):
+    logger.info(f"downvote. ip: {request.client.host}")
+    vote_last_response(state, "downvote", request)
+    return ("",) + (disable_btn,) * 3
+
+
+def flag_last_response(state, request: gr.Request):
+    logger.info(f"flag. ip: {request.client.host}")
+    vote_last_response(state, "flag", request)
+    return ("",) + (disable_btn,) * 3
+
+
+def regenerate(state, image_process_mode, request: gr.Request):
+    logger.info(f"regenerate. ip: {request.client.host}")
+    state.messages[-1][-1] = None
+    prev_human_msg = state.messages[-2]
+    if type(prev_human_msg[1]) in (tuple, list):
+        prev_human_msg[1] = (*prev_human_msg[1][:2], image_process_mode)
+    state.skip_next = False
+    return (state, state.to_gradio_chatbot(), "", None) + (disable_btn,) * 5
+
+
+def clear_history(request: gr.Request):
+    logger.info(f"clear_history. ip: {request.client.host}")
+    state = default_conversation.copy()
+    return (state, state.to_gradio_chatbot(), "", None) + (disable_btn,) * 5
+
+
+def add_text(state, text, image, image_process_mode, request: gr.Request):
+    logger.info(f"add_text. ip: {request.client.host}. len: {len(text)}")
+    if len(text) <= 0 and image is None:
+        state.skip_next = True
+        return (state, state.to_gradio_chatbot(), "", None) + (no_change_btn,) * 5
+    if args.moderate:
+        flagged = violates_moderation(text)
+        if flagged:
+            state.skip_next = True
+            return (state, state.to_gradio_chatbot(), moderation_msg, None) + (
+                no_change_btn,) * 5
+
+    text = text[:3584]  # Hard cut-off
+    if image is not None:
+        text = text[:3500]  # Hard cut-off for images
+        if '<|image|>' not in text:
+            text = '<|image|>' + text
+        text = (text, image, image_process_mode)
+        if len(state.get_images(return_pil=True)) > 0:
+            state = default_conversation.copy()
+    state.append_message(state.roles[0], text)
+    state.append_message(state.roles[1], None)
+    state.skip_next = False
+    return (state, state.to_gradio_chatbot(), "", None) + (disable_btn,) * 5
+
+
+def http_bot(state, temperature, top_p, max_new_tokens, request: gr.Request):
+    logger.info(f"http_bot. ip: {request.client.host}")
+    start_tstamp = time.time()
+
+    if state.skip_next:
+        # This generate call is skipped due to invalid inputs
+        yield (state, state.to_gradio_chatbot()) + (no_change_btn,) * 5
+        return
+
+    if len(state.messages) == state.offset + 2:
+        # First round of conversation
+        template_name = "mplug_owl2"
+        new_state = conv_templates[template_name].copy()
+        new_state.append_message(new_state.roles[0], state.messages[-2][1])
+        new_state.append_message(new_state.roles[1], None)
+        state = new_state
+
+    # Construct prompt
+    prompt = state.get_prompt()
+
+    all_images = state.get_images(return_pil=True)
+    all_image_hash = [hashlib.md5(image.tobytes()).hexdigest() for image in all_images]
+    for image, hash in zip(all_images, all_image_hash):
+        t = datetime.datetime.now()
+        filename = os.path.join(LOGDIR, "serve_images", f"{t.year}-{t.month:02d}-{t.day:02d}", f"{hash}.jpg")
+        if not os.path.isfile(filename):
+            os.makedirs(os.path.dirname(filename), exist_ok=True)
+            image.save(filename)
+
+    # Make requests
+    pload = {
+        "prompt": prompt,
+        "temperature": float(temperature),
+        "top_p": float(top_p),
+        "max_new_tokens": min(int(max_new_tokens), 2048),
+        "stop": state.sep if state.sep_style in [SeparatorStyle.SINGLE, SeparatorStyle.MPT] else state.sep2,
+        "images": f'List of {len(state.get_images())} images: {all_image_hash}',
+    }
+    logger.info(f"==== request ====\n{pload}")
+
+    pload['images'] = state.get_images()
+
+    state.messages[-1][-1] = "▌"
+    yield (state, state.to_gradio_chatbot()) + (disable_btn,) * 5
+
+    try:
+        # Stream output
+        # response = requests.post(worker_addr + "/worker_generate_stream",
+        #     headers=headers, json=pload, stream=True, timeout=10)
+        # for chunk in response.iter_lines(decode_unicode=False, delimiter=b"\0"):
+        response = model.generate_stream_gate(pload)
+        for chunk in response:
+            if chunk:
+                data = json.loads(chunk.decode())
+                if data["error_code"] == 0:
+                    output = data["text"][len(prompt):].strip()
+                    state.messages[-1][-1] = output + "▌"
+                    yield (state, state.to_gradio_chatbot()) + (disable_btn,) * 5
+                else:
+                    output = data["text"] + f" (error_code: {data['error_code']})"
+                    state.messages[-1][-1] = output
+                    yield (state, state.to_gradio_chatbot()) + (disable_btn, disable_btn, disable_btn, enable_btn, enable_btn)
+                    return
+                time.sleep(0.03)
+    except requests.exceptions.RequestException as e:
+        state.messages[-1][-1] = server_error_msg
+        yield (state, state.to_gradio_chatbot()) + (disable_btn, disable_btn, disable_btn, enable_btn, enable_btn)
+        return
+
+    state.messages[-1][-1] = state.messages[-1][-1][:-1]
+    yield (state, state.to_gradio_chatbot()) + (enable_btn,) * 5
+
+    finish_tstamp = time.time()
+    logger.info(f"{output}")
+
+    with open(get_conv_log_filename(), "a") as fout:
+        data = {
+            "tstamp": round(finish_tstamp, 4),
+            "type": "chat",
+            "start": round(start_tstamp, 4),
+            # Record the finish time, not the start time a second time
+            "finish": round(finish_tstamp, 4),
+            "state": state.dict(),
+            "images": all_image_hash,
+            "ip": request.client.host,
+        }
+        fout.write(json.dumps(data) + "\n")
+
+
+title_markdown = ("""
+<h1 align="center"><a href="https://github.com/X-PLUG/mPLUG-Owl"><img src="https://z1.ax1x.com/2023/11/03/piM1rGQ.md.png" alt="mPLUG-Owl" border="0" style="margin: 0 auto; height: 200px;" /></a> </h1>
+
+<h2 align="center"> mPLUG-Owl2: Revolutionizing Multi-modal Large Language Model with Modality Collaboration</h2>
+
+<h5 align="center"> If you like our project, please give us a star ✨ on GitHub for the latest updates. </h5>
+
+<div align="center">
+    <div style="display:flex; gap: 0.25rem;" align="center">
+        <a href='https://github.com/X-PLUG/mPLUG-Owl'><img src='https://img.shields.io/badge/Github-Code-blue'></a>
+        <a href="https://arxiv.org/abs/2304.14178"><img src="https://img.shields.io/badge/Arxiv-2304.14178-red"></a>
+        <a href='https://github.com/X-PLUG/mPLUG-Owl/stargazers'><img src='https://img.shields.io/github/stars/X-PLUG/mPLUG-Owl.svg?style=social'></a>
+    </div>
+</div>
+
+""")
+
+
+tos_markdown = ("""
+### Terms of use
+By using this service, users are required to agree to the following terms:
+The service is a research preview intended for non-commercial use only. It only provides limited safety measures and may generate offensive content. It must not be used for any illegal, harmful, violent, racist, or sexual purposes. The service may collect user dialogue data for future research.
+Please click the "Flag" button if you get any inappropriate answer! We will collect those to keep improving our moderator.
+For an optimal experience, please use desktop computers for this demo, as mobile devices may compromise its quality.
+""")
+
+
+learn_more_markdown = ("""
+### License
+The service is a research preview intended for non-commercial use only, subject to the model [License](https://github.com/facebookresearch/llama/blob/main/MODEL_CARD.md) of LLaMA, [Terms of Use](https://openai.com/policies/terms-of-use) of the data generated by OpenAI, and [Privacy Practices](https://chrome.google.com/webstore/detail/sharegpt-share-your-chatg/daiacboceoaocpibfodeljbdfacokfjb) of ShareGPT. Please contact us if you find any potential violation.
+""")
+
+block_css = """
+
+#buttons button {
+    min-width: min(120px,100%);
+}
+
+"""
+
+def build_demo(embed_mode):
+    textbox = gr.Textbox(show_label=False, placeholder="Enter text and press ENTER", container=False)
+    with gr.Blocks(title="mPLUG-Owl2", theme=gr.themes.Default(), css=block_css) as demo:
+        state = gr.State()
+
+        if not embed_mode:
+            gr.Markdown(title_markdown)
+
+        with gr.Row():
+            with gr.Column(scale=3):
+                imagebox = gr.Image(type="pil")
+                image_process_mode = gr.Radio(
+                    ["Crop", "Resize", "Pad", "Default"],
+                    value="Default",
+                    label="Preprocess for non-square image", visible=False)
+
+                cur_dir = os.path.dirname(os.path.abspath(__file__))
+                gr.Examples(examples=[
+                    [f"{cur_dir}/examples/extreme_ironing.jpg", "What is unusual about this image?"],
+                    [f"{cur_dir}/examples/Rebecca_(1939_poster)_Small.jpeg", "What is the name of the movie in the poster?"],
+                ], inputs=[imagebox, textbox])
+
+                with gr.Accordion("Parameters", open=True) as parameter_row:
+                    temperature = gr.Slider(minimum=0.0, maximum=1.0, value=0.2, step=0.1, interactive=True, label="Temperature",)
+                    top_p = gr.Slider(minimum=0.0, maximum=1.0, value=0.7, step=0.1, interactive=True, label="Top P",)
+                    max_output_tokens = gr.Slider(minimum=0, maximum=1024, value=512, step=64, interactive=True, label="Max output tokens",)
+
+            with gr.Column(scale=8):
+                chatbot = gr.Chatbot(elem_id="Chatbot", label="mPLUG-Owl2 Chatbot", height=600)
+                with gr.Row():
+                    with gr.Column(scale=8):
+                        textbox.render()
+                    with gr.Column(scale=1, min_width=50):
+                        submit_btn = gr.Button(value="Send", variant="primary")
+                with gr.Row(elem_id="buttons") as button_row:
+                    upvote_btn = gr.Button(value="👍 Upvote", interactive=False)
+                    downvote_btn = gr.Button(value="👎 Downvote", interactive=False)
+                    flag_btn = gr.Button(value="⚠️ Flag", interactive=False)
+                    #stop_btn = gr.Button(value="⏹️ Stop Generation", interactive=False)
+                    regenerate_btn = gr.Button(value="🔄 Regenerate", interactive=False)
+                    clear_btn = gr.Button(value="🗑️ Clear", interactive=False)
+
+        if not embed_mode:
+            gr.Markdown(tos_markdown)
+            gr.Markdown(learn_more_markdown)
+        url_params = gr.JSON(visible=False)
+
+        # Register listeners
+        btn_list = [upvote_btn, downvote_btn, flag_btn, regenerate_btn, clear_btn]
+        upvote_btn.click(
+            upvote_last_response,
+            state,
+            [textbox, upvote_btn, downvote_btn, flag_btn],
+            queue=False
+        )
+        downvote_btn.click(
+            downvote_last_response,
+            state,
+            [textbox, upvote_btn, downvote_btn, flag_btn],
+            queue=False
+        )
+        flag_btn.click(
+            flag_last_response,
+            state,
+            [textbox, upvote_btn, downvote_btn, flag_btn],
+            queue=False
+        )
+
+        regenerate_btn.click(
+            regenerate,
+            [state, image_process_mode],
+            [state, chatbot, textbox, imagebox] + btn_list,
+            queue=False
+        ).then(
+            http_bot,
+            [state, temperature, top_p, max_output_tokens],
+            [state, chatbot] + btn_list
+        )
+
+        clear_btn.click(
+            clear_history,
+            None,
+            [state, chatbot, textbox, imagebox] + btn_list,
+            queue=False
+        )
+
+        textbox.submit(
+            add_text,
+            [state, textbox, imagebox, image_process_mode],
+            [state, chatbot, textbox, imagebox] + btn_list,
+            queue=False
+        ).then(
+            http_bot,
+            [state, temperature, top_p, max_output_tokens],
+            [state, chatbot] + btn_list
+        )
+
+        submit_btn.click(
+            add_text,
+            [state, textbox, imagebox, image_process_mode],
+            [state, chatbot, textbox, imagebox] + btn_list,
+            queue=False
+        ).then(
+            http_bot,
+            [state, temperature, top_p, max_output_tokens],
+            [state, chatbot] + btn_list
+        )
+
+        demo.load(
+            load_demo,
+            [url_params],
+            state,
+            _js=get_window_url_params,
+            queue=False
+        )
+
+    return demo
+
+
+if __name__ == "__main__":
+    parser = argparse.ArgumentParser()
+    parser.add_argument("--host", type=str, default="0.0.0.0")
+    parser.add_argument("--port", type=int)
+    parser.add_argument("--concurrency-count", type=int, default=10)
+    parser.add_argument("--model-list-mode", type=str, default="once",
+                        choices=["once", "reload"])
+    parser.add_argument("--model-path", type=str, default="./mPLUG-Owl2")
+    parser.add_argument("--device", type=str, default="cuda")
+    parser.add_argument("--load-8bit", action="store_true")
+    parser.add_argument("--load-4bit", action="store_true")
+    parser.add_argument("--moderate", action="store_true")
+    parser.add_argument("--embed", action="store_true")
+    args = parser.parse_args()
+    logger.info(f"args: {args}")
+
+    model = ModelWorker(args.model_path, None, None, args.load_8bit, args.load_4bit, args.device)
+
+    logger.info(args)
+    demo = build_demo(args.embed)
+    demo.queue(
+        concurrency_count=args.concurrency_count,
+        api_open=False
+    ).launch(
+        server_name=args.host,
+        server_port=args.port,
+        share=False
+    )

|
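The streaming loop in `http_bot` reads chunks whose `text` field holds the prompt plus everything generated so far, and strips the prompt prefix before display. The sketch below illustrates that consumption pattern in isolation. It is not the Space's code: `fake_stream` and `consume` are hypothetical names, and the fake generator only stands in for `ModelWorker.generate_stream_gate`, assuming the same JSON fields (`text`, `error_code`) that appear in the diff above.

```python
import json
from typing import Iterable, Iterator, List


def fake_stream(prompt: str, pieces: List[str]) -> Iterator[bytes]:
    """Hypothetical stand-in for the worker: yields cumulative text as JSON bytes."""
    text = prompt
    for piece in pieces:
        text += piece
        yield json.dumps({"text": text, "error_code": 0}).encode()


def consume(prompt: str, chunks: Iterable[bytes]) -> Iterator[str]:
    """Yield the text to display after each chunk, with the prompt prefix removed."""
    for chunk in chunks:
        if not chunk:
            continue  # skip empty keep-alive chunks
        data = json.loads(chunk.decode())
        if data["error_code"] != 0:
            # Surface the error text once and stop reading the stream.
            yield f"{data['text']} (error_code: {data['error_code']})"
            return
        yield data["text"][len(prompt):].strip()


if __name__ == "__main__":
    prompt = "USER: hi ASSISTANT:"
    for shown in consume(prompt, fake_stream(prompt, [" Hel", "lo"])):
        print(shown)
```

Because each chunk carries the full text rather than a delta, a consumer can drop chunks without corrupting the display; the cost is that every chunk repeats the prompt.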

assets/mplug_owl2_logo.png

ADDED

|

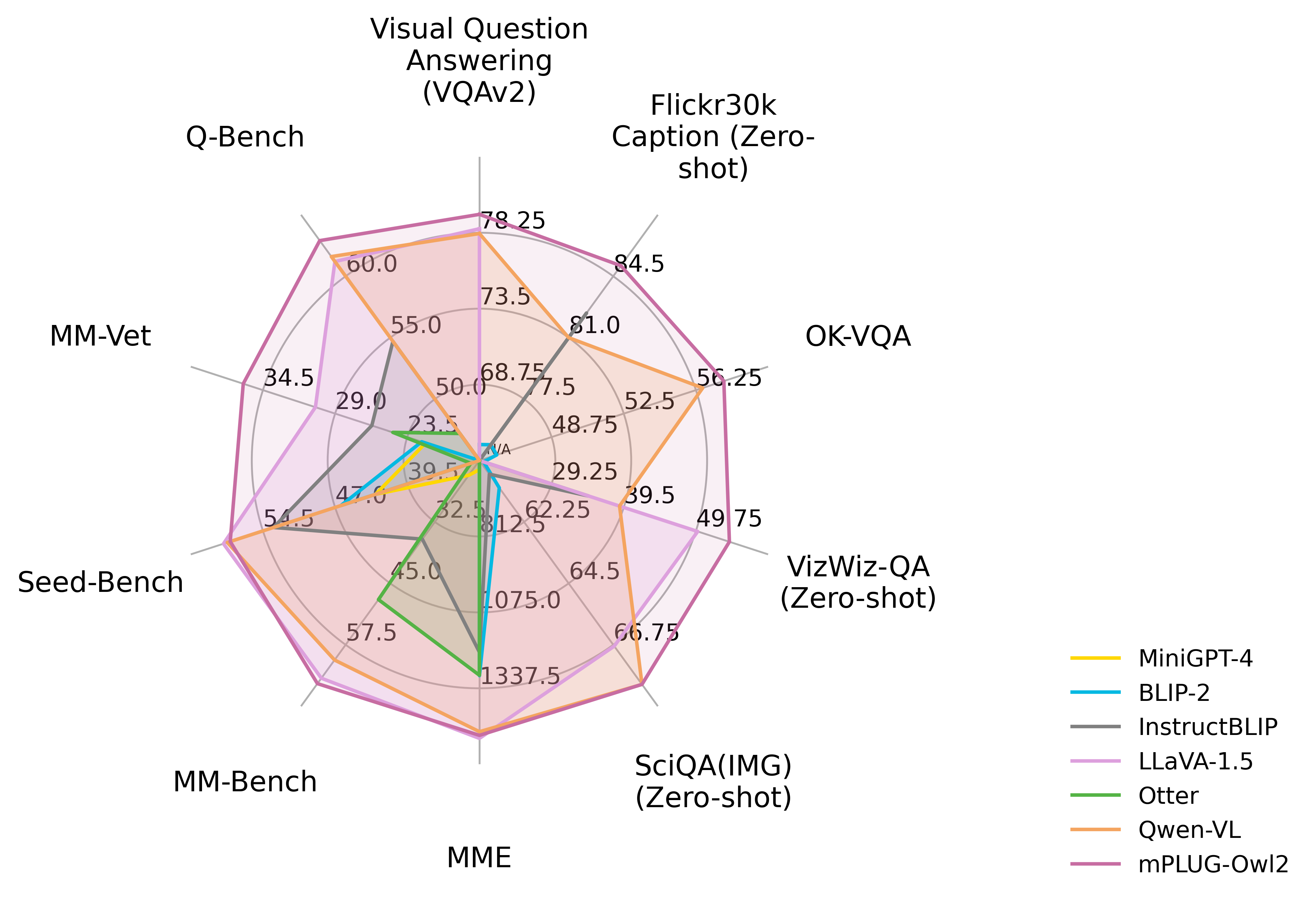

assets/mplug_owl2_radar.png

ADDED

|

examples/Rebecca_(1939_poster)_Small.jpeg

ADDED

|

examples/extreme_ironing.jpg

ADDED

|

model_worker.py

ADDED

|

@@ -0,0 +1,143 @@

+"""
+A model worker executes the model.
+"""
+import argparse
+import asyncio
+import json
+import time
+import threading
+import uuid
+
+import requests
+import torch
+from functools import partial
+
+from mplug_owl2.constants import WORKER_HEART_BEAT_INTERVAL
+from mplug_owl2.utils import (build_logger, server_error_msg,
+                              pretty_print_semaphore)
+from mplug_owl2.model.builder import load_pretrained_model
+from mplug_owl2.mm_utils import process_images, load_image_from_base64, tokenizer_image_token, KeywordsStoppingCriteria
+from mplug_owl2.constants import IMAGE_TOKEN_INDEX, DEFAULT_IMAGE_TOKEN
+from transformers import TextIteratorStreamer
+from threading import Thread
+
+GB = 1 << 30
+
+worker_id = str(uuid.uuid4())[:6]
+logger = build_logger("model_worker", f"model_worker_{worker_id}.log")
+
+class ModelWorker:
+    def __init__(self, model_path, model_base, model_name, load_8bit, load_4bit, device):
+        self.worker_id = worker_id
+        if model_path.endswith("/"):
+            model_path = model_path[:-1]
+        if model_name is None:
+            model_paths = model_path.split("/")
+            if model_paths[-1].startswith('checkpoint-'):
+                self.model_name = model_paths[-2] + "_" + model_paths[-1]
+            else:
+                self.model_name = model_paths[-1]
+        else:
+            self.model_name = model_name
+
+        self.device = device
+        logger.info(f"Loading the model {self.model_name} on worker {worker_id} ...")
+        self.tokenizer, self.model, self.image_processor, self.context_len = load_pretrained_model(
+            model_path, model_base, self.model_name, load_8bit, load_4bit, device=self.device)
+        self.is_multimodal = True
+
+    @torch.inference_mode()
+    def generate_stream(self, params):
+        tokenizer, model, image_processor = self.tokenizer, self.model, self.image_processor
+
+        prompt = params["prompt"]
+        ori_prompt = prompt
+        images = params.get("images", None)
+        num_image_tokens = 0
+        if images is not None and len(images) > 0 and self.is_multimodal:
+            if len(images) > 0:
+                if len(images) != prompt.count(DEFAULT_IMAGE_TOKEN):
+                    raise ValueError("Number of images does not match number of <|image|> tokens in prompt")
+
+                images = [load_image_from_base64(image) for image in images]
+                images = process_images(images, image_processor, model.config)
+
+                if type(images) is list:
+                    images = [image.to(self.model.device, dtype=torch.float16) for image in images]
+                else:
+                    images = images.to(self.model.device, dtype=torch.float16)
+
+                replace_token = DEFAULT_IMAGE_TOKEN
+                prompt = prompt.replace(DEFAULT_IMAGE_TOKEN, replace_token)
+
+                num_image_tokens = prompt.count(replace_token) * (model.get_model().visual_abstractor.config.num_learnable_queries + 1)
+            else:
+                images = None
+            image_args = {"images": images}
+        else:
+            images = None
+            image_args = {}
+
+        temperature = float(params.get("temperature", 1.0))
+        top_p = float(params.get("top_p", 1.0))
+        max_context_length = getattr(model.config, 'max_position_embeddings', 4096)
+        max_new_tokens = min(int(params.get("max_new_tokens", 256)), 1024)
+        stop_str = params.get("stop", None)
+        do_sample = temperature > 0.001
+
+        input_ids = tokenizer_image_token(prompt, tokenizer, IMAGE_TOKEN_INDEX, return_tensors='pt').unsqueeze(0).to(self.device)
+        keywords = [stop_str]
+        stopping_criteria = KeywordsStoppingCriteria(keywords, tokenizer, input_ids)
+        streamer = TextIteratorStreamer(tokenizer, skip_prompt=True, skip_special_tokens=True, timeout=15)
+
+        max_new_tokens = min(max_new_tokens, max_context_length - input_ids.shape[-1] - num_image_tokens)
+
+        if max_new_tokens < 1:
+            yield json.dumps({"text": ori_prompt + "Exceeds max token length. Please start a new conversation, thanks.", "error_code": 0}).encode() + b"\0"
+            return
+
+        thread = Thread(target=model.generate, kwargs=dict(
+            inputs=input_ids,
+            do_sample=do_sample,
+            temperature=temperature,
+            top_p=top_p,
+            max_new_tokens=max_new_tokens,
+            streamer=streamer,
+            stopping_criteria=[stopping_criteria],
+            use_cache=True,
+            **image_args
+        ))
+        thread.start()
+
+        generated_text = ori_prompt
+        for new_text in streamer:
+            generated_text += new_text
+            if generated_text.endswith(stop_str):
+                generated_text = generated_text[:-len(stop_str)]
+            yield json.dumps({"text": generated_text, "error_code": 0}).encode()
+
+    def generate_stream_gate(self, params):
+        try:
+            for x in self.generate_stream(params):
+                yield x
+        except ValueError as e:
+            print("Caught ValueError:", e)
+            ret = {
+                "text": server_error_msg,
+                "error_code": 1,
+            }
+            yield json.dumps(ret).encode()
+        except torch.cuda.CudaError as e:
+            print("Caught torch.cuda.CudaError:", e)
+            ret = {
+                "text": server_error_msg,
+                "error_code": 1,
+            }
+            yield json.dumps(ret).encode()
+        except Exception as e:
+            print("Caught Unknown Error", e)
+            ret = {
+                "text": server_error_msg,
+                "error_code": 1,
+            }
+            yield json.dumps(ret).encode()

|
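
Review note: every chunk yielded by `generate_stream_gate` above is a UTF-8 encoded JSON object with a `text` field (the original prompt plus everything generated so far) and an `error_code` field (0 on success, 1 on failure). The sketch below shows one way a caller might consume such a stream. It is a minimal illustration, not the code used by `app.py`: `fake_worker_stream` and `read_reply` are hypothetical names, and the stand-in generator only imitates the chunk shape rather than calling the model.

```python
import json
from typing import Iterable, Iterator


def fake_worker_stream(prompt: str, pieces: Iterable[str]) -> Iterator[bytes]:
    """Hypothetical stand-in for generate_stream_gate: yields cumulative text."""
    text = prompt
    for piece in pieces:
        text += piece
        yield json.dumps({"text": text, "error_code": 0}).encode()


def read_reply(chunks: Iterable[bytes], prompt: str) -> str:
    """Return the final reply with the echoed prompt removed."""
    reply = ""
    for chunk in chunks:
        # Tolerate a trailing NUL delimiter in case a producer appends one.
        data = json.loads(chunk.rstrip(b"\0").decode())
        if data["error_code"] != 0:
            raise RuntimeError(data["text"])
        # Each chunk carries the whole text so far, so keep only the latest.
        reply = data["text"][len(prompt):]
    return reply


prompt = "USER: <|image|>What is shown? ASSISTANT:"
print(read_reply(fake_worker_stream(prompt, ["A man ", "ironing."]), prompt))
# prints: A man ironing.
```

Because the text is cumulative, a UI can simply overwrite the displayed reply on every chunk instead of appending to it.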

mplug_owl2/__init__.py

ADDED

|

@@ -0,0 +1 @@

|

| 1 |

+

from .model import MPLUGOwl2LlamaForCausalLM

|

mplug_owl2/constants.py

ADDED

|

@@ -0,0 +1,9 @@

|

| 1 |

+

CONTROLLER_HEART_BEAT_EXPIRATION = 30

|

| 2 |

+

WORKER_HEART_BEAT_INTERVAL = 15

|

| 3 |

+

|

| 4 |

+

LOGDIR = "./demo_logs"

|

| 5 |

+

|

| 6 |

+

# Model Constants

|

| 7 |

+

IGNORE_INDEX = -100

|

| 8 |

+

IMAGE_TOKEN_INDEX = -200

|

| 9 |

+

DEFAULT_IMAGE_TOKEN = "<|image|>"

|
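
Review note: `IMAGE_TOKEN_INDEX` is negative so that it cannot collide with a real vocabulary id, and `IGNORE_INDEX = -100` matches the default `ignore_index` of PyTorch's cross-entropy loss. The sketch below illustrates how a sentinel id can be spliced in wherever `DEFAULT_IMAGE_TOKEN` appears in a prompt. It is an assumption about the general technique, not a copy of `tokenizer_image_token` in `mm_utils.py` (which is not shown here and may treat special tokens such as BOS differently); `splice_image_tokens` and `toy_encode` are hypothetical names.

```python
from typing import Callable, List

IMAGE_TOKEN_INDEX = -200           # value from constants.py
DEFAULT_IMAGE_TOKEN = "<|image|>"  # value from constants.py


def splice_image_tokens(prompt: str, encode: Callable[[str], List[int]]) -> List[int]:
    """Encode the text around each image placeholder and insert the sentinel id."""
    ids: List[int] = []
    for i, segment in enumerate(prompt.split(DEFAULT_IMAGE_TOKEN)):
        if i > 0:
            # One sentinel per placeholder; the model later swaps it for image features.
            ids.append(IMAGE_TOKEN_INDEX)
        ids.extend(encode(segment))
    return ids


def toy_encode(text: str) -> List[int]:
    """Hypothetical tokenizer: one non-negative id per character."""
    return [ord(ch) for ch in text]


ids = splice_image_tokens("Hi <|image|>!", toy_encode)
print(ids)  # [72, 105, 32, -200, 33]
```

Passing the encoder as a callable keeps the sketch independent of any particular tokenizer library.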

mplug_owl2/conversation.py

ADDED

|

@@ -0,0 +1,301 @@

|

| 1 |

+

import dataclasses

|

| 2 |

+

from enum import auto, Enum

|

| 3 |

+

from typing import List, Tuple

|

| 4 |

+

from mplug_owl2.constants import DEFAULT_IMAGE_TOKEN

|

| 5 |

+

|

| 6 |

+

class SeparatorStyle(Enum):

|

| 7 |

+

"""Different separator style."""

|

| 8 |

+

SINGLE = auto()

|

| 9 |

+

TWO = auto()

|

| 10 |

+

TWO_NO_SYS = auto()

|

| 11 |

+

MPT = auto()

|

| 12 |

+

PLAIN = auto()

|

| 13 |

+

LLAMA_2 = auto()

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

@dataclasses.dataclass

|

| 17 |

+

class Conversation:

|

| 18 |

+

"""A class that keeps all conversation history."""

|

| 19 |

+

system: str

|

| 20 |

+

roles: List[str]

|

| 21 |

+

messages: List[List[str]]

|

| 22 |

+

offset: int

|

| 23 |

+

sep_style: SeparatorStyle = SeparatorStyle.SINGLE

|

| 24 |

+

sep: str = "###"

|

| 25 |

+

sep2: str = None

|

| 26 |

+

version: str = "Unknown"

|

| 27 |

+

|

| 28 |

+

skip_next: bool = False

|

| 29 |

+

|

| 30 |

+

def get_prompt(self):

|

| 31 |

+

messages = self.messages

|

| 32 |

+

if len(messages) > 0 and type(messages[0][1]) is tuple:

|

| 33 |

+

messages = self.messages.copy()

|

| 34 |

+

init_role, init_msg = messages[0].copy()

|

| 35 |

+

# init_msg = init_msg[0].replace("<image>", "").strip()

|

| 36 |

+

# if 'mmtag' in self.version:

|

| 37 |

+

# messages[0] = (init_role, init_msg)

|

| 38 |

+

# messages.insert(0, (self.roles[0], "<Image><image></Image>"))

|

| 39 |

+

# messages.insert(1, (self.roles[1], "Received."))

|

| 40 |

+

# else:

|

| 41 |

+

# messages[0] = (init_role, "<image>\n" + init_msg)

|

| 42 |

+

init_msg = init_msg[0].replace(DEFAULT_IMAGE_TOKEN, "").strip()

|

| 43 |

+

messages[0] = (init_role, DEFAULT_IMAGE_TOKEN + init_msg)

|

| 44 |

+

|

| 45 |

+

if self.sep_style == SeparatorStyle.SINGLE:

|

| 46 |

+

ret = self.system + self.sep

|

| 47 |

+

for role, message in messages:

|

| 48 |

+

if message:

|

| 49 |

+

if type(message) is tuple:

|

| 50 |

+

message, _, _ = message

|

| 51 |

+

ret += role + ": " + message + self.sep

|

| 52 |

+

else:

|

| 53 |

+

ret += role + ":"

|

| 54 |

+

elif self.sep_style == SeparatorStyle.TWO:

|

| 55 |

+

seps = [self.sep, self.sep2]

|

| 56 |

+

ret = self.system + seps[0]

|

| 57 |

+

for i, (role, message) in enumerate(messages):

|

| 58 |

+

if message:

|

| 59 |

+

if type(message) is tuple:

|

| 60 |

+

message, _, _ = message

|

| 61 |

+

ret += role + ": " + message + seps[i % 2]

|

| 62 |

+

else:

|

| 63 |

+

ret += role + ":"

|

| 64 |

+

elif self.sep_style == SeparatorStyle.TWO_NO_SYS:

|

| 65 |

+

seps = [self.sep, self.sep2]

|

| 66 |

+

ret = ""

|

| 67 |

+

for i, (role, message) in enumerate(messages):

|

| 68 |

+

if message:

|

| 69 |

+

if type(message) is tuple:

|

| 70 |

+

message, _, _ = message

|

| 71 |

+

ret += role + ": " + message + seps[i % 2]

|

| 72 |

+

else:

|

| 73 |

+

ret += role + ":"

|

| 74 |

+

elif self.sep_style == SeparatorStyle.MPT:

|

| 75 |

+

ret = self.system + self.sep

|

| 76 |

+

for role, message in messages:

|

| 77 |

+

if message:

|

| 78 |

+

if type(message) is tuple:

|

| 79 |

+

message, _, _ = message

|

| 80 |

+

ret += role + message + self.sep

|

| 81 |

+

else:

|

| 82 |

+

ret += role

|

| 83 |

+

elif self.sep_style == SeparatorStyle.LLAMA_2:

|

| 84 |

+

wrap_sys = lambda msg: f"<<SYS>>\n{msg}\n<</SYS>>\n\n"

|

| 85 |

+

wrap_inst = lambda msg: f"[INST] {msg} [/INST]"

|

| 86 |

+

ret = ""

|

| 87 |

+

|

| 88 |

+

for i, (role, message) in enumerate(messages):

|

| 89 |

+

if i == 0:

|

| 90 |

+

assert message, "first message should not be none"

|

| 91 |

+

assert role == self.roles[0], "first message should come from user"

|

| 92 |

+

if message:

|

| 93 |

+

if type(message) is tuple:

|

| 94 |

+

message, _, _ = message

|

| 95 |

+

if i == 0: message = wrap_sys(self.system) + message

|

| 96 |

+

if i % 2 == 0:

|

| 97 |

+

message = wrap_inst(message)

|

| 98 |

+

ret += self.sep + message

|

| 99 |

+

else:

|

| 100 |

+

ret += " " + message + " " + self.sep2

|

| 101 |

+

else:

|

| 102 |

+

ret += ""

|

| 103 |

+

ret = ret.lstrip(self.sep)

|

| 104 |

+

elif self.sep_style == SeparatorStyle.PLAIN:

|

| 105 |

+

seps = [self.sep, self.sep2]

|

| 106 |

+

ret = self.system

|

| 107 |

+

for i, (role, message) in enumerate(messages):

|

| 108 |

+

if message:

|

| 109 |

+

if type(message) is tuple:

|

| 110 |

+

message, _, _ = message

|

| 111 |

+

ret += message + seps[i % 2]

|

| 112 |

+

else:

|

| 113 |

+

ret += ""

|

| 114 |

+

else:

|

| 115 |

+

raise ValueError(f"Invalid style: {self.sep_style}")

|

| 116 |

+

|

| 117 |

+

return ret

|

| 118 |

+

|

| 119 |

+

def append_message(self, role, message):

|

| 120 |

+

self.messages.append([role, message])

|

| 121 |

+

|

| 122 |

+

def get_images(self, return_pil=False):

|

| 123 |

+

images = []

|

| 124 |

+

for i, (role, msg) in enumerate(self.messages[self.offset:]):

|

| 125 |

+

if i % 2 == 0:

|

| 126 |

+

if type(msg) is tuple:

|

| 127 |

+

import base64

|

| 128 |

+

from io import BytesIO

|

| 129 |

+

from PIL import Image

|

| 130 |

+

msg, image, image_process_mode = msg

|

| 131 |

+

if image_process_mode == "Pad":

|

| 132 |

+

def expand2square(pil_img, background_color=(122, 116, 104)):

|

| 133 |

+

width, height = pil_img.size

|

| 134 |

+

if width == height:

|

| 135 |

+

return pil_img

|

| 136 |

+

elif width > height:

|

| 137 |

+

result = Image.new(pil_img.mode, (width, width), background_color)

|

| 138 |

+

result.paste(pil_img, (0, (width - height) // 2))

|

| 139 |

+

return result

|

| 140 |

+

else:

|

| 141 |

+

result = Image.new(pil_img.mode, (height, height), background_color)

|

| 142 |

+

result.paste(pil_img, ((height - width) // 2, 0))

|

| 143 |

+

return result

|

| 144 |

+

image = expand2square(image)

|

| 145 |

+

elif image_process_mode in ["Default", "Crop"]:

|

| 146 |

+

pass

|

| 147 |

+

elif image_process_mode == "Resize":

|

| 148 |

+

image = image.resize((336, 336))

|

| 149 |

+

else:

|

| 150 |

+

raise ValueError(f"Invalid image_process_mode: {image_process_mode}")

|

| 151 |

+

max_hw, min_hw = max(image.size), min(image.size)

|

| 152 |

+

aspect_ratio = max_hw / min_hw

|

| 153 |

+

max_len, min_len = 800, 400

|

| 154 |

+

shortest_edge = int(min(max_len / aspect_ratio, min_len, min_hw))

|

| 155 |

+

longest_edge = int(shortest_edge * aspect_ratio)

|

| 156 |

+

W, H = image.size

|

| 157 |

+

if longest_edge != max(image.size):

|

| 158 |

+

if H > W:

|

| 159 |

+

H, W = longest_edge, shortest_edge

|

| 160 |

+

else:

|

| 161 |

+

H, W = shortest_edge, longest_edge

|

| 162 |

+

image = image.resize((W, H))

|

| 163 |

+

if return_pil:

|

| 164 |

+

images.append(image)

|

| 165 |

+

else:

|

| 166 |

+

buffered = BytesIO()

|

| 167 |

+

image.save(buffered, format="PNG")

|

| 168 |

+

img_b64_str = base64.b64encode(buffered.getvalue()).decode()

|

| 169 |

+

images.append(img_b64_str)

|

| 170 |

+

return images

|

| 171 |

+

|

| 172 |

+

def to_gradio_chatbot(self):

|

| 173 |

+

ret = []

|

| 174 |

+

for i, (role, msg) in enumerate(self.messages[self.offset:]):

|

| 175 |

+

if i % 2 == 0:

|

| 176 |

+

if type(msg) is tuple:

|

| 177 |

+

import base64

|

| 178 |

+

from io import BytesIO

|

| 179 |

+

msg, image, image_process_mode = msg

|

| 180 |

+

max_hw, min_hw = max(image.size), min(image.size)

|

| 181 |

+

aspect_ratio = max_hw / min_hw

|

| 182 |

+

max_len, min_len = 800, 400

|

| 183 |

+

shortest_edge = int(min(max_len / aspect_ratio, min_len, min_hw))

|

| 184 |

+

longest_edge = int(shortest_edge * aspect_ratio)

|

| 185 |

+

W, H = image.size

|

| 186 |

+

if H > W:

|

| 187 |

+

H, W = longest_edge, shortest_edge

|

| 188 |

+

else:

|

| 189 |

+

H, W = shortest_edge, longest_edge

|

| 190 |

+

image = image.resize((W, H))

|

| 191 |

+

buffered = BytesIO()

|

| 192 |

+

image.save(buffered, format="JPEG")

|

| 193 |

+

img_b64_str = base64.b64encode(buffered.getvalue()).decode()

|

| 194 |

+

img_str = f'<img src="data:image/jpeg;base64,{img_b64_str}" alt="user upload image" />'

|

| 195 |

+

msg = img_str + msg.replace('<|image|>', '').strip()

|

| 196 |

+

ret.append([msg, None])

|

| 197 |

+

else:

|

| 198 |

+

ret.append([msg, None])

|

| 199 |

+

else:

|

| 200 |

+

ret[-1][-1] = msg

|

| 201 |

+

return ret

|

| 202 |

+

|

| 203 |

+

def copy(self):

|

| 204 |

+

return Conversation(

|

| 205 |

+

system=self.system,

|

| 206 |

+

roles=self.roles,

|

| 207 |

+

messages=[[x, y] for x, y in self.messages],

|

| 208 |

+

offset=self.offset,

|

| 209 |

+

sep_style=self.sep_style,

|

| 210 |

+

sep=self.sep,

|

| 211 |

+

sep2=self.sep2,

|

| 212 |

+

version=self.version)

|

| 213 |

+

|

| 214 |

+

def dict(self):

|

| 215 |

+

if len(self.get_images()) > 0:

|

| 216 |

+

return {

|

| 217 |

+

"system": self.system,

|

| 218 |

+

"roles": self.roles,

|

| 219 |

+

"messages": [[x, y[0] if type(y) is tuple else y] for x, y in self.messages],

|

| 220 |

+

"offset": self.offset,

|

| 221 |

+

"sep": self.sep,

|

| 222 |

+

"sep2": self.sep2,

|

| 223 |

+

}

|

| 224 |

+

return {

|

| 225 |

+

"system": self.system,

|

| 226 |

+

"roles": self.roles,

|

| 227 |

+

"messages": self.messages,

|

| 228 |

+

"offset": self.offset,

|

| 229 |

+

"sep": self.sep,

|

| 230 |

+

"sep2": self.sep2,

|

| 231 |

+

}

|

| 232 |

+

|

| 233 |

+

|

| 234 |

+

conv_vicuna_v0 = Conversation(

|

| 235 |

+

system="A chat between a curious human and an artificial intelligence assistant. "

|

| 236 |

+

"The assistant gives helpful, detailed, and polite answers to the human's questions.",

|

| 237 |

+

roles=("Human", "Assistant"),

|

| 238 |

+

messages=(

|

| 239 |

+

("Human", "What are the key differences between renewable and non-renewable energy sources?"),

|

| 240 |

+

("Assistant",

|

| 241 |

+

"Renewable energy sources are those that can be replenished naturally in a relatively "

|

| 242 |

+

"short amount of time, such as solar, wind, hydro, geothermal, and biomass. "

|

| 243 |

+

"Non-renewable energy sources, on the other hand, are finite and will eventually be "

|

| 244 |

+

"depleted, such as coal, oil, and natural gas. Here are some key differences between "

|

| 245 |

+

"renewable and non-renewable energy sources:\n"

|

| 246 |

+