
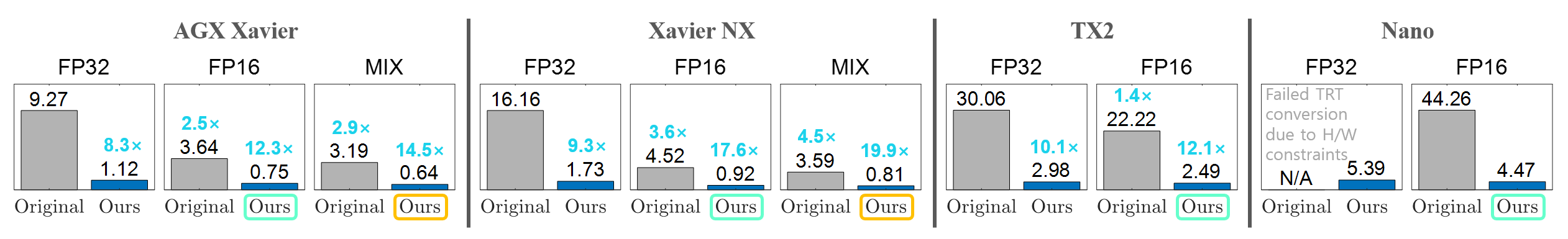

The generation speed may vary depending on network traffic. Nevertheless, our compressed Wav2Lip _consistently_ delivers faster inference than the original model while maintaining similar visual quality. Unlike the paper, this demo measures **total processing time** and **FPS** over the whole pipeline: loading the preprocessed video and audio, generating with the model, and merging the lip-synced facial images back into the original video.
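
To illustrate how end-to-end FPS differs from model-only FPS, here is a minimal sketch that times each pipeline stage and divides the frame count by the total wall-clock time. It is not the demo's actual code: the function `measure_pipeline` and the stage names (`load_preprocessed`, `generate`, `merge_with_video`) are hypothetical, and the stages are stubbed with `time.sleep`.

```python
import time
from typing import Callable, Dict, List, Tuple


def measure_pipeline(
    stages: List[Tuple[str, Callable[[], None]]], num_frames: int
) -> Dict[str, object]:
    """Time each stage and report end-to-end processing time and FPS.

    Hypothetical helper for illustration; not part of the Wav2Lip demo.
    """
    stage_times: Dict[str, float] = {}
    start = time.perf_counter()
    for name, run_stage in stages:
        t0 = time.perf_counter()
        run_stage()
        stage_times[name] = time.perf_counter() - t0
    total_sec = time.perf_counter() - start
    # FPS is computed over the whole pipeline (loading + generation + merging),
    # not over model inference alone.
    fps = num_frames / total_sec if total_sec > 0 else float("inf")
    return {"stage_times": stage_times, "total_sec": total_sec, "fps": fps}


if __name__ == "__main__":
    # Hypothetical stand-ins for the demo's real stages.
    demo_stages = [
        ("load_preprocessed", lambda: time.sleep(0.01)),
        ("generate", lambda: time.sleep(0.02)),
        ("merge_with_video", lambda: time.sleep(0.01)),
    ]
    result = measure_pipeline(demo_stages, num_frames=100)
    print(f"total: {result['total_sec']:.3f} s, FPS: {result['fps']:.1f}")
```

Because loading and merging are included in the denominator, the FPS reported this way will be lower than a model-only measurement, which is why the demo's numbers may not match the paper's.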

### Notice
- This work was accepted to [Demo] [**ICCV 2023 Demo Track**](https://iccv2023.thecvf.com/demos-111.php); [[Paper](https://arxiv.org/abs/2304.00471)] [**On-Device Intelligence Workshop (ODIW) @ MLSys 2023**](https://sites.google.com/g.harvard.edu/on-device-workshop-23/home); [Poster] [**NVIDIA GPU Technology Conference (GTC) as Poster Spotlight**](https://www.nvidia.com/en-us/on-demand/search/?facet.mimetype[]=event%20session&layout=list&page=1&q=52409&sort=relevance&sortDir=desc).
- We thank the [NVIDIA Applied Research Accelerator Program](https://www.nvidia.com/en-us/industries/higher-education-research/applied-research-program/) for supporting this research and [Wav2Lip's authors](https://github.com/Rudrabha/Wav2Lip) for their pioneering research.

