Spaces:

Runtime error

Runtime error

Commit

•

86fe4f0

1

Parent(s):

5bfb5a7

Upload 631 files

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +1 -0

- .gitignore +5 -0

- LICENSE +107 -0

- README.md +71 -0

- checkpoints/README.txt +1 -0

- images/demo.png +3 -0

- images/workflow.png +0 -0

- ootd/inference_ootd.py +133 -0

- ootd/inference_ootd_dc.py +132 -0

- ootd/inference_ootd_hd.py +132 -0

- ootd/pipelines_ootd/attention_garm.py +402 -0

- ootd/pipelines_ootd/attention_vton.py +407 -0

- ootd/pipelines_ootd/pipeline_ootd.py +846 -0

- ootd/pipelines_ootd/transformer_garm_2d.py +449 -0

- ootd/pipelines_ootd/transformer_vton_2d.py +452 -0

- ootd/pipelines_ootd/unet_garm_2d_blocks.py +0 -0

- ootd/pipelines_ootd/unet_garm_2d_condition.py +1183 -0

- ootd/pipelines_ootd/unet_vton_2d_blocks.py +0 -0

- ootd/pipelines_ootd/unet_vton_2d_condition.py +1183 -0

- preprocess/humanparsing/datasets/__init__.py +0 -0

- preprocess/humanparsing/datasets/datasets.py +201 -0

- preprocess/humanparsing/datasets/simple_extractor_dataset.py +89 -0

- preprocess/humanparsing/datasets/target_generation.py +40 -0

- preprocess/humanparsing/mhp_extension/coco_style_annotation_creator/human_to_coco.py +166 -0

- preprocess/humanparsing/mhp_extension/coco_style_annotation_creator/pycococreatortools.py +114 -0

- preprocess/humanparsing/mhp_extension/coco_style_annotation_creator/test_human2coco_format.py +74 -0

- preprocess/humanparsing/mhp_extension/detectron2/.circleci/config.yml +179 -0

- preprocess/humanparsing/mhp_extension/detectron2/.clang-format +85 -0

- preprocess/humanparsing/mhp_extension/detectron2/.flake8 +9 -0

- preprocess/humanparsing/mhp_extension/detectron2/.github/CODE_OF_CONDUCT.md +5 -0

- preprocess/humanparsing/mhp_extension/detectron2/.github/CONTRIBUTING.md +49 -0

- preprocess/humanparsing/mhp_extension/detectron2/.github/Detectron2-Logo-Horz.svg +1 -0

- preprocess/humanparsing/mhp_extension/detectron2/.github/ISSUE_TEMPLATE.md +5 -0

- preprocess/humanparsing/mhp_extension/detectron2/.github/ISSUE_TEMPLATE/bugs.md +36 -0

- preprocess/humanparsing/mhp_extension/detectron2/.github/ISSUE_TEMPLATE/config.yml +9 -0

- preprocess/humanparsing/mhp_extension/detectron2/.github/ISSUE_TEMPLATE/feature-request.md +31 -0

- preprocess/humanparsing/mhp_extension/detectron2/.github/ISSUE_TEMPLATE/questions-help-support.md +26 -0

- preprocess/humanparsing/mhp_extension/detectron2/.github/ISSUE_TEMPLATE/unexpected-problems-bugs.md +45 -0

- preprocess/humanparsing/mhp_extension/detectron2/.github/pull_request_template.md +9 -0

- preprocess/humanparsing/mhp_extension/detectron2/.gitignore +46 -0

- preprocess/humanparsing/mhp_extension/detectron2/GETTING_STARTED.md +79 -0

- preprocess/humanparsing/mhp_extension/detectron2/INSTALL.md +184 -0

- preprocess/humanparsing/mhp_extension/detectron2/LICENSE +201 -0

- preprocess/humanparsing/mhp_extension/detectron2/MODEL_ZOO.md +903 -0

- preprocess/humanparsing/mhp_extension/detectron2/README.md +56 -0

- preprocess/humanparsing/mhp_extension/detectron2/configs/Base-RCNN-C4.yaml +18 -0

- preprocess/humanparsing/mhp_extension/detectron2/configs/Base-RCNN-DilatedC5.yaml +31 -0

- preprocess/humanparsing/mhp_extension/detectron2/configs/Base-RCNN-FPN.yaml +42 -0

- preprocess/humanparsing/mhp_extension/detectron2/configs/Base-RetinaNet.yaml +24 -0

- preprocess/humanparsing/mhp_extension/detectron2/configs/COCO-Detection/fast_rcnn_R_50_FPN_1x.yaml +17 -0

.gitattributes

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

images/demo.png filter=lfs diff=lfs merge=lfs -text

|

.gitignore

ADDED

|

@@ -0,0 +1,5 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

*.ckpt

|

| 2 |

+

__pycache__/

|

| 3 |

+

.vscode/

|

| 4 |

+

*.pyc

|

| 5 |

+

.uuid

|

LICENSE

ADDED

|

@@ -0,0 +1,107 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International

|

| 2 |

+

|

| 3 |

+

Creative Commons Corporation ("Creative Commons") is not a law firm and does not provide legal services or legal advice. Distribution of Creative Commons public licenses does not create a lawyer-client or other relationship. Creative Commons makes its licenses and related information available on an "as-is" basis. Creative Commons gives no warranties regarding its licenses, any material licensed under their terms and conditions, or any related information. Creative Commons disclaims all liability for damages resulting from their use to the fullest extent possible.

|

| 4 |

+

|

| 5 |

+

Using Creative Commons Public Licenses

|

| 6 |

+

|

| 7 |

+

Creative Commons public licenses provide a standard set of terms and conditions that creators and other rights holders may use to share original works of authorship and other material subject to copyright and certain other rights specified in the public license below. The following considerations are for informational purposes only, are not exhaustive, and do not form part of our licenses.

|

| 8 |

+

|

| 9 |

+

Considerations for licensors: Our public licenses are intended for use by those authorized to give the public permission to use material in ways otherwise restricted by copyright and certain other rights. Our licenses are irrevocable. Licensors should read and understand the terms and conditions of the license they choose before applying it. Licensors should also secure all rights necessary before applying our licenses so that the public can reuse the material as expected. Licensors should clearly mark any material not subject to the license. This includes other CC-licensed material, or material used under an exception or limitation to copyright. More considerations for licensors : wiki.creativecommons.org/Considerations_for_licensors

|

| 10 |

+

|

| 11 |

+

Considerations for the public: By using one of our public licenses, a licensor grants the public permission to use the licensed material under specified terms and conditions. If the licensor's permission is not necessary for any reason–for example, because of any applicable exception or limitation to copyright–then that use is not regulated by the license. Our licenses grant only permissions under copyright and certain other rights that a licensor has authority to grant. Use of the licensed material may still be restricted for other reasons, including because others have copyright or other rights in the material. A licensor may make special requests, such as asking that all changes be marked or described. Although not required by our licenses, you are encouraged to respect those requests where reasonable. More considerations for the public : wiki.creativecommons.org/Considerations_for_licensees

|

| 12 |

+

|

| 13 |

+

Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International Public License

|

| 14 |

+

|

| 15 |

+

By exercising the Licensed Rights (defined below), You accept and agree to be bound by the terms and conditions of this Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International Public License ("Public License"). To the extent this Public License may be interpreted as a contract, You are granted the Licensed Rights in consideration of Your acceptance of these terms and conditions, and the Licensor grants You such rights in consideration of benefits the Licensor receives from making the Licensed Material available under these terms and conditions.

|

| 16 |

+

|

| 17 |

+

Section 1 – Definitions.

|

| 18 |

+

|

| 19 |

+

a. Adapted Material means material subject to Copyright and Similar Rights that is derived from or based upon the Licensed Material and in which the Licensed Material is translated, altered, arranged, transformed, or otherwise modified in a manner requiring permission under the Copyright and Similar Rights held by the Licensor. For purposes of this Public License, where the Licensed Material is a musical work, performance, or sound recording, Adapted Material is always produced where the Licensed Material is synched in timed relation with a moving image.

|

| 20 |

+

b. Adapter's License means the license You apply to Your Copyright and Similar Rights in Your contributions to Adapted Material in accordance with the terms and conditions of this Public License.

|

| 21 |

+

c. BY-NC-SA Compatible License means a license listed at creativecommons.org/compatiblelicenses, approved by Creative Commons as essentially the equivalent of this Public License.

|

| 22 |

+

d. Copyright and Similar Rights means copyright and/or similar rights closely related to copyright including, without limitation, performance, broadcast, sound recording, and Sui Generis Database Rights, without regard to how the rights are labeled or categorized. For purposes of this Public License, the rights specified in Section 2(b)(1)-(2) are not Copyright and Similar Rights.

|

| 23 |

+

e. Effective Technological Measures means those measures that, in the absence of proper authority, may not be circumvented under laws fulfilling obligations under Article 11 of the WIPO Copyright Treaty adopted on December 20, 1996, and/or similar international agreements.

|

| 24 |

+

f. Exceptions and Limitations means fair use, fair dealing, and/or any other exception or limitation to Copyright and Similar Rights that applies to Your use of the Licensed Material.

|

| 25 |

+

g. License Elements means the license attributes listed in the name of a Creative Commons Public License. The License Elements of this Public License are Attribution, NonCommercial, and ShareAlike.

|

| 26 |

+

h. Licensed Material means the artistic or literary work, database, or other material to which the Licensor applied this Public License.

|

| 27 |

+

i. Licensed Rights means the rights granted to You subject to the terms and conditions of this Public License, which are limited to all Copyright and Similar Rights that apply to Your use of the Licensed Material and that the Licensor has authority to license.

|

| 28 |

+

j. Licensor means the individual(s) or entity(ies) granting rights under this Public License.

|

| 29 |

+

k. NonCommercial means not primarily intended for or directed towards commercial advantage or monetary compensation. For purposes of this Public License, the exchange of the Licensed Material for other material subject to Copyright and Similar Rights by digital file-sharing or similar means is NonCommercial provided there is no payment of monetary compensation in connection with the exchange.

|

| 30 |

+

l. Share means to provide material to the public by any means or process that requires permission under the Licensed Rights, such as reproduction, public display, public performance, distribution, dissemination, communication, or importation, and to make material available to the public including in ways that members of the public may access the material from a place and at a time individually chosen by them.

|

| 31 |

+

m. Sui Generis Database Rights means rights other than copyright resulting from Directive 96/9/EC of the European Parliament and of the Council of 11 March 1996 on the legal protection of databases, as amended and/or succeeded, as well as other essentially equivalent rights anywhere in the world.

|

| 32 |

+

n. You means the individual or entity exercising the Licensed Rights under this Public License. Your has a corresponding meaning.

|

| 33 |

+

Section 2 – Scope.

|

| 34 |

+

|

| 35 |

+

a. License grant.

|

| 36 |

+

1. Subject to the terms and conditions of this Public License, the Licensor hereby grants You a worldwide, royalty-free, non-sublicensable, non-exclusive, irrevocable license to exercise the Licensed Rights in the Licensed Material to:

|

| 37 |

+

A. reproduce and Share the Licensed Material, in whole or in part, for NonCommercial purposes only; and

|

| 38 |

+

B. produce, reproduce, and Share Adapted Material for NonCommercial purposes only.

|

| 39 |

+

2. Exceptions and Limitations. For the avoidance of doubt, where Exceptions and Limitations apply to Your use, this Public License does not apply, and You do not need to comply with its terms and conditions.

|

| 40 |

+

3. Term. The term of this Public License is specified in Section 6(a).

|

| 41 |

+

4. Media and formats; technical modifications allowed. The Licensor authorizes You to exercise the Licensed Rights in all media and formats whether now known or hereafter created, and to make technical modifications necessary to do so. The Licensor waives and/or agrees not to assert any right or authority to forbid You from making technical modifications necessary to exercise the Licensed Rights, including technical modifications necessary to circumvent Effective Technological Measures. For purposes of this Public License, simply making modifications authorized by this Section 2(a)(4) never produces Adapted Material.

|

| 42 |

+

5. Downstream recipients.

|

| 43 |

+

A. Offer from the Licensor – Licensed Material. Every recipient of the Licensed Material automatically receives an offer from the Licensor to exercise the Licensed Rights under the terms and conditions of this Public License.

|

| 44 |

+

B. Additional offer from the Licensor – Adapted Material. Every recipient of Adapted Material from You automatically receives an offer from the Licensor to exercise the Licensed Rights in the Adapted Material under the conditions of the Adapter's License You apply.

|

| 45 |

+

C. No downstream restrictions. You may not offer or impose any additional or different terms or conditions on, or apply any Effective Technological Measures to, the Licensed Material if doing so restricts exercise of the Licensed Rights by any recipient of the Licensed Material.

|

| 46 |

+

6. No endorsement. Nothing in this Public License constitutes or may be construed as permission to assert or imply that You are, or that Your use of the Licensed Material is, connected with, or sponsored, endorsed, or granted official status by, the Licensor or others designated to receive attribution as provided in Section 3(a)(1)(A)(i).

|

| 47 |

+

b. Other rights.

|

| 48 |

+

1. Moral rights, such as the right of integrity, are not licensed under this Public License, nor are publicity, privacy, and/or other similar personality rights; however, to the extent possible, the Licensor waives and/or agrees not to assert any such rights held by the Licensor to the limited extent necessary to allow You to exercise the Licensed Rights, but not otherwise.

|

| 49 |

+

2. Patent and trademark rights are not licensed under this Public License.

|

| 50 |

+

3. To the extent possible, the Licensor waives any right to collect royalties from You for the exercise of the Licensed Rights, whether directly or through a collecting society under any voluntary or waivable statutory or compulsory licensing scheme. In all other cases the Licensor expressly reserves any right to collect such royalties, including when the Licensed Material is used other than for NonCommercial purposes.

|

| 51 |

+

Section 3 – License Conditions.

|

| 52 |

+

|

| 53 |

+

Your exercise of the Licensed Rights is expressly made subject to the following conditions.

|

| 54 |

+

|

| 55 |

+

a. Attribution.

|

| 56 |

+

1. If You Share the Licensed Material (including in modified form), You must:

|

| 57 |

+

A. retain the following if it is supplied by the Licensor with the Licensed Material:

|

| 58 |

+

i. identification of the creator(s) of the Licensed Material and any others designated to receive attribution, in any reasonable manner requested by the Licensor (including by pseudonym if designated);

|

| 59 |

+

ii. a copyright notice;

|

| 60 |

+

iii. a notice that refers to this Public License;

|

| 61 |

+

iv. a notice that refers to the disclaimer of warranties;

|

| 62 |

+

v. a URI or hyperlink to the Licensed Material to the extent reasonably practicable;

|

| 63 |

+

|

| 64 |

+

B. indicate if You modified the Licensed Material and retain an indication of any previous modifications; and

|

| 65 |

+

C. indicate the Licensed Material is licensed under this Public License, and include the text of, or the URI or hyperlink to, this Public License.

|

| 66 |

+

2. You may satisfy the conditions in Section 3(a)(1) in any reasonable manner based on the medium, means, and context in which You Share the Licensed Material. For example, it may be reasonable to satisfy the conditions by providing a URI or hyperlink to a resource that includes the required information.

|

| 67 |

+

3. If requested by the Licensor, You must remove any of the information required by Section 3(a)(1)(A) to the extent reasonably practicable.

|

| 68 |

+

b. ShareAlike.In addition to the conditions in Section 3(a), if You Share Adapted Material You produce, the following conditions also apply.

|

| 69 |

+

1. The Adapter's License You apply must be a Creative Commons license with the same License Elements, this version or later, or a BY-NC-SA Compatible License.

|

| 70 |

+

2. You must include the text of, or the URI or hyperlink to, the Adapter's License You apply. You may satisfy this condition in any reasonable manner based on the medium, means, and context in which You Share Adapted Material.

|

| 71 |

+

3. You may not offer or impose any additional or different terms or conditions on, or apply any Effective Technological Measures to, Adapted Material that restrict exercise of the rights granted under the Adapter's License You apply.

|

| 72 |

+

Section 4 – Sui Generis Database Rights.

|

| 73 |

+

|

| 74 |

+

Where the Licensed Rights include Sui Generis Database Rights that apply to Your use of the Licensed Material:

|

| 75 |

+

|

| 76 |

+

a. for the avoidance of doubt, Section 2(a)(1) grants You the right to extract, reuse, reproduce, and Share all or a substantial portion of the contents of the database for NonCommercial purposes only;

|

| 77 |

+

b. if You include all or a substantial portion of the database contents in a database in which You have Sui Generis Database Rights, then the database in which You have Sui Generis Database Rights (but not its individual contents) is Adapted Material, including for purposes of Section 3(b); and

|

| 78 |

+

c. You must comply with the conditions in Section 3(a) if You Share all or a substantial portion of the contents of the database.

|

| 79 |

+

For the avoidance of doubt, this Section 4 supplements and does not replace Your obligations under this Public License where the Licensed Rights include other Copyright and Similar Rights.

|

| 80 |

+

Section 5 – Disclaimer of Warranties and Limitation of Liability.

|

| 81 |

+

|

| 82 |

+

a. Unless otherwise separately undertaken by the Licensor, to the extent possible, the Licensor offers the Licensed Material as-is and as-available, and makes no representations or warranties of any kind concerning the Licensed Material, whether express, implied, statutory, or other. This includes, without limitation, warranties of title, merchantability, fitness for a particular purpose, non-infringement, absence of latent or other defects, accuracy, or the presence or absence of errors, whether or not known or discoverable. Where disclaimers of warranties are not allowed in full or in part, this disclaimer may not apply to You.

|

| 83 |

+

b. To the extent possible, in no event will the Licensor be liable to You on any legal theory (including, without limitation, negligence) or otherwise for any direct, special, indirect, incidental, consequential, punitive, exemplary, or other losses, costs, expenses, or damages arising out of this Public License or use of the Licensed Material, even if the Licensor has been advised of the possibility of such losses, costs, expenses, or damages. Where a limitation of liability is not allowed in full or in part, this limitation may not apply to You.

|

| 84 |

+

c. The disclaimer of warranties and limitation of liability provided above shall be interpreted in a manner that, to the extent possible, most closely approximates an absolute disclaimer and waiver of all liability.

|

| 85 |

+

Section 6 – Term and Termination.

|

| 86 |

+

|

| 87 |

+

a. This Public License applies for the term of the Copyright and Similar Rights licensed here. However, if You fail to comply with this Public License, then Your rights under this Public License terminate automatically.

|

| 88 |

+

b. Where Your right to use the Licensed Material has terminated under Section 6(a), it reinstates:

|

| 89 |

+

1. automatically as of the date the violation is cured, provided it is cured within 30 days of Your discovery of the violation; or

|

| 90 |

+

2. upon express reinstatement by the Licensor.

|

| 91 |

+

For the avoidance of doubt, this Section 6(b) does not affect any right the Licensor may have to seek remedies for Your violations of this Public License.

|

| 92 |

+

|

| 93 |

+

c. For the avoidance of doubt, the Licensor may also offer the Licensed Material under separate terms or conditions or stop distributing the Licensed Material at any time; however, doing so will not terminate this Public License.

|

| 94 |

+

d. Sections 1, 5, 6, 7, and 8 survive termination of this Public License.

|

| 95 |

+

Section 7 – Other Terms and Conditions.

|

| 96 |

+

|

| 97 |

+

a. The Licensor shall not be bound by any additional or different terms or conditions communicated by You unless expressly agreed.

|

| 98 |

+

b. Any arrangements, understandings, or agreements regarding the Licensed Material not stated herein are separate from and independent of the terms and conditions of this Public License.

|

| 99 |

+

Section 8 – Interpretation.

|

| 100 |

+

|

| 101 |

+

a. For the avoidance of doubt, this Public License does not, and shall not be interpreted to, reduce, limit, restrict, or impose conditions on any use of the Licensed Material that could lawfully be made without permission under this Public License.

|

| 102 |

+

b. To the extent possible, if any provision of this Public License is deemed unenforceable, it shall be automatically reformed to the minimum extent necessary to make it enforceable. If the provision cannot be reformed, it shall be severed from this Public License without affecting the enforceability of the remaining terms and conditions.

|

| 103 |

+

c. No term or condition of this Public License will be waived and no failure to comply consented to unless expressly agreed to by the Licensor.

|

| 104 |

+

d. Nothing in this Public License constitutes or may be interpreted as a limitation upon, or waiver of, any privileges and immunities that apply to the Licensor or You, including from the legal processes of any jurisdiction or authority.

|

| 105 |

+

Creative Commons is not a party to its public licenses. Notwithstanding, Creative Commons may elect to apply one of its public licenses to material it publishes and in those instances will be considered the "Licensor." The text of the Creative Commons public licenses is dedicated to the public domain under the CC0 Public Domain Dedication. Except for the limited purpose of indicating that material is shared under a Creative Commons public license or as otherwise permitted by the Creative Commons policies published at creativecommons.org/policies, Creative Commons does not authorize the use of the trademark "Creative Commons" or any other trademark or logo of Creative Commons without its prior written consent including, without limitation, in connection with any unauthorized modifications to any of its public licenses or any other arrangements, understandings, or agreements concerning use of licensed material. For the avoidance of doubt, this paragraph does not form part of the public licenses.

|

| 106 |

+

|

| 107 |

+

Creative Commons may be contacted at creativecommons.org.

|

README.md

ADDED

|

@@ -0,0 +1,71 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

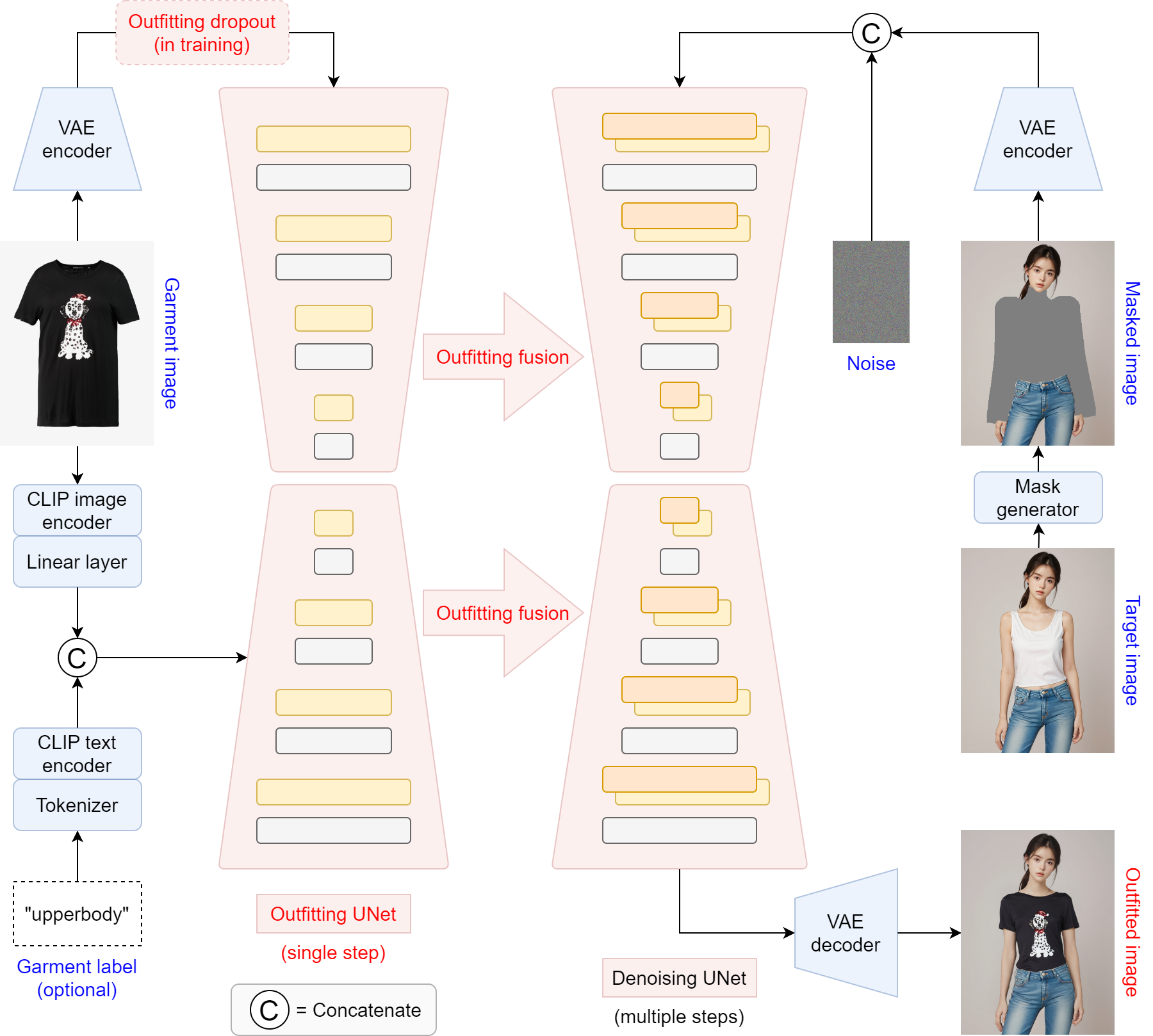

# OOTDiffusion

|

| 2 |

+

This repository is the official implementation of OOTDiffusion

|

| 3 |

+

|

| 4 |

+

🤗 [Try out OOTDiffusion](https://huggingface.co/spaces/levihsu/OOTDiffusion) (Thanks to [ZeroGPU](https://huggingface.co/zero-gpu-explorers) for providing A100 GPUs)

|

| 5 |

+

|

| 6 |

+

Or [try our own demo](https://ootd.ibot.cn/) on RTX 4090 GPUs

|

| 7 |

+

|

| 8 |

+

> **OOTDiffusion: Outfitting Fusion based Latent Diffusion for Controllable Virtual Try-on** [[arXiv paper](https://arxiv.org/abs/2403.01779)]<br>

|

| 9 |

+

> [Yuhao Xu](http://levihsu.github.io/), [Tao Gu](https://github.com/T-Gu), [Weifeng Chen](https://github.com/ShineChen1024), [Chengcai Chen](https://www.researchgate.net/profile/Chengcai-Chen)<br>

|

| 10 |

+

> Xiao-i Research

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

Our model checkpoints trained on [VITON-HD](https://github.com/shadow2496/VITON-HD) (half-body) and [Dress Code](https://github.com/aimagelab/dress-code) (full-body) have been released

|

| 14 |

+

|

| 15 |

+

* 🤗 [Hugging Face link](https://huggingface.co/levihsu/OOTDiffusion)

|

| 16 |

+

* 📢📢 We support ONNX for [humanparsing](https://github.com/GoGoDuck912/Self-Correction-Human-Parsing) now. Most environmental issues should have been addressed : )

|

| 17 |

+

* Please download [clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14) into ***checkpoints*** folder

|

| 18 |

+

* We've only tested our code and models on Linux (Ubuntu 22.04)

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

## Installation

|

| 24 |

+

1. Clone the repository

|

| 25 |

+

|

| 26 |

+

```sh

|

| 27 |

+

git clone https://github.com/levihsu/OOTDiffusion

|

| 28 |

+

```

|

| 29 |

+

|

| 30 |

+

2. Create a conda environment and install the required packages

|

| 31 |

+

|

| 32 |

+

```sh

|

| 33 |

+

conda create -n ootd python==3.10

|

| 34 |

+

conda activate ootd

|

| 35 |

+

pip install torch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2

|

| 36 |

+

pip install -r requirements.txt

|

| 37 |

+

```

|

| 38 |

+

|

| 39 |

+

## Inference

|

| 40 |

+

1. Half-body model

|

| 41 |

+

|

| 42 |

+

```sh

|

| 43 |

+

cd OOTDiffusion/run

|

| 44 |

+

python run_ootd.py --model_path <model-image-path> --cloth_path <cloth-image-path> --scale 2.0 --sample 4

|

| 45 |

+

```

|

| 46 |

+

|

| 47 |

+

2. Full-body model

|

| 48 |

+

|

| 49 |

+

> Garment category must be paired: 0 = upperbody; 1 = lowerbody; 2 = dress

|

| 50 |

+

|

| 51 |

+

```sh

|

| 52 |

+

cd OOTDiffusion/run

|

| 53 |

+

python run_ootd.py --model_path <model-image-path> --cloth_path <cloth-image-path> --model_type dc --category 2 --scale 2.0 --sample 4

|

| 54 |

+

```

|

| 55 |

+

|

| 56 |

+

## Citation

|

| 57 |

+

```

|

| 58 |

+

@article{xu2024ootdiffusion,

|

| 59 |

+

title={OOTDiffusion: Outfitting Fusion based Latent Diffusion for Controllable Virtual Try-on},

|

| 60 |

+

author={Xu, Yuhao and Gu, Tao and Chen, Weifeng and Chen, Chengcai},

|

| 61 |

+

journal={arXiv preprint arXiv:2403.01779},

|

| 62 |

+

year={2024}

|

| 63 |

+

}

|

| 64 |

+

```

|

| 65 |

+

|

| 66 |

+

## TODO List

|

| 67 |

+

- [x] Paper

|

| 68 |

+

- [x] Gradio demo

|

| 69 |

+

- [x] Inference code

|

| 70 |

+

- [x] Model weights

|

| 71 |

+

- [ ] Training code

|

checkpoints/README.txt

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

Put checkpoints here, including ootd, humanparsing, openpose and clip-vit-large-patch14

|

images/demo.png

ADDED

|

Git LFS Details

|

images/workflow.png

ADDED

|

ootd/inference_ootd.py

ADDED

|

@@ -0,0 +1,133 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import pdb

|

| 2 |

+

from pathlib import Path

|

| 3 |

+

import sys

|

| 4 |

+

PROJECT_ROOT = Path(__file__).absolute().parents[0].absolute()

|

| 5 |

+

sys.path.insert(0, str(PROJECT_ROOT))

|

| 6 |

+

import os

|

| 7 |

+

|

| 8 |

+

import torch

|

| 9 |

+

import numpy as np

|

| 10 |

+

from PIL import Image

|

| 11 |

+

import cv2

|

| 12 |

+

|

| 13 |

+

import random

|

| 14 |

+

import time

|

| 15 |

+

import pdb

|

| 16 |

+

|

| 17 |

+

from pipelines_ootd.pipeline_ootd import OotdPipeline

|

| 18 |

+

from pipelines_ootd.unet_garm_2d_condition import UNetGarm2DConditionModel

|

| 19 |

+

from pipelines_ootd.unet_vton_2d_condition import UNetVton2DConditionModel

|

| 20 |

+

from diffusers import UniPCMultistepScheduler

|

| 21 |

+

from diffusers import AutoencoderKL

|

| 22 |

+

|

| 23 |

+

import torch.nn as nn

|

| 24 |

+

import torch.nn.functional as F

|

| 25 |

+

from transformers import AutoProcessor, CLIPVisionModelWithProjection

|

| 26 |

+

from transformers import CLIPTextModel, CLIPTokenizer

|

| 27 |

+

|

| 28 |

+

VIT_PATH = "../checkpoints/clip-vit-large-patch14"

|

| 29 |

+

VAE_PATH = "../checkpoints/ootd"

|

| 30 |

+

UNET_PATH = "../checkpoints/ootd/ootd_hd/checkpoint-36000"

|

| 31 |

+

MODEL_PATH = "../checkpoints/ootd"

|

| 32 |

+

|

| 33 |

+

class OOTDiffusion:

|

| 34 |

+

|

| 35 |

+

def __init__(self, gpu_id):

|

| 36 |

+

self.gpu_id = 'cuda:' + str(gpu_id)

|

| 37 |

+

|

| 38 |

+

vae = AutoencoderKL.from_pretrained(

|

| 39 |

+

VAE_PATH,

|

| 40 |

+

subfolder="vae",

|

| 41 |

+

torch_dtype=torch.float16,

|

| 42 |

+

)

|

| 43 |

+

|

| 44 |

+

unet_garm = UNetGarm2DConditionModel.from_pretrained(

|

| 45 |

+

UNET_PATH,

|

| 46 |

+

subfolder="unet_garm",

|

| 47 |

+

torch_dtype=torch.float16,

|

| 48 |

+

use_safetensors=True,

|

| 49 |

+

)

|

| 50 |

+

unet_vton = UNetVton2DConditionModel.from_pretrained(

|

| 51 |

+

UNET_PATH,

|

| 52 |

+

subfolder="unet_vton",

|

| 53 |

+

torch_dtype=torch.float16,

|

| 54 |

+

use_safetensors=True,

|

| 55 |

+

)

|

| 56 |

+

|

| 57 |

+

self.pipe = OotdPipeline.from_pretrained(

|

| 58 |

+

MODEL_PATH,

|

| 59 |

+

unet_garm=unet_garm,

|

| 60 |

+

unet_vton=unet_vton,

|

| 61 |

+

vae=vae,

|

| 62 |

+

torch_dtype=torch.float16,

|

| 63 |

+

variant="fp16",

|

| 64 |

+

use_safetensors=True,

|

| 65 |

+

safety_checker=None,

|

| 66 |

+

requires_safety_checker=False,

|

| 67 |

+

).to(self.gpu_id)

|

| 68 |

+

|

| 69 |

+

self.pipe.scheduler = UniPCMultistepScheduler.from_config(self.pipe.scheduler.config)

|

| 70 |

+

|

| 71 |

+

self.auto_processor = AutoProcessor.from_pretrained(VIT_PATH)

|

| 72 |

+

self.image_encoder = CLIPVisionModelWithProjection.from_pretrained(VIT_PATH).to(self.gpu_id)

|

| 73 |

+

|

| 74 |

+

self.tokenizer = CLIPTokenizer.from_pretrained(

|

| 75 |

+

MODEL_PATH,

|

| 76 |

+

subfolder="tokenizer",

|

| 77 |

+

)

|

| 78 |

+

self.text_encoder = CLIPTextModel.from_pretrained(

|

| 79 |

+

MODEL_PATH,

|

| 80 |

+

subfolder="text_encoder",

|

| 81 |

+

).to(self.gpu_id)

|

| 82 |

+

|

| 83 |

+

|

| 84 |

+

def tokenize_captions(self, captions, max_length):

|

| 85 |

+

inputs = self.tokenizer(

|

| 86 |

+

captions, max_length=max_length, padding="max_length", truncation=True, return_tensors="pt"

|

| 87 |

+

)

|

| 88 |

+

return inputs.input_ids

|

| 89 |

+

|

| 90 |

+

|

| 91 |

+

def __call__(self,

|

| 92 |

+

model_type='hd',

|

| 93 |

+

category='upperbody',

|

| 94 |

+

image_garm=None,

|

| 95 |

+

image_vton=None,

|

| 96 |

+

mask=None,

|

| 97 |

+

image_ori=None,

|

| 98 |

+

num_samples=1,

|

| 99 |

+

num_steps=20,

|

| 100 |

+

image_scale=1.0,

|

| 101 |

+

seed=-1,

|

| 102 |

+

):

|

| 103 |

+

if seed == -1:

|

| 104 |

+

random.seed(time.time())

|

| 105 |

+

seed = random.randint(0, 2147483647)

|

| 106 |

+

print('Initial seed: ' + str(seed))

|

| 107 |

+

generator = torch.manual_seed(seed)

|

| 108 |

+

|

| 109 |

+

with torch.no_grad():

|

| 110 |

+

prompt_image = self.auto_processor(images=image_garm, return_tensors="pt").to(self.gpu_id)

|

| 111 |

+

prompt_image = self.image_encoder(prompt_image.data['pixel_values']).image_embeds

|

| 112 |

+

prompt_image = prompt_image.unsqueeze(1)

|

| 113 |

+

if model_type == 'hd':

|

| 114 |

+

prompt_embeds = self.text_encoder(self.tokenize_captions([""], 2).to(self.gpu_id))[0]

|

| 115 |

+

prompt_embeds[:, 1:] = prompt_image[:]

|

| 116 |

+

elif model_type == 'dc':

|

| 117 |

+

prompt_embeds = self.text_encoder(self.tokenize_captions([category], 3).to(self.gpu_id))[0]

|

| 118 |

+

prompt_embeds = torch.cat([prompt_embeds, prompt_image], dim=1)

|

| 119 |

+

else:

|

| 120 |

+

raise ValueError("model_type must be \'hd\' or \'dc\'!")

|

| 121 |

+

|

| 122 |

+

images = self.pipe(prompt_embeds=prompt_embeds,

|

| 123 |

+

image_garm=image_garm,

|

| 124 |

+

image_vton=image_vton,

|

| 125 |

+

mask=mask,

|

| 126 |

+

image_ori=image_ori,

|

| 127 |

+

num_inference_steps=num_steps,

|

| 128 |

+

image_guidance_scale=image_scale,

|

| 129 |

+

num_images_per_prompt=num_samples,

|

| 130 |

+

generator=generator,

|

| 131 |

+

).images

|

| 132 |

+

|

| 133 |

+

return images

|

ootd/inference_ootd_dc.py

ADDED

|

@@ -0,0 +1,132 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import pdb

|

| 2 |

+

from pathlib import Path

|

| 3 |

+

import sys

|

| 4 |

+

PROJECT_ROOT = Path(__file__).absolute().parents[0].absolute()

|

| 5 |

+

sys.path.insert(0, str(PROJECT_ROOT))

|

| 6 |

+

import os

|

| 7 |

+

import torch

|

| 8 |

+

import numpy as np

|

| 9 |

+

from PIL import Image

|

| 10 |

+

import cv2

|

| 11 |

+

|

| 12 |

+

import random

|

| 13 |

+

import time

|

| 14 |

+

import pdb

|

| 15 |

+

|

| 16 |

+

from pipelines_ootd.pipeline_ootd import OotdPipeline

|

| 17 |

+

from pipelines_ootd.unet_garm_2d_condition import UNetGarm2DConditionModel

|

| 18 |

+

from pipelines_ootd.unet_vton_2d_condition import UNetVton2DConditionModel

|

| 19 |

+

from diffusers import UniPCMultistepScheduler

|

| 20 |

+

from diffusers import AutoencoderKL

|

| 21 |

+

|

| 22 |

+

import torch.nn as nn

|

| 23 |

+

import torch.nn.functional as F

|

| 24 |

+

from transformers import AutoProcessor, CLIPVisionModelWithProjection

|

| 25 |

+

from transformers import CLIPTextModel, CLIPTokenizer

|

| 26 |

+

|

| 27 |

+

VIT_PATH = "../checkpoints/clip-vit-large-patch14"

|

| 28 |

+

VAE_PATH = "../checkpoints/ootd"

|

| 29 |

+

UNET_PATH = "../checkpoints/ootd/ootd_dc/checkpoint-36000"

|

| 30 |

+

MODEL_PATH = "../checkpoints/ootd"

|

| 31 |

+

|

| 32 |

+

class OOTDiffusionDC:

|

| 33 |

+

|

| 34 |

+

def __init__(self, gpu_id):

|

| 35 |

+

self.gpu_id = 'cuda:' + str(gpu_id)

|

| 36 |

+

|

| 37 |

+

vae = AutoencoderKL.from_pretrained(

|

| 38 |

+

VAE_PATH,

|

| 39 |

+

subfolder="vae",

|

| 40 |

+

torch_dtype=torch.float16,

|

| 41 |

+

)

|

| 42 |

+

|

| 43 |

+

unet_garm = UNetGarm2DConditionModel.from_pretrained(

|

| 44 |

+

UNET_PATH,

|

| 45 |

+

subfolder="unet_garm",

|

| 46 |

+

torch_dtype=torch.float16,

|

| 47 |

+

use_safetensors=True,

|

| 48 |

+

)

|

| 49 |

+

unet_vton = UNetVton2DConditionModel.from_pretrained(

|

| 50 |

+

UNET_PATH,

|

| 51 |

+

subfolder="unet_vton",

|

| 52 |

+

torch_dtype=torch.float16,

|

| 53 |

+

use_safetensors=True,

|

| 54 |

+

)

|

| 55 |

+

|

| 56 |

+

self.pipe = OotdPipeline.from_pretrained(

|

| 57 |

+

MODEL_PATH,

|

| 58 |

+

unet_garm=unet_garm,

|

| 59 |

+

unet_vton=unet_vton,

|

| 60 |

+

vae=vae,

|

| 61 |

+

torch_dtype=torch.float16,

|

| 62 |

+

variant="fp16",

|

| 63 |

+

use_safetensors=True,

|

| 64 |

+

safety_checker=None,

|

| 65 |

+

requires_safety_checker=False,

|

| 66 |

+

).to(self.gpu_id)

|

| 67 |

+

|

| 68 |

+

self.pipe.scheduler = UniPCMultistepScheduler.from_config(self.pipe.scheduler.config)

|

| 69 |

+

|

| 70 |

+

self.auto_processor = AutoProcessor.from_pretrained(VIT_PATH)

|

| 71 |

+

self.image_encoder = CLIPVisionModelWithProjection.from_pretrained(VIT_PATH).to(self.gpu_id)

|

| 72 |

+

|

| 73 |

+

self.tokenizer = CLIPTokenizer.from_pretrained(

|

| 74 |

+

MODEL_PATH,

|

| 75 |

+

subfolder="tokenizer",

|

| 76 |

+

)

|

| 77 |

+

self.text_encoder = CLIPTextModel.from_pretrained(

|

| 78 |

+

MODEL_PATH,

|

| 79 |

+

subfolder="text_encoder",

|

| 80 |

+

).to(self.gpu_id)

|

| 81 |

+

|

| 82 |

+

|

| 83 |

+

def tokenize_captions(self, captions, max_length):

|

| 84 |

+

inputs = self.tokenizer(

|

| 85 |

+

captions, max_length=max_length, padding="max_length", truncation=True, return_tensors="pt"

|

| 86 |

+

)

|

| 87 |

+

return inputs.input_ids

|

| 88 |

+

|

| 89 |

+

|

| 90 |

+

def __call__(self,

|

| 91 |

+

model_type='hd',

|

| 92 |

+

category='upperbody',

|

| 93 |

+

image_garm=None,

|

| 94 |

+

image_vton=None,

|

| 95 |

+

mask=None,

|

| 96 |

+

image_ori=None,

|

| 97 |

+

num_samples=1,

|

| 98 |

+

num_steps=20,

|

| 99 |

+

image_scale=1.0,

|

| 100 |

+

seed=-1,

|

| 101 |

+

):

|

| 102 |

+

if seed == -1:

|

| 103 |

+

random.seed(time.time())

|

| 104 |

+

seed = random.randint(0, 2147483647)

|

| 105 |

+

print('Initial seed: ' + str(seed))

|

| 106 |

+

generator = torch.manual_seed(seed)

|

| 107 |

+

|

| 108 |

+

with torch.no_grad():

|

| 109 |

+

prompt_image = self.auto_processor(images=image_garm, return_tensors="pt").to(self.gpu_id)

|

| 110 |

+

prompt_image = self.image_encoder(prompt_image.data['pixel_values']).image_embeds

|

| 111 |

+

prompt_image = prompt_image.unsqueeze(1)

|

| 112 |

+

if model_type == 'hd':

|

| 113 |

+

prompt_embeds = self.text_encoder(self.tokenize_captions([""], 2).to(self.gpu_id))[0]

|

| 114 |

+

prompt_embeds[:, 1:] = prompt_image[:]

|

| 115 |

+

elif model_type == 'dc':

|

| 116 |

+

prompt_embeds = self.text_encoder(self.tokenize_captions([category], 3).to(self.gpu_id))[0]

|

| 117 |

+

prompt_embeds = torch.cat([prompt_embeds, prompt_image], dim=1)

|

| 118 |

+

else:

|

| 119 |

+

raise ValueError("model_type must be \'hd\' or \'dc\'!")

|

| 120 |

+

|

| 121 |

+

images = self.pipe(prompt_embeds=prompt_embeds,

|

| 122 |

+

image_garm=image_garm,

|

| 123 |

+

image_vton=image_vton,

|

| 124 |

+

mask=mask,

|

| 125 |

+

image_ori=image_ori,

|

| 126 |

+

num_inference_steps=num_steps,

|

| 127 |

+

image_guidance_scale=image_scale,

|

| 128 |

+

num_images_per_prompt=num_samples,

|

| 129 |

+

generator=generator,

|

| 130 |

+

).images

|

| 131 |

+

|

| 132 |

+

return images

|

ootd/inference_ootd_hd.py

ADDED

|

@@ -0,0 +1,132 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import pdb

|

| 2 |

+

from pathlib import Path

|

| 3 |

+

import sys

|

| 4 |

+

PROJECT_ROOT = Path(__file__).absolute().parents[0].absolute()

|

| 5 |

+

sys.path.insert(0, str(PROJECT_ROOT))

|

| 6 |

+

import os

|

| 7 |

+

import torch

|

| 8 |

+

import numpy as np

|

| 9 |

+

from PIL import Image

|

| 10 |

+

import cv2

|

| 11 |

+

|

| 12 |

+

import random

|

| 13 |

+

import time

|

| 14 |

+

import pdb

|

| 15 |

+

|

| 16 |

+

from pipelines_ootd.pipeline_ootd import OotdPipeline

|

| 17 |

+

from pipelines_ootd.unet_garm_2d_condition import UNetGarm2DConditionModel

|

| 18 |

+

from pipelines_ootd.unet_vton_2d_condition import UNetVton2DConditionModel

|

| 19 |

+

from diffusers import UniPCMultistepScheduler

|

| 20 |

+

from diffusers import AutoencoderKL

|

| 21 |

+

|

| 22 |

+

import torch.nn as nn

|

| 23 |

+

import torch.nn.functional as F

|

| 24 |

+

from transformers import AutoProcessor, CLIPVisionModelWithProjection

|

| 25 |

+

from transformers import CLIPTextModel, CLIPTokenizer

|

| 26 |

+

|

| 27 |

+

VIT_PATH = "../checkpoints/clip-vit-large-patch14"

|

| 28 |

+

VAE_PATH = "../checkpoints/ootd"

|

| 29 |

+

UNET_PATH = "../checkpoints/ootd/ootd_hd/checkpoint-36000"

|

| 30 |

+

MODEL_PATH = "../checkpoints/ootd"

|

| 31 |

+

|

| 32 |

+

class OOTDiffusionHD:

|

| 33 |

+

|

| 34 |

+

def __init__(self, gpu_id):

|

| 35 |

+

self.gpu_id = 'cuda:' + str(gpu_id)

|

| 36 |

+

|

| 37 |

+

vae = AutoencoderKL.from_pretrained(

|

| 38 |

+

VAE_PATH,

|

| 39 |

+

subfolder="vae",

|

| 40 |

+

torch_dtype=torch.float16,

|

| 41 |

+

)

|

| 42 |

+

|

| 43 |

+

unet_garm = UNetGarm2DConditionModel.from_pretrained(

|

| 44 |

+

UNET_PATH,

|

| 45 |

+

subfolder="unet_garm",

|

| 46 |

+

torch_dtype=torch.float16,

|

| 47 |

+

use_safetensors=True,

|

| 48 |

+

)

|

| 49 |

+

unet_vton = UNetVton2DConditionModel.from_pretrained(

|

| 50 |

+

UNET_PATH,

|

| 51 |

+

subfolder="unet_vton",

|

| 52 |

+

torch_dtype=torch.float16,

|

| 53 |

+

use_safetensors=True,

|

| 54 |

+

)

|

| 55 |

+

|

| 56 |

+

self.pipe = OotdPipeline.from_pretrained(

|

| 57 |

+

MODEL_PATH,

|

| 58 |

+

unet_garm=unet_garm,

|

| 59 |

+

unet_vton=unet_vton,

|

| 60 |

+

vae=vae,

|

| 61 |

+

torch_dtype=torch.float16,

|

| 62 |

+

variant="fp16",

|

| 63 |

+

use_safetensors=True,

|

| 64 |

+

safety_checker=None,

|

| 65 |

+

requires_safety_checker=False,

|

| 66 |

+

).to(self.gpu_id)

|

| 67 |

+

|

| 68 |

+

self.pipe.scheduler = UniPCMultistepScheduler.from_config(self.pipe.scheduler.config)

|

| 69 |

+

|

| 70 |

+

self.auto_processor = AutoProcessor.from_pretrained(VIT_PATH)

|

| 71 |

+

self.image_encoder = CLIPVisionModelWithProjection.from_pretrained(VIT_PATH).to(self.gpu_id)

|

| 72 |

+

|

| 73 |

+

self.tokenizer = CLIPTokenizer.from_pretrained(

|

| 74 |

+

MODEL_PATH,

|

| 75 |

+

subfolder="tokenizer",

|

| 76 |

+

)

|

| 77 |

+

self.text_encoder = CLIPTextModel.from_pretrained(

|

| 78 |

+

MODEL_PATH,

|

| 79 |

+

subfolder="text_encoder",

|

| 80 |

+

).to(self.gpu_id)

|

| 81 |

+

|

| 82 |

+

|

| 83 |

+

def tokenize_captions(self, captions, max_length):

|

| 84 |

+

inputs = self.tokenizer(

|

| 85 |

+

captions, max_length=max_length, padding="max_length", truncation=True, return_tensors="pt"

|

| 86 |

+

)

|

| 87 |

+

return inputs.input_ids

|

| 88 |

+

|

| 89 |

+

|

| 90 |

+

def __call__(self,

|

| 91 |

+

model_type='hd',

|

| 92 |

+

category='upperbody',

|

| 93 |

+

image_garm=None,

|

| 94 |

+

image_vton=None,

|

| 95 |

+

mask=None,

|

| 96 |

+

image_ori=None,

|

| 97 |

+

num_samples=1,

|

| 98 |

+

num_steps=20,

|

| 99 |

+

image_scale=1.0,

|

| 100 |

+

seed=-1,

|

| 101 |

+

):

|

| 102 |

+

if seed == -1:

|

| 103 |

+

random.seed(time.time())

|

| 104 |

+

seed = random.randint(0, 2147483647)

|

| 105 |

+

print('Initial seed: ' + str(seed))

|

| 106 |

+

generator = torch.manual_seed(seed)

|

| 107 |

+

|

| 108 |

+

with torch.no_grad():

|

| 109 |

+

prompt_image = self.auto_processor(images=image_garm, return_tensors="pt").to(self.gpu_id)

|

| 110 |

+

prompt_image = self.image_encoder(prompt_image.data['pixel_values']).image_embeds

|

| 111 |

+

prompt_image = prompt_image.unsqueeze(1)

|

| 112 |

+

if model_type == 'hd':

|

| 113 |

+

prompt_embeds = self.text_encoder(self.tokenize_captions([""], 2).to(self.gpu_id))[0]

|

| 114 |

+

prompt_embeds[:, 1:] = prompt_image[:]

|

| 115 |

+

elif model_type == 'dc':

|

| 116 |

+

prompt_embeds = self.text_encoder(self.tokenize_captions([category], 3).to(self.gpu_id))[0]

|

| 117 |

+

prompt_embeds = torch.cat([prompt_embeds, prompt_image], dim=1)

|

| 118 |

+

else:

|

| 119 |

+

raise ValueError("model_type must be \'hd\' or \'dc\'!")

|

| 120 |

+

|

| 121 |

+

images = self.pipe(prompt_embeds=prompt_embeds,

|

| 122 |

+

image_garm=image_garm,

|

| 123 |

+

image_vton=image_vton,

|

| 124 |

+

mask=mask,

|

| 125 |

+

image_ori=image_ori,

|

| 126 |

+

num_inference_steps=num_steps,

|

| 127 |

+

image_guidance_scale=image_scale,

|

| 128 |

+

num_images_per_prompt=num_samples,

|

| 129 |

+

generator=generator,

|

| 130 |

+

).images

|

| 131 |

+

|

| 132 |

+

return images

|

ootd/pipelines_ootd/attention_garm.py

ADDED

|

@@ -0,0 +1,402 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|