Spaces:

Build error

Build error

Commit

•

de59d95

1

Parent(s):

ec5cf45

Upload 30 files

Browse files- .gitattributes +3 -0

- fluxgym-main/.dockerignore +16 -0

- fluxgym-main/Dockerfile +53 -0

- fluxgym-main/Dockerfile.cuda12.4 +53 -0

- fluxgym-main/README.md +259 -0

- fluxgym-main/advanced.png +0 -0

- fluxgym-main/app-launch.sh +5 -0

- fluxgym-main/app.py +1119 -0

- fluxgym-main/docker-compose.yml +28 -0

- fluxgym-main/flags.png +0 -0

- fluxgym-main/flow.gif +3 -0

- fluxgym-main/icon.png +0 -0

- fluxgym-main/install.js +96 -0

- fluxgym-main/models.yaml +27 -0

- fluxgym-main/models/.gitkeep +0 -0

- fluxgym-main/models/clip/.gitkeep +0 -0

- fluxgym-main/models/unet/.gitkeep +0 -0

- fluxgym-main/models/vae/.gitkeep +0 -0

- fluxgym-main/outputs/.gitkeep +0 -0

- fluxgym-main/pinokio.js +95 -0

- fluxgym-main/pinokio_meta.json +38 -0

- fluxgym-main/publish_to_hf.png +0 -0

- fluxgym-main/requirements.txt +34 -0

- fluxgym-main/reset.js +13 -0

- fluxgym-main/sample.png +3 -0

- fluxgym-main/sample_fields.png +0 -0

- fluxgym-main/screenshot.png +0 -0

- fluxgym-main/seed.gif +3 -0

- fluxgym-main/start.js +37 -0

- fluxgym-main/torch.js +75 -0

- fluxgym-main/update.js +46 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,6 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

fluxgym-main/flow.gif filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

fluxgym-main/sample.png filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

fluxgym-main/seed.gif filter=lfs diff=lfs merge=lfs -text

|

fluxgym-main/.dockerignore

ADDED

|

@@ -0,0 +1,16 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

.cache/

|

| 2 |

+

cudnn_windows/

|

| 3 |

+

bitsandbytes_windows/

|

| 4 |

+

bitsandbytes_windows_deprecated/

|

| 5 |

+

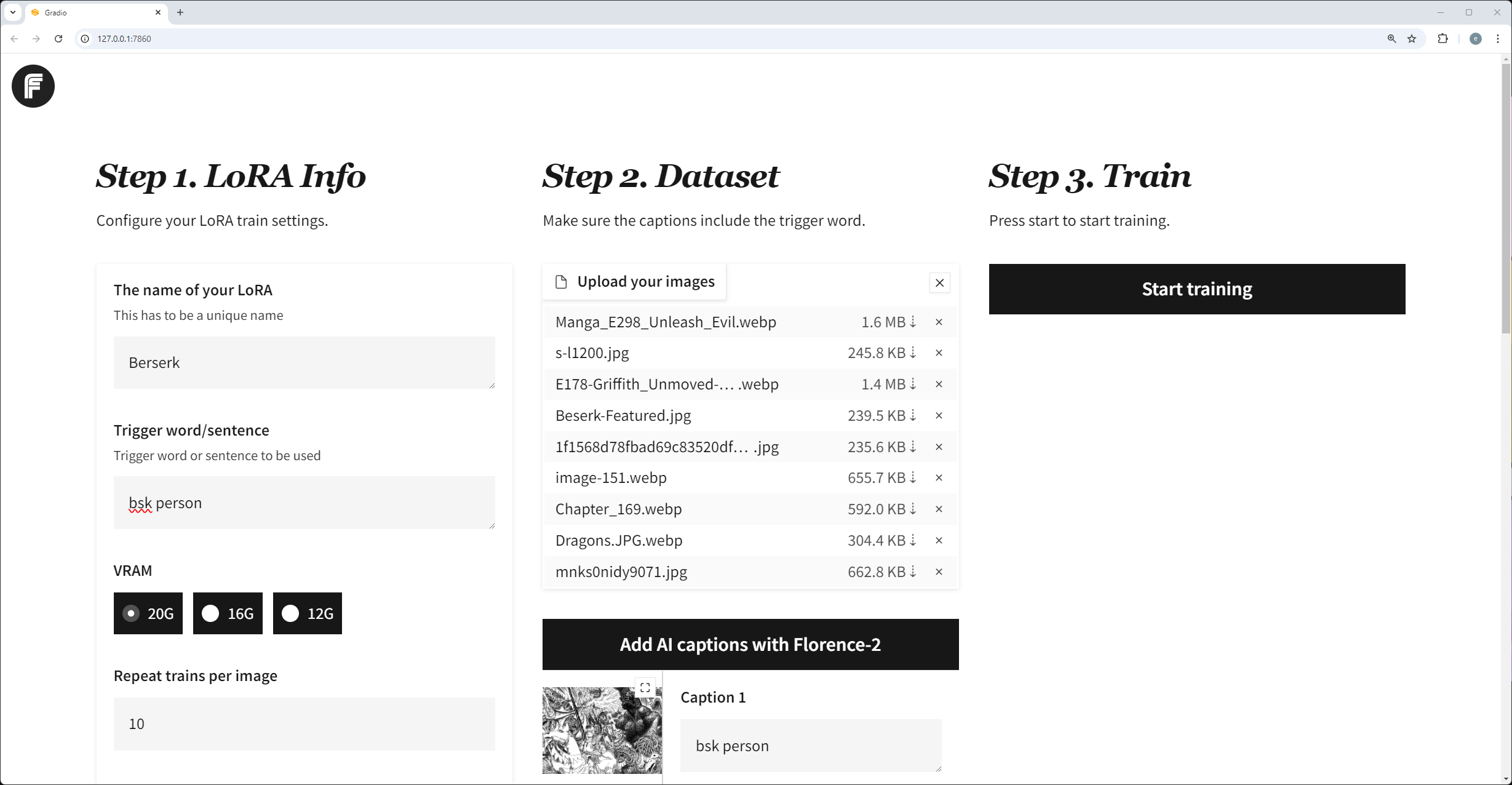

dataset/

|

| 6 |

+

__pycache__/

|

| 7 |

+

venv/

|

| 8 |

+

**/.hadolint.yml

|

| 9 |

+

**/*.log

|

| 10 |

+

**/.git

|

| 11 |

+

**/.gitignore

|

| 12 |

+

**/.env

|

| 13 |

+

**/.github

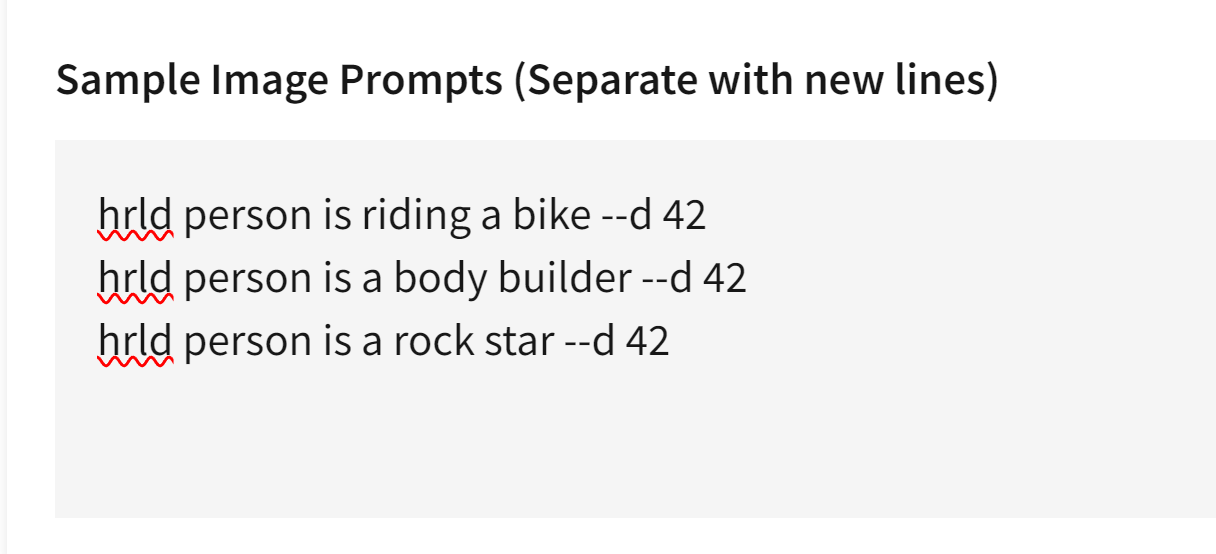

|

| 14 |

+

**/.vscode

|

| 15 |

+

**/*.ps1

|

| 16 |

+

sd-scripts/

|

fluxgym-main/Dockerfile

ADDED

|

@@ -0,0 +1,53 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

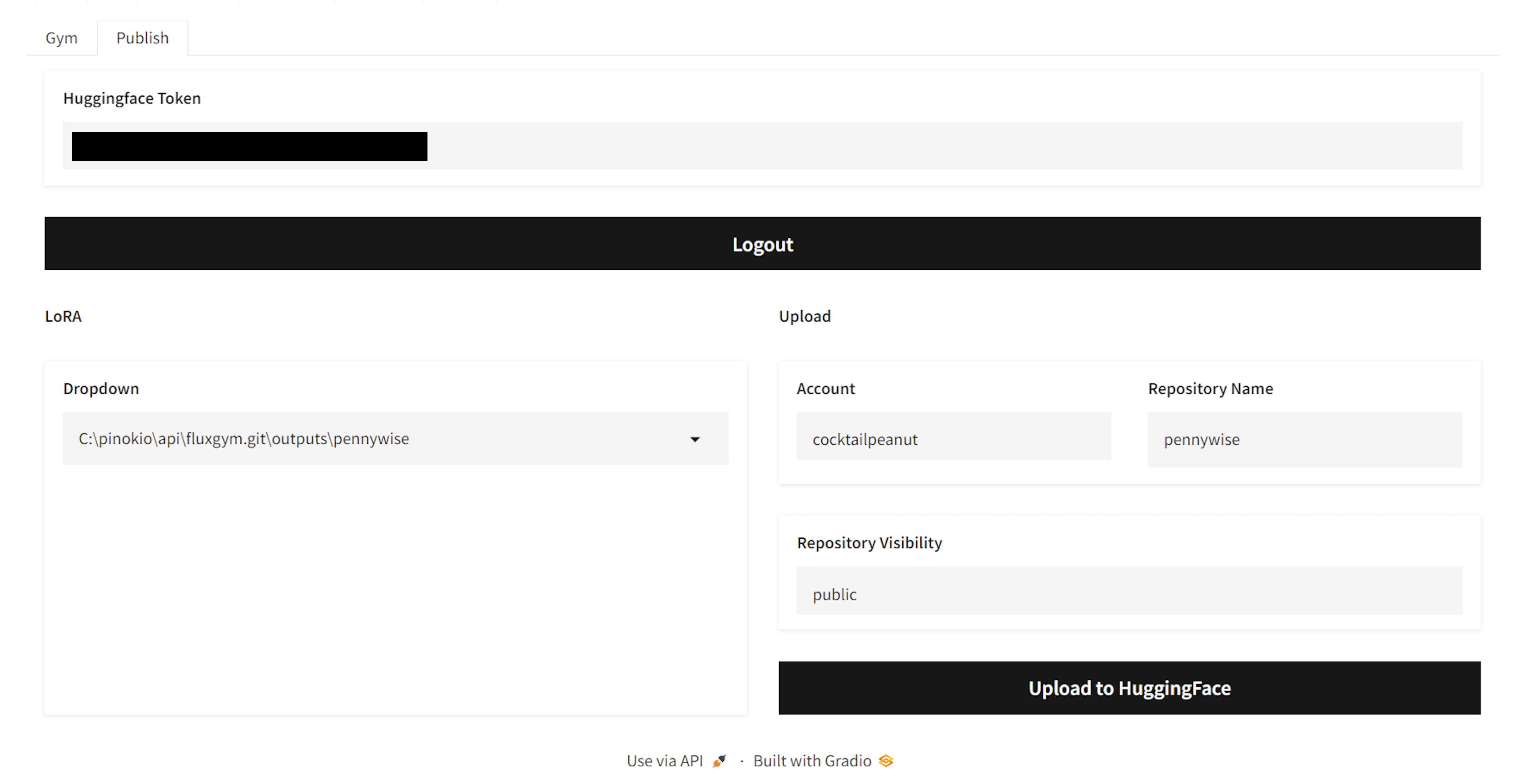

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Base image with CUDA 12.2

|

| 2 |

+

FROM nvidia/cuda:12.2.2-base-ubuntu22.04

|

| 3 |

+

|

| 4 |

+

# Install pip if not already installed

|

| 5 |

+

RUN apt-get update -y && apt-get install -y \

|

| 6 |

+

python3-pip \

|

| 7 |

+

python3-dev \

|

| 8 |

+

git \

|

| 9 |

+

build-essential # Install dependencies for building extensions

|

| 10 |

+

|

| 11 |

+

# Define environment variables for UID and GID and local timezone

|

| 12 |

+

ENV PUID=${PUID:-1000}

|

| 13 |

+

ENV PGID=${PGID:-1000}

|

| 14 |

+

|

| 15 |

+

# Create a group with the specified GID

|

| 16 |

+

RUN groupadd -g "${PGID}" appuser

|

| 17 |

+

# Create a user with the specified UID and GID

|

| 18 |

+

RUN useradd -m -s /bin/sh -u "${PUID}" -g "${PGID}" appuser

|

| 19 |

+

|

| 20 |

+

WORKDIR /app

|

| 21 |

+

|

| 22 |

+

# Get sd-scripts from kohya-ss and install them

|

| 23 |

+

RUN git clone -b sd3 https://github.com/kohya-ss/sd-scripts && \

|

| 24 |

+

cd sd-scripts && \

|

| 25 |

+

pip install --no-cache-dir -r ./requirements.txt

|

| 26 |

+

|

| 27 |

+

# Install main application dependencies

|

| 28 |

+

COPY ./requirements.txt ./requirements.txt

|

| 29 |

+

RUN pip install --no-cache-dir -r ./requirements.txt

|

| 30 |

+

|

| 31 |

+

# Install Torch, Torchvision, and Torchaudio for CUDA 12.2

|

| 32 |

+

RUN pip install torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cu122/torch_stable.html

|

| 33 |

+

|

| 34 |

+

RUN chown -R appuser:appuser /app

|

| 35 |

+

|

| 36 |

+

# delete redundant requirements.txt and sd-scripts directory within the container

|

| 37 |

+

RUN rm -r ./sd-scripts

|

| 38 |

+

RUN rm ./requirements.txt

|

| 39 |

+

|

| 40 |

+

#Run application as non-root

|

| 41 |

+

USER appuser

|

| 42 |

+

|

| 43 |

+

# Copy fluxgym application code

|

| 44 |

+

COPY . ./fluxgym

|

| 45 |

+

|

| 46 |

+

EXPOSE 7860

|

| 47 |

+

|

| 48 |

+

ENV GRADIO_SERVER_NAME="0.0.0.0"

|

| 49 |

+

|

| 50 |

+

WORKDIR /app/fluxgym

|

| 51 |

+

|

| 52 |

+

# Run fluxgym Python application

|

| 53 |

+

CMD ["python3", "./app.py"]

|

fluxgym-main/Dockerfile.cuda12.4

ADDED

|

@@ -0,0 +1,53 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Base image with CUDA 12.4

|

| 2 |

+

FROM nvidia/cuda:12.4.1-base-ubuntu22.04

|

| 3 |

+

|

| 4 |

+

# Install pip if not already installed

|

| 5 |

+

RUN apt-get update -y && apt-get install -y \

|

| 6 |

+

python3-pip \

|

| 7 |

+

python3-dev \

|

| 8 |

+

git \

|

| 9 |

+

build-essential # Install dependencies for building extensions

|

| 10 |

+

|

| 11 |

+

# Define environment variables for UID and GID and local timezone

|

| 12 |

+

ENV PUID=${PUID:-1000}

|

| 13 |

+

ENV PGID=${PGID:-1000}

|

| 14 |

+

|

| 15 |

+

# Create a group with the specified GID

|

| 16 |

+

RUN groupadd -g "${PGID}" appuser

|

| 17 |

+

# Create a user with the specified UID and GID

|

| 18 |

+

RUN useradd -m -s /bin/sh -u "${PUID}" -g "${PGID}" appuser

|

| 19 |

+

|

| 20 |

+

WORKDIR /app

|

| 21 |

+

|

| 22 |

+

# Get sd-scripts from kohya-ss and install them

|

| 23 |

+

RUN git clone -b sd3 https://github.com/kohya-ss/sd-scripts && \

|

| 24 |

+

cd sd-scripts && \

|

| 25 |

+

pip install --no-cache-dir -r ./requirements.txt

|

| 26 |

+

|

| 27 |

+

# Install main application dependencies

|

| 28 |

+

COPY ./requirements.txt ./requirements.txt

|

| 29 |

+

RUN pip install --no-cache-dir -r ./requirements.txt

|

| 30 |

+

|

| 31 |

+

# Install Torch, Torchvision, and Torchaudio for CUDA 12.4

|

| 32 |

+

RUN pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu124

|

| 33 |

+

|

| 34 |

+

RUN chown -R appuser:appuser /app

|

| 35 |

+

|

| 36 |

+

# delete redundant requirements.txt and sd-scripts directory within the container

|

| 37 |

+

RUN rm -r ./sd-scripts

|

| 38 |

+

RUN rm ./requirements.txt

|

| 39 |

+

|

| 40 |

+

#Run application as non-root

|

| 41 |

+

USER appuser

|

| 42 |

+

|

| 43 |

+

# Copy fluxgym application code

|

| 44 |

+

COPY . ./fluxgym

|

| 45 |

+

|

| 46 |

+

EXPOSE 7860

|

| 47 |

+

|

| 48 |

+

ENV GRADIO_SERVER_NAME="0.0.0.0"

|

| 49 |

+

|

| 50 |

+

WORKDIR /app/fluxgym

|

| 51 |

+

|

| 52 |

+

# Run fluxgym Python application

|

| 53 |

+

CMD ["python3", "./app.py"]

|

fluxgym-main/README.md

ADDED

|

@@ -0,0 +1,259 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Flux Gym

|

| 2 |

+

|

| 3 |

+

Dead simple web UI for training FLUX LoRA **with LOW VRAM (12GB/16GB/20GB) support.**

|

| 4 |

+

|

| 5 |

+

- **Frontend:** The WebUI forked from [AI-Toolkit](https://github.com/ostris/ai-toolkit) (Gradio UI created by https://x.com/multimodalart)

|

| 6 |

+

- **Backend:** The Training script powered by [Kohya Scripts](https://github.com/kohya-ss/sd-scripts)

|

| 7 |

+

|

| 8 |

+

FluxGym supports 100% of Kohya sd-scripts features through an [Advanced](#advanced) tab, which is hidden by default.

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

---

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

# What is this?

|

| 16 |

+

|

| 17 |

+

1. I wanted a super simple UI for training Flux LoRAs

|

| 18 |

+

2. The [AI-Toolkit](https://github.com/ostris/ai-toolkit) project is great, and the gradio UI contribution by [@multimodalart](https://x.com/multimodalart) is perfect, but the project only works for 24GB VRAM.

|

| 19 |

+

3. [Kohya Scripts](https://github.com/kohya-ss/sd-scripts) are very flexible and powerful for training FLUX, but you need to run in terminal.

|

| 20 |

+

4. What if you could have the simplicity of AI-Toolkit WebUI and the flexibility of Kohya Scripts?

|

| 21 |

+

5. Flux Gym was born. Supports 12GB, 16GB, 20GB VRAMs, and extensible since it uses Kohya Scripts underneath.

|

| 22 |

+

|

| 23 |

+

---

|

| 24 |

+

|

| 25 |

+

# News

|

| 26 |

+

|

| 27 |

+

- September 25: Docker support + Autodownload Models (No need to manually download models when setting up) + Support custom base models (not just flux-dev but anything, just need to include in the [models.yaml](models.yaml) file.

|

| 28 |

+

- September 16: Added "Publish to Huggingface" + 100% Kohya sd-scripts feature support: https://x.com/cocktailpeanut/status/1835719701172756592

|

| 29 |

+

- September 11: Automatic Sample Image Generation + Custom Resolution: https://x.com/cocktailpeanut/status/1833881392482066638

|

| 30 |

+

|

| 31 |

+

---

|

| 32 |

+

|

| 33 |

+

# Supported Models

|

| 34 |

+

|

| 35 |

+

1. Flux1-dev

|

| 36 |

+

2. Flux1-dev2pro (as explained here: https://medium.com/@zhiwangshi28/why-flux-lora-so-hard-to-train-and-how-to-overcome-it-a0c70bc59eaf)

|

| 37 |

+

3. Flux1-schnell (Couldn't get high quality results, so not really recommended, but feel free to experiment with it)

|

| 38 |

+

4. More?

|

| 39 |

+

|

| 40 |

+

The models are automatically downloaded when you start training with the model selected.

|

| 41 |

+

|

| 42 |

+

You can easily add more to the supported models list by editing the [models.yaml](models.yaml) file. If you want to share some interesting base models, please send a PR.

|

| 43 |

+

|

| 44 |

+

---

|

| 45 |

+

|

| 46 |

+

# How people are using Fluxgym

|

| 47 |

+

|

| 48 |

+

Here are people using Fluxgym to locally train Lora sharing their experience:

|

| 49 |

+

|

| 50 |

+

https://pinokio.computer/item?uri=https://github.com/cocktailpeanut/fluxgym

|

| 51 |

+

|

| 52 |

+

|

| 53 |

+

# More Info

|

| 54 |

+

|

| 55 |

+

To learn more, check out this X thread: https://x.com/cocktailpeanut/status/1832084951115972653

|

| 56 |

+

|

| 57 |

+

# Install

|

| 58 |

+

|

| 59 |

+

## 1. One-Click Install

|

| 60 |

+

|

| 61 |

+

You can automatically install and launch everything locally with Pinokio 1-click launcher: https://pinokio.computer/item?uri=https://github.com/cocktailpeanut/fluxgym

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

## 2. Install Manually

|

| 65 |

+

|

| 66 |

+

First clone Fluxgym and kohya-ss/sd-scripts:

|

| 67 |

+

|

| 68 |

+

```

|

| 69 |

+

git clone https://github.com/cocktailpeanut/fluxgym

|

| 70 |

+

cd fluxgym

|

| 71 |

+

git clone -b sd3 https://github.com/kohya-ss/sd-scripts

|

| 72 |

+

```

|

| 73 |

+

|

| 74 |

+

Your folder structure will look like this:

|

| 75 |

+

|

| 76 |

+

```

|

| 77 |

+

/fluxgym

|

| 78 |

+

app.py

|

| 79 |

+

requirements.txt

|

| 80 |

+

/sd-scripts

|

| 81 |

+

```

|

| 82 |

+

|

| 83 |

+

Now activate a venv from the root `fluxgym` folder:

|

| 84 |

+

|

| 85 |

+

If you're on Windows:

|

| 86 |

+

|

| 87 |

+

```

|

| 88 |

+

python -m venv env

|

| 89 |

+

env\Scripts\activate

|

| 90 |

+

```

|

| 91 |

+

|

| 92 |

+

If your're on Linux:

|

| 93 |

+

|

| 94 |

+

```

|

| 95 |

+

python -m venv env

|

| 96 |

+

source env/bin/activate

|

| 97 |

+

```

|

| 98 |

+

|

| 99 |

+

This will create an `env` folder right below the `fluxgym` folder:

|

| 100 |

+

|

| 101 |

+

```

|

| 102 |

+

/fluxgym

|

| 103 |

+

app.py

|

| 104 |

+

requirements.txt

|

| 105 |

+

/sd-scripts

|

| 106 |

+

/env

|

| 107 |

+

```

|

| 108 |

+

|

| 109 |

+

Now go to the `sd-scripts` folder and install dependencies to the activated environment:

|

| 110 |

+

|

| 111 |

+

```

|

| 112 |

+

cd sd-scripts

|

| 113 |

+

pip install -r requirements.txt

|

| 114 |

+

```

|

| 115 |

+

|

| 116 |

+

Now come back to the root folder and install the app dependencies:

|

| 117 |

+

|

| 118 |

+

```

|

| 119 |

+

cd ..

|

| 120 |

+

pip install -r requirements.txt

|

| 121 |

+

```

|

| 122 |

+

|

| 123 |

+

Finally, install pytorch Nightly:

|

| 124 |

+

|

| 125 |

+

```

|

| 126 |

+

pip install --pre torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121

|

| 127 |

+

```

|

| 128 |

+

|

| 129 |

+

|

| 130 |

+

# Start

|

| 131 |

+

|

| 132 |

+

Go back to the root `fluxgym` folder, with the venv activated, run:

|

| 133 |

+

|

| 134 |

+

```

|

| 135 |

+

python app.py

|

| 136 |

+

```

|

| 137 |

+

|

| 138 |

+

> Make sure to have the venv activated before running `python app.py`.

|

| 139 |

+

>

|

| 140 |

+

> Windows: `env/Scripts/activate`

|

| 141 |

+

> Linux: `source env/bin/activate`

|

| 142 |

+

|

| 143 |

+

## 3. Install via Docker

|

| 144 |

+

|

| 145 |

+

First clone Fluxgym and kohya-ss/sd-scripts:

|

| 146 |

+

|

| 147 |

+

```

|

| 148 |

+

git clone https://github.com/cocktailpeanut/fluxgym

|

| 149 |

+

cd fluxgym

|

| 150 |

+

git clone -b sd3 https://github.com/kohya-ss/sd-scripts

|

| 151 |

+

```

|

| 152 |

+

Check your `user id` and `group id` and change it if it's not 1000 via `environment variables` of `PUID` and `PGID`.

|

| 153 |

+

You can find out what these are in linux by running the following command: `id`

|

| 154 |

+

|

| 155 |

+

Now build the image and run it via `docker-compose`:

|

| 156 |

+

```

|

| 157 |

+

docker compose up -d --build

|

| 158 |

+

```

|

| 159 |

+

|

| 160 |

+

Open web browser and goto the IP address of the computer/VM: http://localhost:7860

|

| 161 |

+

|

| 162 |

+

# Usage

|

| 163 |

+

|

| 164 |

+

The usage is pretty straightforward:

|

| 165 |

+

|

| 166 |

+

1. Enter the lora info

|

| 167 |

+

2. Upload images and caption them (using the trigger word)

|

| 168 |

+

3. Click "start".

|

| 169 |

+

|

| 170 |

+

That's all!

|

| 171 |

+

|

| 172 |

+

|

| 173 |

+

|

| 174 |

+

# Configuration

|

| 175 |

+

|

| 176 |

+

## Sample Images

|

| 177 |

+

|

| 178 |

+

By default fluxgym doesn't generate any sample images during training.

|

| 179 |

+

|

| 180 |

+

You can however configure Fluxgym to automatically generate sample images for every N steps. Here's what it looks like:

|

| 181 |

+

|

| 182 |

+

|

| 183 |

+

|

| 184 |

+

To turn this on, just set the two fields:

|

| 185 |

+

|

| 186 |

+

1. **Sample Image Prompts:** These prompts will be used to automatically generate images during training. If you want multiple, separate teach prompt with new line.

|

| 187 |

+

2. **Sample Image Every N Steps:** If your "Expected training steps" is 960 and your "Sample Image Every N Steps" is 100, the images will be generated at step 100, 200, 300, 400, 500, 600, 700, 800, 900, for EACH prompt.

|

| 188 |

+

|

| 189 |

+

|

| 190 |

+

|

| 191 |

+

## Advanced Sample Images

|

| 192 |

+

|

| 193 |

+

Thanks to the built-in syntax from [kohya/sd-scripts](https://github.com/kohya-ss/sd-scripts?tab=readme-ov-file#sample-image-generation-during-training), you can control exactly how the sample images are generated during the training phase:

|

| 194 |

+

|

| 195 |

+

Let's say the trigger word is **hrld person.** Normally you would try sample prompts like:

|

| 196 |

+

|

| 197 |

+

```

|

| 198 |

+

hrld person is riding a bike

|

| 199 |

+

hrld person is a body builder

|

| 200 |

+

hrld person is a rock star

|

| 201 |

+

```

|

| 202 |

+

|

| 203 |

+

But for every prompt you can include **advanced flags** to fully control the image generation process. For example, the `--d` flag lets you specify the SEED.

|

| 204 |

+

|

| 205 |

+

Specifying a seed means every sample image will use that exact seed, which means you can literally see the LoRA evolve. Here's an example usage:

|

| 206 |

+

|

| 207 |

+

```

|

| 208 |

+

hrld person is riding a bike --d 42

|

| 209 |

+

hrld person is a body builder --d 42

|

| 210 |

+

hrld person is a rock star --d 42

|

| 211 |

+

```

|

| 212 |

+

|

| 213 |

+

Here's what it looks like in the UI:

|

| 214 |

+

|

| 215 |

+

|

| 216 |

+

|

| 217 |

+

And here are the results:

|

| 218 |

+

|

| 219 |

+

|

| 220 |

+

|

| 221 |

+

In addition to the `--d` flag, here are other flags you can use:

|

| 222 |

+

|

| 223 |

+

|

| 224 |

+

- `--n`: Negative prompt up to the next option.

|

| 225 |

+

- `--w`: Specifies the width of the generated image.

|

| 226 |

+

- `--h`: Specifies the height of the generated image.

|

| 227 |

+

- `--d`: Specifies the seed of the generated image.

|

| 228 |

+

- `--l`: Specifies the CFG scale of the generated image.

|

| 229 |

+

- `--s`: Specifies the number of steps in the generation.

|

| 230 |

+

|

| 231 |

+

The prompt weighting such as `( )` and `[ ]` also work. (Learn more about [Attention/Emphasis](https://github.com/AUTOMATIC1111/stable-diffusion-webui/wiki/Features#attentionemphasis))

|

| 232 |

+

|

| 233 |

+

## Publishing to Huggingface

|

| 234 |

+

|

| 235 |

+

1. Get your Huggingface Token from https://huggingface.co/settings/tokens

|

| 236 |

+

2. Enter the token in the "Huggingface Token" field and click "Login". This will save the token text in a local file named `HF_TOKEN` (All local and private).

|

| 237 |

+

3. Once you're logged in, you will be able to select a trained LoRA from the dropdown, edit the name if you want, and publish to Huggingface.

|

| 238 |

+

|

| 239 |

+

|

| 240 |

+

|

| 241 |

+

|

| 242 |

+

## Advanced

|

| 243 |

+

|

| 244 |

+

The advanced tab is automatically constructed by parsing the launch flags available to the latest version of [kohya sd-scripts](https://github.com/kohya-ss/sd-scripts). This means Fluxgym is a full fledged UI for using the Kohya script.

|

| 245 |

+

|

| 246 |

+

> By default the advanced tab is hidden. You can click the "advanced" accordion to expand it.

|

| 247 |

+

|

| 248 |

+

|

| 249 |

+

|

| 250 |

+

|

| 251 |

+

## Advanced Features

|

| 252 |

+

|

| 253 |

+

### Uploading Caption Files

|

| 254 |

+

|

| 255 |

+

You can also upload the caption files along with the image files. You just need to follow the convention:

|

| 256 |

+

|

| 257 |

+

1. Every caption file must be a `.txt` file.

|

| 258 |

+

2. Each caption file needs to have a corresponding image file that has the same name.

|

| 259 |

+

3. For example, if you have an image file named `img0.png`, the corresponding caption file must be `img0.txt`.

|

fluxgym-main/advanced.png

ADDED

|

fluxgym-main/app-launch.sh

ADDED

|

@@ -0,0 +1,5 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/usr/bin/env bash

|

| 2 |

+

|

| 3 |

+

cd "`dirname "$0"`" || exit 1

|

| 4 |

+

. env/bin/activate

|

| 5 |

+

python app.py

|

fluxgym-main/app.py

ADDED

|

@@ -0,0 +1,1119 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

import sys

|

| 3 |

+

os.environ["HF_HUB_ENABLE_HF_TRANSFER"] = "1"

|

| 4 |

+

os.environ['GRADIO_ANALYTICS_ENABLED'] = '0'

|

| 5 |

+

sys.path.insert(0, os.getcwd())

|

| 6 |

+

sys.path.append(os.path.join(os.path.dirname(__file__), 'sd-scripts'))

|

| 7 |

+

import subprocess

|

| 8 |

+

import gradio as gr

|

| 9 |

+

from PIL import Image

|

| 10 |

+

import torch

|

| 11 |

+

import uuid

|

| 12 |

+

import shutil

|

| 13 |

+

import json

|

| 14 |

+

import yaml

|

| 15 |

+

from slugify import slugify

|

| 16 |

+

from transformers import AutoProcessor, AutoModelForCausalLM

|

| 17 |

+

from gradio_logsview import LogsView, LogsViewRunner

|

| 18 |

+

from huggingface_hub import hf_hub_download, HfApi

|

| 19 |

+

from library import flux_train_utils, huggingface_util

|

| 20 |

+

from argparse import Namespace

|

| 21 |

+

import train_network

|

| 22 |

+

import toml

|

| 23 |

+

import re

|

| 24 |

+

MAX_IMAGES = 150

|

| 25 |

+

|

| 26 |

+

with open('models.yaml', 'r') as file:

|

| 27 |

+

models = yaml.safe_load(file)

|

| 28 |

+

|

| 29 |

+

def readme(base_model, lora_name, instance_prompt, sample_prompts):

|

| 30 |

+

|

| 31 |

+

# model license

|

| 32 |

+

model_config = models[base_model]

|

| 33 |

+

model_file = model_config["file"]

|

| 34 |

+

base_model_name = model_config["base"]

|

| 35 |

+

license = None

|

| 36 |

+

license_name = None

|

| 37 |

+

license_link = None

|

| 38 |

+

license_items = []

|

| 39 |

+

if "license" in model_config:

|

| 40 |

+

license = model_config["license"]

|

| 41 |

+

license_items.append(f"license: {license}")

|

| 42 |

+

if "license_name" in model_config:

|

| 43 |

+

license_name = model_config["license_name"]

|

| 44 |

+

license_items.append(f"license_name: {license_name}")

|

| 45 |

+

if "license_link" in model_config:

|

| 46 |

+

license_link = model_config["license_link"]

|

| 47 |

+

license_items.append(f"license_link: {license_link}")

|

| 48 |

+

license_str = "\n".join(license_items)

|

| 49 |

+

print(f"license_items={license_items}")

|

| 50 |

+

print(f"license_str = {license_str}")

|

| 51 |

+

|

| 52 |

+

# tags

|

| 53 |

+

tags = [ "text-to-image", "flux", "lora", "diffusers", "template:sd-lora", "fluxgym" ]

|

| 54 |

+

|

| 55 |

+

# widgets

|

| 56 |

+

widgets = []

|

| 57 |

+

sample_image_paths = []

|

| 58 |

+

output_name = slugify(lora_name)

|

| 59 |

+

samples_dir = resolve_path_without_quotes(f"outputs/{output_name}/sample")

|

| 60 |

+

try:

|

| 61 |

+

for filename in os.listdir(samples_dir):

|

| 62 |

+

# Filename Schema: [name]_[steps]_[index]_[timestamp].png

|

| 63 |

+

match = re.search(r"_(\d+)_(\d+)_(\d+)\.png$", filename)

|

| 64 |

+

if match:

|

| 65 |

+

steps, index, timestamp = int(match.group(1)), int(match.group(2)), int(match.group(3))

|

| 66 |

+

sample_image_paths.append((steps, index, f"sample/{filename}"))

|

| 67 |

+

|

| 68 |

+

# Sort by numeric index

|

| 69 |

+

sample_image_paths.sort(key=lambda x: x[0], reverse=True)

|

| 70 |

+

|

| 71 |

+

final_sample_image_paths = sample_image_paths[:len(sample_prompts)]

|

| 72 |

+

final_sample_image_paths.sort(key=lambda x: x[1])

|

| 73 |

+

for i, prompt in enumerate(sample_prompts):

|

| 74 |

+

_, _, image_path = final_sample_image_paths[i]

|

| 75 |

+

widgets.append(

|

| 76 |

+

{

|

| 77 |

+

"text": prompt,

|

| 78 |

+

"output": {

|

| 79 |

+

"url": image_path

|

| 80 |

+

},

|

| 81 |

+

}

|

| 82 |

+

)

|

| 83 |

+

except:

|

| 84 |

+

print(f"no samples")

|

| 85 |

+

dtype = "torch.bfloat16"

|

| 86 |

+

# Construct the README content

|

| 87 |

+

readme_content = f"""---

|

| 88 |

+

tags:

|

| 89 |

+

{yaml.dump(tags, indent=4).strip()}

|

| 90 |

+

{"widget:" if os.path.isdir(samples_dir) else ""}

|

| 91 |

+

{yaml.dump(widgets, indent=4).strip() if widgets else ""}

|

| 92 |

+

base_model: {base_model_name}

|

| 93 |

+

{"instance_prompt: " + instance_prompt if instance_prompt else ""}

|

| 94 |

+

{license_str}

|

| 95 |

+

---

|

| 96 |

+

|

| 97 |

+

# {lora_name}

|

| 98 |

+

|

| 99 |

+

A Flux LoRA trained on a local computer with [Fluxgym](https://github.com/cocktailpeanut/fluxgym)

|

| 100 |

+

|

| 101 |

+

<Gallery />

|

| 102 |

+

|

| 103 |

+

## Trigger words

|

| 104 |

+

|

| 105 |

+

{"You should use `" + instance_prompt + "` to trigger the image generation." if instance_prompt else "No trigger words defined."}

|

| 106 |

+

|

| 107 |

+

## Download model and use it with ComfyUI, AUTOMATIC1111, SD.Next, Invoke AI, Forge, etc.

|

| 108 |

+

|

| 109 |

+

Weights for this model are available in Safetensors format.

|

| 110 |

+

|

| 111 |

+

"""

|

| 112 |

+

return readme_content

|

| 113 |

+

|

| 114 |

+

def account_hf():

|

| 115 |

+

try:

|

| 116 |

+

with open("HF_TOKEN", "r") as file:

|

| 117 |

+

token = file.read()

|

| 118 |

+

api = HfApi(token=token)

|

| 119 |

+

try:

|

| 120 |

+

account = api.whoami()

|

| 121 |

+

return { "token": token, "account": account['name'] }

|

| 122 |

+

except:

|

| 123 |

+

return None

|

| 124 |

+

except:

|

| 125 |

+

return None

|

| 126 |

+

|

| 127 |

+

"""

|

| 128 |

+

hf_logout.click(fn=logout_hf, outputs=[hf_token, hf_login, hf_logout, repo_owner])

|

| 129 |

+

"""

|

| 130 |

+

def logout_hf():

|

| 131 |

+

os.remove("HF_TOKEN")

|

| 132 |

+

global current_account

|

| 133 |

+

current_account = account_hf()

|

| 134 |

+

print(f"current_account={current_account}")

|

| 135 |

+

return gr.update(value=""), gr.update(visible=True), gr.update(visible=False), gr.update(value="", visible=False)

|

| 136 |

+

|

| 137 |

+

|

| 138 |

+

"""

|

| 139 |

+

hf_login.click(fn=login_hf, inputs=[hf_token], outputs=[hf_token, hf_login, hf_logout, repo_owner])

|

| 140 |

+

"""

|

| 141 |

+

def login_hf(hf_token):

|

| 142 |

+

api = HfApi(token=hf_token)

|

| 143 |

+

try:

|

| 144 |

+

account = api.whoami()

|

| 145 |

+

if account != None:

|

| 146 |

+

if "name" in account:

|

| 147 |

+

with open("HF_TOKEN", "w") as file:

|

| 148 |

+

file.write(hf_token)

|

| 149 |

+

global current_account

|

| 150 |

+

current_account = account_hf()

|

| 151 |

+

return gr.update(visible=True), gr.update(visible=False), gr.update(visible=True), gr.update(value=current_account["account"], visible=True)

|

| 152 |

+

return gr.update(), gr.update(), gr.update(), gr.update()

|

| 153 |

+

except:

|

| 154 |

+

print(f"incorrect hf_token")

|

| 155 |

+

return gr.update(), gr.update(), gr.update(), gr.update()

|

| 156 |

+

|

| 157 |

+

def upload_hf(base_model, lora_rows, repo_owner, repo_name, repo_visibility, hf_token):

|

| 158 |

+

src = lora_rows

|

| 159 |

+

repo_id = f"{repo_owner}/{repo_name}"

|

| 160 |

+

gr.Info(f"Uploading to Huggingface. Please Stand by...", duration=None)

|

| 161 |

+

args = Namespace(

|

| 162 |

+

huggingface_repo_id=repo_id,

|

| 163 |

+

huggingface_repo_type="model",

|

| 164 |

+

huggingface_repo_visibility=repo_visibility,

|

| 165 |

+

huggingface_path_in_repo="",

|

| 166 |

+

huggingface_token=hf_token,

|

| 167 |

+

async_upload=False

|

| 168 |

+

)

|

| 169 |

+

print(f"upload_hf args={args}")

|

| 170 |

+

huggingface_util.upload(args=args, src=src)

|

| 171 |

+

gr.Info(f"[Upload Complete] https://huggingface.co/{repo_id}", duration=None)

|

| 172 |

+

|

| 173 |

+

def load_captioning(uploaded_files, concept_sentence):

|

| 174 |

+

uploaded_images = [file for file in uploaded_files if not file.endswith('.txt')]

|

| 175 |

+

txt_files = [file for file in uploaded_files if file.endswith('.txt')]

|