alvanli

commited on

Commit

•

1f43fd8

1

Parent(s):

844bec9

Add cheese model

Browse files- .gitignore +168 -0

- Dockerfile +16 -0

- FROMAGe_example_notebook.ipynb +0 -0

- README.md +1 -1

- app.py +125 -0

- example_1.png +0 -0

- example_2.png +0 -0

- example_3.png +0 -0

- fromage/__init__.py +0 -0

- fromage/data.py +129 -0

- fromage/evaluate.py +307 -0

- fromage/losses.py +44 -0

- fromage/models.py +658 -0

- fromage/utils.py +250 -0

- fromage_model/fromage_vis4/cc3m_embeddings.pkl +3 -0

- fromage_model/fromage_vis4/model_args.json +16 -0

- fromage_model/model_args.json +22 -0

- main.py +642 -0

- requirements.txt +35 -0

.gitignore

ADDED

|

@@ -0,0 +1,168 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

.DS_Store

|

| 2 |

+

*.pyc

|

| 3 |

+

__pycache__

|

| 4 |

+

.pytest_cache

|

| 5 |

+

venv

|

| 6 |

+

runs/

|

| 7 |

+

data/

|

| 8 |

+

|

| 9 |

+

# Byte-compiled / optimized / DLL files

|

| 10 |

+

__pycache__/

|

| 11 |

+

*.py[cod]

|

| 12 |

+

*$py.class

|

| 13 |

+

|

| 14 |

+

# C extensions

|

| 15 |

+

*.so

|

| 16 |

+

|

| 17 |

+

# Distribution / packaging

|

| 18 |

+

.Python

|

| 19 |

+

build/

|

| 20 |

+

develop-eggs/

|

| 21 |

+

dist/

|

| 22 |

+

downloads/

|

| 23 |

+

eggs/

|

| 24 |

+

.eggs/

|

| 25 |

+

lib/

|

| 26 |

+

lib64/

|

| 27 |

+

parts/

|

| 28 |

+

sdist/

|

| 29 |

+

var/

|

| 30 |

+

wheels/

|

| 31 |

+

share/python-wheels/

|

| 32 |

+

*.egg-info/

|

| 33 |

+

.installed.cfg

|

| 34 |

+

*.egg

|

| 35 |

+

MANIFEST

|

| 36 |

+

|

| 37 |

+

# PyInstaller

|

| 38 |

+

# Usually these files are written by a python script from a template

|

| 39 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 40 |

+

*.manifest

|

| 41 |

+

*.spec

|

| 42 |

+

|

| 43 |

+

# Installer logs

|

| 44 |

+

pip-log.txt

|

| 45 |

+

pip-delete-this-directory.txt

|

| 46 |

+

|

| 47 |

+

# Unit test / coverage reports

|

| 48 |

+

htmlcov/

|

| 49 |

+

.tox/

|

| 50 |

+

.nox/

|

| 51 |

+

.coverage

|

| 52 |

+

.coverage.*

|

| 53 |

+

.cache

|

| 54 |

+

nosetests.xml

|

| 55 |

+

coverage.xml

|

| 56 |

+

*.cover

|

| 57 |

+

*.py,cover

|

| 58 |

+

.hypothesis/

|

| 59 |

+

.pytest_cache/

|

| 60 |

+

cover/

|

| 61 |

+

|

| 62 |

+

# Translations

|

| 63 |

+

*.mo

|

| 64 |

+

*.pot

|

| 65 |

+

|

| 66 |

+

# Django stuff:

|

| 67 |

+

*.log

|

| 68 |

+

local_settings.py

|

| 69 |

+

db.sqlite3

|

| 70 |

+

db.sqlite3-journal

|

| 71 |

+

|

| 72 |

+

# Flask stuff:

|

| 73 |

+

instance/

|

| 74 |

+

.webassets-cache

|

| 75 |

+

|

| 76 |

+

# Scrapy stuff:

|

| 77 |

+

.scrapy

|

| 78 |

+

|

| 79 |

+

# Sphinx documentation

|

| 80 |

+

docs/_build/

|

| 81 |

+

|

| 82 |

+

# PyBuilder

|

| 83 |

+

.pybuilder/

|

| 84 |

+

target/

|

| 85 |

+

|

| 86 |

+

# Jupyter Notebook

|

| 87 |

+

.ipynb_checkpoints

|

| 88 |

+

|

| 89 |

+

# IPython

|

| 90 |

+

profile_default/

|

| 91 |

+

ipython_config.py

|

| 92 |

+

|

| 93 |

+

# pyenv

|

| 94 |

+

# For a library or package, you might want to ignore these files since the code is

|

| 95 |

+

# intended to run in multiple environments; otherwise, check them in:

|

| 96 |

+

# .python-version

|

| 97 |

+

|

| 98 |

+

# pipenv

|

| 99 |

+

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

| 100 |

+

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

| 101 |

+

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

| 102 |

+

# install all needed dependencies.

|

| 103 |

+

#Pipfile.lock

|

| 104 |

+

|

| 105 |

+

# poetry

|

| 106 |

+

# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

|

| 107 |

+

# This is especially recommended for binary packages to ensure reproducibility, and is more

|

| 108 |

+

# commonly ignored for libraries.

|

| 109 |

+

# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

|

| 110 |

+

#poetry.lock

|

| 111 |

+

|

| 112 |

+

# pdm

|

| 113 |

+

# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.

|

| 114 |

+

#pdm.lock

|

| 115 |

+

# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it

|

| 116 |

+

# in version control.

|

| 117 |

+

# https://pdm.fming.dev/#use-with-ide

|

| 118 |

+

.pdm.toml

|

| 119 |

+

|

| 120 |

+

# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm

|

| 121 |

+

__pypackages__/

|

| 122 |

+

|

| 123 |

+

# Celery stuff

|

| 124 |

+

celerybeat-schedule

|

| 125 |

+

celerybeat.pid

|

| 126 |

+

|

| 127 |

+

# SageMath parsed files

|

| 128 |

+

*.sage.py

|

| 129 |

+

|

| 130 |

+

# Environments

|

| 131 |

+

.env

|

| 132 |

+

.venv

|

| 133 |

+

env/

|

| 134 |

+

venv/

|

| 135 |

+

ENV/

|

| 136 |

+

env.bak/

|

| 137 |

+

venv.bak/

|

| 138 |

+

|

| 139 |

+

# Spyder project settings

|

| 140 |

+

.spyderproject

|

| 141 |

+

.spyproject

|

| 142 |

+

|

| 143 |

+

# Rope project settings

|

| 144 |

+

.ropeproject

|

| 145 |

+

|

| 146 |

+

# mkdocs documentation

|

| 147 |

+

/site

|

| 148 |

+

|

| 149 |

+

# mypy

|

| 150 |

+

.mypy_cache/

|

| 151 |

+

.dmypy.json

|

| 152 |

+

dmypy.json

|

| 153 |

+

|

| 154 |

+

# Pyre type checker

|

| 155 |

+

.pyre/

|

| 156 |

+

|

| 157 |

+

# pytype static type analyzer

|

| 158 |

+

.pytype/

|

| 159 |

+

|

| 160 |

+

# Cython debug symbols

|

| 161 |

+

cython_debug/

|

| 162 |

+

|

| 163 |

+

# PyCharm

|

| 164 |

+

# JetBrains specific template is maintained in a separate JetBrains.gitignore that can

|

| 165 |

+

# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore

|

| 166 |

+

# and can be added to the global gitignore or merged into this file. For a more nuclear

|

| 167 |

+

# option (not recommended) you can uncomment the following to ignore the entire idea folder.

|

| 168 |

+

#.idea/

|

Dockerfile

ADDED

|

@@ -0,0 +1,16 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

FROM pytorch/pytorch:1.11.0-cuda11.3-cudnn8-devel as base

|

| 2 |

+

RUN apt-key adv --keyserver keyserver.ubuntu.com --recv-keys A4B469963BF863CC

|

| 3 |

+

|

| 4 |

+

ENV HOME=/exp/fromage

|

| 5 |

+

|

| 6 |

+

RUN apt-get update && apt-get -y install git

|

| 7 |

+

|

| 8 |

+

WORKDIR /exp/fromage

|

| 9 |

+

COPY ./requirements.txt ./requirements.txt

|

| 10 |

+

RUN python -m pip install -r ./requirements.txt

|

| 11 |

+

RUN python -m pip install gradio

|

| 12 |

+

|

| 13 |

+

COPY . .

|

| 14 |

+

RUN chmod -R a+rwX .

|

| 15 |

+

|

| 16 |

+

CMD ["uvicorn", "app:main", "--host", "0.0.0.0", "--port", "7860"]

|

FROMAGe_example_notebook.ipynb

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

README.md

CHANGED

|

@@ -1,6 +1,6 @@

|

|

| 1 |

---

|

| 2 |

title: FROMAGe

|

| 3 |

-

emoji:

|

| 4 |

colorFrom: pink

|

| 5 |

colorTo: red

|

| 6 |

sdk: docker

|

|

|

|

| 1 |

---

|

| 2 |

title: FROMAGe

|

| 3 |

+

emoji: 🧀

|

| 4 |

colorFrom: pink

|

| 5 |

colorTo: red

|

| 6 |

sdk: docker

|

app.py

ADDED

|

@@ -0,0 +1,125 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

eexitimport os, time, copy

|

| 2 |

+

os.environ["HF_HUB_ENABLE_HF_TRANSFER"] = "False"

|

| 3 |

+

|

| 4 |

+

from PIL import Image

|

| 5 |

+

|

| 6 |

+

import gradio as gr

|

| 7 |

+

|

| 8 |

+

import numpy as np

|

| 9 |

+

import torch

|

| 10 |

+

from transformers import logging

|

| 11 |

+

logging.set_verbosity_error()

|

| 12 |

+

|

| 13 |

+

from fromage import models

|

| 14 |

+

from fromage import utils

|

| 15 |

+

|

| 16 |

+

BASE_WIDTH = 512

|

| 17 |

+

MODEL_DIR = './fromage_model/fromage_vis4'

|

| 18 |

+

|

| 19 |

+

def upload_image(file):

|

| 20 |

+

return Image.open(file)

|

| 21 |

+

|

| 22 |

+

def upload_button_config():

|

| 23 |

+

return gr.update(visible=False)

|

| 24 |

+

|

| 25 |

+

def upload_textbox_config(text_in):

|

| 26 |

+

return gr.update(visible=True)

|

| 27 |

+

|

| 28 |

+

|

| 29 |

+

class ChatBotCheese:

|

| 30 |

+

def __init__(self):

|

| 31 |

+

from huggingface_hub import hf_hub_download

|

| 32 |

+

model_ckpt_path = hf_hub_download("alvanlii/fromage", "pretrained_ckpt.pth.tar")

|

| 33 |

+

self.model = models.load_fromage(MODEL_DIR, model_ckpt_path)

|

| 34 |

+

self.curr_image = None

|

| 35 |

+

self.chat_history = ''

|

| 36 |

+

|

| 37 |

+

def add_image(self, state, image_in):

|

| 38 |

+

state = state + [(f"", "Ok, now type your message")]

|

| 39 |

+

self.curr_image = Image.open(image_in.name).convert('RGB')

|

| 40 |

+

return state, state

|

| 41 |

+

|

| 42 |

+

def save_im(self, image_pil):

|

| 43 |

+

file_name = f"{int(time.time())}_{np.random.randint(100)}.png"

|

| 44 |

+

image_pil.save(file_name)

|

| 45 |

+

return file_name

|

| 46 |

+

|

| 47 |

+

def chat(self, input_text, state, ret_scale_factor, num_ims, num_words, temp):

|

| 48 |

+

# model_outputs = ["heyo", []]

|

| 49 |

+

self.chat_history += f'Q: {input_text} \nA:'

|

| 50 |

+

if self.curr_image is not None:

|

| 51 |

+

model_outputs = self.model.generate_for_images_and_texts([self.curr_image, self.chat_history], num_words=num_words, max_num_rets=num_ims, ret_scale_factor=ret_scale_factor, temperature=temp)

|

| 52 |

+

else:

|

| 53 |

+

model_outputs = self.model.generate_for_images_and_texts([self.chat_history], max_num_rets=num_ims, num_words=num_words, ret_scale_factor=ret_scale_factor, temperature=temp)

|

| 54 |

+

self.chat_history += ' '.join([s for s in model_outputs if type(s) == str]) + '\n'

|

| 55 |

+

|

| 56 |

+

im_names = []

|

| 57 |

+

if len(model_outputs) > 1:

|

| 58 |

+

im_names = [self.save_im(im) for im in model_outputs[1]]

|

| 59 |

+

|

| 60 |

+

response = model_outputs[0]

|

| 61 |

+

for im_name in im_names:

|

| 62 |

+

response += f'<img src="/file={im_name}">'

|

| 63 |

+

state.append((input_text, response.replace("[RET]", "")))

|

| 64 |

+

self.curr_image = None

|

| 65 |

+

return state, state

|

| 66 |

+

|

| 67 |

+

def reset(self):

|

| 68 |

+

self.chat_history = ""

|

| 69 |

+

self.curr_image = None

|

| 70 |

+

return [], []

|

| 71 |

+

|

| 72 |

+

def main(self):

|

| 73 |

+

with gr.Blocks(css="#chatbot .overflow-y-auto{height:1500px}") as demo:

|

| 74 |

+

gr.Markdown(

|

| 75 |

+

"""

|

| 76 |

+

## FROMAGe

|

| 77 |

+

### Grounding Language Models to Images for Multimodal Generation

|

| 78 |

+

Jing Yu Koh, Ruslan Salakhutdinov, Daniel Fried <br/>

|

| 79 |

+

[Paper](https://arxiv.org/abs/2301.13823) [Github](https://github.com/kohjingyu/fromage) <br/>

|

| 80 |

+

- Upload an image (optional)

|

| 81 |

+

- Chat with FROMAGe!

|

| 82 |

+

- Check out the examples at the bottom!

|

| 83 |

+

"""

|

| 84 |

+

)

|

| 85 |

+

|

| 86 |

+

chatbot = gr.Chatbot(elem_id="chatbot")

|

| 87 |

+

gr_state = gr.State([])

|

| 88 |

+

|

| 89 |

+

with gr.Row():

|

| 90 |

+

with gr.Column(scale=0.85):

|

| 91 |

+

txt = gr.Textbox(show_label=False, placeholder="Upload an image first [Optional]. Then enter text and press enter,").style(container=False)

|

| 92 |

+

with gr.Column(scale=0.15, min_width=0):

|

| 93 |

+

btn = gr.UploadButton("🖼️", file_types=["image"])

|

| 94 |

+

|

| 95 |

+

with gr.Row():

|

| 96 |

+

with gr.Column(scale=0.20, min_width=0):

|

| 97 |

+

reset_btn = gr.Button("Reset Messages")

|

| 98 |

+

gr_ret_scale_factor = gr.Number(value=1.0, label="Increased prob of returning images", interactive=True)

|

| 99 |

+

gr_num_ims = gr.Number(value=3, precision=1, label="Max # of Images returned", interactive=True)

|

| 100 |

+

gr_num_words = gr.Number(value=32, precision=1, label="Max # of words returned", interactive=True)

|

| 101 |

+

gr_temp = gr.Number(value=0.0, label="Temperature", interactive=True)

|

| 102 |

+

|

| 103 |

+

with gr.Row():

|

| 104 |

+

gr.Image("example_1.png", label="Example 1")

|

| 105 |

+

gr.Image("example_2.png", label="Example 2")

|

| 106 |

+

gr.Image("example_3.png", label="Example 3")

|

| 107 |

+

|

| 108 |

+

|

| 109 |

+

txt.submit(self.chat, [txt, gr_state, gr_ret_scale_factor, gr_num_ims, gr_num_words, gr_temp], [gr_state, chatbot])

|

| 110 |

+

txt.submit(lambda :"", None, txt)

|

| 111 |

+

btn.upload(self.add_image, [gr_state, btn], [gr_state, chatbot])

|

| 112 |

+

reset_btn.click(self.reset, [], [gr_state, chatbot])

|

| 113 |

+

|

| 114 |

+

# chatbot.change(fn = upload_button_config, outputs=btn_upload)

|

| 115 |

+

# text_in.submit(None, [], [], _js = "() => document.getElementById('#chatbot-component').scrollTop = document.getElementById('#chatbot-component').scrollHeight")

|

| 116 |

+

|

| 117 |

+

demo.launch(share=False, server_name="0.0.0.0")

|

| 118 |

+

|

| 119 |

+

def main():

|

| 120 |

+

cheddar = ChatBotCheese()

|

| 121 |

+

cheddar.main()

|

| 122 |

+

|

| 123 |

+

if __name__ == "__main__":

|

| 124 |

+

cheddar = ChatBotCheese()

|

| 125 |

+

cheddar.main()

|

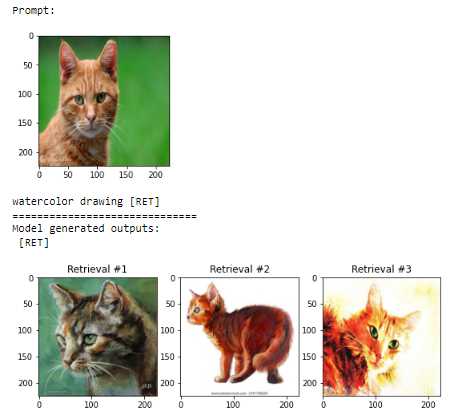

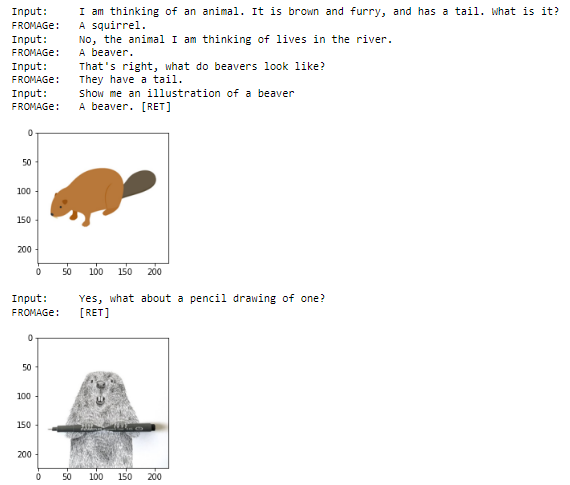

example_1.png

ADDED

|

example_2.png

ADDED

|

example_3.png

ADDED

|

fromage/__init__.py

ADDED

|

File without changes

|

fromage/data.py

ADDED

|

@@ -0,0 +1,129 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""Modified from https://github.com/mlfoundations/open_clip"""

|

| 2 |

+

|

| 3 |

+

from typing import Optional, Tuple

|

| 4 |

+

|

| 5 |

+

import collections

|

| 6 |

+

import logging

|

| 7 |

+

import os

|

| 8 |

+

import numpy as np

|

| 9 |

+

import pandas as pd

|

| 10 |

+

import torch

|

| 11 |

+

import torchvision.datasets as datasets

|

| 12 |

+

from torchvision import transforms as T

|

| 13 |

+

from PIL import Image, ImageFont

|

| 14 |

+

from torch.utils.data import Dataset

|

| 15 |

+

|

| 16 |

+

from fromage import utils

|

| 17 |

+

|

| 18 |

+

|

| 19 |

+

def collate_fn(batch):

|

| 20 |

+

batch = list(filter(lambda x: x is not None, batch))

|

| 21 |

+

return torch.utils.data.dataloader.default_collate(batch)

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

def get_dataset(args, split: str, tokenizer, precision: str = 'fp32') -> Dataset:

|

| 25 |

+

assert split in ['train', 'val'

|

| 26 |

+

], 'Expected split to be one of "train" or "val", got {split} instead.'

|

| 27 |

+

|

| 28 |

+

dataset_paths = []

|

| 29 |

+

image_data_dirs = []

|

| 30 |

+

train = split == 'train'

|

| 31 |

+

|

| 32 |

+

# Default configs for datasets.

|

| 33 |

+

# Folder structure should look like:

|

| 34 |

+

if split == 'train':

|

| 35 |

+

if 'cc3m' in args.dataset:

|

| 36 |

+

dataset_paths.append(os.path.join(args.dataset_dir, 'cc3m_train.tsv'))

|

| 37 |

+

image_data_dirs.append(os.path.join(args.image_dir, 'cc3m/training/'))

|

| 38 |

+

else:

|

| 39 |

+

raise NotImplementedError

|

| 40 |

+

|

| 41 |

+

elif split == 'val':

|

| 42 |

+

if 'cc3m' in args.val_dataset:

|

| 43 |

+

dataset_paths.append(os.path.join(args.dataset_dir, 'cc3m_val.tsv'))

|

| 44 |

+

image_data_dirs.append(os.path.join(args.image_dir, 'cc3m/validation'))

|

| 45 |

+

else:

|

| 46 |

+

raise NotImplementedError

|

| 47 |

+

|

| 48 |

+

assert len(dataset_paths) == len(image_data_dirs) == 1, (dataset_paths, image_data_dirs)

|

| 49 |

+

else:

|

| 50 |

+

raise NotImplementedError

|

| 51 |

+

|

| 52 |

+

if len(dataset_paths) > 1:

|

| 53 |

+

print(f'{len(dataset_paths)} datasets requested: {dataset_paths}')

|

| 54 |

+

dataset = torch.utils.data.ConcatDataset([

|

| 55 |

+

CsvDataset(path, image_dir, tokenizer, 'image',

|

| 56 |

+

'caption', args.visual_model, train=train, max_len=args.max_len, precision=args.precision,

|

| 57 |

+

image_size=args.image_size, retrieval_token_idx=args.retrieval_token_idx)

|

| 58 |

+

for (path, image_dir) in zip(dataset_paths, image_data_dirs)])

|

| 59 |

+

elif len(dataset_paths) == 1:

|

| 60 |

+

dataset = CsvDataset(dataset_paths[0], image_data_dirs[0], tokenizer, 'image',

|

| 61 |

+

'caption', args.visual_model, train=train, max_len=args.max_len, precision=args.precision,

|

| 62 |

+

image_size=args.image_size, retrieval_token_idx=args.retrieval_token_idx)

|

| 63 |

+

else:

|

| 64 |

+

raise ValueError(f'There should be at least one valid dataset, got train={args.dataset}, val={args.val_dataset} instead.')

|

| 65 |

+

return dataset

|

| 66 |

+

|

| 67 |

+

|

| 68 |

+

class CsvDataset(Dataset):

|

| 69 |

+

def __init__(self, input_filename, base_image_dir, tokenizer, img_key,

|

| 70 |

+

caption_key, feature_extractor_model: str,

|

| 71 |

+

train: bool = True, max_len: int = 32, sep="\t", precision: str = 'fp32',

|

| 72 |

+

image_size: int = 224, retrieval_token_idx: int = -1):

|

| 73 |

+

logging.debug(f'Loading tsv data from {input_filename}.')

|

| 74 |

+

df = pd.read_csv(input_filename, sep=sep)

|

| 75 |

+

|

| 76 |

+

self.base_image_dir = base_image_dir

|

| 77 |

+

self.images = df[img_key].tolist()

|

| 78 |

+

self.captions = df[caption_key].tolist()

|

| 79 |

+

assert len(self.images) == len(self.captions)

|

| 80 |

+

|

| 81 |

+

self.feature_extractor_model = feature_extractor_model

|

| 82 |

+

self.feature_extractor = utils.get_feature_extractor_for_model(

|

| 83 |

+

feature_extractor_model, image_size=image_size, train=False)

|

| 84 |

+

self.image_size = image_size

|

| 85 |

+

|

| 86 |

+

self.tokenizer = tokenizer

|

| 87 |

+

self.max_len = max_len

|

| 88 |

+

self.precision = precision

|

| 89 |

+

self.retrieval_token_idx = retrieval_token_idx

|

| 90 |

+

|

| 91 |

+

self.font = None

|

| 92 |

+

|

| 93 |

+

logging.debug('Done loading data.')

|

| 94 |

+

|

| 95 |

+

def __len__(self):

|

| 96 |

+

return len(self.captions)

|

| 97 |

+

|

| 98 |

+

def __getitem__(self, idx):

|

| 99 |

+

while True:

|

| 100 |

+

image_path = os.path.join(self.base_image_dir, str(self.images[idx]))

|

| 101 |

+

caption = str(self.captions[idx])

|

| 102 |

+

|

| 103 |

+

try:

|

| 104 |

+

img = Image.open(image_path)

|

| 105 |

+

images = utils.get_pixel_values_for_model(self.feature_extractor, img)

|

| 106 |

+

|

| 107 |

+

caption += '[RET]'

|

| 108 |

+

tokenized_data = self.tokenizer(

|

| 109 |

+

caption,

|

| 110 |

+

return_tensors="pt",

|

| 111 |

+

padding='max_length',

|

| 112 |

+

truncation=True,

|

| 113 |

+

max_length=self.max_len)

|

| 114 |

+

tokens = tokenized_data.input_ids[0]

|

| 115 |

+

|

| 116 |

+

caption_len = tokenized_data.attention_mask[0].sum()

|

| 117 |

+

|

| 118 |

+

decode_caption = self.tokenizer.decode(tokens, skip_special_tokens=False)

|

| 119 |

+

self.font = self.font or ImageFont.load_default()

|

| 120 |

+

cap_img = utils.create_image_of_text(decode_caption.encode('ascii', 'ignore'), width=self.image_size, nrows=2, font=self.font)

|

| 121 |

+

|

| 122 |

+

if tokens[-1] not in [self.retrieval_token_idx, self.tokenizer.pad_token_id]:

|

| 123 |

+

tokens[-1] = self.retrieval_token_idx

|

| 124 |

+

|

| 125 |

+

return image_path, images, cap_img, tokens, caption_len

|

| 126 |

+

except Exception as e:

|

| 127 |

+

print(f'Error reading {image_path} with caption {caption}: {e}')

|

| 128 |

+

# Pick a new example at random.

|

| 129 |

+

idx = np.random.randint(0, len(self)-1)

|

fromage/evaluate.py

ADDED

|

@@ -0,0 +1,307 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import collections

|

| 2 |

+

import json

|

| 3 |

+

import os

|

| 4 |

+

from PIL import Image

|

| 5 |

+

import numpy as np

|

| 6 |

+

import time

|

| 7 |

+

import tqdm

|

| 8 |

+

import torch

|

| 9 |

+

import torch.distributed as dist

|

| 10 |

+

from torch.utils.tensorboard import SummaryWriter

|

| 11 |

+

from torchmetrics import BLEUScore

|

| 12 |

+

import torchvision

|

| 13 |

+

|

| 14 |

+

from fromage import losses as losses_utils

|

| 15 |

+

from fromage import utils

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

def validate(val_loader, model, tokenizer, criterion, epoch, args):

|

| 19 |

+

ngpus_per_node = torch.cuda.device_count()

|

| 20 |

+

writer = SummaryWriter(args.log_dir)

|

| 21 |

+

bleu_scorers = [BLEUScore(n_gram=i) for i in [1, 2, 3, 4]]

|

| 22 |

+

actual_step = (epoch + 1) * args.steps_per_epoch

|

| 23 |

+

model_modes = ['captioning', 'retrieval']

|

| 24 |

+

num_words = 32 # Number of tokens to generate.

|

| 25 |

+

|

| 26 |

+

feature_extractor = utils.get_feature_extractor_for_model(args.visual_model, image_size=args.image_size, train=False)

|

| 27 |

+

|

| 28 |

+

def get_pixel_values_from_path(path: str):

|

| 29 |

+

img = Image.open(path)

|

| 30 |

+

img = img.resize((args.image_size, args.image_size))

|

| 31 |

+

pixel_values = utils.get_pixel_values_for_model(feature_extractor, img)[None, ...]

|

| 32 |

+

|

| 33 |

+

if args.precision == 'fp16':

|

| 34 |

+

pixel_values = pixel_values.half()

|

| 35 |

+

elif args.precision == 'bf16':

|

| 36 |

+

pixel_values = pixel_values.bfloat16()

|

| 37 |

+

if torch.cuda.is_available():

|

| 38 |

+

pixel_values = pixel_values.cuda()

|

| 39 |

+

return pixel_values

|

| 40 |

+

|

| 41 |

+

def run_validate(loader, base_progress=0):

|

| 42 |

+

with torch.no_grad():

|

| 43 |

+

end = time.time()

|

| 44 |

+

all_generated_captions = []

|

| 45 |

+

all_gt_captions = []

|

| 46 |

+

all_generated_image_paths = []

|

| 47 |

+

all_image_features = []

|

| 48 |

+

all_text_features = []

|

| 49 |

+

|

| 50 |

+

for i, (image_paths, images, caption_images, tgt_tokens, token_len) in tqdm.tqdm(enumerate(loader), position=0, total=len(loader)):

|

| 51 |

+

i = base_progress + i

|

| 52 |

+

|

| 53 |

+

if torch.cuda.is_available():

|

| 54 |

+

tgt_tokens = tgt_tokens.cuda(args.gpu, non_blocking=True)

|

| 55 |

+

token_len = token_len.cuda(args.gpu, non_blocking=True)

|

| 56 |

+

images = images.cuda()

|

| 57 |

+

|

| 58 |

+

if args.precision == 'fp16':

|

| 59 |

+

images = images.half()

|

| 60 |

+

elif args.precision == 'bf16':

|

| 61 |

+

images = images.bfloat16()

|

| 62 |

+

|

| 63 |

+

for model_mode in model_modes:

|

| 64 |

+

(model_output, full_labels, last_embedding, _, visual_embs) = model(

|

| 65 |

+

images, tgt_tokens, token_len, mode=model_mode, input_prefix=args.input_prompt, inference=True) # (N, T, C)

|

| 66 |

+

|

| 67 |

+

if model_mode == 'captioning':

|

| 68 |

+

loss = args.cap_loss_scale * model_output.loss

|

| 69 |

+

elif model_mode == 'retrieval':

|

| 70 |

+

loss = args.ret_loss_scale * model_output.loss

|

| 71 |

+

else:

|

| 72 |

+

raise NotImplementedError

|

| 73 |

+

|

| 74 |

+

output = model_output.logits

|

| 75 |

+

if model_mode == 'captioning':

|

| 76 |

+

acc1, acc5 = utils.accuracy(output[:, :-1, :], full_labels[:, 1:], -100, topk=(1, 5))

|

| 77 |

+

top1.update(acc1[0], images.size(0))

|

| 78 |

+

top5.update(acc5[0], images.size(0))

|

| 79 |

+

ce_losses.update(loss.item(), images.size(0))

|

| 80 |

+

|

| 81 |

+

if model_mode == 'captioning':

|

| 82 |

+

losses.update(loss.item(), images.size(0))

|

| 83 |

+

elif model_mode == 'retrieval':

|

| 84 |

+

if args.distributed:

|

| 85 |

+

original_last_embedding = torch.clone(last_embedding)

|

| 86 |

+

all_visual_embs = [torch.zeros_like(visual_embs) for _ in range(dist.get_world_size())]

|

| 87 |

+

all_last_embedding = [torch.zeros_like(last_embedding) for _ in range(dist.get_world_size())]

|

| 88 |

+

dist.all_gather(all_visual_embs, visual_embs)

|

| 89 |

+

dist.all_gather(all_last_embedding, last_embedding)

|

| 90 |

+

|

| 91 |

+

# Overwrite with embeddings produced on this replica, which track the gradients.

|

| 92 |

+

all_visual_embs[dist.get_rank()] = visual_embs

|

| 93 |

+

all_last_embedding[dist.get_rank()] = last_embedding

|

| 94 |

+

visual_embs = torch.cat(all_visual_embs)

|

| 95 |

+

last_embedding = torch.cat(all_last_embedding)

|

| 96 |

+

start_idx = args.rank * images.shape[0]

|

| 97 |

+

end_idx = start_idx + images.shape[0]

|

| 98 |

+

assert torch.all(last_embedding[start_idx:end_idx] == original_last_embedding), args.rank

|

| 99 |

+

|

| 100 |

+

all_text_features.append(last_embedding.cpu())

|

| 101 |

+

all_image_features.append(visual_embs.cpu())

|

| 102 |

+

|

| 103 |

+

# Run auto-regressive generation sample

|

| 104 |

+

if model_mode == 'captioning':

|

| 105 |

+

input_embs = model.module.model.get_visual_embs(images, mode='captioning') # (2, n_visual_tokens, D)

|

| 106 |

+

if args.input_prompt is not None:

|

| 107 |

+

print(f'Adding prefix "{args.input_prompt}" to captioning generate=True.')

|

| 108 |

+

prompt_ids = tokenizer(args.input_prompt, add_special_tokens=False, return_tensors="pt").input_ids

|

| 109 |

+

prompt_ids = prompt_ids.to(visual_embs.device)

|

| 110 |

+

prompt_embs = model.module.model.input_embeddings(prompt_ids)

|

| 111 |

+

prompt_embs = prompt_embs.repeat(input_embs.shape[0], 1, 1)

|

| 112 |

+

input_embs = torch.cat([input_embs, prompt_embs], dim=1)

|

| 113 |

+

|

| 114 |

+

generated_ids, _, _ = model(input_embs, tgt_tokens, token_len,

|

| 115 |

+

generate=True, num_words=num_words, temperature=0.0, top_p=1.0,

|

| 116 |

+

min_word_tokens=num_words)

|

| 117 |

+

|

| 118 |

+

if args.distributed and ngpus_per_node > 1:

|

| 119 |

+

all_generated_ids = [torch.zeros_like(generated_ids) for _ in range(dist.get_world_size())]

|

| 120 |

+

dist.all_gather(all_generated_ids, generated_ids)

|

| 121 |

+

all_generated_ids[dist.get_rank()] = generated_ids

|

| 122 |

+

generated_ids = torch.cat(all_generated_ids)

|

| 123 |

+

|

| 124 |

+

all_tgt_tokens = [torch.zeros_like(tgt_tokens) for _ in range(dist.get_world_size())]

|

| 125 |

+

dist.all_gather(all_tgt_tokens, tgt_tokens)

|

| 126 |

+

all_tgt_tokens[dist.get_rank()] = tgt_tokens

|

| 127 |

+

all_tgt_tokens = torch.cat(all_tgt_tokens)

|

| 128 |

+

|

| 129 |

+

all_image_paths = [[None for _ in image_paths] for _ in range(dist.get_world_size())]

|

| 130 |

+

dist.all_gather_object(all_image_paths, image_paths)

|

| 131 |

+

all_image_paths[dist.get_rank()] = image_paths

|

| 132 |

+

image_paths = []

|

| 133 |

+

for p in all_image_paths:

|

| 134 |

+

image_paths.extend(p)

|

| 135 |

+

else:

|

| 136 |

+

all_tgt_tokens = tgt_tokens

|

| 137 |

+

|

| 138 |

+

all_tgt_tokens[all_tgt_tokens == -100] = tokenizer.pad_token_id

|

| 139 |

+

generated_captions = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)

|

| 140 |

+

gt_captions = tokenizer.batch_decode(all_tgt_tokens, skip_special_tokens=True)

|

| 141 |

+

|

| 142 |

+

for cap_i in range(len(generated_captions)):

|

| 143 |

+

image_path = image_paths[cap_i]

|

| 144 |

+

all_generated_image_paths.append(image_path)

|

| 145 |

+

stop_idx = generated_captions[cap_i].find('.')

|

| 146 |

+

if stop_idx > 5:

|

| 147 |

+

all_generated_captions.append(generated_captions[cap_i][:stop_idx])

|

| 148 |

+

else:

|

| 149 |

+

all_generated_captions.append(generated_captions[cap_i])

|

| 150 |

+

all_gt_captions.append([gt_captions[cap_i]])

|

| 151 |

+

elif model_mode == 'retrieval':

|

| 152 |

+

if i == 0:

|

| 153 |

+

# Generate without image input to visualize text-generation ability.

|

| 154 |

+

input_ids = tgt_tokens[:, :3] # Use first 3 tokens as initial prompt for generation.

|

| 155 |

+

input_embs = model.module.model.input_embeddings(input_ids) # (N, T, D)

|

| 156 |

+

generated_ids, _, _ = model(input_embs, tgt_tokens, token_len, generate=True, num_words=num_words, temperature=0.0, top_p=1.0)

|

| 157 |

+

generated_ids = torch.cat([input_ids, generated_ids], dim=1)

|

| 158 |

+

generated_captions = tokenizer.batch_decode(generated_ids, skip_special_tokens=False)

|

| 159 |

+

gt_captions = tokenizer.batch_decode(tgt_tokens, skip_special_tokens=False)

|

| 160 |

+

else:

|

| 161 |

+

raise NotImplementedError

|

| 162 |

+

|

| 163 |

+

if i == 0:

|

| 164 |

+

max_to_display = 5

|

| 165 |

+

print('=' * 30)

|

| 166 |

+

print('Generated samples:')

|

| 167 |

+

for cap_i, cap in enumerate(generated_captions[:max_to_display]):

|

| 168 |

+

print(f'{cap_i}) {cap}')

|

| 169 |

+

print('=' * 30)

|

| 170 |

+

print('Real samples:')

|

| 171 |

+

for cap_i, cap in enumerate(gt_captions[:max_to_display]):

|

| 172 |

+

print(f'{cap_i}) {cap}')

|

| 173 |

+

print('=' * 30)

|

| 174 |

+

|

| 175 |

+

# Write images and captions to Tensorboard.

|

| 176 |

+

if not args.distributed or (args.rank % ngpus_per_node == 0):

|

| 177 |

+

max_images_to_show = 16

|

| 178 |

+

normalized_images = images - images.min()

|

| 179 |

+

normalized_images /= normalized_images.max() # (N, 3, H, W)

|

| 180 |

+

# Create generated caption text.

|

| 181 |

+

generated_cap_images = torch.stack([

|

| 182 |

+

utils.create_image_of_text(

|

| 183 |

+

generated_captions[j].encode('ascii', 'ignore'),

|

| 184 |

+

width=normalized_images.shape[3],

|

| 185 |

+

color=(255, 255, 0))

|

| 186 |

+

for j in range(normalized_images.shape[0])], axis=0)

|

| 187 |

+

# Append gt/generated caption images.

|

| 188 |

+

display_images = torch.cat([normalized_images.float().cpu(), caption_images, generated_cap_images], axis=2)[:max_images_to_show]

|

| 189 |

+

grid = torchvision.utils.make_grid(display_images, nrow=int(max_images_to_show ** 0.5), padding=4)

|

| 190 |

+

writer.add_image(f'val/images_{model_mode}', grid, actual_step)

|

| 191 |

+

|

| 192 |

+

# measure elapsed time

|

| 193 |

+

batch_time.update(time.time() - end)

|

| 194 |

+

end = time.time()

|

| 195 |

+

|

| 196 |

+

if i % args.print_freq == 0:

|

| 197 |

+

progress.display(i + 1)

|

| 198 |

+

|

| 199 |

+

if i == args.val_steps_per_epoch - 1:

|

| 200 |

+

break

|

| 201 |

+

|

| 202 |

+

# Measure captioning metrics.

|

| 203 |

+

path2captions = collections.defaultdict(list)

|

| 204 |

+

for image_path, caption in zip(all_generated_image_paths, all_gt_captions):

|

| 205 |

+

assert len(caption) == 1, caption

|

| 206 |

+

path2captions[image_path].append(caption[0].replace('[RET]', ''))

|

| 207 |

+

full_gt_captions = [path2captions[path] for path in all_generated_image_paths]

|

| 208 |

+

|

| 209 |

+

print(f'Computing BLEU with {len(all_generated_captions)} generated captions:'

|

| 210 |

+

f'{all_generated_captions[:5]} and {len(full_gt_captions)} groundtruth captions:',

|

| 211 |

+

f'{full_gt_captions[:5]}.')

|

| 212 |

+

bleu1_score = bleu_scorers[0](all_generated_captions, full_gt_captions)

|

| 213 |

+

bleu1.update(bleu1_score, 1)

|

| 214 |

+

bleu2_score = bleu_scorers[1](all_generated_captions, full_gt_captions)

|

| 215 |

+

bleu2.update(bleu2_score, 1)

|

| 216 |

+

bleu3_score = bleu_scorers[2](all_generated_captions, full_gt_captions)

|

| 217 |

+

bleu3.update(bleu3_score, 2)

|

| 218 |

+

bleu4_score = bleu_scorers[3](all_generated_captions, full_gt_captions)

|

| 219 |

+

bleu4.update(bleu4_score, 3)

|

| 220 |

+

|

| 221 |

+

# Measure retrieval metrics over the entire validation set.

|

| 222 |

+

all_image_features = torch.cat(all_image_features, axis=0) # (coco_val_len, 2048)

|

| 223 |

+

all_text_features = torch.cat(all_text_features, axis=0) # (coco_val_len, 2048)

|

| 224 |

+

|

| 225 |

+

print(f"Computing similarity between {all_image_features.shape} and {all_text_features.shape}.")

|

| 226 |

+

logits_per_image = all_image_features @ all_text_features.t()

|

| 227 |

+

logits_per_text = logits_per_image.t()

|

| 228 |

+

all_image_acc1, all_image_acc5 = losses_utils.contrastive_acc(logits_per_image, topk=(1, 5))

|

| 229 |

+

all_caption_acc1, all_caption_acc5 = losses_utils.contrastive_acc(logits_per_text, topk=(1, 5))

|

| 230 |

+

image_loss = losses_utils.contrastive_loss(logits_per_image)

|

| 231 |

+

caption_loss = losses_utils.contrastive_loss(logits_per_text)

|

| 232 |

+

|

| 233 |

+

loss = args.ret_loss_scale * (image_loss + caption_loss) / 2.0

|

| 234 |

+

losses.update(loss.item(), logits_per_image.size(0))

|

| 235 |

+

top1_caption.update(all_caption_acc1.item(), logits_per_image.size(0))

|

| 236 |

+

top5_caption.update(all_caption_acc5.item(), logits_per_image.size(0))

|

| 237 |

+

top1_image.update(all_image_acc1.item(), logits_per_image.size(0))

|

| 238 |

+

top5_image.update(all_image_acc5.item(), logits_per_image.size(0))

|

| 239 |

+

|

| 240 |

+

|

| 241 |

+

batch_time = utils.AverageMeter('Time', ':6.3f', utils.Summary.AVERAGE)

|

| 242 |

+

losses = utils.AverageMeter('Loss', ':.4e', utils.Summary.AVERAGE)

|

| 243 |

+

ce_losses = utils.AverageMeter('CeLoss', ':.4e', utils.Summary.AVERAGE)

|

| 244 |

+

top1 = utils.AverageMeter('Acc@1', ':6.2f', utils.Summary.AVERAGE)

|

| 245 |

+

top5 = utils.AverageMeter('Acc@5', ':6.2f', utils.Summary.AVERAGE)

|

| 246 |

+

bleu1 = utils.AverageMeter('BLEU@1', ':6.2f', utils.Summary.AVERAGE)

|

| 247 |

+

bleu2 = utils.AverageMeter('BLEU@2', ':6.2f', utils.Summary.AVERAGE)

|

| 248 |

+

bleu3 = utils.AverageMeter('BLEU@3', ':6.2f', utils.Summary.AVERAGE)

|

| 249 |

+

bleu4 = utils.AverageMeter('BLEU@4', ':6.2f', utils.Summary.AVERAGE)

|

| 250 |

+

top1_caption = utils.AverageMeter('CaptionAcc@1', ':6.2f', utils.Summary.AVERAGE)

|

| 251 |

+

top5_caption = utils.AverageMeter('CaptionAcc@5', ':6.2f', utils.Summary.AVERAGE)

|

| 252 |

+

top1_image = utils.AverageMeter('ImageAcc@1', ':6.2f', utils.Summary.AVERAGE)

|

| 253 |

+

top5_image = utils.AverageMeter('ImageAcc@5', ':6.2f', utils.Summary.AVERAGE)

|

| 254 |

+

|

| 255 |

+

progress = utils.ProgressMeter(

|

| 256 |

+

len(val_loader) + (args.distributed and (len(val_loader.sampler) * args.world_size < len(val_loader.dataset))),

|

| 257 |

+

[batch_time, losses, top1, top5, bleu4],

|

| 258 |

+

prefix='Test: ')

|

| 259 |

+

|

| 260 |

+

# switch to evaluate mode

|

| 261 |

+

model.eval()

|

| 262 |

+

|

| 263 |

+

run_validate(val_loader)

|

| 264 |

+

if args.distributed:

|

| 265 |

+

batch_time.all_reduce()

|

| 266 |

+

losses.all_reduce()

|

| 267 |

+

bleu1.all_reduce()

|

| 268 |

+

bleu2.all_reduce()

|

| 269 |

+

bleu3.all_reduce()

|

| 270 |

+

bleu4.all_reduce()

|

| 271 |

+

top1.all_reduce()

|

| 272 |

+

top5.all_reduce()

|

| 273 |

+

top1_caption.all_reduce()

|

| 274 |

+

top5_caption.all_reduce()

|

| 275 |

+

top1_image.all_reduce()

|

| 276 |

+

top5_image.all_reduce()

|

| 277 |

+

|

| 278 |

+

if args.distributed and (len(val_loader.sampler) * args.world_size < len(val_loader.dataset)):

|

| 279 |

+

aux_val_dataset = Subset(val_loader.dataset,

|

| 280 |

+

range(len(val_loader.sampler) * args.world_size, len(val_loader.dataset)))

|

| 281 |

+

aux_val_loader = torch.utils.data.DataLoader(

|

| 282 |

+

aux_val_dataset, batch_size=(args.val_batch_size or args.batch_size), shuffle=False,

|

| 283 |

+

num_workers=args.workers, pin_memory=True, collate_fn=data.collate_fn)

|

| 284 |

+

run_validate(aux_val_loader, len(val_loader))

|

| 285 |

+

|

| 286 |

+

progress.display_summary()

|

| 287 |

+

|

| 288 |

+

writer.add_scalar('val/total_secs_per_batch', batch_time.avg, actual_step)

|

| 289 |

+

writer.add_scalar('val/seq_top1_acc', top1.avg, actual_step)

|

| 290 |

+

writer.add_scalar('val/seq_top5_acc', top5.avg, actual_step)

|

| 291 |

+

writer.add_scalar('val/ce_loss', losses.avg, actual_step)

|

| 292 |

+

writer.add_scalar('val/bleu1', bleu1.avg, actual_step)

|

| 293 |

+

writer.add_scalar('val/bleu2', bleu2.avg, actual_step)

|

| 294 |

+

writer.add_scalar('val/bleu3', bleu3.avg, actual_step)

|

| 295 |

+

writer.add_scalar('val/bleu4', bleu4.avg, actual_step)

|

| 296 |

+

writer.add_scalar('val/contrastive_loss', losses.avg, actual_step)

|

| 297 |

+

writer.add_scalar('val/t2i_top1_acc', top1_caption.avg, actual_step)

|

| 298 |

+

writer.add_scalar('val/t2i_top5_acc', top5_caption.avg, actual_step)

|

| 299 |

+

writer.add_scalar('val/i2t_top1_acc', top1_image.avg, actual_step)

|

| 300 |

+

writer.add_scalar('val/i2t_top5_acc', top5_image.avg, actual_step)

|

| 301 |

+

writer.add_scalar('val/top1_acc', (top1_caption.avg + top1_image.avg) / 2.0, actual_step)

|

| 302 |

+

writer.add_scalar('val/top5_acc', (top5_caption.avg + top5_image.avg) / 2.0, actual_step)

|

| 303 |

+

|

| 304 |

+

writer.close()

|

| 305 |

+

|

| 306 |

+

# Use top1 accuracy as the metric for keeping the best checkpoint.

|

| 307 |

+

return top1_caption.avg

|

fromage/losses.py

ADDED

|

@@ -0,0 +1,44 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from typing import Optional

|

| 2 |

+

import torch

|

| 3 |

+

from fromage import utils

|

| 4 |

+

|

| 5 |

+

def contrastive_loss(logits: torch.Tensor) -> torch.Tensor:

|

| 6 |

+

return torch.nn.functional.cross_entropy(logits, torch.arange(len(logits), device=logits.device))

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

def contrastive_acc(logits: torch.Tensor, target: Optional[torch.Tensor] = None, topk=(1,)) -> torch.Tensor:

|

| 10 |

+

"""

|

| 11 |

+

Args:

|

| 12 |

+

logits: (N, N) predictions.

|

| 13 |

+

target: (N, num_correct_answers) labels.

|

| 14 |

+

"""

|

| 15 |

+

assert len(logits.shape) == 2, logits.shape

|

| 16 |

+

batch_size = logits.shape[0]

|

| 17 |

+

|

| 18 |

+

if target is None:

|

| 19 |

+

target = torch.arange(len(logits), device=logits.device)

|

| 20 |

+

return utils.accuracy(logits, target, -1, topk)

|

| 21 |

+

else:

|

| 22 |

+

assert len(target.shape) == 2, target.shape

|

| 23 |

+

with torch.no_grad():

|

| 24 |

+

maxk = max(topk)

|

| 25 |

+

if logits.shape[-1] < maxk:

|

| 26 |

+

print(f"[WARNING] Less than {maxk} predictions available. Using {logits.shape[-1]} for topk.")

|

| 27 |

+

maxk = min(maxk, logits.shape[-1])

|

| 28 |

+

|

| 29 |

+

# Take topk along the last dimension.

|

| 30 |

+

_, pred = logits.topk(maxk, -1, True, True) # (N, topk)

|

| 31 |

+

assert pred.shape == (batch_size, maxk)

|

| 32 |

+

|

| 33 |

+

target_expand = target[:, :, None].repeat(1, 1, maxk) # (N, num_correct_answers, topk)

|

| 34 |

+

pred_expand = pred[:, None, :].repeat(1, target.shape[1], 1) # (N, num_correct_answers, topk)

|

| 35 |

+

correct = pred_expand.eq(target_expand) # (N, num_correct_answers, topk)

|

| 36 |

+

correct = torch.any(correct, dim=1) # (N, topk)

|

| 37 |

+

|

| 38 |

+

res = []

|

| 39 |

+

for k in topk:

|

| 40 |

+

any_k_correct = torch.clamp(correct[:, :k].sum(1), max=1) # (N,)

|

| 41 |

+

correct_k = any_k_correct.float().sum(0, keepdim=True)

|

| 42 |

+

res.append(correct_k.mul_(100.0 / batch_size))

|

| 43 |

+

return res

|

| 44 |

+

|

fromage/models.py

ADDED

|

@@ -0,0 +1,658 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|