Spaces:

Paused

Paused

root

commited on

Commit

•

424a94c

1

Parent(s):

2d96aed

video-llama-2-test

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- LICENSE +28 -0

- LICENSE_Lavis.md +14 -0

- LICENSE_Minigpt4.md +14 -0

- README copy.md +244 -0

- README.md +249 -13

- Video-LLaMA-2-7B-Finetuned/AL_LLaMA_2_7B_Finetuned.pth +3 -0

- Video-LLaMA-2-7B-Finetuned/VL_LLaMA_2_7B_Finetuned.pth +3 -0

- Video-LLaMA-2-7B-Finetuned/imagebind_huge.pth +3 -0

- Video-LLaMA-2-7B-Finetuned/llama-2-7b-chat-hf/config.json +22 -0

- Video-LLaMA-2-7B-Finetuned/llama-2-7b-chat-hf/generation_config.json +7 -0

- Video-LLaMA-2-7B-Finetuned/llama-2-7b-chat-hf/pytorch_model-00001-of-00002.bin +3 -0

- Video-LLaMA-2-7B-Finetuned/llama-2-7b-chat-hf/pytorch_model-00002-of-00002.bin +3 -0

- Video-LLaMA-2-7B-Finetuned/llama-2-7b-chat-hf/pytorch_model.bin.index.json +330 -0

- Video-LLaMA-2-7B-Finetuned/llama-2-7b-chat-hf/special_tokens_map.json +23 -0

- Video-LLaMA-2-7B-Finetuned/llama-2-7b-chat-hf/tokenizer.json +0 -0

- Video-LLaMA-2-7B-Finetuned/llama-2-7b-chat-hf/tokenizer.model +3 -0

- Video-LLaMA-2-7B-Finetuned/llama-2-7b-chat-hf/tokenizer_config.json +33 -0

- app.py +237 -0

- apply_delta.py +49 -0

- demo_audiovideo.py +250 -0

- demo_video.py +247 -0

- environment.yml +70 -0

- eval_configs/video_llama_eval_only_vl.yaml +36 -0

- eval_configs/video_llama_eval_withaudio.yaml +35 -0

- figs/architecture.png +0 -0

- figs/architecture_v2.png +0 -0

- figs/video_llama_logo.jpg +0 -0

- prompts/alignment_image.txt +4 -0

- requirement.txt +13 -0

- setup.py +17 -0

- train.py +107 -0

- train_configs/audiobranch_stage1_pretrain.yaml +88 -0

- train_configs/audiobranch_stage2_finetune.yaml +120 -0

- train_configs/visionbranch_stage1_pretrain.yaml +87 -0

- train_configs/visionbranch_stage2_finetune.yaml +122 -0

- video_llama/__init__.py +31 -0

- video_llama/__pycache__/__init__.cpython-39.pyc +0 -0

- video_llama/common/__init__.py +0 -0

- video_llama/common/__pycache__/__init__.cpython-39.pyc +0 -0

- video_llama/common/__pycache__/config.cpython-39.pyc +0 -0

- video_llama/common/__pycache__/dist_utils.cpython-39.pyc +0 -0

- video_llama/common/__pycache__/logger.cpython-39.pyc +0 -0

- video_llama/common/__pycache__/registry.cpython-39.pyc +0 -0

- video_llama/common/__pycache__/utils.cpython-39.pyc +0 -0

- video_llama/common/config.py +468 -0

- video_llama/common/dist_utils.py +137 -0

- video_llama/common/gradcam.py +24 -0

- video_llama/common/logger.py +195 -0

- video_llama/common/optims.py +119 -0

- video_llama/common/registry.py +329 -0

LICENSE

ADDED

|

@@ -0,0 +1,28 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

BSD 3-Clause License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2023, Multilingual NLP Team at Alibaba DAMO Academy

|

| 4 |

+

|

| 5 |

+

Redistribution and use in source and binary forms, with or without

|

| 6 |

+

modification, are permitted provided that the following conditions are met:

|

| 7 |

+

|

| 8 |

+

1. Redistributions of source code must retain the above copyright notice, this

|

| 9 |

+

list of conditions and the following disclaimer.

|

| 10 |

+

|

| 11 |

+

2. Redistributions in binary form must reproduce the above copyright notice,

|

| 12 |

+

this list of conditions and the following disclaimer in the documentation

|

| 13 |

+

and/or other materials provided with the distribution.

|

| 14 |

+

|

| 15 |

+

3. Neither the name of the copyright holder nor the names of its

|

| 16 |

+

contributors may be used to endorse or promote products derived from

|

| 17 |

+

this software without specific prior written permission.

|

| 18 |

+

|

| 19 |

+

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

|

| 20 |

+

AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

|

| 21 |

+

IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

|

| 22 |

+

DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

|

| 23 |

+

FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

|

| 24 |

+

DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

|

| 25 |

+

SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

|

| 26 |

+

CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

|

| 27 |

+

OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

|

| 28 |

+

OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

|

LICENSE_Lavis.md

ADDED

|

@@ -0,0 +1,14 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

BSD 3-Clause License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2022 Salesforce, Inc.

|

| 4 |

+

All rights reserved.

|

| 5 |

+

|

| 6 |

+

Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

|

| 7 |

+

|

| 8 |

+

1. Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.

|

| 9 |

+

|

| 10 |

+

2. Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

|

| 11 |

+

|

| 12 |

+

3. Neither the name of Salesforce.com nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission.

|

| 13 |

+

|

| 14 |

+

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

|

LICENSE_Minigpt4.md

ADDED

|

@@ -0,0 +1,14 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

BSD 3-Clause License

|

| 2 |

+

|

| 3 |

+

Copyright 2023 Deyao Zhu

|

| 4 |

+

All rights reserved.

|

| 5 |

+

|

| 6 |

+

Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

|

| 7 |

+

|

| 8 |

+

1. Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.

|

| 9 |

+

|

| 10 |

+

2. Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

|

| 11 |

+

|

| 12 |

+

3. Neither the name of the copyright holder nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission.

|

| 13 |

+

|

| 14 |

+

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

|

README copy.md

ADDED

|

@@ -0,0 +1,244 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<p align="center" width="100%">

|

| 2 |

+

<a target="_blank"><img src="figs/video_llama_logo.jpg" alt="Video-LLaMA" style="width: 50%; min-width: 200px; display: block; margin: auto;"></a>

|

| 3 |

+

</p>

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

# Video-LLaMA: An Instruction-tuned Audio-Visual Language Model for Video Understanding

|

| 8 |

+

<!-- **Video-LLaMA: An Instruction-tuned Audio-Visual Language Model for Video Understanding** -->

|

| 9 |

+

|

| 10 |

+

This is the repo for the Video-LLaMA project, which is working on empowering large language models with video and audio understanding capabilities.

|

| 11 |

+

|

| 12 |

+

<div style='display:flex; gap: 0.25rem; '>

|

| 13 |

+

<a href='https://huggingface.co/spaces/DAMO-NLP-SG/Video-LLaMA'><img src='https://img.shields.io/badge/%F0%9F%A4%97%20Hugging%20Face-Demo-blue'></a>

|

| 14 |

+

<a href='https://modelscope.cn/studios/damo/video-llama/summary'><img src='https://img.shields.io/badge/ModelScope-Demo-blueviolet'></a>

|

| 15 |

+

<a href='https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series'><img src='https://img.shields.io/badge/%F0%9F%A4%97%20Hugging%20Face-Model-blue'></a>

|

| 16 |

+

<a href='https://arxiv.org/abs/2306.02858'><img src='https://img.shields.io/badge/Paper-PDF-red'></a>

|

| 17 |

+

</div>

|

| 18 |

+

|

| 19 |

+

## News

|

| 20 |

+

- [08.03] **NOTE**: Release the LLaMA-2-Chat version of **Video-LLaMA**, including its pre-trained and instruction-tuned checkpoints. We uploaded full weights on Huggingface ([7B-Pretrained](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-2-7B-Pretrained),[7B-Finetuned](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-2-7B-Finetuned),[13B-Pretrained](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-2-13B-Pretrained),[13B-Finetuned](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-2-13B-Finetuned)), just for your convenience and secondary development. Welcome to try.

|

| 21 |

+

- [06.14] **NOTE**: the current online interactive demo is primarily for English chatting and it may **NOT** be a good option to ask Chinese questions since Vicuna/LLaMA does not represent Chinese texts very well.

|

| 22 |

+

- [06.13] **NOTE**: the audio support is **ONLY** for Vicuna-7B by now although we have several VL checkpoints available for other decoders.

|

| 23 |

+

- [06.10] **NOTE**: we have NOT updated the HF demo yet because the whole framework (with audio branch) cannot run normally on A10-24G. The current running demo is still the previous version of Video-LLaMA. We will fix this issue soon.

|

| 24 |

+

- [06.08] 🚀🚀 Release the checkpoints of the audio-supported Video-LLaMA. Documentation and example outputs are also updated.

|

| 25 |

+

- [05.22] 🚀🚀 Interactive demo online, try our Video-LLaMA (with **Vicuna-7B** as language decoder) at [Hugging Face](https://huggingface.co/spaces/DAMO-NLP-SG/Video-LLaMA) and [ModelScope](https://pre.modelscope.cn/studios/damo/video-llama/summary)!!

|

| 26 |

+

- [05.22] ⭐️ Release **Video-LLaMA v2** built with Vicuna-7B

|

| 27 |

+

- [05.18] 🚀🚀 Support video-grounded chat in Chinese

|

| 28 |

+

- [**Video-LLaMA-BiLLA**](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/finetune-billa7b-zh.pth): we introduce [BiLLa-7B](https://huggingface.co/Neutralzz/BiLLa-7B-SFT) as language decoder and fine-tune the video-language aligned model (i.e., stage 1 model) with machine-translated [VideoChat](https://github.com/OpenGVLab/InternVideo/tree/main/Data/instruction_data) instructions.

|

| 29 |

+

- [**Video-LLaMA-Ziya**](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/finetune-ziya13b-zh.pth): same with Video-LLaMA-BiLLA but the language decoder is changed to [Ziya-13B](https://huggingface.co/IDEA-CCNL/Ziya-LLaMA-13B-v1).

|

| 30 |

+

- [05.18] ⭐️ Create a Hugging Face [repo](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series) to store the model weights of all the variants of our Video-LLaMA.

|

| 31 |

+

- [05.15] ⭐️ Release [**Video-LLaMA v2**](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/finetune-vicuna13b-v2.pth): we use the training data provided by [VideoChat](https://github.com/OpenGVLab/InternVideo/tree/main/Data/instruction_data) to further enhance the instruction-following capability of Video-LLaMA.

|

| 32 |

+

- [05.07] Release the initial version of **Video-LLaMA**, including its pre-trained and instruction-tuned checkpoints.

|

| 33 |

+

|

| 34 |

+

<p align="center" width="100%">

|

| 35 |

+

<a target="_blank"><img src="figs/architecture_v2.png" alt="Video-LLaMA" style="width: 80%; min-width: 200px; display: block; margin: auto;"></a>

|

| 36 |

+

</p>

|

| 37 |

+

|

| 38 |

+

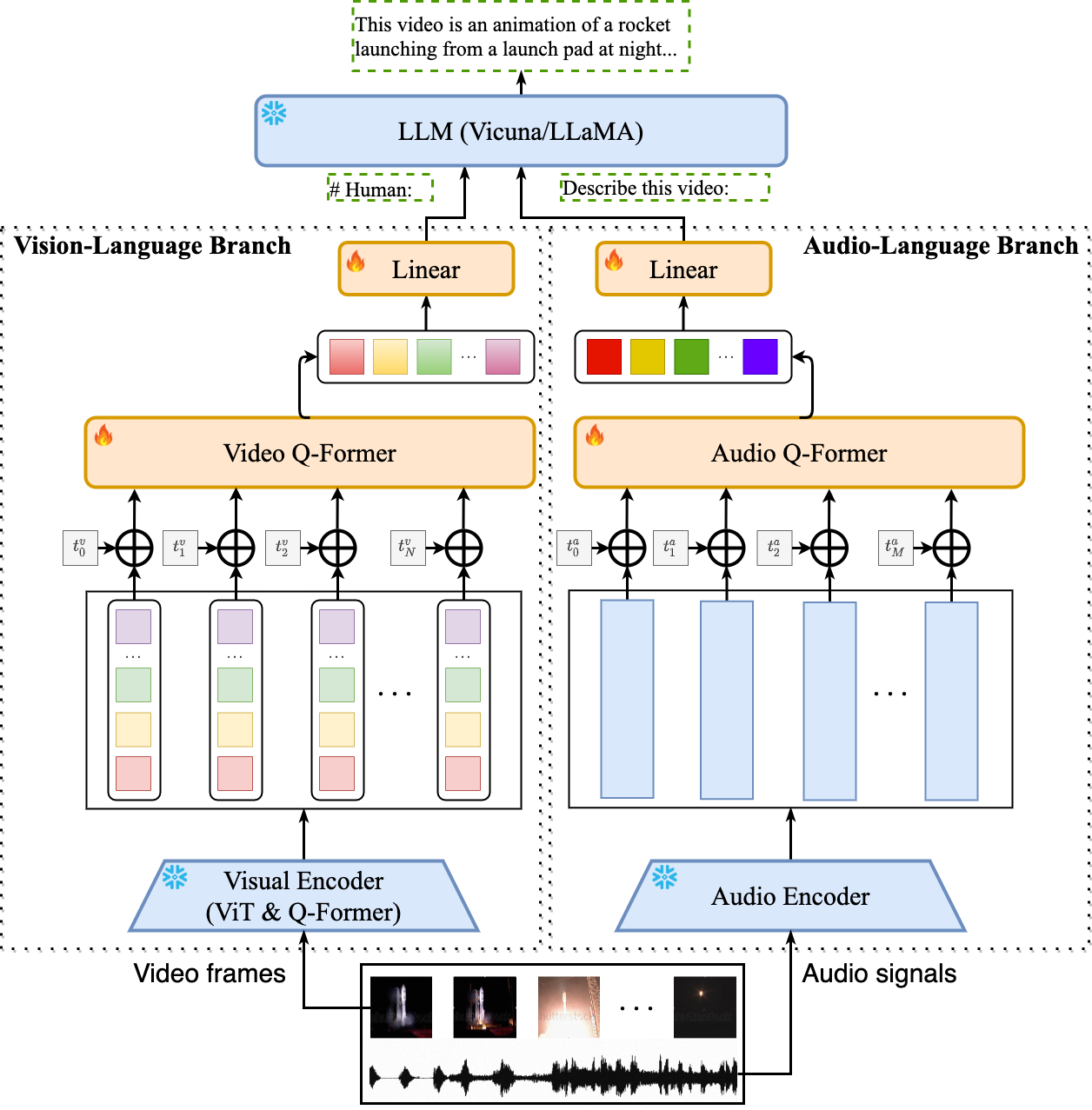

## Introduction

|

| 39 |

+

|

| 40 |

+

|

| 41 |

+

- Video-LLaMA is built on top of [BLIP-2](https://github.com/salesforce/LAVIS/tree/main/projects/blip2) and [MiniGPT-4](https://github.com/Vision-CAIR/MiniGPT-4). It is composed of two core components: (1) Vision-Language (VL) Branch and (2) Audio-Language (AL) Branch.

|

| 42 |

+

- **VL Branch** (Visual encoder: ViT-G/14 + BLIP-2 Q-Former)

|

| 43 |

+

- A two-layer video Q-Former and a frame embedding layer (applied to the embeddings of each frame) are introduced to compute video representations.

|

| 44 |

+

- We train VL Branch on the Webvid-2M video caption dataset with a video-to-text generation task. We also add image-text pairs (~595K image captions from [LLaVA](https://github.com/haotian-liu/LLaVA)) into the pre-training dataset to enhance the understanding of static visual concepts.

|

| 45 |

+

- After pre-training, we further fine-tune our VL Branch using the instruction-tuning data from [MiniGPT-4](https://github.com/Vision-CAIR/MiniGPT-4), [LLaVA](https://github.com/haotian-liu/LLaVA) and [VideoChat](https://github.com/OpenGVLab/Ask-Anything).

|

| 46 |

+

- **AL Branch** (Audio encoder: ImageBind-Huge)

|

| 47 |

+

- A two-layer audio Q-Former and a audio segment embedding layer (applied to the embedding of each audio segment) are introduced to compute audio representations.

|

| 48 |

+

- As the used audio encoder (i.e., ImageBind) is already aligned across multiple modalities, we train AL Branch on video/image instrucaption data only, just to connect the output of ImageBind to language decoder.

|

| 49 |

+

- Note that only the Video/Audio Q-Former, positional embedding layers and the linear layers are trainable during cross-modal training.

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

|

| 53 |

+

## Example Outputs

|

| 54 |

+

|

| 55 |

+

|

| 56 |

+

- **Video with background sound**

|

| 57 |

+

|

| 58 |

+

<p float="left">

|

| 59 |

+

<img src="https://github.com/DAMO-NLP-SG/Video-LLaMA/assets/18526640/7f7bddb2-5cf1-4cf4-bce3-3fa67974cbb3" style="width: 45%; margin: auto;">

|

| 60 |

+

<img src="https://github.com/DAMO-NLP-SG/Video-LLaMA/assets/18526640/ec76be04-4aa9-4dde-bff2-0a232b8315e0" style="width: 45%; margin: auto;">

|

| 61 |

+

</p>

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

- **Video without sound effects**

|

| 65 |

+

<p float="left">

|

| 66 |

+

<img src="https://github.com/DAMO-NLP-SG/Video-LLaMA/assets/18526640/539ea3cc-360d-4b2c-bf86-5505096df2f7" style="width: 45%; margin: auto;">

|

| 67 |

+

<img src="https://github.com/DAMO-NLP-SG/Video-LLaMA/assets/18526640/7304ad6f-1009-46f1-aca4-7f861b636363" style="width: 45%; margin: auto;">

|

| 68 |

+

</p>

|

| 69 |

+

|

| 70 |

+

- **Static image**

|

| 71 |

+

<p float="left">

|

| 72 |

+

<img src="https://github.com/DAMO-NLP-SG/Video-LLaMA/assets/18526640/a146c169-8693-4627-96e6-f885ca22791f" style="width: 45%; margin: auto;">

|

| 73 |

+

<img src="https://github.com/DAMO-NLP-SG/Video-LLaMA/assets/18526640/66fc112d-e47e-4b66-b9bc-407f8d418b17" style="width: 45%; margin: auto;">

|

| 74 |

+

</p>

|

| 75 |

+

|

| 76 |

+

|

| 77 |

+

|

| 78 |

+

## Pre-trained & Fine-tuned Checkpoints

|

| 79 |

+

|

| 80 |

+

The following checkpoints store learnable parameters (positional embedding layers, Video/Audio Q-former and linear projection layers) only.

|

| 81 |

+

|

| 82 |

+

#### Vision-Language Branch

|

| 83 |

+

| Checkpoint | Link | Note |

|

| 84 |

+

|:------------|-------------|-------------|

|

| 85 |

+

| pretrain-vicuna7b | [link](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/pretrain_vicuna7b-v2.pth) | Pre-trained on WebVid (2.5M video-caption pairs) and LLaVA-CC3M (595k image-caption pairs) |

|

| 86 |

+

| finetune-vicuna7b-v2 | [link](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/finetune-vicuna7b-v2.pth) | Fine-tuned on the instruction-tuning data from [MiniGPT-4](https://github.com/Vision-CAIR/MiniGPT-4), [LLaVA](https://github.com/haotian-liu/LLaVA) and [VideoChat](https://github.com/OpenGVLab/Ask-Anything)|

|

| 87 |

+

| pretrain-vicuna13b | [link](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/pretrain-vicuna13b.pth) | Pre-trained on WebVid (2.5M video-caption pairs) and LLaVA-CC3M (595k image-caption pairs) |

|

| 88 |

+

| finetune-vicuna13b-v2 | [link](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/finetune-vicuna13b-v2.pth) | Fine-tuned on the instruction-tuning data from [MiniGPT-4](https://github.com/Vision-CAIR/MiniGPT-4), [LLaVA](https://github.com/haotian-liu/LLaVA) and [VideoChat](https://github.com/OpenGVLab/Ask-Anything)|

|

| 89 |

+

| pretrain-ziya13b-zh | [link](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/pretrain-ziya13b-zh.pth) | Pre-trained with Chinese LLM [Ziya-13B](https://huggingface.co/IDEA-CCNL/Ziya-LLaMA-13B-v1) |

|

| 90 |

+

| finetune-ziya13b-zh | [link](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/finetune-ziya13b-zh.pth) | Fine-tuned on machine-translated [VideoChat](https://github.com/OpenGVLab/Ask-Anything) instruction-following dataset (in Chinese)|

|

| 91 |

+

| pretrain-billa7b-zh | [link](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/pretrain-billa7b-zh.pth) | Pre-trained with Chinese LLM [BiLLA-7B](https://huggingface.co/IDEA-CCNL/Ziya-LLaMA-13B-v1) |

|

| 92 |

+

| finetune-billa7b-zh | [link](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/finetune-billa7b-zh.pth) | Fine-tuned on machine-translated [VideoChat](https://github.com/OpenGVLab/Ask-Anything) instruction-following dataset (in Chinese) |

|

| 93 |

+

|

| 94 |

+

#### Audio-Language Branch

|

| 95 |

+

| Checkpoint | Link | Note |

|

| 96 |

+

|:------------|-------------|-------------|

|

| 97 |

+

| pretrain-vicuna7b | [link](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/pretrain_vicuna7b_audiobranch.pth) | Pre-trained on WebVid (2.5M video-caption pairs) and LLaVA-CC3M (595k image-caption pairs) |

|

| 98 |

+

| finetune-vicuna7b-v2 | [link](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/finetune_vicuna7b_audiobranch.pth) | Fine-tuned on the instruction-tuning data from [MiniGPT-4](https://github.com/Vision-CAIR/MiniGPT-4), [LLaVA](https://github.com/haotian-liu/LLaVA) and [VideoChat](https://github.com/OpenGVLab/Ask-Anything)|

|

| 99 |

+

|

| 100 |

+

|

| 101 |

+

## Usage

|

| 102 |

+

#### Enviroment Preparation

|

| 103 |

+

|

| 104 |

+

First, install ffmpeg.

|

| 105 |

+

```

|

| 106 |

+

apt update

|

| 107 |

+

apt install ffmpeg

|

| 108 |

+

```

|

| 109 |

+

Then, create a conda environment:

|

| 110 |

+

```

|

| 111 |

+

conda env create -f environment.yml

|

| 112 |

+

conda activate videollama

|

| 113 |

+

```

|

| 114 |

+

|

| 115 |

+

|

| 116 |

+

## Prerequisites

|

| 117 |

+

|

| 118 |

+

Before using the repository, make sure you have obtained the following checkpoints:

|

| 119 |

+

|

| 120 |

+

#### Pre-trained Language Decoder

|

| 121 |

+

|

| 122 |

+

- Get the original LLaMA weights in the Hugging Face format by following the instructions [here](https://huggingface.co/docs/transformers/main/model_doc/llama).

|

| 123 |

+

- Download Vicuna delta weights :point_right: [[7B](https://huggingface.co/lmsys/vicuna-7b-delta-v0)][[13B](https://huggingface.co/lmsys/vicuna-13b-delta-v0)] (Note: we use **v0 weights** instead of v1.1 weights).

|

| 124 |

+

- Use the following command to add delta weights to the original LLaMA weights to obtain the Vicuna weights:

|

| 125 |

+

|

| 126 |

+

```

|

| 127 |

+

python apply_delta.py \

|

| 128 |

+

--base /path/to/llama-13b \

|

| 129 |

+

--target /output/path/to/vicuna-13b --delta /path/to/vicuna-13b-delta

|

| 130 |

+

```

|

| 131 |

+

|

| 132 |

+

#### Pre-trained Visual Encoder in Vision-Language Branch

|

| 133 |

+

- Download the MiniGPT-4 model (trained linear layer) from this [link](https://drive.google.com/file/d/1a4zLvaiDBr-36pasffmgpvH5P7CKmpze/view).

|

| 134 |

+

|

| 135 |

+

#### Pre-trained Audio Encoder in Audio-Language Branch

|

| 136 |

+

- Download the weight of ImageBind from this [link](https://github.com/facebookresearch/ImageBind).

|

| 137 |

+

|

| 138 |

+

## Download Learnable Weights

|

| 139 |

+

Use `git-lfs` to download the learnable weights of our Video-LLaMA (i.e., positional embedding layer + Q-Former + linear projection layer):

|

| 140 |

+

```bash

|

| 141 |

+

git lfs install

|

| 142 |

+

git clone https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series

|

| 143 |

+

```

|

| 144 |

+

The above commands will download the model weights of all the Video-LLaMA variants. For sure, you can choose to download the weights on demand. For example, if you want to run Video-LLaMA with Vicuna-7B as language decoder locally, then:

|

| 145 |

+

```bash

|

| 146 |

+

wget https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/finetune-vicuna7b-v2.pth

|

| 147 |

+

wget https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/finetune_vicuna7b_audiobranch.pth

|

| 148 |

+

```

|

| 149 |

+

should meet the requirement.

|

| 150 |

+

|

| 151 |

+

## How to Run Demo Locally

|

| 152 |

+

|

| 153 |

+

Firstly, set the `llama_model`, `imagebind_ckpt_path`, `ckpt` and `ckpt_2` in [eval_configs/video_llama_eval_withaudio.yaml](./eval_configs/video_llama_eval_withaudio.yaml).

|

| 154 |

+

Then run the script:

|

| 155 |

+

```

|

| 156 |

+

python demo_audiovideo.py \

|

| 157 |

+

--cfg-path eval_configs/video_llama_eval_withaudio.yaml --model_type vicuna --gpu-id 0

|

| 158 |

+

```

|

| 159 |

+

|

| 160 |

+

## Training

|

| 161 |

+

|

| 162 |

+

The training of each cross-modal branch (i.e., VL branch or AL branch) in Video-LLaMA consists of two stages,

|

| 163 |

+

|

| 164 |

+

1. Pre-training on the [Webvid-2.5M](https://github.com/m-bain/webvid) video caption dataset and [LLaVA-CC3M]((https://github.com/haotian-liu/LLaVA)) image caption dataset.

|

| 165 |

+

|

| 166 |

+

2. Fine-tuning using the image-based instruction-tuning data from [MiniGPT-4](https://github.com/Vision-CAIR/MiniGPT-4)/[LLaVA](https://github.com/haotian-liu/LLaVA) and the video-based instruction-tuning data from [VideoChat](https://github.com/OpenGVLab/Ask-Anything).

|

| 167 |

+

|

| 168 |

+

### 1. Pre-training

|

| 169 |

+

#### Data Preparation

|

| 170 |

+

Download the metadata and video following the instruction from the official Github repo of [Webvid](https://github.com/m-bain/webvid).

|

| 171 |

+

The folder structure of the dataset is shown below:

|

| 172 |

+

```

|

| 173 |

+

|webvid_train_data

|

| 174 |

+

|──filter_annotation

|

| 175 |

+

|────0.tsv

|

| 176 |

+

|──videos

|

| 177 |

+

|────000001_000050

|

| 178 |

+

|──────1066674784.mp4

|

| 179 |

+

```

|

| 180 |

+

```

|

| 181 |

+

|cc3m

|

| 182 |

+

|──filter_cap.json

|

| 183 |

+

|──image

|

| 184 |

+

|────GCC_train_000000000.jpg

|

| 185 |

+

|────...

|

| 186 |

+

```

|

| 187 |

+

#### Script

|

| 188 |

+

Config the the checkpoint and dataset paths in [video_llama_stage1_pretrain.yaml](./train_configs/video_llama_stage1_pretrain.yaml).

|

| 189 |

+

Run the script:

|

| 190 |

+

```

|

| 191 |

+

conda activate videollama

|

| 192 |

+

torchrun --nproc_per_node=8 train.py --cfg-path ./train_configs/video_llama_stage1_pretrain.yaml

|

| 193 |

+

```

|

| 194 |

+

|

| 195 |

+

### 2. Instruction Fine-tuning

|

| 196 |

+

#### Data

|

| 197 |

+

For now, the fine-tuning dataset consists of:

|

| 198 |

+

* 150K image-based instructions from LLaVA [[link](https://huggingface.co/datasets/liuhaotian/LLaVA-Instruct-150K/raw/main/llava_instruct_150k.json)]

|

| 199 |

+

* 3K image-based instructions from MiniGPT-4 [[link](https://github.com/Vision-CAIR/MiniGPT-4/blob/main/dataset/README_2_STAGE.md)]

|

| 200 |

+

* 11K video-based instructions from VideoChat [[link](https://github.com/OpenGVLab/InternVideo/tree/main/Data/instruction_data)]

|

| 201 |

+

|

| 202 |

+

#### Script

|

| 203 |

+

Config the checkpoint and dataset paths in [video_llama_stage2_finetune.yaml](./train_configs/video_llama_stage2_finetune.yaml).

|

| 204 |

+

```

|

| 205 |

+

conda activate videollama

|

| 206 |

+

torchrun --nproc_per_node=8 train.py --cfg-path ./train_configs/video_llama_stage2_finetune.yaml

|

| 207 |

+

```

|

| 208 |

+

|

| 209 |

+

## Recommended GPUs

|

| 210 |

+

* Pre-training: 8xA100 (80G)

|

| 211 |

+

* Instruction-tuning: 8xA100 (80G)

|

| 212 |

+

* Inference: 1xA100 (40G/80G) or 1xA6000

|

| 213 |

+

|

| 214 |

+

## Acknowledgement

|

| 215 |

+

We are grateful for the following awesome projects our Video-LLaMA arising from:

|

| 216 |

+

* [MiniGPT-4](https://github.com/Vision-CAIR/MiniGPT-4): Enhancing Vision-language Understanding with Advanced Large Language Models

|

| 217 |

+

* [FastChat](https://github.com/lm-sys/FastChat): An Open Platform for Training, Serving, and Evaluating Large Language Model based Chatbots

|

| 218 |

+

* [BLIP-2](https://github.com/salesforce/LAVIS/tree/main/projects/blip2): Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models

|

| 219 |

+

* [EVA-CLIP](https://github.com/baaivision/EVA/tree/master/EVA-CLIP): Improved Training Techniques for CLIP at Scale

|

| 220 |

+

* [ImageBind](https://github.com/facebookresearch/ImageBind): One Embedding Space To Bind Them All

|

| 221 |

+

* [LLaMA](https://github.com/facebookresearch/llama): Open and Efficient Foundation Language Models

|

| 222 |

+

* [VideoChat](https://github.com/OpenGVLab/Ask-Anything): Chat-Centric Video Understanding

|

| 223 |

+

* [LLaVA](https://github.com/haotian-liu/LLaVA): Large Language and Vision Assistant

|

| 224 |

+

* [WebVid](https://github.com/m-bain/webvid): A Large-scale Video-Text dataset

|

| 225 |

+

* [mPLUG-Owl](https://github.com/X-PLUG/mPLUG-Owl/tree/main): Modularization Empowers Large Language Models with Multimodality

|

| 226 |

+

|

| 227 |

+

The logo of Video-LLaMA is generated by [Midjourney](https://www.midjourney.com/).

|

| 228 |

+

|

| 229 |

+

|

| 230 |

+

## Term of Use

|

| 231 |

+

Our Video-LLaMA is just a research preview intended for non-commercial use only. You must **NOT** use our Video-LLaMA for any illegal, harmful, violent, racist, or sexual purposes. You are strictly prohibited from engaging in any activity that will potentially violate these guidelines.

|

| 232 |

+

|

| 233 |

+

## Citation

|

| 234 |

+

If you find our project useful, hope you can star our repo and cite our paper as follows:

|

| 235 |

+

```

|

| 236 |

+

@article{damonlpsg2023videollama,

|

| 237 |

+

author = {Zhang, Hang and Li, Xin and Bing, Lidong},

|

| 238 |

+

title = {Video-LLaMA: An Instruction-tuned Audio-Visual Language Model for Video Understanding},

|

| 239 |

+

year = 2023,

|

| 240 |

+

journal = {arXiv preprint arXiv:2306.02858},

|

| 241 |

+

url = {https://arxiv.org/abs/2306.02858}

|

| 242 |

+

}

|

| 243 |

+

```

|

| 244 |

+

|

README.md

CHANGED

|

@@ -1,13 +1,249 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 13 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<p align="center" width="100%">

|

| 2 |

+

<a target="_blank"><img src="figs/video_llama_logo.jpg" alt="Video-LLaMA" style="width: 50%; min-width: 200px; display: block; margin: auto;"></a>

|

| 3 |

+

</p>

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

# Video-LLaMA: An Instruction-tuned Audio-Visual Language Model for Video Understanding

|

| 8 |

+

<!-- **Video-LLaMA: An Instruction-tuned Audio-Visual Language Model for Video Understanding** -->

|

| 9 |

+

|

| 10 |

+

This is the repo for the Video-LLaMA project, which is working on empowering large language models with video and audio understanding capabilities.

|

| 11 |

+

|

| 12 |

+

<div style='display:flex; gap: 0.25rem; '>

|

| 13 |

+

<a href='https://modelscope.cn/studios/damo/video-llama/summary'><img src='https://img.shields.io/badge/ModelScope-Demo-blueviolet'></a>

|

| 14 |

+

<a href='https://www.modelscope.cn/models/damo/videollama_7b_llama2_finetuned/summary'><img src='https://img.shields.io/badge/ModelScope-Checkpoint-blueviolet'></a>

|

| 15 |

+

<a href='https://huggingface.co/spaces/DAMO-NLP-SG/Video-LLaMA'><img src='https://img.shields.io/badge/%F0%9F%A4%97%20Hugging%20Face-Demo-blue'></a>

|

| 16 |

+

<a href='https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-2-7B-Finetuned'><img src='https://img.shields.io/badge/%F0%9F%A4%97%20Hugging%20Face-Checkpoint-blue'></a>

|

| 17 |

+

<a href='https://arxiv.org/abs/2306.02858'><img src='https://img.shields.io/badge/Paper-PDF-red'></a>

|

| 18 |

+

</div>

|

| 19 |

+

|

| 20 |

+

## News

|

| 21 |

+

- [08.03] 🚀🚀 Release **Video-LLaMA-2** with [Llama-2-7B/13B-Chat](https://huggingface.co/meta-llama) as language decoder

|

| 22 |

+

- **NO** delta weights and separate Q-former weights anymore, full weights to run Video-LLaMA are all here :point_right: [[7B](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-2-7B-Finetuned)][[13B](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-2-13B-Finetuned)]

|

| 23 |

+

- Allow further customization starting from our pre-trained checkpoints [[7B-Pretrained](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-2-7B-Pretrained)] [[13B-Pretrained](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-2-13B-Pretrained)]

|

| 24 |

+

- [06.14] **NOTE**: The current online interactive demo is primarily for English chatting and it may **NOT** be a good option to ask Chinese questions since Vicuna/LLaMA does not represent Chinese texts very well.

|

| 25 |

+

- [06.13] **NOTE**: The audio support is **ONLY** for Vicuna-7B by now although we have several VL checkpoints available for other decoders.

|

| 26 |

+

- [06.10] **NOTE**: We have NOT updated the HF demo yet because the whole framework (with the audio branch) cannot run normally on A10-24G. The current running demo is still the previous version of Video-LLaMA. We will fix this issue soon.

|

| 27 |

+

- [06.08] 🚀🚀 Release the checkpoints of the audio-supported Video-LLaMA. Documentation and example outputs are also updated.

|

| 28 |

+

- [05.22] 🚀🚀 Interactive demo online, try our Video-LLaMA (with **Vicuna-7B** as language decoder) at [Hugging Face](https://huggingface.co/spaces/DAMO-NLP-SG/Video-LLaMA) and [ModelScope](https://pre.modelscope.cn/studios/damo/video-llama/summary)!!

|

| 29 |

+

- [05.22] ⭐️ Release **Video-LLaMA v2** built with Vicuna-7B

|

| 30 |

+

- [05.18] 🚀🚀 Support video-grounded chat in Chinese

|

| 31 |

+

- [**Video-LLaMA-BiLLA**](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/finetune-billa7b-zh.pth): we introduce [BiLLa-7B-SFT](https://huggingface.co/Neutralzz/BiLLa-7B-SFT) as language decoder and fine-tune the video-language aligned model (i.e., stage 1 model) with machine-translated [VideoChat](https://github.com/OpenGVLab/InternVideo/tree/main/Data/instruction_data) instructions.

|

| 32 |

+

- [**Video-LLaMA-Ziya**](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/finetune-ziya13b-zh.pth): same with Video-LLaMA-BiLLA but the language decoder is changed to [Ziya-13B](https://huggingface.co/IDEA-CCNL/Ziya-LLaMA-13B-v1).

|

| 33 |

+

- [05.18] ⭐️ Create a Hugging Face [repo](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series) to store the model weights of all the variants of our Video-LLaMA.

|

| 34 |

+

- [05.15] ⭐️ Release [**Video-LLaMA v2**](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/finetune-vicuna13b-v2.pth): we use the training data provided by [VideoChat](https://github.com/OpenGVLab/InternVideo/tree/main/Data/instruction_data) to further enhance the instruction-following capability of Video-LLaMA.

|

| 35 |

+

- [05.07] Release the initial version of **Video-LLaMA**, including its pre-trained and instruction-tuned checkpoints.

|

| 36 |

+

|

| 37 |

+

<p align="center" width="100%">

|

| 38 |

+

<a target="_blank"><img src="figs/architecture_v2.png" alt="Video-LLaMA" style="width: 80%; min-width: 200px; display: block; margin: auto;"></a>

|

| 39 |

+

</p>

|

| 40 |

+

|

| 41 |

+

## Introduction

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

- Video-LLaMA is built on top of [BLIP-2](https://github.com/salesforce/LAVIS/tree/main/projects/blip2) and [MiniGPT-4](https://github.com/Vision-CAIR/MiniGPT-4). It is composed of two core components: (1) Vision-Language (VL) Branch and (2) Audio-Language (AL) Branch.

|

| 45 |

+

- **VL Branch** (Visual encoder: ViT-G/14 + BLIP-2 Q-Former)

|

| 46 |

+

- A two-layer video Q-Former and a frame embedding layer (applied to the embeddings of each frame) are introduced to compute video representations.

|

| 47 |

+

- We train VL Branch on the Webvid-2M video caption dataset with a video-to-text generation task. We also add image-text pairs (~595K image captions from [LLaVA](https://github.com/haotian-liu/LLaVA)) into the pre-training dataset to enhance the understanding of static visual concepts.

|

| 48 |

+

- After pre-training, we further fine-tune our VL Branch using the instruction-tuning data from [MiniGPT-4](https://github.com/Vision-CAIR/MiniGPT-4), [LLaVA](https://github.com/haotian-liu/LLaVA) and [VideoChat](https://github.com/OpenGVLab/Ask-Anything).

|

| 49 |

+

- **AL Branch** (Audio encoder: ImageBind-Huge)

|

| 50 |

+

- A two-layer audio Q-Former and an audio segment embedding layer (applied to the embedding of each audio segment) are introduced to compute audio representations.

|

| 51 |

+

- As the used audio encoder (i.e., ImageBind) is already aligned across multiple modalities, we train AL Branch on video/image instruction data only, just to connect the output of ImageBind to the language decoder.

|

| 52 |

+

- Only the Video/Audio Q-Former, positional embedding layers, and linear layers are trainable during cross-modal training.

|

| 53 |

+

|

| 54 |

+

|

| 55 |

+

|

| 56 |

+

## Example Outputs

|

| 57 |

+

|

| 58 |

+

|

| 59 |

+

- **Video with background sound**

|

| 60 |

+

|

| 61 |

+

<p float="left">

|

| 62 |

+

<img src="https://github.com/DAMO-NLP-SG/Video-LLaMA/assets/18526640/7f7bddb2-5cf1-4cf4-bce3-3fa67974cbb3" style="width: 45%; margin: auto;">

|

| 63 |

+

<img src="https://github.com/DAMO-NLP-SG/Video-LLaMA/assets/18526640/ec76be04-4aa9-4dde-bff2-0a232b8315e0" style="width: 45%; margin: auto;">

|

| 64 |

+

</p>

|

| 65 |

+

|

| 66 |

+

|

| 67 |

+

- **Video without sound effects**

|

| 68 |

+

<p float="left">

|

| 69 |

+

<img src="https://github.com/DAMO-NLP-SG/Video-LLaMA/assets/18526640/539ea3cc-360d-4b2c-bf86-5505096df2f7" style="width: 45%; margin: auto;">

|

| 70 |

+

<img src="https://github.com/DAMO-NLP-SG/Video-LLaMA/assets/18526640/7304ad6f-1009-46f1-aca4-7f861b636363" style="width: 45%; margin: auto;">

|

| 71 |

+

</p>

|

| 72 |

+

|

| 73 |

+

- **Static image**

|

| 74 |

+

<p float="left">

|

| 75 |

+

<img src="https://github.com/DAMO-NLP-SG/Video-LLaMA/assets/18526640/a146c169-8693-4627-96e6-f885ca22791f" style="width: 45%; margin: auto;">

|

| 76 |

+

<img src="https://github.com/DAMO-NLP-SG/Video-LLaMA/assets/18526640/66fc112d-e47e-4b66-b9bc-407f8d418b17" style="width: 45%; margin: auto;">

|

| 77 |

+

</p>

|

| 78 |

+

|

| 79 |

+

|

| 80 |

+

|

| 81 |

+

## Pre-trained & Fine-tuned Checkpoints

|

| 82 |

+

|

| 83 |

+

The following checkpoints store learnable parameters (positional embedding layers, Video/Audio Q-former, and linear projection layers) only.

|

| 84 |

+

|

| 85 |

+

#### Vision-Language Branch

|

| 86 |

+

| Checkpoint | Link | Note |

|

| 87 |

+

|:------------|-------------|-------------|

|

| 88 |

+

| pretrain-vicuna7b | [link](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/pretrain_vicuna7b-v2.pth) | Pre-trained on WebVid (2.5M video-caption pairs) and LLaVA-CC3M (595k image-caption pairs) |

|

| 89 |

+

| finetune-vicuna7b-v2 | [link](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/finetune-vicuna7b-v2.pth) | Fine-tuned on the instruction-tuning data from [MiniGPT-4](https://github.com/Vision-CAIR/MiniGPT-4), [LLaVA](https://github.com/haotian-liu/LLaVA) and [VideoChat](https://github.com/OpenGVLab/Ask-Anything)|

|

| 90 |

+

| pretrain-vicuna13b | [link](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/pretrain-vicuna13b.pth) | Pre-trained on WebVid (2.5M video-caption pairs) and LLaVA-CC3M (595k image-caption pairs) |

|

| 91 |

+

| finetune-vicuna13b-v2 | [link](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/finetune-vicuna13b-v2.pth) | Fine-tuned on the instruction-tuning data from [MiniGPT-4](https://github.com/Vision-CAIR/MiniGPT-4), [LLaVA](https://github.com/haotian-liu/LLaVA) and [VideoChat](https://github.com/OpenGVLab/Ask-Anything)|

|

| 92 |

+

| pretrain-ziya13b-zh | [link](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/pretrain-ziya13b-zh.pth) | Pre-trained with Chinese LLM [Ziya-13B](https://huggingface.co/IDEA-CCNL/Ziya-LLaMA-13B-v1) |

|

| 93 |

+

| finetune-ziya13b-zh | [link](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/finetune-ziya13b-zh.pth) | Fine-tuned on machine-translated [VideoChat](https://github.com/OpenGVLab/Ask-Anything) instruction-following dataset (in Chinese)|

|

| 94 |

+

| pretrain-billa7b-zh | [link](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/pretrain-billa7b-zh.pth) | Pre-trained with Chinese LLM [BiLLA-7B-SFT](https://huggingface.co/Neutralzz/BiLLa-7B-SFT) |

|

| 95 |

+

| finetune-billa7b-zh | [link](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/finetune-billa7b-zh.pth) | Fine-tuned on machine-translated [VideoChat](https://github.com/OpenGVLab/Ask-Anything) instruction-following dataset (in Chinese) |

|

| 96 |

+

|

| 97 |

+

#### Audio-Language Branch

|

| 98 |

+

| Checkpoint | Link | Note |

|

| 99 |

+

|:------------|-------------|-------------|

|

| 100 |

+

| pretrain-vicuna7b | [link](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/pretrain_vicuna7b_audiobranch.pth) | Pre-trained on WebVid (2.5M video-caption pairs) and LLaVA-CC3M (595k image-caption pairs) |

|

| 101 |

+

| finetune-vicuna7b-v2 | [link](https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/finetune_vicuna7b_audiobranch.pth) | Fine-tuned on the instruction-tuning data from [MiniGPT-4](https://github.com/Vision-CAIR/MiniGPT-4), [LLaVA](https://github.com/haotian-liu/LLaVA) and [VideoChat](https://github.com/OpenGVLab/Ask-Anything)|

|

| 102 |

+

|

| 103 |

+

|

| 104 |

+

## Usage

|

| 105 |

+

#### Enviroment Preparation

|

| 106 |

+

|

| 107 |

+

First, install ffmpeg.

|

| 108 |

+

```

|

| 109 |

+

apt update

|

| 110 |

+

apt install ffmpeg

|

| 111 |

+

```

|

| 112 |

+

Then, create a conda environment:

|

| 113 |

+

```

|

| 114 |

+

conda env create -f environment.yml

|

| 115 |

+

conda activate videollama

|

| 116 |

+

```

|

| 117 |

+

|

| 118 |

+

|

| 119 |

+

## Prerequisites

|

| 120 |

+

|

| 121 |

+

Before using the repository, make sure you have obtained the following checkpoints:

|

| 122 |

+

|

| 123 |

+

#### Pre-trained Language Decoder

|

| 124 |

+

|

| 125 |

+

- Get the original LLaMA weights in the Hugging Face format by following the instructions [here](https://huggingface.co/docs/transformers/main/model_doc/llama).

|

| 126 |

+

- Download Vicuna delta weights :point_right: [[7B](https://huggingface.co/lmsys/vicuna-7b-delta-v0)][[13B](https://huggingface.co/lmsys/vicuna-13b-delta-v0)] (Note: we use **v0 weights** instead of v1.1 weights).

|

| 127 |

+

- Use the following command to add delta weights to the original LLaMA weights to obtain the Vicuna weights:

|

| 128 |

+

|

| 129 |

+

```

|

| 130 |

+

python apply_delta.py \

|

| 131 |

+

--base /path/to/llama-13b \

|

| 132 |

+

--target /output/path/to/vicuna-13b --delta /path/to/vicuna-13b-delta

|

| 133 |

+

```

|

| 134 |

+

|

| 135 |

+

#### Pre-trained Visual Encoder in Vision-Language Branch

|

| 136 |

+

- Download the MiniGPT-4 model (trained linear layer) from this [link](https://drive.google.com/file/d/1a4zLvaiDBr-36pasffmgpvH5P7CKmpze/view).

|

| 137 |

+

|

| 138 |

+

#### Pre-trained Audio Encoder in Audio-Language Branch

|

| 139 |

+

- Download the weight of ImageBind from this [link](https://github.com/facebookresearch/ImageBind).

|

| 140 |

+

|

| 141 |

+

## Download Learnable Weights

|

| 142 |

+

Use `git-lfs` to download the learnable weights of our Video-LLaMA (i.e., positional embedding layer + Q-Former + linear projection layer):

|

| 143 |

+

```bash

|

| 144 |

+

git lfs install

|

| 145 |

+

git clone https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series

|

| 146 |

+

```

|

| 147 |

+

The above commands will download the model weights of all the Video-LLaMA variants. For sure, you can choose to download the weights on demand. For example, if you want to run Video-LLaMA with Vicuna-7B as language decoder locally, then:

|

| 148 |

+

```bash

|

| 149 |

+

wget https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/finetune-vicuna7b-v2.pth

|

| 150 |

+

wget https://huggingface.co/DAMO-NLP-SG/Video-LLaMA-Series/resolve/main/finetune_vicuna7b_audiobranch.pth

|

| 151 |

+

```

|

| 152 |

+

should meet the requirement.

|

| 153 |

+

|

| 154 |

+

## How to Run Demo Locally

|

| 155 |

+

|

| 156 |

+

Firstly, set the `llama_model`, `imagebind_ckpt_path`, `ckpt` and `ckpt_2` in [eval_configs/video_llama_eval_withaudio.yaml](./eval_configs/video_llama_eval_withaudio.yaml).

|

| 157 |

+

Then run the script:

|

| 158 |

+

```

|

| 159 |

+

python demo_audiovideo.py \

|

| 160 |

+

--cfg-path eval_configs/video_llama_eval_withaudio.yaml \

|

| 161 |

+

--model_type llama_v2 \ # or vicuna

|

| 162 |

+

--gpu-id 0

|

| 163 |

+

```

|

| 164 |

+

|

| 165 |

+

## Training

|

| 166 |

+

|

| 167 |

+

The training of each cross-modal branch (i.e., VL branch or AL branch) in Video-LLaMA consists of two stages,

|

| 168 |

+

|

| 169 |

+

1. Pre-training on the [Webvid-2.5M](https://github.com/m-bain/webvid) video caption dataset and [LLaVA-CC3M]((https://github.com/haotian-liu/LLaVA)) image caption dataset.

|

| 170 |

+

|

| 171 |

+

2. Fine-tuning using the image-based instruction-tuning data from [MiniGPT-4](https://github.com/Vision-CAIR/MiniGPT-4)/[LLaVA](https://github.com/haotian-liu/LLaVA) and the video-based instruction-tuning data from [VideoChat](https://github.com/OpenGVLab/Ask-Anything).

|

| 172 |

+

|

| 173 |

+

### 1. Pre-training

|

| 174 |

+

#### Data Preparation

|

| 175 |

+

Download the metadata and video following the instructions from the official Github repo of [Webvid](https://github.com/m-bain/webvid).

|

| 176 |

+

The folder structure of the dataset is shown below:

|

| 177 |

+

```

|

| 178 |

+

|webvid_train_data

|

| 179 |

+

|──filter_annotation

|

| 180 |

+

|────0.tsv

|

| 181 |

+

|──videos

|

| 182 |

+

|────000001_000050

|

| 183 |

+

|──────1066674784.mp4

|

| 184 |

+

```

|

| 185 |

+

```

|

| 186 |

+

|cc3m

|

| 187 |

+

|──filter_cap.json

|

| 188 |

+

|──image

|

| 189 |

+

|────GCC_train_000000000.jpg

|

| 190 |

+

|────...

|

| 191 |

+

```

|

| 192 |

+

#### Script

|

| 193 |

+

Config the the checkpoint and dataset paths in [video_llama_stage1_pretrain.yaml](./train_configs/video_llama_stage1_pretrain.yaml).

|

| 194 |

+

Run the script:

|

| 195 |

+

```

|

| 196 |

+

conda activate videollama

|

| 197 |

+

torchrun --nproc_per_node=8 train.py --cfg-path ./train_configs/video_llama_stage1_pretrain.yaml

|

| 198 |

+

```

|

| 199 |

+

|

| 200 |

+

### 2. Instruction Fine-tuning

|

| 201 |

+

#### Data

|

| 202 |

+

For now, the fine-tuning dataset consists of:

|

| 203 |

+

* 150K image-based instructions from LLaVA [[link](https://huggingface.co/datasets/liuhaotian/LLaVA-Instruct-150K/raw/main/llava_instruct_150k.json)]

|

| 204 |

+

* 3K image-based instructions from MiniGPT-4 [[link](https://github.com/Vision-CAIR/MiniGPT-4/blob/main/dataset/README_2_STAGE.md)]

|

| 205 |

+

* 11K video-based instructions from VideoChat [[link](https://github.com/OpenGVLab/InternVideo/tree/main/Data/instruction_data)]

|

| 206 |

+

|

| 207 |

+

#### Script

|

| 208 |

+

Config the checkpoint and dataset paths in [video_llama_stage2_finetune.yaml](./train_configs/video_llama_stage2_finetune.yaml).

|

| 209 |

+

```

|

| 210 |

+

conda activate videollama

|

| 211 |

+

torchrun --nproc_per_node=8 train.py --cfg-path ./train_configs/video_llama_stage2_finetune.yaml

|

| 212 |

+

```

|

| 213 |

+

|

| 214 |

+

## Recommended GPUs

|

| 215 |

+

* Pre-training: 8xA100 (80G)

|

| 216 |

+

* Instruction-tuning: 8xA100 (80G)

|

| 217 |

+

* Inference: 1xA100 (40G/80G) or 1xA6000

|

| 218 |

+

|

| 219 |

+

## Acknowledgement

|

| 220 |

+

We are grateful for the following awesome projects our Video-LLaMA arising from:

|

| 221 |

+

* [MiniGPT-4](https://github.com/Vision-CAIR/MiniGPT-4): Enhancing Vision-language Understanding with Advanced Large Language Models

|

| 222 |

+

* [FastChat](https://github.com/lm-sys/FastChat): An Open Platform for Training, Serving, and Evaluating Large Language Model based Chatbots

|

| 223 |

+

* [BLIP-2](https://github.com/salesforce/LAVIS/tree/main/projects/blip2): Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models

|

| 224 |

+

* [EVA-CLIP](https://github.com/baaivision/EVA/tree/master/EVA-CLIP): Improved Training Techniques for CLIP at Scale

|

| 225 |

+

* [ImageBind](https://github.com/facebookresearch/ImageBind): One Embedding Space To Bind Them All

|

| 226 |

+

* [LLaMA](https://github.com/facebookresearch/llama): Open and Efficient Foundation Language Models

|

| 227 |

+

* [VideoChat](https://github.com/OpenGVLab/Ask-Anything): Chat-Centric Video Understanding

|

| 228 |

+

* [LLaVA](https://github.com/haotian-liu/LLaVA): Large Language and Vision Assistant

|

| 229 |

+

* [WebVid](https://github.com/m-bain/webvid): A Large-scale Video-Text dataset

|

| 230 |

+

* [mPLUG-Owl](https://github.com/X-PLUG/mPLUG-Owl/tree/main): Modularization Empowers Large Language Models with Multimodality

|

| 231 |

+

|

| 232 |

+

The logo of Video-LLaMA is generated by [Midjourney](https://www.midjourney.com/).

|

| 233 |

+

|

| 234 |

+

|

| 235 |

+

## Term of Use

|

| 236 |

+

Our Video-LLaMA is just a research preview intended for non-commercial use only. You must **NOT** use our Video-LLaMA for any illegal, harmful, violent, racist, or sexual purposes. You are strictly prohibited from engaging in any activity that will potentially violate these guidelines.

|

| 237 |

+

|

| 238 |

+

## Citation

|

| 239 |

+

If you find our project useful, hope you can star our repo and cite our paper as follows:

|

| 240 |

+

```

|

| 241 |

+

@article{damonlpsg2023videollama,

|

| 242 |

+

author = {Zhang, Hang and Li, Xin and Bing, Lidong},

|

| 243 |

+

title = {Video-LLaMA: An Instruction-tuned Audio-Visual Language Model for Video Understanding},

|

| 244 |

+

year = 2023,

|

| 245 |

+

journal = {arXiv preprint arXiv:2306.02858},

|

| 246 |

+

url = {https://arxiv.org/abs/2306.02858}

|

| 247 |

+

}

|

| 248 |

+

```

|

| 249 |

+

|

Video-LLaMA-2-7B-Finetuned/AL_LLaMA_2_7B_Finetuned.pth

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:1ad66d3e0eb9eaef5392e7b67c4689166f5610088c92652b9ecdae332b8d5b6f

|

| 3 |

+

size 274578657

|

Video-LLaMA-2-7B-Finetuned/VL_LLaMA_2_7B_Finetuned.pth

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:3cec0e2979ed7656e08ecc5b185c2229a3c577b4b7a4721a94bd461ba0447c6e

|

| 3 |

+

size 265559201

|

Video-LLaMA-2-7B-Finetuned/imagebind_huge.pth

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:d6f6c22bedcc90708448d5d2fbb7b2db9c73f505dc89bd0b2e09b23af1b62157

|

| 3 |

+

size 4803584173

|

Video-LLaMA-2-7B-Finetuned/llama-2-7b-chat-hf/config.json

ADDED

|

@@ -0,0 +1,22 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"architectures": [

|

| 3 |

+

"LlamaForCausalLM"

|

| 4 |

+

],

|

| 5 |

+

"bos_token_id": 1,

|

| 6 |

+

"eos_token_id": 2,

|

| 7 |

+

"hidden_act": "silu",

|

| 8 |

+

"hidden_size": 4096,

|

| 9 |

+

"initializer_range": 0.02,

|

| 10 |

+

"intermediate_size": 11008,

|

| 11 |

+

"max_position_embeddings": 2048,

|

| 12 |

+

"model_type": "llama",

|

| 13 |

+

"num_attention_heads": 32,

|

| 14 |

+

"num_hidden_layers": 32,

|

| 15 |

+

"pad_token_id": 0,

|

| 16 |

+

"rms_norm_eps": 1e-06,

|

| 17 |

+

"tie_word_embeddings": false,

|

| 18 |

+

"torch_dtype": "float16",

|

| 19 |

+

"transformers_version": "4.29.0.dev0",

|

| 20 |

+

"use_cache": true,

|

| 21 |

+

"vocab_size": 32000

|

| 22 |

+

}

|

Video-LLaMA-2-7B-Finetuned/llama-2-7b-chat-hf/generation_config.json

ADDED

|

@@ -0,0 +1,7 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"_from_model_config": true,

|

| 3 |

+

"bos_token_id": 1,

|

| 4 |

+

"eos_token_id": 2,

|

| 5 |

+

"pad_token_id": 0,

|

| 6 |

+

"transformers_version": "4.29.0.dev0"

|

| 7 |

+

}

|