diff --git a/.agent-store-config.yaml b/.agent-store-config.yaml

index d5d24ee57f9d537f834de0ec2e2636ebfcd62dc7..789a5d200bafad8882197f662e51c467d01a2ce6 100644

--- a/.agent-store-config.yaml

+++ b/.agent-store-config.yaml

@@ -1,6 +1,6 @@

role:

name: SoftwareCompany

- module: metagpt.roles.software_company

+ module: software_company

skills:

- name: WritePRD

- name: WriteDesign
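The `module` entry now points at a top-level `software_company` module instead of `metagpt.roles.software_company`. Python can only import that module if its directory is on `sys.path`, for example the `/app` working directory set in the Dockerfile change. This diff does not include the agent-store loader. Assuming it resolves a role by importing `module` and reading the attribute named by `name`, a minimal sketch of that lookup follows; `load_role_class` is a hypothetical helper.

```python
from __future__ import annotations

import importlib
from typing import Any, Mapping


def load_role_class(role_cfg: Mapping[str, Any]) -> type:
    """Hypothetical helper: import role_cfg["module"] and return attribute role_cfg["name"].

    Mirrors the shape of the `role:` block in .agent-store-config.yaml; the real
    agent-store loader may behave differently.
    """
    # Raises ModuleNotFoundError if the module is not importable from sys.path.
    module = importlib.import_module(role_cfg["module"])
    # Raises AttributeError if the module has no attribute with that name.
    return getattr(module, role_cfg["name"])


if __name__ == "__main__":
    # {"name": "SoftwareCompany", "module": "software_company"} would only resolve
    # when software_company.py is importable (e.g. run from /app); a stdlib class
    # is used here so the sketch runs anywhere.
    print(load_role_class({"name": "OrderedDict", "module": "collections"}))
```

An old config that still says `module: metagpt.roles.software_company` would fail at `import_module` after this change, so both files need to move together.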

diff --git a/.agent-store-config.yaml.example b/.agent-store-config.yaml.example

deleted file mode 100644

index d12cc6999ee0a0665fae8a9f25a1e44443a3f1b7..0000000000000000000000000000000000000000

--- a/.agent-store-config.yaml.example

+++ /dev/null

@@ -1,9 +0,0 @@

-role:

- name: Teacher # Referenced the `Teacher` in `metagpt/roles/teacher.py`.

- module: metagpt.roles.teacher # Referenced `metagpt/roles/teacher.py`.

- skills: # Refer to the skill `name` of the published skill in `.well-known/skills.yaml`.

- - name: text_to_speech

- description: Text-to-speech

- - name: text_to_image

- description: Create a drawing based on the text.

-

diff --git a/.dockerignore b/.dockerignore

index 2968dd34dcdc908cb1353345faa78b10192b378a..26c417db3399d645956bdd526c2ec3ed1e1e4a37 100644

--- a/.dockerignore

+++ b/.dockerignore

@@ -5,3 +5,4 @@ workspace

dist

data

geckodriver.log

+logs

\ No newline at end of file

diff --git a/Dockerfile b/Dockerfile

index ce39b8eca96a3c5eadc337d2de5254cf31586e7d..713f9e92e1542f4602c958402940c983b255ba61 100644

--- a/Dockerfile

+++ b/Dockerfile

@@ -4,7 +4,8 @@ FROM nikolaik/python-nodejs:python3.9-nodejs20-slim

USER root

# Install Debian software needed by MetaGPT and clean up in one RUN command to reduce image size

-RUN apt update &&\

+RUN sed -i 's/deb.debian.org/mirrors.ustc.edu.cn/g' /etc/apt/sources.list.d/debian.sources && \

+ apt update &&\

apt install -y git chromium fonts-ipafont-gothic fonts-wqy-zenhei fonts-thai-tlwg fonts-kacst fonts-freefont-ttf libxss1 --no-install-recommends &&\

apt clean && rm -rf /var/lib/apt/lists/*

@@ -12,20 +13,17 @@ RUN apt update &&\

ENV CHROME_BIN="/usr/bin/chromium" \

PUPPETEER_CONFIG="/app/metagpt/config/puppeteer-config.json"\

PUPPETEER_SKIP_CHROMIUM_DOWNLOAD="true"

-RUN npm install -g @mermaid-js/mermaid-cli &&\

+

+RUN npm install -g @mermaid-js/mermaid-cli --registry=https://registry.npmmirror.com &&\

npm cache clean --force

# Install Python dependencies and install MetaGPT

COPY requirements.txt requirements.txt

-RUN pip install --no-cache-dir -r requirements.txt

+RUN pip install --no-cache-dir -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

-COPY . /app/metagpt

-WORKDIR /app/metagpt

-RUN chmod -R 777 /app/metagpt/logs/ &&\

- mkdir workspace &&\

- chmod -R 777 /app/metagpt/workspace/ &&\

- python -m pip install -e.

+WORKDIR /app

+COPY . .

-CMD ["python", "metagpt/web/app.py"]

+CMD ["uvicorn", "app:app", "--host", "0.0.0.0", "--port", "7860"]

diff --git a/README.md b/README.md

index f604416555cda44fc5ac2edc56c80012bfa9c2cb..ea1aeee1688226c01ea89deb01f1f7f373c39c2c 100644

--- a/README.md

+++ b/README.md

@@ -1,260 +1 @@

----

-title: MetaGPT

-emoji: 🐼

-colorFrom: green

-colorTo: blue

-sdk: docker

-app_file: app.py

-pinned: false

----

-

-# MetaGPT: The Multi-Agent Framework

-

-

-

-

-

-Assign different roles to GPTs to form a collaborative software entity for complex tasks.

-

-

-

-

-

-

-

-

-1. MetaGPT takes a **one line requirement** as input and outputs **user stories / competitive analysis / requirements / data structures / APIs / documents, etc.**

-2. Internally, MetaGPT includes **product managers / architects / project managers / engineers.** It provides the entire process of a **software company along with carefully orchestrated SOPs.**

- 1. `Code = SOP(Team)` is the core philosophy. We materialize SOP and apply it to teams composed of LLMs.

-

-

-

-Software Company Multi-Role Schematic (Gradually Implementing)

-

-## Examples (fully generated by GPT-4)

-

-For example, if you type `python startup.py "Design a RecSys like Toutiao"`, you would get many outputs, one of which is the data & API design.

-

-

-

-It costs approximately **$0.2** (in GPT-4 API fees) to generate one example with analysis and design, and around **$2.0** for a full project.

-

-## Installation

-

-### Installation Video Guide

-

-- [Matthew Berman: How To Install MetaGPT - Build A Startup With One Prompt!!](https://youtu.be/uT75J_KG_aY)

-

-### Traditional Installation

-

-```bash

-# Step 1: Ensure that NPM is installed on your system. Then install mermaid-js.

-npm --version

-sudo npm install -g @mermaid-js/mermaid-cli

-

-# Step 2: Ensure that Python 3.9+ is installed on your system. You can check this by using:

-python --version

-

-# Step 3: Clone the repository to your local machine, and install it.

-git clone https://github.com/geekan/metagpt

-cd metagpt

-python setup.py install

-```

-

-**Note:**

-

-- If you already have Chrome, Chromium, or MS Edge installed, you can skip downloading Chromium by setting the environment variable

- `PUPPETEER_SKIP_CHROMIUM_DOWNLOAD` to `true`.

-

-- Some people are [having issues](https://github.com/mermaidjs/mermaid.cli/issues/15) installing this tool globally. Installing it locally is an alternative solution:

-

- ```bash

- npm install @mermaid-js/mermaid-cli

- ```

-

-- Don't forget to add the mmdc configuration to config.yaml:

-

- ```yml

- PUPPETEER_CONFIG: "./config/puppeteer-config.json"

- MMDC: "./node_modules/.bin/mmdc"

- ```

-

-- If `python setup.py install` fails with the error `[Errno 13] Permission denied: '/usr/local/lib/python3.11/dist-packages/test-easy-install-13129.write-test'`, try running `python setup.py install --user` instead.

-

-### Installation by Docker

-

-```bash

-# Step 1: Download metagpt official image and prepare config.yaml

-docker pull metagpt/metagpt:v0.3.1

-mkdir -p /opt/metagpt/{config,workspace}

-docker run --rm metagpt/metagpt:v0.3.1 cat /app/metagpt/config/config.yaml > /opt/metagpt/config/key.yaml

-vim /opt/metagpt/config/key.yaml # Change the config

-

-# Step 2: Run metagpt demo with container

-docker run --rm \

- --privileged \

- -v /opt/metagpt/config/key.yaml:/app/metagpt/config/key.yaml \

- -v /opt/metagpt/workspace:/app/metagpt/workspace \

- metagpt/metagpt:v0.3.1 \

- python startup.py "Write a cli snake game"

-

-# You can also start a container and execute commands in it

-docker run --name metagpt -d \

- --privileged \

- -v /opt/metagpt/config/key.yaml:/app/metagpt/config/key.yaml \

- -v /opt/metagpt/workspace:/app/metagpt/workspace \

- metagpt/metagpt:v0.3.1

-

-docker exec -it metagpt /bin/bash

-$ python startup.py "Write a cli snake game"

-```

-

-The `docker run ...` commands do the following:

-

-- Run in privileged mode to have permission to run the browser

-- Map the host file `/opt/metagpt/config/key.yaml` to the container file `/app/metagpt/config/key.yaml`

-- Map host directory `/opt/metagpt/workspace` to container directory `/app/metagpt/workspace`

-- Execute the demo command `python startup.py "Write a cli snake game"`

-

-### Build image by yourself

-

-```bash

-# You can also build metagpt image by yourself.

-git clone https://github.com/geekan/MetaGPT.git

-cd MetaGPT && docker build -t metagpt:custom .

-```

-

-## Configuration

-

-- Configure your `OPENAI_API_KEY` in any of `config/key.yaml / config/config.yaml / env`

-- Priority order: `config/key.yaml > config/config.yaml > env`

-

-```bash

-# Copy the configuration file and make the necessary modifications.

-cp config/config.yaml config/key.yaml

-```

-

-| Variable Name | config/key.yaml | env |

-| ------------------------------------------ | ----------------------------------------- | ----------------------------------------------- |

-| OPENAI_API_KEY # Replace with your own key | OPENAI_API_KEY: "sk-..." | export OPENAI_API_KEY="sk-..." |

-| OPENAI_API_BASE # Optional | OPENAI_API_BASE: "https:///v1" | export OPENAI_API_BASE="https:///v1" |

-

-## Tutorial: Initiating a startup

-

-```shell

-# Run the script

-python startup.py "Write a cli snake game"

-# Do not hire an engineer to implement the project

-python startup.py "Write a cli snake game" --implement False

-# Hire an engineer and perform code reviews

-python startup.py "Write a cli snake game" --code_review True

-```

-

-After running the script, you can find your new project in the `workspace/` directory.

-

-### Preference of Platform or Tool

-

-You can specify which platform or tool you want to use when stating your requirements.

-

-```shell

-python startup.py "Write a cli snake game based on pygame"

-```

-

-### Usage

-

-```

-NAME

- startup.py - We are a software startup comprised of AI. By investing in us, you are empowering a future filled with limitless possibilities.

-

-SYNOPSIS

- startup.py IDEA

-

-DESCRIPTION

- We are a software startup comprised of AI. By investing in us, you are empowering a future filled with limitless possibilities.

-

-POSITIONAL ARGUMENTS

- IDEA

- Type: str

- Your innovative idea, such as "Creating a snake game."

-

-FLAGS

- --investment=INVESTMENT

- Type: float

- Default: 3.0

- As an investor, you have the opportunity to contribute a certain dollar amount to this AI company.

- --n_round=N_ROUND

- Type: int

- Default: 5

-

-NOTES

- You can also use flags syntax for POSITIONAL ARGUMENTS

-```

-

-### Code walkthrough

-

-```python

-from metagpt.software_company import SoftwareCompany

-from metagpt.roles import ProjectManager, ProductManager, Architect, Engineer

-

-async def startup(idea: str, investment: float = 3.0, n_round: int = 5):

- """Run a startup. Be a boss."""

- company = SoftwareCompany()

- company.hire([ProductManager(), Architect(), ProjectManager(), Engineer()])

- company.invest(investment)

- company.start_project(idea)

- await company.run(n_round=n_round)

-```

-

-See `examples` for more details on single-role (with knowledge base) and LLM-only examples.

-

-## QuickStart

-

-Some users find it difficult to install and configure a local environment. The following tutorials let you quickly try out MetaGPT.

-

-- [MetaGPT quickstart](https://deepwisdom.feishu.cn/wiki/CyY9wdJc4iNqArku3Lncl4v8n2b)

-

-## Citation

-

-For now, cite the [Arxiv paper](https://arxiv.org/abs/2308.00352):

-

-```bibtex

-@misc{hong2023metagpt,

- title={MetaGPT: Meta Programming for Multi-Agent Collaborative Framework},

- author={Sirui Hong and Xiawu Zheng and Jonathan Chen and Yuheng Cheng and Jinlin Wang and Ceyao Zhang and Zili Wang and Steven Ka Shing Yau and Zijuan Lin and Liyang Zhou and Chenyu Ran and Lingfeng Xiao and Chenglin Wu},

- year={2023},

- eprint={2308.00352},

- archivePrefix={arXiv},

- primaryClass={cs.AI}

-}

-```

-

-## Contact Information

-

-If you have any questions or feedback about this project, please feel free to contact us. We highly appreciate your suggestions!

-

-- **Email:** alexanderwu@fuzhi.ai

-- **GitHub Issues:** For more technical inquiries, you can also create a new issue in our [GitHub repository](https://github.com/geekan/metagpt/issues).

-

-We will respond to all questions within 2-3 business days.

-

-## Demo

-

-https://github.com/geekan/MetaGPT/assets/2707039/5e8c1062-8c35-440f-bb20-2b0320f8d27d

-

-## Join us

-

-📢 Join Our Discord Channel!

-https://discord.gg/ZRHeExS6xv

-

-Looking forward to seeing you there! 🎉

+# MetaGPT Web UI
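The removed README documented the configuration lookup order `config/key.yaml > config/config.yaml > env`. A minimal sketch of that precedence follows. It assumes each source has already been loaded into a plain mapping (YAML parsing is omitted because it needs PyYAML), and `resolve_config` is a hypothetical name, not MetaGPT's `CONFIG` API.

```python
from __future__ import annotations

import os
from typing import Any, Mapping, Optional


def resolve_config(
    key_yaml: Mapping[str, Any],
    config_yaml: Mapping[str, Any],
    env: Optional[Mapping[str, str]] = None,
) -> dict[str, Any]:
    """Merge config sources so that key.yaml > config.yaml > environment variables.

    Hypothetical helper: later .update() calls overwrite earlier ones, so the
    lowest-priority source is applied first.
    """
    merged: dict[str, Any] = dict(os.environ if env is None else env)
    merged.update(config_yaml)  # config/config.yaml overrides env
    merged.update(key_yaml)     # config/key.yaml overrides both
    return merged
```

Applying the sources from lowest to highest priority keeps the rule to a single pass: whichever source writes a key last wins.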

diff --git a/metagpt/web/app.py b/app.py

similarity index 76%

rename from metagpt/web/app.py

rename to app.py

index 5df702fbb9e996def8a93a5a05e2fa938cd2f7af..b6d3316a8f130bb01b0f0e72a476cb330ca90ab2 100644

--- a/metagpt/web/app.py

+++ b/app.py

@@ -1,10 +1,17 @@

#!/usr/bin/python3

# -*- coding: utf-8 -*-

+from __future__ import annotations

+

import asyncio

+from collections import deque

+import contextlib

+from functools import partial

import urllib.parse

from datetime import datetime

import uuid

from enum import Enum

+from metagpt.logs import set_llm_stream_logfunc

+import pathlib

from fastapi import FastAPI, Request, HTTPException

from fastapi.responses import StreamingResponse, RedirectResponse

@@ -15,12 +22,12 @@ import uvicorn

from typing import Any, Optional

-from metagpt import Message

+from metagpt.schema import Message

from metagpt.actions.action import Action

from metagpt.actions.action_output import ActionOutput

from metagpt.config import CONFIG

-from metagpt.roles.software_company import RoleRun, SoftwareCompany

+from software_company import RoleRun, SoftwareCompany

class QueryAnswerType(Enum):

@@ -97,13 +104,14 @@ class ThinkActPrompt(BaseModel):

description=action.desc,

)

- def update_act(self, message: ActionOutput):

- self.step.status = "finish"

+ def update_act(self, message: ActionOutput | str, is_finished: bool = True):

+ if is_finished:

+ self.step.status = "finish"

self.step.content = Sentence(

type="text",

- id=ThinkActPrompt.guid32(),

- value=SentenceValue(answer=message.content),

- is_finished=True,

+ id=str(1),

+ value=SentenceValue(answer=message.content if is_finished else message),

+ is_finished=is_finished,

)

@staticmethod

@@ -147,7 +155,11 @@ async def create_message(req_model: NewMsg, request: Request):

Session message stream

"""

config = {k.upper(): v for k, v in req_model.config.items()}

- CONFIG.set_context(config)

+ set_context(config, uuid.uuid4().hex)

+

+ msg_queue = deque()

+ CONFIG.LLM_STREAM_LOG = lambda x: msg_queue.appendleft(x) if x else None

+

role = SoftwareCompany()

role.recv(message=Message(content=req_model.query))

answer = MessageJsonModel(

@@ -171,16 +183,47 @@ async def create_message(req_model: NewMsg, request: Request):

think_result: RoleRun = await role.think()

if not think_result: # End of conversion

break

+

think_act_prompt = ThinkActPrompt(role=think_result.role.profile)

think_act_prompt.update_think(tc_id, think_result)

yield think_act_prompt.prompt + "\n\n"

- act_result = await role.act()

+ task = asyncio.create_task(role.act())

+

+ while not await request.is_disconnected():

+ if msg_queue:

+ think_act_prompt.update_act(msg_queue.pop(), False)

+ yield think_act_prompt.prompt + "\n\n"

+ continue

+

+ if task.done():

+ break

+

+ await asyncio.sleep(0.5)

+

+ act_result = await task

think_act_prompt.update_act(act_result)

yield think_act_prompt.prompt + "\n\n"

answer.add_think_act(think_act_prompt)

yield answer.prompt + "\n\n" # Notify the front-end that the message is complete.

+default_llm_stream_log = partial(print, end="")

+

+

+def llm_stream_log(msg):

+ with contextlib.suppress(Exception):

+ CONFIG._get("LLM_STREAM_LOG", default_llm_stream_log)(msg)

+

+

+def set_context(context, uid):

+ context["WORKSPACE_PATH"] = pathlib.Path("workspace", uid)

+ for old, new in (("DEPLOYMENT_ID", "DEPLOYMENT_NAME"), ("OPENAI_API_BASE", "OPENAI_BASE_URL")):

+ if old in context and new not in context:

+ context[new] = context[old]

+ CONFIG.set_context(context)

+ return context

+

+

class ChatHandler:

@staticmethod

async def create_message(req_model: NewMsg, request: Request):

@@ -194,7 +237,7 @@ app = FastAPI()

app.mount(

"/static",

- StaticFiles(directory="./metagpt/static/", check_dir=True),

+ StaticFiles(directory="./static/", check_dir=True),

name="static",

)

app.add_api_route(

@@ -216,6 +259,9 @@ async def catch_all(request: Request):

return RedirectResponse(url=new_path)

+set_llm_stream_logfunc(llm_stream_log)

+

+

def main():

uvicorn.run(app="__main__:app", host="0.0.0.0", port=7860)
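The reworked `create_message` no longer awaits `role.act()` directly. Instead it:

- starts `role.act()` with `asyncio.create_task`,
- lets the `LLM_STREAM_LOG` callback push partial output into a `deque`, and
- drains that queue every 0.5 s until the task finishes.

With FastAPI and MetaGPT stripped away, the pattern looks roughly like the sketch below. `stream_while_running`, `fake_act`, and `collect` are hypothetical names, and the `request.is_disconnected()` check is left out.

```python
from __future__ import annotations

import asyncio
from collections import deque
from typing import AsyncIterator, Awaitable, Callable

Emit = Callable[[str], None]


async def stream_while_running(
    work: Callable[[Emit], Awaitable[str]],
    poll_interval: float = 0.5,
) -> AsyncIterator[str]:
    """Yield chunks emitted by `work` while it runs, then yield its final result."""
    chunks: deque[str] = deque()
    # Same trick as app.py: new chunks go on the left and empty chunks are dropped...
    task = asyncio.create_task(work(lambda c: chunks.appendleft(c) if c else None))
    while True:
        if chunks:
            # ...and pop() takes from the right, so chunks come out oldest-first (FIFO).
            yield chunks.pop()
            continue
        if task.done():
            break
        await asyncio.sleep(poll_interval)
    yield await task  # re-raises if `work` failed


async def fake_act(emit: Emit) -> str:
    """Stand-in for role.act(): streams two partial chunks, then returns the full text."""
    for part in ("Hel", "lo"):
        emit(part)
        await asyncio.sleep(0)
    return "Hello"


async def collect() -> list[str]:
    return [c async for c in stream_while_running(fake_act, poll_interval=0.01)]
```

Because `appendleft` and `pop` work on opposite ends, the consumer sees chunks in the order they were produced. An `append`/`popleft` pair would behave the same. An `asyncio.Queue` would avoid the fixed polling delay, at the cost of a more involved shutdown, since the consumer must then wait on both the queue and the task.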

diff --git a/config/config.yaml b/config/config.yaml

index 0e0380555fd32e6c5c28e468e0244d761d6ae413..4d1d77eda13c09919dd423248621d6b569caa8d0 100644

--- a/config/config.yaml

+++ b/config/config.yaml

@@ -1,27 +1,56 @@

# DO NOT MODIFY THIS FILE, create a new key.yaml, define OPENAI_API_KEY.

# The configuration of key.yaml has a higher priority and will not enter git

+#### Project Path Setting

+# WORKSPACE_PATH: "Path for placing output files"

+

#### if OpenAI

-## The official OPENAI_API_BASE is https://api.openai.com/v1

-## If the official OPENAI_API_BASE is not available, we recommend using the [openai-forward](https://github.com/beidongjiedeguang/openai-forward).

-## Or, you can configure OPENAI_PROXY to access official OPENAI_API_BASE.

-OPENAI_API_BASE: "https://api.openai.com/v1"

+## The official OPENAI_BASE_URL is https://api.openai.com/v1

+## If the official OPENAI_BASE_URL is not available, we recommend using the [openai-forward](https://github.com/beidongjiedeguang/openai-forward).

+## Or, you can configure OPENAI_PROXY to access official OPENAI_BASE_URL.

+OPENAI_BASE_URL: "https://api.openai.com/v1"

#OPENAI_PROXY: "http://127.0.0.1:8118"

-#OPENAI_API_KEY: "YOUR_API_KEY"

-OPENAI_API_MODEL: "gpt-4"

-MAX_TOKENS: 1500

+#OPENAI_API_KEY: "YOUR_API_KEY" # set the value to sk-xxx if you host an OpenAI-compatible interface for an open LLM

+OPENAI_API_MODEL: "gpt-4-1106-preview"

+MAX_TOKENS: 4096

RPM: 10

+#### if Spark

+#SPARK_APPID : "YOUR_APPID"

+#SPARK_API_SECRET : "YOUR_APISecret"

+#SPARK_API_KEY : "YOUR_APIKey"

+#DOMAIN : "generalv2"

+#SPARK_URL : "ws://spark-api.xf-yun.com/v2.1/chat"

+

#### if Anthropic

-#Anthropic_API_KEY: "YOUR_API_KEY"

+#ANTHROPIC_API_KEY: "YOUR_API_KEY"

#### if AZURE, check https://github.com/openai/openai-cookbook/blob/main/examples/azure/chat.ipynb

-

#OPENAI_API_TYPE: "azure"

-#OPENAI_API_BASE: "YOUR_AZURE_ENDPOINT"

+#OPENAI_BASE_URL: "YOUR_AZURE_ENDPOINT"

#OPENAI_API_KEY: "YOUR_AZURE_API_KEY"

#OPENAI_API_VERSION: "YOUR_AZURE_API_VERSION"

-#DEPLOYMENT_ID: "YOUR_DEPLOYMENT_ID"

+#DEPLOYMENT_NAME: "YOUR_DEPLOYMENT_NAME"

+

+#### if zhipuai from `https://open.bigmodel.cn`. You can set it here or export API_KEY="YOUR_API_KEY"

+# ZHIPUAI_API_KEY: "YOUR_API_KEY"

+

+#### if Google Gemini from `https://ai.google.dev/` and API_KEY from `https://makersuite.google.com/app/apikey`.

+#### You can set it here or export GOOGLE_API_KEY="YOUR_API_KEY"

+# GEMINI_API_KEY: "YOUR_API_KEY"

+

+#### if using a self-hosted open LLM with an OpenAI-compatible interface

+#OPEN_LLM_API_BASE: "http://127.0.0.1:8000/v1"

+#OPEN_LLM_API_MODEL: "llama2-13b"

+#

+#### if using the Fireworks API

+#FIREWORKS_API_KEY: "YOUR_API_KEY"

+#FIREWORKS_API_BASE: "https://api.fireworks.ai/inference/v1"

+#FIREWORKS_API_MODEL: "YOUR_LLM_MODEL" # example, accounts/fireworks/models/llama-v2-13b-chat

+

+#### if using a self-hosted open LLM via Ollama

+# OLLAMA_API_BASE: http://127.0.0.1:11434/api

+# OLLAMA_API_MODEL: llama2

#### for Search

@@ -57,8 +86,8 @@ RPM: 10

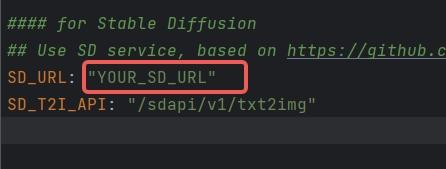

#### for Stable Diffusion

## Use SD service, based on https://github.com/AUTOMATIC1111/stable-diffusion-webui

-SD_URL: "YOUR_SD_URL"

-SD_T2I_API: "/sdapi/v1/txt2img"

+#SD_URL: "YOUR_SD_URL"

+#SD_T2I_API: "/sdapi/v1/txt2img"

#### for Execution

#LONG_TERM_MEMORY: false

@@ -73,14 +102,21 @@ SD_T2I_API: "/sdapi/v1/txt2img"

# CALC_USAGE: false

### for Research

-MODEL_FOR_RESEARCHER_SUMMARY: gpt-3.5-turbo

-MODEL_FOR_RESEARCHER_REPORT: gpt-3.5-turbo-16k

+# MODEL_FOR_RESEARCHER_SUMMARY: gpt-3.5-turbo

+# MODEL_FOR_RESEARCHER_REPORT: gpt-3.5-turbo-16k

+

+### Choose the engine for Mermaid conversion.

+# The default is nodejs; you can change it to playwright, pyppeteer, or ink.

+# MERMAID_ENGINE: nodejs

+

+### Browser path for the pyppeteer engine; supports Chrome, Chromium, and MS Edge

+#PYPPETEER_EXECUTABLE_PATH: "/usr/bin/google-chrome-stable"

+

+### For repairing non-OpenAI LLM output when parsing JSON text (if PROMPT_FORMAT=json).

+### Because non-OpenAI LLMs do not always follow the output instructions, this enables a post-processing

+### repair step on the content extracted from the LLM's raw output. Warning: it improves results but does not fix all cases.

+# REPAIR_LLM_OUTPUT: false

-### Meta Models

-#METAGPT_TEXT_TO_IMAGE_MODEL: MODEL_URL

+# PROMPT_FORMAT: json #json or markdown

-### S3 config

-S3:

- access_key: "YOUR_S3_ACCESS_KEY"

- secret_key: "YOUR_S3_SECRET_KEY"

- endpoint_url: "YOUR_S3_ENDPOINT_URL"

+DISABLE_LLM_PROVIDER_CHECK: true
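The `config.yaml` changes above rename two keys:

- `OPENAI_API_BASE` becomes `OPENAI_BASE_URL`
- `DEPLOYMENT_ID` becomes `DEPLOYMENT_NAME`

Clients that still send the old names are covered by the aliasing loop in `set_context` in `app.py`. The sketch below pulls that loop out into a standalone function so it can be exercised without `CONFIG`; the name `upgrade_legacy_keys` is hypothetical.

```python
from __future__ import annotations

from typing import Any, MutableMapping

# Old key -> new key, matching the renames in this change.
LEGACY_KEY_ALIASES = (
    ("DEPLOYMENT_ID", "DEPLOYMENT_NAME"),
    ("OPENAI_API_BASE", "OPENAI_BASE_URL"),
)


def upgrade_legacy_keys(context: MutableMapping[str, Any]) -> MutableMapping[str, Any]:
    """Copy old-style keys to their new names in place, never overriding an explicit new key."""
    for old, new in LEGACY_KEY_ALIASES:
        if old in context and new not in context:
            context[new] = context[old]
    return context
```

Old keys are left in place, so code that still reads `OPENAI_API_BASE` keeps working. An explicitly supplied new key always wins.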

diff --git a/docs/FAQ-EN.md b/docs/FAQ-EN.md

deleted file mode 100644

index b5ae9184b39cbd61e409a9ab6ca02efd00993a2b..0000000000000000000000000000000000000000

--- a/docs/FAQ-EN.md

+++ /dev/null

@@ -1,181 +0,0 @@

-Our vision is to [extend human life](https://github.com/geekan/HowToLiveLonger) and [reduce working hours](https://github.com/geekan/MetaGPT/).

-

-1. ### Convenient Link for Sharing this Document:

-

-```

-- MetaGPT-Index/FAQ https://deepwisdom.feishu.cn/wiki/MsGnwQBjiif9c3koSJNcYaoSnu4

-```

-

-2. ### Link

-

-

-

-1. Code: https://github.com/geekan/MetaGPT

-

-1. Roadmap: https://github.com/geekan/MetaGPT/blob/main/docs/ROADMAP.md

-

-1. EN

-

- 1. Demo Video: [MetaGPT: Multi-Agent AI Programming Framework](https://www.youtube.com/watch?v=8RNzxZBTW8M)

- 1. Tutorial: [MetaGPT: Deploy POWERFUL Autonomous Ai Agents BETTER Than SUPERAGI!](https://www.youtube.com/watch?v=q16Gi9pTG_M&t=659s)

-

-1. CN

-

- 1. Demo Video: [MetaGPT: Build Your Virtual Company with One Line of Code (bilibili)](https://www.bilibili.com/video/BV1NP411C7GW/?spm_id_from=333.999.0.0&vd_source=735773c218b47da1b4bd1b98a33c5c77)

- 1. Tutorial: [Write the game Flappy Bird with one prompt: MetaGPT, 10x stronger than AutoGPT, the AI project closest to AGI](https://youtu.be/Bp95b8yIH5c)

- 1. Author's thoughts video (CN): [In-depth analysis by the MetaGPT author, livestream replay (bilibili)](https://www.bilibili.com/video/BV1Ru411V7XL/?spm_id_from=333.337.search-card.all.click)

-

-

-

-3. ### How to become a contributor?

-

-

-

-1. Choose a task from the Roadmap (or you can propose one). By submitting a PR, you can become a contributor and join the dev team.

-1. Current contributors come from backgrounds including: ByteDance AI Lab/DingDong/Didi/Xiaohongshu, Tencent/Baidu/MSRA/TikTok/BloomGPT Infra/Bilibili/CUHK/HKUST/CMU/UCB

-

-

-

-4. ### Chief Evangelist (Monthly Rotation)

-

-MetaGPT Community - The position of Chief Evangelist rotates on a monthly basis. The primary responsibilities include:

-

-1. Maintaining community FAQ documents, announcements, Github resources/READMEs.

-1. Responding to, answering, and distributing community questions within an average of 30 minutes, including on platforms like Github Issues, Discord and WeChat.

-1. Upholding a community atmosphere that is enthusiastic, genuine, and friendly.

-1. Encouraging everyone to become contributors and participate in projects that are closely related to achieving AGI (Artificial General Intelligence).

-1. (Optional) Organizing small-scale events, such as hackathons.

-

-

-

-5. ### FAQ

-

-

-

-1. Experience with the generated repo code:

-

- 1. https://github.com/geekan/MetaGPT/releases/tag/v0.1.0

-

-1. Code truncation/ Parsing failure:

-

- 1. Check if it's due to exceeding length. Consider using the gpt-3.5-turbo-16k or other long token versions.

-

-1. Success rate:

-

- 1. There hasn't been a quantitative analysis yet, but the success rate of code generated by GPT-4 is significantly higher than that of gpt-3.5-turbo.

-

-1. Support for incremental, differential updates (if you wish to continue a half-done task):

-

- 1. Several prerequisite tasks are listed on the ROADMAP.

-

-1. Can existing code be loaded?

-

- 1. It's not on the ROADMAP yet, but there are plans in place. It just requires some time.

-

-1. Support for multiple programming languages and natural languages?

-

- 1. It's listed on ROADMAP.

-

-1. Want to join the contributor team? How to proceed?

-

- 1. Merging a PR will get you into the contributor's team. The main ongoing tasks are all listed on the ROADMAP.

-

-1. PRD stuck / unable to access/ connection interrupted

-

- 1. The official OPENAI_API_BASE address is `https://api.openai.com/v1`

- 1. If the official OPENAI_API_BASE address is inaccessible in your environment (this can be verified with curl), we recommend configuring a reverse-proxy OPENAI_API_BASE provided by projects such as openai-forward. For instance, `OPENAI_API_BASE: "https://api.openai-forward.com/v1"`

- 1. If the official OPENAI_API_BASE address is inaccessible in your environment (again, verifiable via curl), another option is to configure the OPENAI_PROXY parameter. This way, you can access the official OPENAI_API_BASE via a local proxy. If you don't need to access via a proxy, please do not enable this configuration; if accessing through a proxy is required, modify it to the correct proxy address. Note that when OPENAI_PROXY is enabled, don't set OPENAI_API_BASE.

- 1. Note: OpenAI's default API base URL ends with `/v1`. An example of a correct configuration is: `OPENAI_API_BASE: "https://api.openai.com/v1"`

-

-1. Absolutely! How can I assist you today?

-

- 1. Did you use Chi or a similar service? These services are prone to errors, and it seems that the error rate is higher when consuming 3.5k-4k tokens in GPT-4

-

-1. What does Max token mean?

-

- 1. It's a configuration for OpenAI's maximum response length. If the response exceeds the max token, it will be truncated.

-

-1. How to change the investment amount?

-

- 1. You can view all commands by typing `python startup.py --help`

-

-1. Which version of Python is more stable?

-

- 1. python3.9 / python3.10

-

-1. Can't use GPT-4, getting the error "The model gpt-4 does not exist."

-

- 1. OpenAI's official requirement: You can use GPT-4 only after spending $1 on OpenAI.

- 1. Tip: Run some data with gpt-3.5-turbo (consume the free quota and $1), and then you should be able to use gpt-4.

-

-1. Can games whose code has never been seen before be written?

-

- 1. Refer to the README. The recommendation system of Toutiao is one of the most complex systems in the world currently. Although it's not on GitHub, many discussions about it exist online. If it can visualize these, it suggests it can also summarize these discussions and convert them into code. The prompt would be something like "write a recommendation system similar to Toutiao". Note: this was approached in earlier versions of the software. The SOP of those versions was different; the current one adopts Elon Musk's five-step work method, emphasizing trimming down requirements as much as possible.

-

-1. Under what circumstances would there typically be errors?

-

- 1. More than 500 lines of code: some function implementations may be left blank.

- 1. When using a database, it often gets the implementation wrong — since the SQL database initialization process is usually not in the code.

- 1. With more lines of code, there's a higher chance of hallucinations, leading to calls to non-existent APIs.

-

-1. Instructions for using SD Skills/UI Role:

-

- 1. Currently, there is a test script located in /tests/metagpt/roles. The file ui_role provides the corresponding code implementation. For testing, you can refer to the test_ui in the same directory.

-

- 1. The UI role takes over from the product manager role, extending the "UI Design draft" output provided by the product manager role. The UI role implements the UIDesign action. In its run, UIDesign processes the relevant context and outputs the UI based on the configured template. The output from the UI role includes:

-

- 1. UI Design Description: Describes the content to be designed and the design objectives.

- 1. Selected Elements: Describes the elements in the design that need to be illustrated.

- 1. HTML Layout: Outputs the HTML code for the page.

- 1. CSS Styles (styles.css): Outputs the CSS code for the page.

-

- 1. Currently, the SD skill is a tool invoked by UIDesign. It instantiates the SDEngine, with specific code found in metagpt/tools/sd_engine.

-

- 1. Configuration instructions for SD Skills: The SD interface is currently deployed based on https://github.com/AUTOMATIC1111/stable-diffusion-webui. For environment configuration and model downloads, please refer to that GitHub repository. To start an SD service that supports API calls, run the command below with the `--nowebui` parameter:

-

- 1. > python webui.py --enable-insecure-extension-access --port xxx --no-gradio-queue --nowebui

- 1. Once it runs without errors, the interface will be accessible after approximately 1 minute when the model finishes loading.

- 1. Configure SD_URL and SD_T2I_API in the config.yaml/key.yaml files.

- 1.

- 1. SD_URL is the deployed server/machine IP, and Port is the specified port above, defaulting to 7860.

- 1. > SD_URL: IP:Port

-

-1. An error occurred during installation: "Another program is using this file...egg".

-

- 1. Delete the file and try again.

- 1. Or manually execute `pip install -r requirements.txt`.

-

-1. The origin of the name MetaGPT?

-

- 1. The name was derived after iterating with GPT-4 over a dozen rounds. GPT-4 scored and suggested it.

-

-1. Is there a more step-by-step installation tutorial?

-

- 1. YouTube (CN): [Write the game Flappy Bird with one prompt: MetaGPT, 10x stronger than AutoGPT, the AI project closest to AGI = a software company's product manager + programmer](https://youtu.be/Bp95b8yIH5c)

- 1. YouTube (EN): https://www.youtube.com/watch?v=q16Gi9pTG_M&t=659s

-

-1. openai.error.RateLimitError: You exceeded your current quota, please check your plan and billing details

-

- 1. If you haven't exhausted your free quota, set RPM to 3 or lower in the settings.

- 1. If your free quota is used up, consider adding funds to your account.

-

-1. What does "borg" mean in n_borg?

-

- 1. https://en.wikipedia.org/wiki/Borg

- 1. The Borg civilization operates based on a hive or collective mentality, known as "the Collective." Every Borg individual is connected to the collective via a sophisticated subspace network, ensuring continuous oversight and guidance for every member. This collective consciousness allows them to not only "share the same thoughts" but also to adapt swiftly to new strategies. While individual members of the collective rarely communicate, the collective "voice" sometimes transmits aboard ships.

-

-1. How to use the Claude API?

-

- 1. The full implementation of the Claude API is not provided in the current code.

- 1. You can use the Claude API through third-party API conversion projects like: https://github.com/jtsang4/claude-to-chatgpt

-

-1. Is Llama2 supported?

-

- 1. On the day Llama2 was released, some of the community members began experiments and found that output can be generated based on MetaGPT's structure. However, Llama2's context is too short to generate a complete project. Before regularly using Llama2, it's necessary to expand the context window to at least 8k. If anyone has good recommendations for expansion models or methods, please leave a comment.

-

-1. `mermaid-cli getElementsByTagName SyntaxError: Unexpected token '.'`

-

- 1. Upgrade node to version 14.x or above:

-

- 1. `npm install -g n`

- 1. `n stable` to install the stable version of node(v18.x)
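The `RateLimitError` answer above recommends lowering `RPM` (requests per minute). The following is a minimal client-side limiter sketch that spaces calls at least `60 / RPM` seconds apart. `MinIntervalLimiter` is a hypothetical helper, not MetaGPT's own implementation. The clock and sleep functions are injectable, so the behavior can be checked without waiting.

```python
from __future__ import annotations

import time
from typing import Callable


class MinIntervalLimiter:
    """Allow at most `rpm` calls per minute by spacing calls 60 / rpm seconds apart."""

    def __init__(
        self,
        rpm: float,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        if rpm <= 0:
            raise ValueError("rpm must be positive")
        self.interval = 60.0 / rpm
        self._clock = clock
        self._sleep = sleep
        self._next_allowed = float("-inf")  # the first call never waits

    def wait(self) -> float:
        """Block until the next call may proceed; return the number of seconds slept."""
        now = self._clock()
        delay = max(0.0, self._next_allowed - now)
        if delay:
            self._sleep(delay)
        # This call happens at max(now, next_allowed); the following one must wait an interval.
        self._next_allowed = max(now, self._next_allowed) + self.interval
        return delay
```

With `RPM: 3`, for example, `interval` is 20 s, so a burst of three calls takes about 40 s in total.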

diff --git a/docs/README_CN.md b/docs/README_CN.md

deleted file mode 100644

index 2180eb51874ceaa3215331ade42fe8139f130f28..0000000000000000000000000000000000000000

--- a/docs/README_CN.md

+++ /dev/null

@@ -1,201 +0,0 @@

-# MetaGPT: The Multi-Agent Framework

-

-

-

-

-

-Let GPTs form a software company and collaborate on more complex tasks

-

-

-

-

-

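-1. MetaGPT takes **a one-line requirement from the boss** as input and outputs **user stories / competitive analysis / requirements / data structures / APIs / documents, etc.**

-2. Internally, MetaGPT includes **product managers / architects / project managers / engineers**. It provides the entire process of a **software company** along with carefully orchestrated SOPs

- 1. `Code = SOP(Team)` is the core philosophy. We materialize SOPs and apply them to teams composed of LLMs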

-

-

-

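-Software Company Multi-Role Schematic (Gradually Being Implemented)

-

-## Examples (all generated by GPT-4)

-

-For example, if you type `python startup.py "写个类似今日头条的推荐系统"` ("Write a recommendation system similar to Toutiao") and press Enter, you will get a series of outputs, one of which is the data structure and API design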

-

-

-

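-It costs approximately **$0.2** (in GPT-4 API fees) to generate one example with analysis and design, and around $2.0 for a full project

-

-## Installation

-

-### Traditional Installation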

-

-```bash

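-# Step 1: Ensure that NPM is installed on your system, then use npm to install mermaid-js

-npm --version

-sudo npm install -g @mermaid-js/mermaid-cli

-

-# Step 2: Ensure that Python 3.9+ is installed on your system. You can check this with the following command:

-python --version

-

-# Step 3: Clone the repository to your local machine and install it.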

-git clone https://github.com/geekan/metagpt

-cd metagpt

-python setup.py install

-```

-

-### Installation by Docker

-

-```bash

-# Step 1: Download the official metagpt image and prepare config.yaml

-docker pull metagpt/metagpt:v0.3

-mkdir -p /opt/metagpt/{config,workspace}

-docker run --rm metagpt/metagpt:v0.3 cat /app/metagpt/config/config.yaml > /opt/metagpt/config/config.yaml

-vim /opt/metagpt/config/config.yaml # Modify the config

-

-# Step 2: Run the metagpt demo in a container

-docker run --rm \

- --privileged \

- -v /opt/metagpt/config:/app/metagpt/config \

- -v /opt/metagpt/workspace:/app/metagpt/workspace \

- metagpt/metagpt:v0.3 \

- python startup.py "Write a cli snake game"

-

-# You can also start a container and execute commands in it

-docker run --name metagpt -d \

- --privileged \

- -v /opt/metagpt/config:/app/metagpt/config \

- -v /opt/metagpt/workspace:/app/metagpt/workspace \

- metagpt/metagpt:v0.3

-

-docker exec -it metagpt /bin/bash

-$ python startup.py "Write a cli snake game"

-```

-

-The `docker run ...` command does the following:

-

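-- Runs in privileged mode so that it has permission to run the browser

-- Maps the host directory `/opt/metagpt/config` to the container directory `/app/metagpt/config`

-- Maps the host directory `/opt/metagpt/workspace` to the container directory `/app/metagpt/workspace`

-- Executes the demo command `python startup.py "Write a cli snake game"`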

-

-### Build the Image Yourself

-

-```bash

-# You can also build the metagpt image yourself

-git clone https://github.com/geekan/MetaGPT.git

-cd MetaGPT && docker build -t metagpt:v0.3 .

-```

-

-## Configuration

-

-- Configure your `OPENAI_API_KEY` in any of `config/key.yaml / config/config.yaml / env`

-- Priority order: `config/key.yaml > config/config.yaml > env`

-

-```bash

-# Copy the configuration file and make the necessary modifications

-cp config/config.yaml config/key.yaml

-```

-

-| Variable Name | config/key.yaml | env |

-|--------------------------------------------|-------------------------------------------|--------------------------------|

-| OPENAI_API_KEY # Replace with your own key | OPENAI_API_KEY: "sk-..." | export OPENAI_API_KEY="sk-..." |

-| OPENAI_API_BASE # Optional | OPENAI_API_BASE: "https:///v1" | export OPENAI_API_BASE="https:///v1" |

-

-## Example: Start a Startup

-

-```shell

-python startup.py "Write a cli snake game"

-# Enabling code review mode costs more money, but improves code quality and the success rate

-python startup.py "Write a cli snake game" --code_review True

-```

-

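-After running the script, you can find your new project in the `workspace/` directory.

-### Preference for Platforms or Tools

-When stating your requirements, you can specify which platform or tool you want to use.

-For example: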

-

-```shell

-python startup.py "Write a cli snake game based on pygame"

-```

-

-### Usage

-

-```

-NAME

- startup.py - We are an AI software startup. By investing in us, you are empowering a future filled with limitless possibilities.

-

-SYNOPSIS

- startup.py IDEA

-

-DESCRIPTION

- We are an AI software startup. By investing in us, you are empowering a future filled with limitless possibilities.

-

-POSITIONAL ARGUMENTS

- IDEA

- Type: str

- Your innovative idea, such as "Write a cli snake game."

-

-FLAGS

- --investment=INVESTMENT

- Type: float

- Default: 3.0

- As an investor, you have the opportunity to contribute a certain dollar amount to this AI company.

- --n_round=N_ROUND

- Type: int

- Default: 5

-

-NOTES

- You can also use flags syntax for POSITIONAL ARGUMENTS

-```

-

-### Code Walkthrough

-

-```python

-import asyncio

-

-from metagpt.software_company import SoftwareCompany

-from metagpt.roles import ProjectManager, ProductManager, Architect, Engineer

-

-async def startup(idea: str, investment: float = 3.0, n_round: int = 5):

-    """Run a startup. Be a boss."""

-    company = SoftwareCompany()

-    company.hire([ProductManager(), Architect(), ProjectManager(), Engineer()])

-    company.invest(investment)

-    company.start_project(idea)

-    await company.run(n_round=n_round)

-

-if __name__ == "__main__":

-    asyncio.run(startup("Write a cli snake game"))

-```

-

-You can check `examples` for details on how to use a single role (with a knowledge base) and how to use only the LLM.

-

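-## QuickStart

-Installing and configuring a local environment is difficult for some users. The following tutorials let you quickly experience what MetaGPT can do.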

-

-- [MetaGPT QuickStart](https://deepwisdom.feishu.cn/wiki/Q8ycw6J9tiNXdHk66MRcIN8Pnlg)

-

-## Contact Information

-

-If you have any questions or feedback about this project, please feel free to contact us. We highly appreciate your suggestions!

-

-- **Email:** alexanderwu@fuzhi.ai

-- **GitHub Issues:** For more technical questions, you can also create a new issue in our [GitHub repository](https://github.com/geekan/metagpt/issues).

-

-We will respond to all questions within 2-3 business days.

-

-## Demo

-

-https://github.com/geekan/MetaGPT/assets/2707039/5e8c1062-8c35-440f-bb20-2b0320f8d27d

-

-## Join Us

-

-📢 Join our Discord channel!

-https://discord.gg/ZRHeExS6xv

-

-Looking forward to seeing you there! 🎉
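The configuration section of the deleted README states the lookup priority `config/key.yaml > config/config.yaml > env`. The following is a minimal sketch of that documented order, assuming both YAML files have already been loaded into plain dictionaries. `resolve_setting` is a hypothetical helper, not MetaGPT's own config loader. Treating an empty string as "unset" is also an assumption of this sketch.

```python
import os
from typing import Any, Mapping, Optional


def resolve_setting(name: str,
                    key_yaml: Mapping[str, Any],
                    config_yaml: Mapping[str, Any],
                    env: Optional[Mapping[str, str]] = None) -> Any:
    """Look up `name` using the documented priority:
    config/key.yaml > config/config.yaml > environment variables.

    Empty values are treated as unset (an assumption of this sketch).
    """
    env = os.environ if env is None else env
    # Sources are checked from highest to lowest priority; the first hit wins.
    for source in (key_yaml, config_yaml, env):
        value = source.get(name)
        if value not in (None, ""):
            return value
    raise KeyError(f"{name} is not set in key.yaml, config.yaml, or the environment")
```

In practice the two mappings could come from PyYAML's `yaml.safe_load` on `config/key.yaml` and `config/config.yaml`. Because `safe_load` returns `None` for an empty file, and `key.yaml` may not exist yet, `or {}` is a sensible guard when loading.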
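The walkthrough code in the deleted README hires roles, sets an `investment` (described in the usage text as a dollar amount contributed to the company), and runs for `n_round` rounds. The following toy model illustrates how a dollar budget and a round limit can bound a run together. `ToyCompany`, `BudgetExceeded`, and the fixed per-round cost are hypothetical. They do not reproduce MetaGPT's `SoftwareCompany` internals.

```python
import asyncio
from dataclasses import dataclass, field
from typing import List


class BudgetExceeded(Exception):
    """Raised when simulated spending has used up the invested amount."""


@dataclass
class ToyCompany:
    """Hypothetical stand-in: runs up to n_round rounds, stopping when the budget is spent."""
    cost_per_round: float              # assumed fixed LLM cost of one round, in USD
    budget: float = 0.0                # set by invest()
    total_cost: float = 0.0
    log: List[str] = field(default_factory=list)

    def invest(self, amount: float) -> None:
        self.budget = amount

    async def _run_one_round(self, idea: str) -> None:
        await asyncio.sleep(0)         # placeholder for the roles' asynchronous LLM calls
        self.total_cost += self.cost_per_round
        self.log.append(f"round {len(self.log) + 1}: {idea}")

    async def run(self, idea: str, n_round: int = 5) -> None:
        for _ in range(n_round):
            # Check the balance before each round so that no round starts once the budget is used up.
            if self.total_cost >= self.budget:
                raise BudgetExceeded(f"spent ${self.total_cost:.2f} of ${self.budget:.2f}")
            await self._run_one_round(idea)


if __name__ == "__main__":
    company = ToyCompany(cost_per_round=0.5)
    company.invest(3.0)
    asyncio.run(company.run("Write a cli snake game", n_round=5))
    print(company.log)
```

With a $3.00 budget and $0.50 per round, all five rounds complete. With a $2.00 budget and $1.00 per round, the toy stops before the third round.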

diff --git a/docs/README_JA.md b/docs/README_JA.md

deleted file mode 100644

index 57f6487a783c81f0cd0bd17f263d9ce9ae984401..0000000000000000000000000000000000000000

--- a/docs/README_JA.md

+++ /dev/null

@@ -1,210 +0,0 @@

-# MetaGPT: The Multi-Agent Framework

-

-

-

-

-

-Assign different roles to GPTs to form a collaborative software entity for complex tasks.

-

-

-

-

-

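-1. MetaGPT takes a **one-line requirement** as input and outputs **user stories / competitive analysis / requirements / data structures / APIs / documents, etc.**

-2. Internally, MetaGPT includes **product managers, architects, project managers, and engineers**. It provides the **entire process of a software company along with carefully orchestrated SOPs.**

- 1. `Code = SOP(Team)` is the core philosophy. We materialize SOPs and apply them to teams composed of LLMs.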

-

-

-

-Software company multi-role schematic (gradually being implemented)

-

-## Examples (fully generated by GPT-4)

-

-For example, if you type `python startup.py "Design a RecSys like Toutiao"`, you will get many outputs.

-

-

-

-It costs around **$0.2** (in GPT-4 API fees) to generate one example with analysis and design, and around **$2.0** for a full project.

-

-## Installation

-

-### Traditional Installation

-

-```bash

-# Step 1: Ensure that NPM is installed on your system. Then install mermaid-js.

-npm --version

-sudo npm install -g @mermaid-js/mermaid-cli

-

-# Step 2: Ensure that Python 3.9+ is installed on your system. You can check this with:

-python --version

-

-# Step 3: Clone the repository to your local machine and install it.

-git clone https://github.com/geekan/metagpt

-cd metagpt

-python setup.py install

-```

-

-**Note:**

-

-- If you already have Chrome, Chromium, or MS Edge installed, you can skip downloading Chromium by setting the environment variable

-`PUPPETEER_SKIP_CHROMIUM_DOWNLOAD` to `true`.

-

-- Some people are [having trouble](https://github.com/mermaidjs/mermaid.cli/issues/15) installing this tool globally. Installing it locally is an alternative solution:

-

- ```bash

- npm install @mermaid-js/mermaid-cli

- ```

-

-- Don't forget to add the mmdc configuration to config.yml

-

- ```yml

- PUPPETEER_CONFIG: "./config/puppeteer-config.json"

- MMDC: "./node_modules/.bin/mmdc"

- ```

-

-### Docker によるインストール

-

-```bash

-# Step 1: Download the official metagpt image and prepare config.yaml

-docker pull metagpt/metagpt:v0.3.1

-mkdir -p /opt/metagpt/{config,workspace}

-docker run --rm metagpt/metagpt:v0.3.1 cat /app/metagpt/config/config.yaml > /opt/metagpt/config/key.yaml

-vim /opt/metagpt/config/key.yaml # Modify the config

-

-# Step 2: Run the metagpt demo in a container

-docker run --rm \

- --privileged \

- -v /opt/metagpt/config/key.yaml:/app/metagpt/config/key.yaml \

- -v /opt/metagpt/workspace:/app/metagpt/workspace \

- metagpt/metagpt:v0.3.1 \

- python startup.py "Write a cli snake game"

-

-# You can also start a container and execute commands in it

-docker run --name metagpt -d \

- --privileged \

- -v /opt/metagpt/config/key.yaml:/app/metagpt/config/key.yaml \

- -v /opt/metagpt/workspace:/app/metagpt/workspace \

- metagpt/metagpt:v0.3.1

-

-docker exec -it metagpt /bin/bash

-$ python startup.py "Write a cli snake game"

-```

-

-The `docker run ...` command does the following:

-

-- Runs in privileged mode so that it has permission to run the browser

-- Maps the host file `/opt/metagpt/config/key.yaml` to the container file `/app/metagpt/config/key.yaml`

-- Maps the host directory `/opt/metagpt/workspace` to the container directory `/app/metagpt/workspace`

-- Executes the demo command `python startup.py "Write a cli snake game"`

-

-### Build the Image Yourself

-

-```bash

-# You can also build the metagpt image yourself.

-git clone https://github.com/geekan/MetaGPT.git

-cd MetaGPT && docker build -t metagpt:custom .

-```

-

-## Configuration

-

-- Configure your `OPENAI_API_KEY` in any of `config/key.yaml / config/config.yaml / env`.

-- Priority order: `config/key.yaml > config/config.yaml > env`.

-

-```bash

-# Copy the configuration file and make the necessary modifications.

-cp config/config.yaml config/key.yaml

-```

-

-| Variable Name | config/key.yaml | env |

-| ------------------------------------------ | ----------------------------------------- | ----------------------------------------------- |

-| OPENAI_API_KEY # Replace with your own key | OPENAI_API_KEY: "sk-..." | export OPENAI_API_KEY="sk-..." |

-| OPENAI_API_BASE # Optional | OPENAI_API_BASE: "https:///v1" | export OPENAI_API_BASE="https:///v1" |

-

-## Tutorial: Initiating a Startup

-

-```shell

-python startup.py "Write a cli snake game"

-# Enabling code review costs more, but lets you opt for better code quality.

-python startup.py "Write a cli snake game" --code_review True

-```

-

-After running the script, you can find your new project in the `workspace/` directory.

-### Preference for Platforms or Tools

-

-When stating your requirements, you can specify which platform or tool you want to use.

-```shell

-python startup.py "Write a cli snake game based on pygame"

-```

-### Usage

-

-```

-NAME

- startup.py - We are an AI software startup. By investing in us, you are empowering a future filled with limitless possibilities.

-

-SYNOPSIS

- startup.py IDEA

-

-DESCRIPTION

- We are an AI software startup. By investing in us, you are empowering a future filled with limitless possibilities.

-

-POSITIONAL ARGUMENTS

- IDEA

- Type: str

- Your innovative idea, such as "Create a snake game."

-

-FLAGS

- --investment=INVESTMENT

- Type: float

- Default: 3.0

- As an investor, you have the opportunity to contribute a certain dollar amount to this AI company.

- --n_round=N_ROUND

- Type: int

- Default: 5

-

-NOTES

- You can also use flags syntax for POSITIONAL ARGUMENTS

-```

-

-### Code Walkthrough

-

-```python

-import asyncio

-

-from metagpt.software_company import SoftwareCompany

-from metagpt.roles import ProjectManager, ProductManager, Architect, Engineer

-

-async def startup(idea: str, investment: float = 3.0, n_round: int = 5):

-    """Run a startup. Be a boss."""

-    company = SoftwareCompany()

-    company.hire([ProductManager(), Architect(), ProjectManager(), Engineer()])

-    company.invest(investment)

-    company.start_project(idea)

-    await company.run(n_round=n_round)

-

-if __name__ == "__main__":

-    asyncio.run(startup("Write a cli snake game"))

-```

-

-You can check `examples` for details on how to use a single role (with a knowledge base) and how to use only the LLM.

-

-## QuickStart

-Installing and configuring a local environment can be difficult for some users. The following tutorial lets you quickly experience what MetaGPT can do.

-

-- [MetaGPT QuickStart](https://deepwisdom.feishu.cn/wiki/Q8ycw6J9tiNXdHk66MRcIN8Pnlg)

-

-## Contact Information

-

-If you have any questions or feedback about this project, please feel free to contact us. We look forward to hearing from you!

-

-- **Email:** alexanderwu@fuzhi.ai

-- **GitHub Issues:** For more technical inquiries, you can also create a new issue in our [GitHub repository](https://github.com/geekan/metagpt/issues).

-

-We will respond to all questions within 2-3 business days.

-

-## Demo

-

-https://github.com/geekan/MetaGPT/assets/2707039/5e8c1062-8c35-440f-bb20-2b0320f8d27d
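The deleted Japanese README's local-install notes imply a fallback order for locating the mermaid-cli executable. They describe installing `@mermaid-js/mermaid-cli` without `-g` and pointing `MMDC` at `./node_modules/.bin/mmdc`. The following is a minimal sketch of that lookup, assuming a POSIX-style executable bit. The search order and the helper names `find_mmdc` / `is_executable` are hypothetical, not MetaGPT's code.

```python
import os
import shutil
from typing import Optional

# Project-local install path used in the config.yml example above.
LOCAL_MMDC = os.path.join(".", "node_modules", ".bin", "mmdc")


def is_executable(path: Optional[str]) -> bool:
    """True if `path` names an existing file the current user may execute."""
    return bool(path) and os.path.isfile(path) and os.access(path, os.X_OK)


def find_mmdc(configured: Optional[str] = None) -> Optional[str]:
    """Pick a mermaid-cli executable.

    Assumed search order for this sketch: the configured MMDC value, then a local
    `npm install @mermaid-js/mermaid-cli`, then a global `mmdc` on PATH.
    Returns None when none of them is available.
    """
    for candidate in (configured, LOCAL_MMDC):
        if is_executable(candidate):
            return candidate
    return shutil.which("mmdc")
```

Preferring the explicitly configured path keeps behavior predictable when both a local and a global install exist.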

diff --git a/docs/ROADMAP.md b/docs/ROADMAP.md

deleted file mode 100644

index 005a59ab2bd16d5153d2e2d1cca116c2db40d038..0000000000000000000000000000000000000000

--- a/docs/ROADMAP.md

+++ /dev/null

@@ -1,84 +0,0 @@

-

-## Roadmap

-

-### Long-term Objective

-

-Enable MetaGPT to self-evolve, accomplishing self-training, fine-tuning, optimization, utilization, and updates.

-

-### Short-term Objective

-

-1. Become the multi-agent framework with the highest ROI.

-2. Support fully automatic implementation of medium-sized projects (around 2000 lines of code).

-3. Implement most identified tasks, reaching version 0.5.

-

-### Tasks

-

-To reach v0.5, approximately 70% of the following tasks need to be completed.

-

-1. Usability

- 1. Release a v0.01 pip package to try to solve issues such as npm installation (though it may not fully succeed)

- 2. Support saving and restoring the entire state of a software company

- 3. Support human confirmation and modification during the process

- 4. Support process caching: consider carefully whether to add a server-side caching mechanism

- 5. Use stricter system prompts to resolve occasional failures to follow instructions under the current prompts, which cause code-parsing errors

- 6. Write documentation describing the current features and usage at all levels

- 7. ~~Support Docker~~

-2. Features

- 1. Support a more standard and stable parser (requires analyzing which output formats the current LLM handles best)

- 2. ~~Establish a separate output queue, differentiated from the message queue~~

- 3. Attempt to atomize all role work, but this may significantly increase token overhead

- 4. Complete the design and implementation of module breakdown

- 5. Support various modes of memory: clearly distinguish between long-term and short-term memory

- 6. Improve the test role and support necessary interactions with humans

- 7. Provide full mode instead of the current fast mode, allowing natural communication between roles

- 8. Implement SkillManager and the process of incremental Skill learning

- 9. Automatically obtain the RPM (requests-per-minute) limit by calling the corresponding OpenAI page and apply it, so that each key does not need to be configured manually

-3. Strategies

- 1. Support ReAct strategy

- 2. Support CoT strategy

- 3. Support ToT strategy

- 4. Support Reflection strategy

-4. Actions

- 1. Implementation: Search

- 2. Implementation: Knowledge search, supporting 10+ data formats

- 3. Implementation: Data EDA

- 4. Implementation: Review

- 5. Implementation: Add Document

- 6. Implementation: Delete Document

- 7. Implementation: Self-training

- 8. Implementation: DebugError

- 9. Implementation: Generate reliable unit tests based on YAPI

- 10. Implementation: Self-evaluation

- 11. Implementation: AI Invocation

- 12. Implementation: Learning and using third-party standard libraries

- 13. Implementation: Data collection

- 14. Implementation: AI training

- 15. Implementation: Run code

- 16. Implementation: Web access

-5. Plugins: Compatibility with plugin system

-6. Tools

- 1. ~~Support SERPER api~~

- 2. ~~Support Selenium apis~~

- 3. ~~Support Playwright apis~~

-7. Roles

- 1. Perfect the action pool/skill pool for each role

- 2. Red Book blogger

- 3. E-commerce seller

- 4. Data analyst

- 5. News observer

- 6. Institutional researcher

-8. Evaluation

- 1. Support an evaluation on a game dataset

- 2. Reproduce papers, implement full skill acquisition for a single game role, achieving SOTA results

- 3. Support an evaluation on a math dataset

- 4. Reproduce papers, achieving SOTA results for current mathematical problem solving process

-9. LLM

- 1. Support the underlying Claude API

- 2. ~~Support Azure asynchronous API~~

- 3. Support streaming version of all APIs

- 4. ~~Make gpt-3.5-turbo available (HARD)~~

-10. Other

- 1. Clean up existing unused code

- 2. Unify all code styles and establish contribution standards

- 3. Multi-language support

- 4. Multi-programming-language support

diff --git a/docs/resources/20230811-214014.jpg b/docs/resources/20230811-214014.jpg

deleted file mode 100644

index 68a2d5f4752659818073a8981888888c580bfcff..0000000000000000000000000000000000000000

--- a/docs/resources/20230811-214014.jpg

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:846dd5e2fef8d99fbec5d27346876eb12f9de99a7d6025c570ea27536b80cc39

-size 59081
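Many deleted image and SVG entries in this diff show only three text lines. This is because those files were stored with Git LFS: the repository tracked a small pointer file (spec version, object ID, byte size) instead of the binary itself. The following is a minimal sketch for reading such a pointer. It uses the pointer removed for `docs/resources/20230811-214014.jpg` as input. `parse_lfs_pointer` is a hypothetical helper, not part of the Git LFS tooling.

```python
from typing import Dict, Union

LFS_SPEC_V1 = "https://git-lfs.github.com/spec/v1"


def parse_lfs_pointer(text: str) -> Dict[str, Union[str, int]]:
    """Parse a Git LFS v1 pointer (one "key value" pair per line) into its fields."""
    fields: Dict[str, str] = {}
    for line in text.strip().splitlines():
        key, _, value = line.strip().partition(" ")
        fields[key] = value
    if fields.get("version") != LFS_SPEC_V1:
        raise ValueError("not a Git LFS v1 pointer file")
    # The oid is written as "<hash algorithm>:<hex digest>".
    algorithm, _, digest = fields["oid"].partition(":")
    return {"oid_algorithm": algorithm, "oid": digest, "size": int(fields["size"])}


# Pointer text removed for docs/resources/20230811-214014.jpg in this diff.
pointer = """version https://git-lfs.github.com/spec/v1
oid sha256:846dd5e2fef8d99fbec5d27346876eb12f9de99a7d6025c570ea27536b80cc39
size 59081
"""

if __name__ == "__main__":
    print(parse_lfs_pointer(pointer))
```

The `size` field is the byte size of the real stored object (59,081 bytes here), not the size of the pointer text.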

diff --git a/docs/resources/MetaGPT-WeChat-Personal.jpeg b/docs/resources/MetaGPT-WeChat-Personal.jpeg

deleted file mode 100644

index f6b48577d132d0f30353585f54d6aeebaee98e01..0000000000000000000000000000000000000000

Binary files a/docs/resources/MetaGPT-WeChat-Personal.jpeg and /dev/null differ

diff --git a/docs/resources/MetaGPT-WorkWeChatGroup-6.jpg b/docs/resources/MetaGPT-WorkWeChatGroup-6.jpg

deleted file mode 100644

index d81cae63b6c85283618406ecfea36ef442ab53cb..0000000000000000000000000000000000000000

--- a/docs/resources/MetaGPT-WorkWeChatGroup-6.jpg

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:8c284f3cc89244a80f5c05db4a5639fb7f0df9fd69a612a730b829942ef7b93c

-size 86287

diff --git a/docs/resources/MetaGPT-logo.jpeg b/docs/resources/MetaGPT-logo.jpeg

deleted file mode 100644

index 33c3a2639fa9f8724acf7d4f10b9ff6d157694ae..0000000000000000000000000000000000000000

Binary files a/docs/resources/MetaGPT-logo.jpeg and /dev/null differ

diff --git a/docs/resources/MetaGPT-logo.png b/docs/resources/MetaGPT-logo.png

deleted file mode 100644

index 0357f307f20ac355afbaf938d75c9f7e16833b47..0000000000000000000000000000000000000000

--- a/docs/resources/MetaGPT-logo.png

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:084c8f63f3af75d2e100b287db3a0f1385e90f614e09a7c21080597fa99a294e

-size 50622

diff --git a/docs/resources/software_company_cd.jpeg b/docs/resources/software_company_cd.jpeg

deleted file mode 100644

index dd252ba962a9d042d5c14582e89ea36c3441eb6c..0000000000000000000000000000000000000000

Binary files a/docs/resources/software_company_cd.jpeg and /dev/null differ

diff --git a/docs/resources/software_company_sd.jpeg b/docs/resources/software_company_sd.jpeg

deleted file mode 100644

index 7c2a39359ca948d22273a7db904680dfb1773670..0000000000000000000000000000000000000000

Binary files a/docs/resources/software_company_sd.jpeg and /dev/null differ

diff --git a/docs/resources/workspace/content_rec_sys/resources/competitive_analysis.pdf b/docs/resources/workspace/content_rec_sys/resources/competitive_analysis.pdf

deleted file mode 100644

index c5a45e9aff568eb98d4df4beb0b70acbca664686..0000000000000000000000000000000000000000

Binary files a/docs/resources/workspace/content_rec_sys/resources/competitive_analysis.pdf and /dev/null differ

diff --git a/docs/resources/workspace/content_rec_sys/resources/competitive_analysis.png b/docs/resources/workspace/content_rec_sys/resources/competitive_analysis.png

deleted file mode 100644

index 4305df6d1cdf4c0b1d30f27c87a00aba24ac42d3..0000000000000000000000000000000000000000

--- a/docs/resources/workspace/content_rec_sys/resources/competitive_analysis.png

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:2d6eea5d284c72a93e9995576e357a11d0901ffb74a3e180ddc1666ab263f426

-size 38690

diff --git a/docs/resources/workspace/content_rec_sys/resources/competitive_analysis.svg b/docs/resources/workspace/content_rec_sys/resources/competitive_analysis.svg

deleted file mode 100644

index bda590dcd08c0150b60e9fd57e74478c0f60c446..0000000000000000000000000000000000000000

--- a/docs/resources/workspace/content_rec_sys/resources/competitive_analysis.svg

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:70a8c240a7ac4c447caa47ef7f71268e57d72aca9280dc2a85299ed0eade18b5

-size 5762

diff --git a/docs/resources/workspace/content_rec_sys/resources/data_api_design.pdf b/docs/resources/workspace/content_rec_sys/resources/data_api_design.pdf

deleted file mode 100644

index 6bf5457a9365fb1c6b01b397377cf596a24d5d30..0000000000000000000000000000000000000000

Binary files a/docs/resources/workspace/content_rec_sys/resources/data_api_design.pdf and /dev/null differ

diff --git a/docs/resources/workspace/content_rec_sys/resources/data_api_design.png b/docs/resources/workspace/content_rec_sys/resources/data_api_design.png

deleted file mode 100644

index 758956cf74ef3a98a36e86bd65841271c2564547..0000000000000000000000000000000000000000

--- a/docs/resources/workspace/content_rec_sys/resources/data_api_design.png

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:9f5a6265915cc4ff672aada5b66e818a024a0aa8d007fd3c684cdca0dd3f7c07

-size 176981

diff --git a/docs/resources/workspace/content_rec_sys/resources/data_api_design.svg b/docs/resources/workspace/content_rec_sys/resources/data_api_design.svg

deleted file mode 100644

index f38def8a0530fb9dde538999b07d91d571c19e45..0000000000000000000000000000000000000000

--- a/docs/resources/workspace/content_rec_sys/resources/data_api_design.svg

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:89c6067be45e60004f5544b9b34c1bcbaca8f80dfebd3c1d20b1d7a12d10c4e1

-size 44125

diff --git a/docs/resources/workspace/content_rec_sys/resources/seq_flow.pdf b/docs/resources/workspace/content_rec_sys/resources/seq_flow.pdf

deleted file mode 100644

index 34f73827db955362e61eb6838707249daa7a8be3..0000000000000000000000000000000000000000

Binary files a/docs/resources/workspace/content_rec_sys/resources/seq_flow.pdf and /dev/null differ

diff --git a/docs/resources/workspace/content_rec_sys/resources/seq_flow.png b/docs/resources/workspace/content_rec_sys/resources/seq_flow.png

deleted file mode 100644

index 24df16964a872c7062936fd237a1fa67fc578724..0000000000000000000000000000000000000000

--- a/docs/resources/workspace/content_rec_sys/resources/seq_flow.png

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:67f508c5715d7800ea20380fdbce240f073fa3807d30f7f2660d3aa367a370cf

-size 81772

diff --git a/docs/resources/workspace/content_rec_sys/resources/seq_flow.svg b/docs/resources/workspace/content_rec_sys/resources/seq_flow.svg

deleted file mode 100644

index b989883764ad9fd55ffcc419c07c874d723e1faf..0000000000000000000000000000000000000000

--- a/docs/resources/workspace/content_rec_sys/resources/seq_flow.svg

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:00146d187abe04ec54f32cb4e1ad7b94ba500488c682d921a59519fb21f4bdf0

-size 31275

diff --git a/docs/resources/workspace/llmops_framework/resources/competitive_analysis.pdf b/docs/resources/workspace/llmops_framework/resources/competitive_analysis.pdf

deleted file mode 100644

index eb287aadebe7623f7e7f3c9b9d0273672c61d50d..0000000000000000000000000000000000000000

Binary files a/docs/resources/workspace/llmops_framework/resources/competitive_analysis.pdf and /dev/null differ

diff --git a/docs/resources/workspace/llmops_framework/resources/competitive_analysis.png b/docs/resources/workspace/llmops_framework/resources/competitive_analysis.png

deleted file mode 100644

index 3afdd2e639bf8aed9911a78cd1d8b85abe397c4f..0000000000000000000000000000000000000000

--- a/docs/resources/workspace/llmops_framework/resources/competitive_analysis.png

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:2571079e39cd18b9102fca3d2f5725aac96b20ba7a35c8774760ff1444d3a05e

-size 41353

diff --git a/docs/resources/workspace/llmops_framework/resources/competitive_analysis.svg b/docs/resources/workspace/llmops_framework/resources/competitive_analysis.svg

deleted file mode 100644

index 90578b9f59f42e925e290892ad9445924eabc632..0000000000000000000000000000000000000000

--- a/docs/resources/workspace/llmops_framework/resources/competitive_analysis.svg

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:9f9660f5d98f28abc490670c1765ecd834788315f6b940d882182601a4766dab

-size 5753

diff --git a/docs/resources/workspace/llmops_framework/resources/data_api_design.pdf b/docs/resources/workspace/llmops_framework/resources/data_api_design.pdf

deleted file mode 100644

index 9fe9721a9345177c2c66213292948ebc0f422a29..0000000000000000000000000000000000000000

Binary files a/docs/resources/workspace/llmops_framework/resources/data_api_design.pdf and /dev/null differ

diff --git a/docs/resources/workspace/llmops_framework/resources/data_api_design.png b/docs/resources/workspace/llmops_framework/resources/data_api_design.png

deleted file mode 100644

index 0a7898cfd0b46c3ac2b3356fd5155a5c0d74a3ce..0000000000000000000000000000000000000000

--- a/docs/resources/workspace/llmops_framework/resources/data_api_design.png

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:53c6501dd63c72c6b72504074738b9d3def3878048e8329dc44cb0a58f62d472

-size 146766

diff --git a/docs/resources/workspace/llmops_framework/resources/data_api_design.svg b/docs/resources/workspace/llmops_framework/resources/data_api_design.svg

deleted file mode 100644

index 75e3f88757f035b2125e5b06f7c33ffe01135c4d..0000000000000000000000000000000000000000

--- a/docs/resources/workspace/llmops_framework/resources/data_api_design.svg

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:2fbcaa319e9da40211376f076f992004ea1315b8cb9824df6431d333f04b042e

-size 27651

diff --git a/docs/resources/workspace/llmops_framework/resources/seq_flow.pdf b/docs/resources/workspace/llmops_framework/resources/seq_flow.pdf

deleted file mode 100644

index a8e246658847c206f2833eeba3b3cecb765e1084..0000000000000000000000000000000000000000

Binary files a/docs/resources/workspace/llmops_framework/resources/seq_flow.pdf and /dev/null differ

diff --git a/docs/resources/workspace/llmops_framework/resources/seq_flow.png b/docs/resources/workspace/llmops_framework/resources/seq_flow.png

deleted file mode 100644

index 0ac3466e1fd5bb900515a368ee56458f4506cac8..0000000000000000000000000000000000000000

--- a/docs/resources/workspace/llmops_framework/resources/seq_flow.png

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:f646a4a4111d3aa0536fc3490f92bfc286e5b86f15faa6d1b3a97dee1712670a

-size 116168

diff --git a/docs/resources/workspace/llmops_framework/resources/seq_flow.svg b/docs/resources/workspace/llmops_framework/resources/seq_flow.svg

deleted file mode 100644

index 94a518087454ae603b565ab0a938bb513a2e96e7..0000000000000000000000000000000000000000

--- a/docs/resources/workspace/llmops_framework/resources/seq_flow.svg

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:c42cafccb78072cb898e68ebf0297b66c21c4667cd8204dd6c21eedfe1c6c234

-size 30864

diff --git a/docs/resources/workspace/match3_puzzle_game/resources/competitive_analysis.pdf b/docs/resources/workspace/match3_puzzle_game/resources/competitive_analysis.pdf

deleted file mode 100644

index 6ce1a74f1204b8228fef5acfa873a19f210eb7aa..0000000000000000000000000000000000000000

Binary files a/docs/resources/workspace/match3_puzzle_game/resources/competitive_analysis.pdf and /dev/null differ

diff --git a/docs/resources/workspace/match3_puzzle_game/resources/competitive_analysis.png b/docs/resources/workspace/match3_puzzle_game/resources/competitive_analysis.png

deleted file mode 100644

index 1a9bd818ac60016c8d00d459cb28b3dfb69fbb32..0000000000000000000000000000000000000000

--- a/docs/resources/workspace/match3_puzzle_game/resources/competitive_analysis.png

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:41722083912101c2ca706227c862f538a77cf4a205910a48089d8dd06039598a

-size 41991

diff --git a/docs/resources/workspace/match3_puzzle_game/resources/competitive_analysis.svg b/docs/resources/workspace/match3_puzzle_game/resources/competitive_analysis.svg

deleted file mode 100644

index 139ce2773f50ae7675b6f89ff30526e274739ab4..0000000000000000000000000000000000000000

--- a/docs/resources/workspace/match3_puzzle_game/resources/competitive_analysis.svg

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:733558b186585157f040ac902262f3022e91ecbb580509b736ff08fdfb3bb848

-size 5813

diff --git a/docs/resources/workspace/match3_puzzle_game/resources/data_api_design.pdf b/docs/resources/workspace/match3_puzzle_game/resources/data_api_design.pdf

deleted file mode 100644

index 083f7939326ceae2cb6aeda33715e847215fbcca..0000000000000000000000000000000000000000

Binary files a/docs/resources/workspace/match3_puzzle_game/resources/data_api_design.pdf and /dev/null differ

diff --git a/docs/resources/workspace/match3_puzzle_game/resources/data_api_design.png b/docs/resources/workspace/match3_puzzle_game/resources/data_api_design.png

deleted file mode 100644

index 950e3a9db9a433d5751b1f5cb4315a4c76d45d48..0000000000000000000000000000000000000000

--- a/docs/resources/workspace/match3_puzzle_game/resources/data_api_design.png

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:8f70036cdde825e4d6f5c5604fbc32e851c5e66073d63d4277d71bdad2784e9c

-size 135590

diff --git a/docs/resources/workspace/match3_puzzle_game/resources/data_api_design.svg b/docs/resources/workspace/match3_puzzle_game/resources/data_api_design.svg

deleted file mode 100644

index 198d9aabd0b03873810adc211b2841b43b126d3f..0000000000000000000000000000000000000000

--- a/docs/resources/workspace/match3_puzzle_game/resources/data_api_design.svg

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:954a1fa23b96e6631c7cb84498912ed66e0e23e28f79ecfb6c9bc39de0bfd231

-size 41172

diff --git a/docs/resources/workspace/match3_puzzle_game/resources/seq_flow.pdf b/docs/resources/workspace/match3_puzzle_game/resources/seq_flow.pdf

deleted file mode 100644

index 4b4878ce141c6cb803aa3e4bc7cc572cd1fe2a64..0000000000000000000000000000000000000000

Binary files a/docs/resources/workspace/match3_puzzle_game/resources/seq_flow.pdf and /dev/null differ

diff --git a/docs/resources/workspace/match3_puzzle_game/resources/seq_flow.png b/docs/resources/workspace/match3_puzzle_game/resources/seq_flow.png

deleted file mode 100644

index 349ae9c828f3f24e4a2a9ed13a22d838868d48e0..0000000000000000000000000000000000000000

--- a/docs/resources/workspace/match3_puzzle_game/resources/seq_flow.png

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:9d27ace227a29ca395cd5ba90f582712403a1c6a233675b321aaff07bd5c0fa3

-size 119441

diff --git a/docs/resources/workspace/match3_puzzle_game/resources/seq_flow.svg b/docs/resources/workspace/match3_puzzle_game/resources/seq_flow.svg

deleted file mode 100644

index 97e7a1d88e429526a1a9c99e6fea5be73a70e033..0000000000000000000000000000000000000000

--- a/docs/resources/workspace/match3_puzzle_game/resources/seq_flow.svg

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:4f8e7ced25c9d15dddec20e83ed202333a88b8f87bf59b6be0cca5310cd67722

-size 35429

diff --git a/docs/resources/workspace/minimalist_pomodoro_timer/resources/competitive_analysis.pdf b/docs/resources/workspace/minimalist_pomodoro_timer/resources/competitive_analysis.pdf

deleted file mode 100644

index 228b08224788e0e886b5b9aecd918b0987fe5dcc..0000000000000000000000000000000000000000

Binary files a/docs/resources/workspace/minimalist_pomodoro_timer/resources/competitive_analysis.pdf and /dev/null differ

diff --git a/docs/resources/workspace/minimalist_pomodoro_timer/resources/competitive_analysis.png b/docs/resources/workspace/minimalist_pomodoro_timer/resources/competitive_analysis.png

deleted file mode 100644

index 60aff7450db047a956c756634de8c6ec76741f1d..0000000000000000000000000000000000000000

--- a/docs/resources/workspace/minimalist_pomodoro_timer/resources/competitive_analysis.png

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:c1d3b7ed2d3c26f705b5d263f653962dd15e3f28e58830c62d01f8418a7e0d58

-size 36569

diff --git a/docs/resources/workspace/minimalist_pomodoro_timer/resources/competitive_analysis.svg b/docs/resources/workspace/minimalist_pomodoro_timer/resources/competitive_analysis.svg

deleted file mode 100644

index e57d99ae252e6f939869b7794fd4f41ca708f4bd..0000000000000000000000000000000000000000

--- a/docs/resources/workspace/minimalist_pomodoro_timer/resources/competitive_analysis.svg

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:af7d31017e16e989211a162f58b77cc7b1b4ddb3b3b6023b916f5260f62cb621

-size 5241

diff --git a/docs/resources/workspace/minimalist_pomodoro_timer/resources/data_api_design.pdf b/docs/resources/workspace/minimalist_pomodoro_timer/resources/data_api_design.pdf

deleted file mode 100644

index f8f6bfa7ef7601013cc2e7bdc8c3607d64324b67..0000000000000000000000000000000000000000

Binary files a/docs/resources/workspace/minimalist_pomodoro_timer/resources/data_api_design.pdf and /dev/null differ

diff --git a/docs/resources/workspace/minimalist_pomodoro_timer/resources/data_api_design.png b/docs/resources/workspace/minimalist_pomodoro_timer/resources/data_api_design.png

deleted file mode 100644

index 4e7d9abf6a35b5fae9a5b28b0e48d42fa097b9f5..0000000000000000000000000000000000000000

--- a/docs/resources/workspace/minimalist_pomodoro_timer/resources/data_api_design.png

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:66aba8f103d387da517dd2600dd0e315d6632e4f89fa8117f11c2ead553a245a

-size 32219

diff --git a/docs/resources/workspace/minimalist_pomodoro_timer/resources/data_api_design.svg b/docs/resources/workspace/minimalist_pomodoro_timer/resources/data_api_design.svg

deleted file mode 100644

index 90a3c9f0ba132a2c7e38349f2d11fe4f251b38f0..0000000000000000000000000000000000000000

--- a/docs/resources/workspace/minimalist_pomodoro_timer/resources/data_api_design.svg

+++ /dev/null

@@ -1,3 +0,0 @@

-version https://git-lfs.github.com/spec/v1

-oid sha256:ad6e3e26073b35d8e5de50b6cf41ebcba360722fe1380788e12f325b4c168f3b

-size 9660

diff --git a/docs/resources/workspace/minimalist_pomodoro_timer/resources/seq_flow.pdf b/docs/resources/workspace/minimalist_pomodoro_timer/resources/seq_flow.pdf

deleted file mode 100644

index 4a3309aefc5ad61dfadb7d156d92013527d4b8fe..0000000000000000000000000000000000000000

Binary files a/docs/resources/workspace/minimalist_pomodoro_timer/resources/seq_flow.pdf and /dev/null differ

diff --git a/docs/resources/workspace/minimalist_pomodoro_timer/resources/seq_flow.png b/docs/resources/workspace/minimalist_pomodoro_timer/resources/seq_flow.png
deleted file mode 100644
index 23ea86b6a138bf3a9fdbbc9ba6cfb9355b8463f7..0000000000000000000000000000000000000000
--- a/docs/resources/workspace/minimalist_pomodoro_timer/resources/seq_flow.png
+++ /dev/null
@@ -1,3 +0,0 @@
-version https://git-lfs.github.com/spec/v1
-oid sha256:69e09d3d7a79af022bb8802d319b81a038da2af5c5074f906925f5d9314b4dd7
-size 70721
diff --git a/docs/resources/workspace/minimalist_pomodoro_timer/resources/seq_flow.svg b/docs/resources/workspace/minimalist_pomodoro_timer/resources/seq_flow.svg
deleted file mode 100644
index e377b834d76a515da8576bbf2619c1c55162b7bb..0000000000000000000000000000000000000000
--- a/docs/resources/workspace/minimalist_pomodoro_timer/resources/seq_flow.svg
+++ /dev/null
@@ -1,3 +0,0 @@
-version https://git-lfs.github.com/spec/v1
-oid sha256:0184bcb5c2f24ebdc755b5e283203079a93a4c55a6f5d386b66ce201c78d23b0
-size 25425
diff --git a/docs/resources/workspace/pyrogue/resources/competitive_analysis.pdf b/docs/resources/workspace/pyrogue/resources/competitive_analysis.pdf
deleted file mode 100644
index 4e8aa999d02257d46f80487b1e742f383f226b1f..0000000000000000000000000000000000000000
Binary files a/docs/resources/workspace/pyrogue/resources/competitive_analysis.pdf and /dev/null differ
diff --git a/docs/resources/workspace/pyrogue/resources/competitive_analysis.png b/docs/resources/workspace/pyrogue/resources/competitive_analysis.png
deleted file mode 100644
index a892d5cbc04901a6a26c0d3c951731f8a730195f..0000000000000000000000000000000000000000
--- a/docs/resources/workspace/pyrogue/resources/competitive_analysis.png
+++ /dev/null
@@ -1,3 +0,0 @@
-version https://git-lfs.github.com/spec/v1
-oid sha256:81474589f64338da45ae9590a223154681055a02ba9128093ef2db3ed1488419
-size 45696
diff --git a/docs/resources/workspace/pyrogue/resources/competitive_analysis.svg b/docs/resources/workspace/pyrogue/resources/competitive_analysis.svg
deleted file mode 100644
index a1f15ea17d2a1bbbc9629bdde6c48ac2eeaf8f8e..0000000000000000000000000000000000000000
--- a/docs/resources/workspace/pyrogue/resources/competitive_analysis.svg
+++ /dev/null
@@ -1,3 +0,0 @@
-version https://git-lfs.github.com/spec/v1
-oid sha256:48c1e3a5e8bb8e0d0e6838e5d41b5696e261303a9a985f91998ac65d7c7ad4b8
-size 5836
diff --git a/docs/resources/workspace/pyrogue/resources/data_api_design.pdf b/docs/resources/workspace/pyrogue/resources/data_api_design.pdf
deleted file mode 100644
index 4fa0690f8e1311a534ecfb9b38781168f714b668..0000000000000000000000000000000000000000
Binary files a/docs/resources/workspace/pyrogue/resources/data_api_design.pdf and /dev/null differ
diff --git a/docs/resources/workspace/pyrogue/resources/data_api_design.png b/docs/resources/workspace/pyrogue/resources/data_api_design.png
deleted file mode 100644
index e36aae2333d2b42b2a704f7a249798099399f92e..0000000000000000000000000000000000000000
--- a/docs/resources/workspace/pyrogue/resources/data_api_design.png
+++ /dev/null
@@ -1,3 +0,0 @@
-version https://git-lfs.github.com/spec/v1
-oid sha256:b2c64bb8650580a5b6c66a6bbdc1611d21a4aa4546952abf88a1fba64e2e88bd
-size 187750
diff --git a/docs/resources/workspace/pyrogue/resources/data_api_design.svg b/docs/resources/workspace/pyrogue/resources/data_api_design.svg
deleted file mode 100644
index 66ec39799ff679cff5b0e098dfac1a57250c7301..0000000000000000000000000000000000000000
--- a/docs/resources/workspace/pyrogue/resources/data_api_design.svg
+++ /dev/null
@@ -1,3 +0,0 @@
-version https://git-lfs.github.com/spec/v1
-oid sha256:63e9ad7daf04a1690ffb0a5bdba6152a024a914793ff9ebed7ddefa404d01f56
-size 34404
diff --git a/docs/resources/workspace/pyrogue/resources/seq_flow.pdf b/docs/resources/workspace/pyrogue/resources/seq_flow.pdf
deleted file mode 100644
index cace014cf02147789575aeb7ecb3b78bea009428..0000000000000000000000000000000000000000
Binary files a/docs/resources/workspace/pyrogue/resources/seq_flow.pdf and /dev/null differ
diff --git a/docs/resources/workspace/pyrogue/resources/seq_flow.png b/docs/resources/workspace/pyrogue/resources/seq_flow.png
deleted file mode 100644
index 29c5ea3a4f0cb1c669129ef2e3b3b0e9bfe48e1e..0000000000000000000000000000000000000000
--- a/docs/resources/workspace/pyrogue/resources/seq_flow.png
+++ /dev/null
@@ -1,3 +0,0 @@
-version https://git-lfs.github.com/spec/v1
-oid sha256:4db135fb8f61d642f2ec1bfefb45f5ff339fe56a61479fc4dfcd18e6e1fddbda
-size 135782
diff --git a/docs/resources/workspace/pyrogue/resources/seq_flow.svg b/docs/resources/workspace/pyrogue/resources/seq_flow.svg
deleted file mode 100644
index 5221958d711f20aeb7fa00aa1e0789a9f59cee3c..0000000000000000000000000000000000000000
--- a/docs/resources/workspace/pyrogue/resources/seq_flow.svg
+++ /dev/null
@@ -1,3 +0,0 @@
-version https://git-lfs.github.com/spec/v1
-oid sha256:cf406c7cab12c81b5000c4abef93a9d529685492a30e2fd3d85fbea6c10e3364
-size 32590
diff --git a/docs/resources/workspace/search_algorithm_framework/resources/competitive_analysis.pdf b/docs/resources/workspace/search_algorithm_framework/resources/competitive_analysis.pdf
deleted file mode 100644
index fa35dcaff74d8b4349c57a38e1bd8eb8c0710a13..0000000000000000000000000000000000000000
Binary files a/docs/resources/workspace/search_algorithm_framework/resources/competitive_analysis.pdf and /dev/null differ
diff --git a/docs/resources/workspace/search_algorithm_framework/resources/competitive_analysis.png b/docs/resources/workspace/search_algorithm_framework/resources/competitive_analysis.png
deleted file mode 100644
index 86f96c398d31aa0398fd2dbcdf7d47ecb182b3cd..0000000000000000000000000000000000000000
--- a/docs/resources/workspace/search_algorithm_framework/resources/competitive_analysis.png
+++ /dev/null
@@ -1,3 +0,0 @@
-version https://git-lfs.github.com/spec/v1
-oid sha256:1005333be5c83c969290189fee568a9e807b85694672abc7c44d4fc6432b709a
-size 42339
diff --git a/docs/resources/workspace/search_algorithm_framework/resources/competitive_analysis.svg b/docs/resources/workspace/search_algorithm_framework/resources/competitive_analysis.svg
deleted file mode 100644
index 93df9a95332f86e7ac5d09ab5a490fbcbbcf3ea4..0000000000000000000000000000000000000000
--- a/docs/resources/workspace/search_algorithm_framework/resources/competitive_analysis.svg
+++ /dev/null
@@ -1,3 +0,0 @@
-version https://git-lfs.github.com/spec/v1
-oid sha256:3ef633ca0fe0831b9d3bc89a3472b5ff1b95b0bec0e798a74f39be5e75852634
-size 5846
diff --git a/docs/resources/workspace/search_algorithm_framework/resources/data_api_design.pdf b/docs/resources/workspace/search_algorithm_framework/resources/data_api_design.pdf
deleted file mode 100644
index ad3b3aa97e5b816498076a90aa8507a2054e0bc1..0000000000000000000000000000000000000000
Binary files a/docs/resources/workspace/search_algorithm_framework/resources/data_api_design.pdf and /dev/null differ
diff --git a/docs/resources/workspace/search_algorithm_framework/resources/data_api_design.png b/docs/resources/workspace/search_algorithm_framework/resources/data_api_design.png
deleted file mode 100644