Spaces:

Configuration error

Configuration error

Upload folder using huggingface_hub

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +1 -0

- .github/workflows/bot-autolint.yaml +50 -0

- .github/workflows/ci.yaml +54 -0

- .gitignore +178 -0

- .pre-commit-config.yaml +62 -0

- CIs/add_license_all.sh +2 -0

- Dockerfile +20 -0

- LICENSE +117 -0

- README.md +231 -12

- app/app_sana.py +488 -0

- app/app_sana_multithread.py +565 -0

- app/safety_check.py +72 -0

- app/sana_pipeline.py +324 -0

- asset/Sana.jpg +3 -0

- asset/docs/metrics_toolkit.md +118 -0

- asset/example_data/00000000.txt +1 -0

- asset/examples.py +69 -0

- asset/model-incremental.jpg +0 -0

- asset/model_paths.txt +2 -0

- asset/samples.txt +125 -0

- asset/samples_mini.txt +10 -0

- configs/sana_app_config/Sana_1600M_app.yaml +107 -0

- configs/sana_app_config/Sana_600M_app.yaml +105 -0

- configs/sana_base.yaml +140 -0

- configs/sana_config/1024ms/Sana_1600M_img1024.yaml +109 -0

- configs/sana_config/1024ms/Sana_600M_img1024.yaml +105 -0

- configs/sana_config/512ms/Sana_1600M_img512.yaml +108 -0

- configs/sana_config/512ms/Sana_600M_img512.yaml +107 -0

- configs/sana_config/512ms/ci_Sana_600M_img512.yaml +107 -0

- configs/sana_config/512ms/sample_dataset.yaml +107 -0

- diffusion/__init__.py +9 -0

- diffusion/data/__init__.py +2 -0

- diffusion/data/builder.py +76 -0

- diffusion/data/datasets/__init__.py +3 -0

- diffusion/data/datasets/sana_data.py +467 -0

- diffusion/data/datasets/sana_data_multi_scale.py +265 -0

- diffusion/data/datasets/utils.py +506 -0

- diffusion/data/transforms.py +46 -0

- diffusion/data/wids/__init__.py +16 -0

- diffusion/data/wids/wids.py +1051 -0

- diffusion/data/wids/wids_dl.py +174 -0

- diffusion/data/wids/wids_lru.py +81 -0

- diffusion/data/wids/wids_mmtar.py +168 -0

- diffusion/data/wids/wids_specs.py +192 -0

- diffusion/data/wids/wids_tar.py +98 -0

- diffusion/dpm_solver.py +69 -0

- diffusion/flow_euler_sampler.py +74 -0

- diffusion/iddpm.py +76 -0

- diffusion/lcm_scheduler.py +457 -0

- diffusion/model/__init__.py +1 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

asset/Sana.jpg filter=lfs diff=lfs merge=lfs -text

|

.github/workflows/bot-autolint.yaml

ADDED

|

@@ -0,0 +1,50 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

name: Auto Lint (triggered by "auto lint" label)

|

| 2 |

+

on:

|

| 3 |

+

pull_request:

|

| 4 |

+

types:

|

| 5 |

+

- opened

|

| 6 |

+

- edited

|

| 7 |

+

- closed

|

| 8 |

+

- reopened

|

| 9 |

+

- synchronize

|

| 10 |

+

- labeled

|

| 11 |

+

- unlabeled

|

| 12 |

+

# run only one unit test for a branch / tag.

|

| 13 |

+

concurrency:

|

| 14 |

+

group: ci-lint-${{ github.ref }}

|

| 15 |

+

cancel-in-progress: true

|

| 16 |

+

jobs:

|

| 17 |

+

lint-by-label:

|

| 18 |

+

if: contains(github.event.pull_request.labels.*.name, 'lint wanted')

|

| 19 |

+

runs-on: ubuntu-latest

|

| 20 |

+

steps:

|

| 21 |

+

- name: Check out Git repository

|

| 22 |

+

uses: actions/checkout@v4

|

| 23 |

+

with:

|

| 24 |

+

token: ${{ secrets.PAT }}

|

| 25 |

+

ref: ${{ github.event.pull_request.head.ref }}

|

| 26 |

+

- name: Set up Python

|

| 27 |

+

uses: actions/setup-python@v5

|

| 28 |

+

with:

|

| 29 |

+

python-version: '3.10'

|

| 30 |

+

- name: Test pre-commit hooks

|

| 31 |

+

continue-on-error: true

|

| 32 |

+

uses: pre-commit/[email protected] # sync with https://github.com/Efficient-Large-Model/VILA-Internal/blob/main/.github/workflows/pre-commit.yaml

|

| 33 |

+

with:

|

| 34 |

+

extra_args: --all-files

|

| 35 |

+

- name: Check if there are any changes

|

| 36 |

+

id: verify_diff

|

| 37 |

+

run: |

|

| 38 |

+

git diff --quiet . || echo "changed=true" >> $GITHUB_OUTPUT

|

| 39 |

+

- name: Commit files

|

| 40 |

+

if: steps.verify_diff.outputs.changed == 'true'

|

| 41 |

+

run: |

|

| 42 |

+

git config --local user.email "[email protected]"

|

| 43 |

+

git config --local user.name "GitHub Action"

|

| 44 |

+

git add .

|

| 45 |

+

git commit -m "[CI-Lint] Fix code style issues with pre-commit ${{ github.sha }}" -a

|

| 46 |

+

git push

|

| 47 |

+

- name: Remove label(s) after lint

|

| 48 |

+

uses: actions-ecosystem/action-remove-labels@v1

|

| 49 |

+

with:

|

| 50 |

+

labels: lint wanted

|

.github/workflows/ci.yaml

ADDED

|

@@ -0,0 +1,54 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

name: ci

|

| 2 |

+

on:

|

| 3 |

+

pull_request:

|

| 4 |

+

push:

|

| 5 |

+

branches: [main, feat/Sana-public, feat/Sana-public-for-NVLab]

|

| 6 |

+

concurrency:

|

| 7 |

+

group: ci-${{ github.workflow }}-${{ github.ref }}

|

| 8 |

+

cancel-in-progress: true

|

| 9 |

+

# if: ${{ github.repository == 'Efficient-Large-Model/Sana' }}

|

| 10 |

+

jobs:

|

| 11 |

+

pre-commit:

|

| 12 |

+

runs-on: ubuntu-latest

|

| 13 |

+

steps:

|

| 14 |

+

- name: Check out Git repository

|

| 15 |

+

uses: actions/checkout@v4

|

| 16 |

+

- name: Set up Python

|

| 17 |

+

uses: actions/setup-python@v5

|

| 18 |

+

with:

|

| 19 |

+

python-version: 3.10.10

|

| 20 |

+

- name: Test pre-commit hooks

|

| 21 |

+

uses: pre-commit/[email protected]

|

| 22 |

+

tests-bash:

|

| 23 |

+

# needs: pre-commit

|

| 24 |

+

runs-on: self-hosted

|

| 25 |

+

steps:

|

| 26 |

+

- name: Check out Git repository

|

| 27 |

+

uses: actions/checkout@v4

|

| 28 |

+

- name: Set up Python

|

| 29 |

+

uses: actions/setup-python@v5

|

| 30 |

+

with:

|

| 31 |

+

python-version: 3.10.10

|

| 32 |

+

- name: Set up the environment

|

| 33 |

+

run: |

|

| 34 |

+

bash environment_setup.sh

|

| 35 |

+

- name: Run tests with Slurm

|

| 36 |

+

run: |

|

| 37 |

+

sana-run --pty -m ci -J tests-bash bash tests/bash/entry.sh

|

| 38 |

+

|

| 39 |

+

# tests-python:

|

| 40 |

+

# needs: pre-commit

|

| 41 |

+

# runs-on: self-hosted

|

| 42 |

+

# steps:

|

| 43 |

+

# - name: Check out Git repository

|

| 44 |

+

# uses: actions/checkout@v4

|

| 45 |

+

# - name: Set up Python

|

| 46 |

+

# uses: actions/setup-python@v5

|

| 47 |

+

# with:

|

| 48 |

+

# python-version: 3.10.10

|

| 49 |

+

# - name: Set up the environment

|

| 50 |

+

# run: |

|

| 51 |

+

# ./environment_setup.sh

|

| 52 |

+

# - name: Run tests with Slurm

|

| 53 |

+

# run: |

|

| 54 |

+

# sana-run --pty -m ci -J tests-python pytest tests/python

|

.gitignore

ADDED

|

@@ -0,0 +1,178 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Sana related files

|

| 2 |

+

.idea/

|

| 3 |

+

*.png

|

| 4 |

+

*.json

|

| 5 |

+

tmp*

|

| 6 |

+

output*

|

| 7 |

+

output/

|

| 8 |

+

outputs/

|

| 9 |

+

wandb/

|

| 10 |

+

.vscode/

|

| 11 |

+

private/

|

| 12 |

+

ldm_ae*

|

| 13 |

+

data/*

|

| 14 |

+

*.pth

|

| 15 |

+

.gradio/

|

| 16 |

+

|

| 17 |

+

# Byte-compiled / optimized / DLL files

|

| 18 |

+

__pycache__/

|

| 19 |

+

*.py[cod]

|

| 20 |

+

*$py.class

|

| 21 |

+

|

| 22 |

+

# C extensions

|

| 23 |

+

*.so

|

| 24 |

+

|

| 25 |

+

# Distribution / packaging

|

| 26 |

+

.Python

|

| 27 |

+

build/

|

| 28 |

+

develop-eggs/

|

| 29 |

+

dist/

|

| 30 |

+

downloads/

|

| 31 |

+

eggs/

|

| 32 |

+

.eggs/

|

| 33 |

+

lib/

|

| 34 |

+

lib64/

|

| 35 |

+

parts/

|

| 36 |

+

sdist/

|

| 37 |

+

var/

|

| 38 |

+

wheels/

|

| 39 |

+

share/python-wheels/

|

| 40 |

+

*.egg-info/

|

| 41 |

+

.installed.cfg

|

| 42 |

+

*.egg

|

| 43 |

+

MANIFEST

|

| 44 |

+

|

| 45 |

+

# PyInstaller

|

| 46 |

+

# Usually these files are written by a python script from a template

|

| 47 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 48 |

+

*.manifest

|

| 49 |

+

*.spec

|

| 50 |

+

|

| 51 |

+

# Installer logs

|

| 52 |

+

pip-log.txt

|

| 53 |

+

pip-delete-this-directory.txt

|

| 54 |

+

|

| 55 |

+

# Unit test / coverage reports

|

| 56 |

+

htmlcov/

|

| 57 |

+

.tox/

|

| 58 |

+

.nox/

|

| 59 |

+

.coverage

|

| 60 |

+

.coverage.*

|

| 61 |

+

.cache

|

| 62 |

+

nosetests.xml

|

| 63 |

+

coverage.xml

|

| 64 |

+

*.cover

|

| 65 |

+

*.py,cover

|

| 66 |

+

.hypothesis/

|

| 67 |

+

.pytest_cache/

|

| 68 |

+

cover/

|

| 69 |

+

|

| 70 |

+

# Translations

|

| 71 |

+

*.mo

|

| 72 |

+

*.pot

|

| 73 |

+

|

| 74 |

+

# Django stuff:

|

| 75 |

+

*.log

|

| 76 |

+

local_settings.py

|

| 77 |

+

db.sqlite3

|

| 78 |

+

db.sqlite3-journal

|

| 79 |

+

|

| 80 |

+

# Flask stuff:

|

| 81 |

+

instance/

|

| 82 |

+

.webassets-cache

|

| 83 |

+

|

| 84 |

+

# Scrapy stuff:

|

| 85 |

+

.scrapy

|

| 86 |

+

|

| 87 |

+

# Sphinx documentation

|

| 88 |

+

docs/_build/

|

| 89 |

+

|

| 90 |

+

# PyBuilder

|

| 91 |

+

.pybuilder/

|

| 92 |

+

target/

|

| 93 |

+

|

| 94 |

+

# Jupyter Notebook

|

| 95 |

+

.ipynb_checkpoints

|

| 96 |

+

|

| 97 |

+

# IPython

|

| 98 |

+

profile_default/

|

| 99 |

+

ipython_config.py

|

| 100 |

+

|

| 101 |

+

# pyenv

|

| 102 |

+

# For a library or package, you might want to ignore these files since the code is

|

| 103 |

+

# intended to run in multiple environments; otherwise, check them in:

|

| 104 |

+

# .python-version

|

| 105 |

+

|

| 106 |

+

# pipenv

|

| 107 |

+

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

| 108 |

+

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

| 109 |

+

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

| 110 |

+

# install all needed dependencies.

|

| 111 |

+

#Pipfile.lock

|

| 112 |

+

|

| 113 |

+

# poetry

|

| 114 |

+

# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

|

| 115 |

+

# This is especially recommended for binary packages to ensure reproducibility, and is more

|

| 116 |

+

# commonly ignored for libraries.

|

| 117 |

+

# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

|

| 118 |

+

#poetry.lock

|

| 119 |

+

|

| 120 |

+

# pdm

|

| 121 |

+

# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.

|

| 122 |

+

#pdm.lock

|

| 123 |

+

# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it

|

| 124 |

+

# in version control.

|

| 125 |

+

# https://pdm.fming.dev/latest/usage/project/#working-with-version-control

|

| 126 |

+

.pdm.toml

|

| 127 |

+

.pdm-python

|

| 128 |

+

.pdm-build/

|

| 129 |

+

|

| 130 |

+

# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm

|

| 131 |

+

__pypackages__/

|

| 132 |

+

|

| 133 |

+

# Celery stuff

|

| 134 |

+

celerybeat-schedule

|

| 135 |

+

celerybeat.pid

|

| 136 |

+

|

| 137 |

+

# SageMath parsed files

|

| 138 |

+

*.sage.py

|

| 139 |

+

|

| 140 |

+

# Environments

|

| 141 |

+

.env

|

| 142 |

+

.venv

|

| 143 |

+

env/

|

| 144 |

+

venv/

|

| 145 |

+

ENV/

|

| 146 |

+

env.bak/

|

| 147 |

+

venv.bak/

|

| 148 |

+

|

| 149 |

+

# Spyder project settings

|

| 150 |

+

.spyderproject

|

| 151 |

+

.spyproject

|

| 152 |

+

|

| 153 |

+

# Rope project settings

|

| 154 |

+

.ropeproject

|

| 155 |

+

|

| 156 |

+

# mkdocs documentation

|

| 157 |

+

/site

|

| 158 |

+

|

| 159 |

+

# mypy

|

| 160 |

+

.mypy_cache/

|

| 161 |

+

.dmypy.json

|

| 162 |

+

dmypy.json

|

| 163 |

+

|

| 164 |

+

# Pyre type checker

|

| 165 |

+

.pyre/

|

| 166 |

+

|

| 167 |

+

# pytype static type analyzer

|

| 168 |

+

.pytype/

|

| 169 |

+

|

| 170 |

+

# Cython debug symbols

|

| 171 |

+

cython_debug/

|

| 172 |

+

|

| 173 |

+

# PyCharm

|

| 174 |

+

# JetBrains specific template is maintained in a separate JetBrains.gitignore that can

|

| 175 |

+

# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore

|

| 176 |

+

# and can be added to the global gitignore or merged into this file. For a more nuclear

|

| 177 |

+

# option (not recommended) you can uncomment the following to ignore the entire idea folder.

|

| 178 |

+

#.idea/

|

.pre-commit-config.yaml

ADDED

|

@@ -0,0 +1,62 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

repos:

|

| 2 |

+

- repo: https://github.com/pre-commit/pre-commit-hooks

|

| 3 |

+

rev: v5.0.0

|

| 4 |

+

hooks:

|

| 5 |

+

- id: trailing-whitespace

|

| 6 |

+

name: (Common) Remove trailing whitespaces

|

| 7 |

+

- id: mixed-line-ending

|

| 8 |

+

name: (Common) Fix mixed line ending

|

| 9 |

+

args: [--fix=lf]

|

| 10 |

+

- id: end-of-file-fixer

|

| 11 |

+

name: (Common) Remove extra EOF newlines

|

| 12 |

+

- id: check-merge-conflict

|

| 13 |

+

name: (Common) Check for merge conflicts

|

| 14 |

+

- id: requirements-txt-fixer

|

| 15 |

+

name: (Common) Sort "requirements.txt"

|

| 16 |

+

- id: fix-encoding-pragma

|

| 17 |

+

name: (Python) Remove encoding pragmas

|

| 18 |

+

args: [--remove]

|

| 19 |

+

# - id: debug-statements

|

| 20 |

+

# name: (Python) Check for debugger imports

|

| 21 |

+

- id: check-json

|

| 22 |

+

name: (JSON) Check syntax

|

| 23 |

+

- id: check-yaml

|

| 24 |

+

name: (YAML) Check syntax

|

| 25 |

+

- id: check-toml

|

| 26 |

+

name: (TOML) Check syntax

|

| 27 |

+

# - repo: https://github.com/shellcheck-py/shellcheck-py

|

| 28 |

+

# rev: v0.10.0.1

|

| 29 |

+

# hooks:

|

| 30 |

+

# - id: shellcheck

|

| 31 |

+

- repo: https://github.com/google/yamlfmt

|

| 32 |

+

rev: v0.13.0

|

| 33 |

+

hooks:

|

| 34 |

+

- id: yamlfmt

|

| 35 |

+

- repo: https://github.com/executablebooks/mdformat

|

| 36 |

+

rev: 0.7.16

|

| 37 |

+

hooks:

|

| 38 |

+

- id: mdformat

|

| 39 |

+

name: (Markdown) Format docs with mdformat

|

| 40 |

+

- repo: https://github.com/asottile/pyupgrade

|

| 41 |

+

rev: v3.2.2

|

| 42 |

+

hooks:

|

| 43 |

+

- id: pyupgrade

|

| 44 |

+

name: (Python) Update syntax for newer versions

|

| 45 |

+

args: [--py37-plus]

|

| 46 |

+

- repo: https://github.com/psf/black

|

| 47 |

+

rev: 22.10.0

|

| 48 |

+

hooks:

|

| 49 |

+

- id: black

|

| 50 |

+

name: (Python) Format code with black

|

| 51 |

+

- repo: https://github.com/pycqa/isort

|

| 52 |

+

rev: 5.12.0

|

| 53 |

+

hooks:

|

| 54 |

+

- id: isort

|

| 55 |

+

name: (Python) Sort imports with isort

|

| 56 |

+

- repo: https://github.com/pre-commit/mirrors-clang-format

|

| 57 |

+

rev: v15.0.4

|

| 58 |

+

hooks:

|

| 59 |

+

- id: clang-format

|

| 60 |

+

name: (C/C++/CUDA) Format code with clang-format

|

| 61 |

+

args: [-style=google, -i]

|

| 62 |

+

types_or: [c, c++, cuda]

|

CIs/add_license_all.sh

ADDED

|

@@ -0,0 +1,2 @@

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#/bin/bash

|

| 2 |

+

addlicense -s -c 'NVIDIA CORPORATION & AFFILIATES' -ignore "**/*__init__.py" **/*.py

|

Dockerfile

ADDED

|

@@ -0,0 +1,20 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

FROM nvcr.io/nvidia/pytorch:24.06-py3

|

| 2 |

+

|

| 3 |

+

WORKDIR /app

|

| 4 |

+

|

| 5 |

+

RUN curl https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh -o ~/miniconda.sh \

|

| 6 |

+

&& sh ~/miniconda.sh -b -p /opt/conda \

|

| 7 |

+

&& rm ~/miniconda.sh

|

| 8 |

+

|

| 9 |

+

ENV PATH /opt/conda/bin:$PATH

|

| 10 |

+

COPY pyproject.toml pyproject.toml

|

| 11 |

+

COPY diffusion diffusion

|

| 12 |

+

COPY configs configs

|

| 13 |

+

COPY sana sana

|

| 14 |

+

COPY app app

|

| 15 |

+

|

| 16 |

+

COPY environment_setup.sh environment_setup.sh

|

| 17 |

+

RUN ./environment_setup.sh sana

|

| 18 |

+

|

| 19 |

+

# COPY server.py server.py

|

| 20 |

+

CMD ["conda", "run", "-n", "sana", "--no-capture-output", "python", "-u", "-W", "ignore", "app/app_sana.py", "--config=configs/sana_config/1024ms/Sana_1600M_img1024.yaml", "--model_path=hf://Sana_1600M_1024px/checkpoints/Sana_1600M_1024px.pth",]

|

LICENSE

ADDED

|

@@ -0,0 +1,117 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Copyright (c) 2019, NVIDIA Corporation. All rights reserved.

|

| 2 |

+

|

| 3 |

+

|

| 4 |

+

Nvidia Source Code License-NC

|

| 5 |

+

|

| 6 |

+

=======================================================================

|

| 7 |

+

|

| 8 |

+

1. Definitions

|

| 9 |

+

|

| 10 |

+

“Licensor” means any person or entity that distributes its Work.

|

| 11 |

+

|

| 12 |

+

“Work” means (a) the original work of authorship made available under

|

| 13 |

+

this license, which may include software, documentation, or other

|

| 14 |

+

files, and (b) any additions to or derivative works thereof

|

| 15 |

+

that are made available under this license.

|

| 16 |

+

|

| 17 |

+

“NVIDIA Processors” means any central processing unit (CPU),

|

| 18 |

+

graphics processing unit (GPU), field-programmable gate array (FPGA),

|

| 19 |

+

application-specific integrated circuit (ASIC) or any combination

|

| 20 |

+

thereof designed, made, sold, or provided by NVIDIA or its affiliates.

|

| 21 |

+

|

| 22 |

+

The terms “reproduce,” “reproduction,” “derivative works,” and

|

| 23 |

+

“distribution” have the meaning as provided under U.S. copyright law;

|

| 24 |

+

provided, however, that for the purposes of this license, derivative

|

| 25 |

+

works shall not include works that remain separable from, or merely

|

| 26 |

+

link (or bind by name) to the interfaces of, the Work.

|

| 27 |

+

|

| 28 |

+

Works are “made available” under this license by including in or with

|

| 29 |

+

the Work either (a) a copyright notice referencing the applicability

|

| 30 |

+

of this license to the Work, or (b) a copy of this license.

|

| 31 |

+

|

| 32 |

+

"Safe Model" means ShieldGemma-2B, which is a series of safety

|

| 33 |

+

content moderation models designed to moderate four categories of

|

| 34 |

+

harmful content: sexually explicit material, dangerous content,

|

| 35 |

+

hate speech, and harassment, and which you separately obtain

|

| 36 |

+

from Google at https://huggingface.co/google/shieldgemma-2b.

|

| 37 |

+

|

| 38 |

+

|

| 39 |

+

2. License Grant

|

| 40 |

+

|

| 41 |

+

2.1 Copyright Grant. Subject to the terms and conditions of this

|

| 42 |

+

license, each Licensor grants to you a perpetual, worldwide,

|

| 43 |

+

non-exclusive, royalty-free, copyright license to use, reproduce,

|

| 44 |

+

prepare derivative works of, publicly display, publicly perform,

|

| 45 |

+

sublicense and distribute its Work and any resulting derivative

|

| 46 |

+

works in any form.

|

| 47 |

+

|

| 48 |

+

3. Limitations

|

| 49 |

+

|

| 50 |

+

3.1 Redistribution. You may reproduce or distribute the Work only if

|

| 51 |

+

(a) you do so under this license, (b) you include a complete copy of

|

| 52 |

+

this license with your distribution, and (c) you retain without

|

| 53 |

+

modification any copyright, patent, trademark, or attribution notices

|

| 54 |

+

that are present in the Work.

|

| 55 |

+

|

| 56 |

+

3.2 Derivative Works. You may specify that additional or different

|

| 57 |

+

terms apply to the use, reproduction, and distribution of your

|

| 58 |

+

derivative works of the Work (“Your Terms”) only if (a) Your Terms

|

| 59 |

+

provide that the use limitation in Section 3.3 applies to your

|

| 60 |

+

derivative works, and (b) you identify the specific derivative works

|

| 61 |

+

that are subject to Your Terms. Notwithstanding Your Terms, this

|

| 62 |

+

license (including the redistribution requirements in Section 3.1)

|

| 63 |

+

will continue to apply to the Work itself.

|

| 64 |

+

|

| 65 |

+

3.3 Use Limitation. The Work and any derivative works thereof only may

|

| 66 |

+

be used or intended for use non-commercially and with NVIDIA Processors,

|

| 67 |

+

in accordance with Section 3.4, below. Notwithstanding the foregoing,

|

| 68 |

+

NVIDIA Corporation and its affiliates may use the Work and any

|

| 69 |

+

derivative works commercially. As used herein, “non-commercially”

|

| 70 |

+

means for research or evaluation purposes only.

|

| 71 |

+

|

| 72 |

+

3.4 You shall filter your input content to the Work and any derivative

|

| 73 |

+

works thereof through the Safe Model to ensure that no content described

|

| 74 |

+

as Not Safe For Work (NSFW) is processed or generated. You shall not use

|

| 75 |

+

the Work to process or generate NSFW content. You are solely responsible

|

| 76 |

+

for any damages and liabilities arising from your failure to adequately

|

| 77 |

+

filter content in accordance with this section. As used herein,

|

| 78 |

+

“Not Safe For Work” or “NSFW” means content, videos or website pages

|

| 79 |

+

that contain potentially disturbing subject matter, including but not

|

| 80 |

+

limited to content that is sexually explicit, dangerous, hate,

|

| 81 |

+

or harassment.

|

| 82 |

+

|

| 83 |

+

3.5 Patent Claims. If you bring or threaten to bring a patent claim

|

| 84 |

+

against any Licensor (including any claim, cross-claim or counterclaim

|

| 85 |

+

in a lawsuit) to enforce any patents that you allege are infringed by

|

| 86 |

+

any Work, then your rights under this license from such Licensor

|

| 87 |

+

(including the grant in Section 2.1) will terminate immediately.

|

| 88 |

+

|

| 89 |

+

3.6 Trademarks. This license does not grant any rights to use any

|

| 90 |

+

Licensor’s or its affiliates’ names, logos, or trademarks, except as

|

| 91 |

+

necessary to reproduce the notices described in this license.

|

| 92 |

+

|

| 93 |

+

3.7 Termination. If you violate any term of this license, then your

|

| 94 |

+

rights under this license (including the grant in Section 2.1) will

|

| 95 |

+

terminate immediately.

|

| 96 |

+

|

| 97 |

+

4. Disclaimer of Warranty.

|

| 98 |

+

|

| 99 |

+

THE WORK IS PROVIDED “AS IS” WITHOUT WARRANTIES OR CONDITIONS OF ANY

|

| 100 |

+

KIND, EITHER EXPRESS OR IMPLIED, INCLUDING WARRANTIES OR CONDITIONS OF

|

| 101 |

+

MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE, TITLE OR

|

| 102 |

+

NON-INFRINGEMENT. YOU BEAR THE RISK OF UNDERTAKING ANY ACTIVITIES

|

| 103 |

+

UNDER THIS LICENSE.

|

| 104 |

+

|

| 105 |

+

5. Limitation of Liability.

|

| 106 |

+

|

| 107 |

+

EXCEPT AS PROHIBITED BY APPLICABLE LAW, IN NO EVENT AND UNDER NO LEGAL

|

| 108 |

+

THEORY, WHETHER IN TORT (INCLUDING NEGLIGENCE), CONTRACT, OR OTHERWISE

|

| 109 |

+

SHALL ANY LICENSOR BE LIABLE TO YOU FOR DAMAGES, INCLUDING ANY DIRECT,

|

| 110 |

+

INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES ARISING OUT OF

|

| 111 |

+

OR RELATED TO THIS LICENSE, THE USE OR INABILITY TO USE THE WORK

|

| 112 |

+

(INCLUDING BUT NOT LIMITED TO LOSS OF GOODWILL, BUSINESS INTERRUPTION,

|

| 113 |

+

LOST PROFITS OR DATA, COMPUTER FAILURE OR MALFUNCTION, OR ANY OTHER

|

| 114 |

+

DAMAGES OR LOSSES), EVEN IF THE LICENSOR HAS BEEN ADVISED OF THE

|

| 115 |

+

POSSIBILITY OF SUCH DAMAGES.

|

| 116 |

+

|

| 117 |

+

=======================================================================

|

README.md

CHANGED

|

@@ -1,12 +1,231 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

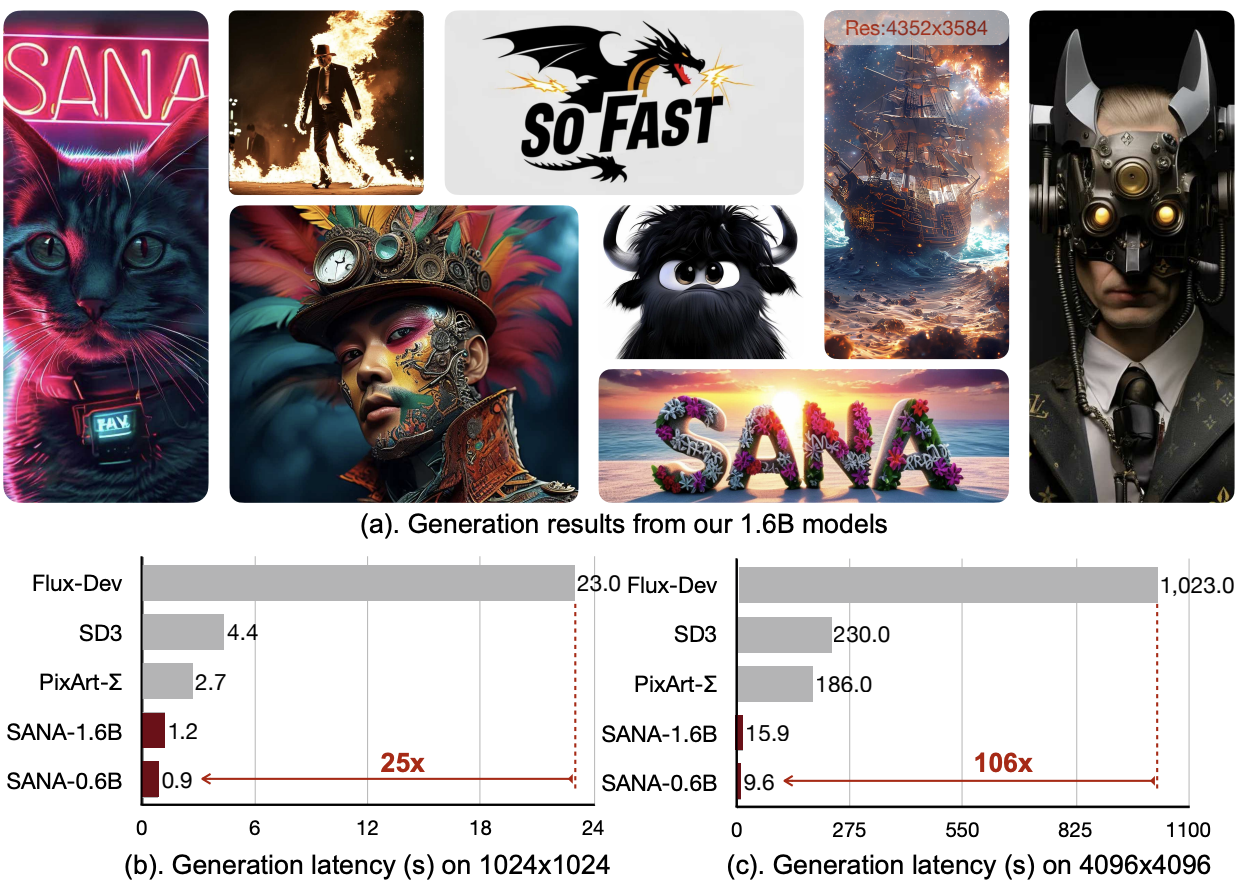

| 1 |

+

<p align="center" style="border-radius: 10px">

|

| 2 |

+

<img src="asset/logo.png" width="35%" alt="logo"/>

|

| 3 |

+

</p>

|

| 4 |

+

|

| 5 |

+

# ⚡️Sana: Efficient High-Resolution Image Synthesis with Linear Diffusion Transformer

|

| 6 |

+

|

| 7 |

+

<div align="center">

|

| 8 |

+

<a href="https://nvlabs.github.io/Sana/"><img src="https://img.shields.io/static/v1?label=Project&message=Github&color=blue&logo=github-pages"></a>

|

| 9 |

+

<a href="https://hanlab.mit.edu/projects/sana/"><img src="https://img.shields.io/static/v1?label=Page&message=MIT&color=darkred&logo=github-pages"></a>

|

| 10 |

+

<a href="https://arxiv.org/abs/2410.10629"><img src="https://img.shields.io/static/v1?label=Arxiv&message=Sana&color=red&logo=arxiv"></a>

|

| 11 |

+

<a href="https://nv-sana.mit.edu/"><img src="https://img.shields.io/static/v1?label=Demo&message=MIT&color=yellow"></a>

|

| 12 |

+

<a href="https://discord.gg/rde6eaE5Ta"><img src="https://img.shields.io/static/v1?label=Discuss&message=Discord&color=purple&logo=discord"></a>

|

| 13 |

+

</div>

|

| 14 |

+

|

| 15 |

+

<p align="center" border-raduis="10px">

|

| 16 |

+

<img src="asset/Sana.jpg" width="90%" alt="teaser_page1"/>

|

| 17 |

+

</p>

|

| 18 |

+

|

| 19 |

+

## 💡 Introduction

|

| 20 |

+

|

| 21 |

+

We introduce Sana, a text-to-image framework that can efficiently generate images up to 4096 × 4096 resolution.

|

| 22 |

+

Sana can synthesize high-resolution, high-quality images with strong text-image alignment at a remarkably fast speed, deployable on laptop GPU.

|

| 23 |

+

Core designs include:

|

| 24 |

+

|

| 25 |

+

(1) [**DC-AE**](https://hanlab.mit.edu/projects/dc-ae): unlike traditional AEs, which compress images only 8×, we trained an AE that can compress images 32×, effectively reducing the number of latent tokens. \

|

| 26 |

+

(2) **Linear DiT**: we replace all vanilla attention in DiT with linear attention, which is more efficient at high resolutions without sacrificing quality. \

|

| 27 |

+

(3) **Decoder-only text encoder**: we replaced T5 with modern decoder-only small LLM as the text encoder and designed complex human instruction with in-context learning to enhance the image-text alignment. \

|

| 28 |

+

(4) **Efficient training and sampling**: we propose **Flow-DPM-Solver** to reduce sampling steps, with efficient caption labeling and selection to accelerate convergence.

|

| 29 |

+

|

| 30 |

+

As a result, Sana-0.6B is very competitive with modern giant diffusion model (e.g. Flux-12B), being 20 times smaller and 100+ times faster in measured throughput. Moreover, Sana-0.6B can be deployed on a 16GB laptop GPU, taking less than 1 second to generate a 1024 × 1024 resolution image. Sana enables content creation at low cost.

|

| 31 |

+

|

| 32 |

+

<p align="center" border-raduis="10px">

|

| 33 |

+

<img src="asset/model-incremental.jpg" width="90%" alt="teaser_page2"/>

|

| 34 |

+

</p>

|

| 35 |

+

|

| 36 |

+

## 🔥🔥 News

|

| 37 |

+

|

| 38 |

+

- (🔥 New) \[2024/11\] 1.6B [Sana models](https://huggingface.co/collections/Efficient-Large-Model/sana-673efba2a57ed99843f11f9e) are released.

|

| 39 |

+

- (🔥 New) \[2024/11\] Training & Inference & Metrics code are released.

|

| 40 |

+

- (🔥 New) \[2024/11\] Working on [`diffusers`](https://github.com/huggingface/diffusers/pull/9982).

|

| 41 |

+

- \[2024/10\] [Demo](https://nv-sana.mit.edu/) is released.

|

| 42 |

+

- \[2024/10\] [DC-AE Code](https://github.com/mit-han-lab/efficientvit/blob/master/applications/dc_ae/README.md) and [weights](https://huggingface.co/collections/mit-han-lab/dc-ae-670085b9400ad7197bb1009b) are released!

|

| 43 |

+

- \[2024/10\] [Paper](https://arxiv.org/abs/2410.10629) is on Arxiv!

|

| 44 |

+

|

| 45 |

+

## Performance

|

| 46 |

+

|

| 47 |

+

| Methods (1024x1024) | Throughput (samples/s) | Latency (s) | Params (B) | Speedup | FID 👆 | CLIP 👆 | GenEval 👆 | DPG 👆 |

|

| 48 |

+

|------------------------------|------------------------|-------------|------------|-----------|-------------|--------------|-------------|-------------|

|

| 49 |

+

| FLUX-dev | 0.04 | 23.0 | 12.0 | 1.0× | 10.15 | 27.47 | _0.67_ | _84.0_ |

|

| 50 |

+

| **Sana-0.6B** | 1.7 | 0.9 | 0.6 | **39.5×** | <u>5.81</u> | 28.36 | 0.64 | 83.6 |

|

| 51 |

+

| **Sana-1.6B** | 1.0 | 1.2 | 1.6 | **23.3×** | **5.76** | <u>28.67</u> | <u>0.66</u> | **84.8** |

|

| 52 |

+

|

| 53 |

+

<details>

|

| 54 |

+

<summary><h3>Click to show all</h3></summary>

|

| 55 |

+

|

| 56 |

+

| Methods | Throughput (samples/s) | Latency (s) | Params (B) | Speedup | FID 👆 | CLIP 👆 | GenEval 👆 | DPG 👆 |

|

| 57 |

+

|------------------------------|------------------------|-------------|------------|-----------|-------------|--------------|-------------|-------------|

|

| 58 |

+

| _**512 × 512 resolution**_ | | | | | | | | |

|

| 59 |

+

| PixArt-α | 1.5 | 1.2 | 0.6 | 1.0× | 6.14 | 27.55 | 0.48 | 71.6 |

|

| 60 |

+

| PixArt-Σ | 1.5 | 1.2 | 0.6 | 1.0× | _6.34_ | _27.62_ | <u>0.52</u> | _79.5_ |

|

| 61 |

+

| **Sana-0.6B** | 6.7 | 0.8 | 0.6 | 5.0× | <u>5.67</u> | <u>27.92</u> | _0.64_ | <u>84.3</u> |

|

| 62 |

+

| **Sana-1.6B** | 3.8 | 0.6 | 1.6 | 2.5× | **5.16** | **28.19** | **0.66** | **85.5** |

|

| 63 |

+

| _**1024 × 1024 resolution**_ | | | | | | | | |

|

| 64 |

+

| LUMINA-Next | 0.12 | 9.1 | 2.0 | 2.8× | 7.58 | 26.84 | 0.46 | 74.6 |

|

| 65 |

+

| SDXL | 0.15 | 6.5 | 2.6 | 3.5× | 6.63 | _29.03_ | 0.55 | 74.7 |

|

| 66 |

+

| PlayGroundv2.5 | 0.21 | 5.3 | 2.6 | 4.9× | _6.09_ | **29.13** | 0.56 | 75.5 |

|

| 67 |

+

| Hunyuan-DiT | 0.05 | 18.2 | 1.5 | 1.2× | 6.54 | 28.19 | 0.63 | 78.9 |

|

| 68 |

+

| PixArt-Σ | 0.4 | 2.7 | 0.6 | 9.3× | 6.15 | 28.26 | 0.54 | 80.5 |

|

| 69 |

+

| DALLE3 | - | - | - | - | - | - | _0.67_ | 83.5 |

|

| 70 |

+

| SD3-medium | 0.28 | 4.4 | 2.0 | 6.5× | 11.92 | 27.83 | 0.62 | <u>84.1</u> |

|

| 71 |

+

| FLUX-dev | 0.04 | 23.0 | 12.0 | 1.0× | 10.15 | 27.47 | _0.67_ | _84.0_ |

|

| 72 |

+

| FLUX-schnell | 0.5 | 2.1 | 12.0 | 11.6× | 7.94 | 28.14 | **0.71** | **84.8** |

|

| 73 |

+

| **Sana-0.6B** | 1.7 | 0.9 | 0.6 | **39.5×** | <u>5.81</u> | 28.36 | 0.64 | 83.6 |

|

| 74 |

+

| **Sana-1.6B** | 1.0 | 1.2 | 1.6 | **23.3×** | **5.76** | <u>28.67</u> | <u>0.66</u> | **84.8** |

|

| 75 |

+

|

| 76 |

+

</details>

|

| 77 |

+

|

| 78 |

+

## Contents

|

| 79 |

+

|

| 80 |

+

- [Env](#-1-dependencies-and-installation)

|

| 81 |

+

- [Demo](#-3-how-to-inference)

|

| 82 |

+

- [Training](#-2-how-to-train)

|

| 83 |

+

- [Testing](#-4-how-to-inference--test-metrics-fid-clip-score-geneval-dpg-bench-etc)

|

| 84 |

+

- [TODO](#to-do-list)

|

| 85 |

+

- [Citation](#bibtex)

|

| 86 |

+

|

| 87 |

+

# 🔧 1. Dependencies and Installation

|

| 88 |

+

|

| 89 |

+

- Python >= 3.10.0 (Recommend to use [Anaconda](https://www.anaconda.com/download/#linux) or [Miniconda](https://docs.conda.io/en/latest/miniconda.html))

|

| 90 |

+

- [PyTorch >= 2.0.1+cu12.1](https://pytorch.org/)

|

| 91 |

+

|

| 92 |

+

```bash

|

| 93 |

+

git clone https://github.com/NVlabs/Sana.git

|

| 94 |

+

cd Sana

|

| 95 |

+

|

| 96 |

+

./environment_setup.sh sana

|

| 97 |

+

# or you can install each components step by step following environment_setup.sh

|

| 98 |

+

```

|

| 99 |

+

|

| 100 |

+

# 💻 2. How to Play with Sana (Inference)

|

| 101 |

+

|

| 102 |

+

## 💰Hardware requirement

|

| 103 |

+

|

| 104 |

+

- 9GB VRAM is required for 0.6B model and 12GB VRAM for 1.6B model. Our later quantization version will require less than 8GB for inference.

|

| 105 |

+

- All the tests are done on A100 GPUs. Different GPU version may be different.

|

| 106 |

+

|

| 107 |

+

## 🔛 Quick start with [Gradio](https://www.gradio.app/guides/quickstart)

|

| 108 |

+

|

| 109 |

+

```bash

|

| 110 |

+

# official online demo

|

| 111 |

+

DEMO_PORT=15432 \

|

| 112 |

+

python app/app_sana.py \

|

| 113 |

+

--config=configs/sana_config/1024ms/Sana_1600M_img1024.yaml \

|

| 114 |

+

--model_path=hf://Efficient-Large-Model/Sana_1600M_1024px/checkpoints/Sana_1600M_1024px.pth

|

| 115 |

+

```

|

| 116 |

+

|

| 117 |

+

```python

|

| 118 |

+

import torch

|

| 119 |

+

from app.sana_pipeline import SanaPipeline

|

| 120 |

+

from torchvision.utils import save_image

|

| 121 |

+

|

| 122 |

+

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

|

| 123 |

+

generator = torch.Generator(device=device).manual_seed(42)

|

| 124 |

+

|

| 125 |

+

sana = SanaPipeline("configs/sana_config/1024ms/Sana_1600M_img1024.yaml")

|

| 126 |

+

sana.from_pretrained("hf://Efficient-Large-Model/Sana_1600M_1024px/checkpoints/Sana_1600M_1024px.pth")

|

| 127 |

+

prompt = 'a cyberpunk cat with a neon sign that says "Sana"'

|

| 128 |

+

|

| 129 |

+

image = sana(

|

| 130 |

+

prompt=prompt,

|

| 131 |

+

height=1024,

|

| 132 |

+

width=1024,

|

| 133 |

+

guidance_scale=5.0,

|

| 134 |

+

pag_guidance_scale=2.0,

|

| 135 |

+

num_inference_steps=18,

|

| 136 |

+

generator=generator,

|

| 137 |

+

)

|

| 138 |

+

save_image(image, 'output/sana.png', nrow=1, normalize=True, value_range=(-1, 1))

|

| 139 |

+

```

|

| 140 |

+

|

| 141 |

+

## 🔛 Run inference with TXT or JSON files

|

| 142 |

+

|

| 143 |

+

```bash

|

| 144 |

+

# Run samples in a txt file

|

| 145 |

+

python scripts/inference.py \

|

| 146 |

+

--config=configs/sana_config/1024ms/Sana_1600M_img1024.yaml \

|

| 147 |

+

--model_path=hf://Efficient-Large-Model/Sana_1600M_1024px/checkpoints/Sana_1600M_1024px.pth

|

| 148 |

+

--txt_file=asset/samples_mini.txt

|

| 149 |

+

|

| 150 |

+

# Run samples in a json file

|

| 151 |

+

python scripts/inference.py \

|

| 152 |

+

--config=configs/sana_config/1024ms/Sana_1600M_img1024.yaml \

|

| 153 |

+

--model_path=hf://Efficient-Large-Model/Sana_1600M_1024px/checkpoints/Sana_1600M_1024px.pth

|

| 154 |

+

--json_file=asset/samples_mini.json

|

| 155 |

+

```

|

| 156 |

+

|

| 157 |

+

where each line of [`asset/samples_mini.txt`](asset/samples_mini.txt) contains a prompt to generate

|

| 158 |

+

|

| 159 |

+

# 🔥 3. How to Train Sana

|

| 160 |

+

|

| 161 |

+

## 💰Hardware requirement

|

| 162 |

+

|

| 163 |

+

- 32GB VRAM is required for both 0.6B and 1.6B model's training

|

| 164 |

+

|

| 165 |

+

We provide a training example here and you can also select your desired config file from [config files dir](configs/sana_config) based on your data structure.

|

| 166 |

+

|

| 167 |

+

To launch Sana training, you will first need to prepare data in the following formats

|

| 168 |

+

|

| 169 |

+

```bash

|

| 170 |

+

asset/example_data

|

| 171 |

+

├── AAA.txt

|

| 172 |

+

├── AAA.png

|

| 173 |

+

├── BCC.txt

|

| 174 |

+

├── BCC.png

|

| 175 |

+

├── ......

|

| 176 |

+

├── CCC.txt

|

| 177 |

+

└── CCC.png

|

| 178 |

+

```

|

| 179 |

+

|

| 180 |

+

Then Sana's training can be launched via

|

| 181 |

+

|

| 182 |

+

```bash

|

| 183 |

+

# Example of training Sana 0.6B with 512x512 resolution

|

| 184 |

+

bash train_scripts/train.sh \

|

| 185 |

+

configs/sana_config/512ms/Sana_600M_img512.yaml \

|

| 186 |

+

--data.data_dir="[asset/example_data]" \

|

| 187 |

+

--data.type=SanaImgDataset \

|

| 188 |

+

--model.multi_scale=false \

|

| 189 |

+

--train.train_batch_size=32

|

| 190 |

+

|

| 191 |

+

# Example of training Sana 1.6B with 1024x1024 resolution

|

| 192 |

+

bash train_scripts/train.sh \

|

| 193 |

+

configs/sana_config/1024ms/Sana_1600M_img1024.yaml \

|

| 194 |

+

--data.data_dir="[asset/example_data]" \

|

| 195 |

+

--data.type=SanaImgDataset \

|

| 196 |

+

--model.multi_scale=false \

|

| 197 |

+

--train.train_batch_size=8

|

| 198 |

+

```

|

| 199 |

+

|

| 200 |

+

# 💻 4. Metric toolkit

|

| 201 |

+

|

| 202 |

+

Refer to [Toolkit Manual](asset/docs/metrics_toolkit.md).

|

| 203 |

+

|

| 204 |

+

# 💪To-Do List

|

| 205 |

+

|

| 206 |

+

We will try our best to release

|

| 207 |

+

|

| 208 |

+

- \[x\] Training code

|

| 209 |

+

- \[x\] Inference code

|

| 210 |

+

- \[+\] Model zoo

|

| 211 |

+

- \[ \] working on Diffusers(https://github.com/huggingface/diffusers/pull/9982)

|

| 212 |

+

- \[ \] ComfyUI

|

| 213 |

+

- \[ \] Laptop development

|

| 214 |

+

|

| 215 |

+

# 🤗Acknowledgements

|

| 216 |

+

|

| 217 |

+

- Thanks to [PixArt-α](https://github.com/PixArt-alpha/PixArt-alpha), [PixArt-Σ](https://github.com/PixArt-alpha/PixArt-sigma) and [Efficient-ViT](https://github.com/mit-han-lab/efficientvit) for their wonderful work and codebase!

|

| 218 |

+

|

| 219 |

+

# 📖BibTeX

|

| 220 |

+

|

| 221 |

+

```

|

| 222 |

+

@misc{xie2024sana,

|

| 223 |

+

title={Sana: Efficient High-Resolution Image Synthesis with Linear Diffusion Transformer},

|

| 224 |

+

author={Enze Xie and Junsong Chen and Junyu Chen and Han Cai and Haotian Tang and Yujun Lin and Zhekai Zhang and Muyang Li and Ligeng Zhu and Yao Lu and Song Han},

|

| 225 |

+

year={2024},

|

| 226 |

+

eprint={2410.10629},

|

| 227 |

+

archivePrefix={arXiv},

|

| 228 |

+

primaryClass={cs.CV},

|

| 229 |

+

url={https://arxiv.org/abs/2410.10629},

|

| 230 |

+

}

|

| 231 |

+

```

|

app/app_sana.py

ADDED

|

@@ -0,0 +1,488 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|