Spaces: Running on Zero

Commit • e682d93 • 1 Parent(s): f9ebf9e

Track binary files with Git LFS

- .gitattributes +2 -0

- LICENSE +395 -0

- OmniParser +0 -1

- README.md +56 -12

- SECURITY.md +41 -0

- __pycache__/utils.cpython-312.pyc +0 -0

- __pycache__/utils.cpython-39.pyc +0 -0

- config.json +40 -0

- demo.ipynb +0 -0

- gradio_demo.py +108 -0

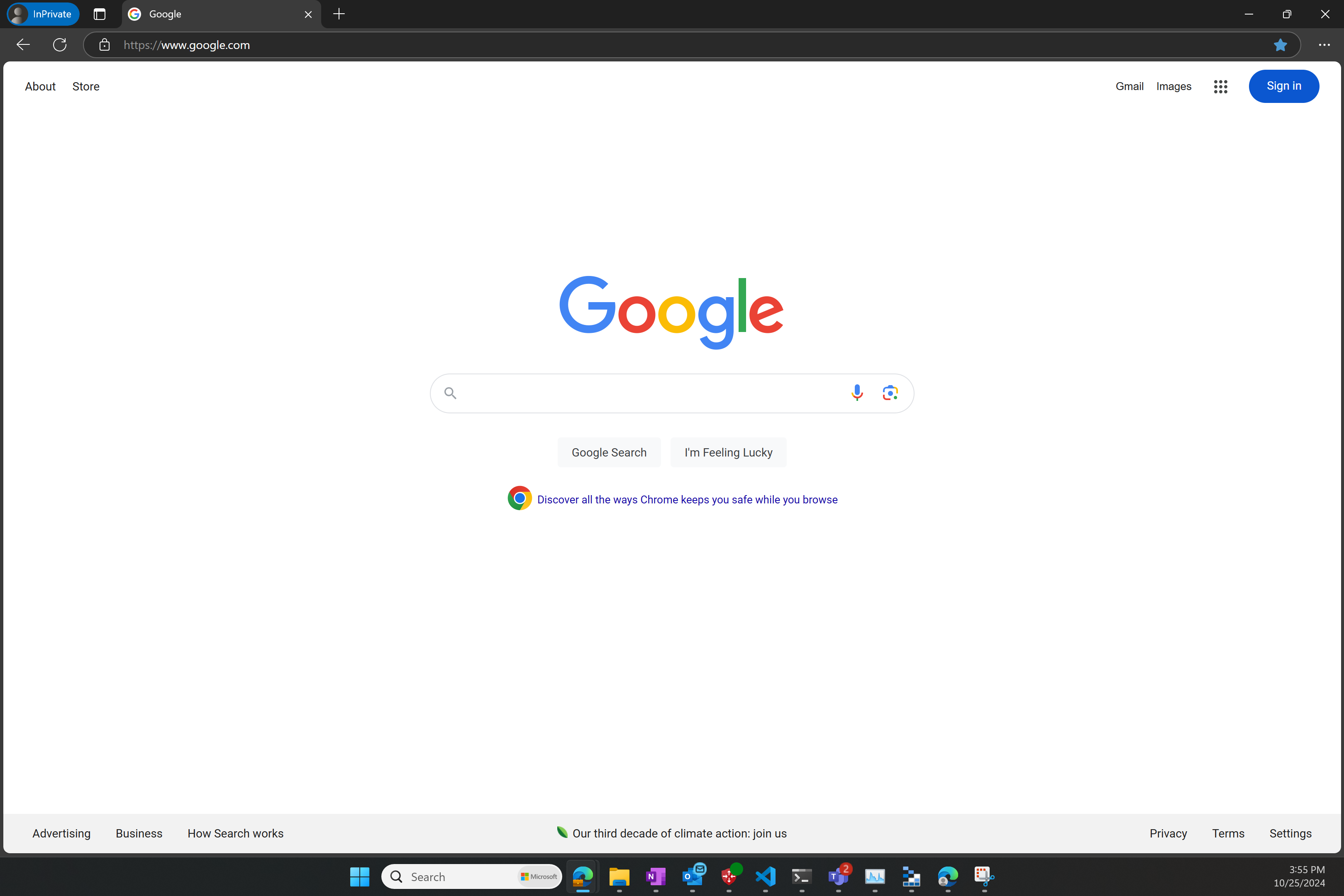

- imgs/google_page.png +0 -0

- imgs/logo.png +0 -0

- imgs/saved_image_demo.png +0 -0

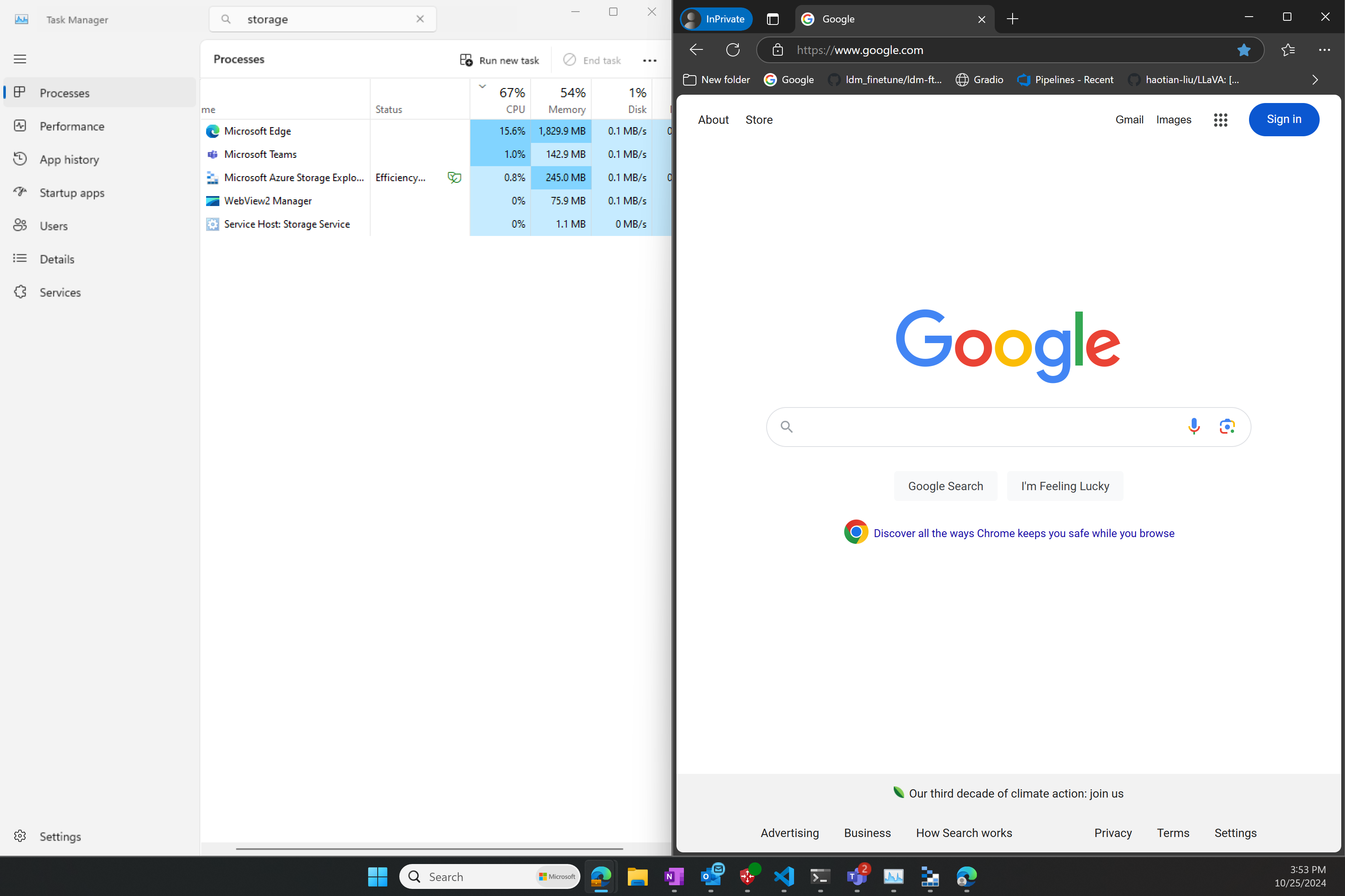

- imgs/windows_home.png +3 -0

- imgs/windows_multitab.png +0 -0

- omniparser.py +60 -0

- pytorch_model-00001-of-00002.bin +3 -0

- requirements.txt +16 -0

- util/__init__.py +0 -0

- util/__pycache__/__init__.cpython-312.pyc +0 -0

- util/__pycache__/__init__.cpython-39.pyc +0 -0

- util/__pycache__/action_matching.cpython-39.pyc +0 -0

- util/__pycache__/box_annotator.cpython-312.pyc +0 -0

- util/__pycache__/box_annotator.cpython-39.pyc +0 -0

- util/action_matching.py +425 -0

- util/action_type.py +45 -0

- util/box_annotator.py +262 -0

- utils.py +403 -0

- weights/README.md +3 -0

- weights/config.json +3 -0

- weights/convert_safetensor_to_pt.py +3 -0

- weights/icon_caption_blip2/LICENSE +21 -0

- weights/icon_caption_blip2/config.json +40 -0

- weights/icon_caption_blip2/generation_config.json +7 -0

- weights/icon_caption_blip2/pytorch_model-00001-of-00002.bin +3 -0

- weights/icon_caption_blip2/pytorch_model-00002-of-00002.bin +3 -0

- weights/icon_caption_blip2/pytorch_model.bin.index.json +0 -0

- weights/icon_caption_florence/LICENSE +21 -0

- weights/icon_caption_florence/config.json +237 -0

- weights/icon_caption_florence/generation_config.json +13 -0

- weights/icon_caption_florence/model.safetensors +3 -0

- weights/icon_detect/LICENSE +661 -0

- weights/icon_detect/model.safetensors +3 -0

- weights/icon_detect/model.yaml +127 -0
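
Several binary files in the list above show +3 -0. Examples include weights/icon_detect/model.safetensors and weights/icon_caption_blip2/pytorch_model-00001-of-00002.bin. That count is consistent with Git LFS pointer files. Instead of the binary, Git stores a three-line text stub holding:

- the spec version,
- a SHA-256 object id,
- the size in bytes.

The sketch below builds and parses such a stub using only the Python standard library. make_lfs_pointer and parse_lfs_pointer are hypothetical helper names, not part of Git LFS. Real pointers may also carry extra keys, which this sketch does not validate.

```python
import hashlib

# Version URL that identifies a Git LFS v1 pointer.
LFS_SPEC = "https://git-lfs.github.com/spec/v1"


def make_lfs_pointer(data: bytes) -> str:
    """Return the three-line pointer text that stands in for `data` in Git."""
    oid = hashlib.sha256(data).hexdigest()
    return f"version {LFS_SPEC}\noid sha256:{oid}\nsize {len(data)}\n"


def parse_lfs_pointer(text: str) -> dict:
    """Split pointer text into key/value pairs; raise ValueError if not v1."""
    fields = {}
    for line in text.splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    if fields.get("version") != LFS_SPEC:
        raise ValueError("not a Git LFS v1 pointer")
    fields["size"] = int(fields["size"])
    return fields


if __name__ == "__main__":
    pointer = make_lfs_pointer(b"fake model weights")
    print(pointer, end="")
    print(parse_lfs_pointer(pointer)["size"])  # 18
```

The diff view counts only the stub's lines, which is why a multi-gigabyte checkpoint can still appear as +3.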

.gitattributes CHANGED
@@ -33,3 +33,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+weights/* filter=lfs diff=lfs merge=lfs -text
+imgs/windows_home.png filter=lfs diff=lfs merge=lfs -text
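
The two added lines send matching paths through the LFS filter. Git evaluates .gitattributes patterns with gitignore-style rules:

- A pattern without a slash matches a file name at any depth.
- A pattern containing a slash is anchored to the directory holding the .gitattributes file.
- `*` does not match `/`.

That fits the file list. weights/README.md and weights/config.json became 3-line pointers, while weights/icon_caption_florence/config.json was committed as a 237-line JSON file.

The following is a deliberately simplified approximation of those rules. It has no `**` support and no attribute macros, and it assumes the .gitattributes file sits at the repository root. matches_attr_pattern is a hypothetical helper, not Git's own matcher.

```python
from fnmatch import fnmatchcase


def matches_attr_pattern(pattern: str, path: str) -> bool:
    """Roughly emulate how a .gitattributes pattern matches a repo-relative path.

    Simplifications (assumptions): no '**' support, no attribute macros, and
    the .gitattributes file is assumed to live at the repository root.
    """
    if "/" not in pattern:
        # No slash: compare against the file name only, at any depth.
        return fnmatchcase(path.rsplit("/", 1)[-1], pattern)
    # Slash present: anchor at the root and match component by component,
    # so '*' can never consume a '/'.
    pat_parts = pattern.lstrip("/").split("/")
    path_parts = path.split("/")
    if len(pat_parts) != len(path_parts):
        return False
    return all(fnmatchcase(part, pat) for part, pat in zip(path_parts, pat_parts))


# The two patterns added by this commit.
NEW_LFS_PATTERNS = ["weights/*", "imgs/windows_home.png"]

if __name__ == "__main__":
    for p in [
        "weights/config.json",
        "weights/icon_caption_florence/config.json",
        "imgs/windows_home.png",
        "imgs/logo.png",
    ]:
        hit = any(matches_attr_pattern(pat, p) for pat in NEW_LFS_PATTERNS)
        print(f"{p}: {'matched by a new LFS pattern' if hit else 'not matched'}")
```

Under these rules, weights/* covers only files directly inside weights/. Files in the nested weights/icon_* folders need other patterns to be stored in LFS.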

LICENSE ADDED
@@ -0,0 +1,395 @@
+Attribution 4.0 International
+
+=======================================================================
+
+Creative Commons Corporation ("Creative Commons") is not a law firm and
+does not provide legal services or legal advice. Distribution of
+Creative Commons public licenses does not create a lawyer-client or
+other relationship. Creative Commons makes its licenses and related
+information available on an "as-is" basis. Creative Commons gives no
+warranties regarding its licenses, any material licensed under their
+terms and conditions, or any related information. Creative Commons
+disclaims all liability for damages resulting from their use to the
+fullest extent possible.
+
+Using Creative Commons Public Licenses
+
+Creative Commons public licenses provide a standard set of terms and
+conditions that creators and other rights holders may use to share
+original works of authorship and other material subject to copyright
+and certain other rights specified in the public license below. The
+following considerations are for informational purposes only, are not
+exhaustive, and do not form part of our licenses.
+
+Considerations for licensors: Our public licenses are
+intended for use by those authorized to give the public
+permission to use material in ways otherwise restricted by
+copyright and certain other rights. Our licenses are
+irrevocable. Licensors should read and understand the terms
+and conditions of the license they choose before applying it.
+Licensors should also secure all rights necessary before
+applying our licenses so that the public can reuse the
+material as expected. Licensors should clearly mark any
+material not subject to the license. This includes other CC-
+licensed material, or material used under an exception or
+limitation to copyright. More considerations for licensors:
+wiki.creativecommons.org/Considerations_for_licensors
+
+Considerations for the public: By using one of our public
+licenses, a licensor grants the public permission to use the
+licensed material under specified terms and conditions. If
+the licensor's permission is not necessary for any reason--for
+example, because of any applicable exception or limitation to
+copyright--then that use is not regulated by the license. Our
+licenses grant only permissions under copyright and certain
+other rights that a licensor has authority to grant. Use of
+the licensed material may still be restricted for other
+reasons, including because others have copyright or other
+rights in the material. A licensor may make special requests,
+such as asking that all changes be marked or described.
+Although not required by our licenses, you are encouraged to
+respect those requests where reasonable. More_considerations
+for the public:
+wiki.creativecommons.org/Considerations_for_licensees
+
+=======================================================================
+
+Creative Commons Attribution 4.0 International Public License
+
+By exercising the Licensed Rights (defined below), You accept and agree
+to be bound by the terms and conditions of this Creative Commons
+Attribution 4.0 International Public License ("Public License"). To the
+extent this Public License may be interpreted as a contract, You are
+granted the Licensed Rights in consideration of Your acceptance of
+these terms and conditions, and the Licensor grants You such rights in
+consideration of benefits the Licensor receives from making the
+Licensed Material available under these terms and conditions.
+
+
+Section 1 -- Definitions.
+
+a. Adapted Material means material subject to Copyright and Similar
+Rights that is derived from or based upon the Licensed Material
+and in which the Licensed Material is translated, altered,
+arranged, transformed, or otherwise modified in a manner requiring
+permission under the Copyright and Similar Rights held by the
+Licensor. For purposes of this Public License, where the Licensed
+Material is a musical work, performance, or sound recording,
+Adapted Material is always produced where the Licensed Material is
+synched in timed relation with a moving image.
+
+b. Adapter's License means the license You apply to Your Copyright
+and Similar Rights in Your contributions to Adapted Material in
+accordance with the terms and conditions of this Public License.
+
+c. Copyright and Similar Rights means copyright and/or similar rights
+closely related to copyright including, without limitation,
+performance, broadcast, sound recording, and Sui Generis Database
+Rights, without regard to how the rights are labeled or
+categorized. For purposes of this Public License, the rights
+specified in Section 2(b)(1)-(2) are not Copyright and Similar
+Rights.
+
+d. Effective Technological Measures means those measures that, in the
+absence of proper authority, may not be circumvented under laws
+fulfilling obligations under Article 11 of the WIPO Copyright
+Treaty adopted on December 20, 1996, and/or similar international
+agreements.
+
+e. Exceptions and Limitations means fair use, fair dealing, and/or
+any other exception or limitation to Copyright and Similar Rights
+that applies to Your use of the Licensed Material.
+
+f. Licensed Material means the artistic or literary work, database,
+or other material to which the Licensor applied this Public
+License.
+
+g. Licensed Rights means the rights granted to You subject to the
+terms and conditions of this Public License, which are limited to
+all Copyright and Similar Rights that apply to Your use of the
+Licensed Material and that the Licensor has authority to license.
+
+h. Licensor means the individual(s) or entity(ies) granting rights
+under this Public License.
+
+i. Share means to provide material to the public by any means or
+process that requires permission under the Licensed Rights, such
+as reproduction, public display, public performance, distribution,
+dissemination, communication, or importation, and to make material
+available to the public including in ways that members of the
+public may access the material from a place and at a time
+individually chosen by them.
+
+j. Sui Generis Database Rights means rights other than copyright
+resulting from Directive 96/9/EC of the European Parliament and of
+the Council of 11 March 1996 on the legal protection of databases,
+as amended and/or succeeded, as well as other essentially
+equivalent rights anywhere in the world.
+
+k. You means the individual or entity exercising the Licensed Rights
+under this Public License. Your has a corresponding meaning.
+
+
+Section 2 -- Scope.
+
+a. License grant.
+
+1. Subject to the terms and conditions of this Public License,
+the Licensor hereby grants You a worldwide, royalty-free,
+non-sublicensable, non-exclusive, irrevocable license to
+exercise the Licensed Rights in the Licensed Material to:
+
+a. reproduce and Share the Licensed Material, in whole or
+in part; and
+
+b. produce, reproduce, and Share Adapted Material.
+
+2. Exceptions and Limitations. For the avoidance of doubt, where
+Exceptions and Limitations apply to Your use, this Public
+License does not apply, and You do not need to comply with
+its terms and conditions.
+
+3. Term. The term of this Public License is specified in Section
+6(a).
+
+4. Media and formats; technical modifications allowed. The
+Licensor authorizes You to exercise the Licensed Rights in
+all media and formats whether now known or hereafter created,
+and to make technical modifications necessary to do so. The
+Licensor waives and/or agrees not to assert any right or
+authority to forbid You from making technical modifications
+necessary to exercise the Licensed Rights, including
+technical modifications necessary to circumvent Effective
+Technological Measures. For purposes of this Public License,
+simply making modifications authorized by this Section 2(a)
+(4) never produces Adapted Material.
+
+5. Downstream recipients.
+
+a. Offer from the Licensor -- Licensed Material. Every
+recipient of the Licensed Material automatically
+receives an offer from the Licensor to exercise the
+Licensed Rights under the terms and conditions of this
+Public License.
+
+b. No downstream restrictions. You may not offer or impose
+any additional or different terms or conditions on, or
+apply any Effective Technological Measures to, the
+Licensed Material if doing so restricts exercise of the
+Licensed Rights by any recipient of the Licensed
+Material.
+
+6. No endorsement. Nothing in this Public License constitutes or
+may be construed as permission to assert or imply that You
+are, or that Your use of the Licensed Material is, connected
+with, or sponsored, endorsed, or granted official status by,
+the Licensor or others designated to receive attribution as
+provided in Section 3(a)(1)(A)(i).
+
+b. Other rights.
+
+1. Moral rights, such as the right of integrity, are not
+licensed under this Public License, nor are publicity,
+privacy, and/or other similar personality rights; however, to
+the extent possible, the Licensor waives and/or agrees not to
+assert any such rights held by the Licensor to the limited
+extent necessary to allow You to exercise the Licensed
+Rights, but not otherwise.
+
+2. Patent and trademark rights are not licensed under this
+Public License.
+
+3. To the extent possible, the Licensor waives any right to
+collect royalties from You for the exercise of the Licensed
+Rights, whether directly or through a collecting society
+under any voluntary or waivable statutory or compulsory
+licensing scheme. In all other cases the Licensor expressly
+reserves any right to collect such royalties.
+
+
+Section 3 -- License Conditions.
+
+Your exercise of the Licensed Rights is expressly made subject to the
+following conditions.
+
+a. Attribution.
+
+1. If You Share the Licensed Material (including in modified
+form), You must:
+
+a. retain the following if it is supplied by the Licensor
+with the Licensed Material:
+
+i. identification of the creator(s) of the Licensed
+Material and any others designated to receive
+attribution, in any reasonable manner requested by
+the Licensor (including by pseudonym if
+designated);
+
+ii. a copyright notice;
+
+iii. a notice that refers to this Public License;
+
+iv. a notice that refers to the disclaimer of
+warranties;
+
+v. a URI or hyperlink to the Licensed Material to the
+extent reasonably practicable;
+
+b. indicate if You modified the Licensed Material and
+retain an indication of any previous modifications; and
+
+c. indicate the Licensed Material is licensed under this
+Public License, and include the text of, or the URI or
+hyperlink to, this Public License.
+
+2. You may satisfy the conditions in Section 3(a)(1) in any
+reasonable manner based on the medium, means, and context in
+which You Share the Licensed Material. For example, it may be
+reasonable to satisfy the conditions by providing a URI or
+hyperlink to a resource that includes the required
+information.
+
+3. If requested by the Licensor, You must remove any of the
+information required by Section 3(a)(1)(A) to the extent
+reasonably practicable.
+
+4. If You Share Adapted Material You produce, the Adapter's
+License You apply must not prevent recipients of the Adapted
+Material from complying with this Public License.
+
+
+Section 4 -- Sui Generis Database Rights.
+
+Where the Licensed Rights include Sui Generis Database Rights that
+apply to Your use of the Licensed Material:
+
+a. for the avoidance of doubt, Section 2(a)(1) grants You the right
+to extract, reuse, reproduce, and Share all or a substantial
+portion of the contents of the database;
+
+b. if You include all or a substantial portion of the database
+contents in a database in which You have Sui Generis Database
+Rights, then the database in which You have Sui Generis Database
+Rights (but not its individual contents) is Adapted Material; and
+
+c. You must comply with the conditions in Section 3(a) if You Share
+all or a substantial portion of the contents of the database.
+
+For the avoidance of doubt, this Section 4 supplements and does not
+replace Your obligations under this Public License where the Licensed
+Rights include other Copyright and Similar Rights.
+
+
+Section 5 -- Disclaimer of Warranties and Limitation of Liability.
+
+a. UNLESS OTHERWISE SEPARATELY UNDERTAKEN BY THE LICENSOR, TO THE
+EXTENT POSSIBLE, THE LICENSOR OFFERS THE LICENSED MATERIAL AS-IS
+AND AS-AVAILABLE, AND MAKES NO REPRESENTATIONS OR WARRANTIES OF
+ANY KIND CONCERNING THE LICENSED MATERIAL, WHETHER EXPRESS,
+IMPLIED, STATUTORY, OR OTHER. THIS INCLUDES, WITHOUT LIMITATION,
+WARRANTIES OF TITLE, MERCHANTABILITY, FITNESS FOR A PARTICULAR
+PURPOSE, NON-INFRINGEMENT, ABSENCE OF LATENT OR OTHER DEFECTS,
+ACCURACY, OR THE PRESENCE OR ABSENCE OF ERRORS, WHETHER OR NOT
+KNOWN OR DISCOVERABLE. WHERE DISCLAIMERS OF WARRANTIES ARE NOT
+ALLOWED IN FULL OR IN PART, THIS DISCLAIMER MAY NOT APPLY TO YOU.
+
+b. TO THE EXTENT POSSIBLE, IN NO EVENT WILL THE LICENSOR BE LIABLE
+TO YOU ON ANY LEGAL THEORY (INCLUDING, WITHOUT LIMITATION,
+NEGLIGENCE) OR OTHERWISE FOR ANY DIRECT, SPECIAL, INDIRECT,
+INCIDENTAL, CONSEQUENTIAL, PUNITIVE, EXEMPLARY, OR OTHER LOSSES,
+COSTS, EXPENSES, OR DAMAGES ARISING OUT OF THIS PUBLIC LICENSE OR
+USE OF THE LICENSED MATERIAL, EVEN IF THE LICENSOR HAS BEEN
+ADVISED OF THE POSSIBILITY OF SUCH LOSSES, COSTS, EXPENSES, OR
+DAMAGES. WHERE A LIMITATION OF LIABILITY IS NOT ALLOWED IN FULL OR
+IN PART, THIS LIMITATION MAY NOT APPLY TO YOU.
+
+c. The disclaimer of warranties and limitation of liability provided
+above shall be interpreted in a manner that, to the extent
+possible, most closely approximates an absolute disclaimer and
+waiver of all liability.
+
+
+Section 6 -- Term and Termination.
+
+a. This Public License applies for the term of the Copyright and
+Similar Rights licensed here. However, if You fail to comply with
+this Public License, then Your rights under this Public License
+terminate automatically.
+
+b. Where Your right to use the Licensed Material has terminated under
+Section 6(a), it reinstates:
+
+1. automatically as of the date the violation is cured, provided
+it is cured within 30 days of Your discovery of the
+violation; or
+
+2. upon express reinstatement by the Licensor.
+
+For the avoidance of doubt, this Section 6(b) does not affect any
+right the Licensor may have to seek remedies for Your violations
+of this Public License.
+
+c. For the avoidance of doubt, the Licensor may also offer the
+Licensed Material under separate terms or conditions or stop
+distributing the Licensed Material at any time; however, doing so
+will not terminate this Public License.
+
+d. Sections 1, 5, 6, 7, and 8 survive termination of this Public
+License.
+
+
+Section 7 -- Other Terms and Conditions.
+
+a. The Licensor shall not be bound by any additional or different
+terms or conditions communicated by You unless expressly agreed.
+
+b. Any arrangements, understandings, or agreements regarding the
+Licensed Material not stated herein are separate from and
+independent of the terms and conditions of this Public License.
+
+
+Section 8 -- Interpretation.
+
+a. For the avoidance of doubt, this Public License does not, and
+shall not be interpreted to, reduce, limit, restrict, or impose
+conditions on any use of the Licensed Material that could lawfully
+be made without permission under this Public License.
+
+b. To the extent possible, if any provision of this Public License is
+deemed unenforceable, it shall be automatically reformed to the
+minimum extent necessary to make it enforceable. If the provision
+cannot be reformed, it shall be severed from this Public License
+without affecting the enforceability of the remaining terms and
+conditions.
+
+c. No term or condition of this Public License will be waived and no
+failure to comply consented to unless expressly agreed to by the
+Licensor.
+
+d. Nothing in this Public License constitutes or may be interpreted
+as a limitation upon, or waiver of, any privileges and immunities
+that apply to the Licensor or You, including from the legal
+processes of any jurisdiction or authority.
+
+
+=======================================================================
+
+Creative Commons is not a party to its public
+licenses. Notwithstanding, Creative Commons may elect to apply one of
+its public licenses to material it publishes and in those instances
+will be considered the “Licensor.” The text of the Creative Commons
+public licenses is dedicated to the public domain under the CC0 Public
+Domain Dedication. Except for the limited purpose of indicating that
+material is shared under a Creative Commons public license or as
+otherwise permitted by the Creative Commons policies published at
+creativecommons.org/policies, Creative Commons does not authorize the
+use of the trademark "Creative Commons" or any other trademark or logo
+of Creative Commons without its prior written consent including,
+without limitation, in connection with any unauthorized modifications
+to any of its public licenses or any other arrangements,
+understandings, or agreements concerning use of licensed material. For
+the avoidance of doubt, this paragraph does not form part of the
+public licenses.
+
+Creative Commons may be contacted at creativecommons.org.

OmniParser DELETED
@@ -1 +0,0 @@
-Subproject commit ba4e04fccb67070c718530e65570703f9f68a347

README.md CHANGED
@@ -1,12 +1,56 @@
-
-
-
-
-
-
-
-
-
-
-
-
| 1 |

+

# OmniParser: Screen Parsing tool for Pure Vision Based GUI Agent

|

| 2 |

+

|

| 3 |

+

<p align="center">

|

| 4 |

+

<img src="imgs/logo.png" alt="Logo">

|

| 5 |

+

</p>

|

| 6 |

+

|

| 7 |

+

[](https://arxiv.org/abs/2408.00203)

|

| 8 |

+

[](https://opensource.org/licenses/MIT)

|

| 9 |

+

|

| 10 |

+

📢 [[Project Page](https://microsoft.github.io/OmniParser/)] [[Blog Post](https://www.microsoft.com/en-us/research/articles/omniparser-for-pure-vision-based-gui-agent/)] [[Models](https://huggingface.co/microsoft/OmniParser)]

|

| 11 |

+

|

| 12 |

+

**OmniParser** is a comprehensive method for parsing user interface screenshots into structured and easy-to-understand elements, which significantly enhances the ability of GPT-4V to generate actions that can be accurately grounded in the corresponding regions of the interface.

|

| 13 |

+

|

| 14 |

+

## News

|

| 15 |

+

- [2024/10] Both Interactive Region Detection Model and Icon functional description model are released! [Hugginface models](https://huggingface.co/microsoft/OmniParser)

|

| 16 |

+

- [2024/09] OmniParser achieves the best performance on [Windows Agent Arena](https://microsoft.github.io/WindowsAgentArena/)!

|

| 17 |

+

|

| 18 |

+

## Install

|

| 19 |

+

Install environment:

|

| 20 |

+

```python

|

| 21 |

+

conda create -n "omni" python==3.12

|

| 22 |

+

conda activate omni

|

| 23 |

+

pip install -r requirements.txt

|

| 24 |

+

```

|

| 25 |

+

|

| 26 |

+

Then download the model ckpts files in: https://huggingface.co/microsoft/OmniParser, and put them under weights/, default folder structure is: weights/icon_detect, weights/icon_caption_florence, weights/icon_caption_blip2.

|

| 27 |

+

|

| 28 |

+

Finally, convert the safetensor to .pt file.

|

| 29 |

+

```python

|

| 30 |

+

python weights/convert_safetensor_to_pt.py

|

| 31 |

+

```

|

| 32 |

+

|

| 33 |

+

## Examples:

|

| 34 |

+

We put together a few simple examples in the demo.ipynb.

## Gradio Demo

To run the Gradio demo:

```bash
python gradio_demo.py
```

## 📚 Citation

Our technical report can be found [here](https://arxiv.org/abs/2408.00203).
If you find our work useful, please consider citing it:

```bibtex
@misc{lu2024omniparserpurevisionbased,
      title={OmniParser for Pure Vision Based GUI Agent},
      author={Yadong Lu and Jianwei Yang and Yelong Shen and Ahmed Awadallah},
      year={2024},
      eprint={2408.00203},
      archivePrefix={arXiv},
      primaryClass={cs.CV},
      url={https://arxiv.org/abs/2408.00203},
}
```

SECURITY.md

ADDED

@@ -0,0 +1,41 @@

<!-- BEGIN MICROSOFT SECURITY.MD V0.0.9 BLOCK -->

## Security

Microsoft takes the security of our software products and services seriously, which includes all source code repositories managed through our GitHub organizations, which include [Microsoft](https://github.com/Microsoft), [Azure](https://github.com/Azure), [DotNet](https://github.com/dotnet), [AspNet](https://github.com/aspnet) and [Xamarin](https://github.com/xamarin).

If you believe you have found a security vulnerability in any Microsoft-owned repository that meets [Microsoft's definition of a security vulnerability](https://aka.ms/security.md/definition), please report it to us as described below.

## Reporting Security Issues

**Please do not report security vulnerabilities through public GitHub issues.**

Instead, please report them to the Microsoft Security Response Center (MSRC) at [https://msrc.microsoft.com/create-report](https://aka.ms/security.md/msrc/create-report).

If you prefer to submit without logging in, send email to [[email protected]](mailto:[email protected]). If possible, encrypt your message with our PGP key; please download it from the [Microsoft Security Response Center PGP Key page](https://aka.ms/security.md/msrc/pgp).

You should receive a response within 24 hours. If for some reason you do not, please follow up via email to ensure we received your original message. Additional information can be found at [microsoft.com/msrc](https://www.microsoft.com/msrc).

Please include the requested information listed below (as much as you can provide) to help us better understand the nature and scope of the possible issue:

* Type of issue (e.g. buffer overflow, SQL injection, cross-site scripting, etc.)
* Full paths of source file(s) related to the manifestation of the issue
* The location of the affected source code (tag/branch/commit or direct URL)
* Any special configuration required to reproduce the issue
* Step-by-step instructions to reproduce the issue
* Proof-of-concept or exploit code (if possible)
* Impact of the issue, including how an attacker might exploit the issue

This information will help us triage your report more quickly.

If you are reporting for a bug bounty, more complete reports can contribute to a higher bounty award. Please visit our [Microsoft Bug Bounty Program](https://aka.ms/security.md/msrc/bounty) page for more details about our active programs.

## Preferred Languages

We prefer all communications to be in English.

## Policy

Microsoft follows the principle of [Coordinated Vulnerability Disclosure](https://aka.ms/security.md/cvd).

<!-- END MICROSOFT SECURITY.MD BLOCK -->

__pycache__/utils.cpython-312.pyc

ADDED

Binary file (22.7 kB).

__pycache__/utils.cpython-39.pyc

ADDED

Binary file (19.7 kB).

config.json

ADDED

@@ -0,0 +1,40 @@

{
  "_name_or_path": "Salesforce/blip2-opt-2.7b",
  "architectures": [
    "Blip2ForConditionalGeneration"
  ],
  "initializer_factor": 1.0,
  "initializer_range": 0.02,
  "model_type": "blip-2",
  "num_query_tokens": 32,
  "qformer_config": {
    "classifier_dropout": null,
    "model_type": "blip_2_qformer"
  },
  "text_config": {
    "_name_or_path": "facebook/opt-2.7b",
    "activation_dropout": 0.0,
    "architectures": [
      "OPTForCausalLM"
    ],
    "eos_token_id": 50118,
    "ffn_dim": 10240,
    "hidden_size": 2560,
    "model_type": "opt",
    "num_attention_heads": 32,
    "num_hidden_layers": 32,
    "prefix": "</s>",
    "torch_dtype": "float16",
    "word_embed_proj_dim": 2560
  },
  "torch_dtype": "bfloat16",
  "transformers_version": "4.40.2",
  "use_decoder_only_language_model": true,
  "vision_config": {
    "dropout": 0.0,
    "initializer_factor": 1.0,
    "model_type": "blip_2_vision_model",
    "num_channels": 3,
    "projection_dim": 512
  }
}
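The `text_config` block fixes the shapes of the OPT-2.7b language model inside this BLIP-2 checkpoint. A small sketch, using only the standard `json` module, that reads those fields and derives the per-head dimension (2560 / 32 = 80) and the feed-forward expansion factor (10240 / 2560 = 4). The helper name is hypothetical, and the embedded JSON is a trimmed copy of the fields above; in practice you would `json.load()` the full `config.json`.

```python
import json
from typing import Any, Dict


def summarize_blip2_config(cfg: Dict[str, Any]) -> Dict[str, Any]:
    """Derive a few shape facts from a BLIP-2 style config dict."""
    text = cfg["text_config"]
    hidden = text["hidden_size"]
    heads = text["num_attention_heads"]
    # Each attention head works on hidden_size / num_attention_heads dimensions.
    if hidden % heads != 0:
        raise ValueError("hidden_size must be divisible by num_attention_heads")
    return {
        "model_type": cfg["model_type"],
        "language_model": text["_name_or_path"],
        "head_dim": hidden // heads,
        "ffn_expansion": text["ffn_dim"] / hidden,
        "num_query_tokens": cfg["num_query_tokens"],
    }


# Trimmed copy of the config.json fields used above.
CONFIG_JSON = """
{
  "model_type": "blip-2",
  "num_query_tokens": 32,
  "text_config": {
    "_name_or_path": "facebook/opt-2.7b",
    "ffn_dim": 10240,
    "hidden_size": 2560,
    "num_attention_heads": 32
  }
}
"""

summary = summarize_blip2_config(json.loads(CONFIG_JSON))
print(summary)
```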

demo.ipynb

ADDED

The diff for this file is too large to render.

gradio_demo.py

ADDED

@@ -0,0 +1,108 @@

import base64
import io
import os
from typing import Tuple

import gradio as gr
import numpy as np
import torch
from PIL import Image

from utils import check_ocr_box, get_yolo_model, get_caption_model_processor, get_som_labeled_img

# Icon detector (YOLO) and Florence-2 icon captioner, loaded once at startup.
yolo_model = get_yolo_model(model_path='weights/icon_detect/best.pt')
caption_model_processor = get_caption_model_processor(model_name="florence2", model_name_or_path="weights/icon_caption_florence")

# Box-drawing style for the annotated output image: 'pc', 'web' or 'mobile'.
platform = 'pc'
if platform == 'pc':
    draw_bbox_config = {
        'text_scale': 0.8,
        'text_thickness': 2,
        'text_padding': 2,
        'thickness': 2,
    }
elif platform == 'web':
    draw_bbox_config = {
        'text_scale': 0.8,
        'text_thickness': 2,
        'text_padding': 3,
        'thickness': 3,
    }
elif platform == 'mobile':
    draw_bbox_config = {
        'text_scale': 0.8,
        'text_thickness': 2,
        'text_padding': 3,
        'thickness': 3,
    }
else:
    raise ValueError(f"unknown platform: {platform}")


MARKDOWN = """
# OmniParser for Pure Vision Based General GUI Agent 🔥
<div>
    <a href="https://arxiv.org/pdf/2408.00203">
        <img src="https://img.shields.io/badge/arXiv-2408.00203-b31b1b.svg" alt="Arxiv" style="display:inline-block;">
    </a>
</div>

OmniParser is a screen parsing tool that converts general GUI screens into structured elements.
"""

# Use the GPU when available, otherwise fall back to CPU.
DEVICE = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# @spaces.GPU
# @torch.inference_mode()
# @torch.autocast(device_type="cuda", dtype=torch.bfloat16)
def process(
    image_input,
    box_threshold,
    iou_threshold
) -> Tuple[Image.Image, str]:
    # The OCR and labeling helpers are called with a file path, so save the upload first.
    image_save_path = 'imgs/saved_image_demo.png'
    image_input.save(image_save_path)

    # OCR pass: yields the recognized texts and their boxes in xyxy format.
    ocr_bbox_rslt, is_goal_filtered = check_ocr_box(image_save_path, display_img=False, output_bb_format='xyxy', goal_filtering=None, easyocr_args={'paragraph': False, 'text_threshold': 0.9})
    text, ocr_bbox = ocr_bbox_rslt

    # Detection + captioning: returns the annotated image (base64), box coordinates
    # (as ratios of the image size) and the list of parsed element descriptions.
    dino_labeled_img, label_coordinates, parsed_content_list = get_som_labeled_img(image_save_path, yolo_model, BOX_TRESHOLD=box_threshold, output_coord_in_ratio=True, ocr_bbox=ocr_bbox, draw_bbox_config=draw_bbox_config, caption_model_processor=caption_model_processor, ocr_text=text, iou_threshold=iou_threshold)
    image = Image.open(io.BytesIO(base64.b64decode(dino_labeled_img)))
    print('finish processing')
    parsed_content_list = '\n'.join(parsed_content_list)
    return image, parsed_content_list


with gr.Blocks() as demo:
    gr.Markdown(MARKDOWN)
    with gr.Row():
        with gr.Column():
            image_input_component = gr.Image(
                type='pil', label='Upload image')
            # set the threshold for removing the bounding boxes with low confidence, default is 0.05
            box_threshold_component = gr.Slider(
                label='Box Threshold', minimum=0.01, maximum=1.0, step=0.01, value=0.05)
            # set the threshold for removing the bounding boxes with large overlap, default is 0.1
            iou_threshold_component = gr.Slider(
                label='IOU Threshold', minimum=0.01, maximum=1.0, step=0.01, value=0.1)
            submit_button_component = gr.Button(
                value='Submit', variant='primary')
        with gr.Column():
            image_output_component = gr.Image(type='pil', label='Image Output')
            text_output_component = gr.Textbox(label='Parsed screen elements', placeholder='Text Output')

    # Event listeners must be registered inside the Blocks context.
    submit_button_component.click(
        fn=process,
        inputs=[
            image_input_component,
            box_threshold_component,
            iou_threshold_component
        ],
        outputs=[image_output_component, text_output_component]
    )

# demo.launch(debug=False, show_error=True, share=True)
demo.launch(share=True, server_port=7861, server_name='0.0.0.0')
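The two sliders are passed to `get_som_labeled_img` in `utils.py`, whose implementation is not part of this excerpt. As a rough illustration of what a confidence threshold and an IoU (overlap) threshold typically do, here is a generic sketch of greedy non-maximum suppression over xyxy boxes, using the slider defaults. It is not OmniParser's own filtering code, and the function names are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), i.e. xyxy


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two xyxy boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_boxes(boxes: List[Box], scores: List[float],
                 box_threshold: float = 0.05,
                 iou_threshold: float = 0.1) -> List[int]:
    """Return indices of boxes kept after confidence and overlap filtering."""
    # 1) Drop low-confidence detections.
    candidates = [i for i, s in enumerate(scores) if s >= box_threshold]
    # 2) Visit survivors from most to least confident; keep a box only if it
    #    does not overlap an already-kept box by more than iou_threshold.
    candidates.sort(key=lambda i: scores[i], reverse=True)
    kept: List[int] = []
    for i in candidates:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in kept):
            kept.append(i)
    return kept


boxes = [(0, 0, 10, 10), (1, 1, 10, 10), (20, 20, 30, 30), (40, 40, 50, 50)]
scores = [0.9, 0.8, 0.7, 0.01]
print(filter_boxes(boxes, scores))  # [0, 2]
```

Box 1 overlaps box 0 with IoU 0.81 and is suppressed, and box 3 falls below the 0.05 confidence cutoff. Raising the IoU threshold keeps more overlapping boxes; raising the box threshold drops more low-confidence ones.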

imgs/google_page.png

ADDED

imgs/logo.png

ADDED

imgs/saved_image_demo.png

ADDED

imgs/windows_home.png

ADDED

imgs/windows_multitab.png

ADDED

omniparser.py

ADDED

@@ -0,0 +1,60 @@

from utils import get_som_labeled_img, check_ocr_box, get_caption_model_processor, get_dino_model, get_yolo_model
import torch
from ultralytics import YOLO
from PIL import Image
from typing import Dict, Tuple, List
import io
import base64


config = {
    'som_model_path': 'finetuned_icon_detect.pt',
    'device': 'cpu',
    'caption_model_path': 'Salesforce/blip2-opt-2.7b',
    'draw_bbox_config': {
        'text_scale': 0.8,
        'text_thickness': 2,
        'text_padding': 3,
        'thickness': 3,
    },
    'BOX_TRESHOLD': 0.05
}


class Omniparser(object):
    def __init__(self, config: Dict):
        self.config = config

        self.som_model = get_yolo_model(model_path=config['som_model_path'])
        # self.caption_model_processor = get_caption_model_processor(config['caption_model_path'], device=config['device'])
        # self.caption_model_processor['model'].to(torch.float32)

    def parse(self, image_path: str):
        print('Parsing image:', image_path)
        ocr_bbox_rslt, is_goal_filtered = check_ocr_box(image_path, display_img=False, output_bb_format='xyxy', goal_filtering=None, easyocr_args={'paragraph': False, 'text_threshold': 0.9})
        text, ocr_bbox = ocr_bbox_rslt

        draw_bbox_config = self.config['draw_bbox_config']
        BOX_TRESHOLD = self.config['BOX_TRESHOLD']
        # Icon captioning is disabled here (caption_model_processor=None, use_local_semantics=False),
        # so detected icons are returned without a text description.
        dino_labeled_img, label_coordinates, parsed_content_list = get_som_labeled_img(image_path, self.som_model, BOX_TRESHOLD=BOX_TRESHOLD, output_coord_in_ratio=False, ocr_bbox=ocr_bbox, draw_bbox_config=draw_bbox_config, caption_model_processor=None, ocr_text=text, use_local_semantics=False)

        image = Image.open(io.BytesIO(base64.b64decode(dino_labeled_img)))
        # Format the output: the first len(parsed_content_list) boxes carry text, the rest are icons.
        # split(': ', 1) keeps any ': ' that appears inside the element text itself.
        return_list = [{'from': 'omniparser', 'shape': {'x': coord[0], 'y': coord[1], 'width': coord[2], 'height': coord[3]},
                        'text': parsed_content_list[i].split(': ', 1)[1], 'type': 'text'}
                       for i, (k, coord) in enumerate(label_coordinates.items()) if i < len(parsed_content_list)]
        return_list.extend(
            [{'from': 'omniparser', 'shape': {'x': coord[0], 'y': coord[1], 'width': coord[2], 'height': coord[3]},
              'text': 'None', 'type': 'icon'}
             for i, (k, coord) in enumerate(label_coordinates.items()) if i >= len(parsed_content_list)]
        )

        return [image, return_list]


if __name__ == '__main__':
    parser = Omniparser(config)
    image_path = 'examples/pc_1.png'

    # time the parser
    import time
    s = time.time()
    image, parsed_content_list = parser.parse(image_path)
    device = config['device']
    print(f'Time taken for Omniparser on {device}:', time.time() - s)
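`Omniparser.parse` returns each element as a dict whose `shape` holds `x`, `y`, `width` and `height`, presumably in pixels since it passes `output_coord_in_ratio=False`. Assuming `x`/`y` is the top-left corner (the key names suggest this, but the excerpt does not confirm it), a downstream agent could turn an element into a click target, or into image-relative ratios, roughly like this. The helper names and the sample element are hypothetical.

```python
from typing import Any, Dict, Tuple


def click_point(element: Dict[str, Any]) -> Tuple[float, float]:
    """Center of an element's box, assuming shape = top-left x/y plus width/height."""
    s = element["shape"]
    return (s["x"] + s["width"] / 2, s["y"] + s["height"] / 2)


def to_ratio(element: Dict[str, Any], img_w: int, img_h: int) -> Dict[str, float]:
    """Express the same box as fractions of the screenshot size."""
    s = element["shape"]
    return {"x": s["x"] / img_w, "y": s["y"] / img_h,
            "width": s["width"] / img_w, "height": s["height"] / img_h}


# A made-up element in the return_list format built by Omniparser.parse().
element = {"from": "omniparser",
           "shape": {"x": 100, "y": 40, "width": 80, "height": 20},
           "text": "Sign in", "type": "text"}
print(click_point(element))          # (140.0, 50.0)
print(to_ratio(element, 1000, 500))  # {'x': 0.1, 'y': 0.08, 'width': 0.08, 'height': 0.04}
```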

pytorch_model-00001-of-00002.bin

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:e948c349fa5dba28e2b5b8706ebaa162c8ff6ecae54e9e49dffc2e668bac04ff
size 650555364
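In the repository this checkpoint is stored as a Git LFS pointer: three `key value` lines giving the spec version, the SHA-256 object id, and the size in bytes (about 650 MB here). A minimal sketch, using only the standard library, that parses such a pointer and checks a downloaded file against it. The helper names are hypothetical, and the file path in the final comment is only an example.

```python
import hashlib
import os
from typing import Dict


def parse_lfs_pointer(text: str) -> Dict[str, str]:
    """Parse a Git LFS pointer file: one 'key value' pair per line."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    return fields


def matches_pointer(path: str, pointer: Dict[str, str], chunk: int = 1 << 20) -> bool:
    """Check a downloaded file against the pointer's size and hashed oid."""
    if os.path.getsize(path) != int(pointer["size"]):
        return False
    algo, _, expected = pointer["oid"].partition(":")  # e.g. "sha256", "<hex>"
    digest = hashlib.new(algo)
    with open(path, "rb") as f:
        # Hash in chunks so multi-hundred-MB checkpoints are not read into memory at once.
        for block in iter(lambda: f.read(chunk), b""):
            digest.update(block)
    return digest.hexdigest() == expected


POINTER = """version https://git-lfs.github.com/spec/v1
oid sha256:e948c349fa5dba28e2b5b8706ebaa162c8ff6ecae54e9e49dffc2e668bac04ff
size 650555364
"""
pointer = parse_lfs_pointer(POINTER)
print(pointer["size"])  # 650555364
# matches_pointer("pytorch_model-00001-of-00002.bin", pointer) should be True for an intact download.
```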

requirements.txt

ADDED

@@ -0,0 +1,16 @@

torch
easyocr
torchvision
supervision==0.18.0
openai==1.3.5
transformers
ultralytics==8.1.24
azure-identity
numpy
opencv-python
opencv-python-headless
gradio
dill
accelerate
timm
einops==0.8.0
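Only four packages in this file are pinned with `==`. A small sketch, using the standard `importlib.metadata` module, that parses lines in this simple form and reports pinned packages that are missing or installed at a different version. It deliberately handles only bare names and exact `==` pins, as used here; it is not a full PEP 508 parser (the `packaging` library covers that), and the helper names are hypothetical.

```python
from importlib import metadata
from typing import Dict, List, Optional, Tuple


def parse_requirements(lines: List[str]) -> Dict[str, Optional[str]]:
    """Map package name -> exact '==' pin, or None if the line is unpinned."""
    reqs: Dict[str, Optional[str]] = {}
    for raw in lines:
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        name, sep, version = line.partition("==")
        reqs[name.strip()] = version.strip() if sep else None
    return reqs


def check_installed(reqs: Dict[str, Optional[str]]) -> List[Tuple[str, str]]:
    """List (package, problem) pairs for missing or mismatched packages."""
    problems = []
    for name, pinned in reqs.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            problems.append((name, "not installed"))
            continue
        if pinned is not None and installed != pinned:
            problems.append((name, f"installed {installed}, pinned {pinned}"))
    return problems


REQUIREMENTS = """torch
supervision==0.18.0
ultralytics==8.1.24
einops==0.8.0
""".splitlines()

reqs = parse_requirements(REQUIREMENTS)
print(reqs)  # {'torch': None, 'supervision': '0.18.0', 'ultralytics': '8.1.24', 'einops': '0.8.0'}
for name, problem in check_installed(reqs):
    print(f"{name}: {problem}")
```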

util/__init__.py

ADDED

File without changes

util/__pycache__/__init__.cpython-312.pyc

ADDED

Binary file (139 Bytes).

util/__pycache__/__init__.cpython-39.pyc

ADDED

Binary file (141 Bytes).

util/__pycache__/action_matching.cpython-39.pyc

ADDED

Binary file (8.49 kB).

util/__pycache__/box_annotator.cpython-312.pyc

ADDED

Binary file (9.79 kB).

util/__pycache__/box_annotator.cpython-39.pyc

ADDED

Binary file (6.57 kB).

util/action_matching.py

ADDED

@@ -0,0 +1,425 @@