Add NVLM

- pages/28_NVEagle.py +2 -2

- pages/29_NVLM.py +167 -0

- pages/NVLM/image_1.png +0 -0

- pages/NVLM/image_2.png +0 -0

- pages/NVLM/image_3.png +0 -0

- pages/NVLM/image_4.png +0 -0

pages/28_NVEagle.py

CHANGED

|

@@ -159,7 +159,7 @@ with col2:

|

|

| 159 |

with col3:

|

| 160 |

if lang == "en":

|

| 161 |

if st.button("Next paper", use_container_width=True):

|

| 162 |

-

switch_page("

|

| 163 |

else:

|

| 164 |

if st.button("Papier suivant", use_container_width=True):

|

| 165 |

-

switch_page("

|

|

|

|

| 159 |

with col3:

|

| 160 |

if lang == "en":

|

| 161 |

if st.button("Next paper", use_container_width=True):

|

| 162 |

+

switch_page("NVLM")

|

| 163 |

else:

|

| 164 |

if st.button("Papier suivant", use_container_width=True):

|

| 165 |

+

switch_page("NVLM")

|

pages/29_NVLM.py

ADDED

|

@@ -0,0 +1,167 @@

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

from streamlit_extras.switch_page_button import switch_page

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

translations = {

|

| 6 |

+

'en': {'title': 'NVLM',

|

| 7 |

+

'original_tweet':

|

| 8 |

+

"""

|

| 9 |

+

[Original tweet](https://x.com/mervenoyann/status/1841098941900767323) (October 1st, 2024)

|

| 10 |

+

""",

|

| 11 |

+

'tweet_1':

|

| 12 |

+

"""

|

| 13 |

+

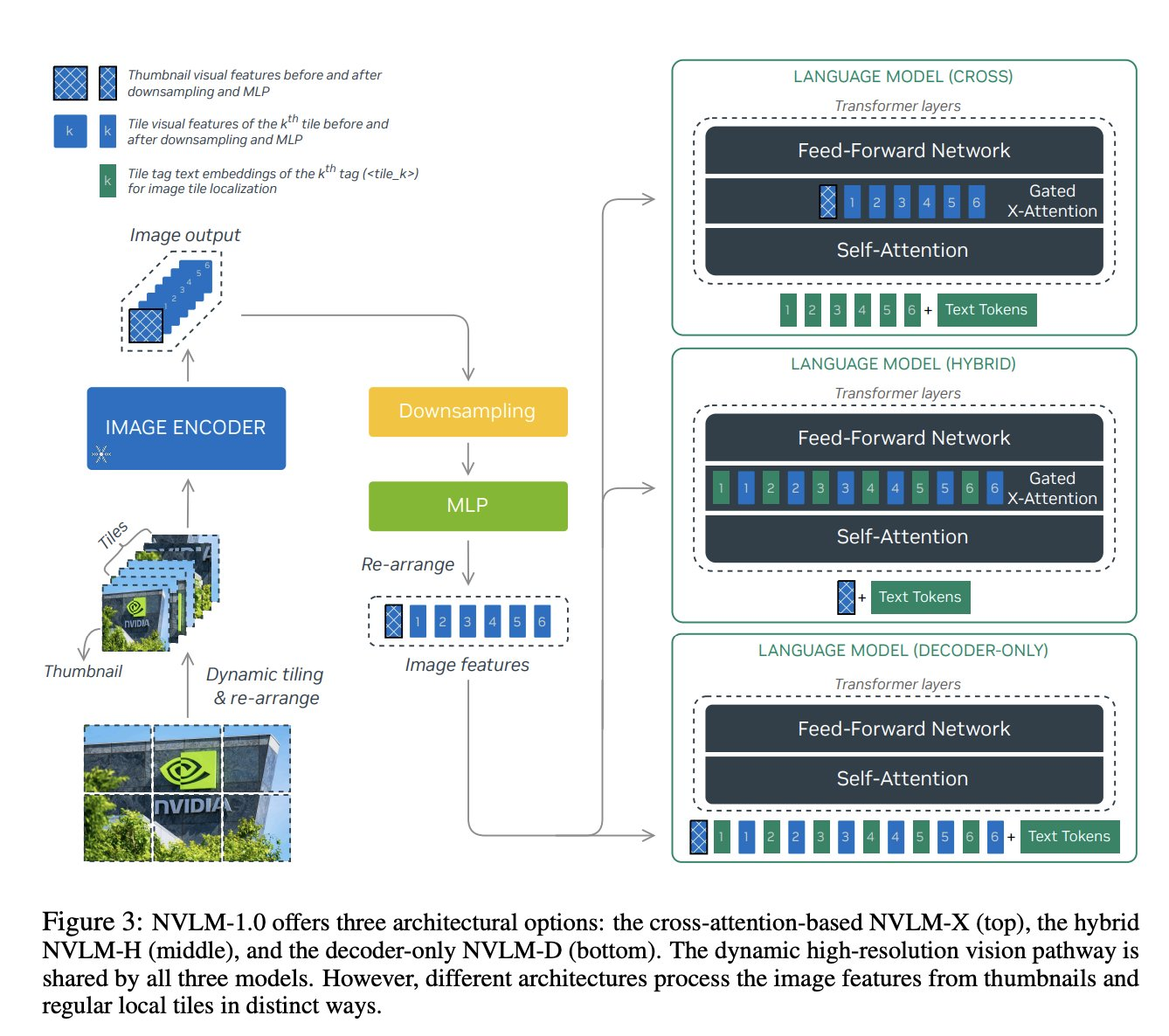

NVIDIA just dropped a gigantic multimodal model called NVLM 72B 🦖

|

| 14 |

+

Explaining everything I got from reading the paper here 📝

|

| 15 |

+

""",

|

| 16 |

+

'tweet_2':

|

| 17 |

+

"""

|

| 18 |

+

The paper contains many ablation studies on various ways to use the LLM backbone 👇🏻

|

| 19 |

+

|

| 20 |

+

🦩 Flamingo-like cross-attention (NVLM-X)

|

| 21 |

+

🌋 LLaVA-like concatenation of image and text embeddings to a decoder-only model (NVLM-D)

|

| 22 |

+

✨ a hybrid architecture (NVLM-H)

|

| 23 |

+

""",

|

| 24 |

+

'tweet_3':

|

| 25 |

+

"""

|

| 26 |

+

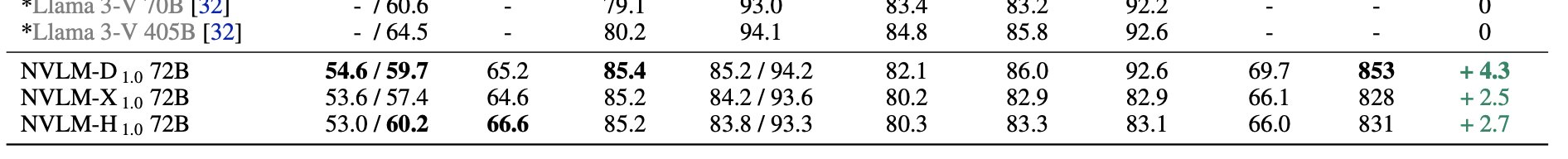

Checking evaluations, NVLM-D and NVLM-H are best or second best compared to other models 👏

|

| 27 |

+

|

| 28 |

+

The released model is NVLM-D based on Qwen-2 Instruct, aligned with InternViT-6B using a huge mixture of different datasets

|

| 29 |

+

""",

|

| 30 |

+

'tweet_4':

|

| 31 |

+

"""

|

| 32 |

+

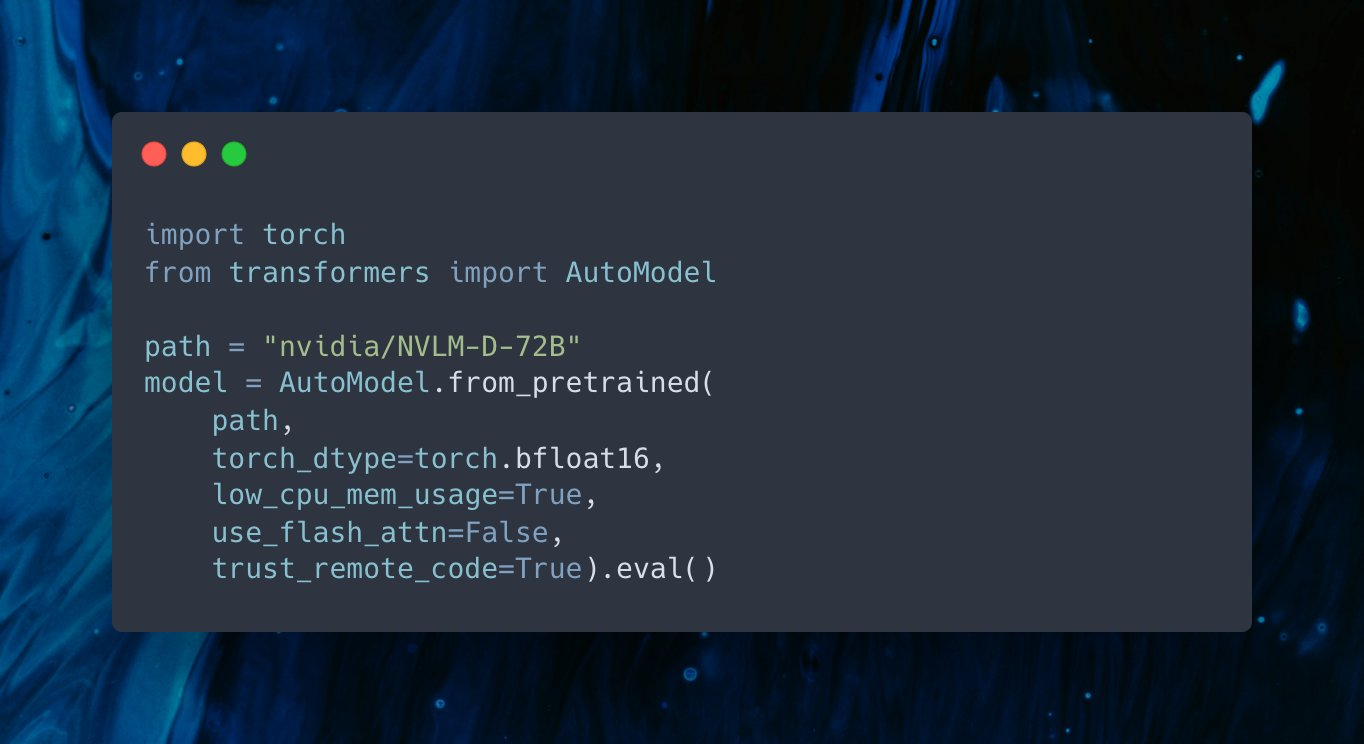

You can easily use this model by loading it through 🤗 Transformers' AutoModel 😍

|

| 33 |

+

""",

|

| 34 |

+

'ressources':

|

| 35 |

+

"""

|

| 36 |

+

Resources:

|

| 37 |

+

[NVLM: Open Frontier-Class Multimodal LLMs](https://arxiv.org/abs/2409.11402)

|

| 38 |

+

by Wenliang Dai, Nayeon Lee, Boxin Wang, Zhuoling Yang, Zihan Liu, Jon Barker, Tuomas Rintamaki, Mohammad Shoeybi, Bryan Catanzaro, Wei Ping (2024)

|

| 39 |

+

[GitHub](https://nvlm-project.github.io/)

|

| 40 |

+

[Model](https://huggingface.co/nvidia/NVLM-D-72B)

|

| 41 |

+

"""

|

| 42 |

+

},

|

| 43 |

+

'fr': {

|

| 44 |

+

'title': 'NVLM',

|

| 45 |

+

'original_tweet':

|

| 46 |

+

"""

|

| 47 |

+

[Tweet de base](https://x.com/mervenoyann/status/1841098941900767323) (en anglais) (1er octobre 2024)

|

| 48 |

+

""",

|

| 49 |

+

'tweet_1':

|

| 50 |

+

"""

|

| 51 |

+

NVIDIA vient de publier un gigantesque modèle multimodal appelé NVLM 72B 🦖

|

| 52 |

+

J'explique tout ce que j'ai compris suite à la lecture du papier 📝

|

| 53 |

+

""",

|

| 54 |

+

'tweet_2':

|

| 55 |

+

"""

|

| 56 |

+

L'article contient de nombreuses études d'ablation sur les différentes façons d'utiliser le backbone 👇🏻

|

| 57 |

+

|

| 58 |

+

🦩 Attention croisée de type Flamingo (NVLM-X)

|

| 59 |

+

🌋 concaténation de type LLaVA d'embeddings d'images et de textes à un décodeur (NVLM-D)

|

| 60 |

+

✨ une architecture hybride (NVLM-H)

|

| 61 |

+

""",

|

| 62 |

+

'tweet_3':

|

| 63 |

+

"""

|

| 64 |

+

En vérifiant les évaluations, NVLM-D et NVLM-H sont les meilleurs ou les deuxièmes par rapport aux autres modèles 👏

|

| 65 |

+

|

| 66 |

+

Le modèle publié est NVLM-D basé sur Qwen-2 Instruct, aligné avec InternViT-6B en utilisant un énorme mélange de différents jeux de données.

|

| 67 |

+

""",

|

| 68 |

+

'tweet_4':

|

| 69 |

+

"""

|

| 70 |

+

Vous pouvez facilement utiliser ce modèle en le chargeant via AutoModel de 🤗 Transformers 😍

|

| 71 |

+

""",

|

| 72 |

+

'ressources':

|

| 73 |

+

"""

|

| 74 |

+

Ressources :

|

| 75 |

+

[NVLM: Open Frontier-Class Multimodal LLMs](https://arxiv.org/abs/2409.11402)

|

| 76 |

+

de Wenliang Dai, Nayeon Lee, Boxin Wang, Zhuoling Yang, Zihan Liu, Jon Barker, Tuomas Rintamaki, Mohammad Shoeybi, Bryan Catanzaro, Wei Ping (2024)

|

| 77 |

+

[GitHub](https://nvlm-project.github.io/)

|

| 78 |

+

[Modèle](https://huggingface.co/nvidia/NVLM-D-72B)

|

| 79 |

+

"""

|

| 80 |

+

}

|

| 81 |

+

}

|

| 82 |

+

|

| 83 |

+

|

| 84 |

+

def language_selector():

|

| 85 |

+

languages = {'EN': '🇬🇧', 'FR': '🇫🇷'}

|

| 86 |

+

selected_lang = st.selectbox('Language', options=list(languages.keys()), format_func=lambda x: languages[x], key='lang_selector', label_visibility='collapsed')

|

| 87 |

+

return 'en' if selected_lang == 'EN' else 'fr'

|

| 88 |

+

|

| 89 |

+

left_column, right_column = st.columns([5, 1])

|

| 90 |

+

|

| 91 |

+

# Add a selector to the right column

|

| 92 |

+

with right_column:

|

| 93 |

+

lang = language_selector()

|

| 94 |

+

|

| 95 |

+

# Add a title to the left column

|

| 96 |

+

with left_column:

|

| 97 |

+

st.title(translations[lang]["title"])

|

| 98 |

+

|

| 99 |

+

st.success(translations[lang]["original_tweet"], icon="ℹ️")

|

| 100 |

+

st.markdown(""" """)

|

| 101 |

+

|

| 102 |

+

st.markdown(translations[lang]["tweet_1"], unsafe_allow_html=True)

|

| 103 |

+

st.markdown(""" """)

|

| 104 |

+

|

| 105 |

+

st.image("pages/NVLM/image_1.png", use_column_width=True)

|

| 106 |

+

st.markdown(""" """)

|

| 107 |

+

|

| 108 |

+

st.markdown(translations[lang]["tweet_2"], unsafe_allow_html=True)

|

| 109 |

+

st.markdown(""" """)

|

| 110 |

+

|

| 111 |

+

st.image("pages/NVLM/image_2.png", use_column_width=True)

|

| 112 |

+

st.markdown(""" """)

|

| 113 |

+

|

| 114 |

+

st.markdown(translations[lang]["tweet_3"], unsafe_allow_html=True)

|

| 115 |

+

st.markdown(""" """)

|

| 116 |

+

|

| 117 |

+

st.image("pages/NVLM/image_3.png", use_column_width=True)

|

| 118 |

+

st.markdown(""" """)

|

| 119 |

+

|

| 120 |

+

st.markdown(translations[lang]["tweet_4"], unsafe_allow_html=True)

|

| 121 |

+

st.markdown(""" """)

|

| 122 |

+

|

| 123 |

+

st.image("pages/NVLM/image_4.png", use_column_width=True)

|

| 124 |

+

st.markdown(""" """)

|

| 125 |

+

|

| 126 |

+

with st.expander("Code"):

|

| 127 |

+

st.code("""

|

| 128 |

+

import torch

|

| 129 |

+

from transformers import AutoModel

|

| 130 |

+

|

| 131 |

+

path = "nvidia/NVLM-D-72B"

|

| 132 |

+

|

| 133 |

+

model = AutoModel.from_pretrained(

|

| 134 |

+

path,

|

| 135 |

+

torch_dtype=torch.bfloat16,

|

| 136 |

+

low_cpu_mem_usage=True,

|

| 137 |

+

use_flash_attn=False,

|

| 138 |

+

trust_remote_code=True).eval()

|

| 139 |

+

""")

|

| 140 |

+

|

| 141 |

+

st.info(translations[lang]["ressources"], icon="📚")

|

| 142 |

+

|

| 143 |

+

st.markdown(""" """)

|

| 144 |

+

st.markdown(""" """)

|

| 145 |

+

st.markdown(""" """)

|

| 146 |

+

col1, col2, col3 = st.columns(3)

|

| 147 |

+

with col1:

|

| 148 |

+

if lang == "en":

|

| 149 |

+

if st.button('Previous paper', use_container_width=True):

|

| 150 |

+

switch_page("NVEagle")

|

| 151 |

+

else:

|

| 152 |

+

if st.button('Papier précédent', use_container_width=True):

|

| 153 |

+

switch_page("NVEagle")

|

| 154 |

+

with col2:

|

| 155 |

+

if lang == "en":

|

| 156 |

+

if st.button("Home", use_container_width=True):

|

| 157 |

+

switch_page("Home")

|

| 158 |

+

else:

|

| 159 |

+

if st.button("Accueil", use_container_width=True):

|

| 160 |

+

switch_page("Home")

|

| 161 |

+

with col3:

|

| 162 |

+

if lang == "en":

|

| 163 |

+

if st.button("Next paper", use_container_width=True):

|

| 164 |

+

switch_page("Home")

|

| 165 |

+

else:

|

| 166 |

+

if st.button("Papier suivant", use_container_width=True):

|

| 167 |

+

switch_page("Home")

|
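
The `tweet_2` text in pages/29_NVLM.py names two ways of feeding image features to the LLM backbone: LLaVA-like concatenation (NVLM-D) and Flamingo-like cross-attention (NVLM-X). The toy sketch below only illustrates the difference in sequence shape between the two. It is not the NVLM implementation: the function names and the 2-d embeddings are hypothetical, and the learned projections and gating that real cross-attention layers use are left out. The hybrid variant (NVLM-H) is not modelled.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def decoder_only_input(image_tokens, text_tokens):
    """Concatenation style (hypothetical helper): image embeddings are placed
    in front of the text embeddings, so the decoder sees one longer sequence."""
    return image_tokens + text_tokens


def cross_attend(text_tokens, image_tokens):
    """Cross-attention style (hypothetical helper): each text token attends
    over the image tokens, so the sequence keeps the text length."""
    dim = len(text_tokens[0])
    fused = []
    for query in text_tokens:
        # Scaled dot-product attention weights over the image tokens.
        weights = softmax(
            [dot(query, key) / math.sqrt(dim) for key in image_tokens]
        )
        # Weighted sum of image tokens, one value per embedding dimension.
        context = [
            sum(w * tok[i] for w, tok in zip(weights, image_tokens))
            for i in range(dim)
        ]
        # Residual connection: text embedding plus attended image context.
        fused.append([q + c for q, c in zip(query, context)])
    return fused


# Toy data, made up for illustration only.
image_tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
text_tokens = [[0.5, 0.5], [1.0, 0.0]]

concat_seq = decoder_only_input(image_tokens, text_tokens)
cross_seq = cross_attend(text_tokens, image_tokens)
print(len(concat_seq), len(cross_seq))  # prints "5 2"
```

With concatenation the sequence grows by the number of image tokens, while with cross-attention it stays at the text length and the image information arrives through the attention context.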

pages/NVLM/image_1.png

ADDED

|

pages/NVLM/image_2.png

ADDED

|

pages/NVLM/image_3.png

ADDED

|

pages/NVLM/image_4.png

ADDED

|