+🤗 Diffusers is the go-to library for state-of-the-art pretrained diffusion models for generating images, audio, and even 3D structures of molecules. Whether you're looking for a simple inference solution or training your own diffusion models, 🤗 Diffusers is a modular toolbox that supports both. Our library is designed with a focus on [usability over performance](https://huggingface.co/docs/diffusers/conceptual/philosophy#usability-over-performance), [simple over easy](https://huggingface.co/docs/diffusers/conceptual/philosophy#simple-over-easy), and [customizability over abstractions](https://huggingface.co/docs/diffusers/conceptual/philosophy#tweakable-contributorfriendly-over-abstraction).
+
+🤗 Diffusers offers three core components:
+
+- State-of-the-art [diffusion pipelines](https://huggingface.co/docs/diffusers/api/pipelines/overview) that can run inference with just a few lines of code.
+- Interchangeable noise [schedulers](https://huggingface.co/docs/diffusers/api/schedulers/overview) for different diffusion speeds and output quality.
+- Pretrained [models](https://huggingface.co/docs/diffusers/api/models) that can be used as building blocks, and combined with schedulers, for creating your own end-to-end diffusion systems.
+
+## Installation
+
+We recommend installing 🤗 Diffusers in a virtual environment from PyPI or Conda. For more details about installing [PyTorch](https://pytorch.org/get-started/locally/) and [Flax](https://flax.readthedocs.io/en/latest/#installation), please refer to their official documentation.
+
+### PyTorch
+
+With `pip` (official package):
+
+```bash
+pip install --upgrade diffusers[torch]
+```
+
+With `conda` (maintained by the community):
+
+```sh
+conda install -c conda-forge diffusers
+```
+
+### Flax
+
+With `pip` (official package):
+
+```bash
+pip install --upgrade diffusers[flax]
+```
+
+### Apple Silicon (M1/M2) support
+
+Please refer to the [How to use Stable Diffusion in Apple Silicon](https://huggingface.co/docs/diffusers/optimization/mps) guide.
+
+## Quickstart
+
+Generating outputs is super easy with 🤗 Diffusers. To generate an image from text, use the `from_pretrained` method to load any pretrained diffusion model (browse the [Hub](https://huggingface.co/models?library=diffusers&sort=downloads) for 4000+ checkpoints):
+
+```python
+from diffusers import DiffusionPipeline
+import torch
+
+pipeline = DiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16)
+pipeline.to("cuda")
+pipeline("An image of a squirrel in Picasso style").images[0]
+```
+
+You can also dig into the models and schedulers toolbox to build your own diffusion system:
+
+```python
+from diffusers import DDPMScheduler, UNet2DModel
+from PIL import Image
+import torch
+import numpy as np
+
+scheduler = DDPMScheduler.from_pretrained("google/ddpm-cat-256")
+model = UNet2DModel.from_pretrained("google/ddpm-cat-256").to("cuda")
+scheduler.set_timesteps(50)
+
+sample_size = model.config.sample_size
+noise = torch.randn((1, 3, sample_size, sample_size)).to("cuda")
+input = noise
+
+for t in scheduler.timesteps:
+    with torch.no_grad():
+        noisy_residual = model(input, t).sample
+    prev_noisy_sample = scheduler.step(noisy_residual, t, input).prev_sample
+    input = prev_noisy_sample
+
+image = (input / 2 + 0.5).clamp(0, 1)
+image = image.cpu().permute(0, 2, 3, 1).numpy()[0]
+image = Image.fromarray((image * 255).round().astype("uint8"))
+image
+```
+
+Check out the [Quickstart](https://huggingface.co/docs/diffusers/quicktour) to launch your diffusion journey today!
+
+## How to navigate the documentation
+
+| **Documentation** | **What can I learn?** |
+|---|---|
+| [Tutorial](https://huggingface.co/docs/diffusers/tutorials/tutorial_overview) | A basic crash course for learning how to use the library's most important features like using models and schedulers to build your own diffusion system, and training your own diffusion model. |
+| [Loading](https://huggingface.co/docs/diffusers/using-diffusers/loading_overview) | Guides for how to load and configure all the components (pipelines, models, and schedulers) of the library, as well as how to use different schedulers. |
+| [Pipelines for inference](https://huggingface.co/docs/diffusers/using-diffusers/pipeline_overview) | Guides for how to use pipelines for different inference tasks, batched generation, controlling generated outputs and randomness, and how to contribute a pipeline to the library. |
+| [Optimization](https://huggingface.co/docs/diffusers/optimization/opt_overview) | Guides for how to optimize your diffusion model to run faster and consume less memory. |
+| [Training](https://huggingface.co/docs/diffusers/training/overview) | Guides for how to train a diffusion model for different tasks with different training techniques. |
+
+## Contribution
+
+We ❤️ contributions from the open-source community!
+If you want to contribute to this library, please check out our [Contribution guide](https://github.com/huggingface/diffusers/blob/main/CONTRIBUTING.md).
+You can look out for [issues](https://github.com/huggingface/diffusers/issues) you'd like to tackle to contribute to the library.
+
+- See [Good first issues](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22good+first+issue%22) for general opportunities to contribute
+- See [New model/pipeline](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+pipeline%2Fmodel%22) to contribute exciting new diffusion models / diffusion pipelines
+- See [New scheduler](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+scheduler%22)
+
+Also, say 👋 in our public Discord channel. We discuss the hottest trends about diffusion models, help each other with contributions and personal projects, or just hang out ☕.

+

+

+## Popular Tasks & Pipelines

+

+


+*Trained with spatial color palette* | A image with 8x8 color palette.|

|

| |

+|[TencentARC/t2iadapter_canny_sd14v1](https://huggingface.co/TencentARC/t2iadapter_canny_sd14v1)

|

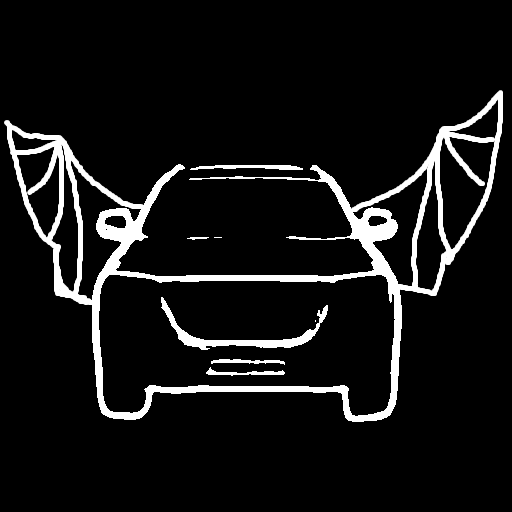

+|[TencentARC/t2iadapter_canny_sd14v1](https://huggingface.co/TencentARC/t2iadapter_canny_sd14v1)*Trained with canny edge detection* | A monochrome image with white edges on a black background.|

|

| |

+|[TencentARC/t2iadapter_sketch_sd14v1](https://huggingface.co/TencentARC/t2iadapter_sketch_sd14v1)

|

+|[TencentARC/t2iadapter_sketch_sd14v1](https://huggingface.co/TencentARC/t2iadapter_sketch_sd14v1)*Trained with [PidiNet](https://github.com/zhuoinoulu/pidinet) edge detection* | A hand-drawn monochrome image with white outlines on a black background.|

|

| |

+|[TencentARC/t2iadapter_depth_sd14v1](https://huggingface.co/TencentARC/t2iadapter_depth_sd14v1)

|

+|[TencentARC/t2iadapter_depth_sd14v1](https://huggingface.co/TencentARC/t2iadapter_depth_sd14v1)*Trained with Midas depth estimation* | A grayscale image with black representing deep areas and white representing shallow areas.|

|

| |

+|[TencentARC/t2iadapter_openpose_sd14v1](https://huggingface.co/TencentARC/t2iadapter_openpose_sd14v1)

|

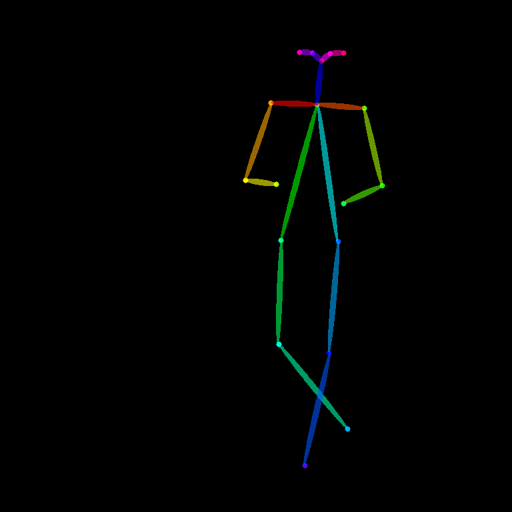

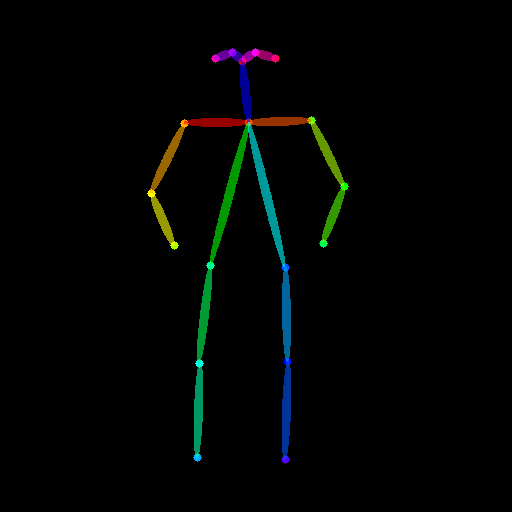

+|[TencentARC/t2iadapter_openpose_sd14v1](https://huggingface.co/TencentARC/t2iadapter_openpose_sd14v1)*Trained with OpenPose bone image* | A [OpenPose bone](https://github.com/CMU-Perceptual-Computing-Lab/openpose) image.|

|

| |

+|[TencentARC/t2iadapter_keypose_sd14v1](https://huggingface.co/TencentARC/t2iadapter_keypose_sd14v1)

|

+|[TencentARC/t2iadapter_keypose_sd14v1](https://huggingface.co/TencentARC/t2iadapter_keypose_sd14v1)*Trained with mmpose skeleton image* | A [mmpose skeleton](https://github.com/open-mmlab/mmpose) image.|

|

| |

+|[TencentARC/t2iadapter_seg_sd14v1](https://huggingface.co/TencentARC/t2iadapter_seg_sd14v1)

|

+|[TencentARC/t2iadapter_seg_sd14v1](https://huggingface.co/TencentARC/t2iadapter_seg_sd14v1)*Trained with semantic segmentation* | An [custom](https://github.com/TencentARC/T2I-Adapter/discussions/25) segmentation protocol image.|

|

| |

+|[TencentARC/t2iadapter_canny_sd15v2](https://huggingface.co/TencentARC/t2iadapter_canny_sd15v2)||

+|[TencentARC/t2iadapter_depth_sd15v2](https://huggingface.co/TencentARC/t2iadapter_depth_sd15v2)||

+|[TencentARC/t2iadapter_sketch_sd15v2](https://huggingface.co/TencentARC/t2iadapter_sketch_sd15v2)||

+|[TencentARC/t2iadapter_zoedepth_sd15v1](https://huggingface.co/TencentARC/t2iadapter_zoedepth_sd15v1)||

+|[Adapter/t2iadapter, subfolder='sketch_sdxl_1.0'](https://huggingface.co/Adapter/t2iadapter/tree/main/sketch_sdxl_1.0)||

+|[Adapter/t2iadapter, subfolder='canny_sdxl_1.0'](https://huggingface.co/Adapter/t2iadapter/tree/main/canny_sdxl_1.0)||

+|[Adapter/t2iadapter, subfolder='openpose_sdxl_1.0'](https://huggingface.co/Adapter/t2iadapter/tree/main/openpose_sdxl_1.0)||

+

+## Combining multiple adapters

+

+[`MultiAdapter`] can be used for applying multiple conditionings at once.

+

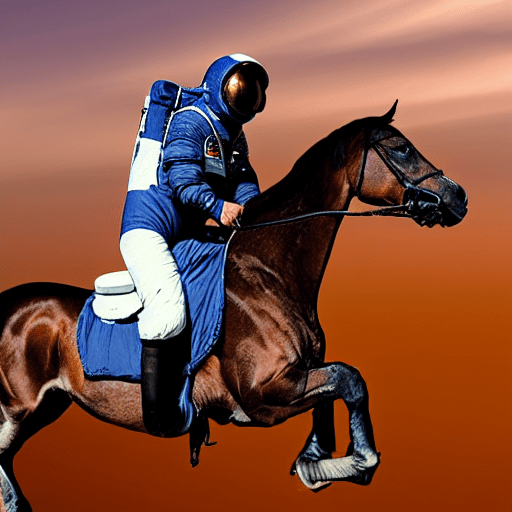

+Here we use the keypose adapter for the character posture and the depth adapter for creating the scene.

+

+```py
+import torch
+from diffusers.utils import load_image
+
+cond_keypose = load_image(
+    "https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/keypose_sample_input.png"
+)
+cond_depth = load_image(
+    "https://huggingface.co/datasets/diffusers/docs-images/resolve/main/t2i-adapter/depth_sample_input.png"
+)
+cond = [[cond_keypose, cond_depth]]
+
+prompt = ["A man walking in an office room with a nice view"]
+```

+

+The two control images look as such:

+

+

+

+

+

+`MultiAdapter` combines keypose and depth adapters.

+

+`adapter_conditioning_scale` balances the relative influence of the different adapters.

+

+```py
+from diffusers import MultiAdapter, StableDiffusionAdapterPipeline, T2IAdapter
+
+# One adapter per condition; MultiAdapter runs them together
+adapters = MultiAdapter(
+    [
+        T2IAdapter.from_pretrained("TencentARC/t2iadapter_keypose_sd14v1"),
+        T2IAdapter.from_pretrained("TencentARC/t2iadapter_depth_sd14v1"),
+    ]
+)
+adapters = adapters.to(torch.float16)
+
+pipe = StableDiffusionAdapterPipeline.from_pretrained(
+    "CompVis/stable-diffusion-v1-4",
+    torch_dtype=torch.float16,
+    adapter=adapters,
+)
+pipe.to("cuda")  # float16 inference generally needs a GPU
+
+# One conditioning scale per adapter, in the same order as the adapters above
+images = pipe(prompt, cond, adapter_conditioning_scale=[0.8, 0.8]).images
+```

+

+

+

+
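`adapter_conditioning_scale` weights each adapter's contribution. The following is a minimal, framework-free sketch of how such weighting can combine two adapters. It assumes that each adapter returns a list of feature maps (one per UNet resolution level) and that the multi-adapter forms a per-level weighted sum. The function name `combine_adapter_features` and the toy feature values are hypothetical, and real adapters return tensors rather than lists:

```python
from typing import List, Sequence

# A feature map is modeled as a flat list of floats here; real adapters return tensors.
FeatureMap = List[float]


def combine_adapter_features(
    features_per_adapter: Sequence[Sequence[FeatureMap]],
    scales: Sequence[float],
) -> List[FeatureMap]:
    """Per-level weighted sum of the feature pyramids produced by several adapters."""
    if len(features_per_adapter) != len(scales):
        raise ValueError("expected one scale per adapter")
    num_levels = len(features_per_adapter[0])
    combined: List[FeatureMap] = []
    for level in range(num_levels):
        level_sum = [0.0] * len(features_per_adapter[0][level])
        for features, scale in zip(features_per_adapter, scales):
            for i, value in enumerate(features[level]):
                level_sum[i] += scale * value  # scale each adapter, then accumulate
        combined.append(level_sum)
    return combined


# Toy two-level pyramids standing in for the keypose and depth adapter outputs
keypose_features = [[1.0, 0.0], [2.0]]
depth_features = [[0.0, 1.0], [4.0]]
combined = combine_adapter_features([keypose_features, depth_features], [0.8, 0.8])
print(combined)
```

With equal scales such as `[0.8, 0.8]`, both conditions contribute equally. Raising one scale relative to the other lets that condition dominate the combined features.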

+## T2I Adapter vs ControlNet

+

+T2I-Adapter is similar to [ControlNet](https://huggingface.co/docs/diffusers/main/en/api/pipelines/controlnet).

+T2I-Adapter uses a smaller auxiliary network, which is run only once for the entire diffusion process.

+However, T2I-Adapter performs slightly worse than ControlNet.
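The difference in cost can be illustrated with a toy sampling loop that counts auxiliary-network evaluations. This is a sketch, not the pipelines' code. `count_aux_calls` and the stand-in network are hypothetical. A real ControlNet also receives the noisy latent and timestep at each step, which is why it cannot be moved out of the loop:

```python
from typing import Callable


def count_aux_calls(num_steps: int, aux_net: Callable[[float], float], every_step: bool) -> int:
    """Count auxiliary-network evaluations in a toy denoising loop."""
    calls = 0

    def tracked(x: float) -> float:
        nonlocal calls
        calls += 1
        return aux_net(x)

    condition = 1.0  # stand-in for the control image
    # Adapter style: features depend only on the control image, so compute them once.
    features = None if every_step else tracked(condition)
    for _ in range(num_steps):
        if every_step:
            # ControlNet style: re-evaluated at every step (in reality with the
            # current noisy latent and timestep as extra inputs).
            features = tracked(condition)
        _ = features  # the denoiser would consume these features here
    return calls


print(count_aux_calls(50, lambda x: 2 * x, every_step=False))  # prints 1
print(count_aux_calls(50, lambda x: 2 * x, every_step=True))  # prints 50
```

For a 50-step schedule, the adapter-style loop evaluates the auxiliary network once, while the ControlNet-style loop evaluates it 50 times.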

+

+## StableDiffusionAdapterPipeline

+[[autodoc]] StableDiffusionAdapterPipeline

+ - all

+ - __call__

+ - enable_attention_slicing

+ - disable_attention_slicing

+ - enable_vae_slicing

+ - disable_vae_slicing

+ - enable_xformers_memory_efficient_attention

+ - disable_xformers_memory_efficient_attention

+

+## StableDiffusionXLAdapterPipeline

+[[autodoc]] StableDiffusionXLAdapterPipeline

+ - all

+ - __call__

+ - enable_attention_slicing

+ - disable_attention_slicing

+ - enable_vae_slicing

+ - disable_vae_slicing

+ - enable_xformers_memory_efficient_attention

+ - disable_xformers_memory_efficient_attention

diff --git a/diffuserslocal/docs/source/en/api/pipelines/stable_diffusion/depth2img.md b/diffuserslocal/docs/source/en/api/pipelines/stable_diffusion/depth2img.md

new file mode 100644

index 0000000000000000000000000000000000000000..09814f387b724071d5c29a28dec9efd9b2bfc02f

--- /dev/null

+++ b/diffuserslocal/docs/source/en/api/pipelines/stable_diffusion/depth2img.md

@@ -0,0 +1,40 @@

+

+

+# Depth-to-image

+

+The Stable Diffusion model can also infer depth based on an image using [MiDaS](https://github.com/isl-org/MiDaS). This allows you to pass a text prompt and an initial image to condition the generation of new images, as well as a `depth_map` to preserve the image structure.

+
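Depth values from a depth estimator have an arbitrary scale, so they are typically rescaled before they reach the model. The following is a minimal sketch that assumes the pipeline min-max normalizes the depth map (whether estimated with MiDaS or passed as `depth_map`) into the range [-1, 1]. `normalize_depth` is a hypothetical helper that works on nested lists instead of tensors, and its handling of a constant map is a choice of this sketch:

```python
from typing import List


def normalize_depth(depth: List[List[float]]) -> List[List[float]]:
    """Min-max rescale a 2D depth map into [-1, 1]."""
    values = [v for row in depth for v in row]
    d_min, d_max = min(values), max(values)
    if d_max == d_min:
        # Constant map: return zeros instead of dividing by zero (a choice of this sketch)
        return [[0.0 for _ in row] for row in depth]
    return [[2.0 * (v - d_min) / (d_max - d_min) - 1.0 for v in row] for row in depth]


print(normalize_depth([[0.0, 5.0], [10.0, 2.5]]))
```

Here the smallest value maps to -1.0 and the largest to 1.0, so the example prints `[[-1.0, 0.0], [1.0, -0.5]]`.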

+


+| Pipeline | Supported tasks |
+|---|---|
+| StableDiffusion | text-to-image |
+| StableDiffusionImg2Img | image-to-image |
+| StableDiffusionInpaint | inpainting |
+| StableDiffusionDepth2Img | depth-to-image |
+| StableDiffusionImageVariation | image variation |
+| StableDiffusionPipelineSafe | filtered text-to-image |
+| StableDiffusion2 | text-to-image, inpainting, depth-to-image, super-resolution |
+| StableDiffusionXL | text-to-image, image-to-image |
+| StableDiffusionLatentUpscale | super-resolution |
+| StableDiffusionUpscale | super-resolution |
+| StableDiffusionLDM3D | text-to-rgb, text-to-depth |

+

+Whichever way you choose to contribute, we strive to be part of an open, welcoming, and kind community. Please read our [code of conduct](https://github.com/huggingface/diffusers/blob/main/CODE_OF_CONDUCT.md) and be mindful to respect it during your interactions. We also recommend you become familiar with the [ethical guidelines](https://huggingface.co/docs/diffusers/conceptual/ethical_guidelines) that guide our project and ask you to adhere to the same principles of transparency and responsibility.

+

+We enormously value feedback from the community, so please do not be afraid to speak up if you believe you have valuable feedback that can help improve the library - every message, comment, issue, and pull request (PR) is read and considered.

+

+## Overview

+

+You can contribute in many ways ranging from answering questions on issues to adding new diffusion models to

+the core library.

+

+In the following, we give an overview of different ways to contribute, ranked by difficulty in ascending order. All of them are valuable to the community.

+

+* 1. Asking and answering questions on [the Diffusers discussion forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers) or on [Discord](https://discord.gg/G7tWnz98XR).

+* 2. Opening new issues on [the GitHub Issues tab](https://github.com/huggingface/diffusers/issues/new/choose).

+* 3. Answering issues on [the GitHub Issues tab](https://github.com/huggingface/diffusers/issues).

+* 4. Fix a simple issue, marked by the "Good first issue" label, see [here](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22good+first+issue%22).

+* 5. Contribute to the [documentation](https://github.com/huggingface/diffusers/tree/main/docs/source).

+* 6. Contribute a [Community Pipeline](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3Acommunity-examples).

+* 7. Contribute to the [examples](https://github.com/huggingface/diffusers/tree/main/examples).

+* 8. Fix a more difficult issue, marked by the "Good second issue" label, see [here](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22Good+second+issue%22).

+* 9. Add a new pipeline, model, or scheduler, see ["New Pipeline/Model"](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+pipeline%2Fmodel%22) and ["New scheduler"](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+scheduler%22) issues. For this contribution, please have a look at [Design Philosophy](https://github.com/huggingface/diffusers/blob/main/PHILOSOPHY.md).

+

+As mentioned before, **all contributions are valuable to the community**.
+In the following, we explain each contribution in more detail.

+

+For contributions 4 to 9, you will need to open a PR. How to do so is explained in detail in [Opening a pull request](#how-to-open-a-pr).

+

+### 1. Asking and answering questions on the Diffusers discussion forum or on the Diffusers Discord

+

+Any question or comment related to the Diffusers library can be asked on the [discussion forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/) or on [Discord](https://discord.gg/G7tWnz98XR). Such questions and comments include (but are not limited to):

+- Reports of training or inference experiments in an attempt to share knowledge

+- Presentation of personal projects

+- Questions about non-official training examples

+- Project proposals

+- General feedback

+- Paper summaries

+- Asking for help on personal projects that build on top of the Diffusers library

+- General questions

+- Ethical questions regarding diffusion models

+- ...

+

+Every question that is asked on the forum or on Discord actively encourages the community to publicly

+share knowledge and might very well help a beginner in the future who has the same question you're

+having. Please do pose any questions you might have.

+In the same spirit, you are of immense help to the community by answering such questions because this way you are publicly documenting knowledge for everybody to learn from.

+

+**Please** keep in mind that the more effort you put into asking or answering a question, the higher

+the quality of the publicly documented knowledge. In the same way, well-posed and well-answered questions create a high-quality knowledge database accessible to everybody, while badly posed questions or answers reduce the overall quality of the public knowledge database.

+In short, a high-quality question or answer is *precise*, *concise*, *relevant*, *easy-to-understand*, *accessible*, and *well-formatted/well-posed*. For more information, please have a look through the [How to write a good issue](#how-to-write-a-good-issue) section.

+

+**NOTE about channels**:

+[*The forum*](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63) is much better indexed by search engines, such as Google. Posts are ranked by popularity rather than chronologically. Hence, it's easier to look up questions and answers that were posted some time ago.

+In addition, questions and answers posted in the forum can easily be linked to.

+In contrast, *Discord* has a chat-like format that invites fast back-and-forth communication.

+While it will most likely take less time for you to get an answer to your question on Discord, your

+question won't be visible anymore over time. Also, it's much harder to find information that was posted a while back on Discord. We therefore strongly recommend using the forum for high-quality questions and answers in an attempt to create long-lasting knowledge for the community. If discussions on Discord lead to very interesting answers and conclusions, we recommend posting the results on the forum to make the information more available for future readers.

+

+### 2. Opening new issues on the GitHub issues tab

+

+The 🧨 Diffusers library is robust and reliable thanks to the users who notify us of

+the problems they encounter. So thank you for reporting an issue.

+

+Remember, GitHub issues are reserved for technical questions directly related to the Diffusers library, bug reports, feature requests, or feedback on the library design.

+

+In a nutshell, this means that everything that is **not** related to the **code of the Diffusers library** (including the documentation) should **not** be asked on GitHub, but rather on either the [forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63) or [Discord](https://discord.gg/G7tWnz98XR).

+

+**Please consider the following guidelines when opening a new issue**:

+- Make sure you have searched whether your issue has already been asked before (use the search bar on GitHub under Issues).

+- Please never report a new issue on another (related) issue. If another issue is highly related, please

+open a new issue nevertheless and link to the related issue.

+- Make sure your issue is written in English. Please use one of the great, free online translation services, such as [DeepL](https://www.deepl.com/translator) to translate from your native language to English if you are not comfortable in English.

+- Check whether your issue might be solved by updating to the newest Diffusers version. Before posting your issue, please make sure that the version printed by `python -c "import diffusers; print(diffusers.__version__)"` matches or is higher than the latest Diffusers version.

+- Remember that the more effort you put into opening a new issue, the higher the quality of your answer will be and the better the overall quality of the Diffusers issues.
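Comparing version strings as plain text is error-prone: as strings, `"0.10.0"` sorts before `"0.9.0"`. The following is a minimal sketch of the version check above, assuming plain `X.Y.Z` release numbers. `release_tuple` and `is_up_to_date` are hypothetical helpers. `installed` could come from `importlib.metadata.version("diffusers")`, and `latest` is the version listed on PyPI. For pre-releases and dev builds, `packaging.version.Version` is the more robust choice:

```python
from typing import Tuple


def release_tuple(version: str, width: int = 3) -> Tuple[int, ...]:
    """Leading numeric components of a version string, zero-padded to `width`.

    Non-numeric suffixes are dropped, so "0.21.0.dev0" and "0.21.0rc1" both
    become (0, 21, 0); this sketch therefore treats pre-releases like releases.
    """
    parts = []
    for piece in version.split("."):
        if not piece.isdigit():
            break  # stop at the first non-numeric component
        parts.append(int(piece))
    parts += [0] * (width - len(parts))  # "0.21" -> (0, 21, 0)
    return tuple(parts)


def is_up_to_date(installed: str, latest: str) -> bool:
    """True if `installed` matches or is newer than `latest` (numeric parts only)."""
    return release_tuple(installed) >= release_tuple(latest)


print(is_up_to_date("0.10.0", "0.9.0"))  # prints True
```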

+

+New issues usually include the following.

+

+#### 2.1. Reproducible, minimal bug reports.

+

+A bug report should always have a reproducible code snippet and be as minimal and concise as possible.

+This means in more detail:

+- Narrow the bug down as much as you can, **do not just dump your whole code file**

+- Format your code

+- Do not include any external libraries other than Diffusers and the libraries it depends on.

+- **Always** provide all necessary information about your environment; for this, you can run: `diffusers-cli env` in your shell and copy-paste the displayed information to the issue.

+- Explain the issue. If the reader doesn't know what the issue is and why it is an issue, they cannot solve it.

+- **Always** make sure the reader can reproduce your issue with as little effort as possible. If your code snippet cannot be run because of missing libraries or undefined variables, the reader cannot help you. Make sure your reproducible code snippet is as minimal as possible and can be copy-pasted into a simple Python shell.

+- If in order to reproduce your issue a model and/or dataset is required, make sure the reader has access to that model or dataset. You can always upload your model or dataset to the [Hub](https://huggingface.co) to make it easily downloadable. Try to keep your model and dataset as small as possible, to make the reproduction of your issue as effortless as possible.

+

+For more information, please have a look through the [How to write a good issue](#how-to-write-a-good-issue) section.

+

+You can open a bug report [here](https://github.com/huggingface/diffusers/issues/new/choose).

+

+#### 2.2. Feature requests.

+

+A world-class feature request addresses the following points:

+

+1. Motivation first:

+* Is it related to a problem/frustration with the library? If so, please explain

+why. Providing a code snippet that demonstrates the problem is best.

+* Is it related to something you would need for a project? We'd love to hear

+about it!

+* Is it something you worked on and think could benefit the community?

+Awesome! Tell us what problem it solved for you.

+2. Write a *full paragraph* describing the feature;

+3. Provide a **code snippet** that demonstrates its future use;

+4. In case this is related to a paper, please attach a link;

+5. Attach any additional information (drawings, screenshots, etc.) you think may help.

+

+You can open a feature request [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=&template=feature_request.md&title=).

+

+#### 2.3 Feedback.

+

+Feedback about the library design and why it is good or not good helps the core maintainers immensely to build a user-friendly library. To understand the current design philosophy, please have a look [here](https://huggingface.co/docs/diffusers/conceptual/philosophy). If you feel like a certain design choice does not fit with the current design philosophy, please explain why and how it should be changed. If a certain design choice follows the design philosophy too strictly, hence restricting use cases, explain why and how it should be changed.

+If a certain design choice is very useful for you, please also leave a note as this is great feedback for future design decisions.

+

+You can open an issue about feedback [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=&template=feedback.md&title=).

+

+#### 2.4 Technical questions.

+

+Technical questions are mainly about why certain code of the library was written in a certain way, or what a certain part of the code does. Please make sure to link to the code in question and please provide detail on

+why this part of the code is difficult to understand.

+

+You can open an issue about a technical question [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=bug&template=bug-report.yml).

+

+#### 2.5 Proposal to add a new model, scheduler, or pipeline.

+

+If the diffusion model community has released a new model, pipeline, or scheduler that you would like to see in the Diffusers library, please provide the following information:

+

+* Short description of the diffusion pipeline, model, or scheduler and link to the paper or public release.

+* Link to any of its open-source implementations.

+* Link to the model weights if they are available.

+

+If you are willing to contribute to the model yourself, let us know so we can best guide you. Also, don't forget

+to tag the original author of the component (model, scheduler, pipeline, etc.) by GitHub handle if you can find it.

+

+You can open a request for a model/pipeline/scheduler [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=New+model%2Fpipeline%2Fscheduler&template=new-model-addition.yml).

+

+### 3. Answering issues on the GitHub issues tab

+

+Answering issues on GitHub might require some technical knowledge of Diffusers, but we encourage everybody to give it a try even if you are not 100% certain that your answer is correct.

+Some tips to give a high-quality answer to an issue:

+- Be as concise and minimal as possible

+- Stay on topic. An answer to the issue should concern the issue and only the issue.

+- Provide links to code, papers, or other sources that support your point.

+- Answer in code. If a simple code snippet is the answer to the issue or shows how the issue can be solved, please provide a fully reproducible code snippet.

+

+Also, many issues tend to be simply off-topic, duplicates of other issues, or irrelevant. It is of great
+help to the maintainers if you can answer such issues by encouraging the author of the issue to be
+more precise, providing the link to a duplicate issue, or redirecting them to [the forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63) or [Discord](https://discord.gg/G7tWnz98XR).

+

+If you have verified that the reported bug is genuine and requires a correction in the source code,

+please have a look at the next sections.

+

+For all of the following contributions, you will need to open a PR. How to do so is explained in detail in the [Opening a pull request](#how-to-open-a-pr) section.

+

+### 4. Fixing a `Good first issue`

+

+*Good first issues* are marked by the [Good first issue](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22good+first+issue%22) label. Usually, the issue already

+explains how a potential solution should look so that it is easier to fix.

+If the issue hasn't been closed and you would like to try to fix this issue, you can just leave a message "I would like to try this issue.". There are usually three scenarios:

+- a.) The issue description already proposes a fix. In this case and if the solution makes sense to you, you can open a PR or draft PR to fix it.

+- b.) The issue description does not propose a fix. In this case, you can ask what a proposed fix could look like and someone from the Diffusers team should answer shortly. If you have a good idea of how to fix it, feel free to directly open a PR.

+- c.) There is already an open PR to fix the issue, but the issue hasn't been closed yet. If the PR has gone stale, you can simply open a new PR and link to the stale PR. PRs often go stale if the original contributor who wanted to fix the issue suddenly cannot find the time anymore to proceed. This often happens in open-source and is very normal. In this case, the community will be very happy if you give it a new try and leverage the knowledge of the existing PR. If there is already a PR and it is active, you can help the author by giving suggestions, reviewing the PR or even asking whether you can contribute to the PR.

+

+

+### 5. Contribute to the documentation

+

+A good library **always** has good documentation! The official documentation is often one of the first points of contact for new users of the library, and therefore contributing to the documentation is a **highly

+valuable contribution**.

+

+Contributing to the library can have many forms:

+

+- Correcting spelling or grammatical errors.

+- Correct incorrect formatting of the docstring. If you see that the official documentation is weirdly displayed or a link is broken, we are very happy if you take some time to correct it.

+- Correct the shape or dimensions of a docstring input or output tensor.

+- Clarify documentation that is hard to understand or incorrect.

+- Update outdated code examples.

+- Translating the documentation to another language.

+

+Anything displayed on [the official Diffusers doc page](https://huggingface.co/docs/diffusers/index) is part of the official documentation and can be corrected, adjusted in the respective [documentation source](https://github.com/huggingface/diffusers/tree/main/docs/source).

+

+Please have a look at [this page](https://github.com/huggingface/diffusers/tree/main/docs) on how to verify changes made to the documentation locally.

+

+

+### 6. Contribute a community pipeline

+

+[Pipelines](https://huggingface.co/docs/diffusers/api/pipelines/overview) are usually the first point of contact between the Diffusers library and the user.

+Pipelines are examples of how to use Diffusers [models](https://huggingface.co/docs/diffusers/api/models) and [schedulers](https://huggingface.co/docs/diffusers/api/schedulers/overview).

+We support two types of pipelines:

+

+- Official Pipelines

+- Community Pipelines

+

+Both official and community pipelines follow the same design and consist of the same type of components.

+

+Official pipelines are tested and maintained by the core maintainers of Diffusers. Their code

+resides in [src/diffusers/pipelines](https://github.com/huggingface/diffusers/tree/main/src/diffusers/pipelines).

+In contrast, community pipelines are contributed and maintained purely by the **community** and are **not** tested.

+They reside in [examples/community](https://github.com/huggingface/diffusers/tree/main/examples/community) and while they can be accessed via the [PyPI diffusers package](https://pypi.org/project/diffusers/), their code is not part of the PyPI distribution.

+

+The reason for the distinction is that the core maintainers of the Diffusers library cannot maintain and test all

+possible ways diffusion models can be used for inference, but some of them may be of interest to the community.

+Officially released diffusion pipelines,

+such as Stable Diffusion are added to the core src/diffusers/pipelines package which ensures

+high quality of maintenance, no backward-breaking code changes, and testing.

+More bleeding edge pipelines should be added as community pipelines. If usage for a community pipeline is high, the pipeline can be moved to the official pipelines upon request from the community. This is one of the ways we strive to be a community-driven library.

+

+To add a community pipeline, one should add a

+

+Whichever way you choose to contribute, we strive to be part of an open, welcoming, and kind community. Please, read our [code of conduct](https://github.com/huggingface/diffusers/blob/main/CODE_OF_CONDUCT.md) and be mindful to respect it during your interactions. We also recommend you become familiar with the [ethical guidelines](https://huggingface.co/docs/diffusers/conceptual/ethical_guidelines) that guide our project and ask you to adhere to the same principles of transparency and responsibility.

+

+We enormously value feedback from the community, so please do not be afraid to speak up if you believe you have valuable feedback that can help improve the library - every message, comment, issue, and pull request (PR) is read and considered.

+

+## Overview

+

+You can contribute in many ways ranging from answering questions on issues to adding new diffusion models to

+the core library.

+

+In the following, we give an overview of different ways to contribute, ranked by difficulty in ascending order. All of them are valuable to the community.

+

+1. Asking and answering questions on [the Diffusers discussion forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers) or on [Discord](https://discord.gg/G7tWnz98XR).
+2. Opening new issues on [the GitHub Issues tab](https://github.com/huggingface/diffusers/issues/new/choose).
+3. Answering issues on [the GitHub Issues tab](https://github.com/huggingface/diffusers/issues).
+4. Fixing a simple issue, marked by the "Good first issue" label, see [here](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22good+first+issue%22).
+5. Contributing to the [documentation](https://github.com/huggingface/diffusers/tree/main/docs/source).
+6. Contributing a [Community Pipeline](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3Acommunity-examples).
+7. Contributing to the [examples](https://github.com/huggingface/diffusers/tree/main/examples).
+8. Fixing a more difficult issue, marked by the "Good second issue" label, see [here](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22Good+second+issue%22).
+9. Adding a new pipeline, model, or scheduler, see ["New Pipeline/Model"](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+pipeline%2Fmodel%22) and ["New scheduler"](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+scheduler%22) issues. For this contribution, please have a look at [Design Philosophy](https://github.com/huggingface/diffusers/blob/main/PHILOSOPHY.md).

+

+As mentioned before, **all contributions are valuable to the community**.
+In the following, we explain each contribution in more detail.
+
+For all contributions 4-9, you will need to open a PR. How to do so is explained in detail in [Opening a pull request](#how-to-open-a-pr).

+

+### 1. Asking and answering questions on the Diffusers discussion forum or on the Diffusers Discord

+

+Any question or comment related to the Diffusers library can be asked on the [discussion forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/) or on [Discord](https://discord.gg/G7tWnz98XR). Such questions and comments include (but are not limited to):

+- Reports of training or inference experiments in an attempt to share knowledge

+- Presentation of personal projects

+- Questions about non-official training examples

+- Project proposals

+- General feedback

+- Paper summaries

+- Asking for help on personal projects that build on top of the Diffusers library

+- General questions

+- Ethical questions regarding diffusion models

+- ...

+

+Every question that is asked on the forum or on Discord actively encourages the community to publicly
+share knowledge and might very well help a future beginner who has the same question.
+Please do ask any questions you might have.
+In the same spirit, you are of immense help to the community by answering such questions, because this way you are publicly documenting knowledge for everybody to learn from.

+

+**Please** keep in mind that the more effort you put into asking or answering a question, the higher
+the quality of the publicly documented knowledge. In the same way, well-posed and well-answered questions create a high-quality knowledge database accessible to everybody, while badly posed questions or answers reduce the overall quality of the public knowledge database.
+In short, a high-quality question or answer is *precise*, *concise*, *relevant*, *easy-to-understand*, *accessible*, and *well-formatted/well-posed*. For more information, please have a look through the [How to write a good issue](#how-to-write-a-good-issue) section.

+

+**NOTE about channels**:

+[*The forum*](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63) is much better indexed by search engines, such as Google. Posts are ranked by popularity rather than chronologically. Hence, it's easier to look up questions and answers that were posted some time ago.
+In addition, questions and answers posted in the forum can easily be linked to.
+In contrast, *Discord* has a chat-like format that invites fast back-and-forth communication.
+While it will most likely take less time for you to get an answer to your question on Discord, your
+question won't stay visible over time. Also, it's much harder to find information that was posted a while back on Discord. We therefore strongly recommend using the forum for high-quality questions and answers in an attempt to create long-lasting knowledge for the community. If discussions on Discord lead to very interesting answers and conclusions, we recommend posting the results on the forum to make the information more available for future readers.

+

+### 2. Opening new issues on the GitHub issues tab

+

+The 🧨 Diffusers library is robust and reliable thanks to the users who notify us of

+the problems they encounter. So thank you for reporting an issue.

+

+Remember, GitHub issues are reserved for technical questions directly related to the Diffusers library, bug reports, feature requests, or feedback on the library design.

+

+In a nutshell, this means that everything that is **not** related to the **code of the Diffusers library** (including the documentation) should **not** be asked on GitHub, but rather on either the [forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63) or [Discord](https://discord.gg/G7tWnz98XR).

+

+**Please consider the following guidelines when opening a new issue**:

+- Make sure you have searched whether your issue has already been asked before (use the search bar on GitHub under Issues).

+- Please never report a new issue on another (related) issue. If another issue is highly related, please

+open a new issue nevertheless and link to the related issue.

+- Make sure your issue is written in English. If you are not comfortable writing in English, please use one of the great, free online translation services, such as [DeepL](https://www.deepl.com/translator), to translate from your native language.
+- Check whether your issue might be solved by updating to the newest Diffusers version. Before posting your issue, please make sure that `python -c "import diffusers; print(diffusers.__version__)"` prints a version that is equal to or higher than the latest Diffusers version.

+- Remember that the more effort you put into opening a new issue, the higher the quality of your answer will be and the better the overall quality of the Diffusers issues.
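If you prefer to run the version check from a script rather than the one-liner, the following is a minimal stdlib-only sketch, assuming Python 3.8+ (for `importlib.metadata`). The helper names `release_tuple`, `is_at_least`, and `check_installed` are hypothetical and not part of Diffusers. The parser deliberately ignores pre-release and `.dev` suffixes, so `0.19.0.dev0` compares equal to `0.19.0`; `packaging.version.Version` gives full PEP 440 ordering if you need it.

```python
from importlib.metadata import PackageNotFoundError, version


def release_tuple(version_string: str) -> tuple:
    """Return the leading numeric release segment, e.g. "0.19.0.dev0" -> (0, 19, 0).

    Simplification (assumption): anything after the first non-digit character,
    such as "rc1" or ".dev0", is ignored.
    """
    parts = []
    for piece in version_string.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break  # e.g. the "dev0" in "0.19.0.dev0"
        parts.append(int(digits))
        if len(digits) != len(piece):
            break  # e.g. "0rc1": keep the 0, drop the rest
    return tuple(parts)


def is_at_least(installed: str, latest: str) -> bool:
    """Compare two versions, padding with zeros so "0.19" equals "0.19.0"."""
    a, b = release_tuple(installed), release_tuple(latest)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


def check_installed(latest: str, package: str = "diffusers") -> str:
    """Report whether the installed `package` is at least `latest`."""
    try:
        installed = version(package)  # reads the installed package metadata
    except PackageNotFoundError:
        return f"{package} is not installed"
    if is_at_least(installed, latest):
        return f"{package} {installed} is up to date (latest: {latest})"
    return f"{package} {installed} is older than {latest}; try `pip install --upgrade {package}`"
```

Pass the newest version listed on the [PyPI page](https://pypi.org/project/diffusers/) as `latest`, e.g. `print(check_installed("<latest version from PyPI>"))`.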

+

+New issues usually include the following.

+

+#### 2.1. Reproducible, minimal bug reports.

+

+A bug report should always have a reproducible code snippet and be as minimal and concise as possible.

+In more detail, this means:
+- Narrow the bug down as much as you can, **do not just dump your whole code file**.
+- Format your code.
+- Do not include any external libraries other than Diffusers and its dependencies.
+- **Always** provide all necessary information about your environment; for this, you can run `diffusers-cli env` in your shell and copy-paste the displayed information into the issue.
+- Explain the issue. If the reader doesn't know what the issue is and why it is an issue, they cannot solve it.
+- **Always** make sure the reader can reproduce your issue with as little effort as possible. If your code snippet cannot be run because of missing libraries or undefined variables, the reader cannot help you. Make sure your reproducible code snippet is as minimal as possible and can be copy-pasted into a simple Python shell.
+- If a model and/or dataset is required to reproduce your issue, make sure the reader has access to that model or dataset. You can always upload your model or dataset to the [Hub](https://huggingface.co) to make it easily downloadable. Try to keep your model and dataset as small as possible to make reproducing your issue as effortless as possible.

+

+For more information, please have a look through the [How to write a good issue](#how-to-write-a-good-issue) section.

+

+You can open a bug report [here](https://github.com/huggingface/diffusers/issues/new/choose).

+

+#### 2.2. Feature requests.

+

+A world-class feature request addresses the following points:

+

+1. Motivation first:
+   * Is it related to a problem/frustration with the library? If so, please explain why. Providing a code snippet that demonstrates the problem is best.
+   * Is it related to something you would need for a project? We'd love to hear about it!
+   * Is it something you worked on and think could benefit the community? Awesome! Tell us what problem it solved for you.
+2. Write a *full paragraph* describing the feature.
+3. Provide a **code snippet** that demonstrates its future use.
+4. In case this is related to a paper, please attach a link.
+5. Attach any additional information (drawings, screenshots, etc.) you think may help.

+

+You can open a feature request [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=&template=feature_request.md&title=).

+

+#### 2.3. Feedback.
+
+Feedback about the library design, and why it is or isn't good, helps the core maintainers immensely in building a user-friendly library. To understand the current design philosophy, please have a look [here](https://huggingface.co/docs/diffusers/conceptual/philosophy). If you feel like a certain design choice does not fit with the current design philosophy, please explain why and how it should be changed. If a certain design choice follows the design philosophy too strictly and thereby restricts use cases, explain why and how it should be changed.
+If a certain design choice is very useful for you, please also leave a note, as this is great feedback for future design decisions.

+

+You can open an issue about feedback [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=&template=feedback.md&title=).

+

+#### 2.4. Technical questions.
+
+Technical questions are mainly about why certain code of the library was written in a certain way, or what a certain part of the code does. Please make sure to link to the code in question and provide details on
+why this part of the code is difficult to understand.

+

+You can open an issue about a technical question [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=bug&template=bug-report.yml).

+

+#### 2.5. Proposal to add a new model, scheduler, or pipeline.
+
+If the diffusion model community has released a new model, pipeline, or scheduler that you would like to see in the Diffusers library, please provide the following information:
+
+* Short description of the diffusion pipeline, model, or scheduler and link to the paper or public release.
+* Link to any of its open-source implementations.
+* Link to the model weights if they are available.
+
+If you are willing to contribute the model yourself, let us know so we can best guide you. Also, don't forget
+to tag the original author of the component (model, scheduler, pipeline, etc.) by GitHub handle if you can find it.

+

+You can open a request for a model/pipeline/scheduler [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=New+model%2Fpipeline%2Fscheduler&template=new-model-addition.yml).

+

+### 3. Answering issues on the GitHub issues tab

+

+Answering issues on GitHub might require some technical knowledge of Diffusers, but we encourage everybody to give it a try even if you are not 100% certain that your answer is correct.

+Some tips to give a high-quality answer to an issue:

+- Be as concise and minimal as possible

+- Stay on topic. An answer to the issue should concern the issue and only the issue.

+- Provide links to code, papers, or other sources that prove or encourage your point.

+- Answer in code. If a simple code snippet is the answer to the issue or shows how the issue can be solved, please provide a fully reproducible code snippet.

+

+Also, many issues tend to be simply off-topic, duplicates of other issues, or irrelevant. It is of great
+help to the maintainers if you can answer such issues by encouraging the author of the issue to be
+more precise, providing the link to a duplicate issue, or redirecting them to [the forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63) or [Discord](https://discord.gg/G7tWnz98XR).

+

+If you have verified that the reported bug is real and requires a correction in the source code,
+please have a look at the next sections.

+

+For all of the following contributions, you will need to open a PR. How to do so is explained in detail in the [Opening a pull request](#how-to-open-a-pr) section.

+

+### 4. Fixing a `Good first issue`

+

+*Good first issues* are marked by the [Good first issue](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22good+first+issue%22) label. Usually, the issue already
+explains what a potential solution should look like, which makes it easier to fix.
+If the issue hasn't been closed and you would like to try to fix it, you can simply leave a message such as "I would like to try this issue.". There are usually three scenarios:

+- a.) The issue description already proposes a fix. In this case and if the solution makes sense to you, you can open a PR or draft PR to fix it.

+- b.) The issue description does not propose a fix. In this case, you can ask what a proposed fix could look like and someone from the Diffusers team should answer shortly. If you have a good idea of how to fix it, feel free to directly open a PR.

+- c.) There is already an open PR to fix the issue, but the issue hasn't been closed yet. If the PR has gone stale, you can simply open a new PR and link to the stale PR. PRs often go stale if the original contributor who wanted to fix the issue suddenly cannot find the time to proceed. This happens often in open source and is completely normal. In this case, the community will be very happy if you give it a new try and leverage the knowledge of the existing PR. If there is already a PR and it is active, you can help the author by giving suggestions, reviewing the PR, or even asking whether you can contribute to the PR.

+

+

+### 5. Contribute to the documentation

+

+A good library **always** has good documentation! The official documentation is often one of the first points of contact for new users of the library, and therefore contributing to the documentation is a **highly

+valuable contribution**.

+

+Contributing to the documentation can take many forms:
+
+- Correcting spelling or grammatical errors.
+- Correcting incorrect docstring formatting. If you see that the official documentation is displayed incorrectly or a link is broken, we would be very happy if you took some time to correct it.
+- Correcting the shape or dimensions of a docstring input or output tensor.
+- Clarifying documentation that is hard to understand or incorrect.
+- Updating outdated code examples.
+- Translating the documentation to another language.

+

+Anything displayed on [the official Diffusers doc page](https://huggingface.co/docs/diffusers/index) is part of the official documentation and can be corrected or adjusted in the respective [documentation source](https://github.com/huggingface/diffusers/tree/main/docs/source).

+

+Please have a look at [this page](https://github.com/huggingface/diffusers/tree/main/docs) on how to verify changes made to the documentation locally.

+

+

+### 6. Contribute a community pipeline

+

+[Pipelines](https://huggingface.co/docs/diffusers/api/pipelines/overview) are usually the first point of contact between the Diffusers library and the user.

+Pipelines are examples of how to use Diffusers [models](https://huggingface.co/docs/diffusers/api/models) and [schedulers](https://huggingface.co/docs/diffusers/api/schedulers/overview).

+We support two types of pipelines:

+

+- Official Pipelines

+- Community Pipelines

+

+Both official and community pipelines follow the same design and consist of the same type of components.

+

+Official pipelines are tested and maintained by the core maintainers of Diffusers. Their code

+resides in [src/diffusers/pipelines](https://github.com/huggingface/diffusers/tree/main/src/diffusers/pipelines).

+In contrast, community pipelines are contributed and maintained purely by the **community** and are **not** tested.

+They reside in [examples/community](https://github.com/huggingface/diffusers/tree/main/examples/community) and while they can be accessed via the [PyPI diffusers package](https://pypi.org/project/diffusers/), their code is not part of the PyPI distribution.

+

+The reason for the distinction is that the core maintainers of the Diffusers library cannot maintain and test all
+possible ways diffusion models can be used for inference, but some of them may be of interest to the community.
+Officially released diffusion pipelines, such as Stable Diffusion, are added to the core src/diffusers/pipelines package, which ensures
+high-quality maintenance, no backward-breaking code changes, and testing.
+More bleeding-edge pipelines should be added as community pipelines. If usage of a community pipeline is high, the pipeline can be moved to the official pipelines upon request from the community. This is one of the ways we strive to be a community-driven library.

+

+To add a community pipeline, one should add a

+

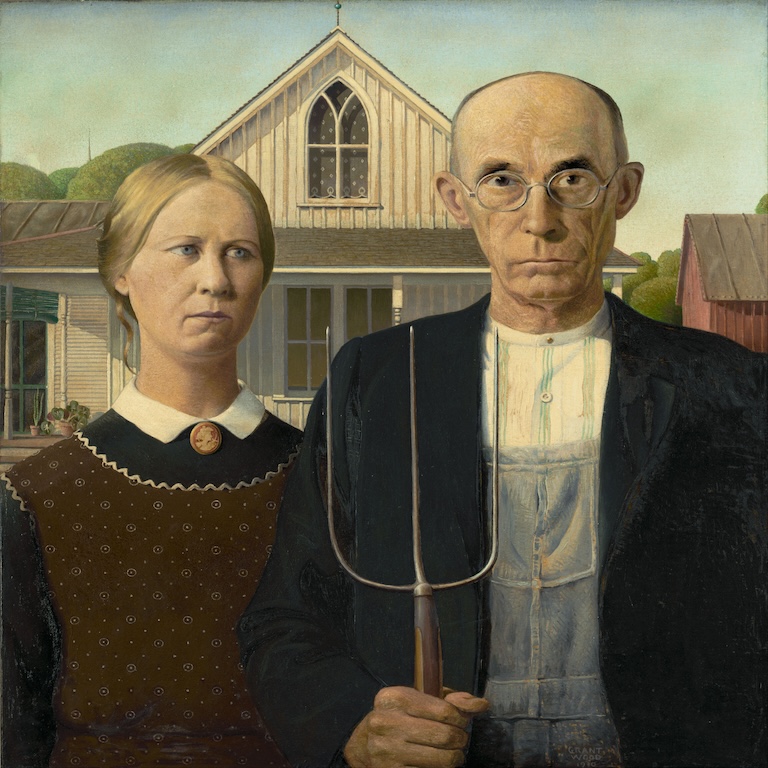

+ Real images.

+

+

+ Fake images.

+

+

+  +

+

+

+Learn the fundamental skills you need to start generating outputs, build your own diffusion system, and train a diffusion model. We recommend starting here if you're using 🤗 Diffusers for the first time!
+
+Practical guides for helping you load pipelines, models, and schedulers. You'll also learn how to use pipelines for specific tasks, control how outputs are generated, optimize for inference speed, and apply different training techniques.
+
+Understand why the library was designed the way it was, and learn more about the ethical guidelines and safety implementations for using the library.
+
+Technical descriptions of how 🤗 Diffusers classes and methods work.


+### A100 (batch size: 1)
+
+| **Pipeline** | **torch 2.0 - no compile** | **torch nightly - no compile** | **torch 2.0 - compile** | **torch nightly - compile** |
+|:---:|:---:|:---:|:---:|:---:|
+| SD - txt2img | 21.66 | 23.13 | 44.03 | 49.74 |
+| SD - img2img | 21.81 | 22.40 | 43.92 | 46.32 |
+| SD - inpaint | 22.24 | 23.23 | 43.76 | 49.25 |
+| SD - controlnet | 15.02 | 15.82 | 32.13 | 36.08 |
+| IF | 20.21 / 13.84 / 24.00 | 20.12 / 13.70 / 24.03 | ❌ | 97.34 / 27.23 / 111.66 |
+
+### A100 (batch size: 4)
+
+| **Pipeline** | **torch 2.0 - no compile** | **torch nightly - no compile** | **torch 2.0 - compile** | **torch nightly - compile** |
+|:---:|:---:|:---:|:---:|:---:|
+| SD - txt2img | 11.6 | 13.12 | 14.62 | 17.27 |
+| SD - img2img | 11.47 | 13.06 | 14.66 | 17.25 |
+| SD - inpaint | 11.67 | 13.31 | 14.88 | 17.48 |
+| SD - controlnet | 8.28 | 9.38 | 10.51 | 12.41 |
+| IF | 25.02 | 18.04 | ❌ | 48.47 |
+
+### A100 (batch size: 16)
+
+| **Pipeline** | **torch 2.0 - no compile** | **torch nightly - no compile** | **torch 2.0 - compile** | **torch nightly - compile** |
+|:---:|:---:|:---:|:---:|:---:|
+| SD - txt2img | 3.04 | 3.6 | 3.83 | 4.68 |
+| SD - img2img | 2.98 | 3.58 | 3.83 | 4.67 |
+| SD - inpaint | 3.04 | 3.66 | 3.9 | 4.76 |
+| SD - controlnet | 2.15 | 2.58 | 2.74 | 3.35 |
+| IF | 8.78 | 9.82 | ❌ | 16.77 |
+
+### V100 (batch size: 1)
+
+| **Pipeline** | **torch 2.0 - no compile** | **torch nightly - no compile** | **torch 2.0 - compile** | **torch nightly - compile** |
+|:---:|:---:|:---:|:---:|:---:|
+| SD - txt2img | 18.99 | 19.14 | 20.95 | 22.17 |
+| SD - img2img | 18.56 | 19.18 | 20.95 | 22.11 |
+| SD - inpaint | 19.14 | 19.06 | 21.08 | 22.20 |
+| SD - controlnet | 13.48 | 13.93 | 15.18 | 15.88 |
+| IF | 20.01 / 9.08 / 23.34 | 19.79 / 8.98 / 24.10 | ❌ | 55.75 / 11.57 / 57.67 |
+
+### V100 (batch size: 4)
+
+| **Pipeline** | **torch 2.0 - no compile** | **torch nightly - no compile** | **torch 2.0 - compile** | **torch nightly - compile** |
+|:---:|:---:|:---:|:---:|:---:|
+| SD - txt2img | 5.96 | 5.89 | 6.83 | 6.86 |
+| SD - img2img | 5.90 | 5.91 | 6.81 | 6.82 |
+| SD - inpaint | 5.99 | 6.03 | 6.93 | 6.95 |
+| SD - controlnet | 4.26 | 4.29 | 4.92 | 4.93 |
+| IF | 15.41 | 14.76 | ❌ | 22.95 |
+
+### V100 (batch size: 16)
+
+| **Pipeline** | **torch 2.0 - no compile** | **torch nightly - no compile** | **torch 2.0 - compile** | **torch nightly - compile** |
+|:---:|:---:|:---:|:---:|:---:|
+| SD - txt2img | 1.66 | 1.66 | 1.92 | 1.90 |
+| SD - img2img | 1.65 | 1.65 | 1.91 | 1.89 |
+| SD - inpaint | 1.69 | 1.69 | 1.95 | 1.93 |
+| SD - controlnet | 1.19 | 1.19 | OOM after warmup | 1.36 |
+| IF | 5.43 | 5.29 | ❌ | 7.06 |
+
+### T4 (batch size: 1)
+
+| **Pipeline** | **torch 2.0 - no compile** | **torch nightly - no compile** | **torch 2.0 - compile** | **torch nightly - compile** |
+|:---:|:---:|:---:|:---:|:---:|
+| SD - txt2img | 6.9 | 6.95 | 7.3 | 7.56 |
+| SD - img2img | 6.84 | 6.99 | 7.04 | 7.55 |
+| SD - inpaint | 6.91 | 6.7 | 7.01 | 7.37 |
+| SD - controlnet | 4.89 | 4.86 | 5.35 | 5.48 |
+| IF | 17.42 / 2.47 / 18.52 | 16.96 / 2.45 / 18.69 | ❌ | 24.63 / 2.47 / 23.39 |
+
+### T4 (batch size: 4)
+
+| **Pipeline** | **torch 2.0 - no compile** | **torch nightly - no compile** | **torch 2.0 - compile** | **torch nightly - compile** |
+|:---:|:---:|:---:|:---:|:---:|
+| SD - txt2img | 1.79 | 1.79 | 2.03 | 1.99 |
+| SD - img2img | 1.77 | 1.77 | 2.05 | 2.04 |
+| SD - inpaint | 1.81 | 1.82 | 2.09 | 2.09 |
+| SD - controlnet | 1.34 | 1.27 | 1.47 | 1.46 |
+| IF | 5.79 | 5.61 | ❌ | 7.39 |
+
+### T4 (batch size: 16)
+
+| **Pipeline** | **torch 2.0 - no compile** | **torch nightly - no compile** | **torch 2.0 - compile** | **torch nightly - compile** |
+|:---:|:---:|:---:|:---:|:---:|
+| SD - txt2img | 2.34s | 2.30s | OOM after 2nd iteration | 1.99s |
+| SD - img2img | 2.35s | 2.31s | OOM after warmup | 2.00s |
+| SD - inpaint | 2.30s | 2.26s | OOM after 2nd iteration | 1.95s |
+| SD - controlnet | OOM after 2nd iteration | OOM after 2nd iteration | OOM after warmup | OOM after warmup |
+| IF * | 1.44 | 1.44 | ❌ | 1.94 |
+
+### RTX 3090 (batch size: 1)
+
+| **Pipeline** | **torch 2.0 - no compile** | **torch nightly - no compile** | **torch 2.0 - compile** | **torch nightly - compile** |
+|:---:|:---:|:---:|:---:|:---:|
+| SD - txt2img | 22.56 | 22.84 | 23.84 | 25.69 |
+| SD - img2img | 22.25 | 22.61 | 24.1 | 25.83 |
+| SD - inpaint | 22.22 | 22.54 | 24.26 | 26.02 |
+| SD - controlnet | 16.03 | 16.33 | 17.38 | 18.56 |
+| IF | 27.08 / 9.07 / 31.23 | 26.75 / 8.92 / 31.47 | ❌ | 68.08 / 11.16 / 65.29 |
+
+### RTX 3090 (batch size: 4)
+
+| **Pipeline** | **torch 2.0 - no compile** | **torch nightly - no compile** | **torch 2.0 - compile** | **torch nightly - compile** |
+|:---:|:---:|:---:|:---:|:---:|
+| SD - txt2img | 6.46 | 6.35 | 7.29 | 7.3 |
+| SD - img2img | 6.33 | 6.27 | 7.31 | 7.26 |
+| SD - inpaint | 6.47 | 6.4 | 7.44 | 7.39 |
+| SD - controlnet | 4.59 | 4.54 | 5.27 | 5.26 |
+| IF | 16.81 | 16.62 | ❌ | 21.57 |
+
+### RTX 3090 (batch size: 16)
+
+| **Pipeline** | **torch 2.0 - no compile** | **torch nightly - no compile** | **torch 2.0 - compile** | **torch nightly - compile** |
+|:---:|:---:|:---:|:---:|:---:|
+| SD - txt2img | 1.7 | 1.69 | 1.93 | 1.91 |
+| SD - img2img | 1.68 | 1.67 | 1.93 | 1.9 |
+| SD - inpaint | 1.72 | 1.71 | 1.97 | 1.94 |
+| SD - controlnet | 1.23 | 1.22 | 1.4 | 1.38 |
+| IF | 5.01 | 5.00 | ❌ | 6.33 |
+
+### RTX 4090 (batch size: 1)
+
+| **Pipeline** | **torch 2.0 - no compile** | **torch nightly - no compile** | **torch 2.0 - compile** | **torch nightly - compile** |
+|:---:|:---:|:---:|:---:|:---:|
+| SD - txt2img | 40.5 | 41.89 | 44.65 | 49.81 |
+| SD - img2img | 40.39 | 41.95 | 44.46 | 49.8 |
+| SD - inpaint | 40.51 | 41.88 | 44.58 | 49.72 |
+| SD - controlnet | 29.27 | 30.29 | 32.26 | 36.03 |
+| IF | 69.71 / 18.78 / 85.49 | 69.13 / 18.80 / 85.56 | ❌ | 124.60 / 26.37 / 138.79 |
+
+### RTX 4090 (batch size: 4)
+
+| **Pipeline** | **torch 2.0 - no compile** | **torch nightly - no compile** | **torch 2.0 - compile** | **torch nightly - compile** |
+|:---:|:---:|:---:|:---:|:---:|
+| SD - txt2img | 12.62 | 12.84 | 15.32 | 15.59 |
+| SD - img2img | 12.61 | 12.79 | 15.35 | 15.66 |
+| SD - inpaint | 12.65 | 12.81 | 15.3 | 15.58 |
+| SD - controlnet | 9.1 | 9.25 | 11.03 | 11.22 |
+| IF | 31.88 | 31.14 | ❌ | 43.92 |
+
+### RTX 4090 (batch size: 16)
+
+| **Pipeline** | **torch 2.0 - no compile** | **torch nightly - no compile** | **torch 2.0 - compile** | **torch nightly - compile** |
+|:---:|:---:|:---:|:---:|:---:|
+| SD - txt2img | 3.17 | 3.2 | 3.84 | 3.85 |
+| SD - img2img | 3.16 | 3.2 | 3.84 | 3.85 |
+| SD - inpaint | 3.17 | 3.2 | 3.85 | 3.85 |
+| SD - controlnet | 2.23 | 2.3 | 2.7 | 2.75 |
+| IF | 9.26 | 9.2 | ❌ | 13.31 |
+
+## Notes
+
+* Follow this [PR](https://github.com/huggingface/diffusers/pull/3313) for more details on the environment used for conducting the benchmarks.
+* For the DeepFloyd IF pipeline with batch sizes > 1, we used a batch size > 1 only in the first IF pipeline (text-to-image generation) and NOT for upscaling. That means the two upscaling pipelines received a batch size of 1.
+
+*Thanks to [Horace He](https://github.com/Chillee) from the PyTorch team for their help in improving our support of `torch.compile()` in Diffusers.*
\ No newline at end of file
diff --git a/diffuserslocal/docs/source/en/optimization/xformers.md b/diffuserslocal/docs/source/en/optimization/xformers.md
new file mode 100644
index 0000000000000000000000000000000000000000..e5aa4d106ad2e211c0484acb7ebe588ea8e72ec9
--- /dev/null
+++ b/diffuserslocal/docs/source/en/optimization/xformers.md
@@ -0,0 +1,35 @@
+
+# xFormers
+
+We recommend [xFormers](https://github.com/facebookresearch/xformers) for both inference and training. In our tests, the optimizations performed in the attention blocks allow for both faster speed and reduced memory consumption.
+
+Install xFormers from `pip`:
+
+```bash
+pip install xformers
+```
+


+fine-tuning** | **Comments** |
+| :-------------------------------------------------: | :----------------: | :-------------------------------------: | :---------------------------------------------------------------------------------------------: |
+| [Instruct Pix2Pix](#instruct-pix2pix) | ✅ | ❌ | Can additionally be fine-tuned for better performance on specific edit instructions. |
+| [Pix2Pix Zero](#pix2pix-zero) | ✅ | ❌ | |
+| [Attend and Excite](#attend-and-excite) | ✅ | ❌ | |
+| [Semantic Guidance](#semantic-guidance) | ✅ | ❌ | |
+| [Self-attention Guidance](#self-attention-guidance) | ✅ | ❌ | |
+| [Depth2Image](#depth2image) | ✅ | ❌ | |
+| [MultiDiffusion Panorama](#multidiffusion-panorama) | ✅ | ❌ | |
+| [DreamBooth](#dreambooth) | ❌ | ✅ | |
+| [Textual Inversion](#textual-inversion) | ❌ | ✅ | |
+| [ControlNet](#controlnet) | ✅ | ❌ | A ControlNet can be trained/fine-tuned on a custom conditioning. |
+| [Prompt Weighting](#prompt-weighting) | ✅ | ❌ | |
+| [Custom Diffusion](#custom-diffusion) | ❌ | ✅ | |
+| [Model Editing](#model-editing) | ✅ | ❌ | |
+| [DiffEdit](#diffedit) | ✅ | ❌ | |
+| [T2I-Adapter](#t2i-adapter) | ✅ | ❌ | |
+| [Fabric](#fabric) | ✅ | ❌ | |
+
+## Instruct Pix2Pix
+
+[Paper](https://arxiv.org/abs/2211.09800)
+
+[Instruct Pix2Pix](../api/pipelines/pix2pix) is fine-tuned from Stable Diffusion to support editing input images. It takes as inputs an image and a prompt describing an edit, and it outputs the edited image.
+Instruct Pix2Pix has been explicitly trained to work well with [InstructGPT](https://openai.com/blog/instruction-following/)-like prompts.
+
+See the [Instruct Pix2Pix](../api/pipelines/pix2pix) pipeline docs for more information on how to use it.
+
+## Pix2Pix Zero
+
+[Paper](https://arxiv.org/abs/2302.03027)
+
+[Pix2Pix Zero](../api/pipelines/pix2pix_zero) allows modifying an image so that one concept or subject is translated to another one while preserving general image semantics.
+
+The denoising process is guided from one conceptual embedding towards another conceptual embedding. The intermediate latents are optimized during the denoising process to push the attention maps towards reference attention maps. The reference attention maps come from the denoising process of the input image and are used to encourage semantic preservation.
+
+Pix2Pix Zero can be used to edit both synthetic images and real images.
+
+- To edit a synthetic image, one first generates an image from a caption.
+  Next, we generate captions for the concept to be edited and for the new target concept. We can use a model like [Flan-T5](https://huggingface.co/docs/transformers/model_doc/flan-t5) for this purpose. Then, "mean" prompt embeddings for both the source and target concepts are created with the text encoder. Finally, the pix2pix-zero algorithm is used to edit the synthetic image.
+- To edit a real image, one first generates an image caption using a model like [BLIP](https://huggingface.co/docs/transformers/model_doc/blip). Then, DDIM inversion is applied to the prompt and image to generate "inverse" latents. As before, "mean" prompt embeddings for both the source and target concepts are created, and finally the pix2pix-zero algorithm, in combination with the "inverse" latents, is used to edit the image.
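The "mean" prompt embedding step can be pictured with a small sketch. In the real pipeline, each caption is turned into an embedding tensor by the text encoder; here, short hypothetical toy vectors stand in for those embeddings, and the helper names are illustrative only. Averaging the embeddings of many captions per concept gives one embedding per concept, and the difference between the two means is the direction the denoising process is steered along, from the source concept toward the target concept.

```python
from typing import List, Sequence

Vector = List[float]


def mean_embedding(embeddings: Sequence[Vector]) -> Vector:
    """Element-wise average of equally sized embedding vectors."""
    if not embeddings:
        raise ValueError("need at least one embedding")
    dim = len(embeddings[0])
    if any(len(e) != dim for e in embeddings):
        raise ValueError("all embeddings must have the same size")
    return [sum(e[i] for e in embeddings) / len(embeddings) for i in range(dim)]


def edit_direction(source: Sequence[Vector], target: Sequence[Vector]) -> Vector:
    """Difference between the mean target-concept and mean source-concept embeddings."""
    src = mean_embedding(source)
    tgt = mean_embedding(target)
    return [t - s for s, t in zip(src, tgt)]
```

Averaging over many generated captions, rather than using a single one, smooths out wording quirks of any individual caption.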


+Play around with the Spaces below and see if you notice a difference between generated images with and without a depth map!

+

+

diff --git a/diffuserslocal/docs/source/en/using-diffusers/diffedit.md b/diffuserslocal/docs/source/en/using-diffusers/diffedit.md

new file mode 100644

index 0000000000000000000000000000000000000000..4c32eb4c482b86a86004c2870b79f307dc5553e5

--- /dev/null

+++ b/diffuserslocal/docs/source/en/using-diffusers/diffedit.md

@@ -0,0 +1,262 @@

+# DiffEdit

+

+[[open-in-colab]]

+

+Image editing typically requires providing a mask of the area to be edited. DiffEdit automatically generates the mask for you based on a text query, so you can edit an image without creating a mask by hand in image editing software. The DiffEdit algorithm works in three steps:

+

+1. The diffusion model denoises an image conditioned on a query text and a reference text, which produces different noise estimates for different areas of the image; the difference between the estimates is used to infer a mask of the area that needs to change to match the query text.

+2. The input image is encoded into latent space with DDIM inversion.

+3. The latents are decoded with the diffusion model conditioned on the query text, using the mask as a guide so that pixels outside the mask stay the same as in the input image.
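Step 1 can be illustrated with a simplified, paper-style sketch on tiny 2D grids. It assumes you already have several pairs of noise predictions for the same noised inputs, one conditioned on the query text and one on the reference text. The per-location absolute difference is averaged over those pairs, rescaled to [0, 1], and binarized. `estimate_edit_mask` and its `threshold` argument are hypothetical and are not the signature of `generate_mask`, which may post-process the difference differently.

```python
from typing import List

Grid = List[List[float]]


def estimate_edit_mask(
    query_noise: List[Grid], reference_noise: List[Grid], threshold: float = 0.5
) -> List[List[int]]:
    """Binary edit mask from the disagreement between two sets of noise estimates.

    query_noise[k] and reference_noise[k] are noise predictions for the same
    noised input k, conditioned on the query text and the reference text.
    """
    n = len(query_noise)
    h, w = len(query_noise[0]), len(query_noise[0][0])
    # Average absolute difference across the n samples, per spatial location
    diff = [
        [sum(abs(q[i][j] - r[i][j]) for q, r in zip(query_noise, reference_noise)) / n for j in range(w)]
        for i in range(h)
    ]
    peak = max(max(row) for row in diff)
    if peak == 0:
        # The two prompts agree everywhere: nothing to edit
        return [[0] * w for _ in range(h)]
    # Rescale to [0, 1] and binarize; 1 marks a location to edit
    return [[1 if v / peak >= threshold else 0 for v in row] for row in diff]
```

Averaging over several noise samples makes the mask less sensitive to any single random draw.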

+

+This guide will show you how to use DiffEdit to edit images without manually creating a mask.

+

+Before you begin, make sure you have the following libraries installed:

+

+```py

+# uncomment to install the necessary libraries in Colab

+#!pip install diffusers transformers accelerate safetensors

+```

+

+The [`StableDiffusionDiffEditPipeline`] requires an image mask and a set of partially inverted latents. The image mask is generated by the [`~StableDiffusionDiffEditPipeline.generate_mask`] function, which takes two parameters, `source_prompt` and `target_prompt`. These parameters determine what to edit in the image. For example, if you want to change a bowl of *fruits* to a bowl of *pears*, then:

+

+```py

+source_prompt = "a bowl of fruits"

+target_prompt = "a bowl of pears"

+```

+

+The partially inverted latents are generated from the [`~StableDiffusionDiffEditPipeline.invert`] function, and it is generally a good idea to include a `prompt` or *caption* describing the image to help guide the inverse latent sampling process. The caption can often be your `source_prompt`, but feel free to experiment with other text descriptions!

+

+Load the pipeline, scheduler, and inverse scheduler, and enable some optimizations to reduce memory usage:

+

+```py

+import torch

+from diffusers import DDIMScheduler, DDIMInverseScheduler, StableDiffusionDiffEditPipeline

+

+pipeline = StableDiffusionDiffEditPipeline.from_pretrained(

+ "stabilityai/stable-diffusion-2-1",

+ torch_dtype=torch.float16,

+ safety_checker=None,

+ use_safetensors=True,

+)

+pipeline.scheduler = DDIMScheduler.from_config(pipeline.scheduler.config)

+pipeline.inverse_scheduler = DDIMInverseScheduler.from_config(pipeline.scheduler.config)

+pipeline.enable_model_cpu_offload()

+pipeline.enable_vae_slicing()

+```
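Of the two optimizations, `enable_vae_slicing()` makes the VAE decode a batch of latents in slices (one image at a time) instead of all at once, which lowers peak memory when several images are decoded together. The sketch below shows only that batching idea in plain Python; `decode_in_slices` is a hypothetical helper, and `decode` stands in for the VAE decoder.

```python
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")
U = TypeVar("U")


def decode_in_slices(
    latents: Sequence[T], decode: Callable[[Sequence[T]], List[U]], slice_size: int = 1
) -> List[U]:
    """Decode `latents` in chunks of `slice_size` instead of as one big batch.

    Only one chunk is passed to `decode` at a time, so peak memory scales with
    `slice_size` rather than with the full batch size.
    """
    if slice_size < 1:
        raise ValueError("slice_size must be >= 1")
    outputs: List[U] = []
    for start in range(0, len(latents), slice_size):
        outputs.extend(decode(latents[start:start + slice_size]))
    return outputs
```

The output is the same as decoding the whole batch at once; only the peak memory and the number of decoder calls change.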

+

+Load the image to edit:

+

+```py

+from diffusers.utils import load_image

+

+img_url = "https://github.com/Xiang-cd/DiffEdit-stable-diffusion/raw/main/assets/origin.png"

+raw_image = load_image(img_url).convert("RGB").resize((768, 768))

+```

+

+Use the [`~StableDiffusionDiffEditPipeline.generate_mask`] function to generate the image mask. You'll need to pass it the `source_prompt` and `target_prompt` to specify what to edit in the image:

+

+```py

+source_prompt = "a bowl of fruits"

+target_prompt = "a basket of pears"

+mask_image = pipeline.generate_mask(

+ image=raw_image,

+ source_prompt=source_prompt,

+ target_prompt=target_prompt,

+)

+```

+

+Next, create the inverted latents with the [`~StableDiffusionDiffEditPipeline.invert`] function, passing it a caption that describes the image:

+

+```py

+inv_latents = pipeline.invert(prompt=source_prompt, image=raw_image).latents

+```
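With the `DDIMInverseScheduler` configured earlier, inversion runs the deterministic (eta = 0) DDIM update in the noising direction instead of the denoising direction. The scalar sketch below shows the idea; `eps_model` is a hypothetical stand-in for the UNet's noise prediction, and `abars` are example cumulative alpha products ordered from least to most noisy. Because inversion must reuse the noise estimate from the current step, the round trip is exact only when that estimate does not change between adjacent steps, which is why the check uses a constant predictor.

```python
import math
from typing import Callable, Sequence

# eps_model(x, step_index) -> predicted noise (hypothetical stand-in for the UNet)
EpsModel = Callable[[float, int], float]


def ddim_step(x: float, eps: float, abar_from: float, abar_to: float) -> float:
    """Deterministic DDIM update (eta = 0) between two noise levels.

    abar_* are cumulative alpha products: close to 1 is nearly clean, close to 0 is nearly pure noise.
    """
    # Clean sample implied by x and the noise estimate
    x0_pred = (x - math.sqrt(1.0 - abar_from) * eps) / math.sqrt(abar_from)
    # Re-noise that prediction to the target level with the same noise estimate
    return math.sqrt(abar_to) * x0_pred + math.sqrt(1.0 - abar_to) * eps


def ddim_invert(x: float, eps_model: EpsModel, abars: Sequence[float]) -> float:
    """Walk from abars[0] (least noisy) to abars[-1] (noisiest)."""
    for i in range(len(abars) - 1):
        # Approximation: the noise is estimated at the current, less noisy latent
        x = ddim_step(x, eps_model(x, i), abars[i], abars[i + 1])
    return x


def ddim_sample(x: float, eps_model: EpsModel, abars: Sequence[float]) -> float:
    """Ordinary DDIM denoising from abars[-1] back to abars[0]."""
    for i in range(len(abars) - 1, 0, -1):
        x = ddim_step(x, eps_model(x, i), abars[i], abars[i - 1])
    return x
```

For example, `ddim_sample(ddim_invert(1.25, lambda x, i: 0.3, [0.99, 0.9, 0.7, 0.5]), lambda x, i: 0.3, [0.99, 0.9, 0.7, 0.5])` returns the starting value up to floating-point error.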

+

+Finally, pass the image mask and inverted latents to the pipeline. The `target_prompt` becomes the `prompt` now, and the `source_prompt` is used as the `negative_prompt`:

+

+```py

+image = pipeline(

+ prompt=target_prompt,

+ mask_image=mask_image,

+ image_latents=inv_latents,

+ negative_prompt=source_prompt,

+).images[0]

+image.save("edited_image.png")

+```
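Roughly, two ideas meet in this call. The following is a simplified sketch based on the DiffEdit paper and standard classifier-free guidance, not the pipeline's actual code. First, with `source_prompt` as the `negative_prompt`, the negative prompt's noise prediction takes the place of the unconditional one, so guidance pushes the prediction toward `target_prompt` and away from `source_prompt`. Second, after each denoising step, latents outside the mask are replaced by the inverted latents for that step, which keeps the unmasked region close to the input image. The grids, the `guidance_scale` value, and both helper names are hypothetical.

```python
from typing import List, Sequence

Grid = List[List[float]]


def guided_noise(noise_target: Grid, noise_negative: Grid, guidance_scale: float) -> Grid:
    """Classifier-free guidance with the negative prompt as the baseline prediction."""
    return [
        [n + guidance_scale * (t - n) for t, n in zip(trow, nrow)]
        for trow, nrow in zip(noise_target, noise_negative)
    ]


def masked_blend(mask: Sequence[Sequence[int]], edited: Grid, inverted: Grid) -> Grid:
    """Keep `edited` where mask is 1 and the inverted input latents where mask is 0."""
    return [
        [m * e + (1 - m) * r for m, e, r in zip(mrow, erow, rrow)]
        for mrow, erow, rrow in zip(mask, edited, inverted)
    ]
```

Blending at every step, instead of once at the end, lets the edited region stay consistent with its unchanged surroundings as denoising progresses.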

+
