Commit e3a6a57 (parent: 689d7e7)

Upload 194 files

This view is limited to 50 files because it contains too many changes.

- .gitattributes +3 -0

- app.py +87 -0

- stylegan3-fun/.github/FUNDING.yml +3 -0

- stylegan3-fun/.github/ISSUE_TEMPLATE/bug_report.md +35 -0

- stylegan3-fun/.github/ISSUE_TEMPLATE/feature_request.md +20 -0

- stylegan3-fun/.gitignore +173 -0

- stylegan3-fun/Dockerfile +19 -0

- stylegan3-fun/LICENSE.txt +97 -0

- stylegan3-fun/README.md +507 -0

- stylegan3-fun/__pycache__/legacy.cpython-311.pyc +0 -0

- stylegan3-fun/__pycache__/legacy.cpython-38.pyc +0 -0

- stylegan3-fun/__pycache__/legacy.cpython-39.pyc +0 -0

- stylegan3-fun/avg_spectra.py +276 -0

- stylegan3-fun/calc_metrics.py +188 -0

- stylegan3-fun/dataset_tool.py +547 -0

- stylegan3-fun/discriminator_synthesis.py +1007 -0

- stylegan3-fun/dnnlib/__init__.py +9 -0

- stylegan3-fun/dnnlib/__pycache__/__init__.cpython-311.pyc +0 -0

- stylegan3-fun/dnnlib/__pycache__/__init__.cpython-38.pyc +0 -0

- stylegan3-fun/dnnlib/__pycache__/__init__.cpython-39.pyc +0 -0

- stylegan3-fun/dnnlib/__pycache__/util.cpython-311.pyc +0 -0

- stylegan3-fun/dnnlib/__pycache__/util.cpython-38.pyc +0 -0

- stylegan3-fun/dnnlib/__pycache__/util.cpython-39.pyc +0 -0

- stylegan3-fun/dnnlib/util.py +491 -0

- stylegan3-fun/docs/avg_spectra_screen0.png +0 -0

- stylegan3-fun/docs/avg_spectra_screen0_half.png +0 -0

- stylegan3-fun/docs/configs.md +201 -0

- stylegan3-fun/docs/dataset-tool-help.txt +70 -0

- stylegan3-fun/docs/stylegan3-teaser-1920x1006.png +3 -0

- stylegan3-fun/docs/train-help.txt +53 -0

- stylegan3-fun/docs/troubleshooting.md +31 -0

- stylegan3-fun/docs/visualizer_screen0.png +3 -0

- stylegan3-fun/docs/visualizer_screen0_half.png +0 -0

- stylegan3-fun/environment.yml +35 -0

- stylegan3-fun/gen_images.py +145 -0

- stylegan3-fun/gen_video.py +281 -0

- stylegan3-fun/generate.py +838 -0

- stylegan3-fun/gui_utils/__init__.py +9 -0

- stylegan3-fun/gui_utils/__pycache__/__init__.cpython-311.pyc +0 -0

- stylegan3-fun/gui_utils/__pycache__/__init__.cpython-38.pyc +0 -0

- stylegan3-fun/gui_utils/__pycache__/__init__.cpython-39.pyc +0 -0

- stylegan3-fun/gui_utils/__pycache__/gl_utils.cpython-311.pyc +0 -0

- stylegan3-fun/gui_utils/__pycache__/gl_utils.cpython-38.pyc +0 -0

- stylegan3-fun/gui_utils/__pycache__/gl_utils.cpython-39.pyc +0 -0

- stylegan3-fun/gui_utils/__pycache__/glfw_window.cpython-311.pyc +0 -0

- stylegan3-fun/gui_utils/__pycache__/glfw_window.cpython-38.pyc +0 -0

- stylegan3-fun/gui_utils/__pycache__/glfw_window.cpython-39.pyc +0 -0

- stylegan3-fun/gui_utils/__pycache__/imgui_utils.cpython-311.pyc +0 -0

- stylegan3-fun/gui_utils/__pycache__/imgui_utils.cpython-38.pyc +0 -0

- stylegan3-fun/gui_utils/__pycache__/imgui_utils.cpython-39.pyc +0 -0

.gitattributes CHANGED

@@ -33,3 +33,6 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+stylegan3-fun/docs/stylegan3-teaser-1920x1006.png filter=lfs diff=lfs merge=lfs -text
+stylegan3-fun/docs/visualizer_screen0.png filter=lfs diff=lfs merge=lfs -text
+stylegan3-fun/out/seed0002.png filter=lfs diff=lfs merge=lfs -text
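As a rough way to see which uploaded files the `.gitattributes` patterns route through Git LFS, the sketch below approximates the matching with Python's `fnmatch`. The helper name `is_lfs_tracked` is made up for illustration, and real gitattributes matching has more rules than this (negation, `**`, directory handling), so treat it as an approximation rather than a reimplementation.

```python
from fnmatch import fnmatch
from pathlib import PurePosixPath

# Patterns copied from the .gitattributes hunk (three existing, three added).
LFS_PATTERNS = [
    "*.zip",
    "*.zst",
    "*tfevents*",
    "stylegan3-fun/docs/stylegan3-teaser-1920x1006.png",
    "stylegan3-fun/docs/visualizer_screen0.png",
    "stylegan3-fun/out/seed0002.png",
]


def is_lfs_tracked(path: str) -> bool:
    """Approximate check: patterns without a slash are compared against the
    file name, patterns containing a slash against the full repository path."""
    name = PurePosixPath(path).name
    for pattern in LFS_PATTERNS:
        target = path if "/" in pattern else name
        if fnmatch(target, pattern):
            return True
    return False


for candidate in (
    "stylegan3-fun/docs/visualizer_screen0.png",
    "stylegan3-fun/docs/visualizer_screen0_half.png",
    "app.py",
):
    print(candidate, is_lfs_tracked(candidate))
```

The three added entries are exact paths, not globs, so only those specific PNGs are matched. This lines up with the file list, where `visualizer_screen0.png` shows `+3 -0` (presumably a three-line LFS pointer) while `visualizer_screen0_half.png` shows `+0 -0`.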

app.py

ADDED

|

@@ -0,0 +1,87 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

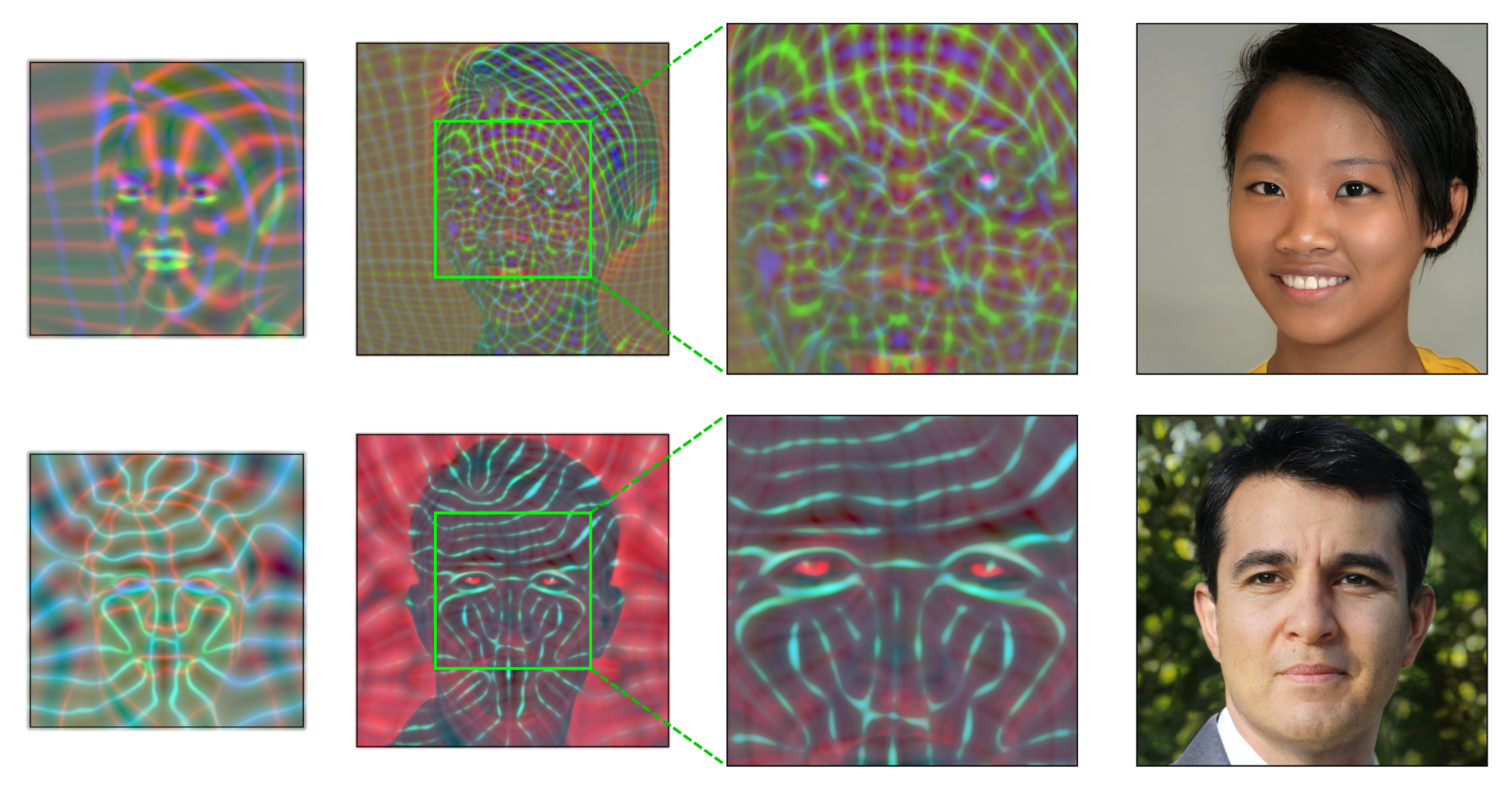

|

|

|

|

|

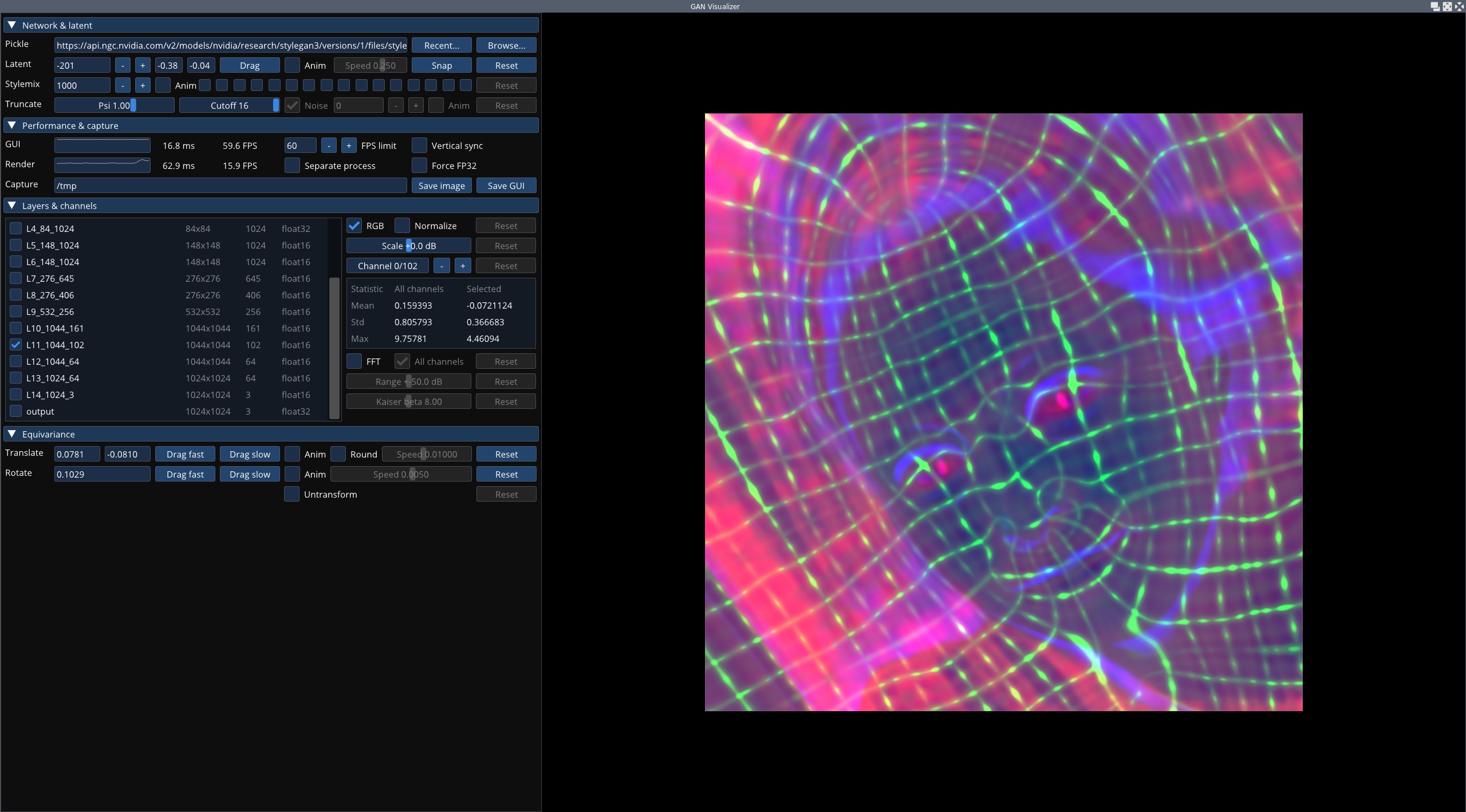

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

import sys

|

| 3 |

+

import re

|

| 4 |

+

from typing import List, Optional, Tuple, Union

|

| 5 |

+

import random

|

| 6 |

+

|

| 7 |

+

sys.path.append('stylegan3-fun') # change this to the path where dnnlib is located

|

| 8 |

+

|

| 9 |

+

import numpy as np

|

| 10 |

+

import PIL.Image

|

| 11 |

+

import torch

|

| 12 |

+

import streamlit as st

|

| 13 |

+

import dnnlib

|

| 14 |

+

import legacy

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

def parse_range(s: Union[str, List]) -> List[int]:

|

| 18 |

+

'''Parse a comma separated list of numbers or ranges and return a list of ints.

|

| 19 |

+

|

| 20 |

+

Example: '1,2,5-10' returns [1, 2, 5, 6, 7]

|

| 21 |

+

'''

|

| 22 |

+

if isinstance(s, list): return s

|

| 23 |

+

ranges = []

|

| 24 |

+

range_re = re.compile(r'^(\d+)-(\d+)$')

|

| 25 |

+

for p in s.split(','):

|

| 26 |

+

m = range_re.match(p)

|

| 27 |

+

if m:

|

| 28 |

+

ranges.extend(range(int(m.group(1)), int(m.group(2))+1))

|

| 29 |

+

else:

|

| 30 |

+

ranges.append(int(p))

|

| 31 |

+

return ranges

|

| 32 |

+

|

| 33 |

+

def make_transform(translate: Tuple[float,float], angle: float):

|

| 34 |

+

m = np.eye(3)

|

| 35 |

+

s = np.sin(angle/360.0*np.pi*2)

|

| 36 |

+

c = np.cos(angle/360.0*np.pi*2)

|

| 37 |

+

m[0][0] = c

|

| 38 |

+

m[0][1] = s

|

| 39 |

+

m[0][2] = translate[0]

|

| 40 |

+

m[1][0] = -s

|

| 41 |

+

m[1][1] = c

|

| 42 |

+

m[1][2] = translate[1]

|

| 43 |

+

return m

|

| 44 |

+

|

| 45 |

+

def generate_image(network_pkl: str, seed: int, truncation_psi: float, noise_mode: str, translate: Tuple[float,float], rotate: float, class_idx: Optional[int]):

|

| 46 |

+

print('Loading networks from "%s"...' % network_pkl)

|

| 47 |

+

device = torch.device('cuda')

|

| 48 |

+

with open(network_pkl, 'rb') as f:

|

| 49 |

+

G = legacy.load_network_pkl(f)['G_ema'].to(device) # type: ignore

|

| 50 |

+

|

| 51 |

+

# Labels.

|

| 52 |

+

label = torch.zeros([1, G.c_dim], device=device)

|

| 53 |

+

if G.c_dim != 0:

|

| 54 |

+

if class_idx is None:

|

| 55 |

+

raise Exception('Must specify class label when using a conditional network')

|

| 56 |

+

label[:, class_idx] = 1

|

| 57 |

+

|

| 58 |

+

z = torch.from_numpy(np.random.RandomState(seed).randn(1, G.z_dim)).to(device)

|

| 59 |

+

|

| 60 |

+

if hasattr(G.synthesis, 'input'):

|

| 61 |

+

m = make_transform(translate, rotate)

|

| 62 |

+

m = np.linalg.inv(m)

|

| 63 |

+

G.synthesis.input.transform.copy_(torch.from_numpy(m))

|

| 64 |

+

|

| 65 |

+

img = G(z, label, truncation_psi=truncation_psi, noise_mode=noise_mode)

|

| 66 |

+

img = (img.permute(0, 2, 3, 1) * 127.5 + 128).clamp(0, 255).to(torch.uint8)

|

| 67 |

+

img = PIL.Image.fromarray(img[0].cpu().numpy(), 'RGB')

|

| 68 |

+

return img

|

| 69 |

+

|

| 70 |

+

def main():

|

| 71 |

+

st.title('Kpop Face Generator')

|

| 72 |

+

|

| 73 |

+

st.write('Press the button below to generate a new image:')

|

| 74 |

+

if st.button('Generate'):

|

| 75 |

+

network_pkl = 'kpopGG.pkl'

|

| 76 |

+

seed = random.randint(0, 99999)

|

| 77 |

+

truncation_psi = 0.45

|

| 78 |

+

noise_mode = 'const'

|

| 79 |

+

translate = (0.0, 0.0)

|

| 80 |

+

rotate = 0.0

|

| 81 |

+

class_idx = None

|

| 82 |

+

|

| 83 |

+

image = generate_image(network_pkl, seed, truncation_psi, noise_mode, translate, rotate, class_idx)

|

| 84 |

+

st.image(image)

|

| 85 |

+

|

| 86 |

+

if __name__ == "__main__":

|

| 87 |

+

main()
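A note on `parse_range` in `app.py`: the docstring example stops at 7, but the code adds 1 to the upper bound, so a range like `5-10` is inclusive and expands all the way to 10. The function is also never called from `main()` in this file. The standalone sketch below restates the same logic (only the type hints and docstring are changed) to show the actual expansion.

```python
import re
from typing import List, Union


def parse_range(s: Union[str, List[int]]) -> List[int]:
    """Expand a comma-separated list of numbers and inclusive ranges."""
    if isinstance(s, list):
        return s
    ranges: List[int] = []
    range_re = re.compile(r'^(\d+)-(\d+)$')
    for p in s.split(','):
        m = range_re.match(p)
        if m:
            # The +1 makes the upper bound inclusive.
            ranges.extend(range(int(m.group(1)), int(m.group(2)) + 1))
        else:
            ranges.append(int(p))
    return ranges


print(parse_range('1,2,5-10'))  # [1, 2, 5, 6, 7, 8, 9, 10]
```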

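To make the `make_transform` step in `app.py` easier to follow, here is a dependency-free restatement that builds the same 3x3 matrix layout with plain lists instead of NumPy. The `apply` helper is introduced only for this illustration and is not part of the commit. With the defaults used in `main()` (`translate = (0.0, 0.0)`, `rotate = 0.0`) the matrix is the identity, so the inverse copied into `G.synthesis.input.transform` leaves the input unchanged.

```python
import math
from typing import List, Tuple


def make_transform(translate: Tuple[float, float], angle: float) -> List[List[float]]:
    """Rotation by `angle` degrees plus a translation, as a 3x3 homogeneous matrix."""
    s = math.sin(math.radians(angle))  # same value as angle/360*pi*2 in app.py
    c = math.cos(math.radians(angle))
    return [
        [c, s, translate[0]],
        [-s, c, translate[1]],
        [0.0, 0.0, 1.0],
    ]


def apply(m: List[List[float]], x: float, y: float) -> Tuple[float, float]:
    """Hypothetical helper: multiply the matrix by the homogeneous point (x, y, 1)."""
    return (
        m[0][0] * x + m[0][1] * y + m[0][2],
        m[1][0] * x + m[1][1] * y + m[1][2],
    )


identity = make_transform((0.0, 0.0), 0.0)
quarter_turn = make_transform((0.0, 0.0), 90.0)

print(apply(identity, 2.0, 3.0))      # the point is unchanged
print(apply(quarter_turn, 1.0, 0.0))  # approximately (0, -1)
```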

stylegan3-fun/.github/FUNDING.yml ADDED

@@ -0,0 +1,3 @@
+# These are supported funding model platforms
+
+github: PDillis # Replace with up to 4 GitHub Sponsors-enabled usernames e.g., [user1, user2]

stylegan3-fun/.github/ISSUE_TEMPLATE/bug_report.md ADDED

@@ -0,0 +1,35 @@
+---
+name: Bug report
+about: Create a report to help us improve
+title: ''
+labels: ''
+assignees: ''
+
+---
+
+**Describe the bug**
+A clear and concise description of what the bug is.
+
+**To Reproduce**
+Steps to reproduce the behavior:
+1. In '...' directory, run command '...'
+2. See error (copy&paste full log, including exceptions and **stacktraces**).
+
+Please copy&paste text instead of screenshots for better searchability.
+
+**Expected behavior**
+A clear and concise description of what you expected to happen.
+
+**Screenshots**
+If applicable, add screenshots to help explain your problem.
+
+**Desktop (please complete the following information):**
+ - OS: [e.g. Linux Ubuntu 20.04, Windows 10]
+ - PyTorch version (e.g., pytorch 1.9.0)
+ - CUDA toolkit version (e.g., CUDA 11.4)
+ - NVIDIA driver version
+ - GPU [e.g., Titan V, RTX 3090]
+ - Docker: did you use Docker? If yes, specify docker image URL (e.g., nvcr.io/nvidia/pytorch:21.08-py3)
+
+**Additional context**
+Add any other context about the problem here.

stylegan3-fun/.github/ISSUE_TEMPLATE/feature_request.md ADDED

@@ -0,0 +1,20 @@
+---
+name: Feature request
+about: Suggest an idea for this project
+title: ''
+labels: ''
+assignees: ''
+
+---
+
+**Is your feature request related to a problem? Please describe.**
+A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]
+
+**Describe the solution you'd like**
+A clear and concise description of what you want to happen.
+
+**Describe alternatives you've considered**
+A clear and concise description of any alternative solutions or features you've considered.
+
+**Additional context**
+Add any other context or screenshots about the feature request here.

stylegan3-fun/.gitignore ADDED

@@ -0,0 +1,173 @@
+# Byte-compiled / optimized / DLL files
+*/**/__pycache__/
+__pycache__/
+*.py[cod]
+*$py.class
+
+# C extensions
+*.so
+
+# Distribution / packaging
+.Python
+build/
+develop-eggs/
+dist/
+downloads/
+eggs/
+.eggs/
+lib/
+lib64/
+parts/
+sdist/
+var/
+wheels/
+share/python-wheels/
+*.egg-info/
+.installed.cfg
+*.egg
+MANIFEST
+
+# PyInstaller
+# Usually these files are written by a python script from a template
+# before PyInstaller builds the exe, so as to inject date/other infos into it.
+*.manifest
+*.spec
+
+# Installer logs
+pip-log.txt
+pip-delete-this-directory.txt
+
+# Unit test / coverage reports
+htmlcov/
+.tox/
+.nox/
+.coverage
+.coverage.*
+.cache
+nosetests.xml
+coverage.xml
+*.cover
+*.py,cover
+.hypothesis/
+.pytest_cache/
+cover/
+
+# Translations
+*.mo
+*.pot
+
+# Django stuff:
+*.log
+local_settings.py
+db.sqlite3
+db.sqlite3-journal
+
+# Flask stuff:
+instance/
+.webassets-cache
+
+# Scrapy stuff:
+.scrapy
+
+# Sphinx documentation
+docs/_build/
+
+# PyBuilder
+.pybuilder/
+target/
+
+# Jupyter Notebook
+.ipynb_checkpoints
+
+# IPython
+profile_default/
+ipython_config.py
+
+# pyenv
+# For a library or package, you might want to ignore these files since the code is
+# intended to run in multiple environments; otherwise, check them in:
+# .python-version
+
+# pipenv
+# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
+# However, in case of collaboration, if having platform-specific dependencies or dependencies
+# having no cross-platform support, pipenv may install dependencies that don't work, or not
+# install all needed dependencies.
+#Pipfile.lock
+
+# poetry
+# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.
+# This is especially recommended for binary packages to ensure reproducibility, and is more
+# commonly ignored for libraries.
+# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control
+#poetry.lock
+
+# pdm
+# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.
+#pdm.lock
+# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it
+# in version control.
+# https://pdm.fming.dev/#use-with-ide
+.pdm.toml
+
+# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm
+__pypackages__/
+
+# Celery stuff
+celerybeat-schedule
+celerybeat.pid
+
+# SageMath parsed files
+*.sage.py
+
+# Environments
+.env
+.venv
+env/
+venv/
+ENV/
+env.bak/
+venv.bak/
+
+# Spyder project settings
+.spyderproject
+.spyproject
+
+# Rope project settings
+.ropeproject
+
+# mkdocs documentation
+/site
+
+# mypy
+.mypy_cache/
+.dmypy.json
+dmypy.json
+
+# Pyre type checker
+.pyre/
+
+# pytype static type analyzer
+.pytype/
+
+# Cython debug symbols
+cython_debug/
+
+# PyCharm
+# JetBrains specific template is maintained in a separate JetBrains.gitignore that can
+# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore
+# and can be added to the global gitignore or merged into this file. For a more nuclear
+# option (not recommended) you can uncomment the following to ignore the entire idea folder.
+.idea/
+
+# Conda temp
+.condatmp/
+
+# SGAN specific folders
+datasets/
+dlatents/
+out/
+training-runs/
+pretrained/
+video/
+_screenshots/

stylegan3-fun/Dockerfile ADDED

@@ -0,0 +1,19 @@
+# Copyright (c) 2021, NVIDIA CORPORATION & AFFILIATES. All rights reserved.
+#
+# NVIDIA CORPORATION and its licensors retain all intellectual property
+# and proprietary rights in and to this software, related documentation
+# and any modifications thereto. Any use, reproduction, disclosure or
+# distribution of this software and related documentation without an express
+# license agreement from NVIDIA CORPORATION is strictly prohibited.
+
+FROM nvcr.io/nvidia/pytorch:21.08-py3
+
+ENV PYTHONDONTWRITEBYTECODE 1
+ENV PYTHONUNBUFFERED 1
+
+RUN pip install imageio imageio-ffmpeg==0.4.4 pyspng==0.1.0
+
+WORKDIR /workspace
+
+RUN (printf '#!/bin/bash\nexec \"$@\"\n' >> /entry.sh) && chmod a+x /entry.sh
+ENTRYPOINT ["/entry.sh"]

stylegan3-fun/LICENSE.txt ADDED

@@ -0,0 +1,97 @@
+Copyright (c) 2021, NVIDIA Corporation & affiliates. All rights reserved.
+
+
+NVIDIA Source Code License for StyleGAN3
+
+
+=======================================================================
+
+1. Definitions
+
+"Licensor" means any person or entity that distributes its Work.
+
+"Software" means the original work of authorship made available under
+this License.
+
+"Work" means the Software and any additions to or derivative works of
+the Software that are made available under this License.
+
+The terms "reproduce," "reproduction," "derivative works," and
+"distribution" have the meaning as provided under U.S. copyright law;
+provided, however, that for the purposes of this License, derivative
+works shall not include works that remain separable from, or merely
+link (or bind by name) to the interfaces of, the Work.
+
+Works, including the Software, are "made available" under this License
+by including in or with the Work either (a) a copyright notice
+referencing the applicability of this License to the Work, or (b) a
+copy of this License.
+
+2. License Grants
+
+2.1 Copyright Grant. Subject to the terms and conditions of this
+License, each Licensor grants to you a perpetual, worldwide,
+non-exclusive, royalty-free, copyright license to reproduce,
+prepare derivative works of, publicly display, publicly perform,
+sublicense and distribute its Work and any resulting derivative
+works in any form.
+
+3. Limitations
+
+3.1 Redistribution. You may reproduce or distribute the Work only
+if (a) you do so under this License, (b) you include a complete
+copy of this License with your distribution, and (c) you retain
+without modification any copyright, patent, trademark, or
+attribution notices that are present in the Work.
+
+3.2 Derivative Works. You may specify that additional or different
+terms apply to the use, reproduction, and distribution of your
+derivative works of the Work ("Your Terms") only if (a) Your Terms
+provide that the use limitation in Section 3.3 applies to your
+derivative works, and (b) you identify the specific derivative
+works that are subject to Your Terms. Notwithstanding Your Terms,
+this License (including the redistribution requirements in Section
+3.1) will continue to apply to the Work itself.
+
+3.3 Use Limitation. The Work and any derivative works thereof only
+may be used or intended for use non-commercially. Notwithstanding
+the foregoing, NVIDIA and its affiliates may use the Work and any
+derivative works commercially. As used herein, "non-commercially"
+means for research or evaluation purposes only.
+
+3.4 Patent Claims. If you bring or threaten to bring a patent claim
+against any Licensor (including any claim, cross-claim or
+counterclaim in a lawsuit) to enforce any patents that you allege
+are infringed by any Work, then your rights under this License from
+such Licensor (including the grant in Section 2.1) will terminate
+immediately.
+
+3.5 Trademarks. This License does not grant any rights to use any
+Licensor’s or its affiliates’ names, logos, or trademarks, except
+as necessary to reproduce the notices described in this License.
+
+3.6 Termination. If you violate any term of this License, then your
+rights under this License (including the grant in Section 2.1) will
+terminate immediately.
+
+4. Disclaimer of Warranty.
+
+THE WORK IS PROVIDED "AS IS" WITHOUT WARRANTIES OR CONDITIONS OF ANY
+KIND, EITHER EXPRESS OR IMPLIED, INCLUDING WARRANTIES OR CONDITIONS OF
+MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE, TITLE OR
+NON-INFRINGEMENT. YOU BEAR THE RISK OF UNDERTAKING ANY ACTIVITIES UNDER
+THIS LICENSE.
+
+5. Limitation of Liability.
+
+EXCEPT AS PROHIBITED BY APPLICABLE LAW, IN NO EVENT AND UNDER NO LEGAL
+THEORY, WHETHER IN TORT (INCLUDING NEGLIGENCE), CONTRACT, OR OTHERWISE
+SHALL ANY LICENSOR BE LIABLE TO YOU FOR DAMAGES, INCLUDING ANY DIRECT,
+INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES ARISING OUT OF
+OR RELATED TO THIS LICENSE, THE USE OR INABILITY TO USE THE WORK
+(INCLUDING BUT NOT LIMITED TO LOSS OF GOODWILL, BUSINESS INTERRUPTION,
+LOST PROFITS OR DATA, COMPUTER FAILURE OR MALFUNCTION, OR ANY OTHER
+COMMERCIAL DAMAGES OR LOSSES), EVEN IF THE LICENSOR HAS BEEN ADVISED OF
+THE POSSIBILITY OF SUCH DAMAGES.
+
+=======================================================================

stylegan3-fun/README.md

ADDED

|

@@ -0,0 +1,507 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# StyleGAN3-Fun<br><sub>Let's have fun with StyleGAN2/ADA/3!</sub>

|

| 2 |

+

|

| 3 |

+

SOTA GANs are hard to train and to explore, and StyleGAN2/ADA/3 are no different. The point of this repository is to allow

|

| 4 |

+

the user to both easily train and explore the trained models without unnecessary headaches.

|

| 5 |

+

|

| 6 |

+

As [before](https://github.com/PDillis/stylegan2-fun), we will build upon the official repository, which has the advantage

|

| 7 |

+

of being backwards-compatible. As such, we can use our previously-trained models from StyleGAN2 and StyleGAN2-ADA. Please

|

| 8 |

+

get acquainted with the official repository and its codebase, as we will be building upon it and as such, increase its

|

| 9 |

+

capabilities (but hopefully not its complexity!).

|

| 10 |

+

|

| 11 |

+

## Additions

|

| 12 |

+

|

| 13 |

+

This repository adds the following changes (the list is not yet complete):

|

| 14 |

+

|

| 15 |

+

* ***Dataset Setup (`dataset_tool.py`)***

|

| 16 |

+

* **RGBA support**, which means going back to saving images as `.png` ([Issue #156](https://github.com/NVlabs/stylegan3/issues/156) by @1378dm). Models can be trained on RGBA data and can generate RGBA images.

|

| 17 |

+

* ***TODO:*** ~~Check that training code is correct for normalizing the alpha channel~~, as well as making the

|

| 18 |

+

interpolation code work with this new format (look into [`moviepy.editor.VideoClip`](https://zulko.github.io/moviepy/getting_started/videoclips.html?highlight=mask#mask-clips))

|

| 19 |

+

* For now, interpolation videos will only be saved in RGB format, i.e., discarding the alpha channel.

|

| 20 |

+

* **`--center-crop-tall`**: add vertical black bars to the sides of each image in the dataset, for when the images are rectangular (height > width)

|

| 21 |

+

and you wish to train a square model, in the same vein as the horizontal bars added when using `--center-crop-wide` (where width > height).

|

| 22 |

+

* This is useful when you don't want to lose information from the left and right side of the image by only using the center

|

| 23 |

+

crop (likewise for `--center-crop-wide`, but for the top and bottom of the image).

|

| 24 |

+

* Note that each image doesn't have to be of the same size, and the added bars will only ensure you get a square image, which will then be

|

| 25 |

+

resized to the model's desired resolution (set by `--resolution`).

|

| 26 |

+

* Grayscale images in the dataset are converted to `RGB`

|

| 27 |

+

* If you want to turn this off, remove the respective line in `dataset_tool.py`, e.g., if your dataset is made of images in a folder, then the function to be used is

|

| 28 |

+

`open_image_folder` in `dataset_tool.py`, and the line to be removed is `img = img.convert('RGB')` in the `iterate_images` inner function.

|

| 29 |

+

* The dataset can be forced to be of a specific number of channels, that is, grayscale, RGB or RGBA.

|

| 30 |

+

* To use this, set `--force-channels=1` for grayscale, `--force-channels=3` for RGB, and `--force-channels=4` for RGBA.

|

| 31 |

+

* If the dataset tool encounters an error, print it along with the offending image, but continue with the rest of the dataset

|

| 32 |

+

([PR #39](https://github.com/NVlabs/stylegan3/pull/39) from [Andreas Jansson](https://github.com/andreasjansson)).

|

| 33 |

+

* For conditional models, we can use the subdirectories as the classes by adding `--subfolders-as-labels`. This will

|

| 34 |

+

generate the `dataset.json` file automatically as done by @pbaylies [here](https://github.com/pbaylies/stylegan2-ada/blob/a8f0b1c891312631f870c94f996bcd65e0b8aeef/dataset_tool.py#L772)

|

| 35 |

+

* Additionally, in the `--source` folder, we will save a `class_labels.txt` file, to record which class corresponds to each subdirectory.
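As a rough illustration of the `--subfolders-as-labels` idea above, the following sketch builds the label list from a folder whose subdirectories are the classes. It is not the code in `dataset_tool.py`: the helper name `labels_from_subfolders`, the alphabetical class ordering, and the extension filter are assumptions made for this example. The `{"labels": [[path, class_idx], ...]}` layout follows the `dataset.json` format used by the official repository.

```python
import json
import os
import tempfile

IMAGE_EXTS = {'.png', '.jpg', '.jpeg'}  # assumption: extensions treated as images


def labels_from_subfolders(source_dir):
    """Map each subdirectory of source_dir to a class index (hypothetical helper)."""
    classes = sorted(
        d for d in os.listdir(source_dir)
        if os.path.isdir(os.path.join(source_dir, d))
    )
    class_to_idx = {name: idx for idx, name in enumerate(classes)}
    labels = []
    for name in classes:
        for fname in sorted(os.listdir(os.path.join(source_dir, name))):
            if os.path.splitext(fname)[1].lower() in IMAGE_EXTS:
                # Relative path with forward slashes, paired with its class index.
                labels.append([f'{name}/{fname}', class_to_idx[name]])
    return class_to_idx, {'labels': labels}


# Tiny demo on a throwaway folder with two classes.
with tempfile.TemporaryDirectory() as root:
    for cls, files in {'cats': ['a.png'], 'dogs': ['b.png', 'c.jpg']}.items():
        os.makedirs(os.path.join(root, cls))
        for f in files:
            open(os.path.join(root, cls, f), 'wb').close()
    class_to_idx, dataset_json = labels_from_subfolders(root)
    print(class_to_idx)              # which index each subdirectory received
    print(json.dumps(dataset_json))  # what would be written to dataset.json
```

The `class_to_idx` mapping is the kind of information a `class_labels.txt` file could hold; the actual contents of that file in this repository may differ.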

|

| 36 |

+

|

| 37 |

+

* ***Training***

|

| 38 |

+

* Add `--cfg=stylegan2-ext`, which uses @aydao's extended modifications for handling large and diverse datasets.

|

| 39 |

+

* A good explanation is found in Gwern's blog [here](https://gwern.net/face#extended-stylegan2-danbooru2019-aydao)

|

| 40 |

+

* If you wish to fine-tune from @aydao's Anime model, use `--cfg=stylegan2-ext --resume=anime512` when running `train.py`

|

| 41 |

+

* Note: ***This is an extremely experimental configuration!*** The `.pkl` files will be ~1.1 GB each and training will slow down

|

| 42 |

+

significantly. Use at your own risk!

|

| 43 |

+

* `--blur-percent`: Blur both real and generated images before passing them to the Discriminator.

|

| 44 |

+

* The blur (`blur_init_sigma=10.0`) will completely fade after the selected percentage of the training is completed (using a linear ramp).

|

| 45 |

+

* Another experimental feature; it should help with datasets that have a lot of variation, when you wish the model to slowly

|

| 46 |

+

learn to generate the objects first and then their details.

|

| 47 |

+

* `--mirrory`: Added vertical mirroring for doubling the dataset size (quadrupling if `--mirror` is used; make sure your dataset has either or both

|

| 48 |

+

of these symmetries in order for it to make sense to use them)

|

| 49 |

+

* `--gamma`: If no R1 regularization weight is provided, the heuristic formula from [StyleGAN2](https://github.com/NVlabs/stylegan2) will be used.

|

| 50 |

+

* Specifically, we will set `gamma=0.0002 * resolution ** 2 / batch_size`

|

| 51 |

+

* `--aug`: ***TODO:*** add [Deceive-D/APA](https://github.com/EndlessSora/DeceiveD) as an option.

|

| 52 |

+

* `--augpipe`: Now available to use is [StyleGAN2-ADA's](https://github.com/NVlabs/stylegan2-ada-pytorch) full list of `augpipe` options, i.e., individual augmentations (`blit`, `geom`, `color`, `filter`, `noise`, `cutout`) or their combinations (`bg`, `bgc`, `bgcf`, `bgcfn`, `bgcfnc`).

|

| 53 |

+

* `--img-snap`: Set when to save snapshot images, so now it's independent of when the model is saved (e.g., save image snapshots more often to know how the model is training without saving the model itself, to save space).

|

| 54 |

+

* `--snap-res`: The resolution of the snapshots, depending on how many images you wish to see per snapshot. Available resolutions: `1080p`, `4k`, and `8k`.

|

| 55 |

+

* `--resume-kimg`: Starting number of `kimg`, useful when continuing training from a previous run

|

| 56 |

+

* `--outdir`: Automatically set as `training-runs`, so no need to set beforehand (in general this is true throughout the repository)

|

| 57 |

+

* `--metrics`: Now set by default to `None`, so there's no need to worry about this one

|

| 58 |

+

* `--freezeD`: Renamed from `--freezed` for better readability

|

| 59 |

+

* `--freezeM`: Freeze the first layers of the Mapping Network Gm (`G.mapping`)

|

| 60 |

+

* `--freezeE`: Freeze the embedding layer of the Generator (for class-conditional models)

|

| 61 |

+

* `--freezeG`: ***TODO:*** Freeze the first layers of the Synthesis Network (`G.synthesis`; less cost to transfer learn, focus on high layers?)

|

| 62 |

+

* `--resume`: All available pre-trained models from NVIDIA (and more) can be used with a simple dictionary, depending on the `--cfg` used.

|

| 63 |

+

For example, if you wish to use StyleGAN3's `config-r`, then set `--cfg=stylegan3-r`. In addition, if you wish to transfer learn from FFHQU at 1024 resolution, set `--resume=ffhqu1024`.

|

| 64 |

+

* The full list of currently available models to transfer learn from (or synthesize new images with) is the following (***TODO:*** add small description of each model,

|

| 65 |

+

so the user can better know which to use for their particular use-case; proper citation to original authors as well):

|

| 66 |

+

|

| 67 |

+

<details>

|

| 68 |

+

<summary>StyleGAN2 models</summary>

|

| 69 |

+

|

| 70 |

+

1. Majority, if not all, are `config-f`: set `--cfg=stylegan2`

|

| 71 |

+

* `ffhq256`

|

| 72 |

+

* `ffhqu256`

|

| 73 |

+

* `ffhq512`

|

| 74 |

+

* `ffhq1024`

|

| 75 |

+

* `ffhqu1024`

|

| 76 |

+

* `celebahq256`

|

| 77 |

+

* `lsundog256`

|

| 78 |

+

* `afhqcat512`

|

| 79 |

+

* `afhqdog512`

|

| 80 |

+

* `afhqwild512`

|

| 81 |

+

* `afhq512`

|

| 82 |

+

* `brecahad512`

|

| 83 |

+

* `cifar10` (conditional, 10 classes)

|

| 84 |

+

* `metfaces1024`

|

| 85 |

+

* `metfacesu1024`

|

| 86 |

+

* `lsuncar512` (config-f)

|

| 87 |

+

* `lsuncat256` (config-f)

|

| 88 |

+

* `lsunchurch256` (config-f)

|

| 89 |

+

* `lsunhorse256` (config-f)

|

| 90 |

+

* `minecraft1024` (thanks to @jeffheaton)

|

| 91 |

+

* `imagenet512` (thanks to @shawwn)

|

| 92 |

+

* `wikiart1024-C` (conditional, 167 classes; thanks to @pbaylies)

|

| 93 |

+

* `wikiart1024-U` (thanks to @pbaylies)

|

| 94 |

+

* `maps1024` (thanks to @tjukanov)

|

| 95 |

+

* `fursona512` (thanks to @arfafax)

|

| 96 |

+

* `mlpony512` (thanks to @arfafax)

|

| 97 |

+

* `lhq1024` (thanks to @justinpinkney)

|

| 98 |

+

* `afhqcat256` (Deceive-D/APA models)

|

| 99 |

+

* `anime256` (Deceive-D/APA models)

|

| 100 |

+

* `cub256` (Deceive-D/APA models)

|

| 101 |

+

* `sddogs1024` (Self-Distilled StyleGAN models)

|

| 102 |

+

* `sdelephant512` (Self-Distilled StyleGAN models)

|

| 103 |

+

* `sdhorses512` (Self-Distilled StyleGAN models)

|

| 104 |

+

* `sdbicycles256` (Self-Distilled StyleGAN models)

|

| 105 |

+

* `sdlions512` (Self-Distilled StyleGAN models)

|

| 106 |

+

* `sdgiraffes512` (Self-Distilled StyleGAN models)

|

| 107 |

+

* `sdparrots512` (Self-Distilled StyleGAN models)

|

| 108 |

+

2. Extended StyleGAN2 config from @aydao: set `--cfg=stylegan2-ext`

|

| 109 |

+

* `anime512` (thanks to @aydao; writeup by @gwern: https://gwern.net/Faces#extended-stylegan2-danbooru2019-aydao)

|

| 110 |

+

</details>

|

| 111 |

+

|

| 112 |

+

<details>

|

| 113 |

+

<summary>StyleGAN3 models</summary>

|

| 114 |

+

|

| 115 |

+

1. `config-t`: set `--cfg=stylegan3-t`

|

| 116 |

+

* `afhq512`

|

| 117 |

+

* `ffhqu256`

|

| 118 |

+

* `ffhq1024`

|

| 119 |

+

* `ffhqu1024`

|

| 120 |

+

* `metfaces1024`

|

| 121 |

+

* `metfacesu1024`

|

| 122 |

+

* `landscapes256` (thanks to @justinpinkney)

|

| 123 |

+

* `wikiart1024` (thanks to @justinpinkney)

|

| 124 |

+

* `mechfuture256` (thanks to @edstoica; 29 kimg tick)

|

| 125 |

+

* `vivflowers256` (thanks to @edstoica; 68 kimg tick)

|

| 126 |

+

* `alienglass256` (thanks to @edstoica; 38 kimg tick)

|

| 127 |

+

* `scificity256` (thanks to @edstoica; 210 kimg tick)

|

| 128 |

+

* `scifiship256` (thanks to @edstoica; 168 kimg tick)

|

| 129 |

+

2. `config-r`: set `--cfg=stylegan3-r`

|

| 130 |

+

* `afhq512`

|

| 131 |

+

* `ffhq1024`

|

| 132 |

+

* `ffhqu1024`

|

| 133 |

+

* `ffhqu256`

|

| 134 |

+

* `metfaces1024`

|

| 135 |

+

* `metfacesu1024`

|

| 136 |

+

</details>

|

| 137 |

+

|

| 138 |

+

* The main sources of these pretrained models are both the [official NVIDIA repository](https://catalog.ngc.nvidia.com/orgs/nvidia/teams/research/models/stylegan3),

|

| 139 |

+

as well as other community repositories, such as [Justin Pinkney](https://github.com/justinpinkney) 's [Awesome Pretrained StyleGAN2](https://github.com/justinpinkney/awesome-pretrained-stylegan2)

|

| 140 |

+

and [Awesome Pretrained StyleGAN3](https://github.com/justinpinkney/awesome-pretrained-stylegan3), [Deceive-D/APA](https://github.com/EndlessSora/DeceiveD),

|

| 141 |

+

[Self-Distilled StyleGAN/Internet Photos](https://github.com/self-distilled-stylegan/self-distilled-internet-photos), and [edstoica](https://github.com/edstoica) 's

|

| 142 |

+

[Wombo Dream](https://www.wombo.art/) [-based models](https://github.com/edstoica/lucid_stylegan3_datasets_models). Others can be found around the net and are properly credited in this repository,

|

| 143 |

+

so long as they can be easily downloaded with [`dnnlib.util.open_url`](https://github.com/PDillis/stylegan3-fun/blob/4ce9d6f7601641ba1e2906ed97f2739a63fb96e2/dnnlib/util.py#L396).
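Two of the Training options above are defined by simple formulas, which can be sketched as follows. The `heuristic_gamma` function is the expression quoted under `--gamma`. The `blur_sigma` function is only an assumption about how `--blur-percent` could map to a linear ramp: it supposes the percentage is measured against the total training length in `kimg`, and the names `blur_sigma`, `cur_kimg`, and `total_kimg` are hypothetical, not taken from `train.py`.

```python
def heuristic_gamma(resolution, batch_size):
    """R1 regularization weight from the heuristic quoted under --gamma."""
    return 0.0002 * resolution ** 2 / batch_size


def blur_sigma(cur_kimg, total_kimg, blur_percent, blur_init_sigma=10.0):
    """Blur strength that fades linearly to zero.

    Assumption: the fade ends once `blur_percent` percent of `total_kimg`
    has been shown to the Discriminator.
    """
    fade_kimg = total_kimg * blur_percent / 100
    if fade_kimg <= 0:
        return 0.0  # blur disabled
    return max(1.0 - cur_kimg / fade_kimg, 0.0) * blur_init_sigma


# Example values: a 1024x1024 model with batch size 32, and a 5000 kimg run
# where the blur fades over the first 10% of training.
print(heuristic_gamma(1024, 32))
for kimg in (0, 250, 500, 1000):
    print(kimg, blur_sigma(kimg, total_kimg=5000, blur_percent=10))
```

With this reading, the blur starts at `blur_init_sigma`, halves midway through the fade window, and stays at zero afterwards.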

|

| 144 |

+

|

| 145 |

+

* ***Interpolation videos***

|

| 146 |

+

* [Random interpolation](https://youtu.be/DNfocO1IOUE)

|

| 147 |

+

* [Generate images/interpolations with the internal representations of the model](https://nvlabs-fi-cdn.nvidia.com/_web/stylegan3/videos/video_8_internal_activations.mp4)

|

| 148 |

+

* Usage: Add `--layer=<layer_name>` to specify which layer to use for interpolation.

|

| 149 |

+

* If you don't know the names of the layers available for your model, add the flag `--available-layers` and the

|

| 150 |

+

layers will be printed to the console, along with their names, number of channels, and sizes.

|

| 151 |

+

* Use one of `--grayscale` or `--rgb` to specify whether to save the images as grayscale or RGB during the interpolation.

|

| 152 |

+

* For `--rgb`, three consecutive channels (starting at `--starting-channel=0`) will be used to create the RGB image. For `--grayscale`, only the first channel will be used.

|

| 153 |

+

* Style-mixing

|

| 154 |

+

* [Sightseeding](https://twitter.com/PDillis/status/1270341433401249793?s=20&t=yLueNkagqsidZFqZ2jNPAw) (jumpiness has been fixed)

|

| 155 |

+

* [Circular interpolation](https://youtu.be/4nktYGjSVHg)

|

| 156 |

+

* [Visual-reactive interpolation](https://youtu.be/KoEAkPnE-zA) (Beta)

|

| 157 |

+

* Audiovisual-reactive interpolation (TODO)

|

| 158 |

+

* ***TODO:*** Give support to RGBA models!

|

| 159 |

+

* ***Projection into the latent space***

|

| 160 |

+

* [Project into $\mathcal{W}+$](https://arxiv.org/abs/1904.03189)

|

| 161 |

+

* Additional losses to use for better projection (e.g., using VGG16 or [CLIP](https://github.com/openai/CLIP))

|

| 162 |

+

* ***[Discriminator Synthesis](https://arxiv.org/abs/2111.02175)*** (official code)

|

| 163 |

+

* Generate a static image (`python discriminator_synthesis.py dream --help`) or a [video](https://youtu.be/hEJKWL2VQTE) with a feedback loop (`python discriminator_synthesis.py dream-zoom --help`,

|

| 164 |

+

`python discriminator_synthesis.py channel-zoom --help`, or `python discriminator_synthesis.py interp --help`)

|

| 165 |

+

* Start from a random image (`random` for noise or `perlin` for 2D fractal Perlin noise, using

|

| 166 |

+

[Mathieu Duchesneau's implementation](https://github.com/duchesneaumathieu/pyperlin)) or from an existing one

|

| 167 |

+

* ***Expansion on GUI/`visualizer.py`***

|

| 168 |

+

* Added the rest of the affine transformations

|

| 169 |

+

* Added widget for class-conditional models (***TODO:*** mix classes with continuous values for `cls`!)

|

| 170 |

+

* ***General model and code additions***

|

| 171 |

+

* [Multi-modal truncation trick](https://arxiv.org/abs/2202.12211): find the different clusters in your model and use the closest one to your dlatent, in order to increase the fidelity

|

| 172 |

+

* Usage: Run `python multimodal_truncation.py get-centroids --network=<path_to_model>` to use default values; for extra options, run `python multimodal_truncation.py get-centroids --help`

|

| 173 |

+

* StyleGAN3: anchor the latent space for easier-to-follow interpolations (thanks to [Rivers Have Wings](https://github.com/crowsonkb) and [nshepperd](https://github.com/nshepperd)).

|

| 174 |

+

* Use CPU instead of GPU if desired (not recommended, but perfectly fine for generating images, whenever the custom CUDA kernels fail to compile).

|

| 175 |

+

* Add missing dependencies and channels so that the [`conda`](https://docs.conda.io/en/latest/) environment is correctly set up on Windows

|

| 176 |

+

(PRs [#111](https://github.com/NVlabs/stylegan3/pull/111)/[#125](https://github.com/NVlabs/stylegan3/pull/125) and [#80](https://github.com/NVlabs/stylegan3/pull/80)/[#143](https://github.com/NVlabs/stylegan3/pull/143) from the base, respectively)

|

| 177 |

+

* Use [StyleGAN-NADA](https://github.com/rinongal/StyleGAN-nada) models with any part of the code (Issue [#9](https://github.com/PDillis/stylegan3-fun/issues/9))

|

| 178 |

+

* The StyleGAN-NADA models must first be converted via [Vadim Epstein](https://github.com/eps696) 's conversion code found [here](https://github.com/eps696/stylegan2ada#tweaking-models).

|

| 179 |

+

* Add PR [#173](https://github.com/NVlabs/stylegan3/pull/173), which adds the last remaining unknown kwarg needed for StyleGAN2 models using TF 1.15.
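The multi-modal truncation trick listed above can be pictured with a small sketch: instead of pulling a latent toward a single global average, it is pulled toward the closest cluster centre. This is not the code in `multimodal_truncation.py`. It assumes the centroids were already computed (for instance by the `get-centroids` command), uses plain Python lists in place of latent tensors, and the function name `multimodal_truncate` and the Euclidean distance are choices made for the example.

```python
import math


def multimodal_truncate(w, centroids, psi=0.7):
    """Pull latent w toward its nearest centroid c: w' = c + psi * (w - c)."""
    # Pick the centroid closest to w (Euclidean distance is an assumption here).
    nearest = min(centroids, key=lambda c: math.dist(w, c))
    return [ci + psi * (wi - ci) for wi, ci in zip(w, nearest)]


# Toy 2-D example with two cluster centres.
centroids = [[0.0, 0.0], [10.0, 10.0]]
w = [9.0, 12.0]
print(multimodal_truncate(w, centroids, psi=0.5))
```

Setting `psi=1.0` leaves the latent untouched, while `psi=0.0` collapses it onto the nearest centroid, mirroring the usual fidelity/diversity trade-off of truncation.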

|

| 180 |

+

* ***TODO*** list (this is a long one with more to come, so any help is appreciated):

|

| 181 |

+

* Add `--device={cuda, ref}` option to pass to each of the custom operations in order to (theoretically) be able to use AMD GPUs, as explained in

|

| 182 |

+

@l4rz's post [here](https://github.com/l4rz/practical-aspects-of-stylegan2-training#hardware)

|

| 183 |

+

* Define a [custom Generator](https://github.com/dvschultz/stylegan2-ada-pytorch/blob/59e05bb115c1c7d0de56be0523754076c2b7ee83/legacy.py#L131) in `legacy.py` to modify the output size

|

| 184 |

+

* Related: the [multi-latent](https://github.com/dvschultz/stylegan2-ada-pytorch/blob/main/training/stylegan2_multi.py), i.e., the one from [@eps696](https://github.com/eps696/stylegan2)

|

| 185 |

+

* Add [Top-K training](https://arxiv.org/abs/2002.06224) as done [here](https://github.com/dvschultz/stylegan2-ada/blob/8f4ab24f494483542d31bf10f4fdb0005dc62739/train.py#L272) and [here](https://github.com/dvschultz/stylegan2-ada-pytorch/blob/59e05bb115c1c7d0de56be0523754076c2b7ee83/training/loss.py#L79)

|

| 186 |