SivilTaram committed · Commit 9d20c05 · 1 Parent(s): fe11039

update lorahub modules

Files changed:

- app.py +21 -10

- lorahub_demo.jpg +0 -0

app.py

CHANGED

|

@@ -22,13 +22,16 @@ st.markdown(css, unsafe_allow_html=True)

|

|

| 22 |

|

| 23 |

|

| 24 |

def main():

|

| 25 |

-

st.title("LoraHub")

|

| 26 |

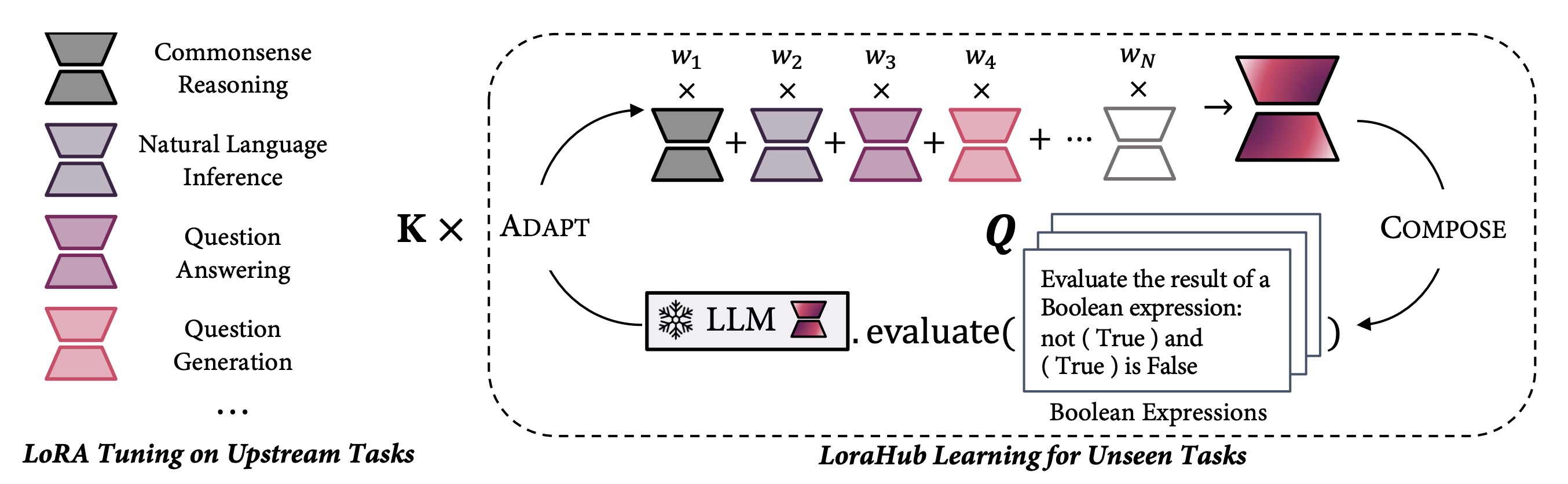

st.markdown("Low-rank adaptations (LoRA) are techniques for fine-tuning large language models on new tasks. We propose LoraHub, a framework that allows composing multiple LoRA modules trained on different tasks. The goal is to achieve good performance on unseen tasks using just a few examples, without needing extra parameters or training. And we want to build a marketplace where users can share their trained LoRA modules, thereby facilitating the application of these modules to new tasks.")

|

| 27 |

|

| 28 |

-

st.

|

|

|

|

|

|

|

|

|

|

| 29 |

|

| 30 |

with st.sidebar:

|

| 31 |

-

st.title("LoRA Module

|

| 32 |

st.markdown(

|

| 33 |

"The following modules are available for you to compose for your new task. Every module name is a peft repository in Huggingface Hub, and you can find them [here](https://huggingface.co/models?search=lorahub).")

|

| 34 |

|

|

@@ -41,7 +44,7 @@ def main():

|

|

| 41 |

hide_index=True)

|

| 42 |

|

| 43 |

st.multiselect(

|

| 44 |

-

'

|

| 45 |

list(range(len(LORA_HUB_NAMES))),

|

| 46 |

[],

|

| 47 |

key="select_names")

|

|

@@ -57,26 +60,33 @@ def main():

|

|

| 57 |

st.write('We will use the following modules', [

|

| 58 |

LORA_HUB_NAMES[i] for i in st.session_state["select_names"]])

|

| 59 |

|

| 60 |

-

st.subheader("

|

|

|

|

| 61 |

|

| 62 |

-

|

|

|

|

|

|

|

|

|

|

| 63 |

'''

|

| 64 |

Infer the date from context. Q: Today, 8/3/1997, is a day that we will never forget. What is the date one week ago from today in MM/DD/YYYY? Options: (A) 03/27/1998 (B) 09/02/1997 (C) 07/27/1997 (D) 06/29/1997 (E) 07/27/1973 (F) 12/27/1997 A:

|

| 65 |

Infer the date from context. Q: May 6, 1992 is like yesterday to Jane, but that is actually ten years ago. What is the date tomorrow in MM/DD/YYYY? Options: (A) 04/16/2002 (B) 04/07/2003 (C) 05/07/2036 (D) 05/28/2002 (E) 05/07/2002 A:

|

| 66 |

Infer the date from context. Q: Today is the second day of the third month of 1966. What is the date one week ago from today in MM/DD/YYYY? Options: (A) 02/26/1966 (B) 01/13/1966 (C) 02/02/1966 (D) 10/23/1966 (E) 02/23/1968 (F) 02/23/1966 A:

|

| 67 |

'''.strip())

|

| 68 |

|

| 69 |

-

txt_output = st.text_area('Examples Outputs (One Line One Output)', '''

|

| 70 |

(C)

|

| 71 |

(E)

|

| 72 |

(F)

|

| 73 |

'''.strip())

|

| 74 |

|

| 75 |

-

st.subheader("Set

|

|

|

|

| 76 |

|

| 77 |

-

max_step = st.slider('Maximum iteration step', 10,

|

| 78 |

|

| 79 |

st.subheader("Start LoraHub Learning")

|

|

|

|

|

|

|

| 80 |

# st.subheader("Watch the logs below")

|

| 81 |

buffer = st.expander("Learning Logs")

|

| 82 |

|

|

@@ -106,11 +116,12 @@ Infer the date from context. Q: Today is the second day of the third month of 1

|

|

| 106 |

shutil.make_archive("lora_module", 'zip', "lora")

|

| 107 |

with open("lora_module.zip", "rb") as fp:

|

| 108 |

btn = st.download_button(

|

| 109 |

-

label="Download

|

| 110 |

data=fp,

|

| 111 |

file_name="lora_module.zip",

|

| 112 |

mime="application/zip"

|

| 113 |

)

|

|

|

|

| 114 |

|

| 115 |

|

| 116 |

|

|

|

|

| 22 |

|

| 23 |

|

| 24 |

def main():

|

| 25 |

+

st.title("💡 LoraHub")

|

| 26 |

st.markdown("Low-rank adaptations (LoRA) are techniques for fine-tuning large language models on new tasks. We propose LoraHub, a framework that allows composing multiple LoRA modules trained on different tasks. The goal is to achieve good performance on unseen tasks using just a few examples, without needing extra parameters or training. And we want to build a marketplace where users can share their trained LoRA modules, thereby facilitating the application of these modules to new tasks.")

|

| 27 |

|

| 28 |

+

st.image(open("lorahub_demo.jpg", "rb").read(),

|

| 29 |

+

"The Illustration of LoraHub Learning", use_column_width=True)

|

| 30 |

+

|

| 31 |

+

st.markdown("In this demo, you will use avaiable lora modules selected in the left sidebar to tackle your new task. When the LoraHub learning is done, you can download the final LoRA module and use it for your new task. You can check out more details in our [paper](https://huggingface.co/papers/2307.13269).")

|

| 32 |

|

| 33 |

with st.sidebar:

|

| 34 |

+

st.title("🛒 LoRA Module Market", help="Feel free to clone this demo and add more modules to the marketplace. Remember to make sure your lora modules share the same base model and have the same rank.")

|

| 35 |

st.markdown(

|

| 36 |

"The following modules are available for you to compose for your new task. Every module name is a peft repository in Huggingface Hub, and you can find them [here](https://huggingface.co/models?search=lorahub).")

|

| 37 |

|

|

|

|

| 44 |

hide_index=True)

|

| 45 |

|

| 46 |

st.multiselect(

|

| 47 |

+

'Choose the modules you want to add',

|

| 48 |

list(range(len(LORA_HUB_NAMES))),

|

| 49 |

[],

|

| 50 |

key="select_names")

|

|

|

|

| 60 |

st.write('We will use the following modules', [

|

| 61 |

LORA_HUB_NAMES[i] for i in st.session_state["select_names"]])

|

| 62 |

|

| 63 |

+

st.subheader("Choose the Module Candidates")

|

| 64 |

+

st.markdown("Please checkout the sidebar on the left to select the modules you want to compose for your new task. You can also click the button to **get 20 lucky modules**.")

|

| 65 |

|

| 66 |

+

st.subheader("Upload Examples of Your Task")

|

| 67 |

+

st.markdown("When faced with a new task, our method requires a few examples of that task in order to perform the lora module composition. Below you should provide a few examples of the task you want to perform. The default examples are from the Date Understanding task of the BBH benchmark.")

|

| 68 |

+

|

| 69 |

+

txt_input = st.text_area('*Examples Inputs (One Line One Input)*',

|

| 70 |

'''

|

| 71 |

Infer the date from context. Q: Today, 8/3/1997, is a day that we will never forget. What is the date one week ago from today in MM/DD/YYYY? Options: (A) 03/27/1998 (B) 09/02/1997 (C) 07/27/1997 (D) 06/29/1997 (E) 07/27/1973 (F) 12/27/1997 A:

|

| 72 |

Infer the date from context. Q: May 6, 1992 is like yesterday to Jane, but that is actually ten years ago. What is the date tomorrow in MM/DD/YYYY? Options: (A) 04/16/2002 (B) 04/07/2003 (C) 05/07/2036 (D) 05/28/2002 (E) 05/07/2002 A:

|

| 73 |

Infer the date from context. Q: Today is the second day of the third month of 1966. What is the date one week ago from today in MM/DD/YYYY? Options: (A) 02/26/1966 (B) 01/13/1966 (C) 02/02/1966 (D) 10/23/1966 (E) 02/23/1968 (F) 02/23/1966 A:

|

| 74 |

'''.strip())

|

| 75 |

|

| 76 |

+

txt_output = st.text_area('*Examples Outputs (One Line One Output)*', '''

|

| 77 |

(C)

|

| 78 |

(E)

|

| 79 |

(F)

|

| 80 |

'''.strip())

|

| 81 |

|

| 82 |

+

st.subheader("Set Iteration Steps")

|

| 83 |

+

st.markdown("Our method involves performing multiple inference iterations to perform the LoRA module composition. The module can then be intergrated into the LLM to carry out the new task. The maximum number of inference steps impacts performance and speed. We suggest setting it to 40 steps if 20 modules were chosen, with more steps typically needed for more modules.")

|

| 84 |

|

| 85 |

+

max_step = st.slider('Maximum iteration step', 10, 100, step=5)

|

| 86 |

|

| 87 |

st.subheader("Start LoraHub Learning")

|

| 88 |

+

|

| 89 |

+

st.markdown("Note that the learning process may take a while (depending on the maximum iteration step), and downloading LoRA modules from HuggingfaceHub also takes some time. This demo runs on CPU by default, and you can monitor the learning logs below.")

|

| 90 |

# st.subheader("Watch the logs below")

|

| 91 |

buffer = st.expander("Learning Logs")

|

| 92 |

|

|

|

|

| 116 |

shutil.make_archive("lora_module", 'zip', "lora")

|

| 117 |

with open("lora_module.zip", "rb") as fp:

|

| 118 |

btn = st.download_button(

|

| 119 |

+

label="📥 Download the final LoRA Module",

|

| 120 |

data=fp,

|

| 121 |

file_name="lora_module.zip",

|

| 122 |

mime="application/zip"

|

| 123 |

)

|

| 124 |

+

st.warning("The page will be refreshed once you click the download button.")

|

| 125 |

|

| 126 |

|

| 127 |

|
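The two text areas in `app.py` take one example per line, with inputs and outputs matched by position. The diff does not show how the demo consumes `txt_input` and `txt_output`, so the following is only a minimal sketch of one way to split and pair them. `pair_examples` is a hypothetical helper, not a function from this commit, and skipping blank lines and rejecting mismatched counts are both assumptions.

```python
def pair_examples(txt_input: str, txt_output: str) -> list[tuple[str, str]]:
    """Pair the i-th non-empty input line with the i-th non-empty output line.

    Hypothetical helper for illustration; the demo's real parsing is not
    visible in this diff.
    """
    inputs = [line.strip() for line in txt_input.splitlines() if line.strip()]
    outputs = [line.strip() for line in txt_output.splitlines() if line.strip()]
    if len(inputs) != len(outputs):
        # Assumption: a count mismatch is treated as a user error.
        raise ValueError(
            f"got {len(inputs)} inputs but {len(outputs)} outputs; "
            "provide exactly one output line per input line"
        )
    return list(zip(inputs, outputs))


if __name__ == "__main__":
    demo_inputs = "Q: first question A:\nQ: second question A:"
    demo_outputs = "(C)\n(E)"
    for question, answer in pair_examples(demo_inputs, demo_outputs):
        print(answer, "<-", question)
```

Checking the counts up front means a misaligned example is reported before any LoRA modules are downloaded.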

lorahub_demo.jpg

ADDED

|
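The last hunk of `app.py` zips the `lora` directory with `shutil.make_archive` and passes the open file to `st.download_button`. A standalone sketch of the archiving step is shown here, using only the standard library so that it runs without Streamlit. `zip_directory` and the file name `adapter_config.json` are hypothetical and chosen for illustration. Returning bytes instead of an open file handle is a different design choice from the commit, not what the commit does.

```python
import io
import os
import shutil
import tempfile
import zipfile


def zip_directory(src_dir: str, archive_stem: str) -> bytes:
    """Zip the contents of src_dir and return the archive as bytes.

    Hypothetical helper: archive_stem is the output path without the
    ".zip" suffix, which shutil.make_archive appends itself.
    """
    archive_path = shutil.make_archive(archive_stem, "zip", src_dir)
    with open(archive_path, "rb") as fp:
        return fp.read()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        src = os.path.join(tmp, "lora")
        os.mkdir(src)
        # Placeholder file standing in for a saved module (assumed name).
        with open(os.path.join(src, "adapter_config.json"), "w") as f:
            f.write("{}")
        data = zip_directory(src, os.path.join(tmp, "lora_module"))
        print(zipfile.ZipFile(io.BytesIO(data)).namelist())
```

Reading the archive into memory closes the file before the download widget is built. `st.download_button` also accepts bytes for `data`, so this variant could replace the `with open(...)` block if that behavior is preferred.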