Runtime error

Upload folder using huggingface_hub

- .env +10 -0
- .gitattributes +1 -0
- .gitignore +8 -0
- 76437bc4e8bea417641aaa076508098a7158e664c1cecfabfa41df497a27f98c +3 -0
- CONTRIBUTING.md +90 -0
- LICENSE +21 -0
- README.md +213 -8
- app.py +322 -0
- app_4bit_ggml.py +320 -0
- benchmark.py +96 -0
- docs/performance.md +19 -0
- env_examples/.env.13b_example +10 -0
- env_examples/.env.7b_8bit_example +10 -0
- env_examples/.env.7b_ggmlv3_q4_0_example +10 -0
- env_examples/.env.7b_gptq_example +10 -0
- gradio_cached_examples/19/Chatbot/tmpihfsul2n.json +1 -0
- gradio_cached_examples/19/Chatbot/tmpj22ucqjj.json +1 -0
- gradio_cached_examples/19/log.csv +3 -0
- llama2_wrapper/__init__.py +1 -0
- llama2_wrapper/__pycache__/__init__.cpython-310.pyc +0 -0
- llama2_wrapper/__pycache__/model.cpython-310.pyc +0 -0
- llama2_wrapper/model.py +197 -0
- poetry.lock +0 -0
- pyproject.toml +33 -0
- requirements.txt +12 -0
- static/screenshot.png +0 -0
- tests/__init__.py +0 -0

.env

ADDED

@@ -0,0 +1,10 @@

MODEL_PATH = "/path-to/Llama-2-7b-chat-hf"
LOAD_IN_8BIT = True
LOAD_IN_4BIT = False
LLAMA_CPP = False

MAX_MAX_NEW_TOKENS = 2048
DEFAULT_MAX_NEW_TOKENS = 1024
MAX_INPUT_TOKEN_LENGTH = 4000

DEFAULT_SYSTEM_PROMPT = "You are a helpful, respectful and honest assistant. Always answer as helpfully as possible, while being safe. Your answers should not include any harmful, unethical, racist, sexist, toxic, dangerous, or illegal content. Please ensure that your responses are socially unbiased and positive in nature. If a question does not make any sense, or is not factually coherent, explain why instead of answering something not correct. If you don't know the answer to a question, please don't share false information."
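A minimal sketch of how `KEY = value` lines like the ones above can be turned into typed settings with only the standard library. It assumes the simple one-assignment-per-line layout shown here (it is not python-dotenv's full grammar, which `app.py` actually uses via `load_dotenv`), and `parse_env_text`, `to_bool` and `env_int` are hypothetical helper names. `to_bool` accepts the same spellings as `distutils.util.strtobool`, which `app.py` in this commit imports; `distutils` was removed from the standard library in Python 3.12, so a local helper like this avoids that dependency. `env_int` checks the same key it converts, so a missing `MAX_MAX_NEW_TOKENS` falls back to its default instead of failing.

```python
from typing import Mapping

# Spellings accepted by distutils.util.strtobool (case-insensitive).
_TRUE = {"y", "yes", "t", "true", "on", "1"}
_FALSE = {"n", "no", "f", "false", "off", "0"}


def parse_env_text(text: str) -> dict[str, str]:
    """Parse simple `KEY = value` lines; blank lines and `#` comments are skipped."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not an assignment; ignore it
        value = value.strip()
        # Strip one pair of matching surrounding quotes, e.g. "/path-to/model".
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    return values


def to_bool(value: str) -> bool:
    """Like distutils.util.strtobool, but returns a real bool."""
    lowered = value.strip().lower()
    if lowered in _TRUE:
        return True
    if lowered in _FALSE:
        return False
    raise ValueError(f"invalid truth value {value!r}")


def env_int(env: Mapping[str, str], name: str, default: int) -> int:
    """Convert `name` to int, looking up the same key that is converted."""
    raw = env.get(name)
    return int(raw) if raw is not None else default


if __name__ == "__main__":
    sample = '''
MODEL_PATH = "/path-to/Llama-2-7b-chat-hf"
LOAD_IN_8BIT = True
LOAD_IN_4BIT = False

DEFAULT_MAX_NEW_TOKENS = 1024
'''
    env = parse_env_text(sample)
    print(env["MODEL_PATH"])                         # /path-to/Llama-2-7b-chat-hf
    print(to_bool(env["LOAD_IN_8BIT"]))              # True
    print(env_int(env, "MAX_MAX_NEW_TOKENS", 2048))  # 2048 (key absent, default used)
```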

.gitattributes

CHANGED

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+76437bc4e8bea417641aaa076508098a7158e664c1cecfabfa41df497a27f98c filter=lfs diff=lfs merge=lfs -text

.gitignore

ADDED

@@ -0,0 +1,8 @@

models
dist

.DS_Store
.vscode

__pycache__
gradio_cached_examples

76437bc4e8bea417641aaa076508098a7158e664c1cecfabfa41df497a27f98c

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:76437bc4e8bea417641aaa076508098a7158e664c1cecfabfa41df497a27f98c
size 3825517184
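The three lines above are a Git LFS pointer: the repository stores only this small text file, while the 3,825,517,184-byte object it describes lives in LFS storage (note that the file is named after its own SHA-256 `oid`). A minimal standard-library sketch for reading such a pointer and checking a downloaded object against it; `parse_lfs_pointer`, `sha256_of_file` and `matches_pointer` are hypothetical helper names, and the parser assumes the plain `key value` line layout shown here.

```python
import hashlib
from pathlib import Path

POINTER = """version https://git-lfs.github.com/spec/v1
oid sha256:76437bc4e8bea417641aaa076508098a7158e664c1cecfabfa41df497a27f98c
size 3825517184
"""


def parse_lfs_pointer(text: str) -> dict[str, str]:
    """Split each `key value` line of a pointer file into a dict entry."""
    fields: dict[str, str] = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    return fields


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a (possibly multi-GB) file in chunks so it never has to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_pointer(path: Path, fields: dict[str, str]) -> bool:
    """True if the file's size and SHA-256 agree with the pointer's `size` and `oid`."""
    algo, _, expected = fields["oid"].partition(":")
    if algo != "sha256":
        raise ValueError(f"unsupported oid algorithm: {algo}")
    if path.stat().st_size != int(fields["size"]):
        return False  # cheap size check first, before hashing gigabytes
    return sha256_of_file(path) == expected


if __name__ == "__main__":
    fields = parse_lfs_pointer(POINTER)
    size = int(fields["size"])
    print(f"{size / 1e9:.2f} GB, {size / 2**30:.2f} GiB")  # 3.83 GB, 3.56 GiB
```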

CONTRIBUTING.md

ADDED

@@ -0,0 +1,90 @@

# Contributing to [llama2-webui](https://github.com/liltom-eth/llama2-webui)

We love your input! We want to make contributing to this project as easy and transparent as possible, whether it's:

- Reporting a bug
- Proposing new features
- Discussing the current state of the code
- Updating README.md
- Submitting a PR

## Using GitHub's [issues](https://github.com/liltom-eth/llama2-webui/issues)

We use GitHub issues to track public bugs. Report a bug by [opening a new issue](https://github.com/liltom-eth/llama2-webui/issues). It's that easy!

Thanks to **[jlb1504](https://github.com/jlb1504)** for reporting the [first issue](https://github.com/liltom-eth/llama2-webui/issues/1)!

**Great Bug Reports** tend to have:

- A quick summary and/or background
- Steps to reproduce
  - Be specific!
  - Give sample code if you can.
- What you expected would happen
- What actually happens
- Notes (possibly including why you think this might be happening, or stuff you tried that didn't work)

Proposals for new features are also welcome.

## Pull Request

All pull requests are welcome. For example, you could update the `README.md` to help users better understand the usage.

### Clone the repository

1. Create a user account on GitHub if you do not already have one.

2. Fork the project [repository](https://github.com/liltom-eth/llama2-webui): click on the *Fork* button near the top of the page. This creates a copy of the code under your account on GitHub.

3. Clone this copy to your local disk (replace `<YOUR-USERNAME>` with your GitHub username):

```
git clone git@github.com:<YOUR-USERNAME>/llama2-webui.git
cd llama2-webui
```

### Implement your changes

1. Create a branch to hold your changes:

```
git checkout -b my-feature
```

and start making changes. Never work on the main branch!

2. Start your work on this branch.

3. When you’re done editing, do:

```
git add <MODIFIED FILES>
git commit
```

to record your changes in [git](https://git-scm.com/).

### Submit your contribution

1. If everything works fine, push your local branch to the remote server with:

```
git push -u origin my-feature
```

2. Go to the web page of your fork and click "Create pull request" to send your changes for review.

```{todo}
Find more detailed information in [creating a PR]. You might also want to open
the PR as a draft first and mark it as ready for review after feedback
from the continuous integration (CI) system or any required fixes.
```

## License

By contributing, you agree that your contributions will be licensed under the project's MIT License.

## Questions?

Email us at [[email protected]](mailto:[email protected])

LICENSE

ADDED

@@ -0,0 +1,21 @@

MIT License

Copyright (c) 2023 Tom

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.

README.md

CHANGED

@@ -1,12 +1,217 @@

 ---
-title:
-
-colorFrom: yellow
-colorTo: purple
 sdk: gradio
-sdk_version: 3.
-app_file: app.py
-pinned: false
 ---
 
-


 ---
+title: llama2-webui
+app_file: app_4bit_ggml.py
 sdk: gradio
+sdk_version: 3.37.0
 ---
+# llama2-webui
 
+Running Llama 2 with gradio web UI on GPU or CPU from anywhere (Linux/Windows/Mac).
+- Supporting all Llama 2 models (7B, 13B, 70B, GPTQ, GGML) in 8-bit and 4-bit modes.
+- Supporting GPU inference with at least 6 GB VRAM, and CPU inference.
+
+
+
+## Features
+
+- Supporting models: [Llama-2-7b](https://huggingface.co/meta-llama/Llama-2-7b-chat-hf)/[13b](https://huggingface.co/llamaste/Llama-2-13b-chat-hf)/[70b](https://huggingface.co/llamaste/Llama-2-70b-chat-hf), all [Llama-2-GPTQ](https://huggingface.co/TheBloke/Llama-2-7b-Chat-GPTQ), all [Llama-2-GGML](https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGML) ...
+- Supporting model backends
+  - Nvidia GPU: transformers, [bitsandbytes (8-bit inference)](https://github.com/TimDettmers/bitsandbytes), [AutoGPTQ (4-bit inference)](https://github.com/PanQiWei/AutoGPTQ)
+    - GPU inference with at least 6 GB VRAM
+
+  - CPU, Mac/AMD GPU: [llama.cpp](https://github.com/ggerganov/llama.cpp)
+    - CPU inference [Demo](https://twitter.com/liltom_eth/status/1682791729207070720?s=20) on MacBook Air.
+
+- Web UI interface: gradio
+
+## Contents
+
+- [Install](#install)
+- [Download Llama-2 Models](#download-llama-2-models)
+  - [Model List](#model-list)
+  - [Download Script](#download-script)
+- [Usage](#usage)
+  - [Config Examples](#config-examples)
+  - [Start Web UI](#start-web-ui)
+  - [Run on Nvidia GPU](#run-on-nvidia-gpu)
+    - [Run on Low Memory GPU with 8 bit](#run-on-low-memory-gpu-with-8-bit)
+    - [Run on Low Memory GPU with 4 bit](#run-on-low-memory-gpu-with-4-bit)
+  - [Run on CPU](#run-on-cpu)
+    - [Mac GPU and AMD/Nvidia GPU Acceleration](#mac-gpu-and-amdnvidia-gpu-acceleration)
+  - [Benchmark](#benchmark)
+- [Contributing](#contributing)
+- [License](#license)
+
+
+
+## Install
+### Method 1: From [PyPI](https://pypi.org/project/llama2-wrapper/)
+```
+pip install llama2-wrapper
+```
+### Method 2: From Source:
+```
+git clone https://github.com/liltom-eth/llama2-webui.git
+cd llama2-webui
+pip install -r requirements.txt
+```
+### Install Issues:
+`bitsandbytes >= 0.39` may not work on older NVIDIA GPUs. In that case, to use `LOAD_IN_8BIT`, you may have to downgrade like this:
+
+- `pip install bitsandbytes==0.38.1`
+
+`bitsandbytes` also needs a special install on Windows:
+```
+pip uninstall bitsandbytes
+pip install https://github.com/jllllll/bitsandbytes-windows-webui/releases/download/wheels/bitsandbytes-0.41.0-py3-none-win_amd64.whl
+```
+
+## Download Llama-2 Models
+
+Llama 2 is a collection of pre-trained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters.
+
+Llama-2-7b-Chat-GPTQ contains the GPTQ model files for [Meta's Llama 2 7b Chat](https://huggingface.co/meta-llama/Llama-2-7b-chat-hf). GPTQ 4-bit Llama-2 models require less GPU VRAM to run.
+
+### Model List
+
+| Model Name | set MODEL_PATH in .env | Download URL |
+| ------------------------------ | ---------------------------------------- | ------------------------------------------------------------ |
+| meta-llama/Llama-2-7b-chat-hf | /path-to/Llama-2-7b-chat-hf | [Link](https://huggingface.co/llamaste/Llama-2-7b-chat-hf) |
+| meta-llama/Llama-2-13b-chat-hf | /path-to/Llama-2-13b-chat-hf | [Link](https://huggingface.co/llamaste/Llama-2-13b-chat-hf) |
+| meta-llama/Llama-2-70b-chat-hf | /path-to/Llama-2-70b-chat-hf | [Link](https://huggingface.co/llamaste/Llama-2-70b-chat-hf) |
+| meta-llama/Llama-2-7b-hf | /path-to/Llama-2-7b-hf | [Link](https://huggingface.co/meta-llama/Llama-2-7b-hf) |
+| meta-llama/Llama-2-13b-hf | /path-to/Llama-2-13b-hf | [Link](https://huggingface.co/meta-llama/Llama-2-13b-hf) |
+| meta-llama/Llama-2-70b-hf | /path-to/Llama-2-70b-hf | [Link](https://huggingface.co/meta-llama/Llama-2-70b-hf) |
+| TheBloke/Llama-2-7b-Chat-GPTQ | /path-to/Llama-2-7b-Chat-GPTQ | [Link](https://huggingface.co/TheBloke/Llama-2-7b-Chat-GPTQ) |
+| TheBloke/Llama-2-7B-Chat-GGML | /path-to/llama-2-7b-chat.ggmlv3.q4_0.bin | [Link](https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGML) |
+| ... | ... | ... |
+
+Running the 4-bit model `Llama-2-7b-Chat-GPTQ` needs a GPU with 6 GB VRAM.
+
+Running the 4-bit model `llama-2-7b-chat.ggmlv3.q4_0.bin` needs a CPU with 6 GB RAM. There is also a list of other 2-, 3-, 4-, 5-, 6- and 8-bit GGML models that can be used from [TheBloke/Llama-2-7B-Chat-GGML](https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGML).
+
+### Download Script
+
+These models can be downloaded from the links above using commands like:
+
+```bash
+# Make sure you have git-lfs installed (https://git-lfs.com)
+git lfs install
+git clone [email protected]:meta-llama/Llama-2-7b-chat-hf
+```
+
+To download Llama 2 models, you need to request access from [https://ai.meta.com/llama/](https://ai.meta.com/llama/) and also enable access on repos like [meta-llama/Llama-2-7b-chat-hf](https://huggingface.co/meta-llama/Llama-2-7b-chat-hf/tree/main). Requests are typically processed within hours.
+
+For GPTQ models like [TheBloke/Llama-2-7b-Chat-GPTQ](https://huggingface.co/TheBloke/Llama-2-7b-Chat-GPTQ), you can download directly without requesting access.
+
+For GGML models like [TheBloke/Llama-2-7B-Chat-GGML](https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGML), you can download directly without requesting access.
+
+## Usage
+
+### Config Examples
+
+Set up your `MODEL_PATH` and model configs in the `.env` file.
+
+There are some examples in the `./env_examples/` folder.
+
+| Model Setup | Example .env |
+| --------------------------------- | --------------------------- |
+| Llama-2-7b-chat-hf 8-bit on GPU | .env.7b_8bit_example |
+| Llama-2-7b-Chat-GPTQ 4-bit on GPU | .env.7b_gptq_example |
+| Llama-2-7B-Chat-GGML 4-bit on CPU | .env.7b_ggmlv3_q4_0_example |
+| Llama-2-13b-chat-hf on GPU | .env.13b_example |
+| ... | ... |
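A minimal sketch, under stated assumptions, of generating a `.env` for setups like those in the table above. The contents of the `env_examples/` files are not shown in this diff, so the flag combinations in `BACKEND_FLAGS` and the helper name `render_env` are hypothetical, inferred from the README text (8-bit uses `LOAD_IN_8BIT`, GPTQ uses `LOAD_IN_4BIT`, GGML runs through llama.cpp via `LLAMA_CPP`). Because `app.py` in this commit falls back to `"True"` for each of these three flags when the key is missing, the sketch always writes all three explicitly.

```python
# Hypothetical flag combinations per setup; check them against env_examples/.
BACKEND_FLAGS: dict[str, dict[str, bool]] = {
    "gpu-16bit": {"LOAD_IN_8BIT": False, "LOAD_IN_4BIT": False, "LLAMA_CPP": False},
    "gpu-8bit": {"LOAD_IN_8BIT": True, "LOAD_IN_4BIT": False, "LLAMA_CPP": False},
    "gpu-gptq-4bit": {"LOAD_IN_8BIT": False, "LOAD_IN_4BIT": True, "LLAMA_CPP": False},
    "cpu-ggml": {"LOAD_IN_8BIT": False, "LOAD_IN_4BIT": False, "LLAMA_CPP": True},
}


def render_env(model_path: str, setup: str) -> str:
    """Return .env text that sets MODEL_PATH and all three backend flags explicitly."""
    if setup not in BACKEND_FLAGS:
        raise ValueError(f"unknown setup {setup!r}; expected one of {sorted(BACKEND_FLAGS)}")
    lines = [f'MODEL_PATH = "{model_path}"']
    # Write every flag: a missing key would default to "True" in app.py.
    lines += [f"{name} = {value}" for name, value in BACKEND_FLAGS[setup].items()]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_env("/path-to/llama-2-7b-chat.ggmlv3.q4_0.bin", "cpu-ggml"), end="")
```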

+
+### Start Web UI
+
+Run the chatbot with the web UI:
+
+```
+python app.py
+```
+
+### Run on Nvidia GPU
+
+Running requires around 14 GB of GPU VRAM for Llama-2-7b and 28 GB of GPU VRAM for Llama-2-13b.
+
+If you are running on multiple GPUs, the model is loaded across them automatically and the VRAM usage is split. That allows you to run Llama-2-7b (which requires 14 GB of GPU VRAM) on a setup like 2 GPUs with 11 GB VRAM each.
+
+#### Run on Low Memory GPU with 8 bit
+
+If you do not have enough memory, you can set `LOAD_IN_8BIT` to `True` in `.env`. This can reduce memory usage by around half with slightly degraded model quality. It is compatible with the CPU, GPU, and Metal backend.
+
+Llama-2-7b with 8-bit compression can run on a single GPU with 8 GB of VRAM, like an Nvidia RTX 2080 Ti, RTX 4080, T4, or V100 (16 GB).
+
+#### Run on Low Memory GPU with 4 bit
+
+If you want to run a 4-bit Llama-2 model like `Llama-2-7b-Chat-GPTQ`, set `LOAD_IN_4BIT` to `True` in `.env`, as in the example `.env.7b_gptq_example`.
+
+Make sure you have downloaded the 4-bit model `Llama-2-7b-Chat-GPTQ` and set `MODEL_PATH` and the other arguments in the `.env` file.
+
+`Llama-2-7b-Chat-GPTQ` can run on a single GPU with 6 GB of VRAM.
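The memory figures in this section follow roughly from parameter count times bits per weight. A back-of-the-envelope sketch, assuming nominal 7B/13B parameter counts and counting weights only: activations, the KV cache, the CUDA context and quantization metadata add overhead on top, which is consistent with the README quoting about 28 GB for 13b (versus 26 GB of fp16 weights) and with the measured 7.7 GB (8-bit) and 5.8 GB (GPTQ 4-bit) in the Benchmark section exceeding the 7 GB and 3.5 GB estimates. `weight_gb` and `fits_across` are illustrative names, and the even split in `fits_across` simplifies how layers are actually placed across GPUs.

```python
def weight_gb(num_params: float, bits_per_weight: float) -> float:
    """Memory taken by the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


def fits_across(required_gb: float, gpu_gb: list[float]) -> bool:
    """Naive check: assume the load is split evenly across the listed GPUs."""
    if not gpu_gb:
        raise ValueError("need at least one GPU")
    share = required_gb / len(gpu_gb)
    return all(share <= capacity for capacity in gpu_gb)


if __name__ == "__main__":
    for label, params in [("7B", 7e9), ("13B", 13e9)]:
        for bits in (16, 8, 4):
            print(f"{label} at {bits}-bit: ~{weight_gb(params, bits):.1f} GB of weights")
    # fp16 Llama-2-7b (~14 GB of weights) on two 11 GB GPUs, as in the example above.
    print(fits_across(weight_gb(7e9, 16), [11.0, 11.0]))  # True
```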

+
+### Run on CPU
+
+Running Llama-2 models on CPU requires the [llama.cpp](https://github.com/ggerganov/llama.cpp) dependency and the [llama.cpp Python Bindings](https://github.com/abetlen/llama-cpp-python), which are already installed.
+
+
+Download GGML models like `llama-2-7b-chat.ggmlv3.q4_0.bin` by following the [Download Llama-2 Models](#download-llama-2-models) section. The `llama-2-7b-chat.ggmlv3.q4_0.bin` model requires at least 6 GB RAM to run on CPU.
+
+Use a config like `.env.7b_ggmlv3_q4_0_example` from `env_examples` as your `.env`.
+
+Run the web UI with `python app.py`.
+
+
+
+#### Mac GPU and AMD/Nvidia GPU Acceleration
+
+If you would like to use a Mac GPU or an AMD/Nvidia GPU for acceleration, check these:
+
+- [Installation with OpenBLAS / cuBLAS / CLBlast / Metal](https://github.com/abetlen/llama-cpp-python#installation-with-openblas--cublas--clblast--metal)
+
+- [MacOS Install with Metal GPU](https://github.com/abetlen/llama-cpp-python/blob/main/docs/install/macos.md)
+
+### Benchmark
+
+Run the benchmark script to measure performance on your device:
+
+```bash
+python benchmark.py
+```
+
+`benchmark.py` loads the same `.env` as `app.py`.
+
+Some benchmark results:
+
+| Model | Precision | Device | Memory | Speed (tokens/sec) | Load time (s) |
+| -------------------- | --------- | ------------------ | ----------- | -------------------- | ------------- |
+| Llama-2-7b-chat-hf | 8-bit | NVIDIA RTX 2080 Ti | 7.7 GB VRAM | 3.76 | 783.87 |
+| Llama-2-7b-Chat-GPTQ | 4-bit | NVIDIA RTX 2080 Ti | 5.8 GB VRAM | 12.08 | 192.91 |
+| Llama-2-7B-Chat-GGML | 4-bit | Intel i7-8700 | 5.1 GB RAM | 4.16 | 105.75 |
+
+Check or contribute the performance of your device in the full [performance doc](./docs/performance.md).
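The contents of `benchmark.py` are not shown in this diff, so the following is only a hypothetical sketch of how a tokens-per-second figure like those in the table can be computed: time a streaming generator and divide the number of items it yields by the elapsed wall-clock time. It assumes each yielded item corresponds to one generated token; if a backend yields accumulated text rather than one token per step, count tokens with the model's tokenizer instead. `measure_throughput` and `fake_token_stream` are illustrative names, and the clock is injectable so the arithmetic can be checked deterministically.

```python
import time
from typing import Callable, Iterable, Iterator


def measure_throughput(
    stream: Iterable[object],
    clock: Callable[[], float] = time.perf_counter,
) -> tuple[int, float]:
    """Consume `stream` and return (items_seen, items_per_second)."""
    start = clock()
    count = 0
    for _ in stream:  # assumes one yielded item per generated token
        count += 1
    elapsed = clock() - start
    rate = count / elapsed if elapsed > 0 else float("inf")
    return count, rate


def fake_token_stream(n: int, delay_s: float = 0.01) -> Iterator[str]:
    """Stand-in for a model's streaming output; sleeps to mimic decode time."""
    for i in range(n):
        time.sleep(delay_s)
        yield f"tok{i}"


if __name__ == "__main__":
    count, rate = measure_throughput(fake_token_stream(20))
    print(f"{count} tokens at {rate:.1f} tokens/sec")
```

Load time could be measured the same way, by reading the clock before and after model initialization.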

+
+## Contributing
+
+Kindly read our [Contributing Guide](CONTRIBUTING.md) to learn about our development process.
+
+### All Contributors
+
+<a href="https://github.com/liltom-eth/llama2-webui/graphs/contributors">
+  <img src="https://contrib.rocks/image?repo=liltom-eth/llama2-webui" />
+</a>
+
+## License
+
+MIT - see [MIT License](LICENSE)
+
+This project lets users adapt it freely, including for proprietary purposes, as long as the MIT copyright and permission notice is retained.
+
+## Credits
+
+- https://huggingface.co/meta-llama/Llama-2-7b-chat-hf
+- https://huggingface.co/spaces/huggingface-projects/llama-2-7b-chat
+- https://huggingface.co/TheBloke/Llama-2-7b-Chat-GPTQ
+- [https://github.com/ggerganov/llama.cpp](https://github.com/ggerganov/llama.cpp)
+- [https://github.com/TimDettmers/bitsandbytes](https://github.com/TimDettmers/bitsandbytes)
+- [https://github.com/PanQiWei/AutoGPTQ](https://github.com/PanQiWei/AutoGPTQ)

app.py

ADDED

@@ -0,0 +1,322 @@

import os
from typing import Iterator

import gradio as gr

from dotenv import load_dotenv
from distutils.util import strtobool

from llama2_wrapper import LLAMA2_WRAPPER

load_dotenv()

DEFAULT_SYSTEM_PROMPT = (
    os.getenv("DEFAULT_SYSTEM_PROMPT")
    if os.getenv("DEFAULT_SYSTEM_PROMPT") is not None
    else ""
)
MAX_MAX_NEW_TOKENS = (
    int(os.getenv("MAX_MAX_NEW_TOKENS"))
    if os.getenv("MAX_MAX_NEW_TOKENS") is not None
    else 2048
)
DEFAULT_MAX_NEW_TOKENS = (
    int(os.getenv("DEFAULT_MAX_NEW_TOKENS"))
    if os.getenv("DEFAULT_MAX_NEW_TOKENS") is not None
    else 1024
)
MAX_INPUT_TOKEN_LENGTH = (
    int(os.getenv("MAX_INPUT_TOKEN_LENGTH"))
    if os.getenv("MAX_INPUT_TOKEN_LENGTH") is not None
    else 4000
)

MODEL_PATH = os.getenv("MODEL_PATH")
assert MODEL_PATH is not None, f"MODEL_PATH is required, got: {MODEL_PATH}"

LOAD_IN_8BIT = bool(strtobool(os.getenv("LOAD_IN_8BIT", "True")))

LOAD_IN_4BIT = bool(strtobool(os.getenv("LOAD_IN_4BIT", "True")))

LLAMA_CPP = bool(strtobool(os.getenv("LLAMA_CPP", "True")))

if LLAMA_CPP:
    print("Running on CPU with llama.cpp.")
else:
    import torch

    if torch.cuda.is_available():
        print("Running on GPU with torch transformers.")
    else:
        print("CUDA not found.")

config = {
    "model_name": MODEL_PATH,
    "load_in_8bit": LOAD_IN_8BIT,
    "load_in_4bit": LOAD_IN_4BIT,
    "llama_cpp": LLAMA_CPP,
    "MAX_INPUT_TOKEN_LENGTH": MAX_INPUT_TOKEN_LENGTH,
}
llama2_wrapper = LLAMA2_WRAPPER(config)
llama2_wrapper.init_tokenizer()
llama2_wrapper.init_model()

| 64 |

+

DESCRIPTION = """

|

| 65 |

+

# llama2-webui

|

| 66 |

+

|

| 67 |

+

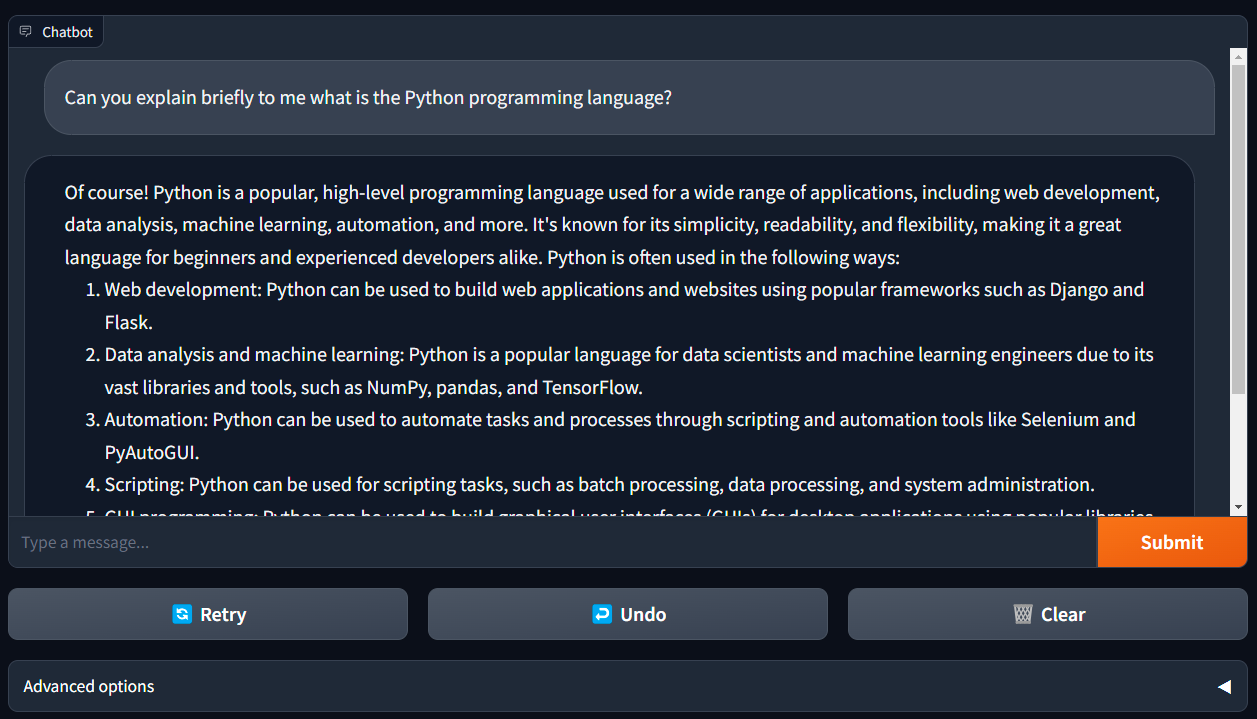

This is a chatbot based on Llama-2.

|

| 68 |

+

- Supporting models: [Llama-2-7b](https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGML)/[13b](https://huggingface.co/llamaste/Llama-2-13b-chat-hf)/[70b](https://huggingface.co/llamaste/Llama-2-70b-chat-hf), all [Llama-2-GPTQ](https://huggingface.co/TheBloke/Llama-2-7b-Chat-GPTQ), all [Llama-2-GGML](https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGML) ...

|

| 69 |

+

- Supporting model backends

|

| 70 |

+

- Nvidia GPU(at least 6 GB VRAM): tranformers, [bitsandbytes(8-bit inference)](https://github.com/TimDettmers/bitsandbytes), [AutoGPTQ(4-bit inference)](https://github.com/PanQiWei/AutoGPTQ)

|

| 71 |

+

- CPU(at least 6 GB RAM), Mac/AMD GPU: [llama.cpp](https://github.com/ggerganov/llama.cpp)

|

| 72 |

+

"""

|

| 73 |

+

|

| 74 |

+

|

| 75 |

+

def clear_and_save_textbox(message: str) -> tuple[str, str]:

|

| 76 |

+

return "", message

|

| 77 |

+

|

| 78 |

+

|

| 79 |

+

def display_input(

|

| 80 |

+

message: str, history: list[tuple[str, str]]

|

| 81 |

+

) -> list[tuple[str, str]]:

|

| 82 |

+

history.append((message, ""))

|

| 83 |

+

return history

|

| 84 |

+

|

| 85 |

+

|

| 86 |

+

def delete_prev_fn(history: list[tuple[str, str]]) -> tuple[list[tuple[str, str]], str]:

|

| 87 |

+

try:

|

| 88 |

+

message, _ = history.pop()

|

| 89 |

+

except IndexError:

|

| 90 |

+

message = ""

|

| 91 |

+

return history, message or ""


def generate(
    message: str,
    history_with_input: list[tuple[str, str]],
    system_prompt: str,
    max_new_tokens: int,
    temperature: float,
    top_p: float,
    top_k: int,
) -> Iterator[list[tuple[str, str]]]:
    if max_new_tokens > MAX_MAX_NEW_TOKENS:
        raise ValueError(
            f"max_new_tokens must be at most {MAX_MAX_NEW_TOKENS}, got {max_new_tokens}"
        )

    history = history_with_input[:-1]
    generator = llama2_wrapper.run(
        message, history, system_prompt, max_new_tokens, temperature, top_p, top_k
    )
    try:
        first_response = next(generator)
        yield history + [(message, first_response)]
    except StopIteration:
        yield history + [(message, "")]
    for response in generator:
        yield history + [(message, response)]
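`generate` is itself a generator. It primes the backend stream with `next()`, so the chatbot shows either the first chunk or an empty reply if the backend yields nothing. After that it re-yields the whole history on every update. In the sketch below, `fake_run` and `empty_run` are hypothetical stand-ins for `llama2_wrapper.run`. The sketch assumes, as `generate` does, that each yielded value is the cumulative reply so far and not just the newest token.

```python
from typing import Callable, Iterator

Message = tuple[str, str]
Backend = Callable[[str, list[Message]], Iterator[str]]


def fake_run(message: str, history: list[Message]) -> Iterator[str]:
    # Hypothetical backend: yields the growing reply one word at a time.
    reply = ""
    for word in ["Hello", "there"]:
        reply = f"{reply} {word}".strip()
        yield reply


def empty_run(message: str, history: list[Message]) -> Iterator[str]:
    # Hypothetical backend that finishes without producing any text.
    yield from ()


def stream_chat(
    message: str, history_with_input: list[Message], run: Backend = fake_run
) -> Iterator[list[Message]]:
    # Drop the (message, "") placeholder that display_input appended.
    history = history_with_input[:-1]
    generator = run(message, history)
    try:
        first_response = next(generator)
        yield history + [(message, first_response)]
    except StopIteration:
        # Backend produced nothing: still emit one update with an empty reply.
        yield history + [(message, "")]
    # Safe even after StopIteration: an exhausted generator simply ends the loop.
    for response in generator:
        yield history + [(message, response)]


for update in stream_chat("Hi", [("Hi", "")]):
    print(update)
# [('Hi', 'Hello')]
# [('Hi', 'Hello there')]
```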


def process_example(message: str) -> tuple[str, list[tuple[str, str]]]:
    # Run the streaming generator to completion and keep only the final chat state
    # (generate yields at least once unless it raises).
    generator = generate(message, [], DEFAULT_SYSTEM_PROMPT, 1024, 1, 0.95, 50)
    for x in generator:
        pass
    return "", x
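`process_example` calls `generate` with temperature 1, top-p 0.95 and top-k 50, which are also the slider defaults. The sketch below illustrates what those three knobs do. It applies temperature scaling, then top-k, then top-p (nucleus) filtering to a toy logit table. This is a generic textbook version written for illustration only. The transformers and llama.cpp backends each have their own samplers and may apply these steps in a different order. Temperature must be positive, which the slider's 0.1 minimum guarantees.

```python
import math


def filter_probs(
    logits: dict[str, float], temperature: float, top_k: int, top_p: float
) -> dict[str, float]:
    # 1) Temperature: divide the logits, then softmax (subtracting the max for stability).
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())
    exps = {tok: math.exp(v - peak) for tok, v in scaled.items()}
    total = sum(exps.values())
    probs = sorted(
        ((tok, e / total) for tok, e in exps.items()), key=lambda kv: kv[1], reverse=True
    )

    # 2) Top-k: keep the k most likely tokens and renormalise.
    probs = probs[:top_k]
    k_total = sum(p for _, p in probs)
    probs = [(tok, p / k_total) for tok, p in probs]

    # 3) Top-p: keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for tok, p in probs:
        kept.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break

    # Renormalise the survivors; a sampler would then draw one token from them.
    norm = sum(p for _, p in kept)
    return {tok: p / norm for tok, p in kept}


toy_logits = {"the": 2.0, "a": 1.0, "cat": 0.5, "zebra": -3.0}
print(list(filter_probs(toy_logits, temperature=1.0, top_k=50, top_p=0.95)))  # ['the', 'a', 'cat']
print(list(filter_probs(toy_logits, temperature=1.0, top_k=3, top_p=0.8)))    # ['the', 'a']
```

A lower temperature sharpens the distribution before the cutoffs are applied. As a result, the same `top_p` keeps fewer tokens.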


def check_input_token_length(
    message: str, chat_history: list[tuple[str, str]], system_prompt: str
) -> None:
    input_token_length = llama2_wrapper.get_input_token_length(
        message, chat_history, system_prompt
    )
    if input_token_length > MAX_INPUT_TOKEN_LENGTH:
        raise gr.Error(
            f"The accumulated input is too long ({input_token_length} > {MAX_INPUT_TOKEN_LENGTH}). Clear your chat history and try again."
        )
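`check_input_token_length` delegates to `llama2_wrapper.get_input_token_length`, whose implementation is not shown here. The sketch below shows roughly what such a check looks like. It assumes the wrapper formats the conversation with the standard Llama-2 chat template, as the upstream Hugging Face Llama-2 demo does, and counts tokens with the model tokenizer. `count_tokens` is a hypothetical whitespace splitter standing in for the real tokenizer, so its counts are much lower than real token counts. A plain `ValueError` replaces `gr.Error` so the sketch runs without Gradio.

```python
Message = tuple[str, str]


def get_prompt(message: str, chat_history: list[Message], system_prompt: str) -> str:
    # Llama-2 chat template (assumed): the system prompt sits inside <<SYS>> tags in the
    # first [INST] block, each past turn is closed with </s>, and the new message is left
    # open after [/INST] for the model to answer.
    texts = [f"<s>[INST] <<SYS>>\n{system_prompt}\n<</SYS>>\n\n"]
    for user_input, response in chat_history:
        texts.append(f"{user_input.strip()} [/INST] {response.strip()} </s><s>[INST] ")
    texts.append(f"{message.strip()} [/INST]")
    return "".join(texts)


def count_tokens(text: str) -> int:
    # Hypothetical stand-in for the real tokenizer: counts whitespace-separated words.
    return len(text.split())


def check_input_length(
    message: str, chat_history: list[Message], system_prompt: str, max_tokens: int
) -> int:
    n_tokens = count_tokens(get_prompt(message, chat_history, system_prompt))
    if n_tokens > max_tokens:
        raise ValueError(
            f"The accumulated input is too long ({n_tokens} > {max_tokens}). "
            "Clear your chat history and try again."
        )
    return n_tokens


print(get_prompt("Hi", [("Hello", "Hi there")], "Be brief."))
print(check_input_length("Hi", [], "Be brief.", max_tokens=4000))  # 7
```

The whole conversation is re-encoded on every turn, so the count grows with the chat history. That growth is why the error message asks the user to clear the history.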


with gr.Blocks(css="style.css") as demo:
    gr.Markdown(DESCRIPTION)

    with gr.Group():
        chatbot = gr.Chatbot(label="Chatbot")
        with gr.Row():
            textbox = gr.Textbox(
                container=False,
                show_label=False,
                placeholder="Type a message...",
                scale=10,
            )
            submit_button = gr.Button("Submit", variant="primary", scale=1, min_width=0)
    with gr.Row():
        retry_button = gr.Button("🔄 Retry", variant="secondary")
        undo_button = gr.Button("↩️ Undo", variant="secondary")
        clear_button = gr.Button("🗑️ Clear", variant="secondary")

    saved_input = gr.State()

    with gr.Accordion(label="Advanced options", open=False):
        system_prompt = gr.Textbox(
            label="System prompt", value=DEFAULT_SYSTEM_PROMPT, lines=6
        )
        max_new_tokens = gr.Slider(
            label="Max new tokens",
            minimum=1,
            maximum=MAX_MAX_NEW_TOKENS,
            step=1,
            value=DEFAULT_MAX_NEW_TOKENS,
        )
        temperature = gr.Slider(
            label="Temperature",
            minimum=0.1,
            maximum=4.0,
            step=0.1,
            value=1.0,
        )
        top_p = gr.Slider(
            label="Top-p (nucleus sampling)",
            minimum=0.05,
            maximum=1.0,
            step=0.05,
            value=0.95,
        )
        top_k = gr.Slider(
            label="Top-k",
            minimum=1,
            maximum=1000,
            step=1,
            value=50,
        )

    gr.Examples(
        examples=[
            "Hello there! How are you doing?",
            "Can you explain briefly to me what is the Python programming language?",
            "Explain the plot of Cinderella in a sentence.",
            "How many hours does it take a man to eat a Helicopter?",
            "Write a 100-word article on 'Benefits of Open-Source in AI research'",
        ],
        inputs=textbox,
        outputs=[textbox, chatbot],
        fn=process_example,
        cache_examples=True,
    )

    textbox.submit(
        fn=clear_and_save_textbox,
        inputs=textbox,
        outputs=[textbox, saved_input],
        api_name=False,
        queue=False,
    ).then(
        fn=display_input,
        inputs=[saved_input, chatbot],
        outputs=chatbot,
        api_name=False,
        queue=False,
    ).then(
        fn=check_input_token_length,
        inputs=[saved_input, chatbot, system_prompt],
        api_name=False,
        queue=False,
    ).success(
        fn=generate,
        inputs=[
            saved_input,
            chatbot,
            system_prompt,
            max_new_tokens,
            temperature,
            top_p,
            top_k,
        ],
        outputs=chatbot,
        api_name=False,
    )

    button_event_preprocess = (
        submit_button.click(
            fn=clear_and_save_textbox,
            inputs=textbox,
            outputs=[textbox, saved_input],
            api_name=False,
            queue=False,
        )
        .then(
            fn=display_input,
            inputs=[saved_input, chatbot],
            outputs=chatbot,
            api_name=False,
            queue=False,
        )
        .then(
            fn=check_input_token_length,
            inputs=[saved_input, chatbot, system_prompt],
            api_name=False,
            queue=False,
        )
        .success(
            fn=generate,
            inputs=[
                saved_input,
                chatbot,
                system_prompt,
                max_new_tokens,
                temperature,
                top_p,
                top_k,
            ],
            outputs=chatbot,
            api_name=False,
        )
    )

    retry_button.click(
        fn=delete_prev_fn,
        inputs=chatbot,
        outputs=[chatbot, saved_input],
        api_name=False,
        queue=False,
    ).then(
        fn=display_input,
        inputs=[saved_input, chatbot],
        outputs=chatbot,
        api_name=False,
        queue=False,
    ).then(
        fn=generate,
        inputs=[
            saved_input,
            chatbot,
            system_prompt,
            max_new_tokens,
            temperature,
            top_p,
            top_k,
        ],
        outputs=chatbot,
        api_name=False,
    )

    undo_button.click(
        fn=delete_prev_fn,
        inputs=chatbot,
        outputs=[chatbot, saved_input],
        api_name=False,
        queue=False,
    ).then(
        fn=lambda x: x,
        inputs=[saved_input],
        outputs=textbox,
        api_name=False,
        queue=False,
    )

    clear_button.click(
        fn=lambda: ([], ""),
        outputs=[chatbot, saved_input],
        queue=False,
        api_name=False,
    )


demo.queue(max_size=20).launch()
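Every user action above is wired as a chain of steps. `.then()` runs the next step after the previous one finishes, even if that step raised. `.success()` runs the next step only if the previous one finished without raising. This is how a `gr.Error` from `check_input_token_length` keeps `generate` from running. The pure-Python sketch below mimics that control flow to make the ordering explicit. `EventChain` is a hypothetical class and not Gradio's implementation. It ignores queuing, streaming and UI updates.

```python
from typing import Callable

Step = Callable[[], None]


class EventChain:
    """Hypothetical model of the .then()/.success() ordering; not Gradio's implementation."""

    def __init__(self, first: Step) -> None:
        self.steps: list[tuple[str, Step]] = [("first", first)]

    def then(self, fn: Step) -> "EventChain":
        # Runs once the previous step has finished, whether or not it raised.
        self.steps.append(("then", fn))
        return self

    def success(self, fn: Step) -> "EventChain":
        # Runs only if the previous step finished without raising.
        self.steps.append(("success", fn))
        return self

    def fire(self) -> list[str]:
        ran: list[str] = []
        previous_ok = True
        for kind, fn in self.steps:
            if kind == "success" and not previous_ok:
                break  # the skipped step never completes, so nothing after it is triggered
            try:
                fn()
            except Exception:
                previous_ok = False
            else:
                ran.append(fn.__name__)
                previous_ok = True
        return ran


def make_submit_chain(input_ok: bool) -> EventChain:
    # No-op stand-ins named after the callbacks in the textbox.submit chain above.
    def clear_and_save_textbox() -> None:
        pass

    def display_input() -> None:
        pass

    def check_input_token_length() -> None:
        if not input_ok:
            raise ValueError("input too long")  # plays the role of gr.Error

    def generate() -> None:
        pass

    return (
        EventChain(clear_and_save_textbox)
        .then(display_input)
        .then(check_input_token_length)
        .success(generate)
    )


print(make_submit_chain(True).fire())
print(make_submit_chain(False).fire())  # ['clear_and_save_textbox', 'display_input']
```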


app_4bit_ggml.py

ADDED


@@ -0,0 +1,320 @@

import argparse
import os
from typing import Iterator

import gradio as gr

# from dotenv import load_dotenv
from distutils.util import strtobool

from llama2_wrapper import LLAMA2_WRAPPER
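This file imports `strtobool` from `distutils`. That module was deprecated by PEP 632 and removed in Python 3.12, so the import fails on newer interpreters. The visible part of this file does not use `strtobool`. If a boolean parser is still needed, for example for `.env` values such as `LOAD_IN_8BIT = True`, a small local replacement along these lines could work. `str_to_bool` is a hypothetical name. It returns a `bool`, whereas `strtobool` returned the integers `0`/`1`, and it also strips surrounding whitespace.

```python
def str_to_bool(value: str) -> bool:
    """Parse a truthy/falsy string using the spellings distutils.util.strtobool accepted."""
    normalized = value.strip().lower()  # unlike strtobool, also tolerate surrounding spaces
    if normalized in {"y", "yes", "t", "true", "on", "1"}:
        return True
    if normalized in {"n", "no", "f", "false", "off", "0"}:
        return False
    raise ValueError(f"invalid truth value {value!r}")


print(str_to_bool("True"), str_to_bool("off"))  # True False
```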



parser = argparse.ArgumentParser()

DEFAULT_SYSTEM_PROMPT = "You are a helpful, respectful and honest assistant. Always answer as helpfully as possible, while being safe. Your answers should not include any harmful, unethical, racist, sexist, toxic, dangerous, or illegal content. Please ensure that your responses are socially unbiased and positive in nature. If a question does not make any sense, or is not factually coherent, explain why instead of answering something not correct. If you don't know the answer to a question, please don't share false information."

parser.add_argument('--model_path', type=str, required=True, default='',
                    help='Path to the GGML model used by the llama.cpp backend.')

parser.add_argument('--system_prompt', type=str, required=False, default=DEFAULT_SYSTEM_PROMPT,
                    help='Default system prompt for the chat (default: the standard Llama-2 chat system prompt).')

parser.add_argument('--max_max_new_tokens', type=int, default=2048, metavar='NUMBER',
                    help='Maximum number of new tokens a single reply may request (default: 2048).')

FLAGS = parser.parse_args()


DEFAULT_SYSTEM_PROMPT = FLAGS.system_prompt
MAX_MAX_NEW_TOKENS = FLAGS.max_max_new_tokens
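The flags above can be tested without launching the UI by passing an explicit argument list to `parse_args`. The sketch rebuilds an equivalent parser to show which values are required and which fall back to defaults. It uses a shortened placeholder system prompt and a hypothetical model path. `build_parser` is an illustrative helper name, not part of the original script.

```python
import argparse

DEFAULT_SYSTEM_PROMPT = "You are a helpful, respectful and honest assistant."  # shortened placeholder


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical helper mirroring the three flags defined above.
    parser = argparse.ArgumentParser()
    parser.add_argument("--model_path", type=str, required=True,
                        help="Path to the GGML model used by the llama.cpp backend.")
    parser.add_argument("--system_prompt", type=str, default=DEFAULT_SYSTEM_PROMPT,
                        help="Default system prompt for the chat.")
    parser.add_argument("--max_max_new_tokens", type=int, default=2048, metavar="NUMBER",
                        help="Maximum number of new tokens a single reply may request.")
    return parser


# Hypothetical model path, for illustration only.
flags = build_parser().parse_args(["--model_path", "/path-to/model.bin"])
print(flags.max_max_new_tokens)                      # 2048
print(flags.system_prompt == DEFAULT_SYSTEM_PROMPT)  # True
```

Omitting `--model_path` makes argparse print a usage error and exit with a non-zero status.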


DEFAULT_MAX_NEW_TOKENS = 1024
MAX_INPUT_TOKEN_LENGTH = 4000

MODEL_PATH = FLAGS.model_path
# --model_path is required, so MODEL_PATH is never None; reject an empty string instead.
assert MODEL_PATH, f"MODEL_PATH is required, got: {MODEL_PATH!r}"

LOAD_IN_8BIT = False

LOAD_IN_4BIT = True

LLAMA_CPP = True

if LLAMA_CPP:
    print("Running on CPU with llama.cpp.")
else:
    import torch

    if torch.cuda.is_available():
        print("Running on GPU with torch transformers.")
    else:
        print("CUDA not found.")

config = {
    "model_name": MODEL_PATH,
    "load_in_8bit": LOAD_IN_8BIT,
    "load_in_4bit": LOAD_IN_4BIT,
    "llama_cpp": LLAMA_CPP,
    "MAX_INPUT_TOKEN_LENGTH": MAX_INPUT_TOKEN_LENGTH,
}
llama2_wrapper = LLAMA2_WRAPPER(config)
llama2_wrapper.init_tokenizer()
llama2_wrapper.init_model()
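The branch above imports `torch` only when llama.cpp is disabled, so a llama.cpp-only install does not need PyTorch. The sketch below isolates that decision as pure functions so it can be tested. `describe_backend` and `cuda_available` are hypothetical helpers. The CUDA check is passed in as a flag, so the selection logic runs whether or not torch is installed.

```python
def describe_backend(llama_cpp: bool, cuda_available: bool) -> str:
    # Mirrors the print statements above: llama.cpp wins when enabled; otherwise
    # the transformers path is used and CUDA availability is reported.
    if llama_cpp:
        return "Running on CPU with llama.cpp."
    if cuda_available:
        return "Running on GPU with torch transformers."
    return "CUDA not found."


def cuda_available() -> bool:
    # Import torch lazily, as the script does, so llama.cpp-only installs work.
    try:
        import torch
    except ImportError:
        return False
    return bool(torch.cuda.is_available())


print(describe_backend(llama_cpp=True, cuda_available=cuda_available()))  # Running on CPU with llama.cpp.
```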


DESCRIPTION = """
# Llama2-Chinese-7b-webui

This is an inference UI for [Llama2-Chinese-2-7b](https://github.com/FlagAlpha/Llama2-Chinese).
- Supported models: [Llama-2-GGML](https://huggingface.co/FlagAlpha/Llama2-Chinese-7b-Chat-GGML)
- Supported backends
  - CPU (at least 6 GB RAM), Mac/AMD
"""