language:

- en

- ko

pipeline_tag: text-generation

tags:

- pytorch

- llama

- causal-lm

- 42dot_llm

license: cc-by-nc-4.0

42dot_LLM-PLM-1.3B

42dot LLM-PLM is a pre-trained language model (PLM) developed by 42dot and is a part of 42dot LLM (large language model). 42dot LLM-PLM is pre-trained using Korean and English text corpus and can be used as a foundation language model for several Korean and English natural language tasks. This repository contains a 1.3B-parameter version of the model.

Model Description

Hyperparameters

42dot LLM-PLM is built upon a Transformer decoder architecture similar to the LLaMA 2 and its hyperparameters are listed below.

| Params | Layers | Attention heads | Hidden size | FFN size |

|---|---|---|---|---|

| 1.3B | 24 | 32 | 2,048 | 5,632 |

Pre-training

Pre-training took about 49K GPU hours (NVIDIA A100). Related settings are listed below.

| Params | Global batch size* | Initial learning rate | Train iter.* | Max length* | Weight decay |

|---|---|---|---|---|---|

| 1.3B | 4.0M | 4E-4 | 1.4T | 4,096 | 0.1 |

(* unit: tokens)

Pre-training datasets

We used a set of publicly available text corpus, including:

- Korean: including Jikji project, mC4-ko, LBox Open, KLUE, Wikipedia (Korean) and so on.

- English: including The Pile, RedPajama, C4 and so on.

Tokenizer

The tokenizer is based on the Byte-level BPE algorithm. We trained its vocabulary from scratch using a subset of the pre-training corpus. For constructing a subset, 10M and 10M documents are sampled from Korean and English corpus respectively. The resulting vocabulary sizes about 50K.

Zero-shot evaluations

We evaluate 42dot LLM-PLM on a variety of academic benchmarks both in Korean and English. All the results are obtained using lm-eval-harness and models released on the Hugging Face Hub.

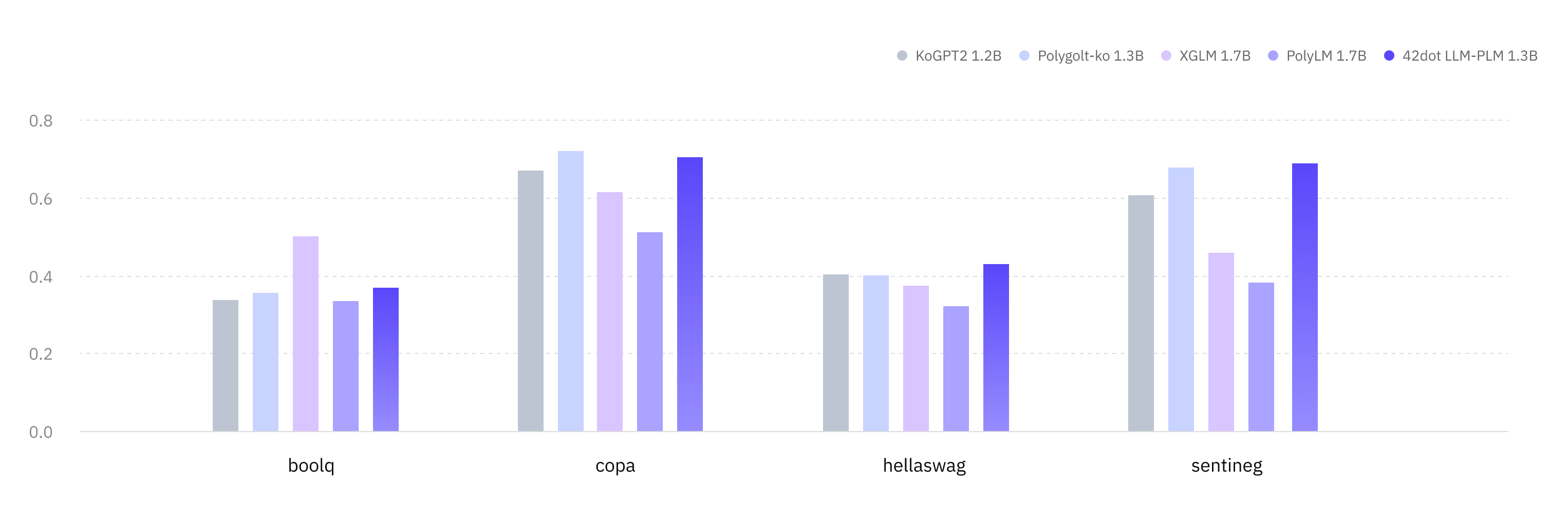

Korean (KOBEST)

| Tasks / Macro-F1 | KoGPT2 1.2B |

Polyglot-Ko 1.3B |

XGLM 1.7B |

PolyLM 1.7B |

42dot LLM-PLM 1.3B |

|---|---|---|---|---|---|

| boolq | 0.337 | 0.355 | 0.502 | 0.334 | 0.369 |

| copa | 0.67 | 0.721 | 0.616 | 0.513 | 0.704 |

| hellaswag | 0.404 | 0.401 | 0.374 | 0.321 | 0.431 |

| sentineg | 0.606 | 0.679 | 0.46 | 0.382 | 0.69 |

| average | 0.504 | 0.539 | 0.488 | 0.388 | 0.549 |

English

| Tasks / Metric | MPT 1B |

OPT 1.3B |

XGLM 1.7B |

PolyLM 1.7B |

42dot LLM-PLM 1.3B |

|---|---|---|---|---|---|

| anli_r1/acc | 0.309 | 0.341 | 0.334 | 0.336 | 0.325 |

| anli_r2/acc | 0.334 | 0.339 | 0.331 | 0.314 | 0.34 |

| anli_r3/acc | 0.33 | 0.336 | 0.333 | 0.339 | 0.333 |

| arc_challenge/acc | 0.268 | 0.234 | 0.21 | 0.198 | 0.288 |

| arc_challenge/acc_norm | 0.291 | 0.295 | 0.243 | 0.256 | 0.317 |

| arc_easy/acc | 0.608 | 0.571 | 0.537 | 0.461 | 0.628 |

| arc_easy/acc_norm | 0.555 | 0.51 | 0.479 | 0.404 | 0.564 |

| boolq/acc | 0.517 | 0.578 | 0.585 | 0.617 | 0.624 |

| hellaswag/acc | 0.415 | 0.415 | 0.362 | 0.322 | 0.422 |

| hellaswag/acc_norm | 0.532 | 0.537 | 0.458 | 0.372 | 0.544 |

| openbookqa/acc | 0.238 | 0.234 | 0.17 | 0.166 | 0.222 |

| openbookqa/acc_norm | 0.334 | 0.334 | 0.298 | 0.334 | 0.34 |

| piqa/acc | 0.714 | 0.718 | 0.697 | 0.667 | 0.725 |

| piqa/acc_norm | 0.72 | 0.724 | 0.703 | 0.649 | 0.727 |

| record/f1 | 0.84 | 0.857 | 0.775 | 0.681 | 0.848 |

| record/em | 0.832 | 0.849 | 0.769 | 0.674 | 0.839 |

| rte/acc | 0.541 | 0.523 | 0.559 | 0.513 | 0.542 |

| truthfulqa_mc/mc1 | 0.224 | 0.237 | 0.215 | 0.251 | 0.236 |

| truthfulqa_mc/mc2 | 0.387 | 0.386 | 0.373 | 0.428 | 0.387 |

| wic/acc | 0.498 | 0.509 | 0.503 | 0.5 | 0.502 |

| winogrande/acc | 0.574 | 0.595 | 0.55 | 0.519 | 0.583 |

| average | 0.479 | 0.482 | 0.452 | 0.429 | 0.492 |

Limitations and Ethical Considerations

42dot LLM-PLM shares a number of well-known limitations of other large language models (LLMs). For example, it may generate false and misinformative content since 42dot LLM-PLM is also subject to hallucination. In addition, 42dot LLM-PLM may generate toxic, harmful, and biased content due to the use of web-available training data. We strongly suggest that 42dot LLM-PLM users should be aware of those limitations and take necessary steps to mitigate those issues.

Disclaimer

The contents generated by 42dot LLM series ("42dot LLM") do not necessarily reflect the views or opinions of 42dot Inc. ("42dot"). 42dot disclaims any and all liability to any part for any direct, indirect, implied, punitive, special, incidental, or other consequential damages arising from any use of the 42dot LLM and its generated contents.

License

The 42dot LLM-PLM is licensed under the Creative Commons Attribution-NonCommercial 4.0 (CC BY-NC 4.0).

Citation

@misc{42dot2023llm,

title={42dot LLM: A Series of Large Language Model by 42dot},

author={42dot Inc.},

year={2023},

url = {https://github.com/42dot/42dot_LLM},

version = {1.0.0},

}