Llama-3.2-3B-SmartBoi

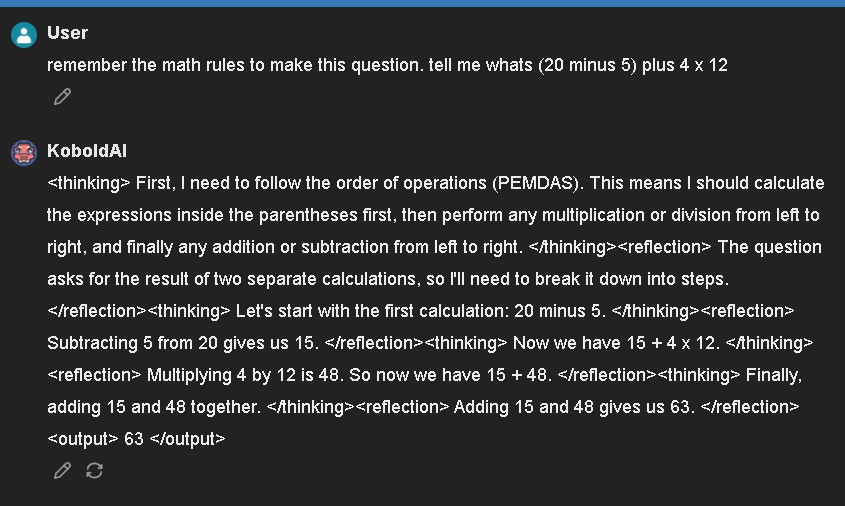

This is a fine-tune that trains the model to use 'thinking' tags (plus a few others).

It makes the model noticeably smarter, at least in maths, although for some reason it only uses the tags in English.

This model has not been made uncensored.

Prompt Template

This model uses the Llama-3 chat format:

```
<|begin_of_text|><|start_header_id|>system<|end_header_id|>

{system_prompt}<|eot_id|><|start_header_id|>user<|end_header_id|>

{prompt}<|eot_id|><|start_header_id|>assistant<|end_header_id|>

```
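If your inference stack cannot apply the chat template automatically, the template above can be filled in by hand. Below is a minimal sketch; the function name `build_llama3_prompt` is hypothetical, and the blank line after the open assistant header follows the standard Llama 3 convention, so the model's reply starts right after it.

```python
def build_llama3_prompt(system_prompt: str, prompt: str) -> str:
    """Fill the Llama-3 chat template with one system and one user message."""

    def header(role: str) -> str:
        # Each turn opens with a role header followed by a blank line.
        return f"<|start_header_id|>{role}<|end_header_id|>\n\n"

    return (
        "<|begin_of_text|>"
        + header("system") + system_prompt + "<|eot_id|>"
        + header("user") + prompt + "<|eot_id|>"
        # Leave the assistant turn open so generation continues from here.
        + header("assistant")
    )


print(build_llama3_prompt("You are a helpful assistant.", "What is 17 * 23?"))
```

The string already starts with `<|begin_of_text|>`, so if you tokenize it with a tokenizer that prepends BOS automatically (as Hugging Face `transformers` tokenizers typically do), pass `add_special_tokens=False` to avoid a duplicated BOS token.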

Fine-tune info:

The following Axolotl YAML configuration was used to fine-tune this model:

```yaml
base_model: alpindale/Llama-3.2-3B-Instruct
model_type: LlamaForCausalLM
tokenizer_type: AutoTokenizer

load_in_8bit: false
load_in_4bit: true
strict: false

chat_template: llama3
datasets:
  - path: Fischerboot/small-boi-thinkin
    type: sharegpt
    conversation: llama3
dataset_prepared_path: last_run_prepared
val_set_size: 0.1
output_dir: ./outputs/yuh

adapter: qlora
lora_model_dir:

sequence_len: 4096
sample_packing: false
pad_to_sequence_len: true

lora_r: 32
lora_alpha: 16
lora_dropout: 0.05
lora_target_linear: true
lora_fan_in_fan_out:
lora_target_modules:
  - gate_proj
  - down_proj
  - up_proj
  - q_proj
  - v_proj
  - k_proj
  - o_proj

wandb_project:
wandb_entity:
wandb_watch:
wandb_name:
wandb_log_model:

gradient_accumulation_steps: 1
micro_batch_size: 1
num_epochs: 1
optimizer: adamw_bnb_8bit
lr_scheduler: cosine
learning_rate: 0.0002

train_on_inputs: false
group_by_length: false
bf16: auto
fp16:
tf32: false

gradient_checkpointing: true
early_stopping_patience:
resume_from_checkpoint:
local_rank:
logging_steps: 1
xformers_attention:
flash_attention: true

loss_watchdog_threshold: 8.0
loss_watchdog_patience: 3

eval_sample_packing: false
warmup_steps: 10
evals_per_epoch: 2
eval_table_size:
eval_max_new_tokens: 128
saves_per_epoch: 2
debug:
deepspeed:
weight_decay: 0.0
fsdp:
fsdp_config:
special_tokens:
  bos_token: "<|begin_of_text|>"
  eos_token: "<|end_of_text|>"
  pad_token: "<|end_of_text|>"
```
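A quick sketch of what two groups of settings in the config above imply. It assumes the standard PEFT LoRA formulation (not rank-stabilized LoRA) and a single GPU, since the card does not state the hardware; the helper names and the `num_gpus` parameter are illustrative, not Axolotl options.

```python
# Settings copied from the config above.
LORA_R = 32
LORA_ALPHA = 16
MICRO_BATCH_SIZE = 1
GRADIENT_ACCUMULATION_STEPS = 1


def lora_scaling(r: int, alpha: int) -> float:
    """Factor applied to the low-rank update B @ A in standard LoRA."""
    # Rank-stabilized LoRA would use alpha / sqrt(r) instead; this assumes plain LoRA.
    return alpha / r


def effective_batch_size(micro_batch_size: int, grad_accum_steps: int, num_gpus: int = 1) -> int:
    """Number of sequences contributing to a single optimizer step."""
    # num_gpus is an assumption: the card does not say how many GPUs were used.
    return micro_batch_size * grad_accum_steps * num_gpus


if __name__ == "__main__":
    print("LoRA scaling:", lora_scaling(LORA_R, LORA_ALPHA))
    print("Effective batch size:", effective_batch_size(MICRO_BATCH_SIZE, GRADIENT_ACCUMULATION_STEPS))
```

With `lora_alpha / lora_r = 0.5`, the adapter's update is scaled by half compared with a setup where `lora_alpha` equals `lora_r`. With an effective batch of 1, every sequence triggers its own optimizer step, and because `sample_packing` is off and `pad_to_sequence_len` is on, each of those sequences is padded to 4096 tokens.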

Training results:

| Training Loss | Epoch | Step | Validation Loss |
|---|---|---|---|
| 1.5032 | 0.0000 | 1 | 1.6556 |
| 1.2011 | 0.5000 | 10553 | 0.6682 |
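To put the validation losses on a more intuitive scale, they can be converted to perplexity. This sketch assumes the reported values are mean per-token cross-entropy in nats (what Hugging Face-style trainers report for causal language models) and, with `train_on_inputs: false`, that they are computed only over the response tokens.

```python
import math

# (step, validation loss) pairs copied from the table above.
VALIDATION_LOSSES = [(1, 1.6556), (10553, 0.6682)]


def perplexity(mean_cross_entropy: float) -> float:
    """Perplexity for a mean per-token cross-entropy measured in nats."""
    return math.exp(mean_cross_entropy)


for step, loss in VALIDATION_LOSSES:
    print(f"step {step:>5}: validation loss {loss:.4f} -> perplexity {perplexity(loss):.2f}")
```

Under that assumption, validation perplexity drops from about 5.24 at the first step to about 1.95 at the half-epoch evaluation.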
