本模型为数据集BAAI/IndustryCorpus2的质量评估模型,用于从语义一致性,信息密度,教育属性等维度评估预训练数据的质量。

按照我们的定义并经过实验,3分以上是相对高质量数据,4分以上绝对高质量数据,可以根据数据量按需所取。

为什么要筛选低质量的数据

下面是从数据中抽取的低质量数据,可以看到这种数据对模型的学习是有害无益的

{"text": "\\_\\__\n\nTranslated from *Chinese Journal of Biochemistry and Molecular Biology*, 2007, 23(2): 154--159 \\[译自:中国生物化学与分子生物学报\\]\n"}

{"text": "#ifndef _IMGBMP_H_\n#define _IMGBMP_H_\n\n#ifdef __cplusplus\nextern \"C\" {\n#endif\n\nconst uint8_t bmp[]={\n\\/\\/-- 调入了一幅图像:D:\\我的文档\\My Pictures\\12864-555.bmp --*\\/\n\\/\\/-- 宽度x高度=128x64 --\n0x00,0x06,0x0A,0xFE,0x0A,0xC6,0x00,0xE0,0x00,0xF0,0x00,0xF8,0x00,0x00,0x00,0x00,\n0x00,0x00,0xFE,0x7D,0xBB,0xC7,0xEF,0xEF,0xEF,0xEF,0xEF,0xEF,0xEF,0xC7,0xBB,0x7D,\n0xFE,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x08,\n0x0C,0xFE,0xFE,0x0C,0x08,0x20,0x60,0xFE,0xFE,0x60,0x20,0x00,0x00,0x00,0x78,0x48,\n0xFE,0x82,0xBA,0xBA,0x82,0xBA,0xBA,0x82,0xBA,0xBA,0x82,0xBA,0xBA,0x82,0xFE,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x01,0x01,0x01,0x01,0x01,0x01,0x01,0x01,0x01,0x01,0x01,0x01,0x01,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0xFE,0xFF,\n0x03,0x03,0x03,0x03,0x03,0x03,0x03,0x03,0x03,0xFF,0xFF,0x00,0x00,0xFE,0xFF,0x03,\n0x03,0x03,0x03,0x03,0x03,0x03,0x03,0x03,0xFF,0xFE,0x00,0x00,0x00,0x00,0xC0,0xC0,\n0xC0,0x00,0x00,0x00,0x00,0xFE,0xFF,0x03,0x03,0x03,0x03,0x03,0x03,0x03,0x03,0x03,\n0xFF,0xFE,0x00,0x00,0xFE,0xFF,0x03,0x03,0x03,0x03,0x03,0x03,0x03,0x03,0x03,0xFF,\n0xFE,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0xFF,0xFF,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0xFF,0xFF,0x00,0x00,0xFF,0xFF,0x0C,\n0x0C,0x0C,0x0C,0x0C,0x0C,0x0C,0x0C,0x0C,0xFF,0xFF,0x00,0x00,0x00,0x00,0xE1,0xE1,\n0xE1,0x00,0x00,0x00,0x00,0xFF,0xFF,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0xFF,0xFF,0x00,0x00,0xFF,0xFF,0x0C,0x0C,0x0C,0x0C,0x0C,0x0C,0x0C,0x0C,0x0C,0xFF,\n0xFF,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x0F,0x1F,\n0x18,0x18,0x18,0x18,0x18,0x18,0x18,0x18,0x18,0x1F,0x0F,0x00,0x00,0x0F,0x1F,0x18,\n0x18,0x18,0x18,0x18,0x18,0x18,0x18,0x18,0x1F,0x0F,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x0F,0x1F,0x18,0x18,0x18,0x18,0x18,0x18,0x18,0x18,0x18,\n0x1F,0x0F,0x00,0x00,0x0F,0x1F,0x18,0x18,0x18,0x18,0x18,0x18,0x18,0x18,0x18,0x1F,\n0x0F,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0xE2,0x92,0x8A,0x86,0x00,0x00,0x7C,0x82,0x82,0x82,0x7C,\n0x00,0xFE,0x00,0x82,0x92,0xAA,0xC6,0x00,0x00,0xC0,0xC0,0x00,0x7C,0x82,0x82,0x82,\n0x7C,0x00,0x00,0x02,0x02,0x02,0xFE,0x00,0x00,0xC0,0xC0,0x00,0x7C,0x82,0x82,0x82,\n0x7C,0x00,0x00,0xFE,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x24,0xA4,0x2E,0x24,0xE4,0x24,0x2E,0xA4,0x24,0x00,0x00,0x00,0xF8,0x4A,0x4C,\n0x48,0xF8,0x48,0x4C,0x4A,0xF8,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0xC0,0x20,0x10,0x10,\n0x10,0x10,0x20,0xC0,0x00,0x00,0xC0,0x20,0x10,0x10,0x10,0x10,0x20,0xC0,0x00,0x00,\n0x00,0x12,0x0A,0x07,0x02,0x7F,0x02,0x07,0x0A,0x12,0x00,0x00,0x00,0x0B,0x0A,0x0A,\n0x0A,0x7F,0x0A,0x0A,0x0A,0x0B,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,\n0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x00,0x1F,0x20,0x40,0x40,\n0x40,0x50,0x20,0x5F,0x80,0x00,0x1F,0x20,0x40,0x40,0x40,0x50,0x20,0x5F,0x80,0x00,\n}; \n\n\n#ifdef __cplusplus\n}\n#endif\n\n#endif \\/\\/ _IMGBMP_H_ _SSD1306_16BIT_H_\n"}

数据构建

数据来源:随机采样预训练语料

标签构建:设计数据打分细则,借助LLM模型进行多轮打分,筛选多轮打分分差小于2的数据

数据规模:20k打分数据,中英文比例1:1

数据prompt

quality_prompt = """Below is an extract from a web page. Evaluate whether the page has a high natural language value and could be useful in an naturanl language task to train a good language model using the additive 5-point scoring system described below. Points are accumulated based on the satisfaction of each criterion:

- Zero score if the content contains only some meaningless content or private content, such as some random code, http url or copyright information, personally identifiable information, binary encoding of images.

- Add 1 point if the extract provides some basic information, even if it includes some useless contents like advertisements and promotional material.

- Add another point if the extract is written in good style, semantically fluent, and free of repetitive content and grammatical errors.

- Award a third point tf the extract has relatively complete semantic content, and is written in a good and fluent style, the entire content expresses something related to the same topic, rather than a patchwork of several unrelated items.

- A fourth point is awarded if the extract has obvious educational or literary value, or provides a meaningful point or content, contributes to the learning of the topic, and is written in a clear and consistent style. It may be similar to a chapter in a textbook or tutorial, providing a lot of educational content, including exercises and solutions, with little to no superfluous information. The content is coherent and focused, which is valuable for structured learning.

- A fifth point is awarded if the extract has outstanding educational value or is of very high information density, provides very high value and meaningful content, does not contain useless information, and is well suited for teaching or knowledge transfer. It contains detailed reasoning, has an easy-to-follow writing style, and can provide deep and thorough insights.

The extract:

<{EXAMPLE}>.

After examining the extract:

- Briefly justify your total score, up to 50 words.

- Conclude with the score using the format: "Quality score: <total points>"

...

"""

模型训练

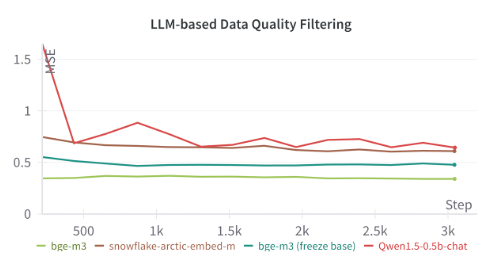

模型选型:与分类模型类似,我们同样使用的是0.5b规模的模型,并对比试验了beg-m3和qwen-0.5b,最终实验显示bge-m3综合表现最优

模型超参:基座bge-m3,全参数训练,lr=1e-5,batch_size=64, max_length = 2048

模型评估:在验证集上模型与GPT4对样本质量判定一致率为90%

高质量数据带来的训练收益

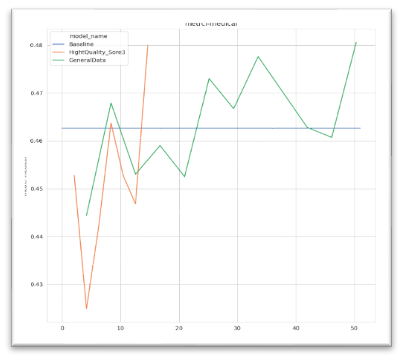

我们为了验证高质量的数据是否能带来更高效的训练效率,在同一基座模型下,使用从未筛选之前的50b数据中抽取出高质量数据,可以认为两个数据的分布大体是一致的,进行自回归训练.

曲线中可以看到,经过高质量数据训练的模型14B的tokens可以达到普通数据50B的模型表现,高质量的数据可以极大的提升训练效率。

此外,高质量的数据可以作为预训练的退火阶段的数据加入到模型中,进一步拉升模型效果,为了验证这个猜测,我们在训练行业模型时候,在模型的退火阶段加入了筛选之后高质量数据和部分指令数据转成的预训练数据,可以看到极大提高了模型的表现。

最后,高质量的预训练语料中包含着丰富的高价值知识性内容,可以从中提取出指令数据进一步提升指令数据的丰富度和知识性,这也催发了Industry-Instruction项目的诞生,我们会在那里进行详细的说明。

how to use

from transformers import (

AutoConfig,

AutoModelForSequenceClassification,

AutoTokenizer)

model_dir = "BAAI/IndustryCorpus2_DataRater"

model = AutoModelForSequenceClassification.from_pretrained(

model_dir,

trust_remote_code=False,

ignore_mismatched_sizes=False,

)

model.cuda()

model.eval()

tokenizer = AutoTokenizer.from_pretrained(

model_dir,

use_fast=True,

token=None,

trust_remote_code=False,

)

config = AutoConfig.from_pretrained(

model_dir,

finetuning_task="text-classification",

)

sentence = "黄龙国际中心位于杭州市西湖区学院路77号,地处杭州黄龙商务区核心位置。项目以“新经济、新生态、新黄龙”的理念和“知识城市创新区”的项目定位,引领着杭州城市的产业升级和创新。\n黄龙国际中心主打“知识盒子”建筑设计,拥有时尚、新潮的建筑立面,聚集不同功能的独立建筑物,打造出包容开放又具有链接性的空间。项目在2018年4月一举斩获开发项目行业最高奖——广厦奖。\n项目整体分四期开发。一期G、H、I三幢楼宇由坤和自主开发建成,于2015年4月投入使用,于2015年5月获得了美国绿色建筑委员会颁发的LEED金级认证,并成功吸引蚂蚁金服、美图、有赞等国内互联网创新巨头率先入驻。\n2016年初,坤和引进万科作为该项目股权合作方通过双方优质资源共享,在产品品质、创新等方面得到全方位提升。\n二期A、B幢由美国KPF设计,并于2018年4月一举获得房地产开发项目行业最高奖——广厦奖。A、B幢写字楼于2018年10月投入使用,B幢与全球领先的创造者社区及空间和服务提供商——WeWork正式签约。商业K-lab于2018年12月28日正式开业。\n项目三期E、F幢已于2020年投入使用。四期C、D幢,计划于2021年底投入使用。\n项目总占地面积约8.7万方,总建筑面积约40万平方米,涵盖9栋国际5A写字楼及8万方K-lab商业新物种,以知识为纽带,打造成一个集商务、商业、教育、文化、娱乐、艺术、餐饮、会展等于一体的完整城市性生态体系。项目全部投入使用后,年租金收入将超6亿元。\n"

result = tokenizer(

[sentecnce],

padding=False,

max_length=max_length,

truncation=True,

return_tensors="pt",

).to("cuda")

for key in result:

result[key] = torch.tensor(result[key])

model_out = model(**result)

predit_score = model_out.logits.tolist()[0][0]

predit_score_round = round(predit_score)

# score=4.674278736114502

- Downloads last month

- 112

Model tree for BAAI/IndustryCorpus2_DataRater

Base model

BAAI/bge-m3