FUTGA: Fine-grained Music Understanding through Temporally-enhanced Generative Augmentation

News

- [10/02] We released FUTGA-Dataset including MusicCaps, SongDescriber, HarmonixSet, and AudioSet (train and eval).

- [07/28] We released FUTGA-7B and training/inference code based on SALMONN-7B backbone!

Overview

FUTGA is an audio LLM with fine-grained music understanding, learning from generative augmentation with temporal compositions. By leveraging existing music caption datasets and large language models (LLMs), we synthesize detailed music captions with structural descriptions and time boundaries for full-length songs. This synthetic dataset enables FUTGA to identify temporal changes at key transition points, their musical functions, and generate dense captions for full-length songs.

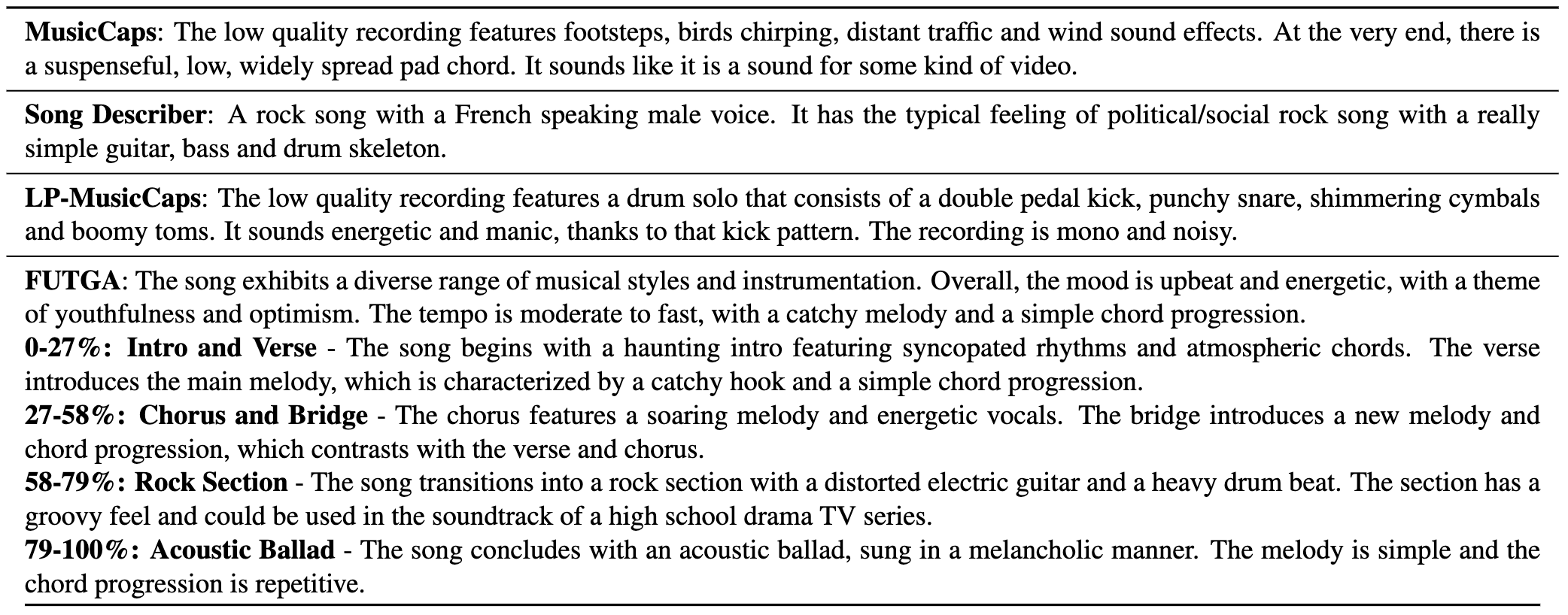

Comparing FUTGA dense captioning with MusicCaps/SongDescriber/LP-MusicCaps

How to load the model

We build FUTGA-7B based on SALMONN. Follow the instructions from SALMONN to load:

- whisper large v2 to

whisper_path, - Fine-tuned BEATs_iter3+ (AS2M) (cpt2) to

beats_path - vicuna 7B v1.5 to

vicuna_path, - FUTGA-7b to

ckpt_path.

Datasets

Please visit our dataset repo FUTGA-Dataset. We currently include MusicCaps, SongDescriber, HarmonixSet, and AudioSet (train and eval).

Citation

If you use our models or datasets in your research, please cite it as follows:

@article{wu2024futga,

title={Futga: Towards Fine-grained Music Understanding through Temporally-enhanced Generative Augmentation},

author={Wu, Junda and Novack, Zachary and Namburi, Amit and Dai, Jiaheng and Dong, Hao-Wen and Xie, Zhouhang and Chen, Carol and McAuley, Julian},

journal={arXiv preprint arXiv:2407.20445},

year={2024}

}

Model tree for JoshuaW1997/FUTGA

Base model

lmsys/vicuna-7b-v1.5