---
license: cc-by-nc-4.0
base_model: Qwen/Qwen2-7B-Instruct
model-index:
- name: Dolphin
  results: []
tags:
- RAG
- on-device language model
- Retrieval Augmented Generation
inference: false
space: false
spaces: false
language:
- en
---

# Dolphin: Long Context as a New Modality for On-Device RAG

- Nexa Model Hub - ArXiv

## Overview

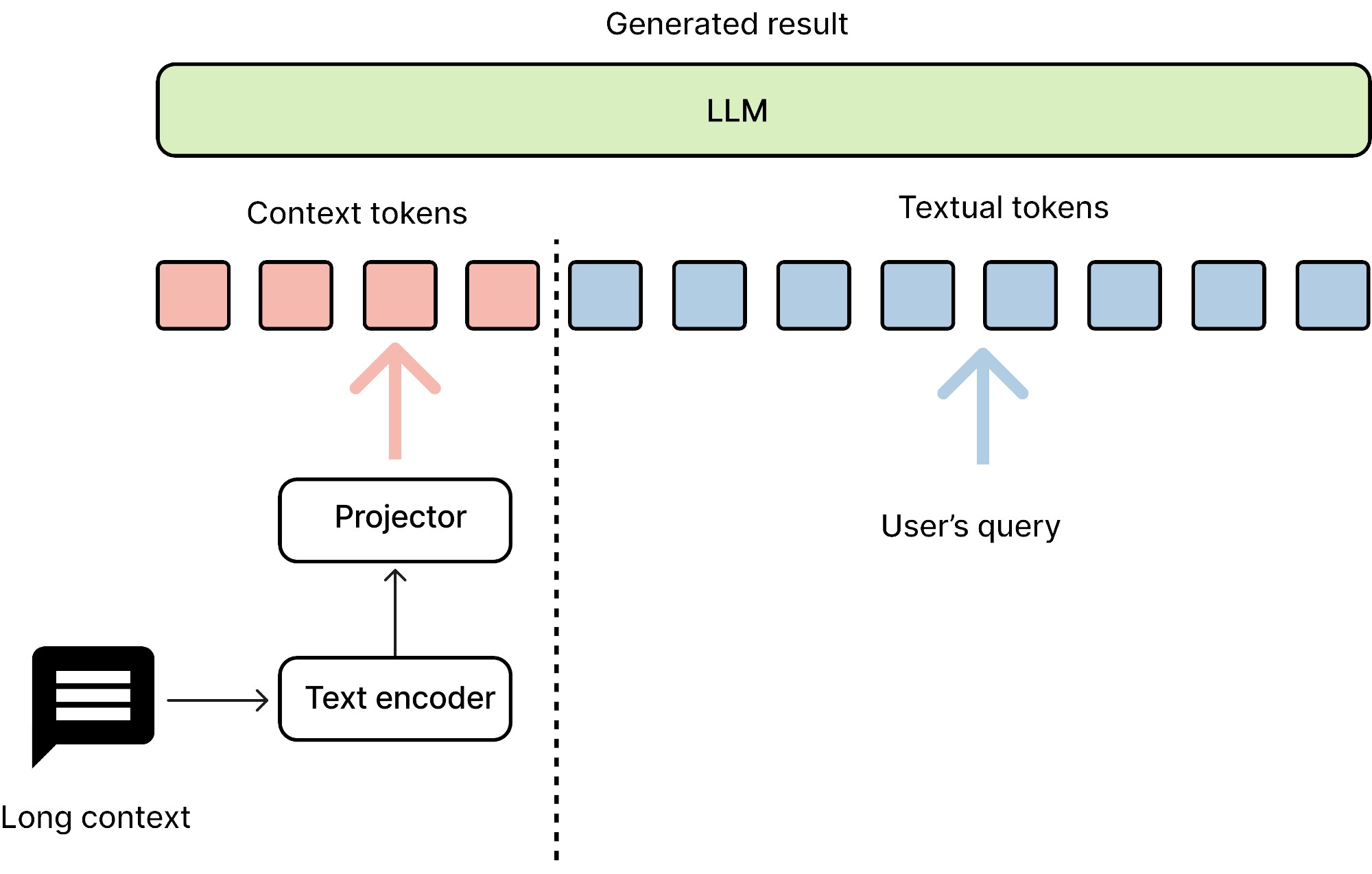

Dolphin is a novel approach to accelerating language model inference by treating long context as a new modality, analogous to the image, audio, and video modalities in vision-language models. It uses a language encoder model to encode context information into embeddings, applying multimodal model concepts to make language model inference more efficient. Model highlights:

- 🧠 Context as a distinct modality

- 🗜️ Language encoder for context compression

- 🔗 Multimodal techniques applied to language processing

- ⚡ Optimized for energy efficiency and on-device use

- 📜 Specialized for long context understanding
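The energy-efficiency claim can be made concrete with a back-of-envelope estimate. The sketch below is a rough approximation, not a measurement: it uses the common rule of thumb that a forward pass costs about 2 × (parameter count) FLOPs per token, takes the 0.5B and 7B parameter counts listed under Model Architecture, and ignores attention's quadratic term and the projector. The function name, the number of compressed context embeddings (`n_memory`), and the token counts are hypothetical; this card does not state them.

```python
def prompt_flops(n_context: int, n_query: int, n_memory: int,
                 enc_params: float = 0.5e9, dec_params: float = 7e9) -> tuple[float, float]:
    """Rough prompt-processing FLOPs as (baseline, dolphin).

    Assumes ~2 * params FLOPs per token per forward pass; ignores attention's
    quadratic term and the projector. n_memory is a hypothetical count of
    compressed context embeddings.
    """
    # Baseline: the 7B decoder reads the full raw context plus the query.
    baseline = 2 * dec_params * (n_context + n_query)
    # Dolphin-style: the 0.5B decoder reads the raw context, and the 7B decoder
    # reads only n_memory compressed embeddings plus the query.
    dolphin = 2 * enc_params * n_context + 2 * dec_params * (n_memory + n_query)
    return baseline, dolphin


baseline, dolphin = prompt_flops(n_context=10_000, n_query=50, n_memory=64)
print(f"Dolphin / baseline: {dolphin / baseline:.3f}")  # Dolphin / baseline: 0.082
```

With these illustrative numbers the estimate is roughly 8% of the baseline, because the 7B decoder no longer reads the 10,000 raw context tokens. The real figure depends on Dolphin's actual compression ratio, attention costs, and hardware.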

## Model Architecture

Dolphin employs a decoder-decoder framework with two main components:

- A smaller decoder (0.5B parameters) that encodes long contexts into compressed embeddings

- A larger decoder (7B parameters) that reads the current query and generates the response

The two are connected by a projector that maps the context embeddings produced by the smaller decoder (which acts as the text encoder) into the embedding space of the main decoder.
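A minimal NumPy sketch of how a projector can connect the two decoders, treating compressed context the way a vision-language model treats image features. It assumes a LLaVA-style two-layer MLP projector with GELU and simply prepends the projected context vectors to the query's token embeddings. The dimensions, `N_MEMORY`, `Projector`, and `build_decoder_inputs` are hypothetical, random arrays stand in for real model outputs, and how the small decoder produces the compressed vectors is not shown.

```python
import numpy as np

# Hypothetical toy sizes; this card does not give Dolphin's real dimensions.
D_ENC = 64     # hidden size of the small (context) decoder
D_DEC = 128    # embedding size of the main decoder
N_MEMORY = 8   # number of compressed context vectors per context


def gelu(x: np.ndarray) -> np.ndarray:
    # tanh approximation of GELU
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))


class Projector:
    """Two-layer MLP from the small decoder's space to the main decoder's (assumed design)."""

    def __init__(self, d_in: int, d_out: int, rng: np.random.Generator):
        self.w1 = rng.normal(0.0, 0.02, size=(d_in, d_out))
        self.b1 = np.zeros(d_out)
        self.w2 = rng.normal(0.0, 0.02, size=(d_out, d_out))
        self.b2 = np.zeros(d_out)

    def __call__(self, h: np.ndarray) -> np.ndarray:
        return gelu(h @ self.w1 + self.b1) @ self.w2 + self.b2


def build_decoder_inputs(context_states: np.ndarray, query_embeds: np.ndarray,
                         projector: Projector) -> np.ndarray:
    """Prepend projected context vectors to the query's token embeddings.

    context_states: (N_MEMORY, D_ENC) compressed context from the small decoder
    query_embeds:   (n_query, D_DEC) token embeddings of the current query
    returns:        (N_MEMORY + n_query, D_DEC) input sequence for the main decoder
    """
    context_embeds = projector(context_states)  # (N_MEMORY, D_DEC)
    return np.concatenate([context_embeds, query_embeds], axis=0)


rng = np.random.default_rng(0)
# Random stand-ins for real model outputs: 8 context vectors and a 5-token query.
context_states = rng.normal(size=(N_MEMORY, D_ENC))
query_embeds = rng.normal(size=(5, D_DEC))
inputs_embeds = build_decoder_inputs(context_states, query_embeds, Projector(D_ENC, D_DEC, rng))
print(inputs_embeds.shape)  # (13, 128)
```

In a Hugging Face causal LM, a sequence like `inputs_embeds` here would typically be passed through the model's `inputs_embeds` argument instead of `input_ids`; whether Dolphin's remote code does exactly this is not stated in this card.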

## Running the Model

```python
import time

from transformers import AutoTokenizer
from configuration_dolphin import DolphinForCausalLM  # local module; configuration_dolphin.py must be on the Python path

MODEL_ID = 'nexa-collaboration/dolphin_instruct_1M_0805'

tokenizer = AutoTokenizer.from_pretrained(MODEL_ID, trust_remote_code=True)
model = DolphinForCausalLM.from_pretrained(MODEL_ID, trust_remote_code=True)

def inference(input_text):
    # Tokenize the prompt, generate up to 100 new tokens, and decode the output.
    inputs = tokenizer(input_text, return_tensors="pt")
    outputs = model.generate(**inputs, max_new_tokens=100)
    return tokenizer.decode(outputs[0], skip_special_tokens=True)

input_text = "Take a selfie for me with front camera"
# Prompt template wrapping the user query.
nexa_query = f"Below is the query from the users, please call the correct function and generate the parameters to call the function.\n\nQuery: {input_text} \n\nResponse:"

# perf_counter is a monotonic clock, better suited to timing than time.time.
start_time = time.perf_counter()
result = inference(nexa_query)
print("Dolphin model result:\n", result)
print("Latency:", time.perf_counter() - start_time, "s")
```

## Training Process

Dolphin's training involves three stages:

- Restoration Training: Reconstructing original context from compressed embeddings

- Continual Training: Generating context continuations from partial compressed contexts

- Instruction Fine-tuning: Generating responses to queries given compressed contexts

The stages build on one another: the model first learns to recover information from compressed embeddings, then to extend it, and finally to use it to answer queries.
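The three stages differ mainly in what gets compressed and what the main decoder must produce. The sketch below builds one training example per stage as plain strings. The `Example` fields, the function names, and the word-level split (a stand-in for real tokenization) are hypothetical, and the sketch does not reflect Dolphin's actual data pipeline, prompts, or loss.

```python
from dataclasses import dataclass


@dataclass
class Example:
    context: str        # text given to the small decoder and compressed
    decoder_input: str  # text the main decoder sees after the context embeddings
    target: str         # text the main decoder is trained to generate


def restoration_example(context: str) -> Example:
    # Stage 1: reconstruct the full context from its compressed embeddings.
    return Example(context=context, decoder_input="", target=context)


def continual_example(document: str, n_prefix_words: int) -> Example:
    # Stage 2: compress only a prefix and generate the rest of the document.
    words = document.split()
    return Example(
        context=" ".join(words[:n_prefix_words]),
        decoder_input="",
        target=" ".join(words[n_prefix_words:]),
    )


def instruction_example(context: str, query: str, response: str) -> Example:
    # Stage 3: answer a query given the compressed context.
    return Example(context=context, decoder_input=query, target=response)


doc = "Dolphin treats long context as a separate modality."
print(continual_example(doc, n_prefix_words=3).target)  # context as a separate modality.
```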

## Citation

If you use Dolphin in your research, please cite our paper:

```bibtex
@article{dolphin2024,
  title={Dolphin: Long Context as a New Modality for Energy-Efficient On-Device Language Models},
  author={[Author Names]},
  journal={arXiv preprint arXiv:[paper_id]},
  year={2024}
}
```

## Contact

For questions or feedback, please contact us.