---
language:
- en
license: cc-by-nc-4.0
model_name: Octopus-V4-GGUF
base_model: NexaAIDev/Octopus-v4
inference: false
model_creator: NexaAIDev
quantized_by: Nexa AI, Inc.
tags:
- function calling
- on-device language model
- gguf
- llama cpp
---

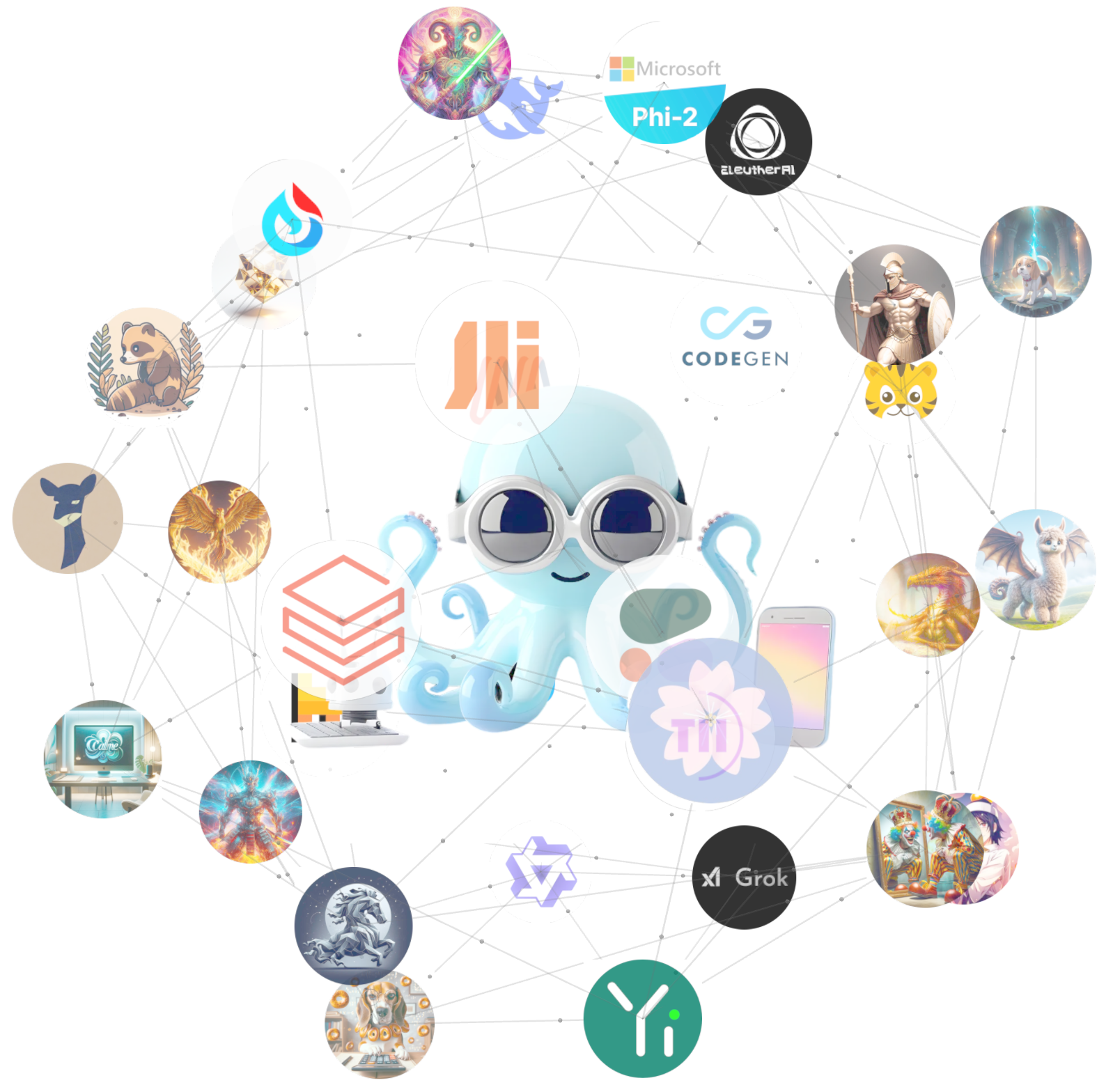

Octopus V4-GGUF: Graph of language models

- Original Model - Nexa AI Website - Octopus-v4 GitHub - arXiv - Domain LLM Leaderboard

Acknowledgement:

We sincerely thank our community members, Mingyuan and Zoey, for their extraordinary contributions to this quantization effort. For the original, unquantized model, see Octopus-v4 on Hugging Face.

Run with Ollama

ollama run NexaAIDev/octopus-v4-q4_k_m

Input example:

Query: Tell me the result of derivative of x^3 when x is 2?

Response: <nexa_4> ('Determine the derivative of the function f(x) = x^3 at the point where x equals 2, and interpret the result within the context of rate of change and tangent slope.')<nexa_end>

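Note that <nexa_4> is the token that represents the math GPT. The text in parentheses is the reformulated query for that model, and <nexa_end> marks the end of the response.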
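
To use a response like the one above in a program, the token and the reformulated query have to be separated. The following is a minimal sketch, assuming every response follows the `<nexa_N> ('...')<nexa_end>` shape of the single example shown here. The function name `parse_octopus_response` and the `KNOWN_TOKENS` table are hypothetical helpers, not part of any Nexa AI library, and only the `<nexa_4>` mapping is taken from this page.

```python
import re

# Shape assumed from the one example above: <nexa_N> ('query text')<nexa_end>
_RESPONSE_RE = re.compile(
    r"<(nexa_\d+)>\s*\((['\"])(.*)\2\)\s*<nexa_end>",
    re.DOTALL,
)

# Only this mapping is stated in the model card; other tokens are not listed here.
KNOWN_TOKENS = {"nexa_4": "math"}


def parse_octopus_response(text):
    """Return (token, query) from a raw response, or None if it does not match."""
    match = _RESPONSE_RE.search(text)
    if match is None:
        return None
    return match.group(1), match.group(3)


if __name__ == "__main__":
    raw = "<nexa_4> ('Determine the derivative of f(x) = x^3 at x = 2.')<nexa_end>"
    token, query = parse_octopus_response(raw)
    print(token, KNOWN_TOKENS.get(token, "unknown"), query)
```

Returning `None` on a mismatch lets the caller decide how to handle output that does not follow the expected shape, which the lower-bit quantizations may produce more often.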

Dataset and Benchmark

- Used questions from MMLU to evaluate performance.

- Evaluated with the Ollama llm-benchmark method.
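
For readers who want to reproduce a tokens-per-second figure, here is a rough sketch of the arithmetic. It assumes the metric is the number of generated tokens divided by generation time, using the `eval_count` and `eval_duration` (nanoseconds) fields that Ollama returns from its generate API. Whether the benchmark used for this page computes it exactly this way is an assumption, and `tokens_per_second` is a hypothetical helper name.

```python
def tokens_per_second(eval_count, eval_duration_ns):
    """Generation speed from Ollama-style response statistics.

    eval_count: number of tokens generated.
    eval_duration_ns: time spent generating them, in nanoseconds.
    """
    if eval_duration_ns <= 0:
        raise ValueError("eval_duration_ns must be positive")
    seconds = eval_duration_ns / 1e9  # nanoseconds to seconds
    return eval_count / seconds


if __name__ == "__main__":
    # Illustrative numbers only, not measurements from this model card.
    print(round(tokens_per_second(584, 10_000_000_000), 2))
```

Prompt processing time is deliberately left out, so the figure reflects generation speed only.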

Quantized GGUF Models

| Name | Quant method | Bits | Size | Response (tokens/second) | Use Cases |
|---|---|---|---|---|---|
| Octopus-v4.gguf | | | 7.20 GB | 27.64 | extremely large |
| Octopus-v4-Q2_K.gguf | Q2_K | 2 | 1.32 GB | 54.20 | extremely not recommended, high loss |
| Octopus-v4-Q3_K.gguf | Q3_K | 3 | 1.82 GB | 51.22 | not recommended |
| Octopus-v4-Q3_K_S.gguf | Q3_K_S | 3 | 1.57 GB | 51.78 | not very recommended |
| Octopus-v4-Q3_K_M.gguf | Q3_K_M | 3 | 1.82 GB | 50.86 | not very recommended |
| Octopus-v4-Q3_K_L.gguf | Q3_K_L | 3 | 1.94 GB | 50.05 | not very recommended |
| Octopus-v4-Q4_0.gguf | Q4_0 | 4 | 2.03 GB | 65.76 | good quality, recommended |
| Octopus-v4-Q4_1.gguf | Q4_1 | 4 | 2.24 GB | 69.01 | slow, good quality, recommended |
| Octopus-v4-Q4_K.gguf | Q4_K | 4 | 2.23 GB | 55.76 | slow, good quality, recommended |
| Octopus-v4-Q4_K_S.gguf | Q4_K_S | 4 | 2.04 GB | 53.98 | high quality, recommended |
| Octopus-v4-Q4_K_M.gguf | Q4_K_M | 4 | 1.51 GB | 58.39 | some functions loss, not very recommended |
| Octopus-v4-Q5_0.gguf | Q5_0 | 5 | 2.45 GB | 61.98 | slow, good quality |
| Octopus-v4-Q5_1.gguf | Q5_1 | 5 | 2.67 GB | 63.44 | slow, good quality |
| Octopus-v4-Q5_K.gguf | Q5_K | 5 | 2.58 GB | 58.28 | moderate speed, recommended |
| Octopus-v4-Q5_K_S.gguf | Q5_K_S | 5 | 2.45 GB | 59.95 | moderate speed, recommended |
| Octopus-v4-Q5_K_M.gguf | Q5_K_M | 5 | 2.62 GB | 53.31 | fast, good quality, recommended |
| Octopus-v4-Q6_K.gguf | Q6_K | 6 | 2.91 GB | 52.15 | large, not very recommended |
| Octopus-v4-Q8_0.gguf | Q8_0 | 8 | 3.78 GB | 50.10 | very large, good quality |
| Octopus-v4-f16.gguf | f16 | 16 | 7.20 GB | 30.61 | extremely large |
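
One way to use the table is to pick the largest file marked "recommended" that fits a given size budget. The sketch below is illustrative only: the file names and sizes are copied from the rows above whose use case says "recommended" (without "not"), while the function `largest_recommended_fit` and the idea of ranking by file size are assumptions of this example, not guidance from the model authors. File size is also only a lower bound on the memory needed at run time.

```python
# (file name, size in GB) for rows marked "recommended" in the table above.
RECOMMENDED = [
    ("Octopus-v4-Q4_0.gguf", 2.03),
    ("Octopus-v4-Q4_1.gguf", 2.24),
    ("Octopus-v4-Q4_K.gguf", 2.23),
    ("Octopus-v4-Q4_K_S.gguf", 2.04),
    ("Octopus-v4-Q5_K.gguf", 2.58),
    ("Octopus-v4-Q5_K_S.gguf", 2.45),
    ("Octopus-v4-Q5_K_M.gguf", 2.62),
]


def largest_recommended_fit(budget_gb):
    """Return the largest recommended file whose size is within budget_gb, or None."""
    candidates = [(name, size) for name, size in RECOMMENDED if size <= budget_gb]
    if not candidates:
        return None
    # Hypothetical heuristic: prefer the biggest file that still fits.
    return max(candidates, key=lambda item: item[1])[0]


if __name__ == "__main__":
    print(largest_recommended_fit(2.5))
```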

Quantized with llama.cpp