TransNormerLLM3 -- A Faster and Better LLM

Introduction

This official repository unveils the TransNormerLLM3 model along with its open-source weights for every 50 billion tokens processed during pre-training.

TransNormerLLM evolved from TransNormer and stands out as the first LLM built on the linear transformer architecture. It is also the first non-Transformer LLM to exceed both traditional Transformer models and other efficient Transformer models (such as RetNet and Mamba) in speed and performance.

Update: We plan to scale the sequence length in the pre-training stage to 10 million: https://twitter.com/opennlplab/status/1776894730015789300

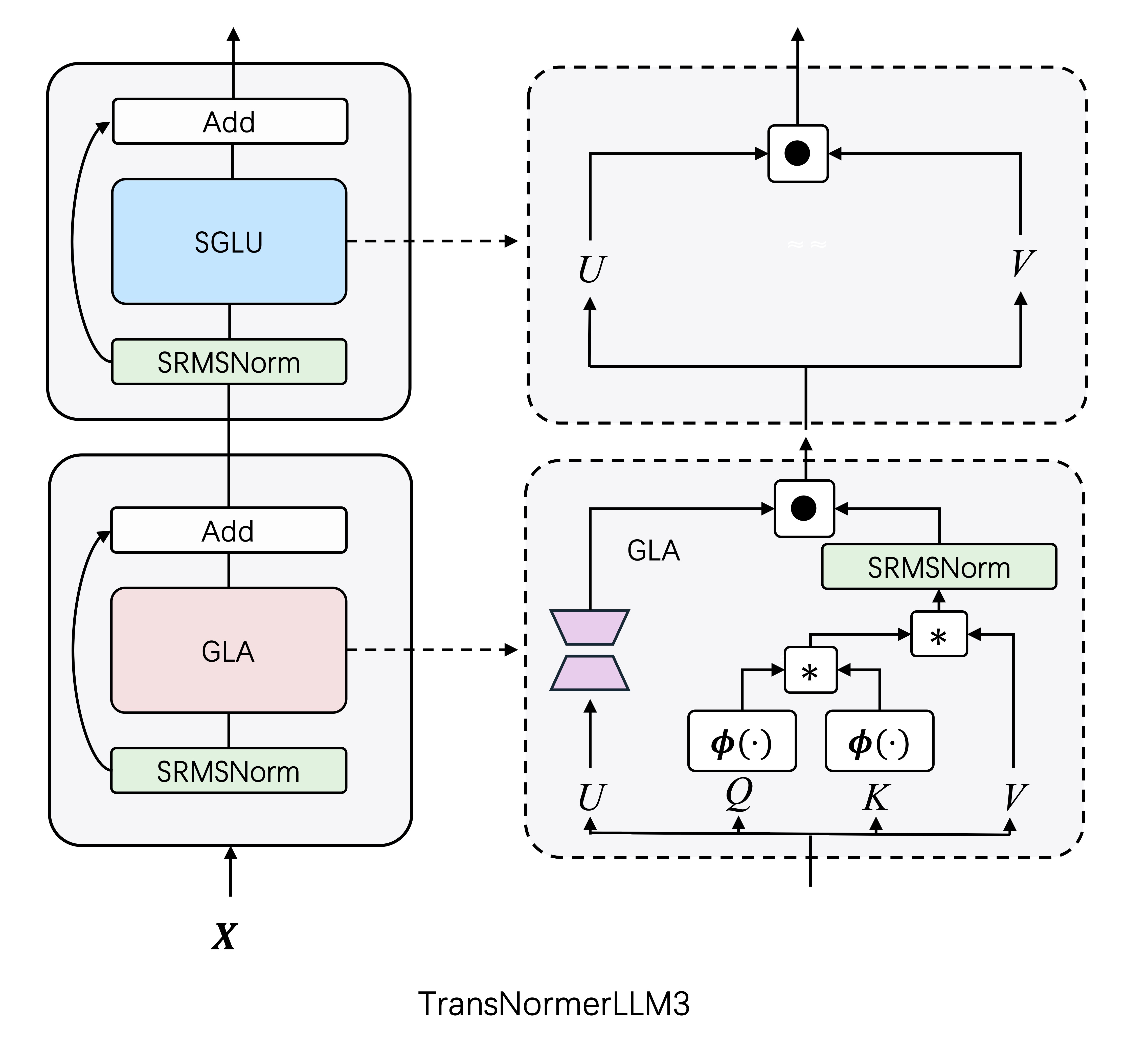

TransNormerLLM3

- TransNormerLLM3-15B features 14.83 billion parameters. It is structured with 42 layers, includes 40 attention heads, and has a total embedding size of 5120.

- TransNormerLLM3-15B is fully integrated with Lightning Attention-2, which maintains a stable TGS (tokens per GPU per second) when training on arbitrarily long sequences, until hard limits such as GPU memory are reached.

- The Tiktoken tokenizer is used, with a total vocabulary size of about 100,000.

- Our training framework integrates LASP (Linear Attention Sequence Parallelism), which enables sequence parallelism within linear attention models.

- Our training framework now supports CO2, which introduces local updates and asynchronous communication into distributed data parallel training, achieving full overlap of communication and computation.
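To illustrate why a linear attention model can keep throughput stable as sequences grow, here is a minimal sketch of causal linear attention computed block by block. It is not the Lightning Attention-2 kernel: it leaves out decay factors, normalization, and GPU tiling, the function names are hypothetical, and it uses plain Python lists so that it runs without dependencies. The point it shows is that tokens inside a block are handled with a small masked product, while everything earlier is summarized in a fixed-size running state.

```python
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(a):
    return [list(col) for col in zip(*a)]

def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def causal_linear_attention_naive(q, k, v):
    """Reference form: O = ((Q K^T) * M) V with a lower-triangular mask M."""
    scores = matmul(q, transpose(k))
    n = len(q)
    masked = [[scores[i][j] if j <= i else 0.0 for j in range(n)] for i in range(n)]
    return matmul(masked, v)

def causal_linear_attention_blocked(q, k, v, block=4):
    """Block-wise form: masked product inside a block, running K^T V state across blocks."""
    d_k, d_v = len(k[0]), len(v[0])
    kv = [[0.0] * d_v for _ in range(d_k)]  # state size does not depend on sequence length
    out = []
    for start in range(0, len(q), block):
        qb = q[start:start + block]
        kb = k[start:start + block]
        vb = v[start:start + block]
        intra = causal_linear_attention_naive(qb, kb, vb)  # tokens within this block
        inter = matmul(qb, kv)                             # all earlier blocks, via the state
        out.extend(add(intra, inter))
        kv = add(kv, matmul(transpose(kb), vb))            # fold this block into the state
    return out

# Small check that both forms agree on random inputs.
random.seed(0)
n, d = 10, 3
q, k, v = ([[random.uniform(-1, 1) for _ in range(d)] for _ in range(n)] for _ in range(3))
ref = causal_linear_attention_naive(q, k, v)
blk = causal_linear_attention_blocked(q, k, v, block=4)
max_diff = max(abs(a - b) for ra, rb in zip(ref, blk) for a, b in zip(ra, rb))
print(f"max difference between the two forms: {max_diff:.2e}")
```

If each of the 40 heads takes an equal 5120 / 40 = 128-dimensional slice (an assumption; the head dimension is not stated here), the running state in this sketch would be 128 × 128 per head however long the sequence is.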

Pre-training Logbook

- Real-time tracking: https://api.wandb.ai/links/opennlplab/kip314lq

- Join the discussion: Discord <<<>>> WeChat group

--23.12.25-- startup: WeChat - Pre-training Launch <<<>>> Twitter - Pre-training Commences <<<>>> YouTube Recording <<<>>> bilibili Replay

--24.01.02-- first week review: WeChat - Week 1 Overview <<<>>> Twitter - Week 1 Review

--24.01.09-- second week review: WeChat - Week 2 Overview <<<>>> Twitter - Week 2 Review

--24.01.15-- third week review: WeChat - Week 3 Overview <<<>>> Twitter - Week 3 Review

--24.01.23-- fourth week review: WeChat - Week 4 Overview <<<>>> Twitter - Week 4 Review

--24.01.30-- fifth week review: WeChat - Week 5 Overview <<<>>> Twitter - Week 5 Review

Released Weights

| Params | Tokens | Hugging Face | ModelScope | Wisemodel |
|---|---|---|---|---|
| 15B | 50B | 🤗step13000 | 🤖 | 🐯 |
| 15B | 100B | 🤗step26000 | 🤖 | 🐯 |
| 15B | 150B | 🤗step39000 | 🤖 | 🐯 |
| 15B | 200B | 🤗step52000 | 🤖 | 🐯 |
| 15B | 250B | 🤗step65000 | 🤖 | 🐯 |
| 15B | 300B | 🤗step78000 | 🤖 | 🐯 |
| 15B | 350B | 🤗step92000 | 🤖 | 🐯 |
| 15B | 400B | 🤗step105000 | 🤖 | 🐯 |
| 15B | 450B | 🤗step118000 | 🤖 | 🐯 |
| 15B | 500B | 🤗step131000 | 🤖 | 🐯 |
| 15B | 550B | 🤗step144000 | 🤖 | 🐯 |
| 15B | 600B | 🤗step157000 | 🤖 | 🐯 |
| 15B | 650B | 🤗step170000 | 🤖 | 🐯 |
| 15B | 700B | 🤗step183000 | 🤖 | 🐯 |
| 15B | 750B | 🤗step195500 | 🤖 | 🐯 |
| 15B | 800B | 🤗step209000 | 🤖 | 🐯 |
| 15B | 850B | 🤗step222000 | 🤖 | 🐯 |
| 15B | 900B | 🤗step235000 | 🤖 | 🐯 |
| 15B | 950B | 🤗step248000 | 🤖 | 🐯 |
| 15B | 1000B | 🤗step261000 | 🤖 | 🐯 |
| 15B | 1050B | 🤗step274000 | 🤖 | 🐯 |
| 15B | 1100B | 🤗step287000 | 🤖 | 🐯 |
| 15B | 1150B | 🤗step300000 | 🤖 | 🐯 |
| 15B | 1200B | 🤗step313500 | 🤖 | 🐯 |
| 15B | 1250B | 🤗step326000 | 🤖 | 🐯 |
| 15B | 1300B | 🤗step339500 | 🤖 | 🐯 |
| 15B | 1345B | 🤗stage1 | 🤖 | 🐯 |

import torch  # needed for torch.bfloat16 below
from transformers import AutoModelForCausalLM, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("OpenNLPLab/TransNormerLLM3-15B-Intermediate-Checkpoints", revision='step235000-900Btokens', trust_remote_code=True)

model = AutoModelForCausalLM.from_pretrained("OpenNLPLab/TransNormerLLM3-15B-Intermediate-Checkpoints", torch_dtype=torch.bfloat16, revision='step235000-900Btokens', device_map="auto", trust_remote_code=True)
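As a convenience, the loading call above can be wrapped in a small helper that builds the revision string from a row of the table. This is only a sketch: the `step<N>-<T>Btokens` pattern is inferred from the single revision name shown above and has not been checked against every row (the final `stage1` row clearly does not follow it), and `revision_name`, `tokens_per_step`, and `load_checkpoint` are hypothetical helper names.

```python
REPO_ID = "OpenNLPLab/TransNormerLLM3-15B-Intermediate-Checkpoints"

def revision_name(step: int, tokens_billion: int) -> str:
    """Build a revision string such as 'step235000-900Btokens' (assumed pattern)."""
    return f"step{step}-{tokens_billion}Btokens"

def tokens_per_step(step: int, tokens_billion: int) -> float:
    """Average number of tokens consumed per training step up to this checkpoint."""
    return tokens_billion * 1e9 / step

def load_checkpoint(step: int, tokens_billion: int):
    """Load the tokenizer and model for one intermediate checkpoint."""
    # Heavy imports stay local so the helpers above work without torch installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    revision = revision_name(step, tokens_billion)
    tokenizer = AutoTokenizer.from_pretrained(
        REPO_ID, revision=revision, trust_remote_code=True
    )
    model = AutoModelForCausalLM.from_pretrained(
        REPO_ID,
        revision=revision,
        torch_dtype=torch.bfloat16,
        device_map="auto",
        trust_remote_code=True,
    )
    return tokenizer, model
```

For the first row of the table, `tokens_per_step(13000, 50)` works out to roughly 3.85 million tokens per step. Before relying on a generated revision name, it is worth confirming that it exists on the Hub.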

Benchmark Results

All models are evaluated using the official settings and the lm-evaluation-harness framework.

| Model | P | T | BoolQ | PIQA | HS | WG | ARC-e | ARC-c | OBQA | C-Eval | MMLU |
|---|---|---|---|---|---|---|---|---|---|---|---|
| TransNormerLLM3-15B | 15 | 0.05 | 62.08 | 72.52 | 55.55 | 57.14 | 62.12 | 31.14 | 32.40 | 26.18 | 27.50 |
| TransNormerLLM3-15B | 15 | 0.10 | 63.98 | 74.70 | 61.09 | 61.33 | 65.95 | 34.64 | 35.60 | 25.38 | 27.40 |
| TransNormerLLM3-15B | 15 | 0.15 | 60.34 | 75.08 | 63.99 | 62.04 | 64.56 | 34.90 | 35.20 | 22.64 | 26.60 |
| TransNormerLLM3-15B | 15 | 0.20 | 52.05 | 74.48 | 64.72 | 62.75 | 66.16 | 35.15 | 36.80 | 27.25 | 30.80 |
| TransNormerLLM3-15B | 15 | 0.25 | 66.70 | 76.50 | 66.51 | 64.80 | 66.84 | 36.18 | 39.40 | 30.87 | 36.10 |
| TransNormerLLM3-15B | 15 | 0.30 | 67.00 | 76.50 | 67.17 | 64.40 | 66.29 | 36.77 | 38.80 | 33.99 | 37.60 |
| TransNormerLLM3-15B | 15 | 0.35 | 65.78 | 75.46 | 67.88 | 66.54 | 67.34 | 38.57 | 39.60 | 36.02 | 39.20 |
| TransNormerLLM3-15B | 15 | 0.40 | 67.34 | 75.24 | 68.51 | 66.22 | 68.94 | 40.10 | 39.20 | 36.91 | 41.10 |
| TransNormerLLM3-15B | 15 | 0.45 | 69.02 | 76.28 | 69.11 | 63.77 | 65.82 | 36.01 | 39.40 | 37.17 | 42.80 |
| TransNormerLLM3-15B | 15 | 0.50 | 66.15 | 77.09 | 69.75 | 65.11 | 68.56 | 35.84 | 39.60 | 39.81 | 42.00 |
| TransNormerLLM3-15B | 15 | 0.55 | 70.24 | 74.05 | 69.96 | 65.75 | 65.61 | 36.69 | 38.60 | 40.08 | 44.00 |
| TransNormerLLM3-15B | 15 | 0.60 | 74.34 | 75.68 | 70.44 | 66.22 | 69.36 | 38.40 | 38.40 | 41.05 | 45.30 |
| TransNormerLLM3-15B | 15 | 0.65 | 73.15 | 76.55 | 71.60 | 66.46 | 69.65 | 39.68 | 40.80 | 41.20 | 44.90 |
| TransNormerLLM3-15B | 15 | 0.70 | 73.79 | 78.18 | 73.26 | 67.56 | 71.21 | 43.60 | 40.80 | 43.46 | 47.00 |
| TransNormerLLM3-15B | 15 | 0.75 | 76.45 | 78.07 | 74.22 | 69.30 | 71.21 | 43.43 | 42.20 | 43.46 | 47.80 |
| TransNormerLLM3-15B | 15 | 0.80 | 76.97 | 78.84 | 74.95 | 69.85 | 72.14 | 43.52 | 41.20 | 45.21 | 49.41 |
| TransNormerLLM3-15B | 15 | 0.85 | 72.75 | 78.35 | 75.91 | 70.48 | 74.58 | 45.22 | 41.20 | 46.27 | 49.36 |
| TransNormerLLM3-15B | 15 | 0.90 | 76.09 | 77.91 | 76.49 | 70.88 | 72.14 | 42.92 | 40.20 | 45.70 | 50.15 |
| TransNormerLLM3-15B | 15 | 0.95 | 74.28 | 78.24 | 76.63 | 72.22 | 74.12 | 44.11 | 42.40 | 46.25 | 51.43 |
| TransNormerLLM3-15B | 15 | 1.00 | 74.62 | 79.16 | 77.35 | 72.22 | 73.86 | 45.14 | 43.40 | 47.90 | 51.65 |
| TransNormerLLM3-15B | 15 | 1.05 | 76.36 | 78.94 | 77.15 | 71.35 | 74.66 | 44.45 | 42.80 | 45.87 | 52.28 |
| TransNormerLLM3-15B | 15 | 1.10 | 76.88 | 78.73 | 77.62 | 70.88 | 74.41 | 45.48 | 42.80 | 49.78 | 53.01 |
| TransNormerLLM3-15B | 15 | 1.15 | 72.87 | 79.43 | 78.12 | 72.85 | 74.75 | 46.16 | 43.20 | 49.80 | 53.04 |
| TransNormerLLM3-15B | 15 | 1.20 | 79.48 | 78.67 | 78.45 | 72.93 | 75.42 | 44.37 | 43.60 | 49.33 | 53.80 |
| TransNormerLLM3-15B | 15 | 1.25 | 79.17 | 79.16 | 78.81 | 72.93 | 75.13 | 45.99 | 43.60 | 50.44 | 54.19 |
| TransNormerLLM3-15B | 15 | 1.30 | 78.41 | 79.00 | 78.39 | 71.90 | 74.33 | 45.05 | 42.80 | 52.24 | 54.41 |
| TransNormerLLM3-15B | 15 | stage1 | 78.75 | 79.27 | 78.33 | 71.35 | 75.97 | 46.42 | 45.00 | 50.25 | 54.50 |

P: parameter size (billion). T: tokens (trillion). BoolQ: acc. PIQA: acc. HS (HellaSwag): acc_norm. WG (WinoGrande): acc. ARC-e (ARC-easy): acc. ARC-c (ARC-challenge): acc_norm. OBQA (OpenBookQA): acc_norm. C-Eval: 5-shot acc. MMLU: 5-shot acc.
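For readers who want to reproduce a row, the metric notes above can be turned into a small configuration sketch for lm-evaluation-harness. Several things here are assumptions rather than the authors' setup: the task names follow the naming used in lm-evaluation-harness v0.4, the harness version actually used is not stated, and a few-shot count of `None` means no count is given above, so the harness default is left in place. `BENCHMARKS`, `build_eval_kwargs`, and `run_benchmark` are hypothetical names.

```python
REPO_ID = "OpenNLPLab/TransNormerLLM3-15B-Intermediate-Checkpoints"

# Benchmark -> (assumed harness task name, reported metric, few-shot count).
BENCHMARKS = {
    "BoolQ": ("boolq", "acc", None),
    "PIQA": ("piqa", "acc", None),
    "HellaSwag": ("hellaswag", "acc_norm", None),
    "WinoGrande": ("winogrande", "acc", None),
    "ARC-easy": ("arc_easy", "acc", None),
    "ARC-challenge": ("arc_challenge", "acc_norm", None),
    "OpenBookQA": ("openbookqa", "acc_norm", None),
    "C-Eval": ("ceval-valid", "acc", 5),
    "MMLU": ("mmlu", "acc", 5),
}

def build_eval_kwargs(benchmark: str, revision: str) -> dict:
    """Assemble keyword arguments for lm_eval.simple_evaluate for one benchmark."""
    task, _metric, shots = BENCHMARKS[benchmark]
    kwargs = {
        "model": "hf",
        "model_args": (
            f"pretrained={REPO_ID},revision={revision},"
            "trust_remote_code=True,dtype=bfloat16"
        ),
        "tasks": [task],
    }
    if shots is not None:
        kwargs["num_fewshot"] = shots  # only set where a count is documented
    return kwargs

def run_benchmark(benchmark: str, revision: str):
    """Run one benchmark; downloads the checkpoint and needs a suitable GPU."""
    import lm_eval  # imported lazily; requires the lm-eval package
    return lm_eval.simple_evaluate(**build_eval_kwargs(benchmark, revision))
```

Calling `build_eval_kwargs("MMLU", "step235000-900Btokens")` yields a 5-shot MMLU configuration for the 900B-token checkpoint. Scores obtained this way may differ from the table if the harness version or prompt settings differ.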

Acknowledgments and Citation

Acknowledgments

Our project is developed based on the following open source projects:

- tiktoken for the tokenizer.

- metaseq for training.

- lm-evaluation-harness for evaluation.

Citation

If you wish to cite our work, please use the following reference:

@misc{qin2024transnormerllm,

title={TransNormerLLM: A Faster and Better Large Language Model with Improved TransNormer},

author={Zhen Qin and Dong Li and Weigao Sun and Weixuan Sun and Xuyang Shen and Xiaodong Han and Yunshen Wei and Baohong Lv and Xiao Luo and Yu Qiao and Yiran Zhong},

year={2024},

eprint={2307.14995},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

@misc{qin2024lightning,

title={Lightning Attention-2: A Free Lunch for Handling Unlimited Sequence Lengths in Large Language Models},

author={Zhen Qin and Weigao Sun and Dong Li and Xuyang Shen and Weixuan Sun and Yiran Zhong},

year={2024},

eprint={2401.04658},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

@misc{sun2024linear,

title={Linear Attention Sequence Parallelism},

author={Weigao Sun and Zhen Qin and Dong Li and Xuyang Shen and Yu Qiao and Yiran Zhong},

year={2024},

eprint={2404.02882},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

- OpenNLPLab @2024 -
