license: apache-2.0

language:

- fr

- en

- de

- es

- it

OCRonos is a series of specialized language models trained by PleIAs for the correction of badly digitized texts, as part of the Bad Data Toolbox.

OCROnos models are versatile tools supporting the correction of OCR errors, wrong word cut/merge and overall broken text structures. The training data includes a highly diverse set of ocrized texts in multiple languages from PleIAs open pre-training corpus, drawn from cultural heritage sources (Common Corpus) and financial and administrative documents in open data (Finance Commons).

This release currently features a model based on llama-3-8b that has been the most tested to date. The model was trained using HPC resources from GENCI–IDRIS (Grant 2023-AD011014736) on Jean-Zay. Future release will focus on smaller internal models that provides a better ratio of generation cost/quality.

OCRonos is generally faithful to what the original material, provides sensible restitution of deteriorated text and will rarely rewrite correct words. On highly deteriorated content, OCRonos can act as a synthetic rewriting tool rather than a strict correction tool.

Along with the other models of PleIAs Bad Data Toolbox, OCRonos contributes to make challenging resources usable for LLM applications and, more broadly, search retrieval. It is especially fitting in situation where the original PDF sources is too damaged for correct OCRization or even non-existent/complex to retrieve.

OCRonos can be tested on a free demo along with Segmentext, another model trained by PleIAs for the text segmentation of broken PDFs.

Examples

Original input with a high rate of digitization artifacts from a financial report from Finance Commons.

Inthisrespect,the in surancebusiness inve stmen t portfolio can be considered conservativel y mana ged as itislargely com posed of cor porate, sovere ignandsupranational bonds, term l oansaswell asdemanddeposits. Followin gtheprevious year, thegroup continue dto diversi fyits holdingsinto investmen tgrade cor porate bonds.(see Note 4 ) Itshouldbenoted that bondsandterm loansareheldto matur ityinacco rdancewiththegroup’s businessmodelpolicy of “inflows”.

Technical liabilities on insur ance contracts.

Theguaran tees offered cover death,disability,r e dundancy andunem ployment aspartof aloanprotect ion insurance policy. These types o f risk are controlled throu ghthe use o f app ropriate morta litytables,statistica lchecksonloss rat ios for thepopulation groups insure dandthrough ar e insurance program.

Liabilit yade quacytest.

a goodnes sટofટfittestaimed a t ensurin gthat insuranceliabilitiesare adequate wi threspect to curren testimates of future cash flowsgenerate d by the insurance contracts isperformed at eachstate mentof accou nt.Futu recashflowsresultin g fromthecontracts take into accoun t the guaran tees and o ptions inherent therein. In the even t of inade quacy, the potential l ossesare fully reco gnize dinnetincome. Them o delingof future cash flowsintheinsuranceliability adequacytest are basedonthefollowin g assum ptions: Atthee n dof 2022,thisliabilityadequacytestdidnot reve al any anomalies.

Income state ment.

Theincome andexpenses reco gnize d for theinsurance contracts issued bythegroupappear inthei ncome stat emen t in“Ne tincome of other activities”and“Ne t expense of other acti vities ”.

Risk mana gement.

The group ado ptsa“prudent a pproach” to itsmana gemen t of therisks towhichitcouldbee x posed throughits insurance activities.

Risk o f contre partie.

As state dabove, insurance companyonlyinvests inasse ts (bankdeposit, soverei gnbonds,supra oragencies or

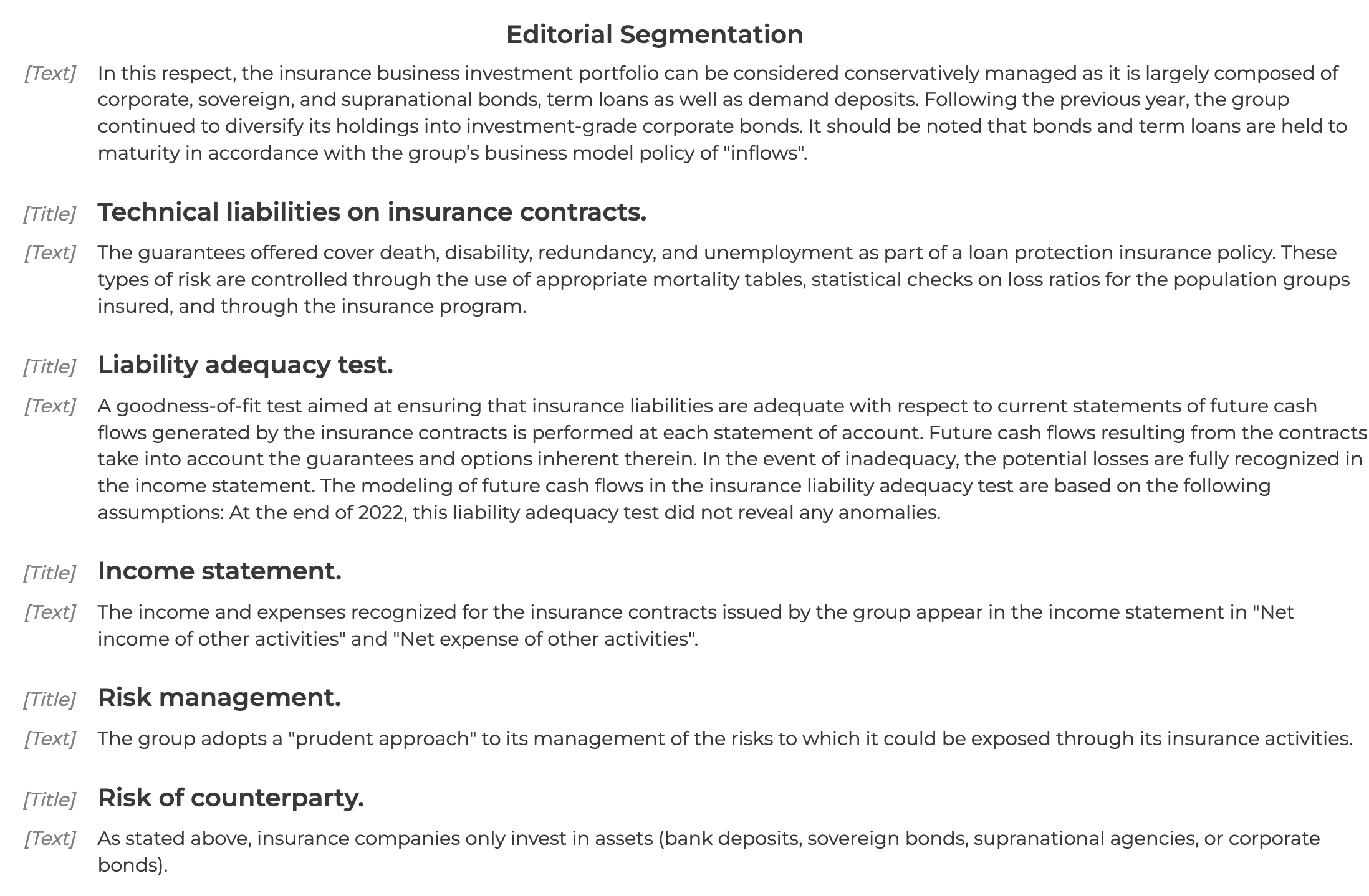

Rewrite suggested by OCRonos.

In this respect, the insurance business investment portfolio can be considered conservatively managed as it is largely composed of corporate, sovereign, and supranational bonds, term loans as well as demand deposits. Following the previous year, the group continued to diversify its holdings into investment-grade corporate bonds. It should be noted that bonds and term loans are held to maturity in accordance with the group’s business model policy of "inflows".

Technical liabilities on insurance contracts.

The guarantees offered cover death, disability, redundancy, and unemployment as part of a loan protection insurance policy. These types of risk are controlled through the use of appropriate mortality tables, statistical checks on loss ratios for the population groups insured, and through the insurance program.

Liability adequacy test.

A goodness-of-fit test aimed at ensuring that insurance liabilities are adequate with respect to current statements of future cash flows generated by the insurance contracts is performed at each statement of account. Future cash flows resulting from the contracts take into account the guarantees and options inherent therein. In the event of inadequacy, the potential losses are fully recognized in the income statement. The modeling of future cash flows in the insurance liability adequacy test are based on the following assumptions: At the end of 2022, this liability adequacy test did not reveal any anomalies.

Income statement.

The income and expenses recognized for the insurance contracts issued by the group appear in the income statement in "Net income of other activities" and "Net expense of other activities".

Risk management.

The group adopts a "prudent approach" to its management of the risks to which it could be exposed through its insurance activities. Risk of counterparty. As stated above, insurance companies only invest in assets (bank deposits, sovereign bonds, supranational agencies, or corporate bonds).

Further refinement with the editorial structuration proposed by Segmentext:

Usage

OCRonos use a custom instruction structure: "### Text ###\n[text]\n\n### Correction ###\n" and a custom eos #END#.

Typical usage with vllm:

sampling_params = SamplingParams(temperature=0.9, top_p=.95, max_tokens=4000, presence_penalty = 0, stop=["#END#"])

prompt = "### Text ###\n" + user_input + "\n\n### Correction ###\n"

outputs = llm.generate(prompts, sampling_params, use_tqdm = False)

Issues

LLMs are theoretically well suited for the task of OCR correction as they are trained to predict the most probably word, they are not usually trained on sources with digitization artifact but on native web content.

On past experiments, a common issue with OCR correction has language switching: due to the inherent noise in the input text, an LLM will transcribe in a different language or even in a different script (like cyrillic). The issue has been especially observed in smaller generalist models like GPT-3.5 or Claude-Haiku.

OCRonos largely mitigates this issue. A few instabilities have been noticed at scale, with the inclusion of repeated words but could be generally filtered out.